“Cloud Numerics” Example: Analyzing Demographics Data from Windows Azure Marketplace

Imagine your sales business is ‘booming’ in cities X, Y, and Z, and you are looking to expand. Given that demographics provide regional indicators of sales potential, how do you find other cities with similar demographics? How do you sift through large sets of data to identify new cities and new expansion opportunities?

In this application example, we use “Cloud Numerics” to analyze demographics data such as average household size and median age between different postal (ZIP) codes in the United States.

This blog post demonstrates the following process for analyzing demographics data:

- We go through the steps of subscribing to the dataset in the Windows Azure Marketplace.

- We create a Cloud Numerics C# application for reading in data and computing correlation between different ZIP codes. We partition the application into two projects: one for reading in the data, and another one for performing the computation.

- Finally, we step through the writing of results to Windows Azure blob storage.

Before You Begin

- Create a Windows Live ID for accessing Windows Azure Marketplace

- Create a Windows Azure subscription for deploying and running the application (if you do not have one already)

- Install “Cloud Numerics” on your local computer where you develop your C# applications

- Run the example application as detailed in the “Cloud Numerics” Lab Getting Started wiki page to validate your installation

Because of the memory usage, do not attempt to run this application on your local development machine. Additionally, you will need to run it on at least two compute nodes on your Windows Azure cluster.

| Note! |

| You specify how many compute nodes are allocated when you use the Cloud Numerics Deployment Utility to configure your Windows Azure cluster. For details, see this section in the Getting Started guide. |

Step 1: Subscribe to the Demographics Dataset at Windows Azure Marketplace

For this demonstration, we use the dataset “2010 Key US Demographics by ZIP Code, Place and County (Trial).”

To subscribe to the dataset:

- Sign up to Windows Azure Marketplace using your Windows Live ID at: https://datamarket.azure.com/

- Navigate to the page for the dataset: https://datamarket.azure.com/dataset/c7154924-7cab-47ac-97fb-7671376ff656

- Select Sign Up. Then click the Details tab of the dataset, and take note of the Service Root URI https://api.datamarket.azure.com/Esri/KeyUSDemographicsTrial/ . This URI will be used in creating the service reference in the next step.

- Finally, go to My Account, Account Keys and take note of the long Value string. You will need this string in the next step to access the dataset from the C# application.

Step 2: Set Up the Cloud Numerics Project

To set up your Cloud Numerics Visual Studio project:

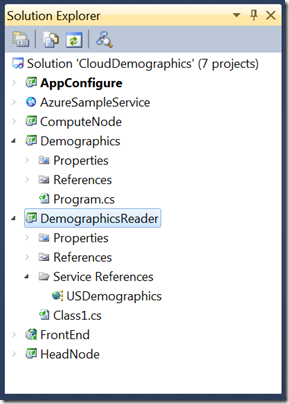

- Start Microsoft Visual Studio and create a solution from the Cloud Numerics project template. Let’s call the solution “CloudDemographics.”

- Rename the “MSCloudNumerics” project with more descriptive language. For the purposes of this blog post, we will name it “Demographics.”

- Next, add a new project to the solution as a C# class library. This project will have the code for reading data. Let’s call the project “DemographicsReader.”

We now need to add a project dependency from Demographics to DemographicsReader.

- In Solution Explorer, under the Demographics project, right-click References and select Add References.

- Click the Projects tab, and browse and add DemographicsReader as a reference.

- Also add a .NET reference for Microsoft.WindowsAzure.StorageClient. We’ll need it later on when it’s time to write results to blob storage.

Finally, go to the DemographicsReader project and add references to the managed assemblies listed below. In the Add References window, click Browse to find the assemblies; they are typically located in C:\Program Files\Microsoft Numerics\v0.1\Bin.

- Microsoft.Numerics.DenseArrays

- Microsoft.Numerics.Distributed.IO

- Microsoft.Numerics.DistributedDenseArrays

- Microsoft.Numerics.ManagedArrayImpl

- Microsoft.Numerics.Runtime

- Microsoft.Numerics.TCDistArrayHandle

Step 3: Add Service Reference to Dataset

To get data into the application, we need to add a service reference to the dataset.

1. In Solution Explorer, right-click the DemographicsReader project and select Add Service Reference.

2. In the dialog window, enter https://api.datamarket.azure.com/Esri/KeyUSDemographicsTrial/ as the address. Also, specify “USDemographics” as the namespace. Adding the reference provides auto-generated flexible query methods that reside in the DemographicsReader.USDemographics namespace and allow us to access the dataset.

| Note! |

| For an example of how to use a flexible query, see the MSDN Library topic titled Create a Flexible Query Application. |

3. Add the following “using” statements to the DemographicsReader project’s Class1.cs file, and declare a namespace “DemographicsReader” that will contain the classes for accessing the demographics data.

using System;

using System.Collections.Generic;

using System.Linq;

using msnl = Microsoft.Numerics.Local;

using DemographicsReader.USDemographics;

using System.Net;

namespace DemographicsReader

{

}

4. Add a class named “DemographicsReference” to this namespace with the following private members and a constructor.

public class DemographicsReference<T>

{

private Uri serviceURI;

private EsriKeyUSDemographicsTrialContainer context;

public DemographicsReference()

{

serviceURI = new Uri(@"https://api.datamarket.azure.com/Esri/KeyUSDemographicsTrial/");

context = new EsriKeyUSDemographicsTrialContainer(serviceURI);

// Change these to your Live ID and Azure Marketplace key

context.Credentials = new NetworkCredential(@"myemail@live.com",

@"myAzureMarketplaceKey");

}

}

5. Replace “myemail@live.com” with your Live ID and “myAzureMarketplaceKey” with the key from Step 1.

Step 4: Create a Serial Reader for Windows Azure Marketplace Data

Next, let’s build the LINQ query operation for reading in the data. A single transaction returns a maximum of 100 rows. However, we want to read in much more data. Therefore, we create a list where we append and consolidate the individual query results. We structure the query so that we can start from a given row, read in a block of rows, move forward, read in another block, and so forth.

The query, by default, returns objects of type demog1. This is a class that holds the demographics data as fields. However, we want to be able to select an arbitrary subset of columns. We accomplish this by supplying a Func<demog1, T> selector function to the query as an argument.
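To see the block-by-block paging pattern in isolation, here is a minimal sketch that runs against an in-memory list instead of the Marketplace service. The Row class, the PagingSketch class, and the ReadPaged method are hypothetical names made up for illustration; they are not part of the generated service reference.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for one row of a data service (not the demog1 class)
public class Row
{
    public string Id;
    public double Value;
}

public static class PagingSketch
{
    // Reads `blocks` pages of `blockSize` rows, starting at `startIndex`,
    // and projects every row through `selector`.
    public static IList<T> ReadPaged<T>(IEnumerable<Row> source, int startIndex,
        int blocks, int blockSize, Func<Row, T> selector)
    {
        var result = new List<T>();
        for (int i = 0; i < blocks; i++)
        {
            // A stable sort order is what makes Skip/Take pages line up
            result.AddRange(source
                .OrderBy(r => r.Id, StringComparer.Ordinal)
                .Skip(startIndex + i * blockSize)
                .Take(blockSize)
                .Select(selector));
        }
        return result;
    }
}
```

For example, PagingSketch.ReadPaged(rows, 2, 2, 3, r => r.Value) skips the first two rows and then reads two blocks of three rows each, returning only the Value field of each row.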

Add the following method to the DemographicsReference<T> class:

// Method to get demographics data

public IList<T> GetDemographicsData(int startIndex, int blocks, int blockSize, Func<demog1, T> selector)

{

// Read in blocks of data and append the results to a list

List<T> queryResult = new List<T>();

for (int i = 0; i < blocks; i++)

{

// Try reading each block a few times in case reading fails

int retries = 0;

int maxRetries = 10;

bool success = false;

while (success == false)

{

try

{

// Select only geographies that have ZIP codes

// Order the results by ZIP code to ensure consistent ordering

// Skip to specific starting index

// Finally select subset of members and convert to list

queryResult.AddRange(context.demog1.

Where(demographic => demographic.GeographyType == "ZIP Code").

OrderBy(demographic => demographic.GeographyId).

Skip(startIndex + i * blockSize).Take(blockSize).

Select(selector).ToList());

success = true;

}

catch (Exception ex)

{

Console.WriteLine("Error: {0}", ex.Message);

retries++;

if (retries > maxRetries) { throw; } // Rethrow without resetting the stack trace

}

}

}

return queryResult;

}

| Note! |

| To increase robustness, the method attempts to read in a block a few times in case the query fails initially. |
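The retry logic can also be factored into a small helper. The following is only a sketch under stated assumptions: RetrySketch and WithRetries are hypothetical names, and the helper counts total attempts rather than retries. It uses a bare throw so that the original stack trace is preserved when the last attempt fails.

```csharp
using System;

public static class RetrySketch
{
    // Runs `operation` until it succeeds or has failed `maxAttempts` times.
    public static T WithRetries<T>(Func<T> operation, int maxAttempts)
    {
        if (maxAttempts < 1) { throw new ArgumentOutOfRangeException("maxAttempts"); }
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return operation();
            }
            catch (Exception ex)
            {
                Console.WriteLine("Attempt {0} failed: {1}", attempt, ex.Message);
                // Give up after the last allowed attempt and rethrow as-is
                if (attempt >= maxAttempts) { throw; }
            }
        }
    }
}
```

A query for one block could then be wrapped as RetrySketch.WithRetries(() => ReadOneBlock(), 10), where ReadOneBlock is again a hypothetical placeholder for the LINQ query.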

Step 5: Parallelize Reader Using IParallelReader Interface

Now, we have the basic framework in place to read in data. Next, we implement the Cloud Numerics IParallelReader interface to read in the data to a distributed array in parallel fashion.

Add the following class “DemographicsData” to the DemographicsReader namespace.

[Serializable]

public class DemographicsData : Microsoft.Numerics.Distributed.IO.IParallelReader<double>

{

private int samples;

private int blockSize = 100; // Maximum number of rows from query is a block of 100 rows

public DemographicsData(int samples)

{

if (samples % blockSize != 0)

{

throw new ArgumentException("Number of samples must be a multiple of " + this.blockSize);

}

this.samples = samples;

}

// Concatenate blocks of rows to form a distributed array

public int DistributedDimension

{

get { return 0; }

set { }

}

// Simple helper struct to keep track of block dimensions

[Serializable]

public struct BlockInfo

{

public int startIndex;

public int blocks;

}

// Attempt to divide blocks between ranks evenly

public Object[] ComputeAssignment(int ranks)

{

Object[] blockInfo = new Object[ranks];

BlockInfo thisBlockInfo;

int blocks = samples / blockSize;

int blocksPerRank = (int)Math.Ceiling((double)blocks / (double)ranks);

int remainingBlocks = blocks;

int startIndex = 0;

for (int i = 0; i < ranks; i++)

{

// Compute block info

thisBlockInfo.startIndex = startIndex;

thisBlockInfo.blocks = System.Math.Max(System.Math.Min(blocksPerRank, remainingBlocks),0);

startIndex = startIndex + thisBlockInfo.blocks * blockSize;

remainingBlocks = remainingBlocks - thisBlockInfo.blocks;

// Assign thisBlockInfo into blockInfo

blockInfo[i] = thisBlockInfo;

}

return blockInfo;

}

// Method that queries the data and converts results to numeric array

public msnl.NumericDenseArray<double> ReadWorker(Object blockInfoAsObject)

{

var demographicsRef = new DemographicsReference<demog1>();

// Select all data from each row

Func<demog1, demog1> selector = demographic => demographic;

// Get information about blocks to read

BlockInfo blockInfo = (BlockInfo)blockInfoAsObject;

int blocks = blockInfo.blocks;

int startIndex = blockInfo.startIndex;

long columns = 10;

msnl.NumericDenseArray<double> data;

if (blocks == 0)

{

// If number of blocks is 0 return empty array

data = msnl.NumericDenseArrayFactory.Create<double>(new long[] { 0, columns });

}

else

{

// Execute query

var dmList = demographicsRef.GetDemographicsData(startIndex, blocks, blockSize, selector);

var rows = dmList.Count();

data = msnl.NumericDenseArrayFactory.Zeros<double>(new long[] { rows, columns });

// Copy a subset of data to array columns

for (int i = 0; i < rows; i++)

{

data[i, 0] = (double)dmList[i].AverageHouseholdSize2010;

data[i, 1] = (double)dmList[i].HouseholdGrowth2010To2015Annual;

data[i, 2] = (double)dmList[i].MedianAge2010;

data[i, 3] = (double)dmList[i].MedianHomeValue2010;

data[i, 4] = (double)dmList[i].PctBachelorsDegOrHigher2010;

data[i, 5] = (double)dmList[i].PctOwnerOccupiedHousing2010;

data[i, 6] = (double)dmList[i].PctRenterOccupiedHousing2010;

data[i, 7] = (double)dmList[i].PctVacantHousing2010;

data[i, 8] = (double)dmList[i].PerCapitaIncome2010;

data[i, 9] = (double)dmList[i].PerCapitaIncomeGrowth2010To2015Annual;

}

}

return data;

}

}

The class constructor expects the number of dataset rows (“samples”) as input. The method “ComputeAssignment” divides the total number of rows into groups (referred to here as “blocks”) and attempts to distribute these blocks evenly between ReadWorkers. The “ReadWorker” method is executed in parallel fashion: there is one parallel call in each rank. The method expects as input a start index and a specified number of blocks to read. For example, the first worker might read rows 0-999, the second one rows 1000-1999 and so on. Then, each ReadWorker converts the demographics indicators from the query results into array columns. The arrays from each ReadWorker are then concatenated to form a large distributed array.
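The arithmetic behind the block assignment can be tried out on its own. The sketch below repeats the ceiling-division rule from ComputeAssignment with plain integers; AssignmentSketch and Assign are hypothetical names, and the rank counts used in the examples are assumptions chosen for illustration.

```csharp
using System;

public static class AssignmentSketch
{
    // Returns one { startIndex, blocks } pair per rank, using the same
    // ceiling-division rule as ComputeAssignment.
    public static int[][] Assign(int samples, int blockSize, int ranks)
    {
        int blocks = samples / blockSize;
        int blocksPerRank = (blocks + ranks - 1) / ranks; // integer ceiling
        int remaining = blocks;
        int startIndex = 0;
        var result = new int[ranks][];
        for (int i = 0; i < ranks; i++)
        {
            // Later ranks receive whatever is left, possibly nothing
            int mine = Math.Max(Math.Min(blocksPerRank, remaining), 0);
            result[i] = new[] { startIndex, mine };
            startIndex += mine * blockSize;
            remaining -= mine;
        }
        return result;
    }
}
```

With 1000 samples, a block size of 100, and 4 ranks, the ranks receive 3, 3, 3, and 1 blocks, starting at rows 0, 300, 600, and 900. With 6 ranks, the first five receive 2 blocks each and the last one receives none, which is the case ReadWorker handles by returning an empty array.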

See the blog post titled “Cloud Numerics” Example: Using the IParallelReader Interface for more details on how to use the IParallelReader interface.

Step 6: Add Method for Getting Mapping from Rows to Geographies

Because the resulting distributed array is all numeric, we need an auxiliary list to map array rows to geographies. We accomplish this by adding a serial reader named “GetRowMapping.” We also add a class “ZipCodeAndLocation” that holds the ZIP Code, city name, and state as well as the row index. The GetRowMapping method then returns a list of such objects.

1. First, add the following class to the top level of the DemographicsReader namespace:

// A class to contain zip code and location information, as well as an index

public class ZipCodeAndLocation

{

string _zipCode, _location, _state;

int _idx;

public string zipCode { get { return _zipCode; } }

public string location { get { return _location; } }

public string state { get { return _state; } }

public int idx

{

get { return _idx; }

set { _idx = value; }

}

public ZipCodeAndLocation(string zipCode, string location, string state)

{

_zipCode = zipCode;

_location = location;

_state = state;

_idx = 0;

}

}

2. Next, add a method GetRowMapping to the DemographicsData class from the previous step.

public IList<ZipCodeAndLocation> GetRowMapping()

{

var demographicsRef = new DemographicsReference<ZipCodeAndLocation>();

// Select geography ID, name, and state abbreviation (the index is assigned below)

Func<demog1, ZipCodeAndLocation> selector =

demographic => new ZipCodeAndLocation(demographic.GeographyId, demographic.GeographyName, demographic.StateAbbreviation);

// Execute the query

var rowMapping = demographicsRef.GetDemographicsData(0, this.samples / this.blockSize, this.blockSize, selector);

// Construct mapping

for (int i = 0; i < rowMapping.Count; i++)

{

rowMapping[i].idx = i;

}

return rowMapping;

}

Here we use a selector that only picks out the GeographyId (the ZIP code), GeographyName (the city name), and StateAbbreviation from the full query. Then, because the results are in the same sorted order as those from the earlier demographics query, we can use a simple for-loop to add an index.
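The idea of indexing rows in sorted order and later looking up rows by city can be sketched without the service. Place, MappingSketch, Index, and RowsFor are hypothetical names, and Place is a simplified stand-in for ZipCodeAndLocation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified stand-in for ZipCodeAndLocation
public class Place
{
    public string Zip;
    public string City;
    public string State;
    public int Idx;
}

public static class MappingSketch
{
    // Sorts by ZIP code, as the reader does, and numbers the rows in that order
    public static IList<Place> Index(IEnumerable<Place> places)
    {
        var sorted = places.OrderBy(p => p.Zip, StringComparer.Ordinal).ToList();
        for (int i = 0; i < sorted.Count; i++)
        {
            sorted[i].Idx = i;
        }
        return sorted;
    }

    // Returns the row indices of every ZIP code in the given city and state
    public static int[] RowsFor(IEnumerable<Place> mapping, string city, string state)
    {
        return mapping.Where(p => p.City == city && p.State == state)
                      .Select(p => p.Idx)
                      .ToArray();
    }
}
```

Because a city can span several ZIP codes, RowsFor may return more than one index. The mapping only matches the numeric array if both queries use the same filter and sort order.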

Step 7: Compute Correlation Matrix Using Distributed Data

Next, we’ll move to the Demographics project. We add a static method that expects a distributed array as input, normalizes the columns and rows, and then computes a correlation matrix. The columns have to be normalized because otherwise they would be on vastly different scales; compare per capita income with median age, for example. We do this by subtracting the mean of each column and dividing by the standard deviation (the code divides by the standard deviation multiplied by the square root of the row count, a constant factor that the row normalization afterwards cancels). Note how the KroneckerProduct method is used to tile vectors into an array that matches the data array size. The rows are then normalized to obtain the correlation coefficients between –1 and 1.

To get the correlation matrix between all ZIP codes, we need to compute correlation coefficients between each pair of rows. An efficient way to perform this computation is to multiply the data with its own transpose. This operation is at the heart of the application. Because it produces a very large matrix (30000-by-30000, which is about 7.2 GB of double-precision values), the computation benefits from the combined memory and processing power of a distributed cluster.
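To make the row normalization and the multiplication by the transpose concrete, here is a small sketch that uses plain arrays instead of Cloud Numerics distributed arrays. CorrelationSketch, NormalizeRows, and TimesTranspose are hypothetical names, and the sketch leaves out the column normalization step.

```csharp
using System;

public static class CorrelationSketch
{
    // Centers each row and scales it to unit Euclidean length, in place.
    // A constant row has zero length and would produce NaN values.
    public static void NormalizeRows(double[][] data)
    {
        foreach (var row in data)
        {
            double mean = 0;
            foreach (var x in row) { mean += x; }
            mean /= row.Length;

            double sumSq = 0;
            for (int j = 0; j < row.Length; j++)
            {
                row[j] -= mean;
                sumSq += row[j] * row[j];
            }
            double norm = Math.Sqrt(sumSq);
            for (int j = 0; j < row.Length; j++) { row[j] /= norm; }
        }
    }

    // Computes data * transpose(data); entry [i, k] is the dot product
    // of rows i and k, which for normalized rows is their correlation.
    public static double[,] TimesTranspose(double[][] data)
    {
        int n = data.Length;
        var result = new double[n, n];
        for (int i = 0; i < n; i++)
        {
            for (int k = 0; k < n; k++)
            {
                double dot = 0;
                for (int j = 0; j < data[i].Length; j++) { dot += data[i][j] * data[k][j]; }
                result[i, k] = dot;
            }
        }
        return result;
    }
}
```

For the rows {1, 2, 3}, {2, 4, 6}, and {3, 2, 1}, the first two rows correlate at 1 and the first and third at -1 (up to rounding), and every diagonal entry is 1.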

Delete the default template code from Demographics project source code, and add the following:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using msnl = Microsoft.Numerics.Local;

using msnd = Microsoft.Numerics.Distributed;

using Microsoft.Numerics.LinearAlgebra;

using Microsoft.Numerics.Statistics;

using Microsoft.Numerics.Mathematics;

using Microsoft.WindowsAzure;

using Microsoft.WindowsAzure.StorageClient;

using DemographicsReader;

namespace Demographics

{

public class Program

{

static msnd.NumericDenseArray<double> Correlate(msnd.NumericDenseArray<double> data)

{

// Compute correlation matrix

// Helper vectors

long rows = data.Shape[0];

long columns = data.Shape[1];

msnd.NumericDenseArray<double> columnOfOnes = new msnd.NumericDenseArray<double>(new long[] { rows, 1 });

columnOfOnes.Fill(1);

msnd.NumericDenseArray<double> rowOfOnes = new msnd.NumericDenseArray<double>(new long[] { 1, columns });

rowOfOnes.Fill(1);

// Normalize each column to be between -1 and 1

// Subtract mean

var columnMean = Descriptive.Mean(data, 0);

data = data - Operations.KroneckerProduct(columnOfOnes, columnMean);

// Divide by standard deviation

var columnStandardDeviation = BasicMath.Sqrt(rows * Descriptive.Mean(data * data, 0));

data = data / Operations.KroneckerProduct(columnOfOnes, columnStandardDeviation);

// Next normalize rows

var rowMean = Descriptive.Mean(data, 1);

data = data - Operations.KroneckerProduct(rowMean, rowOfOnes);

var rowStandardDeviation = BasicMath.Sqrt(columns * Descriptive.Mean(data * data, 1));

data = data / Operations.KroneckerProduct(rowOfOnes, rowStandardDeviation);

// Compute correlation matrix by multiplying

var correlationMatrix = Operations.MatrixMultiply(data, data.Transpose());

return correlationMatrix;

}

}

}

In addition to the Correlate method, this code includes the necessary “using” statements for Cloud Numerics namespaces.

Step 8: Select Interesting Subset of Cities and Write the Correlations into a Blob

We store results to Windows Azure blob storage as a blob resembling a .csv formatted file. To save time and memory, we only write a small subset of the 30000-by-30000 correlation matrix to a blob.

The method below takes the correlation matrix, an array of the row indices we’re interested in, and the list of ZipCodeAndLocation objects that maps rows to geographies. It finds the corresponding rows and columns from the correlation matrix and writes the results to a string. Then it creates a container “demographicsresult” and a blob “demographicsresult.csv”, makes the blob publicly readable, and uploads the string to https://<myAccountName>.blob.core.windows.net/demographicsresult/demographicsresult.csv .

Add the following method to the Demographics Program class. Also, change “myAccountKey” and “myAccountName” to the key and name of your own storage account.

static void WriteOutput(msnd.NumericDenseArray<double> corr, int[] rowArray, IList<ZipCodeAndLocation> rowLocation)

{

var nRows = rowArray.Count();

StringBuilder output = new StringBuilder();

// Write header rows

output.Append(" , ");

for (int i = 0; i < nRows; i++)

{

output.Append(", " + rowLocation[rowArray[i]].location);

}

output.AppendLine();

output.Append(" , ");

for (int i = 0; i < nRows; i++)

{

output.Append(", ZIP " + rowLocation[rowArray[i]].zipCode);

}

// Write rows

for (int i = 0; i < nRows; i++)

{

output.AppendLine();

output.Append(rowLocation[rowArray[i]].location + ", ZIP " + rowLocation[rowArray[i]].zipCode);

for (int j = 0; j < nRows; j++)

{

output.Append(", " + corr[rowArray[i], rowArray[j]]);

}

}

// Write to blob storage

// Replace "myAccountKey" and "myAccountName" by your own storage account key and name

string accountKey = "myAccountKey";

string accountName = "myAccountName";

// Result blob and container name

string containerName = "demographicsresult";

string blobName = "demographicsresult.csv";

// Create result container and blob

var storageAccountCredential = new StorageCredentialsAccountAndKey(accountName, accountKey);

var storageAccount = new CloudStorageAccount(storageAccountCredential, true);

var blobClient = storageAccount.CreateCloudBlobClient();

var resultContainer = blobClient.GetContainerReference(containerName);

resultContainer.CreateIfNotExist();

var resultBlob = resultContainer.GetBlobReference(blobName);

// Make result blob publicly readable

var resultPermissions = new BlobContainerPermissions();

resultPermissions.PublicAccess = BlobContainerPublicAccessType.Blob;

resultContainer.SetPermissions(resultPermissions);

// Upload result to blob

resultBlob.UploadText(output.ToString());

}
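The layout of the result file can be tried locally without a storage account. The following sketch builds a comparable table from plain arrays; CsvSketch and Build are hypothetical names. As a deliberate difference from WriteOutput, it formats numbers with the invariant culture, so a machine configured with a decimal comma does not break the column layout, and it omits the spaces after the commas.

```csharp
using System;
using System.Globalization;
using System.Text;

public static class CsvSketch
{
    // Builds two header rows (city names, ZIP codes) followed by one
    // labeled row of correlation values per location.
    public static string Build(string[] locations, string[] zipCodes, double[,] corr)
    {
        int n = locations.Length;
        var output = new StringBuilder();

        // The first two cells of each header row stay empty above the row labels
        output.Append(",");
        for (int i = 0; i < n; i++) { output.Append("," + locations[i]); }
        output.Append("\n,");
        for (int i = 0; i < n; i++) { output.Append(",ZIP " + zipCodes[i]); }

        for (int i = 0; i < n; i++)
        {
            output.Append("\n" + locations[i] + ",ZIP " + zipCodes[i]);
            for (int j = 0; j < n; j++)
            {
                output.Append("," + corr[i, j].ToString("R", CultureInfo.InvariantCulture));
            }
        }
        return output.ToString();
    }
}
```

The returned string could then be passed to an upload call such as UploadText in place of the StringBuilder contents.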

Step 9: Putting it All Together

Finally, we add a main method that instantiates the reader, reads in data, computes correlations, and selects the interesting subset of cities from the row mapping. For example, let’s select Cambridge, MA, Redmond, WA, and Palo Alto, CA. Add the following main method to the Demographics Program class.

static public void Main()

{

// Initialize distributed runtime

Microsoft.Numerics.NumericsRuntime.Initialize();

// Read in demographics data from Azure marketplace for different ZIP codes

var demographics = new DemographicsData(30000);

var data = Microsoft.Numerics.Distributed.IO.Loader.LoadData<double>(demographics);

Console.WriteLine("Got {0} rows", data.Shape[0]);

// Compute correlation matrix

Console.WriteLine("Computing correlation matrix");

var corr = Correlate(data);

//Get mapping between ZIP codes, city names and matrix rows

Console.WriteLine("Selecting rows");

var rowLocation = demographics.GetRowMapping();

// Select some pairs of cities to demonstrate result

var rowSelection = rowLocation.Where(row =>

(row.location == "Cambridge" && row.state == "MA" ) ||

(row.location == "Redmond" && row.state == "WA" )||

(row.location == "Palo Alto" && row.state == "CA" )).

Select(row => row.idx).ToArray();

// Write output

Console.WriteLine("Writing output");

WriteOutput(corr, rowSelection, rowLocation);

// Shut down runtime

Microsoft.Numerics.NumericsRuntime.Shutdown();

}

Now, the application is ready to be deployed. Set AppConfigure as the StartUp project, build the solution, and deploy using the Deployment Utility. The application will run for a few minutes. You can then view the results, for example, by opening https://<myAccountName>.blob.core.windows.net/demographicsresult/demographicsresult.csv as a spreadsheet.

Here the correlation coefficients above 0.9 between different ZIP codes are highlighted.

Source Code

For your convenience, this section of the blog post contains the entire source code for you to copy. Remember to change the “myAccountKey” and “myAccountName” strings to your storage account key and name, and change “myemail@live.com” and “myAzureMarketplaceKey” to your Live ID and key from Step 1.

Demographics Program.cs

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using msnl = Microsoft.Numerics.Local;

using msnd = Microsoft.Numerics.Distributed;

using Microsoft.Numerics.LinearAlgebra;

using Microsoft.Numerics.Statistics;

using Microsoft.Numerics.Mathematics;

using Microsoft.WindowsAzure;

using Microsoft.WindowsAzure.StorageClient;

using DemographicsReader;

namespace Demographics

{

public class Program

{

static msnd.NumericDenseArray<double> Correlate(msnd.NumericDenseArray<double> data)

{

// Compute correlation matrix

// Helper vectors

long rows = data.Shape[0];

long columns = data.Shape[1];

msnd.NumericDenseArray<double> columnOfOnes = new msnd.NumericDenseArray<double>(new long[] { rows, 1 });

columnOfOnes.Fill(1);

msnd.NumericDenseArray<double> rowOfOnes = new msnd.NumericDenseArray<double>(new long[] { 1, columns });

rowOfOnes.Fill(1);

// Normalize each column to be between -1 and 1

// Subtract mean

var columnMean = Descriptive.Mean(data, 0);

data = data - Operations.KroneckerProduct(columnOfOnes, columnMean);

// Divide by standard deviation

var columnStandardDeviation = BasicMath.Sqrt(rows * Descriptive.Mean(data * data, 0));

data = data / Operations.KroneckerProduct(columnOfOnes, columnStandardDeviation);

// Next normalize rows

var rowMean = Descriptive.Mean(data, 1);

data = data - Operations.KroneckerProduct(rowMean, rowOfOnes);

var rowStandardDeviation = BasicMath.Sqrt(columns * Descriptive.Mean(data * data, 1));

data = data / Operations.KroneckerProduct(rowOfOnes, rowStandardDeviation);

// Compute correlation matrix by multiplying

var correlationMatrix = Operations.MatrixMultiply(data, data.Transpose());

return correlationMatrix;

}

// Output writer method

static void WriteOutput(msnd.NumericDenseArray<double> corr, int[] rowArray, IList<ZipCodeAndLocation> rowLocation)

{

var nRows = rowArray.Count();

StringBuilder output = new StringBuilder();

// Write header rows

output.Append(" , ");

for (int i = 0; i < nRows; i++)

{

output.Append(", " + rowLocation[rowArray[i]].location);

}

output.AppendLine();

output.Append(" , ");

for (int i = 0; i < nRows; i++)

{

output.Append(", ZIP " + rowLocation[rowArray[i]].zipCode);

}

// Write rows

for (int i = 0; i < nRows; i++)

{

output.AppendLine();

output.Append(rowLocation[rowArray[i]].location + ", ZIP " + rowLocation[rowArray[i]].zipCode);

for (int j = 0; j < nRows; j++)

{

output.Append(", " + corr[rowArray[i], rowArray[j]]);

}

}

// Write to blob storage

// Replace "myAccountKey" and "myAccountName" by your own storage account key and name

string accountKey = "myAccountKey";

string accountName = "myAccountName";

// Result blob and container name

string containerName = "demographicsresult";

string blobName = "demographicsresult.csv";

// Create result container and blob

var storageAccountCredential = new StorageCredentialsAccountAndKey(accountName, accountKey);

var storageAccount = new CloudStorageAccount(storageAccountCredential, true);

var blobClient = storageAccount.CreateCloudBlobClient();

var resultContainer = blobClient.GetContainerReference(containerName);

resultContainer.CreateIfNotExist();

var resultBlob = resultContainer.GetBlobReference(blobName);

// Make result blob publicly readable

var resultPermissions = new BlobContainerPermissions();

resultPermissions.PublicAccess = BlobContainerPublicAccessType.Blob;

resultContainer.SetPermissions(resultPermissions);

// Upload result to blob

resultBlob.UploadText(output.ToString());

}

static public void Main()

{

// Initialize distributed runtime

Microsoft.Numerics.NumericsRuntime.Initialize();

// Read in demographics data from Azure marketplace for different ZIP codes

var demographics = new DemographicsData(30000);

var data = Microsoft.Numerics.Distributed.IO.Loader.LoadData<double>(demographics);

Console.WriteLine("Got {0} rows", data.Shape[0]);

// Compute correlation matrix

Console.WriteLine("Computing correlation matrix");

var corr = Correlate(data);

//Get mapping between ZIP codes, city names and matrix rows

Console.WriteLine("Selecting rows");

var rowLocation = demographics.GetRowMapping();

// Select some pairs of cities to demonstrate result

var rowSelection = rowLocation.Where(row =>

(row.location == "Cambridge" && row.state == "MA") ||

(row.location == "Redmond" && row.state == "WA") ||

(row.location == "Palo Alto" && row.state == "CA")).

Select(row => row.idx).ToArray();

// Write output

Console.WriteLine("Writing output");

WriteOutput(corr, rowSelection, rowLocation);

// Shut down runtime

Microsoft.Numerics.NumericsRuntime.Shutdown();

}

}

}

DemographicsReader Class1.cs

using System;

using System.Collections.Generic;

using System.Linq;

using msnl = Microsoft.Numerics.Local;

using DemographicsReader.USDemographics;

using System.Net;

namespace DemographicsReader

{

// A class to contain zip code and location information, as well as an index

public class ZipCodeAndLocation

{

string _zipCode, _location, _state;

int _idx;

public string zipCode { get { return _zipCode; } }

public string location { get { return _location; } }

public string state { get { return _state; } }

public int idx

{

get { return _idx; }

set { _idx = value; }

}

public ZipCodeAndLocation(string zipCode, string location, string state)

{

_zipCode = zipCode;

_location = location;

_state = state;

_idx = 0;

}

}

public class DemographicsReference<T>

{

private Uri serviceURI;

private EsriKeyUSDemographicsTrialContainer context;

public DemographicsReference()

{

serviceURI = new Uri(@"https://api.datamarket.azure.com/Esri/KeyUSDemographicsTrial/");

context = new EsriKeyUSDemographicsTrialContainer(serviceURI);

// Change these to your Live ID and Azure Marketplace key

context.Credentials = new NetworkCredential(@"myemail@live.com",

@"myAzureMarketplaceKey");

}

// Method to get demographics data

public IList<T> GetDemographicsData(int startIndex, int blocks, int blockSize, Func<demog1, T> selector)

{

// Read in blocks of data and append the results to a list

List<T> queryResult = new List<T>();

for (int i = 0; i < blocks; i++)

{

// Try reading each block a few times in case reading fails

int retries = 0;

int maxRetries = 10;

bool success = false;

while (success == false)

{

try

{

// Select only geographies that have ZIP codes

// Order the results by ZIP code to ensure consistent ordering

// Skip to specific starting index

// Finally select subset of members and convert to list

queryResult.AddRange(context.demog1.

Where(demographic => demographic.GeographyType == "ZIP Code").

OrderBy(demographic => demographic.GeographyId).

Skip(startIndex + i * blockSize).Take(blockSize).

Select(selector).ToList());

success = true;

}

catch (Exception ex)

{

Console.WriteLine("Error: {0}", ex.Message);

retries++;

if (retries > maxRetries) { throw; } // Rethrow without resetting the stack trace

}

}

}

return queryResult;

}

}

[Serializable]

public class DemographicsData : Microsoft.Numerics.Distributed.IO.IParallelReader<double>

{

private int samples;

private int blockSize = 100; // Maximum number of rows from query is a block of 100 rows

public DemographicsData(int samples)

{

if (samples % blockSize != 0)

{

throw new ArgumentException("Number of samples must be a multiple of " + this.blockSize);

}

this.samples = samples;

}

// Concatenate blocks of rows to form a distributed array

public int DistributedDimension

{

get { return 0; }

set { }

}

// Simple helper struct to keep track of block dimensions

[Serializable]

public struct BlockInfo

{

public int startIndex;

public int blocks;

}

// Attempt to divide blocks between ranks evenly

public Object[] ComputeAssignment(int ranks)

{

Object[] blockInfo = new Object[ranks];

BlockInfo thisBlockInfo;

int blocks = samples / blockSize;

int blocksPerRank = (int)Math.Ceiling((double)blocks / (double)ranks);

int remainingBlocks = blocks;

int startIndex = 0;

for (int i = 0; i < ranks; i++)

{

// Compute block info

thisBlockInfo.startIndex = startIndex;

thisBlockInfo.blocks = System.Math.Max(System.Math.Min(blocksPerRank, remainingBlocks),0);

startIndex = startIndex + thisBlockInfo.blocks * blockSize;

remainingBlocks = remainingBlocks - thisBlockInfo.blocks;

// Assign thisBlockInfo into blockInfo

blockInfo[i] = thisBlockInfo;

}

return blockInfo;

}

// Method that queries the data and converts results to numeric array

public msnl.NumericDenseArray<double> ReadWorker(Object blockInfoAsObject)

{

var demographicsRef = new DemographicsReference<demog1>();

// Select all data from each row

Func<demog1, demog1> selector = demographic => demographic;

// Get information about blocks to read

BlockInfo blockInfo = (BlockInfo)blockInfoAsObject;

int blocks = blockInfo.blocks;

int startIndex = blockInfo.startIndex;

long columns = 10;

msnl.NumericDenseArray<double> data;

if (blocks == 0)

{

// If number of blocks is 0 return empty array

data = msnl.NumericDenseArrayFactory.Create<double>(new long[] { 0, columns });

}

else

{

// Execute query

var dmList = demographicsRef.GetDemographicsData(startIndex, blocks, blockSize, selector);

var rows = dmList.Count();

data = msnl.NumericDenseArrayFactory.Zeros<double>(new long[] { rows, columns });

// Copy a subset of data to array columns

for (int i = 0; i < rows; i++)

{

data[i, 0] = (double)dmList[i].AverageHouseholdSize2010;

data[i, 1] = (double)dmList[i].HouseholdGrowth2010To2015Annual;

data[i, 2] = (double)dmList[i].MedianAge2010;

data[i, 3] = (double)dmList[i].MedianHomeValue2010;

data[i, 4] = (double)dmList[i].PctBachelorsDegOrHigher2010;

data[i, 5] = (double)dmList[i].PctOwnerOccupiedHousing2010;

data[i, 6] = (double)dmList[i].PctRenterOccupiedHousing2010;

data[i, 7] = (double)dmList[i].PctVacantHousing2010;

data[i, 8] = (double)dmList[i].PerCapitaIncome2010;

data[i, 9] = (double)dmList[i].PerCapitaIncomeGrowth2010To2015Annual;

}

}

return data;

}

// Serial method to query the data to get a mapping from array rows to geographies

public IList<ZipCodeAndLocation> GetRowMapping()

{

var demographicsRef = new DemographicsReference<ZipCodeAndLocation>();

// Select geography ID, name, and state abbreviation (the index is assigned below)

Func<demog1, ZipCodeAndLocation> selector =

demographic => new ZipCodeAndLocation(demographic.GeographyId, demographic.GeographyName, demographic.StateAbbreviation);

// Execute the query

var rowMapping = demographicsRef.GetDemographicsData(0, this.samples / this.blockSize, this.blockSize, selector);

// Construct mapping

for (int i = 0; i < rowMapping.Count; i++)

{

rowMapping[i].idx = i;

}

return rowMapping;

}

}

}

Comments

- Anonymous

February 12, 2012

Hi, Roope, A link to the source code for the complete project would also be useful. Thanks in advance, --rj