Build your own predictive model

You can create your own predictive web service based on a public model named Prediction Experiment for Dynamics 365 Business Central. This predictive model is available online in the Microsoft Azure AI Gallery.

To use the predictive model, follow these steps:

Open a browser and go to the Azure AI Gallery.

Search for Prediction Experiment for Dynamics 365 Business Central and then open the model in Microsoft Azure Machine Learning studio.

On the Prediction Experiment for Dynamics 365 Business Central page, select the Open in Studio (Classic) link.

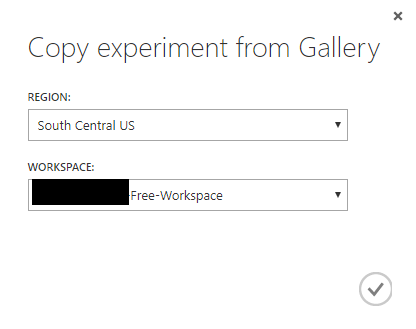

When prompted, use your Microsoft account to sign up for a workspace, and then copy the model.

The model is available in your workspace.

At the bottom of the page, select Run > Run Model to run the model. The process can take a couple of minutes to complete.

Publish your model as a web service by selecting Deploy Webservice.

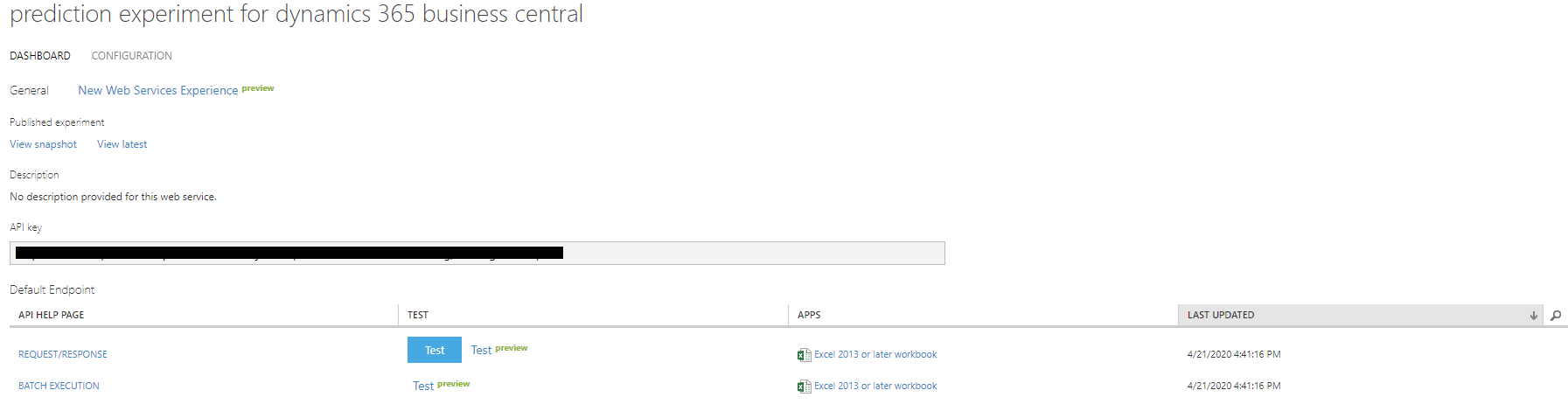

After the web service is created, it should appear similar to the following screenshot.

Note the information that is shown in the API URI and API Key fields. You can use these credentials for a cash flow setup.

Select the Search for page icon, which opens the Tell Me feature. Enter Late Payment Prediction Setup, and then select the related link.

Select the Use My Azure Subscription option.

On the My Model Credentials FastTab, enter the API URI (select REQUEST/RESPONSE and then copy the Request URI) and API Key for your model.

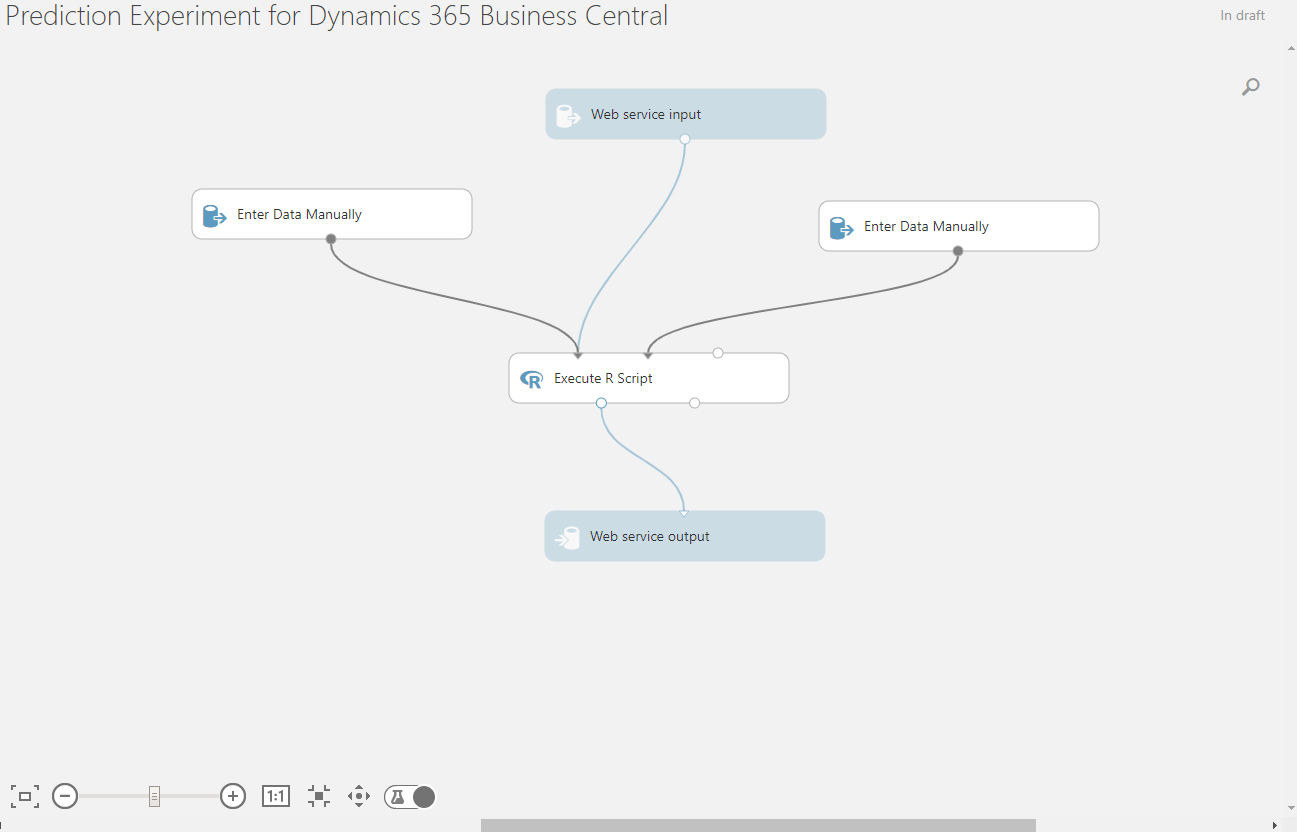
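Business Central uses the API URI and API key for you, but the same two values are what any HTTP client needs to call the web service directly. The following is a minimal sketch, assuming the usual request/response convention for Studio (classic) web services: a JSON POST with the key passed as a bearer token. The helper name `make_request`, the placeholder URI, and the placeholder key are illustrative and do not come from the model.

```python
import json
import urllib.request


def make_request(api_uri: str, api_key: str, body: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for the web service."""
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        api_uri,
        data=data,
        headers={
            "Content-Type": "application/json",
            # Assumption: the API key is sent as a bearer token.
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


# Placeholder values; replace them with the Request URI and API Key
# that you copied from your own web service page.
req = make_request(
    "https://example.invalid/execute?api-version=2.0",
    "YOUR_API_KEY",
    {"Inputs": {}, "GlobalParameters": {}},
)

# Sending is left commented out so that the sketch runs offline:
# with urllib.request.urlopen(req) as response:
#     result = json.loads(response.read())
```

Building the request separately from sending it makes it easy to inspect the headers and body before any call is made against your subscription.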

The Prediction Model for Microsoft Dynamics 365 Business Central helps you train, evaluate, and visualize models for prediction purposes. This model uses the Execute R Script module to run the R scripts that perform all the tasks. The two input modules define the expected structure of the input datasets. The first module defines the structure of the dataset, which is flexible and can accept up to 25 features. The second module defines the parameters.

When you call the API, you need to pass several parameters:

method (String) - Required parameter. Specifies the Machine Learning procedure to be used. The model supports the following methods:

train (the system decides whether to use classification or regression based on your dataset)

trainclassification

trainregression

predict

evaluate

plotmodel

Based on the selected method, you might need more parameters:

train_percent (Numeric) - Required for train, trainclassification, and trainregression methods. Specifies how to divide a dataset into training and validation sets. The value of 80 means that 80 percent of the dataset is used for training and 20 percent is used for validation of the result.

model (String; base64) - Required for predict, evaluate, and plotmodel methods. This parameter contains the serialized model, encoded with Base64. You get its value from the output of the train, trainclassification, or trainregression method.

captions (String) - Optional parameter that is used with the plotmodel method. This parameter contains comma-separated captions for features. If it's not passed, Feature1..Feature25 are used.

labels (String) - Optional parameter that is used with the plotmodel method. This parameter contains comma-separated alternative captions for labels. If it isn't passed, actual values are used.

dataset - Required for train, trainclassification, trainregression, evaluate, and predict methods. It consists of the following parameters:

Feature1..25 - The features are the descriptive attributes (also known as dimensions) that describe a single observation (a record in the dataset). Each feature can be an integer, decimal, Boolean, option, code, or string.

Label - This parameter is required, but it should be empty for the predict method. The label is what you're attempting to predict or forecast.
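The rules above (which parameters each method requires, the limit of 25 features, and the empty label for predict) can be checked before a call is made. The following is a minimal sketch, not the model's own code. The input port names `input1` and `input2`, the `ColumnNames`/`Values` layout, and the exact column spellings are assumptions; check them against the sample request shown on your web service's REQUEST/RESPONSE page.

```python
FEATURE_COUNT = 25
TRAIN_METHODS = {"train", "trainclassification", "trainregression"}
MODEL_METHODS = {"predict", "evaluate", "plotmodel"}
DATASET_METHODS = TRAIN_METHODS | {"evaluate", "predict"}


def build_body(method, rows=(), train_percent=None, model="", captions="", labels=""):
    """Validate the parameters for `method` and return a request body (dict).

    `rows` is a sequence of (features, label) pairs, where `features`
    is a list of up to 25 values.
    """
    if method not in TRAIN_METHODS | MODEL_METHODS:
        raise ValueError(f"unknown method: {method}")
    if method in TRAIN_METHODS and train_percent is None:
        raise ValueError("train_percent is required for training methods")
    if method in MODEL_METHODS and not model:
        raise ValueError("model is required for predict, evaluate, and plotmodel")
    if method in DATASET_METHODS and not rows:
        raise ValueError("dataset is required for this method")

    columns = [f"Feature{i}" for i in range(1, FEATURE_COUNT + 1)] + ["Label"]
    values = []
    for features, label in rows:
        if len(features) > FEATURE_COUNT:
            raise ValueError("at most 25 features are accepted")
        # Unused feature columns are sent as empty strings.
        padded = list(features) + [""] * (FEATURE_COUNT - len(features))
        # The Label column is always present but stays empty for predict.
        label_cell = "" if method == "predict" else str(label)
        values.append([str(v) for v in padded] + [label_cell])

    params = {
        "method": method,
        "train_percent": "" if train_percent is None else str(train_percent),
        "model": model,
        "captions": captions,
        "labels": labels,
    }
    return {
        "Inputs": {
            # Assumed port names: input1 = dataset, input2 = parameters.
            "input1": {"ColumnNames": columns, "Values": values},
            "input2": {"ColumnNames": list(params), "Values": [list(params.values())]},
        },
        "GlobalParameters": {},
    }


# Example: train on one record, using 80 percent of the data for training.
body = build_body("train", rows=[([1, 2.5, "A"], "yes")], train_percent=80)
```

Failing early with a `ValueError` avoids spending a web service call on a request that is missing a required parameter.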

Output of the service includes the following parameters:

model (String; base64) - Returned by the train, trainclassification, and trainregression methods. This parameter contains the serialized model, encoded with Base64.

quality (Numeric) - Returned by the train, trainclassification, trainregression, and evaluate methods. In the current experiment, you can use the Balanced Accuracy score as a measure of a model's quality.

plot (application/pdf;base64) - Returned by the plotmodel method. This parameter contains a visualization of the model in PDF format, encoded with Base64.

dataset - Returned by the predict method. It consists of the following parameters:

Feature1..25 - This parameter is the same as the input.

Label - The predicted value.

Confidence - The probability that the classification is correct.
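Because the plot is returned as Base64 text and the predictions as table rows, a client usually has to decode them before use. The following is a minimal sketch of that post-processing. The helper names and the sample values are hypothetical, and it assumes that you have already extracted the column names and rows from the service response, whose outer envelope is not shown here.

```python
import base64


def decode_plot(plot_b64: str) -> bytes:
    """Decode the Base64 `plot` value and check that it looks like a PDF."""
    pdf = base64.b64decode(plot_b64)
    if not pdf.startswith(b"%PDF"):
        raise ValueError("decoded plot is not a PDF document")
    return pdf


def read_predictions(column_names, rows):
    """Pair each row with its column names and keep Label and Confidence."""
    predictions = []
    for row in rows:
        record = dict(zip(column_names, row))
        predictions.append(
            {"label": record["Label"], "confidence": float(record["Confidence"])}
        )
    return predictions


# Made-up sample data for illustration only.
sample_plot = base64.b64encode(b"%PDF-1.4 sample").decode("ascii")
pdf_bytes = decode_plot(sample_plot)

sample_columns = ["Feature1", "Label", "Confidence"]
sample_rows = [["12", "late", "0.87"], ["3", "on time", "0.64"]]
predictions = read_predictions(sample_columns, sample_rows)
```

The decoded bytes can be written to a file with a `.pdf` extension. The `model` output needs no decoding on the client side, because you pass it back unchanged as the `model` input parameter.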