Deploy a Storage Spaces Direct hyper-converged cluster in VMM

This article describes how to set up a hyper-converged cluster running Storage Spaces Direct (S2D) in System Center Virtual Machine Manager (VMM).

You can deploy a hyper-converged S2D cluster in one of two ways. You can provision a Hyper-V cluster from existing Hyper-V hosts and enable S2D on it, or you can provision the cluster from bare-metal servers.

You can't currently enable S2D in a hyper-converged deployment on a Hyper-V cluster that was deployed from bare-metal computers running the Nano Server operating system.

Note

You must enable S2D before adding the storage provider to VMM.

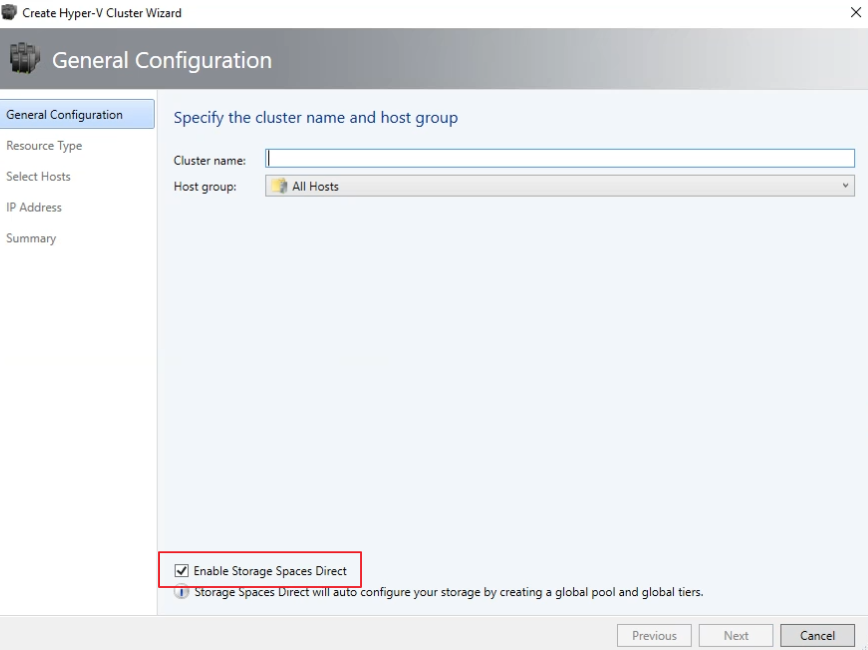

To enable S2D, go to General Configuration > Specify the cluster name and host group, and select the Enable Storage Spaces Direct option as shown below:

After you enable a cluster with S2D, VMM does the following:

- The File Server role and the Failover Clustering feature are enabled.

- Storage Replica and Data Deduplication are enabled.

- The cluster is optionally validated and created.

- S2D is enabled, and a storage array is created with the same name you provide in the wizard.

If you use PowerShell to create a hyper-converged cluster, run Enable-ClusterS2D with the -Autoconfig parameter set to $true. This creates the pool and the storage tiers automatically.
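For comparison, the native PowerShell path might look like the following minimal sketch. It assumes the FailoverClusters module is installed on the machine where you run it. The function name New-S2DClusterSketch and its parameters are hypothetical. Test-Cluster, New-Cluster, and Enable-ClusterStorageSpacesDirect (the full name of Enable-ClusterS2D) are the real cmdlets it calls. The block only defines the function, and nothing runs until you call it.

```powershell
# Minimal sketch (hypothetical helper): create a hyper-converged S2D cluster with the
# FailoverClusters cmdlets instead of the VMM wizard. Assumes the FailoverClusters
# module (RSAT-Clustering-PowerShell) is available where this runs.
function New-S2DClusterSketch {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [string]   $ClusterName,
        [Parameter(Mandatory)] [string[]] $Nodes,
        [Parameter(Mandatory)] [string]   $StaticAddress
    )

    # Validate the nodes, including the S2D-specific validation tests.
    Test-Cluster -Node $Nodes -Include 'Storage Spaces Direct', 'Inventory', 'Network', 'System Configuration'

    if ($PSCmdlet.ShouldProcess($ClusterName, 'Create cluster and enable S2D')) {
        # -NoStorage prevents New-Cluster from adding eligible disks to the cluster.
        New-Cluster -Name $ClusterName -Node $Nodes -StaticAddress $StaticAddress -NoStorage

        # With -Autoconfig $true, S2D creates the storage pool and storage tiers itself.
        Enable-ClusterStorageSpacesDirect -CimSession $ClusterName -Autoconfig $true -Confirm:$false
    }
}
```

Example call, with placeholder values: New-S2DClusterSketch -ClusterName 'S2DCluster01' -Nodes 'HV01','HV02','HV03','HV04' -StaticAddress '10.0.0.50'. If you take this route, you still need to enable S2D before you add the storage provider to VMM.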

Before you start

- Ensure that you're running VMM 2016 or later.

- Hyper-V hosts in the cluster must have the Hyper-V role installed and be configured to host VMs. With VMM 2016, the hosts must be running Windows Server 2016 or later. With VMM 2019 and later, they must be running Windows Server 2019 or later.

Note

Support for Azure Stack Hyper Converged Infrastructure (HCI) depends on the VMM version:

- VMM 2019 UR3 and later supports Azure Stack HCI, version 20H2.

- VMM 2022 supports Azure Stack HCI, versions 20H2 and 21H2.

- VMM 2025 supports Azure Stack HCI, versions 22H2 and 23H2.
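The following minimal sketch checks the OS build and the Hyper-V role on candidate hosts before you build the cluster. The function names are hypothetical. It assumes that PowerShell remoting and the ServerManager module (Get-WindowsFeature) are available. The build numbers are the released builds of Windows Server 2016 (14393) and Windows Server 2019 (17763). The script can't verify that a host is "configured to host VMs", so check that part yourself.

```powershell
# Minimal sketch (hypothetical helpers): check S2D host prerequisites.
# Windows Server 2016 = build 14393, Windows Server 2019 = build 17763.

function Test-MinimumBuild {
    param(
        [Parameter(Mandatory)] [string] $BuildNumber,   # Win32_OperatingSystem.BuildNumber is a string
        [Parameter(Mandatory)] [int]    $MinimumBuild
    )
    return ([int]$BuildNumber -ge $MinimumBuild)
}

function Test-S2DHostPrerequisite {
    param(
        [Parameter(Mandatory)] [string[]] $ComputerName,
        [int] $MinimumBuild = 17763   # use 14393 with VMM 2016
    )
    foreach ($computer in $ComputerName) {
        $os     = Get-CimInstance -ClassName Win32_OperatingSystem -ComputerName $computer
        $hyperV = Get-WindowsFeature -Name Hyper-V -ComputerName $computer
        # One result object per host; review BuildOk and HyperVInstalled.
        [pscustomobject]@{
            ComputerName    = $computer
            Caption         = $os.Caption
            BuildNumber     = $os.BuildNumber
            BuildOk         = Test-MinimumBuild -BuildNumber $os.BuildNumber -MinimumBuild $MinimumBuild
            HyperVInstalled = [bool]$hyperV.Installed
        }
    }
}
```

For example, Test-S2DHostPrerequisite -ComputerName 'HV01','HV02' | Format-Table lists one row per host. The host names are placeholders.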

After these prerequisites are in place, you provision a cluster and set up storage resources on it. You can then deploy VMs on the cluster, or export the storage to other resources by using a Scale-Out File Server (SOFS).

Step 1: Provision the cluster

You can provision a cluster in the following ways:

- From Hyper-V hosts

- From bare metal machines

Follow these steps to provision a cluster from Hyper-V hosts:

- If the Hyper-V hosts aren't in the VMM fabric yet, add them first. If they're already in the VMM fabric, skip to the next step.

- Follow the instructions for provisioning a cluster from standalone Hyper-V hosts managed in the VMM fabric.

Note

- When you set up the cluster, make sure that you select the Enable Storage Spaces Direct option on the General Configuration page of the Create Hyper-V Cluster wizard. In Resource Type, select Existing servers running a Windows Server operating system, and then select the Hyper-V hosts to add to the cluster.

- If S2D is enabled, you must validate the cluster. Skipping this step isn't supported.
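The same two steps can be scripted with the VirtualMachineManager module. The minimal sketch below assumes an existing host group and Run As account. The helper name and all argument values are hypothetical. The -EnableS2D switch on Install-SCVMHostCluster is, as far as we know, the PowerShell counterpart of the Enable Storage Spaces Direct option, but confirm it with Get-Help Install-SCVMHostCluster in your VMM version before you rely on it.

```powershell
# Minimal sketch (hypothetical helper): add standalone Hyper-V hosts to the VMM fabric,
# then create an S2D-enabled cluster from them. Assumes the VirtualMachineManager module
# and a connection to the VMM server; verify parameters with Get-Help in your VMM version.
function New-VmmS2DClusterSketch {
    param(
        [Parameter(Mandatory)] [string]   $HostGroupName,
        [Parameter(Mandatory)] [string]   $RunAsAccountName,
        [Parameter(Mandatory)] [string[]] $HostNames,
        [Parameter(Mandatory)] [string]   $ClusterName,
        [Parameter(Mandatory)] [string]   $ClusterIPAddress,
        [switch] $HostsAlreadyManaged
    )

    $hostGroup    = Get-SCVMHostGroup -Name $HostGroupName
    $runAsAccount = Get-SCRunAsAccount -Name $RunAsAccountName

    # Bring the hosts under VMM management unless they're already in the fabric.
    if (-not $HostsAlreadyManaged) {
        foreach ($name in $HostNames) {
            Add-SCVMHost -ComputerName $name -VMHostGroup $hostGroup -Credential $runAsAccount
        }
    }

    # Create the cluster with S2D enabled. Validation is required when S2D is enabled,
    # so this deliberately does not pass -SkipValidation.
    $vmHosts = foreach ($name in $HostNames) { Get-SCVMHost -ComputerName $name }
    $clusterParams = @{
        ClusterName      = $ClusterName
        VMHost           = $vmHosts
        Credential       = $runAsAccount
        ClusterIPAddress = $ClusterIPAddress
        EnableS2D        = $true
    }
    Install-SCVMHostCluster @clusterParams
}
```

Example call, with placeholder values: New-VmmS2DClusterSketch -HostGroupName 'All Hosts' -RunAsAccountName 'HostAdmin' -HostNames 'HV01.contoso.com','HV02.contoso.com' -ClusterName 'S2DCluster01' -ClusterIPAddress '10.0.0.50'.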

Step 2: Set up networking for the cluster

After the cluster is provisioned and managed in the VMM fabric, set up networking for the cluster nodes.

- Start by creating a logical network to mirror your physical management network.

- Set up a logical switch with Switch Embedded Teaming (SET) enabled so that the switch is virtualization-aware. Connect this switch to the management logical network. Add all the host virtual adapters required to provide access to the management network or to configure storage networking. S2D relies on the network for communication between hosts, so RDMA-capable adapters are recommended.

- Create VM networks.
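After VMM deploys the logical switch, you might want to confirm the result on each node. The minimal sketch below does this without changing any settings that VMM manages. It assumes PowerShell remoting to the nodes and the Hyper-V and NetAdapter modules on them. The function name is hypothetical.

```powershell
# Minimal sketch (hypothetical helper): report, per node, which virtual switches use SET,
# which host (management OS) vNICs exist, and which network adapters, physical or
# virtual, have RDMA enabled. Read-only.
function Get-S2DNodeNetworkSummary {
    param([Parameter(Mandatory)] [string[]] $ComputerName)

    Invoke-Command -ComputerName $ComputerName -ScriptBlock {
        [pscustomobject]@{
            SetSwitches  = (@(Get-VMSwitch | Where-Object EmbeddedTeamingEnabled).Name -join ', ')
            HostVNics    = (@(Get-VMNetworkAdapter -ManagementOS).Name -join ', ')
            RdmaAdapters = (@(Get-NetAdapterRdma | Where-Object Enabled).Name -join ', ')
        }
    }
}
```

For example, Get-S2DNodeNetworkSummary -ComputerName 'HV01','HV02' | Format-List. Invoke-Command adds a PSComputerName property, so each result shows which node it came from.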

Note

The following step applies to VMM 2019 UR1 and later. If you're running VMM 2016, skip to Step 4.

Step 3: Configure DCB settings on the S2D cluster

Note

Configuring DCB settings is optional. It helps achieve high performance during the S2D cluster creation workflow. If you don't want to configure DCB settings, skip to Step 4.

Recommendations

If you have vNICs deployed, we recommend that you map each vNIC to its corresponding pNIC for optimal performance. The operating system sets vNIC-to-pNIC affinity randomly, so multiple vNICs can end up mapped to the same pNIC. To avoid this, we recommend that you set the affinity between vNICs and pNICs manually.
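One way to set that affinity is the Hyper-V cmdlet Set-VMNetworkAdapterTeamMapping. The minimal sketch below first checks that no pNIC is assigned to more than one vNIC, and only then applies the mapping. The helper names and the adapter names SMB1, pNIC1, and pNIC2 are hypothetical examples. Run it on each node.

```powershell
# Minimal sketch (hypothetical helpers): pin each host vNIC to its own pNIC.
# Adapter names are examples; replace them with the names on your nodes.
$vNicToPNic = [ordered]@{
    'SMB1' = 'pNIC1'
    'SMB2' = 'pNIC2'
}

function Test-OneToOneMapping {
    param([Parameter(Mandatory)] [System.Collections.IDictionary] $Mapping)
    # A pNIC that appears more than once would carry more than one vNIC.
    $duplicates = $Mapping.Values | Group-Object | Where-Object Count -gt 1
    return (-not $duplicates)
}

function Set-VNicAffinity {
    param([Parameter(Mandatory)] [System.Collections.IDictionary] $Mapping)
    if (-not (Test-OneToOneMapping -Mapping $Mapping)) {
        throw 'Each pNIC must be mapped to at most one vNIC.'
    }
    foreach ($vNic in $Mapping.Keys) {
        Set-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName $vNic `
            -PhysicalNetAdapterName $Mapping[$vNic]
    }
}
```

On a node, Set-VNicAffinity -Mapping $vNicToPNic applies the example mapping. Checking the whole mapping before calling the cmdlet means a mistake fails before anything changes, not halfway through.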

When you create a network adapter port profile, we recommend that you allow IEEE priority. You can also set the IEEE priority by using the following PowerShell commands:

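```powershell
# Put the SMB2 host vNIC in access mode on VLAN 101.
Set-VMNetworkAdapterVlan -VMNetworkAdapterName SMB2 -VlanId 101 -Access -ManagementOS
# Enable IEEE 802.1p priority tagging on the same vNIC.
Set-VMNetworkAdapter -ManagementOS -Name SMB2 -IeeePriorityTag On
```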

Before you begin

Ensure the following:

- You're running VMM 2019 or later.

- Hyper-V hosts in the cluster are running Windows Server 2019 or later with the Hyper-V role installed and configured to host VMs.

Note

- You can configure DCB settings on both Hyper-V S2D clusters (hyper-converged) and SOFS S2D clusters (disaggregated).

- You can configure the DCB settings during the cluster creation workflow or on an existing cluster.

- You can't configure DCB settings during SOFS cluster creation. You can configure them only on an existing SOFS cluster, and all the nodes of that cluster must be managed by VMM.

- Configuring DCB settings during cluster creation is supported only when the cluster is created from existing Windows servers. It isn't supported with the bare-metal operating system deployment workflow.

Use the following steps to configure DCB settings:

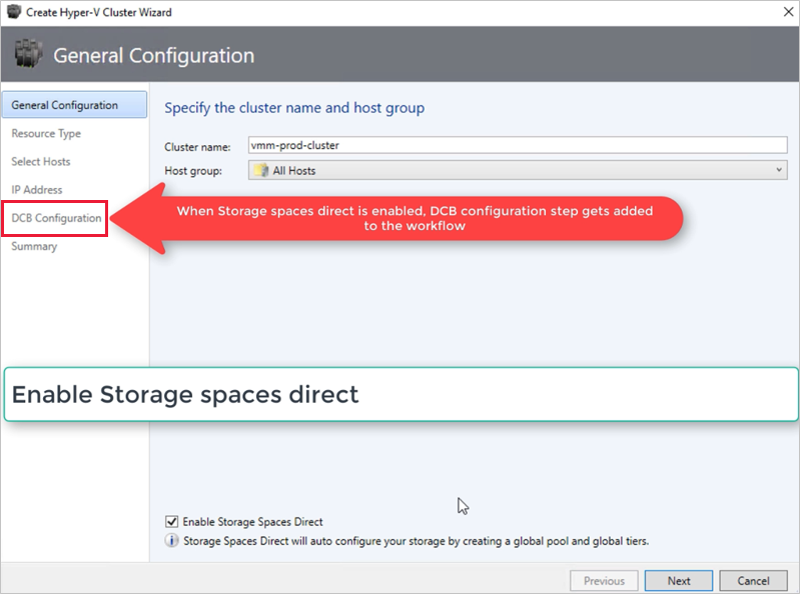

Create a new Hyper-V cluster, and select Enable Storage Spaces Direct. DCB Configuration option gets added to the Hyper-V cluster creation workflow.

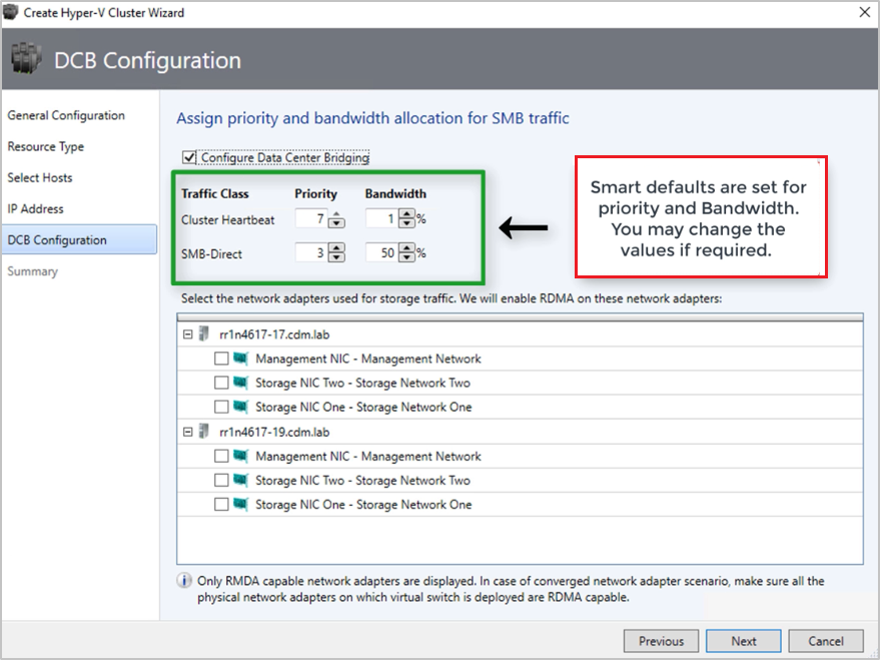

In DCB configuration, select Configure Data Center Bridging.

Provide Priority and Bandwidth values for SMB-Direct and Cluster Heartbeat traffic.

Note

Default values are assigned to Priority and Bandwidth. Customize these values to suit your environment.

Default values:

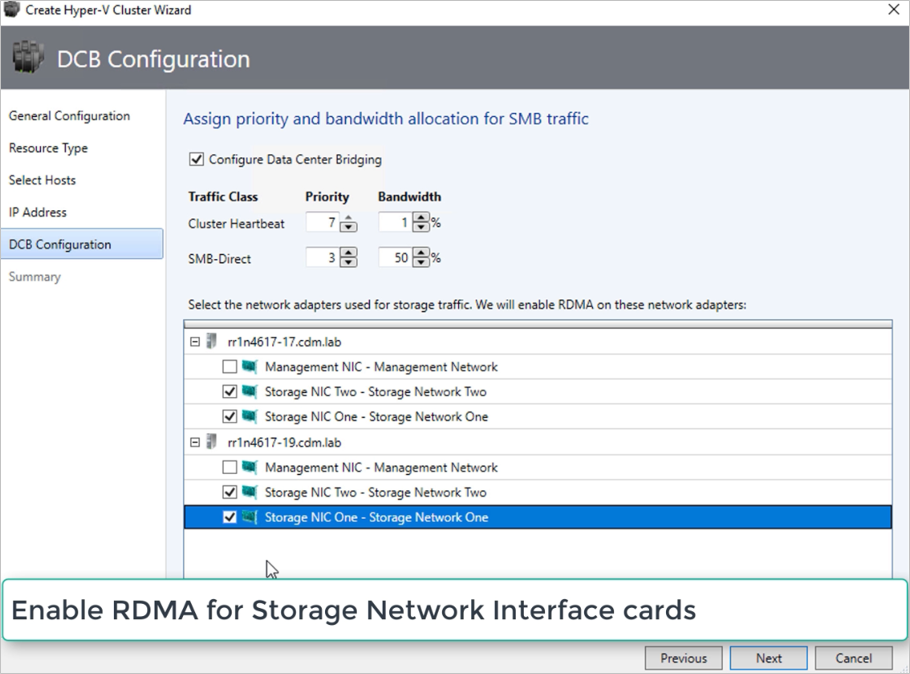

Traffic Class Priority Bandwidth (%) Cluster Heartbeat 7 1 SMB-Direct 3 50 Select the network adapters used for storage traffic. RDMA is enabled on these network adapters.

Note

In a converged NIC scenario, select the storage vNICs. The underlying pNICs must be RDMA capable for vNICs to be displayed and available for selection.

Review the summary and select Finish.

VMM creates the S2D cluster and configures the DCB parameters on all the S2D nodes.
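If you customize the Priority and Bandwidth values, you can sanity-check them before you enter them in the wizard. The minimal sketch below is a hypothetical helper, and its rules are our assumptions, not VMM's documented validation:

- Priorities are IEEE 802.1p values from 0 to 7.
- The two traffic classes use different priorities.
- Each class gets between 1 and 99 percent.
- The reserved total stays below 100 percent so that other traffic keeps a share.

```powershell
# Minimal sketch (hypothetical helper). Assumed rules: 802.1p priorities 0-7, distinct
# priorities per class, 1-99% per class, and a reserved total below 100%.
function Test-DcbPlan {
    param([Parameter(Mandatory)] [object[]] $TrafficClass)

    $problems = [System.Collections.Generic.List[string]]::new()
    foreach ($tc in $TrafficClass) {
        if ($tc.Priority -lt 0 -or $tc.Priority -gt 7) {
            $problems.Add("$($tc.Name): priority $($tc.Priority) is outside 0-7")
        }
        if ($tc.BandwidthPercentage -lt 1 -or $tc.BandwidthPercentage -gt 99) {
            $problems.Add("$($tc.Name): bandwidth $($tc.BandwidthPercentage)% is outside 1-99")
        }
    }
    if (@($TrafficClass.Priority | Select-Object -Unique).Count -ne $TrafficClass.Count) {
        $problems.Add('Two traffic classes share the same priority')
    }
    $total = ($TrafficClass | Measure-Object -Property BandwidthPercentage -Sum).Sum
    if ($total -ge 100) {
        $problems.Add("Reserved bandwidth totals $total%, leaving nothing for other traffic")
    }
    # Emits one string per problem; emits nothing when the plan passes.
    return $problems
}

# The VMM defaults: Cluster Heartbeat at priority 7 with 1%, SMB-Direct at priority 3 with 50%.
$defaultPlan = @(
    [pscustomobject]@{ Name = 'Cluster Heartbeat'; Priority = 7; BandwidthPercentage = 1 }
    [pscustomobject]@{ Name = 'SMB-Direct';        Priority = 3; BandwidthPercentage = 50 }
)
```

Wrap the call in @() to count the findings. For example, @(Test-DcbPlan -TrafficClass $defaultPlan).Count is 0 for the defaults.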

Note

- You can configure DCB settings on existing Hyper-V S2D clusters from the DCB configuration page of the cluster properties.

- Out-of-band changes to DCB settings on any of the nodes make the S2D cluster noncompliant in VMM. To enforce the DCB settings configured in VMM on the cluster nodes, use the Remediate option on the DCB configuration page of the cluster properties.

Step 4: Manage the pool and create CSVs

You can now modify the storage pool settings and create virtual disks and CSVs.

Select Fabric > Storage > Arrays.

Right-click the cluster > Manage Pool, and select the storage pool that was created by default. You can change the default name and add a classification.
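Before you create volumes, it can help to see which pool and tiers S2D autoconfiguration created. This shows which tiers and resiliency settings are available for the Create Volume Wizard. The minimal, read-only sketch below uses the Storage module cmdlets Get-StoragePool and Get-StorageTier against the cluster. The function name is hypothetical, and it assumes you can open a CIM session to the cluster name.

```powershell
# Minimal sketch (hypothetical helper): summarize the non-primordial storage pools and
# the storage tiers on an S2D cluster. Read-only.
function Get-S2DPoolSummary {
    param([Parameter(Mandatory)] [string] $ClusterName)

    $session = New-CimSession -ComputerName $ClusterName
    try {
        [pscustomobject]@{
            Pools = @(Get-StoragePool -CimSession $session -IsPrimordial $false |
                      Select-Object FriendlyName, HealthStatus, Size, AllocatedSize)
            Tiers = @(Get-StorageTier -CimSession $session |
                      Select-Object FriendlyName, MediaType, ResiliencySettingName)
        }
    }
    finally {
        # Always close the CIM session, even if a query fails.
        Remove-CimSession -CimSession $session
    }
}
```

For example: $s = Get-S2DPoolSummary -ClusterName 'S2DCluster01'; $s.Tiers | Format-Table. The cluster name is a placeholder. Returning one object keeps pools and tiers apart, so each formats as its own table.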

To create a CSV, right-click the cluster > Properties > Shared Volumes.

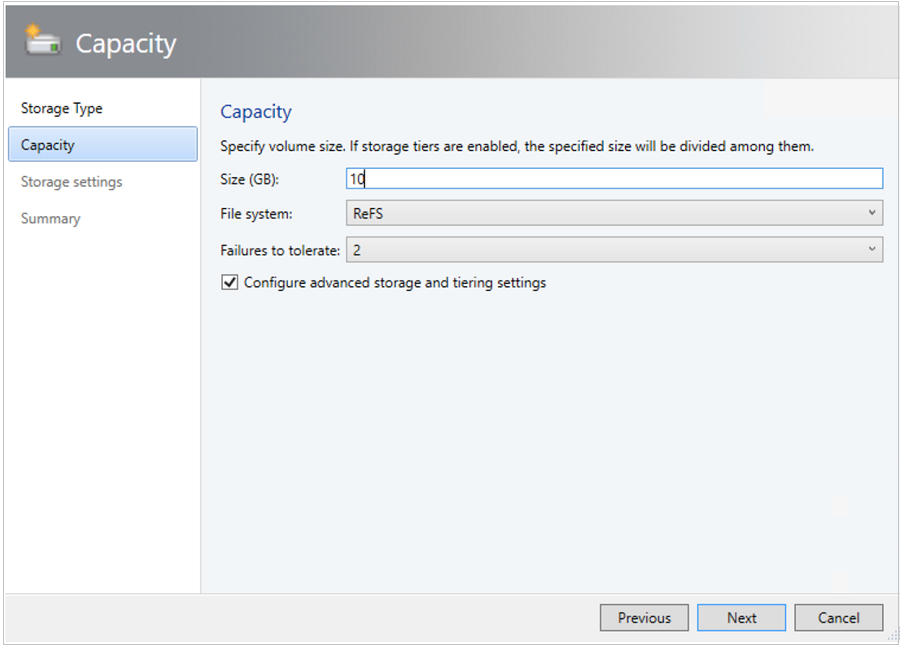

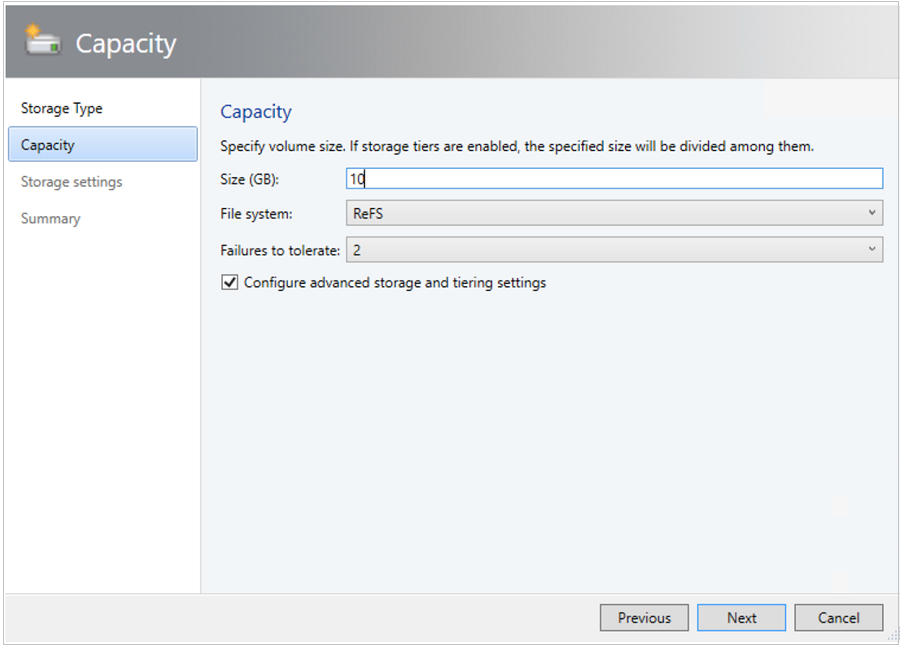

In the Create Volume Wizard > Storage Type, specify the volume name and select the storage pool.

In Capacity, you can specify the volume size, file system, and resiliency settings.

Select Configure advanced storage and tiering settings to set up these options.

Select Next.

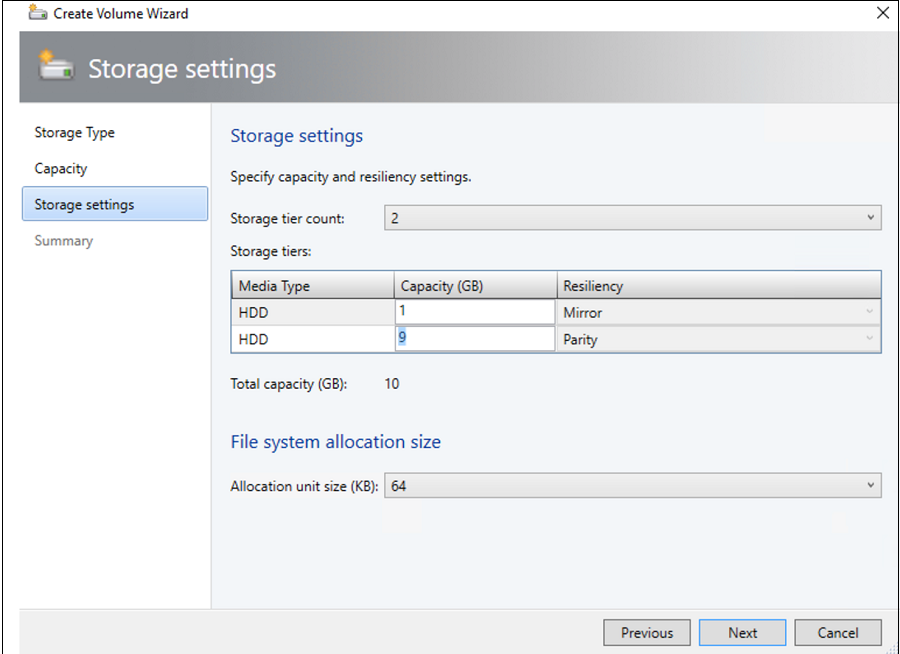

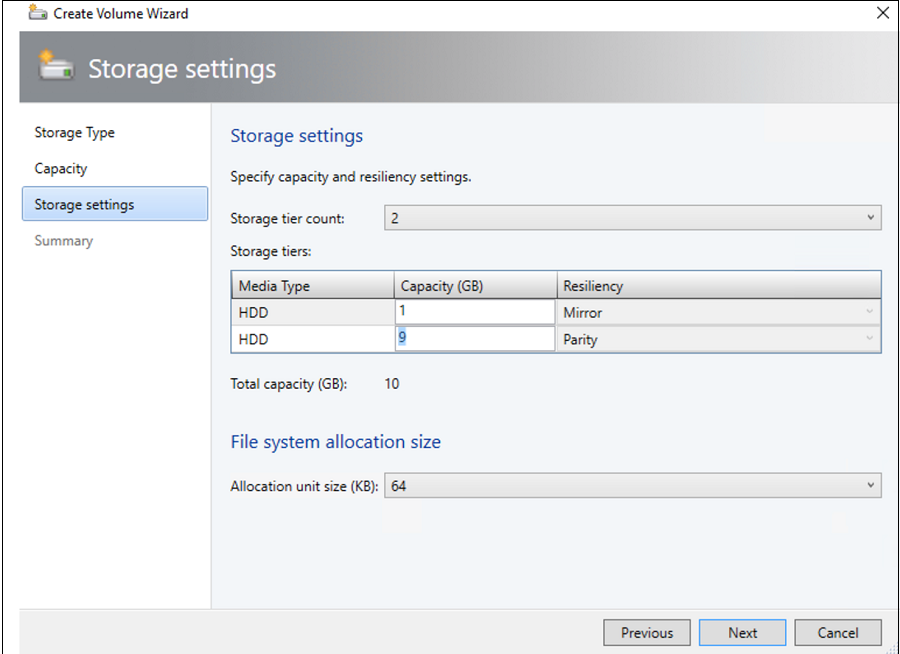

In Storage Settings, specify the storage tier split, capacity, and resiliency settings.

In Summary, verify the settings and finish the wizard. A virtual disk will be created automatically when you create the volume.

If you use PowerShell, run Enable-ClusterS2D with the -Autoconfig parameter set to $true. This creates the pool and the storage tiers automatically.
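When working directly against the cluster instead of through the wizard, you can create a tiered CSV with New-Volume. The minimal sketch below has hypothetical helper names. It assumes the pool still matches 'S2D*', the name pattern that S2D autoconfiguration uses; adjust it if you renamed the pool. It also assumes the tiers are named 'Performance' and 'Capacity'. Tier names vary by OS version and hardware, so check them with Get-StorageTier. Reading the wizard's "storage tier split" as a percentage is also our assumption.

```powershell
# Minimal sketch (hypothetical helpers): split a volume's size across two tiers, then
# create a tiered CSV with New-Volume. Pool and tier names are assumptions.
function Get-TierSplit {
    param(
        [Parameter(Mandatory)] [uint64] $TotalSize,
        [Parameter(Mandatory)] [ValidateRange(1, 99)] [int] $PerformancePercent
    )
    # Decimal arithmetic keeps the byte counts exact.
    $performance = [uint64][math]::Floor([decimal]$TotalSize * $PerformancePercent / 100)
    # The capacity tier gets the remainder, so the two sizes add up exactly.
    [pscustomobject]@{ Performance = $performance; Capacity = $TotalSize - $performance }
}

function New-TieredCsvSketch {
    param(
        [Parameter(Mandatory)] [string] $ClusterName,
        [Parameter(Mandatory)] [string] $VolumeName,
        [Parameter(Mandatory)] [uint64] $Size,
        [ValidateRange(1, 99)] [int] $PerformancePercent = 20,
        [string]   $PoolName  = 'S2D*',
        [string[]] $TierNames = @('Performance', 'Capacity')
    )
    $split = Get-TierSplit -TotalSize $Size -PerformancePercent $PerformancePercent
    $volumeParams = @{
        CimSession               = $ClusterName
        StoragePoolFriendlyName  = $PoolName
        FriendlyName             = $VolumeName
        FileSystem               = 'CSVFS_ReFS'
        StorageTierFriendlyNames = $TierNames
        StorageTierSizes         = @($split.Performance, $split.Capacity)
    }
    New-Volume @volumeParams
}
```

For example, New-TieredCsvSketch -ClusterName 'S2DCluster01' -VolumeName 'Volume01' -Size 1TB would put 20 percent of 1 TB on the first tier. The names are placeholders.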

Step 5: Deploy VMs on the cluster

In a hyper-converged topology, VMs can be directly deployed on the cluster. Their virtual hard disks are placed on the volumes you created using S2D. You create and deploy these VMs just as you would any other VM.
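After deployment, you might want to confirm that each VM's virtual hard disks really sit on the S2D volumes and not on a node's local disk. The minimal sketch below does this. The helper names are hypothetical. It assumes that CSVs are mounted under C:\ClusterStorage\, which holds when the system drive is C:.

```powershell
# Minimal sketch (hypothetical helpers): list VM virtual hard disks that are not on a
# Cluster Shared Volume. Assumes the CSV root is C:\ClusterStorage\ (system drive C:).
function Test-IsCsvPath {
    param([Parameter(Mandatory)] [string] $Path)
    # -like is case-insensitive by default.
    return ($Path -like 'C:\ClusterStorage\*')
}

function Get-VhdOutsideCsv {
    param([Parameter(Mandatory)] [string[]] $ComputerName)
    foreach ($node in $ComputerName) {
        Get-VM -ComputerName $node |
            Get-VMHardDiskDrive |
            Where-Object { -not (Test-IsCsvPath -Path $_.Path) } |
            Select-Object ComputerName, VMName, Path
    }
}
```

For example, Get-VhdOutsideCsv -ComputerName 'HV01','HV02' returns nothing when every disk is on a CSV. The node names are placeholders.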
