Security domain: operational security

The operational security domain ensures ISVs implement a strong set of security mitigation techniques against a myriad of threats faced from threat actors. This is designed to protect the operating environment and software development processes to build secure environments.

Awareness training

Security awareness training is important for organizations as it helps to minimize the risks stemming from human error, which is involved in more than 90% of security breaches. It helps employees understand the importance of security measures and procedures. When security awareness training is offered it reinforces the importance of a security-aware culture where users know how to recognize and respond to potential threats. An effective security awareness training program should include content that covers a wide range of topics and threats that users might face, such as social engineering, password management, privacy, and physical security.

Control No. 1

Please provide evidence that:

The organization provides established security awareness training to information system users (including managers, senior executives, and contractors):

As part of initial training for new users.

When required by information system changes.

Organization-defined frequency of awareness training.

Documents and monitors individual information system security awareness activities and retains individual training records over an organization-defined frequency.

Intent: training for new users

This subpoint focuses on establishing a mandatory security awareness training program designed for all employees and for new employees who join the organization, irrespective of their role. This includes managers, senior executives, and contractors. The security awareness program should encompass a comprehensive curriculum designed to impart foundational knowledge about the organization’s information security protocols, policies, and best practices to ensure that all members of the organization are aligned with a unified set of security standards, creating a resilient information security environment.

Guidelines: training for new users

Most organizations will utilize a combination of platform-based security awareness training and administrative documentation such as policy documentation and records to track the completion of the training for all employees across the organization. Evidence supplied must show that employees have completed the training, and this should be backed up with supporting policies/procedures outlining the security awareness requirement.

Example evidence: training for new users

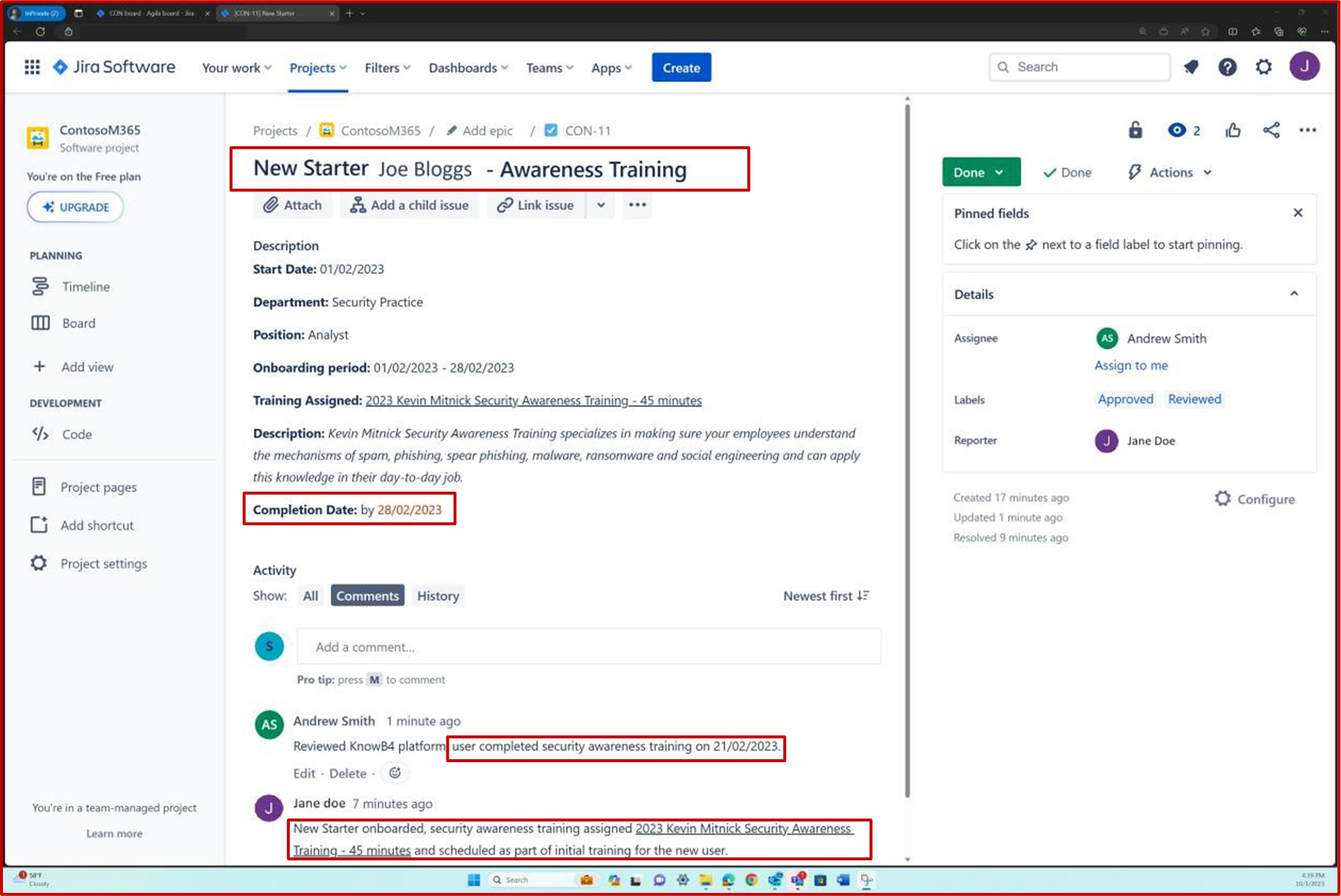

The following screenshot shows the Confluence platform being used to track the onboarding of new employees. A JIRA ticket was raised for the new employee including their assignment, role, department, etc. With the new starter process, the security awareness training has been selected and assigned to the employee which needs to be completed by the due date of 28th of February 2023.

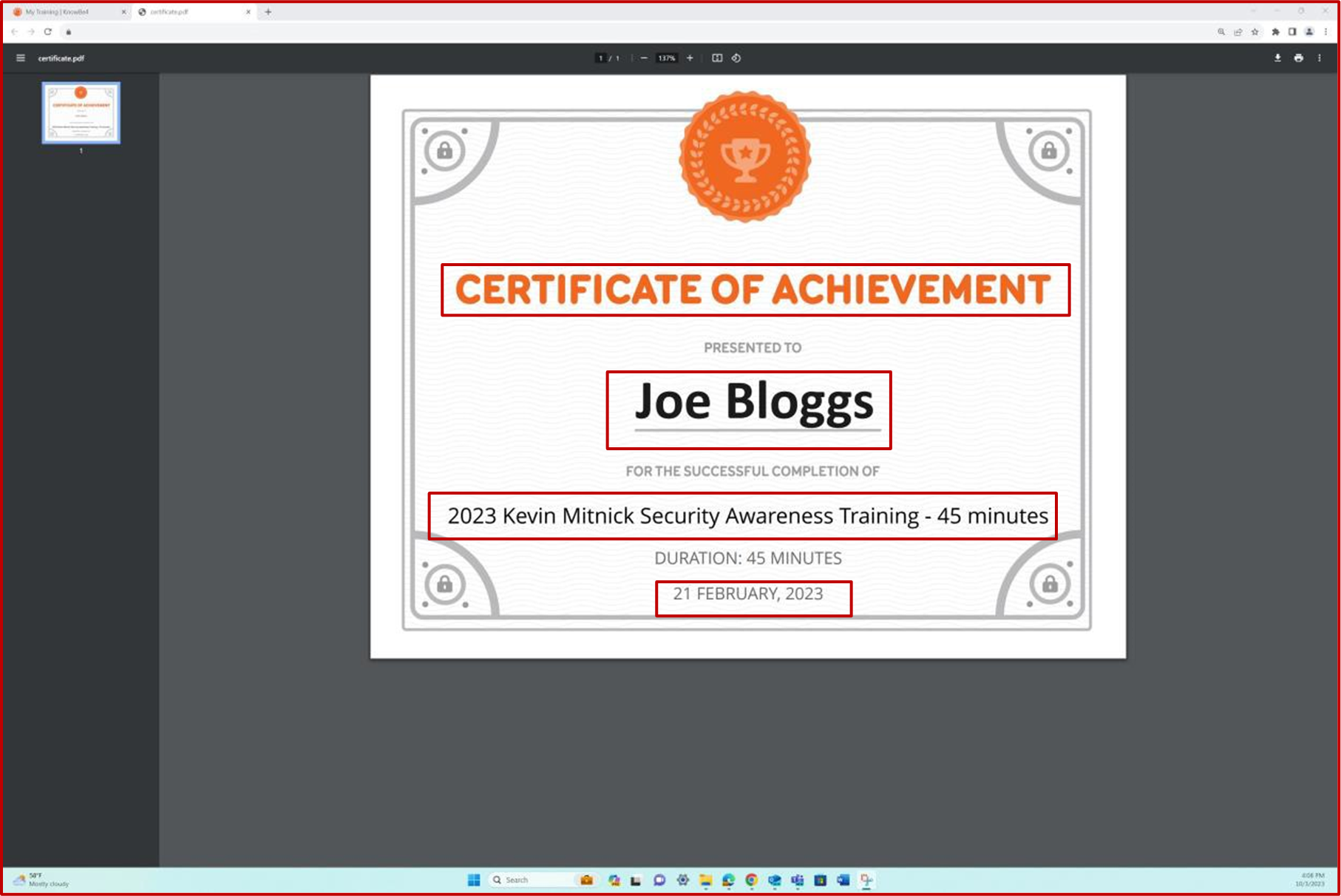

The screenshot shows the certificate of completion generated by Knowb4 upon the employee’s successful completion of the security awareness training. The completion date is 21st of February 2023 which is within the assigned period.

Intent: information system changes.

The objective of this subpoint is to ensure that adaptive security awareness training is initiated whenever there are significant changes to the organization’s information systems. The modifications could arise due to software updates, architectural changes, or new regulatory requirements. The updated training session ensures that all employees are informed about the new changes and the resulting impact on security measures, allowing them to adapt their actions and decisions accordingly. This proactive approach is vital for protecting the organization’s digital assets from vulnerabilities that could arise from system changes.

Guidelines: information system changes.

Most organizations will utilize a combination of platform-based security awareness training, and administrative documentation such as policy documentation and records to track the completion of the training for all employees. Evidence provided must demonstrate that various employees have completed the training based on different changes to the organization’s systems.

Example evidence: information system changes.

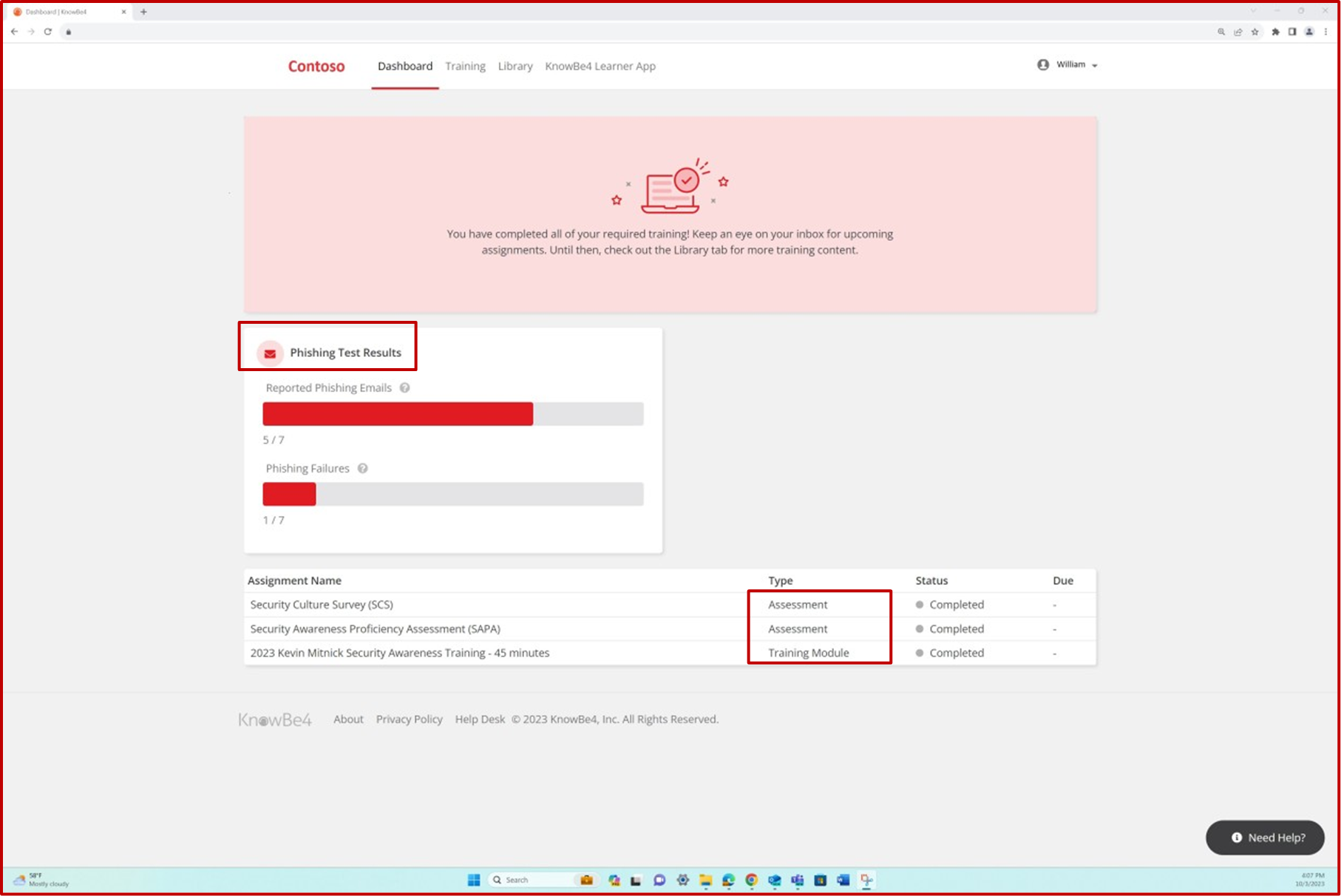

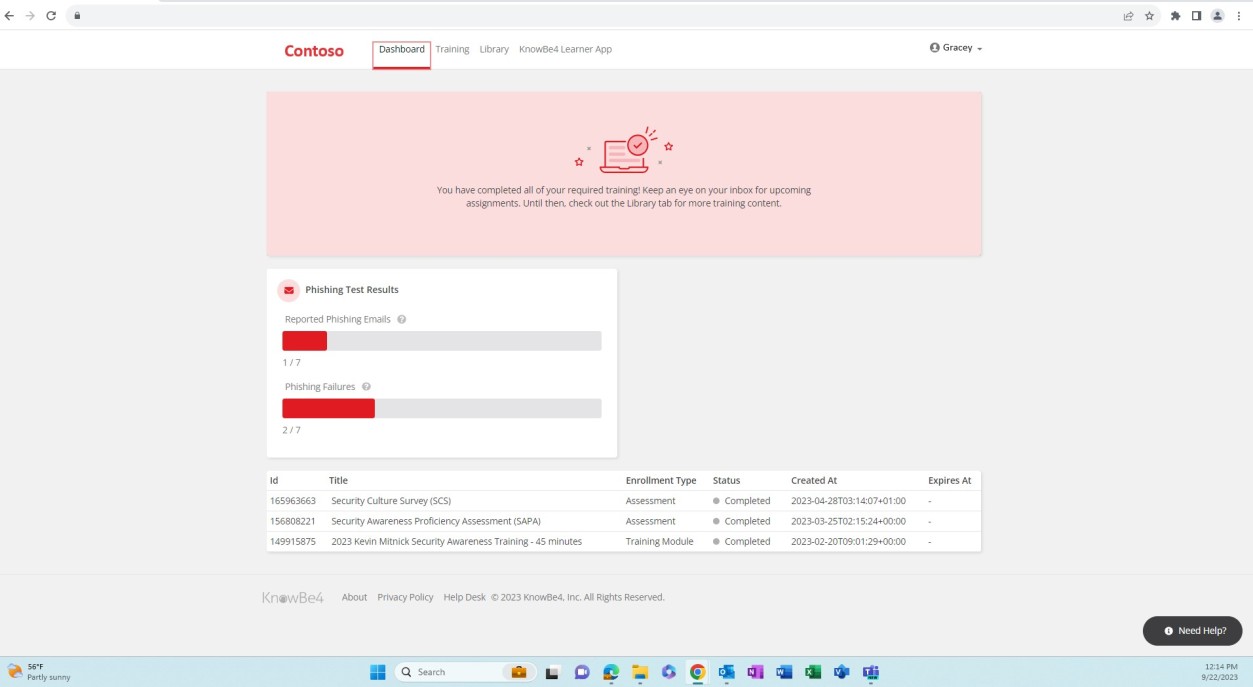

The next screenshots show the assignment of security awareness training to various employees and demonstrates that phishing simulations occur.

The platform is used to assign new training whenever a system change occurs, or a test is failed.

Intent: frequency of awareness training.

The objective of this subpoint is to define an organization-specific frequency for periodic security awareness training. This could be scheduled annually, semi-annually, or at a different interval determined by the organization. By setting a frequency the organization ensures that users are regularly updated on the evolving landscape of threats, as well as on new protective measures and policies. This approach can help to maintain a high level of security awareness among all users and to reinforce previous training components.

Guidelines: frequency of awareness training.

Most organizations will have administrative documentation and/or a technical solution to outline/implement the requirement and procedure for security awareness training as well as define the frequency of the training. Evidence supplied should demonstrate the completion of various awareness training within the defined period and that a defined period by your organization exists.

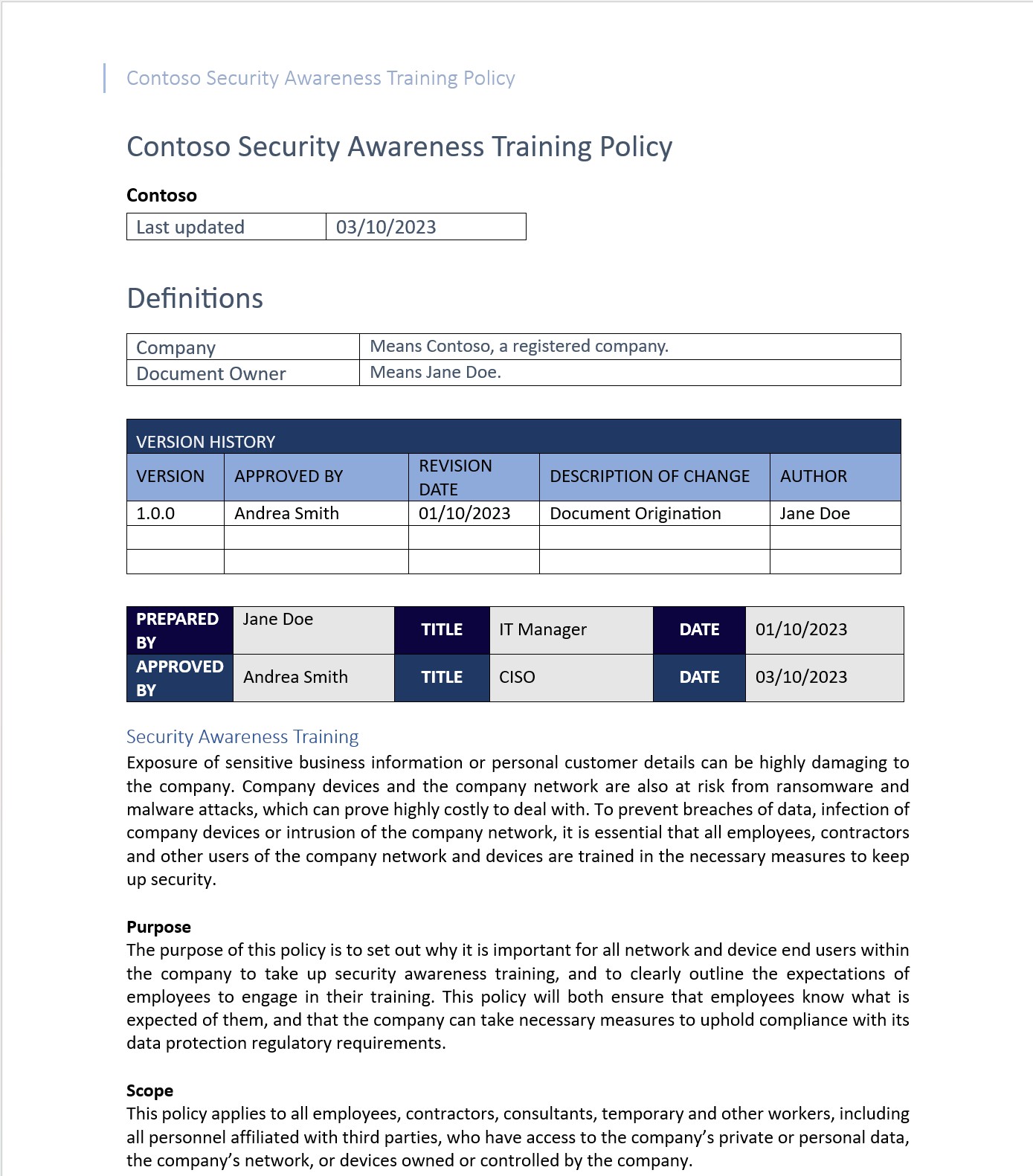

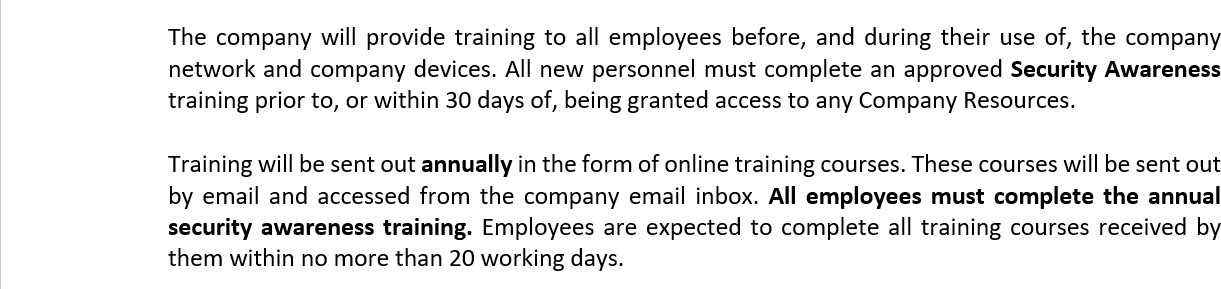

Example evidence: frequency of awareness training.

The following screenshots show snapshots of the security awareness policy documentation and that it exists and is maintained. The policy requires that all employees of the organization receive security awareness training as outlined in the scope section of the policy. The training must be assigned and completed on an annual basis by the relevant department.

According to the policy document, all employees of the organization must complete three courses (one training and two assessments) annually and within twenty days of the assignment. The courses must be sent out via email and assigned through KnowBe4.

The example provided only shows snapshots of the policy, please note that the expectation is that the full policy document will be submitted.

The second screenshot is the continuation of the policy, and it shows the section of the document which mandates the annual training requirement, and it demonstrates that the organization-defined frequency of awareness training is set to annually.

The next two screenshots demonstrate successful completion of the training assessments mentioned previously. The screenshots were taken from two different employees.

Intent: documentation and monitoring.

The objective of this subpoint is to create, maintain, and monitor meticulous records of each user’s participation in security awareness training. These records should be retained over an organization- defined period. This documentation serves as an auditable trail for compliance with regulations and internal policies. The monitoring component allows the organization to assess the effectiveness of the

training, identifying areas for improvement and understanding user engagement levels. By retaining these records over a defined period, the organization can track long-term effectiveness and compliance.

Guidelines: documentation and monitoring.

The evidence that can be provided for security awareness training will depend on how the training is implemented at the organization level. This can include whether the training is conducted through a platform or performed internally based on an in-house process. Evidence supplied must show that historical records of training completed for all users over a period exists and how this is tracked.

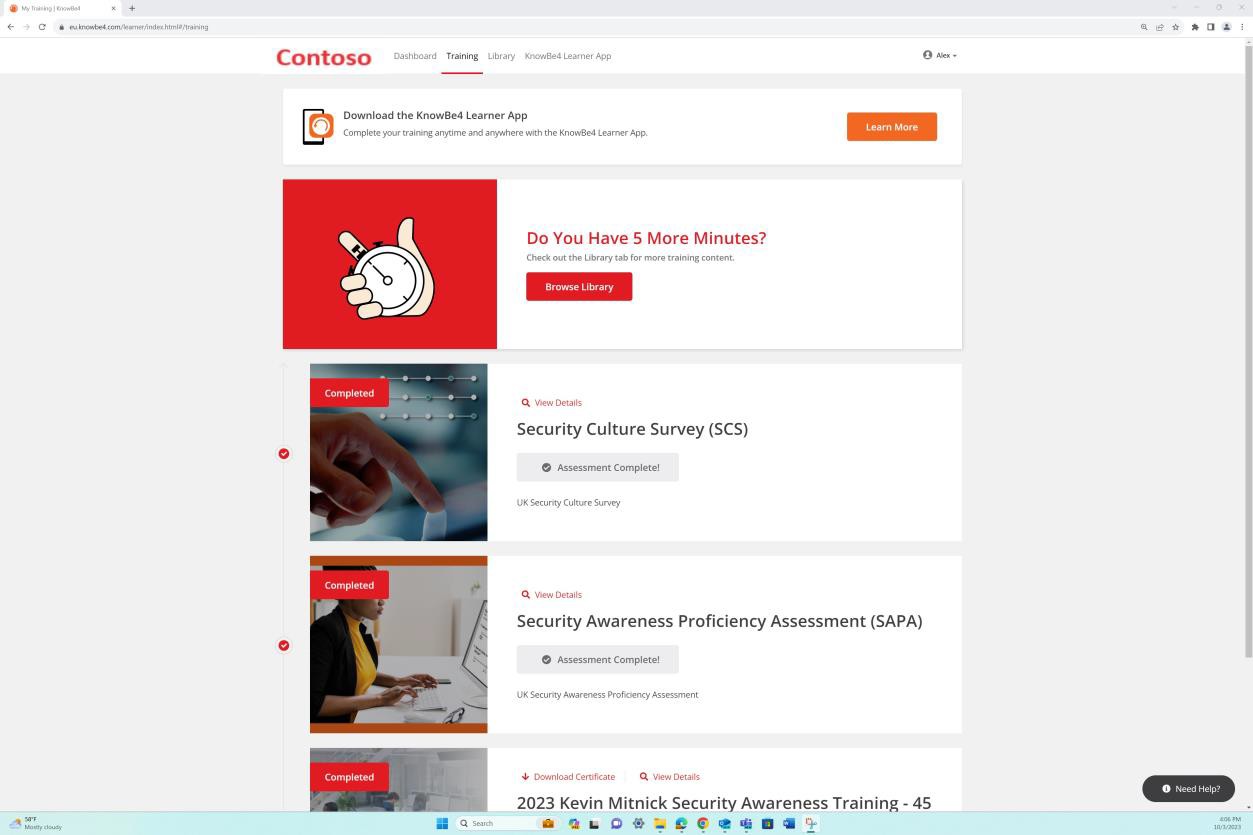

Example evidence: documentation and monitoring.

The next screenshot shows the historical training record for each user including their joining date, completion of training and when the next training is scheduled. Assessment of this document is performed periodically and at least once a year to ensure training records of security awareness for each employee is kept up to date.

Malware protection/anti-malware

Malware poses a significant risk to organizations which can vary the security impact caused to the operational environment, depending upon the malware characteristics. Threat actors have realized that malware can be successfully monetized, which has been realized through the growth of ransomware style malware attacks. Malware can also be used to provide an ingress point for a threat actor to compromise an environment to steal sensitive data, i.e., Remote Access Trojans/Rootkits. Organizations therefore need to implement suitable mechanisms to protect against these threats. Defenses that can be used are anti-virus (AV)/Endpoint Detection and Response (EDR)/Endpoint Detection and Protection Response (EDPR)/Heuristic based scanning using Artificial Intelligence (AI). If you have deployed a different technique to mitigate the risk of malware, then please let the Certification Analyst know who will be happy to explore if this meets the intent or not.

Control No. 2

Please provide evidence that your anti-malware solution is active and enabled across all the sampled system components and configured to meet the following criteria:

if anti-virus that on-access scanning is enabled and that signatures are up to date within 1-day.

for anti-virus that it automatically blocks malware or alerts and quarantines when malware is detected

OR If EDR/EDPR/NGAV:

that periodic scanning is being performed.

is generating audit logs.

is kept up-to-date continually and has self-learning capabilities.

it blocks known malware and identifies and blocks new malware variants based on macro behaviors as well as having full allowance capabilities.

Intent: on-access scanning

This subpoint is designed to verify that the anti-malware software is installed across all sampled system components and is actively performing on-access scanning. The control also mandates that the signature database of the anti-malware solution is up to date within a one-day timeframe. An up-to-date signature database is crucial for identifying and mitigating the latest malware threats, thereby ensuring that the system components are adequately protected.

Guidelines: on-access scanning**.**

To demonstrate that an active instance of AV is running in the assessed environment, provide a screenshot for every device in the sample set agreed with your analyst that supports the use of anti- malware. The screenshot should show that the anti-malware is running, and that the anti-malware software is active. If there is a centralized management console for anti-malware, evidence from the management console may be provided. Also, ensure to provide a screenshot that shows the sampled devices are connected and working.

Example evidence: on-access scanning**.**

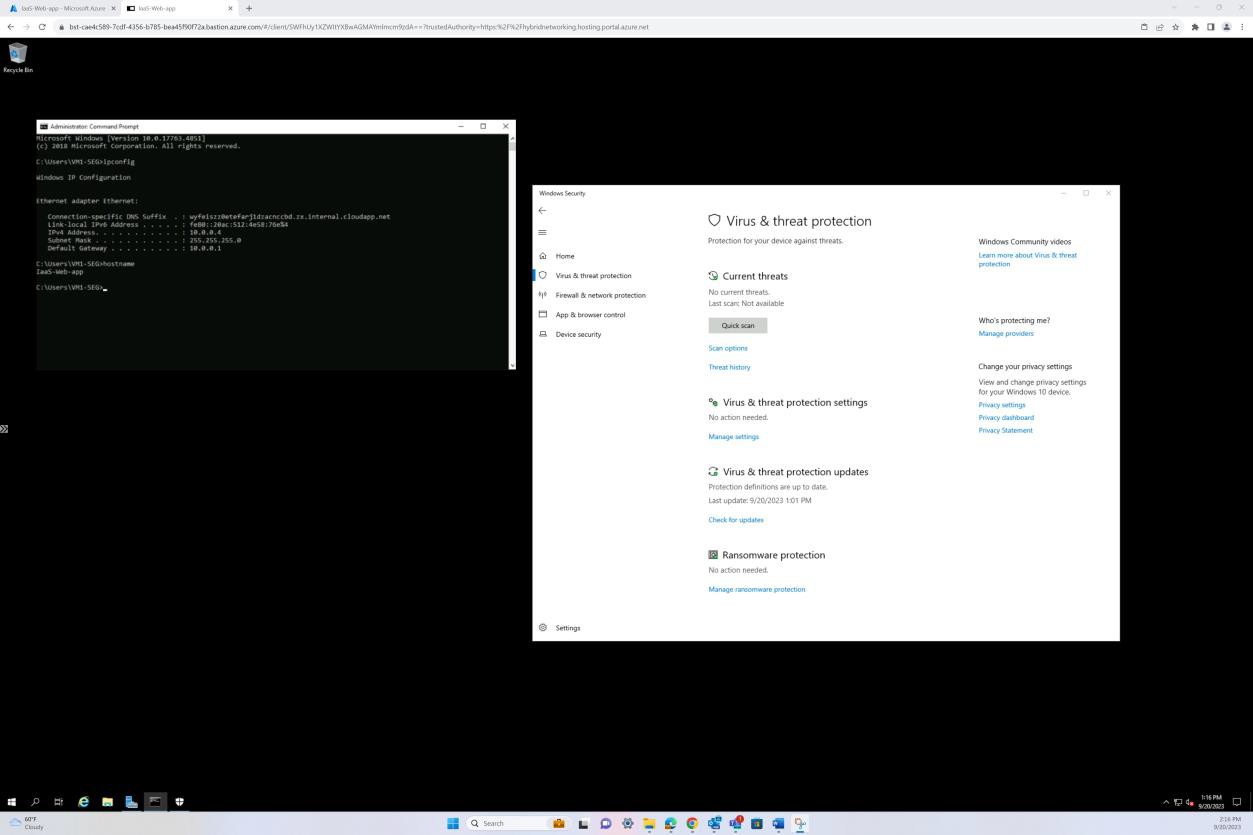

The following screenshot has been taken from a Windows Server device, showing that “Microsoft Defender” is enabled for the host name “IaaS-Web-app”.

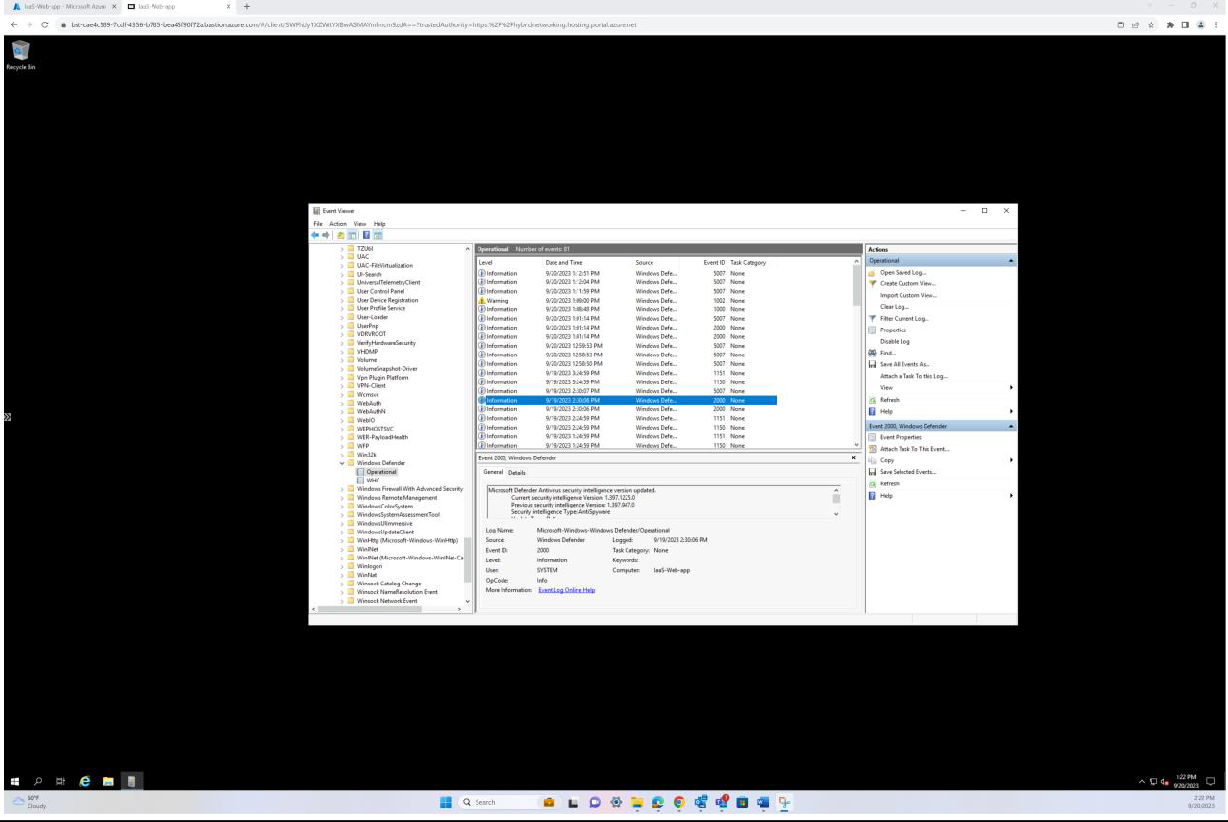

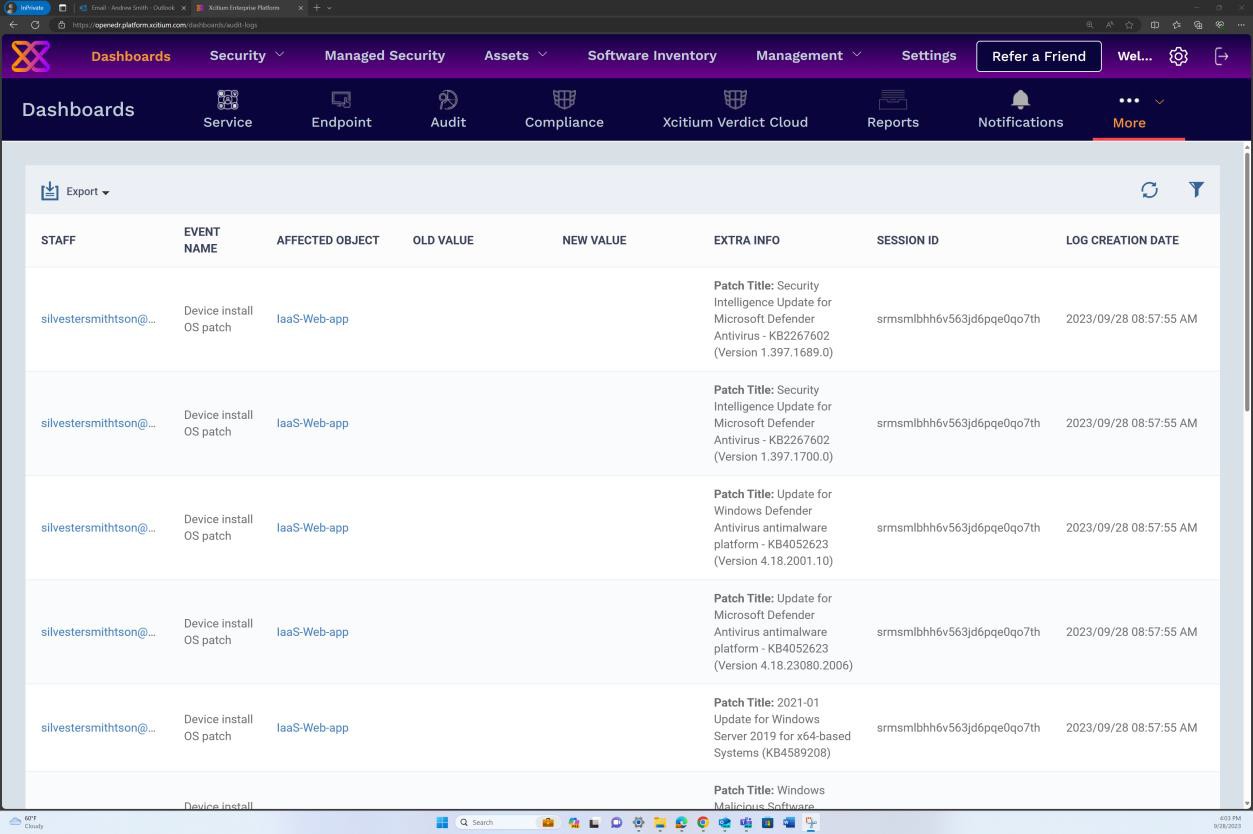

The next screenshot has been taken from a Windows Server device, showing Microsoft Defender Antimalware security intelligence version has updated the log from the Windows event viewer. This demonstrates the latest signatures for the host name “IaaS-Web-app”.

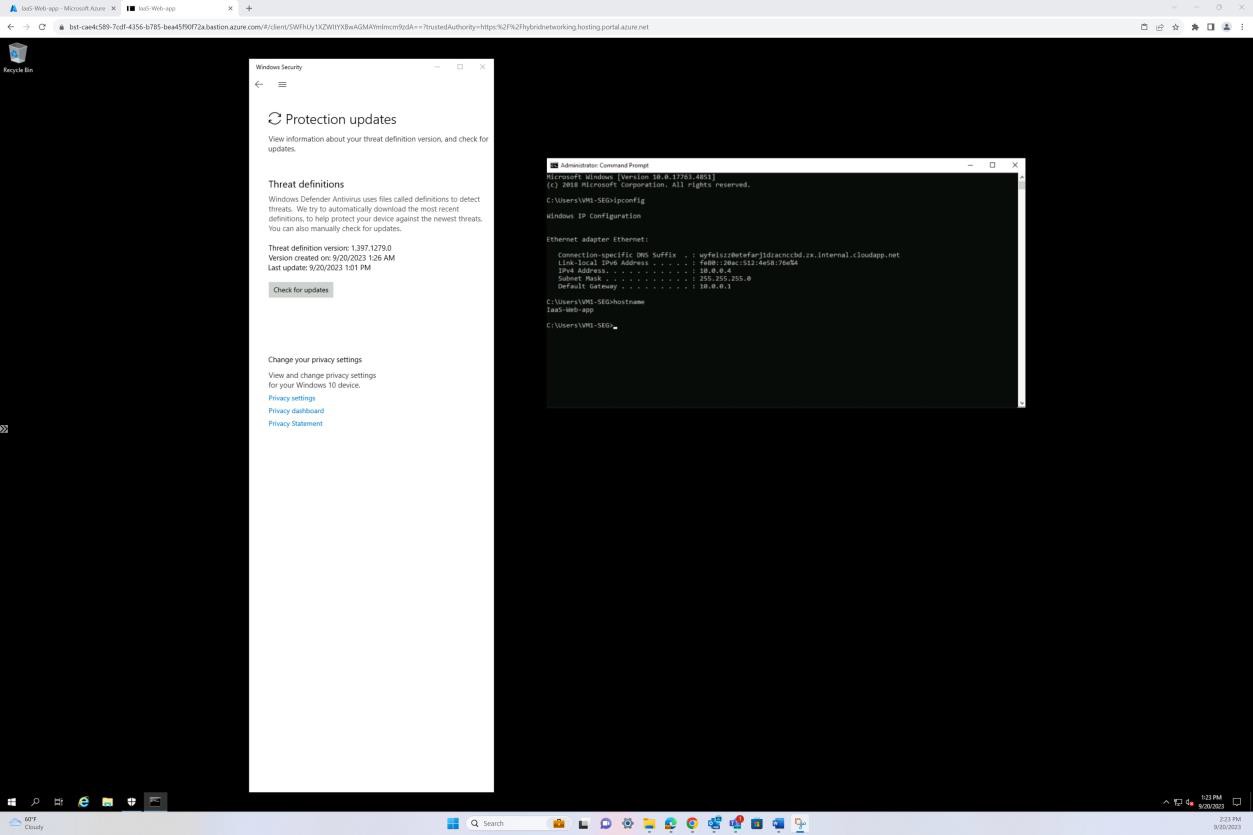

This screenshot has been taken from a Windows Server device, showing Microsoft Defender Anti-malware Protection updates. This clearly shows the threat definition versions, version created on, and last update to demonstrate that malware definitions are up to date for the host name “IaaS-Web- app”.

Intent: anti-malware blocks.

The purpose of this subpoint is to confirm that the anti-malware software is configured to automatically block malware upon detection or generate alerts and move detected malware to a secure quarantine area. This can ensure immediate action is taken when a threat is detected, reducing the window of vulnerability, and maintaining a strong security posture of the system.

Guidelines: anti-malware blocks.

Provide a screenshot for every device in the sample that supports the use of anti-malware. The screenshot should show that anti-malware is running and is configured to automatically block malware, alert or to quarantine and alert.

Example evidence: anti-malware blocks.

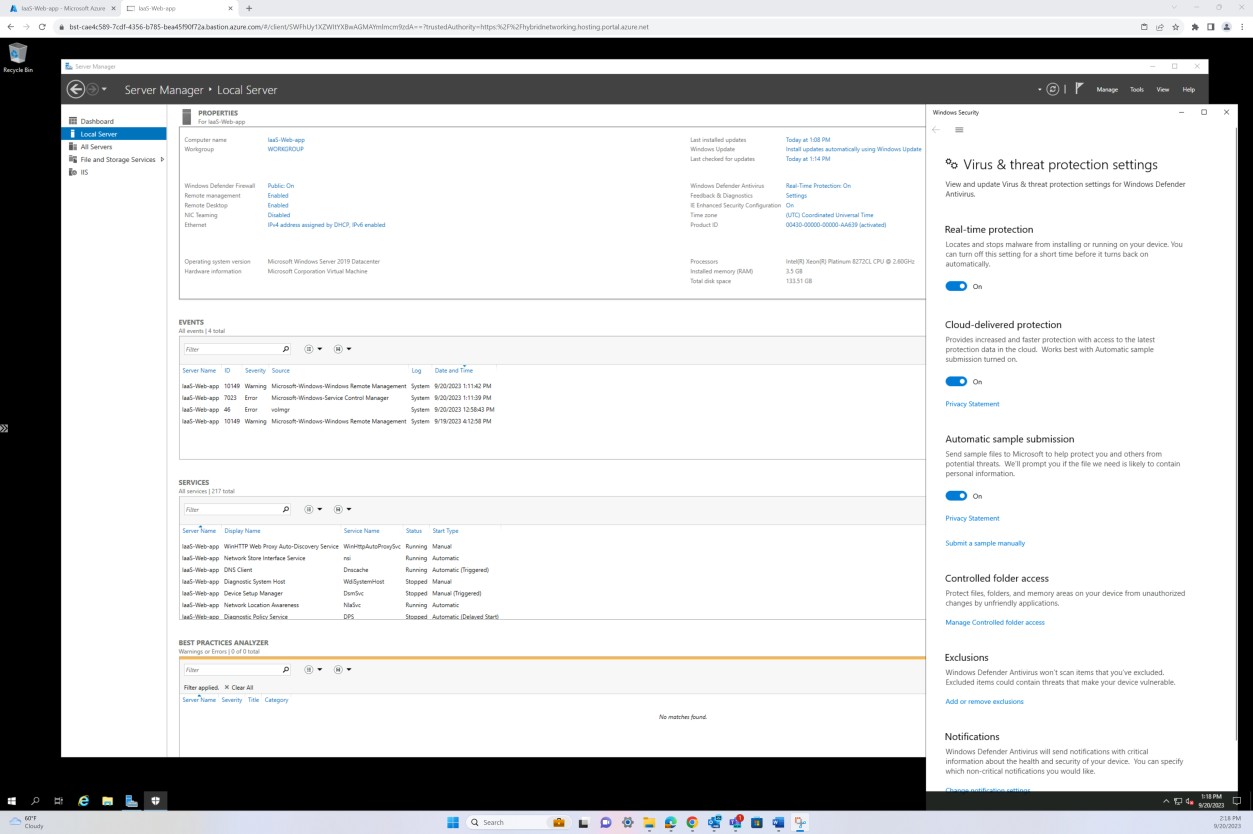

The next screenshot shows the host “IaaS-Web-app” is configured with real-time protection as ON for Microsoft Defender Antimalware. As the setting says, this locates and stops the malware from installing or running on the device.

Intent: EDR/NGAV

This subpoint aims to verify that Endpoint Detection and Response (EDR) or Next-Generation Antivirus (NGAV) are actively performing periodic scans across all sampled system components; audit logs are generated for tracking scanning activities and results; the scanning solution is continuously updated and possess self- learning capabilities to adapt to new threat landscapes.

Guidelines: EDR/NGAV

Provide a screenshot from your EDR/NGAV solution demonstrating that all the agents from the sampled systems are reporting in and showing that their status is active.

Example evidence: EDR/NGAV

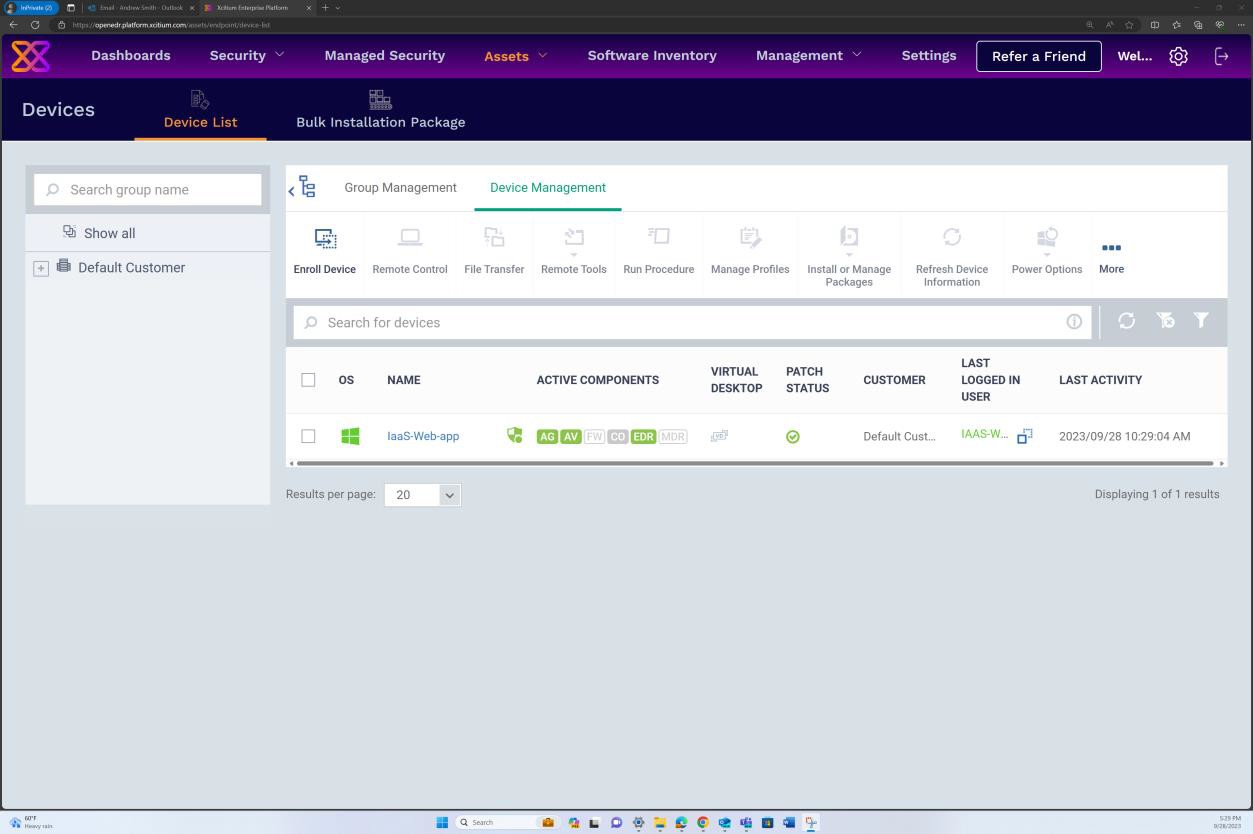

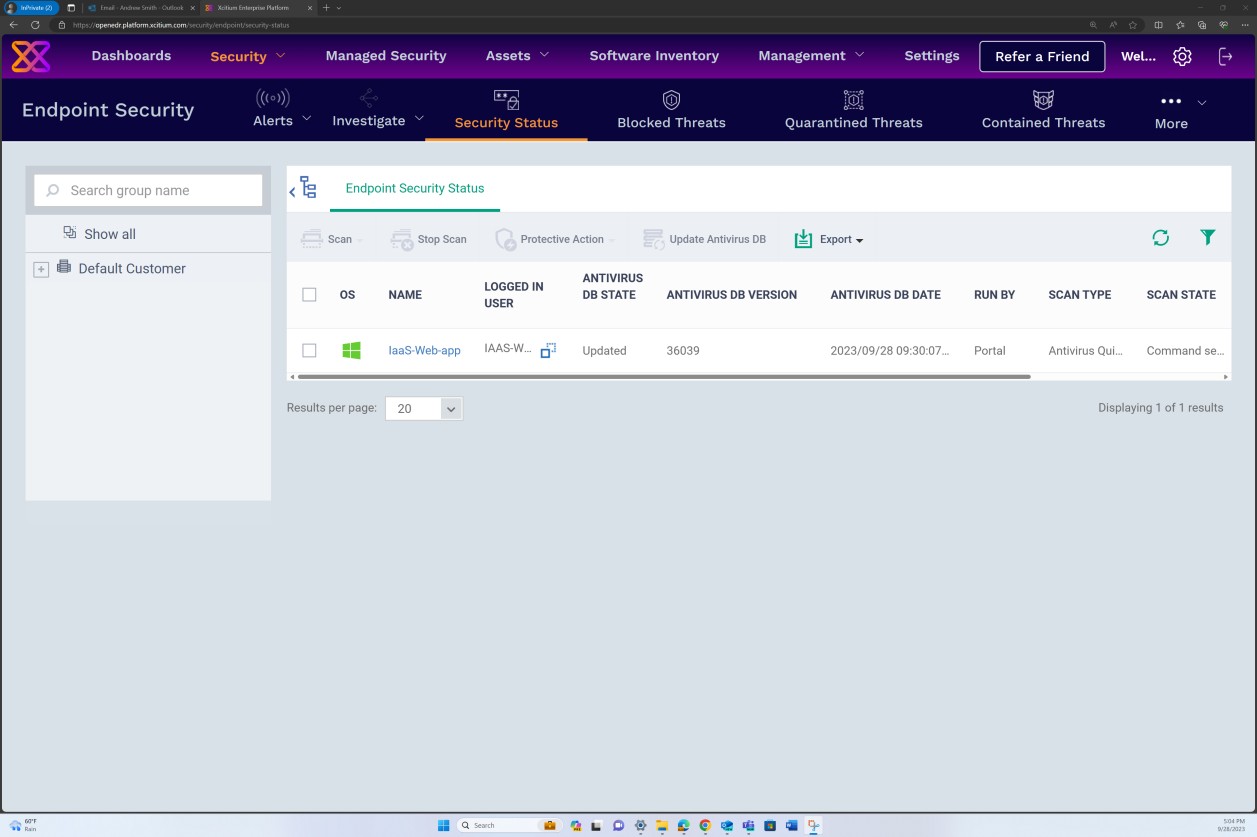

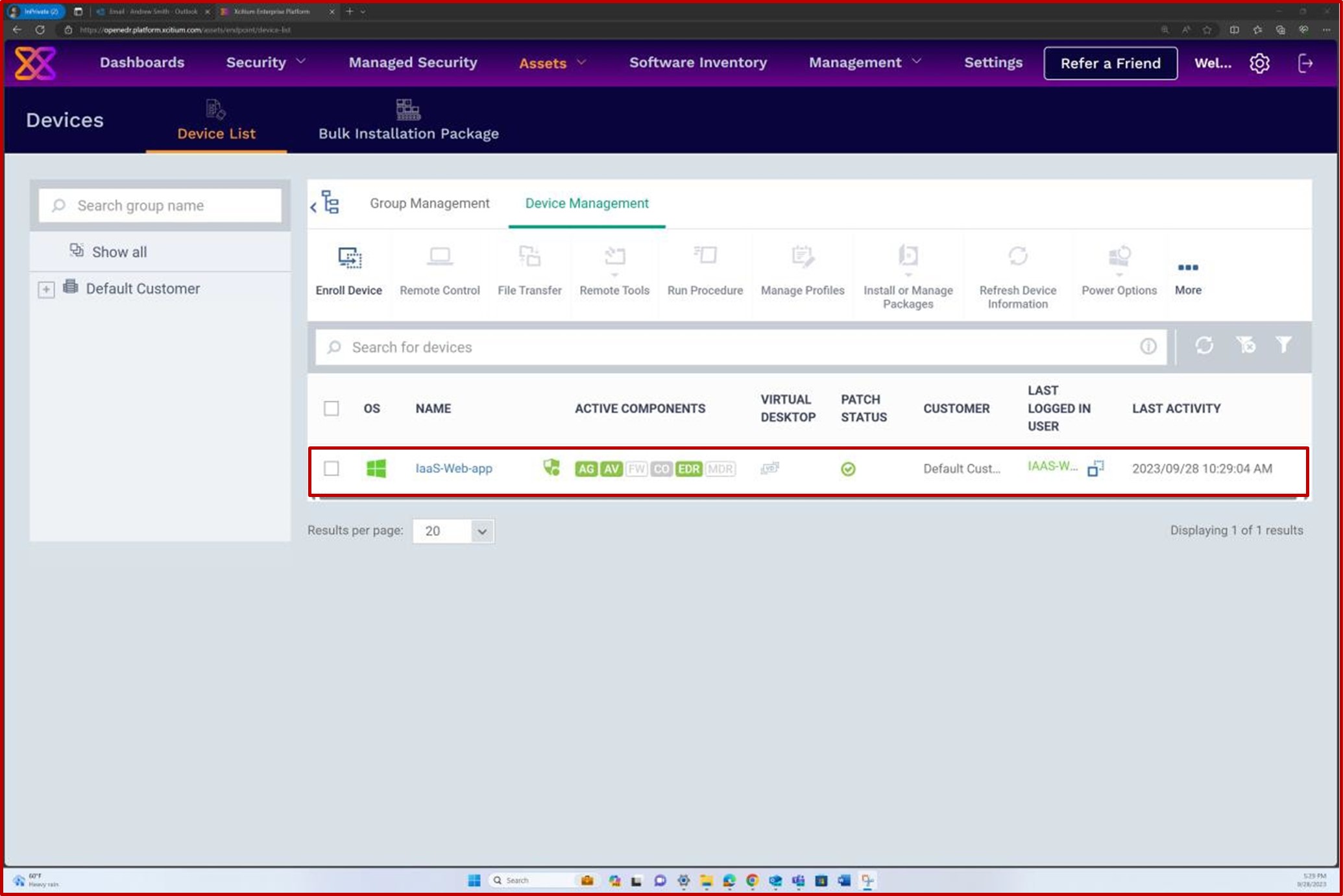

The next screenshot from the OpenEDR solution shows that an agent for the host “IaaS-Web-app” is active and reporting in.

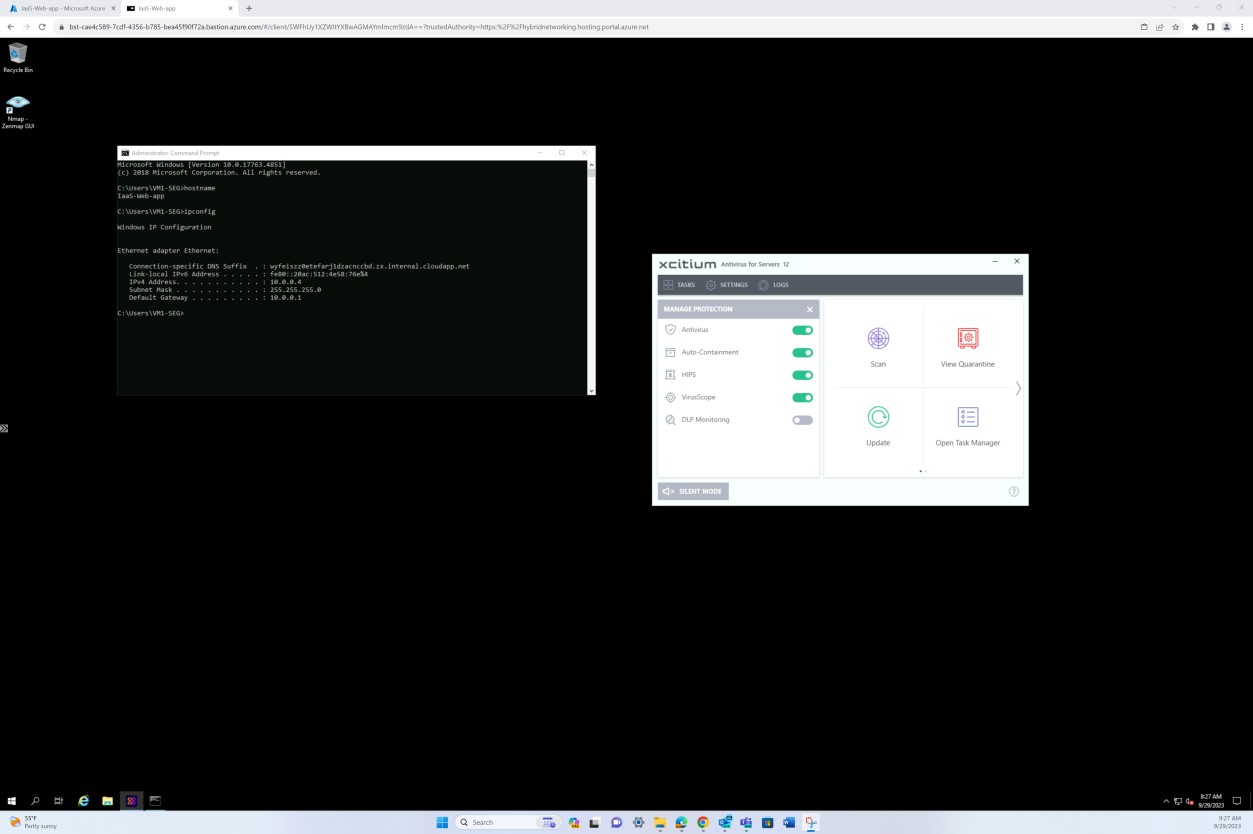

The next screenshot from OpenEDR solution shows that real-time scanning is enabled.

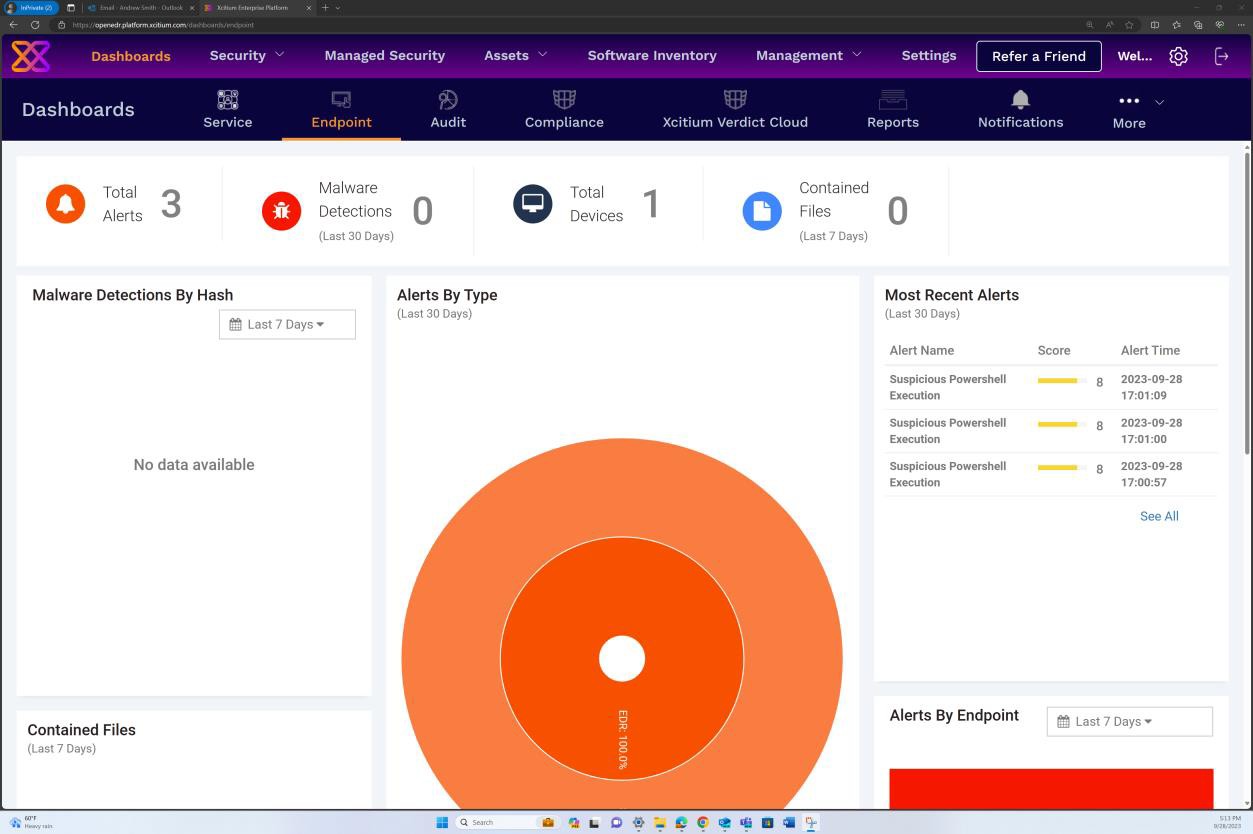

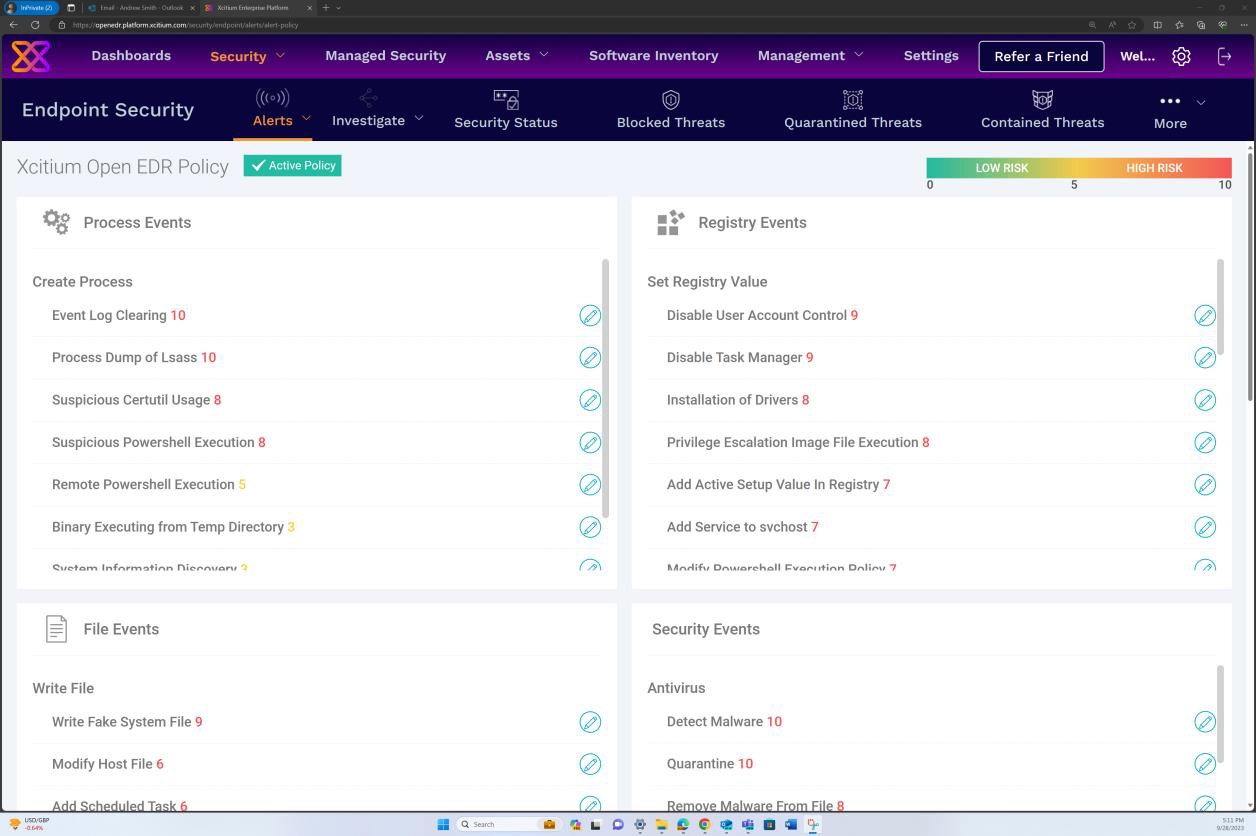

The next screenshot shows that alerts are generated based on behavior metrics which have been obtained in real-time from the agent installed at the system level.

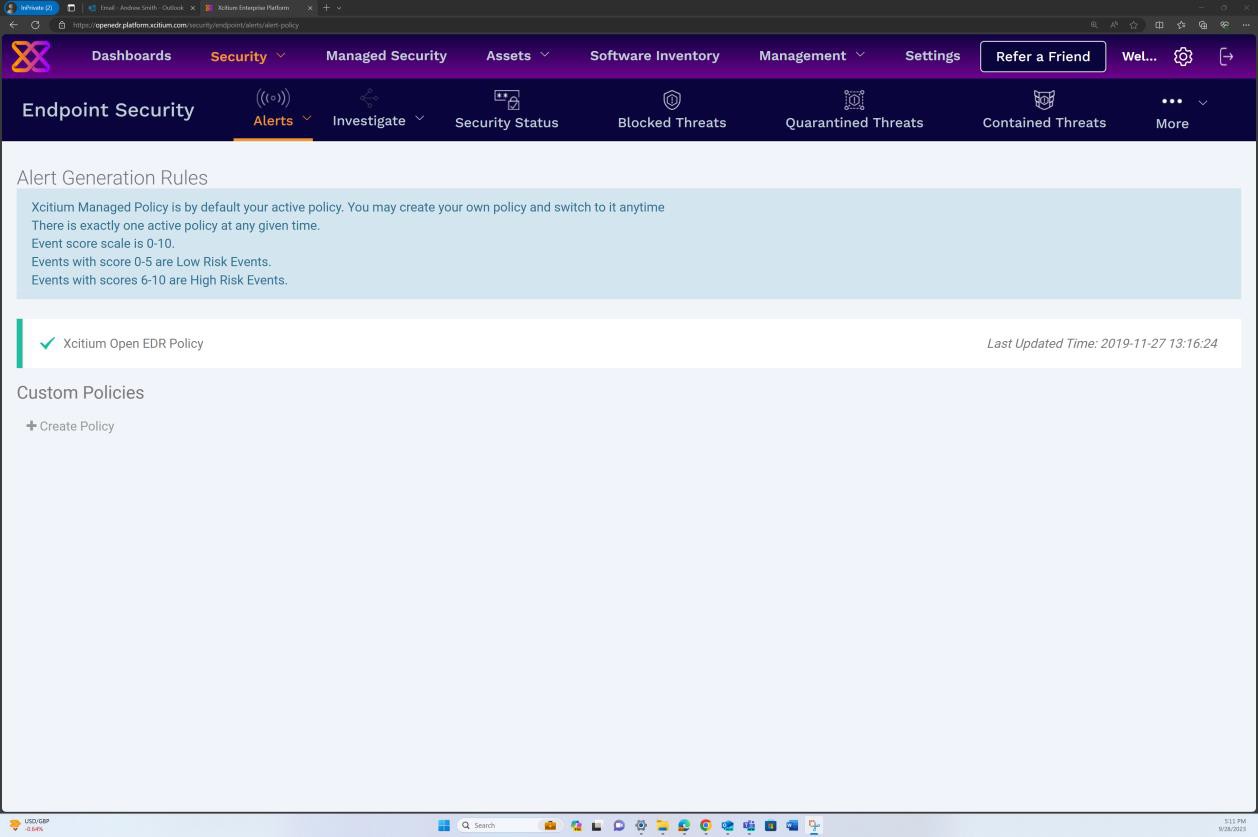

The next screenshots from the OpenEDR solution demonstrate the configuration and generation of audit logs and alerts. The second image shows that the policy is enabled, and the events are configured.

The next screenshot from OpenEDR solution demonstrates that the solution is kept up-to-date continually.

Intent: EDR/NGAV

The focus of this subpoint is to ensure that EDR/NGAV have the capability to block known malware automatically and identify and block new malware variants based on macro behaviors. It also ensures that the solution has full approval capabilities, allowing the organization to permit trusted software while blocking all else, thereby adding an additional layer of security.

Guidelines: EDR/NGAV

Depending on the type of solution used, evidence can be provided showing the configuration settings of the solution and that the solution has Machine Learning/heuristics capabilities, as well as being configured to block malware upon detection. If configuration is implemented by default on the solution, then this must be validated by vendor documentation.

Example evidence: EDR/NGAV

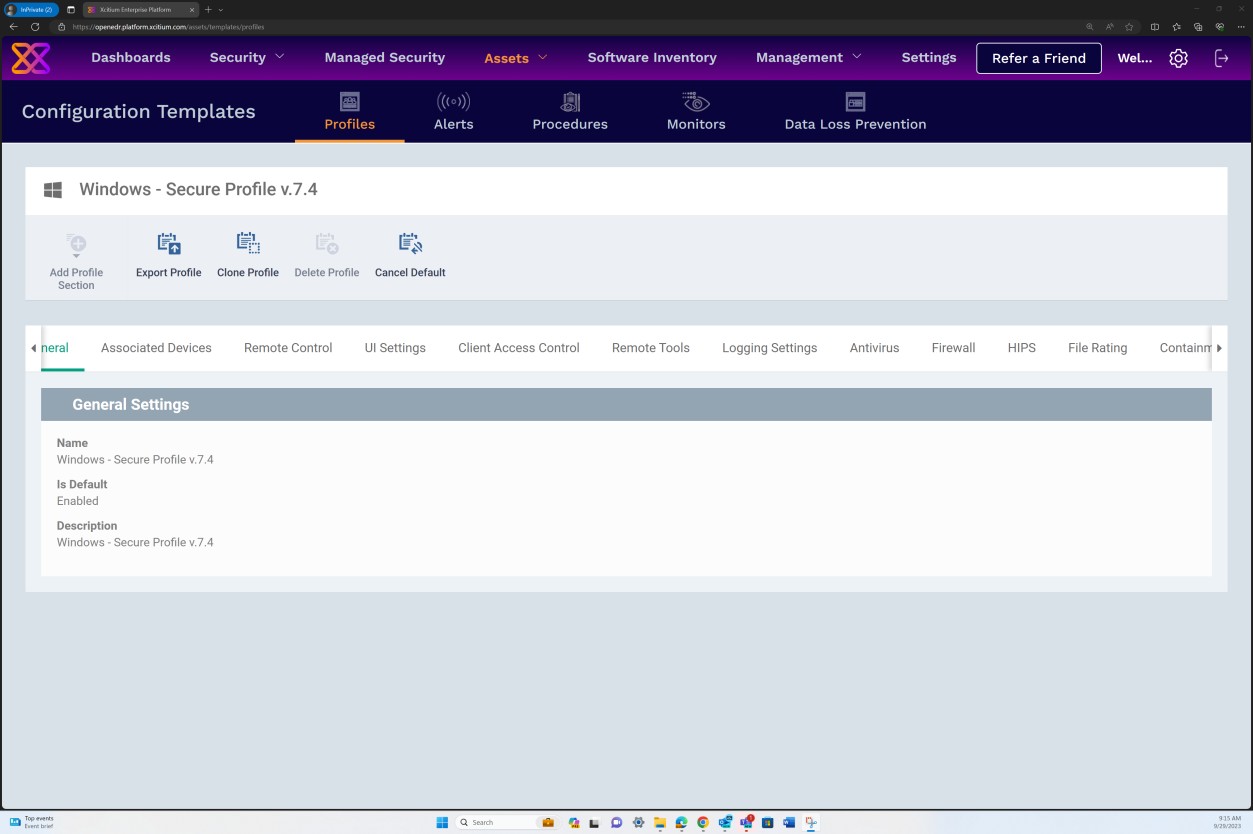

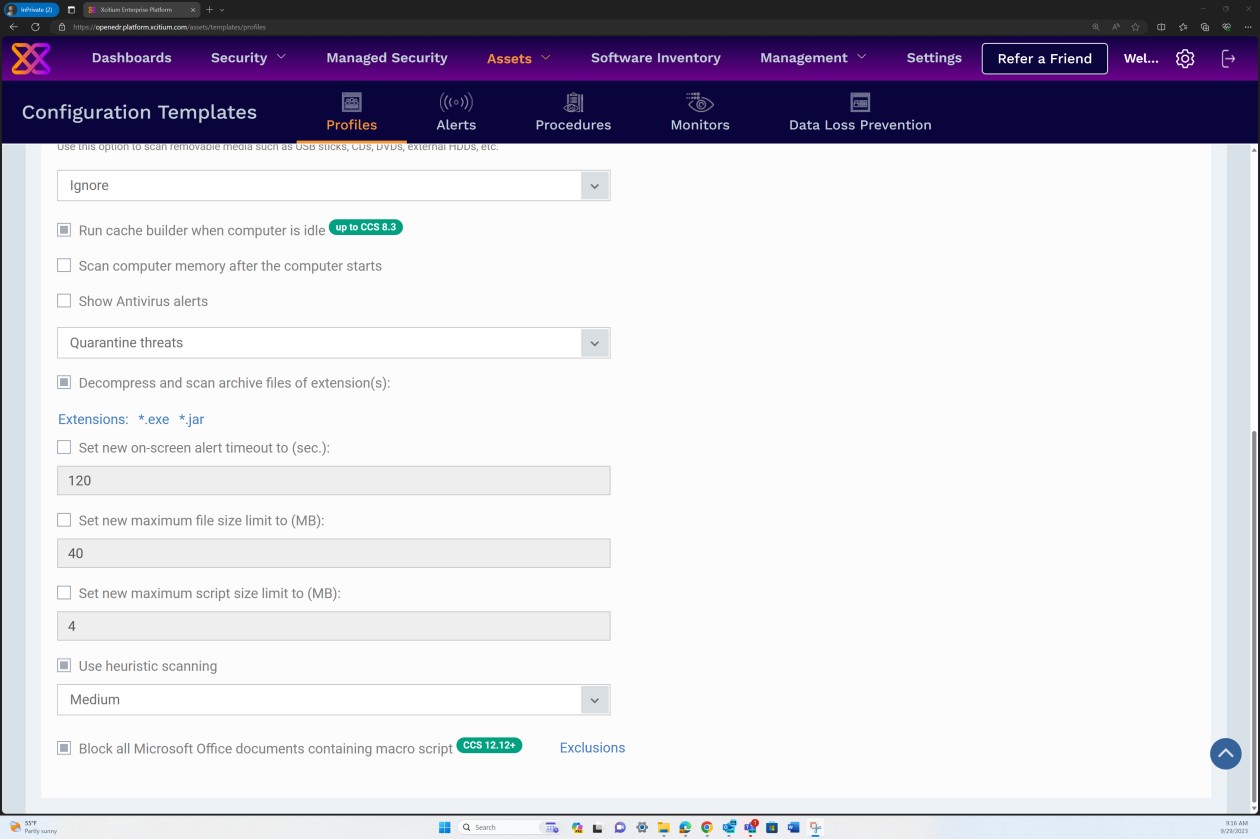

The next screenshots from the OpenEDR solution demonstrate that a Secure Profile v7.4 is configured to enforce real-time scan, block malware, and quarantine.

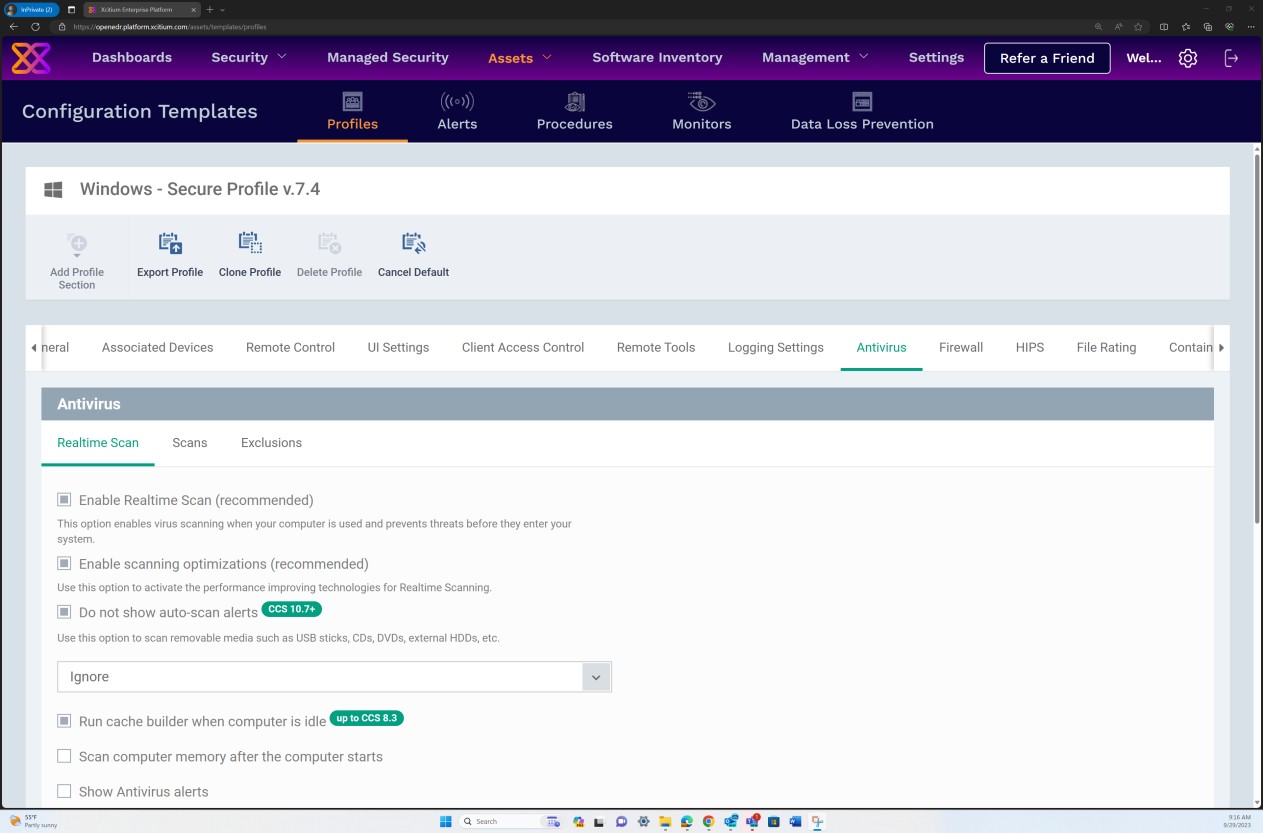

The next screenshots from the Secure Profile v7.4 configuration demonstrate that the solution implements both “Realtime” scanning based on a more traditional antimalware approach, which scans for known malware signatures, and “Heuristics” scanning set to a medium level. The solution detects and removes malware by checking the files and the code that behave in a suspicious/unexpected or malicious manner.

The scanner is configured to decompress archives and scan the files inside to detect potential malware that might be masking itself under the archive, Additionally, the scanner is configured to block micro scripts within Microsoft Office files.

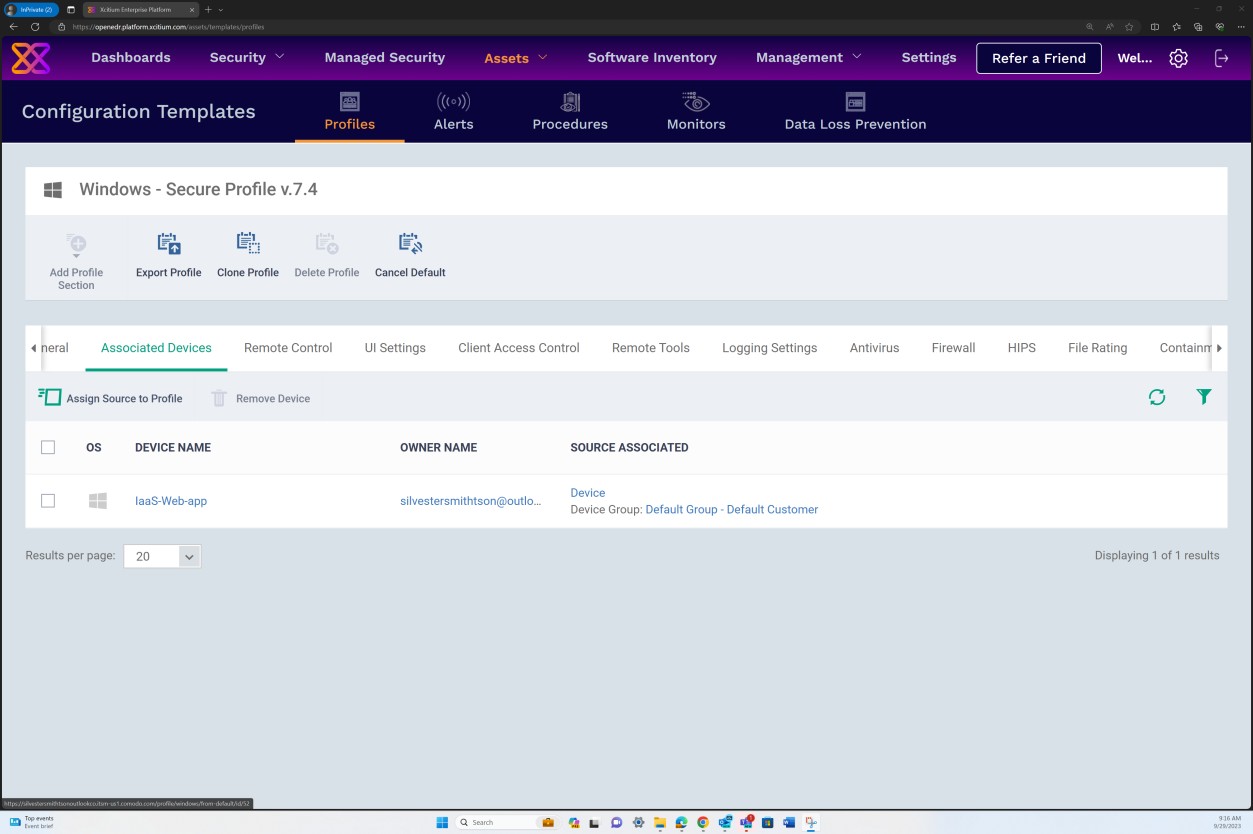

The next screenshots demonstrate that Secure Profile v.7.4 has been assigned to our Windows Server device ‘IaaS-Web-app’ host.

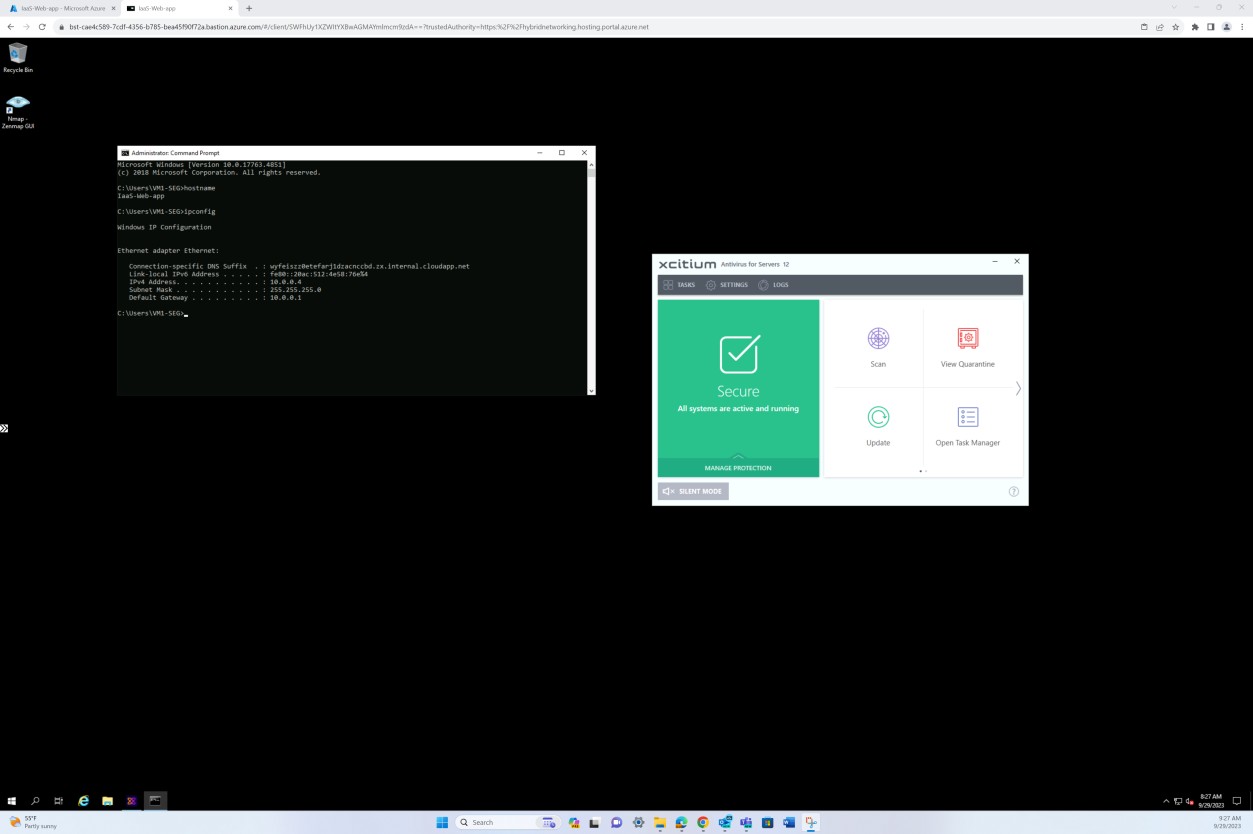

The next screenshot was taken from the Windows Server device ‘IaaS-Web-app’, which demonstrated that OpenEDR agent is enabled and running on the host.

Malware protection/application control

Application control is a security practice that blocks or restricts unauthorized applications from executing in ways that put data at risk. Application controls are an important part of a corporate security program and can help prevent malicious actors from exploiting application vulnerabilities and reduce the risk of a breach. By implementing application control, businesses and organizations can greatly reduce the risks and threats associated with application usage because applications are prevented from executing if they put the network or sensitive data at risk. Application controls provide operations and security teams with a reliable, standardized, and systematic approach to mitigating cyber risk. They also give organizations a fuller picture of the applications in their environment, which can help IT and security organizations effectively manage cyber risk.

Control No. 3

Please provide evidence demonstrating that:

You have an approved list of software/applications with business justification:

exists and is kept up to date, and

that each application undergoes an approval process and sign off prior to its deployment.

That application control technology is active, enabled, and configured across all the sampled system components as documented.

Intent: software list

This subpoint aims to ensure that an approved list of software and applications exist within the organization and is continually kept up to date. Ensure that each software or application on the list has a documented business justification to validate its necessity. This list serves as an authoritative reference to regulate software and application deployment, thus aiding in the elimination of unauthorized or redundant software that could pose a security risk.

Guidelines: software list

A document containing the approved list of software and applications if maintained as a digital document (Word, PDF, etc.). If the approved list of software and applications is maintained through a platform, then screenshots of the list from the platform must be provided.

Example evidence: software list

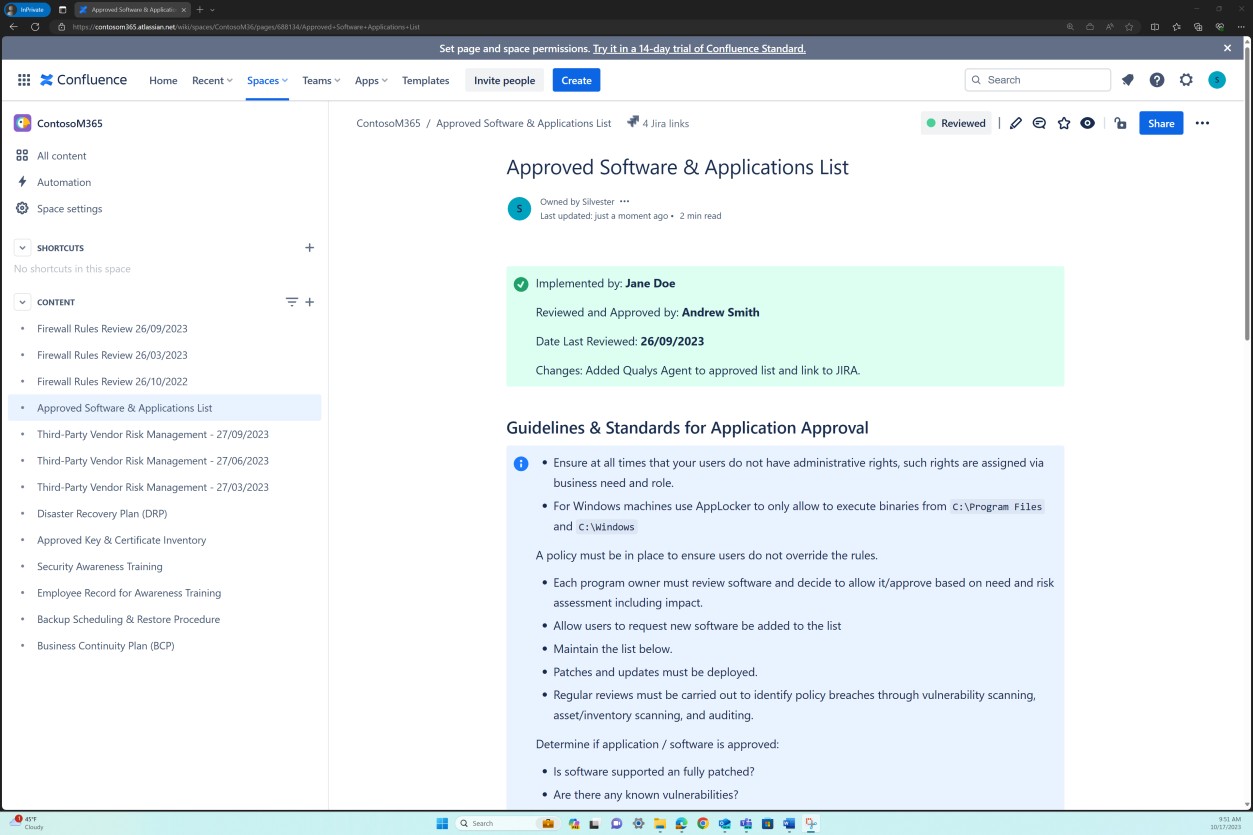

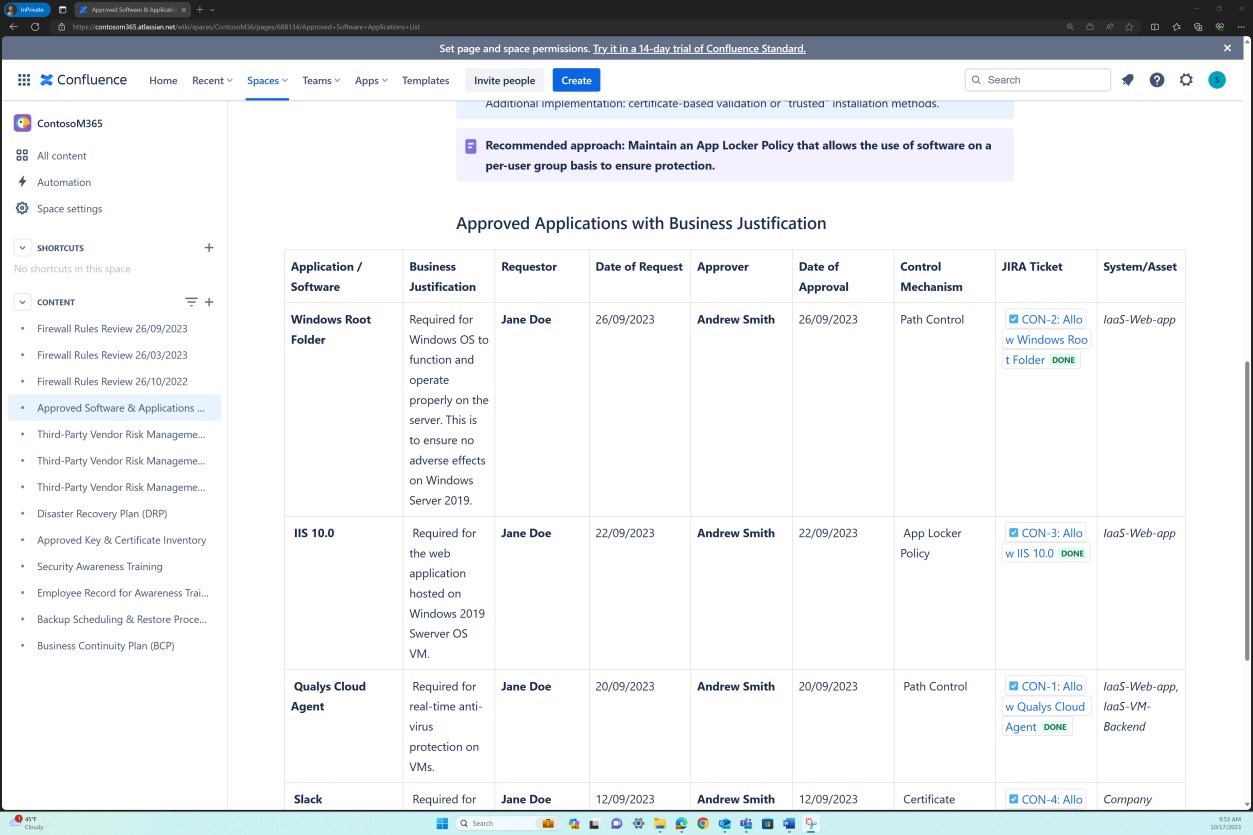

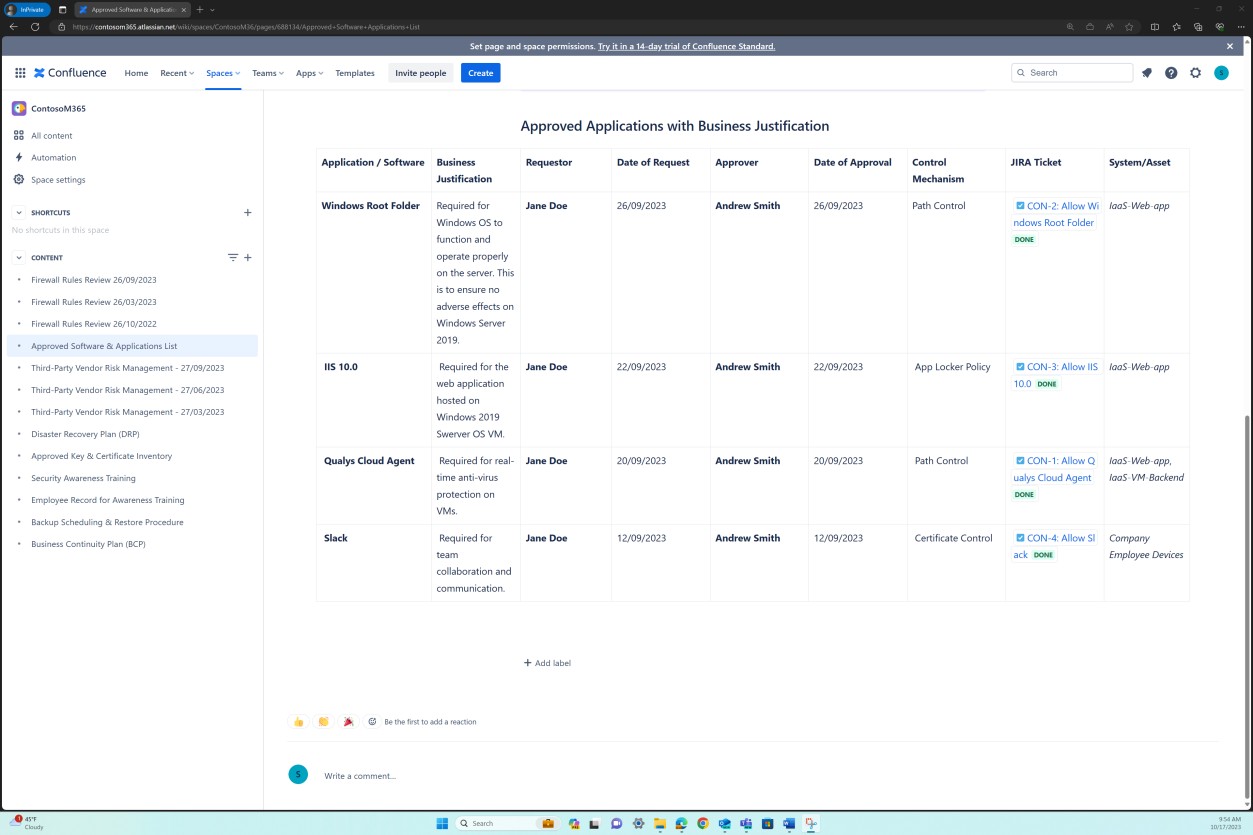

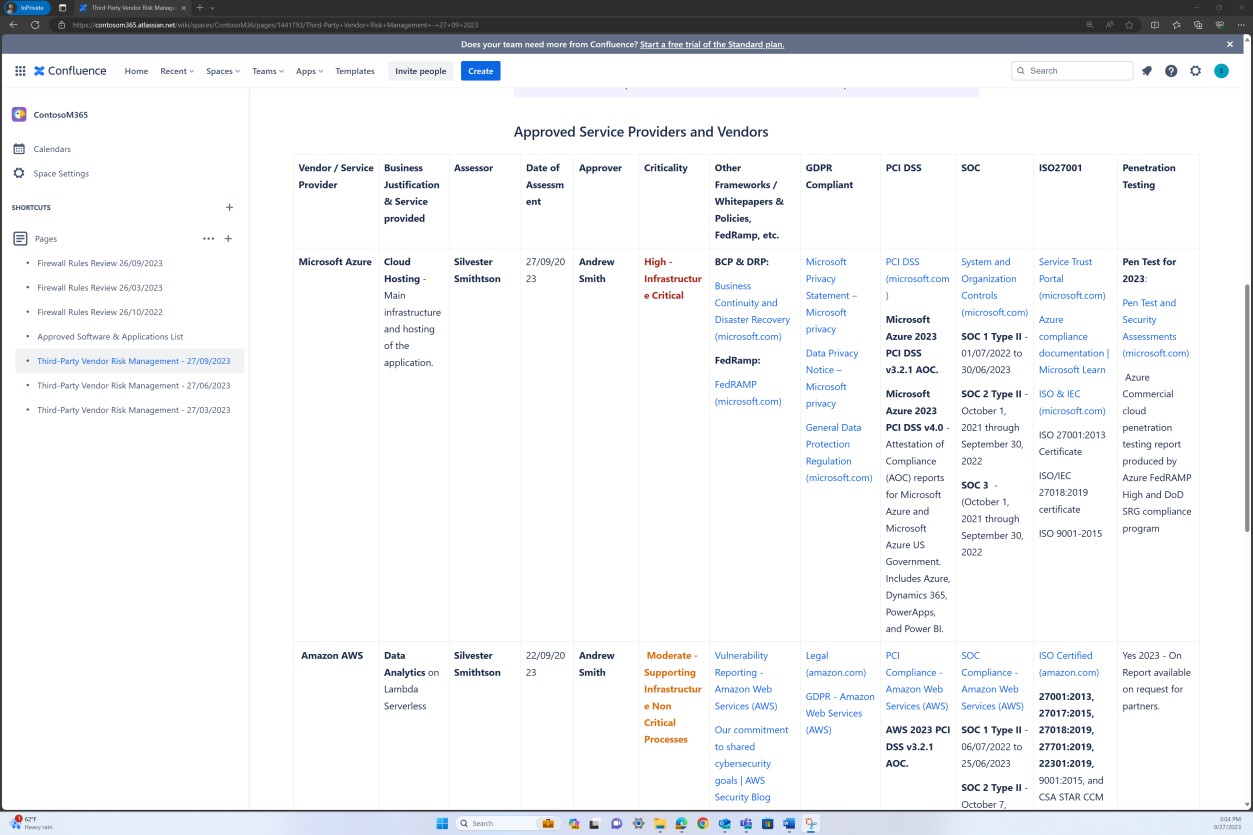

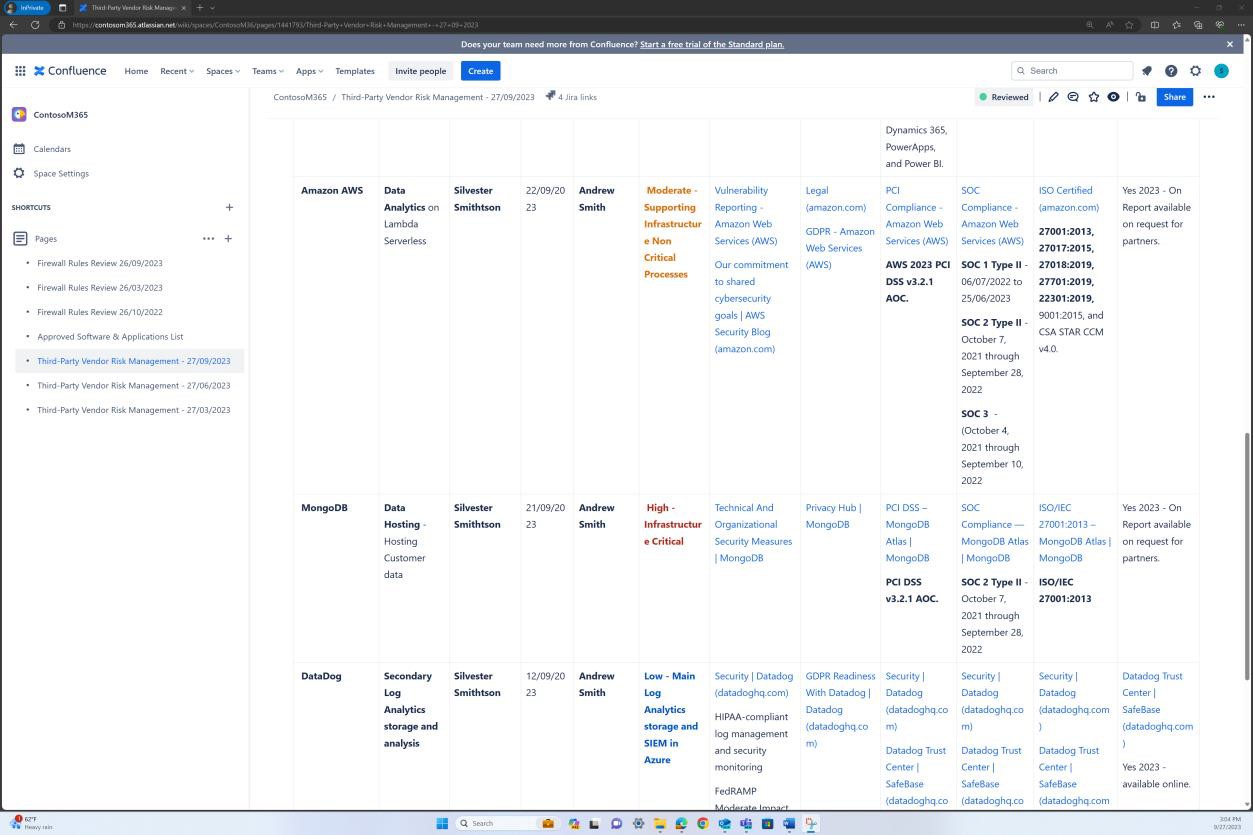

The next screenshots demonstrate that a list of approved software and applications is maintained in Confluence Cloud platform.

The next screenshots demonstrates that the list of approved software and applications including the requestor, date of request, approver, date of approval, control mechanism, JIRA ticket, system/asset is maintained.

Intent: software approval

The purpose of this subpoint is to confirm that each software/application undergoes a formal approval process before its deployment within the organization. The approval process should includes a technical evaluation and an executive sign-off, ensuring that both operational and strategic perspectives have been considered. By instituting this rigorous process, the organization ensures that only vetted and necessary software is deployed, thereby minimizing security vulnerabilities and ensuring alignment with the business objectives.

Guidelines

Evidence can be provided showing that the approval process is being followed. This may be provided by means of signed documents, tracking within the change control systems or using something like Azure DevOps/JIRA to track the change requests and authorization.

Example evidence

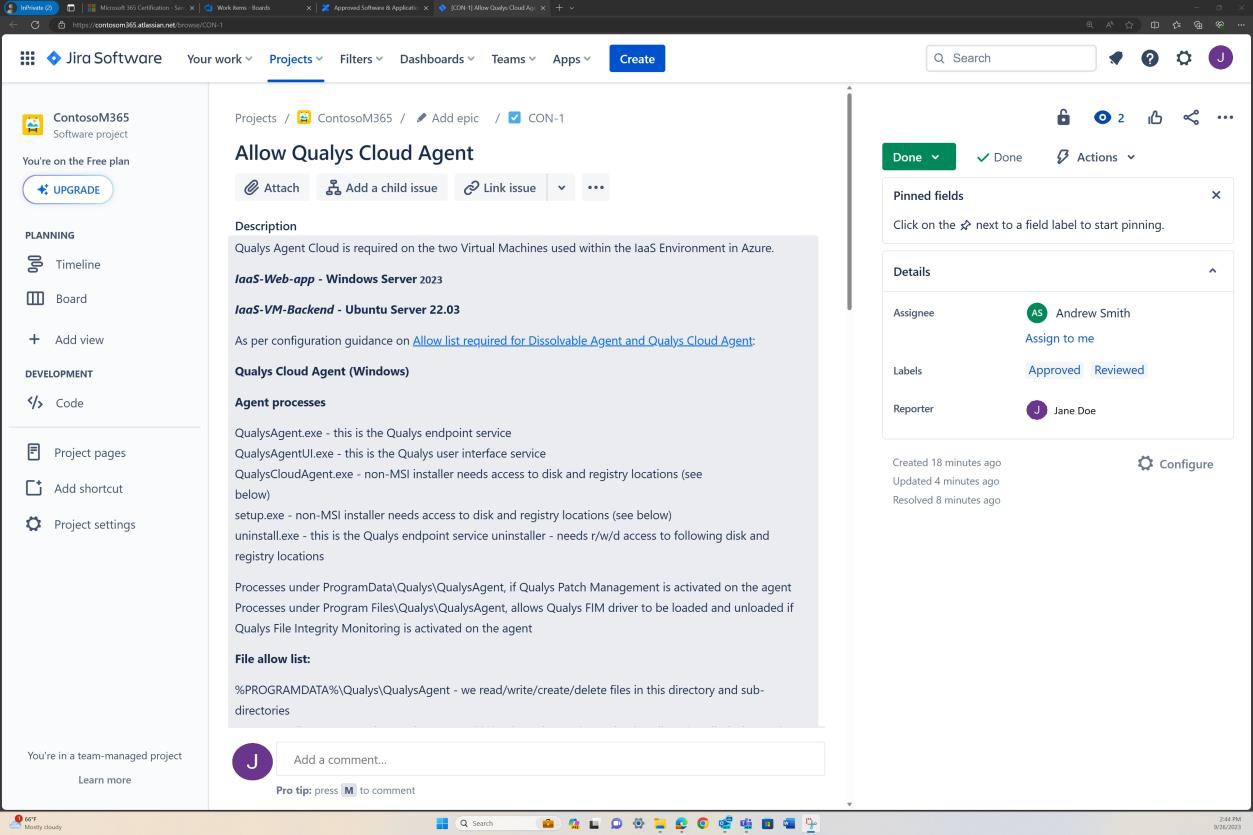

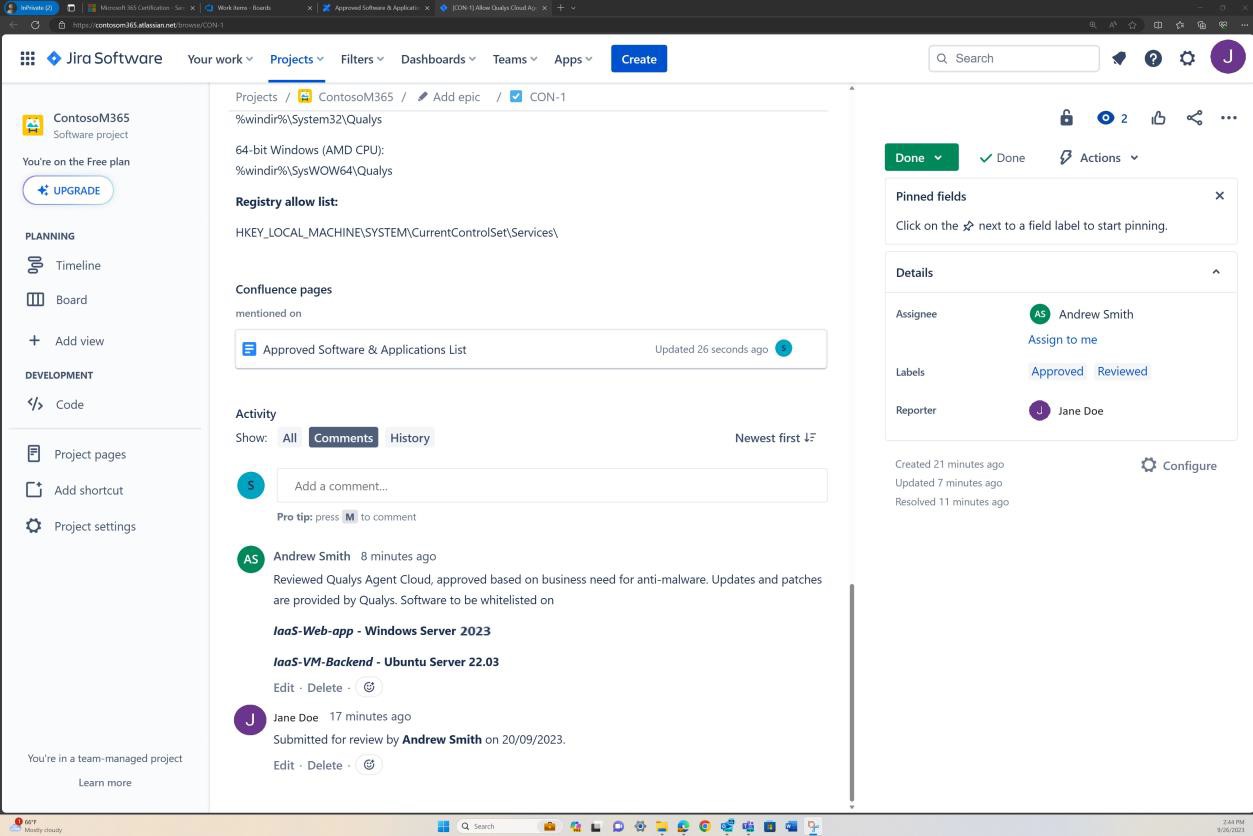

The next screenshots demonstrate a complete approval process in JIRA Software. A user ‘Jane Doe’ has raised a request for ‘Allow Qualys Cloud Agent’ to be installed on ‘IaaS-Web-app’ and ‘IaaS-VM- Backend’ servers. ‘Andrew Smith’ has reviewed the request and approved it with the comment ‘approved based on business need for anti-malware. Updates and patches provided by Qualys. Software to be approved.’

The next screenshot shows approval being granted via the ticket raised on Confluence platform before allowing the application to run on the production server.

Intent: app control technology

This subpoint focuses on verifying that application control technology is active, enabled and correctly configured across all sampled system components. Ensure that the technology operates in accordance with documented policies and procedures, which serve as guidelines for its implementation and maintenance. By having an active, enabled, and a well-configured application control technology, the organization can help prevent the execution of unauthorized or malicious software and enhance the overall security posture of the system.

Guidelines: app control technology

Provide documentation detailing how application control has been setup and evidence from the applicable technology showing how each application/process has been configured.

Example evidence: app control technology

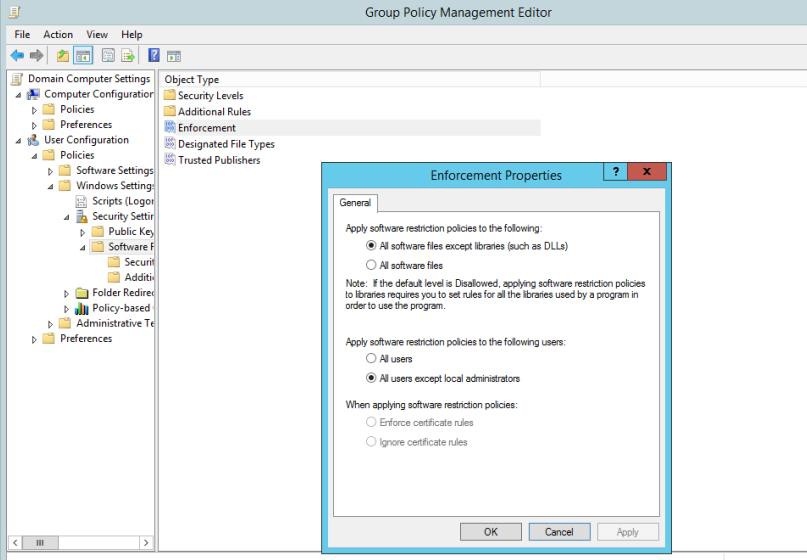

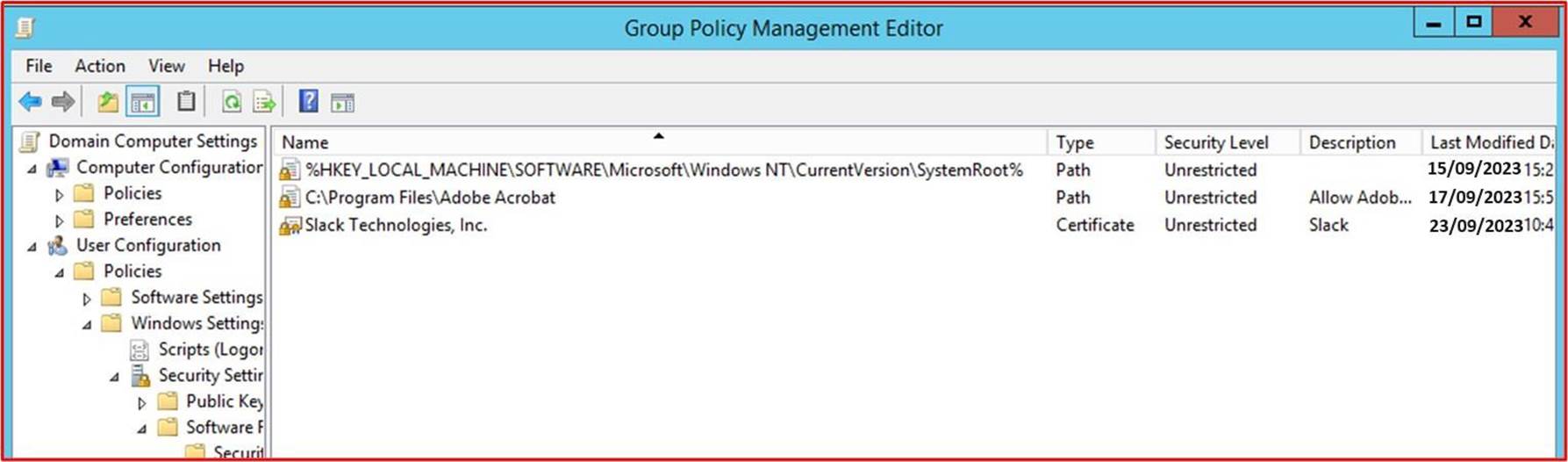

The next screenshots demonstrate that Windows Group Policies (GPO) is configured to enforce only approved software and applications.

The next screenshot shows the software/applications allowed to run via path control.

Note: In these examples full screenshots were not used, however ALL ISV submitted evidence screenshots must be full screen screenshots showing any URL, logged in user and the system time and date.

Patch management/patching and risk ranking

Patch management, often referred to as patching, is a critical component of any robust cybersecurity strategy. It involves the systematic process of identifying, testing, and applying patches or updates to software, operating systems, and applications. The primary objective of patch management is to mitigate security vulnerabilities, ensuring that systems and software remain resilient against potential threats. Additionally, patch management encompasses risk ranking a vital element in prioritizing patches. This involves evaluating vulnerabilities based on their severity and potential impact on an organization’s security posture. By assigning risk scores to vulnerabilities, organizations can allocate resources efficiently, focusing their efforts on addressing critical and high-risk vulnerabilities promptly while maintaining a proactive stance against emerging threats. An effective patch management and risk ranking strategy not only enhances security but also contributes to the overall stability and performance of IT infrastructure, helping organizations stay resilient in the ever-evolving landscape of cybersecurity threats.

To maintain a secure operating environment, applications/add-ons and supporting systems must be suitably patched. A suitable timeframe between identification (or public release) and patching needs to be managed to reduce the window of opportunity for a vulnerability to be exploited by a threat actor. The Microsoft 365 Certification does not stipulate a ‘Patching Window’; however, certification analysts will reject timeframes that are not reasonable or in line with industry best practices. This security control group is also in scope for Platform-as-a-Service (PaaS) hosting environments as the application/add-in third-party software libraries and code base must be patched based upon the risk ranking.

Control No. 4

Provide evidence that patch management policy and procedure documentation define all of the following:

A suitable minimal patching window for critical/high and medium risks vulnerabilities.

Decommissioning of unsupported operating systems and software.

How new security vulnerabilities are identified and assigned a risk score.

Intent: patch management

Patch management is required by many security compliance frameworks i.e., PCI-DSS, ISO 27001, NIST (SP) 800-53, FedRAMP and SOC 2. The importance of good patch management cannot be over stressed

as it can correct security and functionality problems in software, firmware and mitigate vulnerabilities, which helps in the reduction of opportunities for exploitation. The intent of this control is to minimize the window of opportunity a threat actor has, to exploit vulnerabilities that may exist within the in- scope environment.

Provide a patch management policy and procedure documentation which comprehensively covers the following aspects:

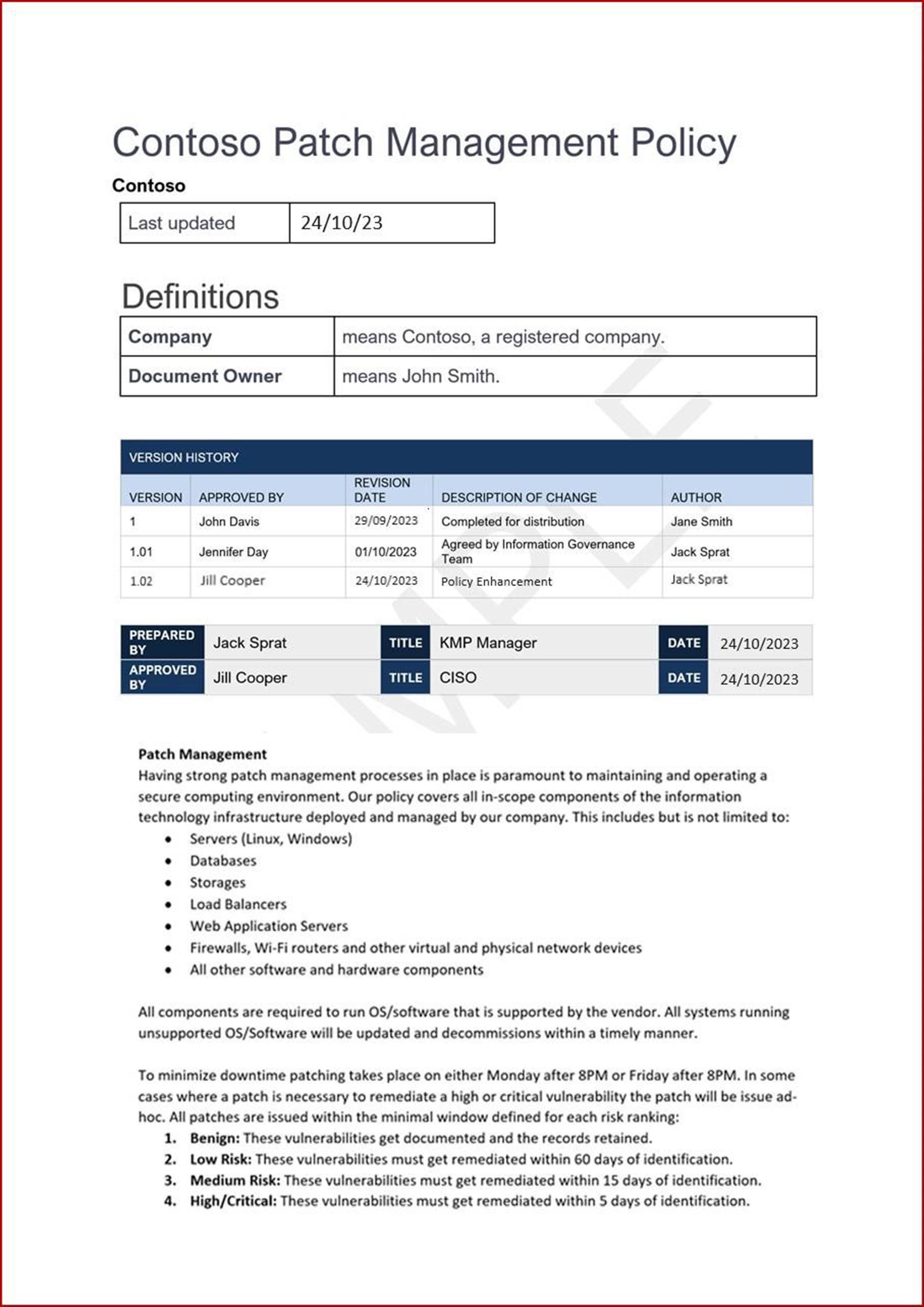

A suitable minimal patching window for critical/high and medium risks vulnerabilities.

The organization’s patch management policy and procedure documentation must clearly define a suitable minimal patching window for vulnerabilities categorized as critical/high and medium risks. Such a provision establishes the maximum allowable time within which patches must be applied after the identification of a vulnerability, based on its risk level. By explicitly stating these time frames, the organization standardized its approach to patch management, minimizing the risk associated with unpatched vulnerabilities.

Decommissioning of unsupported operating systems and software.

The patch management policy includes provisions for the decommissioning of unsupported operating systems and software. Operating systems and software that no longer receive security updates pose a significant risk to an organization’s security posture. Therefore, this control ensures that such systems are identified and removed or replaced in a timely manner, as defined in the policy documentation.

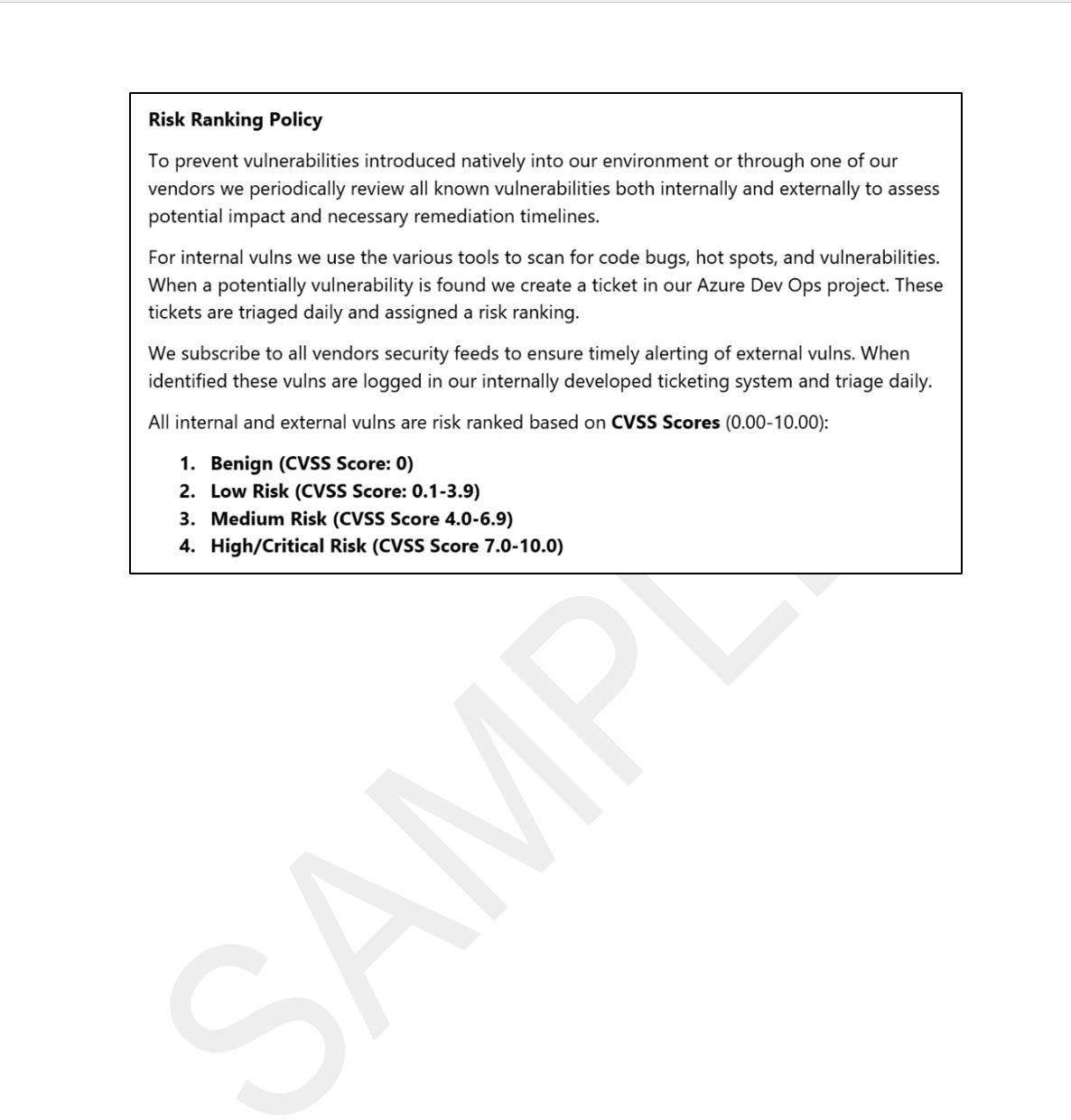

- A documented procedure outlining how new security vulnerabilities are identified and assigned a risk score.

Patching needs to be based upon risk, the riskier the vulnerability, the quicker it needs to be remediated. Risk ranking of identified vulnerabilities is an integral part of this process. The intent of this control is to ensure that there is a documented risk ranking process which is being followed to ensure all identified vulnerabilities are suitably ranked based upon risk. Organizations usually utilize the Common Vulnerability Scoring System (CVSS) rating provided by vendors or security researchers. It is recommended that if organizations rely on CVSS, that a re-ranking mechanism is included within the process to allow the organization to change the ranking based upon an internal risk assessment. Sometimes, the vulnerability may not be applicable due to the way the application has been deployed within the environment. For example, a Java vulnerability may be released which impacts a specific library that is not used by the organization.

Note: Even if you are running within a purely Platform as a Service ‘PaaS/Serverless’ environment, you still have a responsibility to identify vulnerabilities within your code base: i.e., third-party libraries.

Guidelines: patch management

Supply the policy document. Administrative evidence such as policy and procedure documentation detailing the organization’s defined processes which cover all elements for the given control must be provided.

Note: that logical evidence can be provided as supporting evidence which will provide further insight into your organization’s Vulnerability Management Program (VMP), but it will not meet this control on its own.

Example evidence: patch management

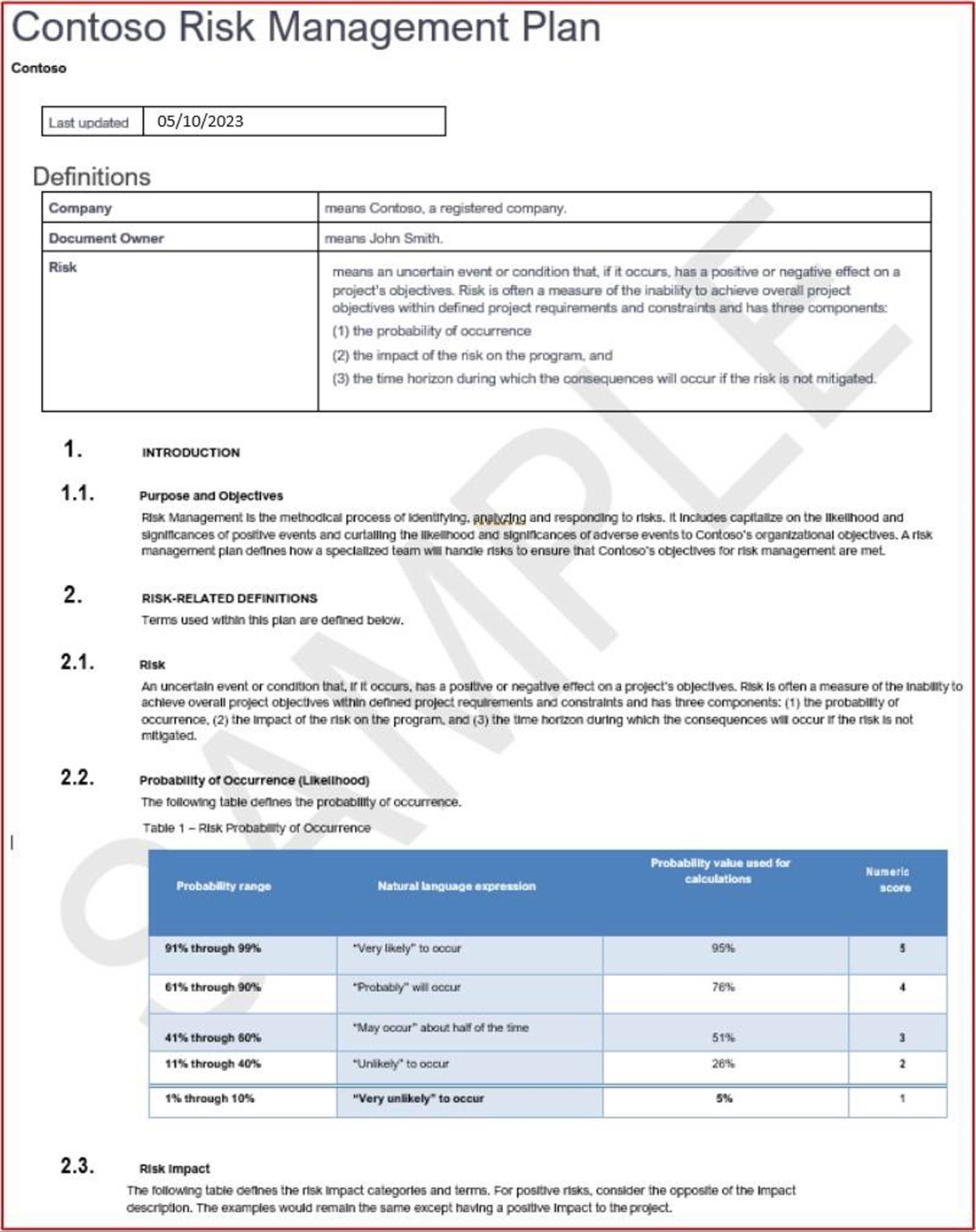

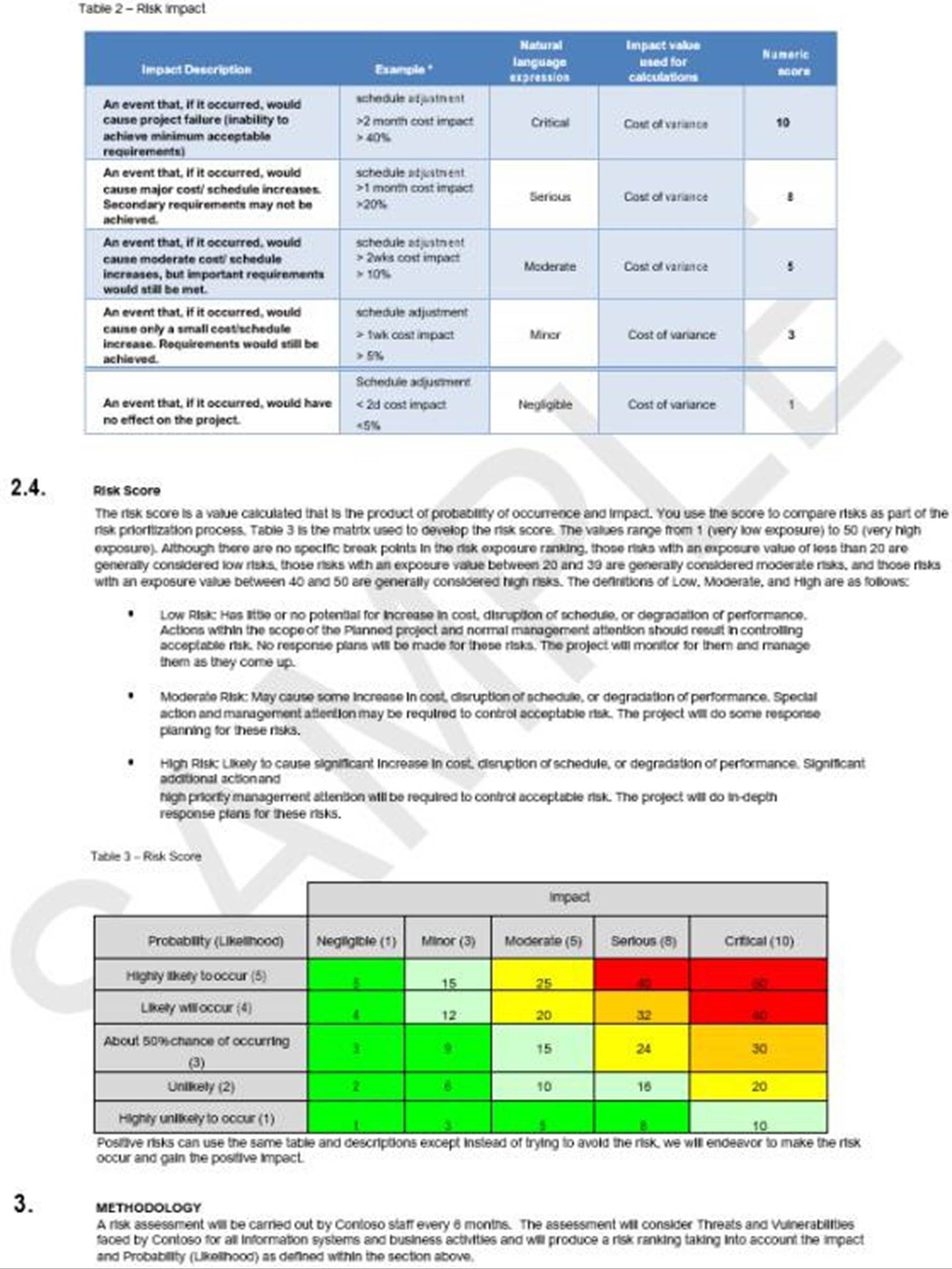

The next screenshot shows a snippet of a patch management/risk ranking policy as well as the different levels of risk categories. This is followed by the classification and remediation timeframes. Please Note: The expectation is for ISVs to share the actual supporting policy/procedure documentation and not simply provide a screenshot.

Example of (Optional) Additional Technical Evidence Supporting the Policy Document

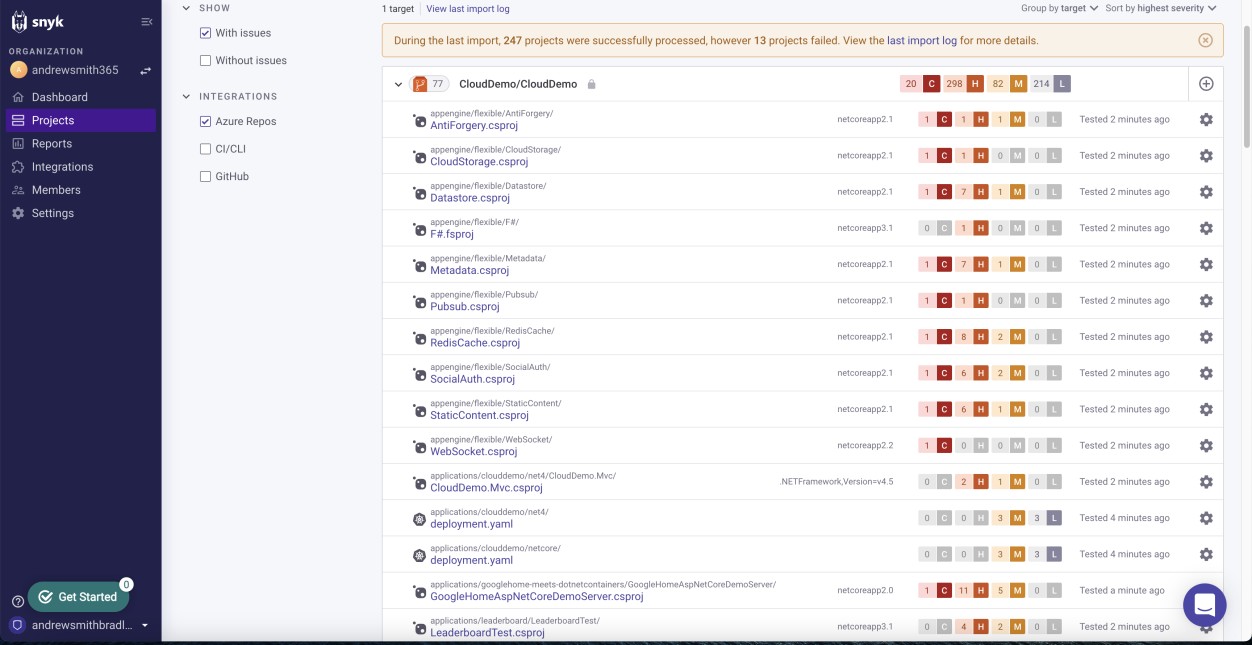

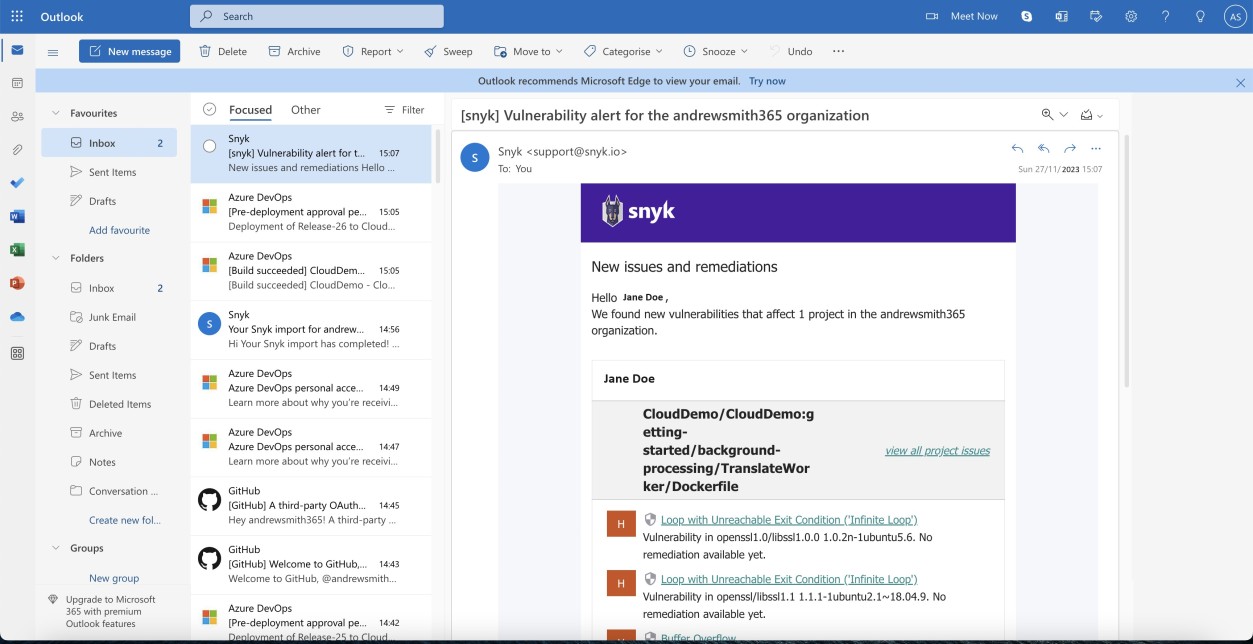

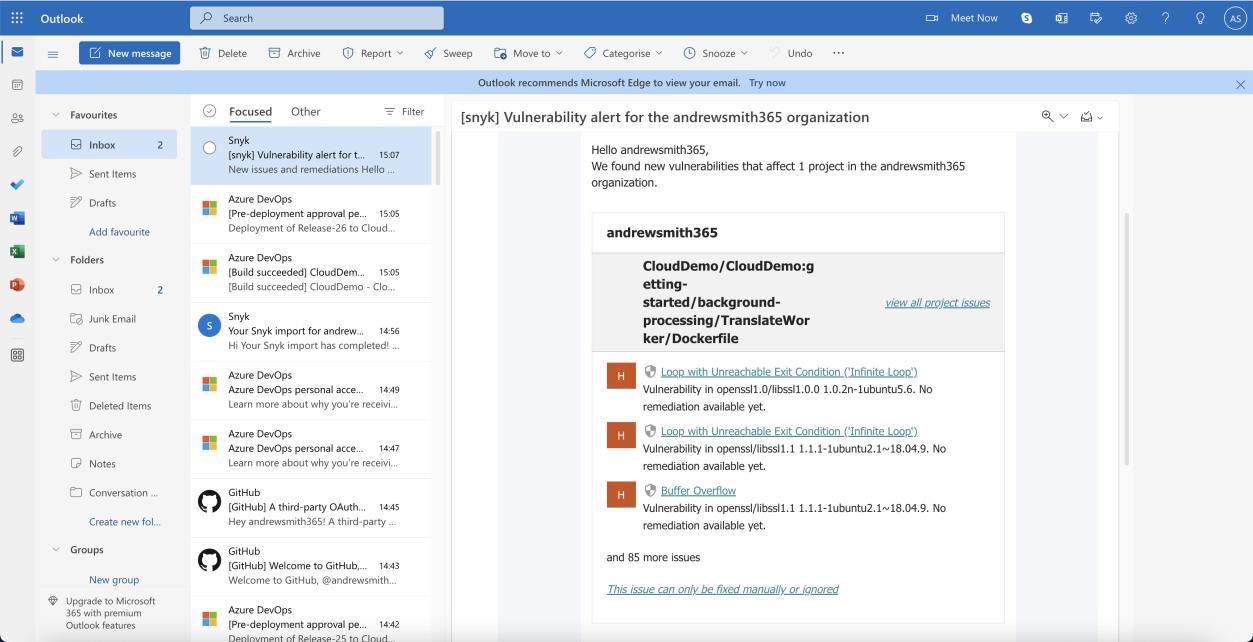

Logical evidence such as vulnerability tracking spreadsheets, vulnerability technical assessment reports, or screenshots of tickets raised through online management platforms to track the status and progress of vulnerabilities used to support the implementation of the process outlined in the policy documentation to be provided. The next screenshot demonstrated that Snyk, which is a Software Composition Analysis (SCA) tool, is used to scan the code base for vulnerabilities. This is followed by a notification via email.

Please Note: In these example a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing any URL, logged in user and the system time and date.

The next two screenshots show an example of the email notification received when new vulnerabilities are flagged by Snyk. We can see the email contains the project affected and the assigned user for receiving the alerts.

The following screenshot shows the vulnerabilities identified.

Please Note: In the previous examples full screenshots were not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Example evidence

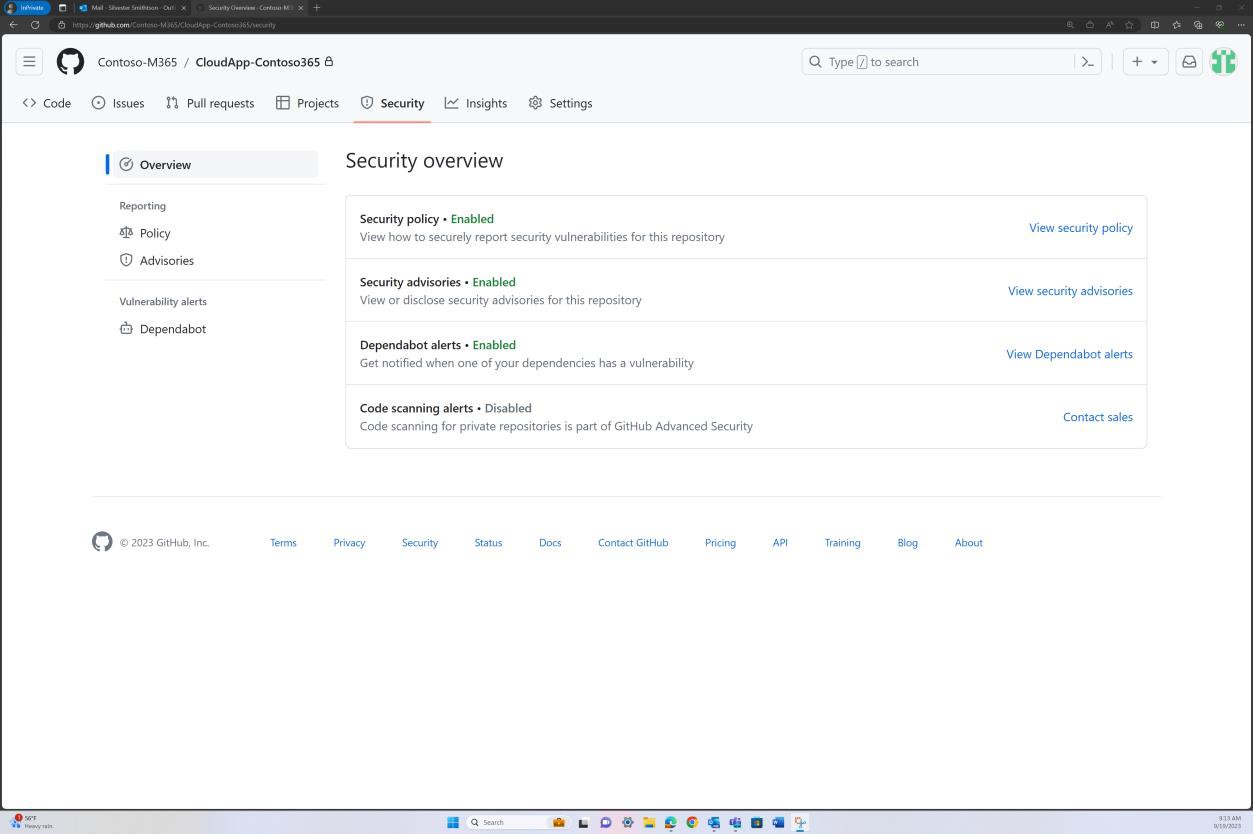

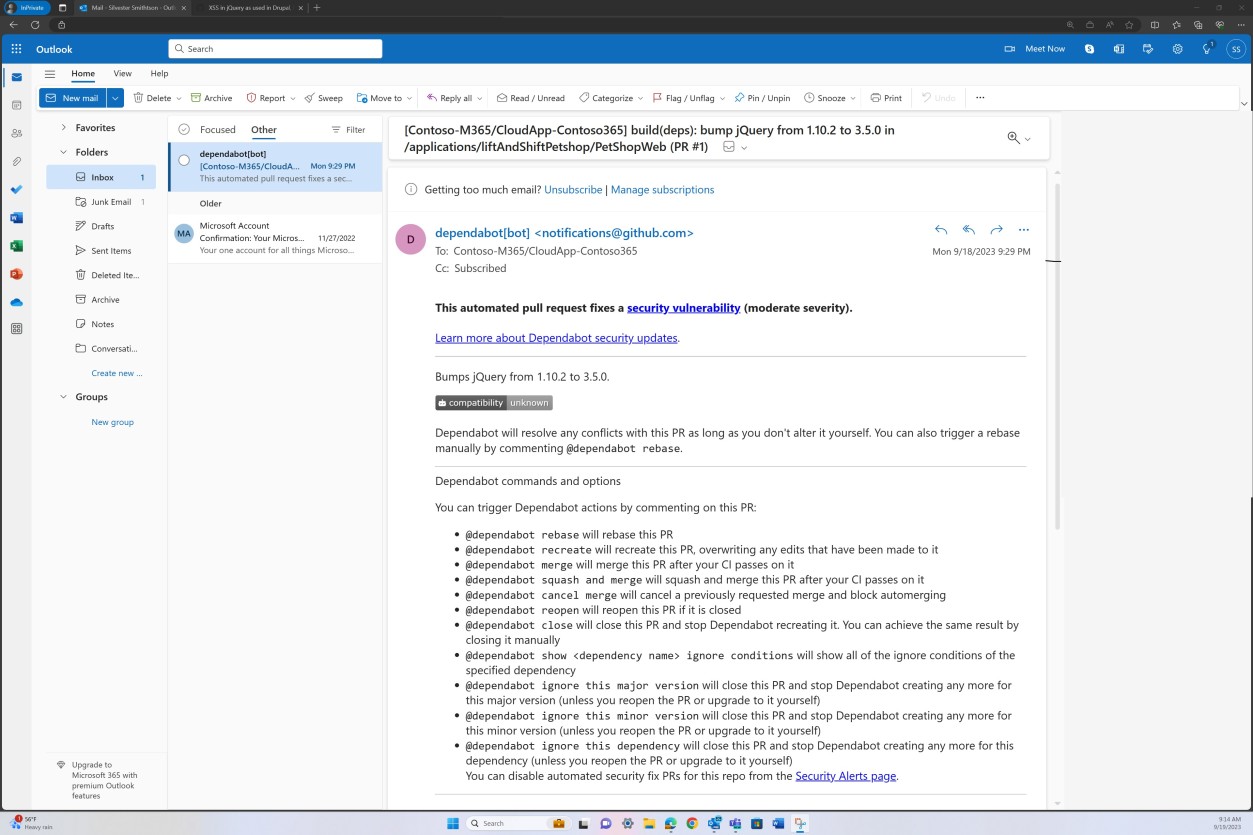

The next screenshots show GitHub security tools configured and enabled to scan for vulnerabilities within the code base and alerts are sent via email.

The email notification shown next is a confirmation that the flagged issues will be automatically resolved through a pull request.

Example evidence

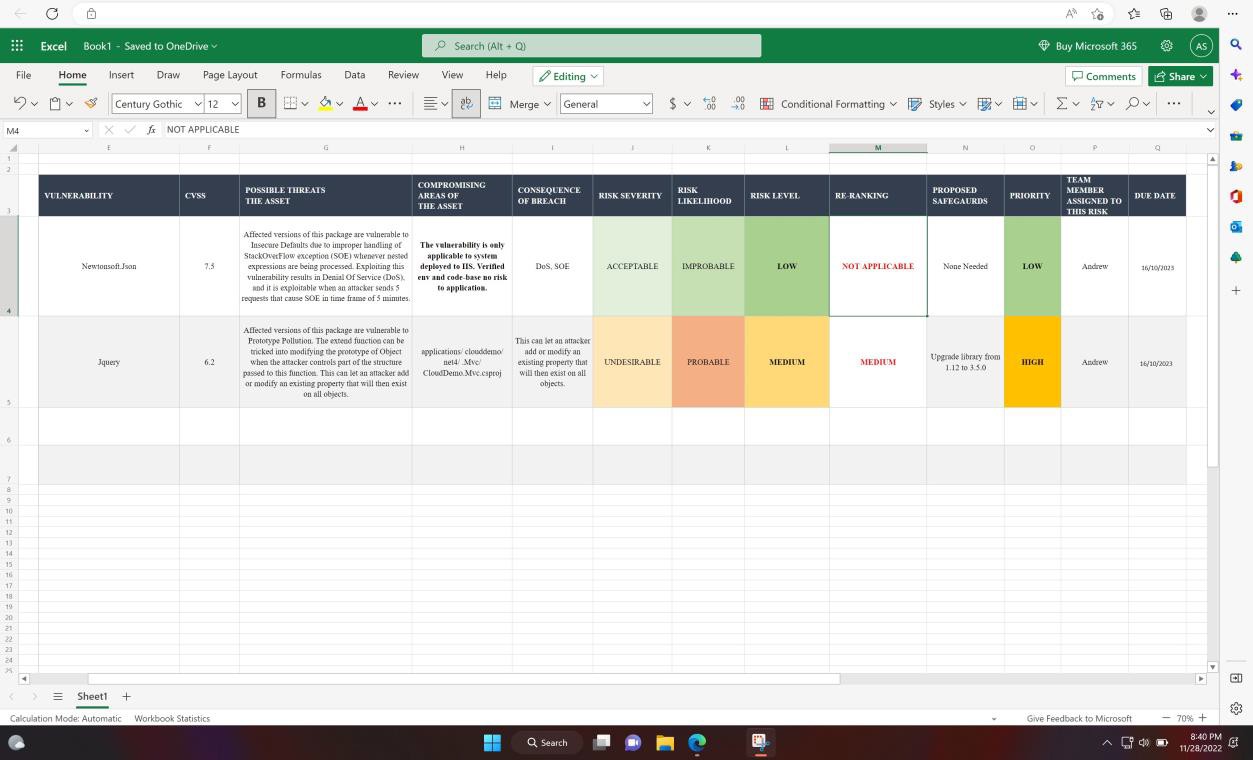

The next screenshot shows the internal technical assessment and ranking of vulnerabilities via a spreadsheet.

Example evidence

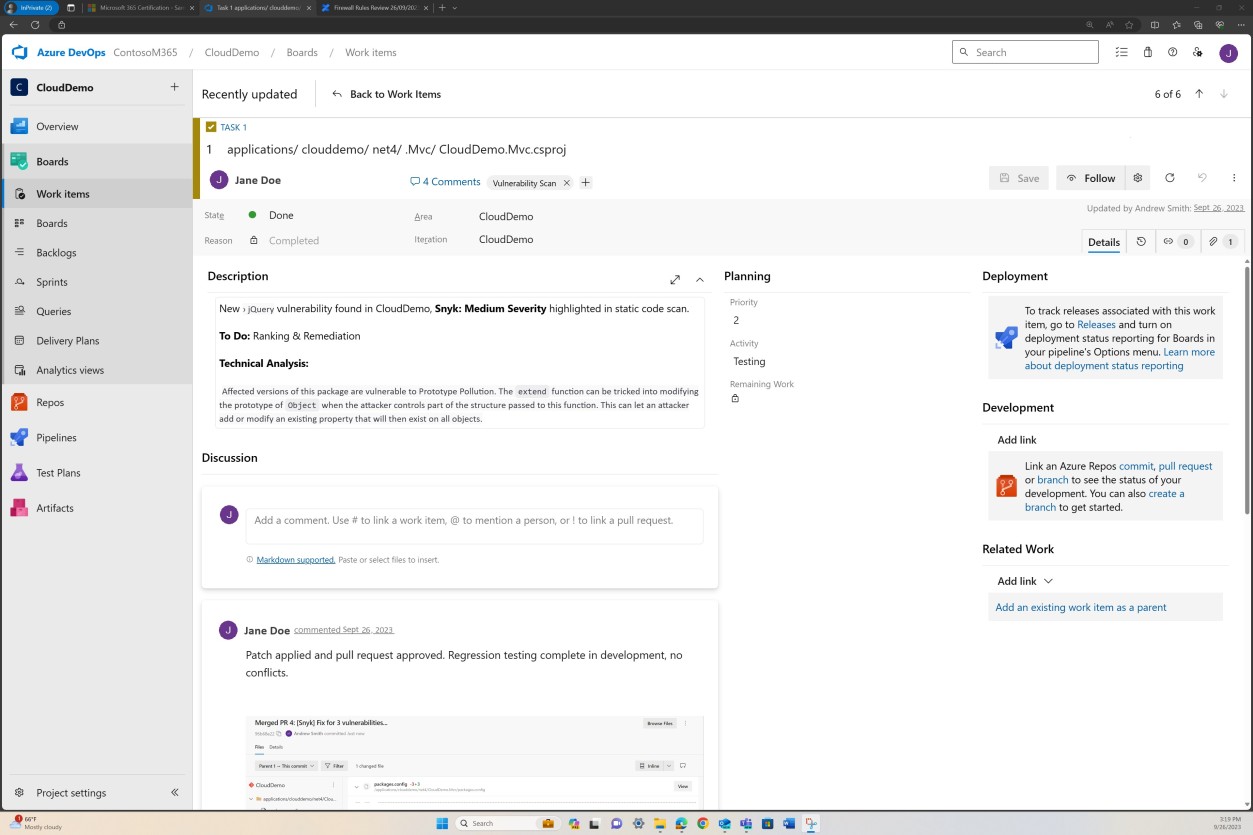

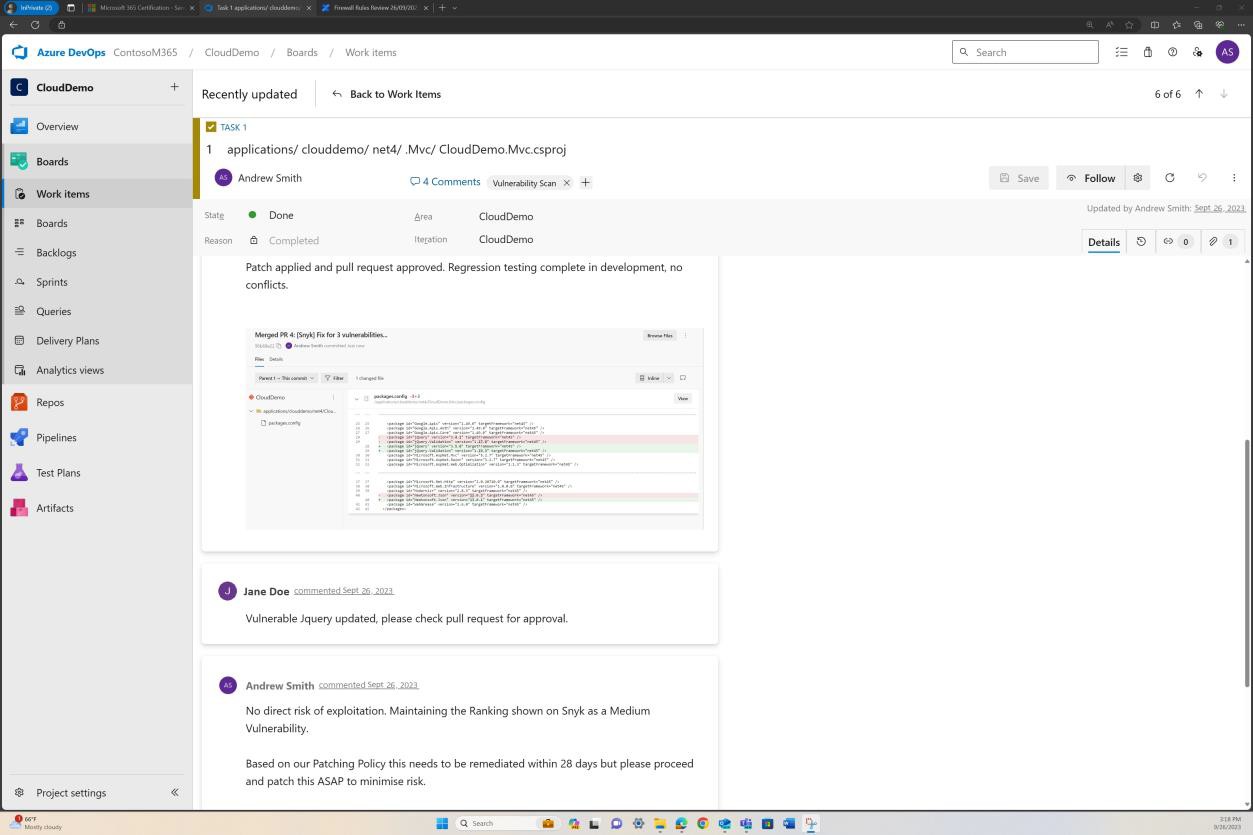

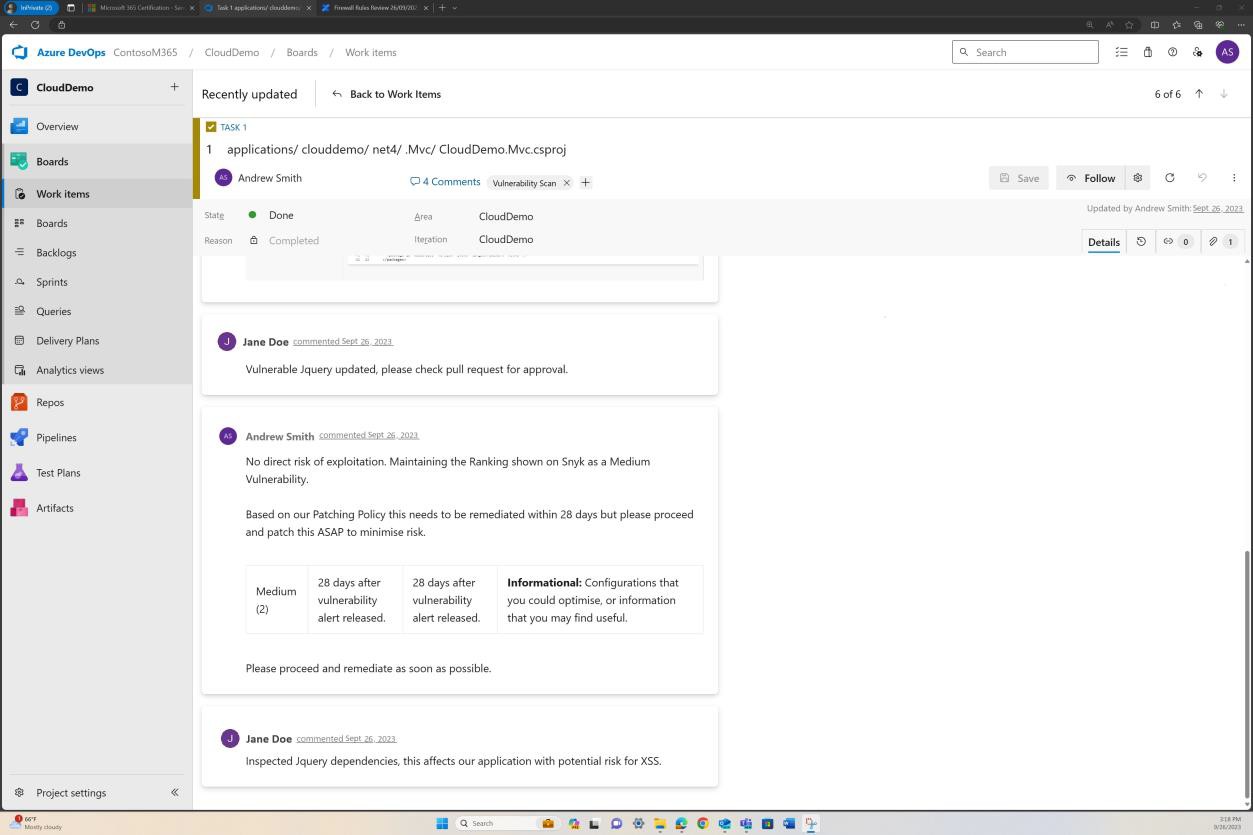

The next screenshots show tickets raised in DevOps for each vulnerability that has been discovered.

Assessment, ranking and reviewed by a separate employee occurs before implementing the changes.

Control No. 5

Provide demonstratable evidence that:

All sampled system components are being patched.

Provide demonstratable evidence that unsupported operating systems and software components are not in use.

Intent: sampled system components

This subpoint aims to ensure that verifiable evidence is provided to confirm that all sampled system components within the organization are being actively patched. The evidence may include but is not limited to, patch management logs, system audit reports, or documented procedures showing that patches have been applied. Where serverless technology or Platform as a Service (PaaS) is employed, this should extend to include the code base to confirm that the most recent and secure versions of libraries and dependencies are in use.

Guidelines: sampled system components

Provide a screenshot for every device in the sample and supporting software components showing that patches are installed in line with the documented patching process. Additionally, provide screenshots demonstrating the patching of the code base.

Example evidence: sampled system components

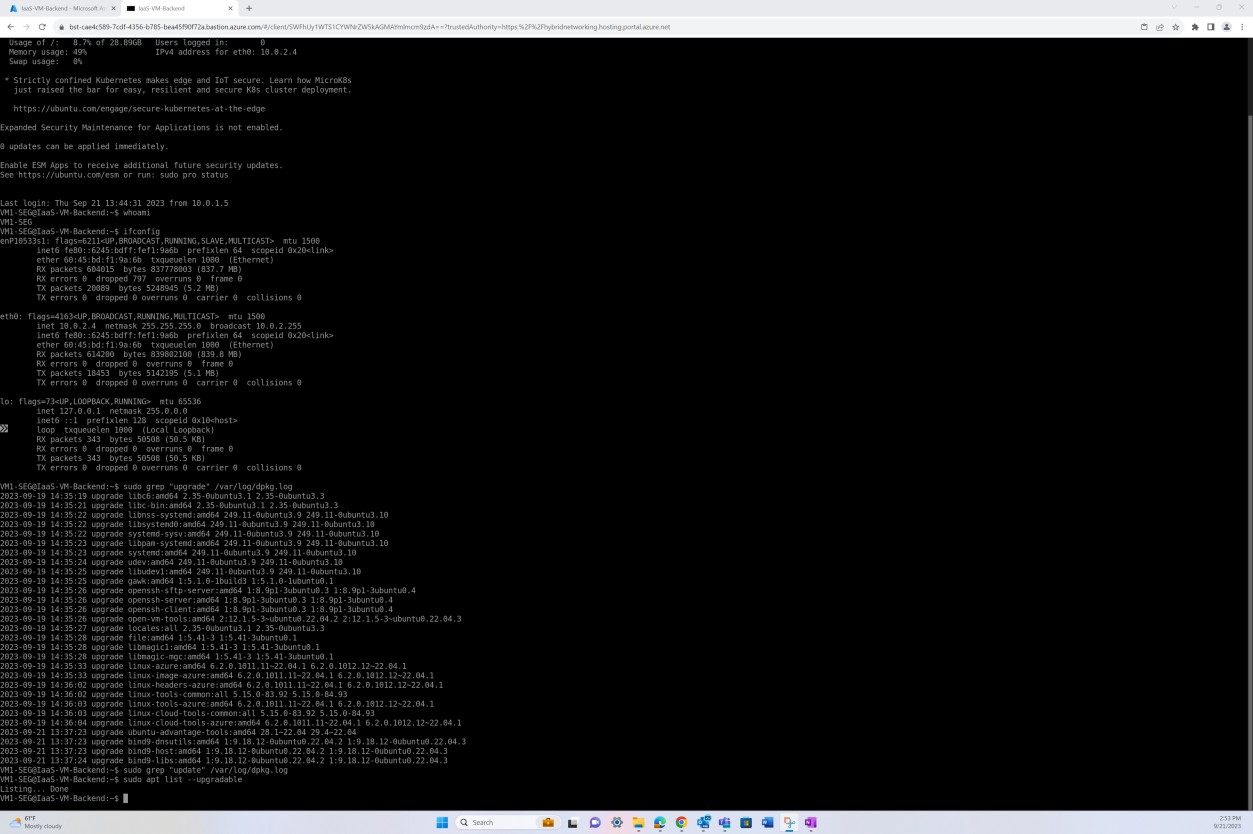

The next screenshot demonstrates the patching of a Linux operating system virtual machine ‘IaaS- VM-Backend’.

Example evidence

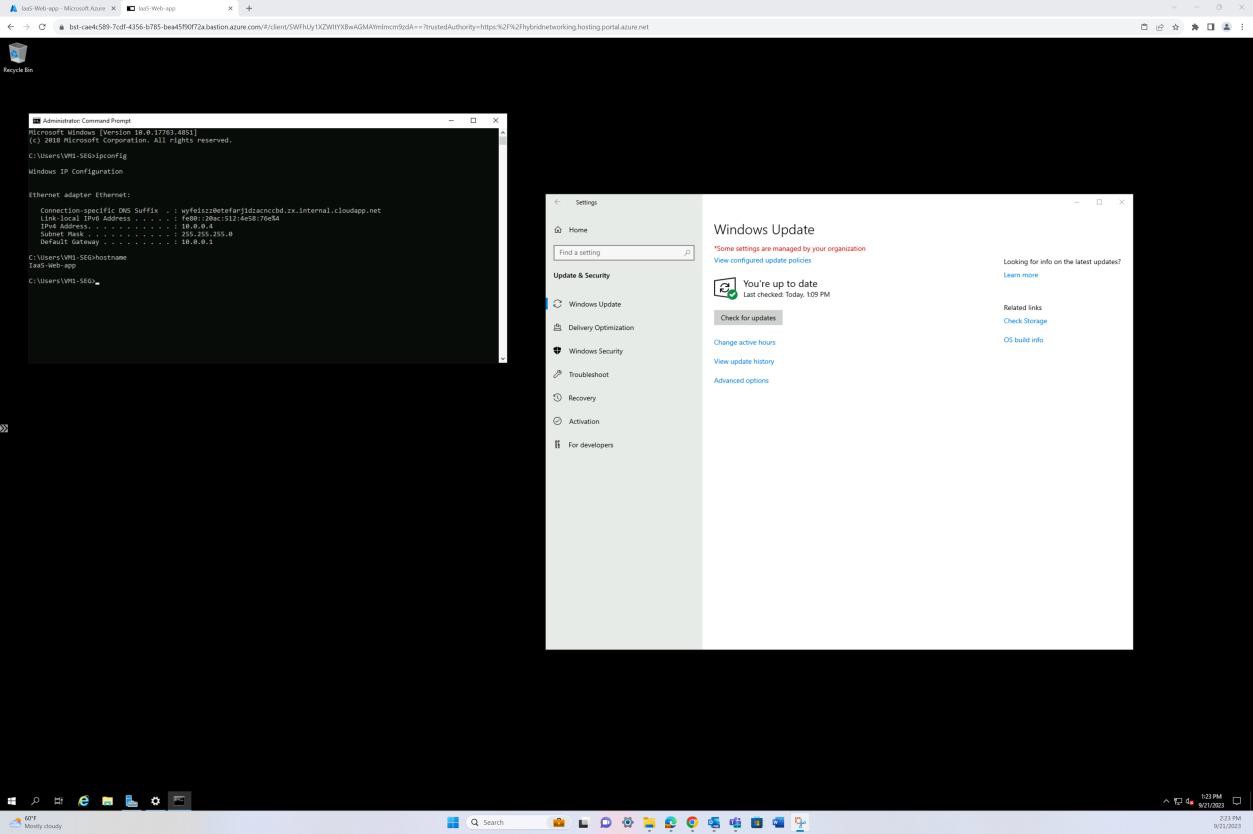

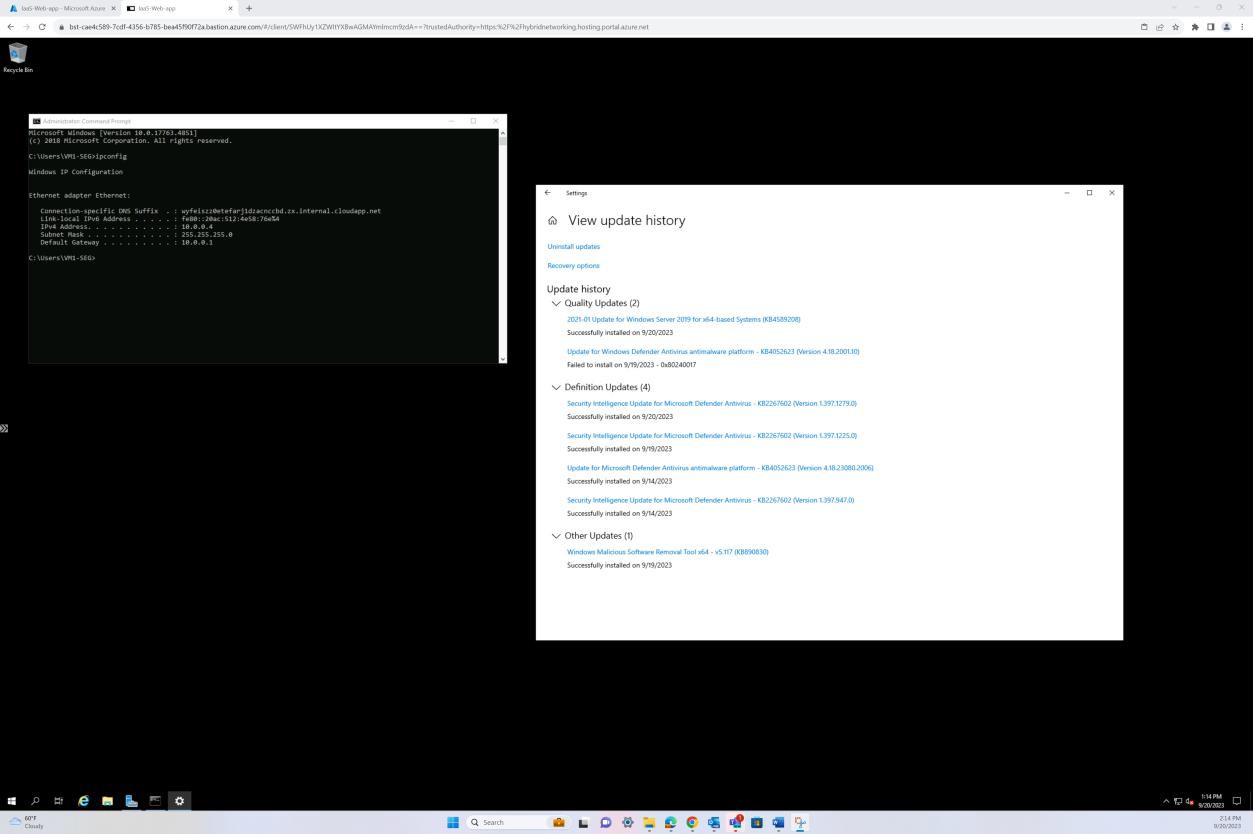

The next screenshot demonstrates the patching of a Windows operating system virtual machine ‘IaaS-Web-app’.

Example evidence

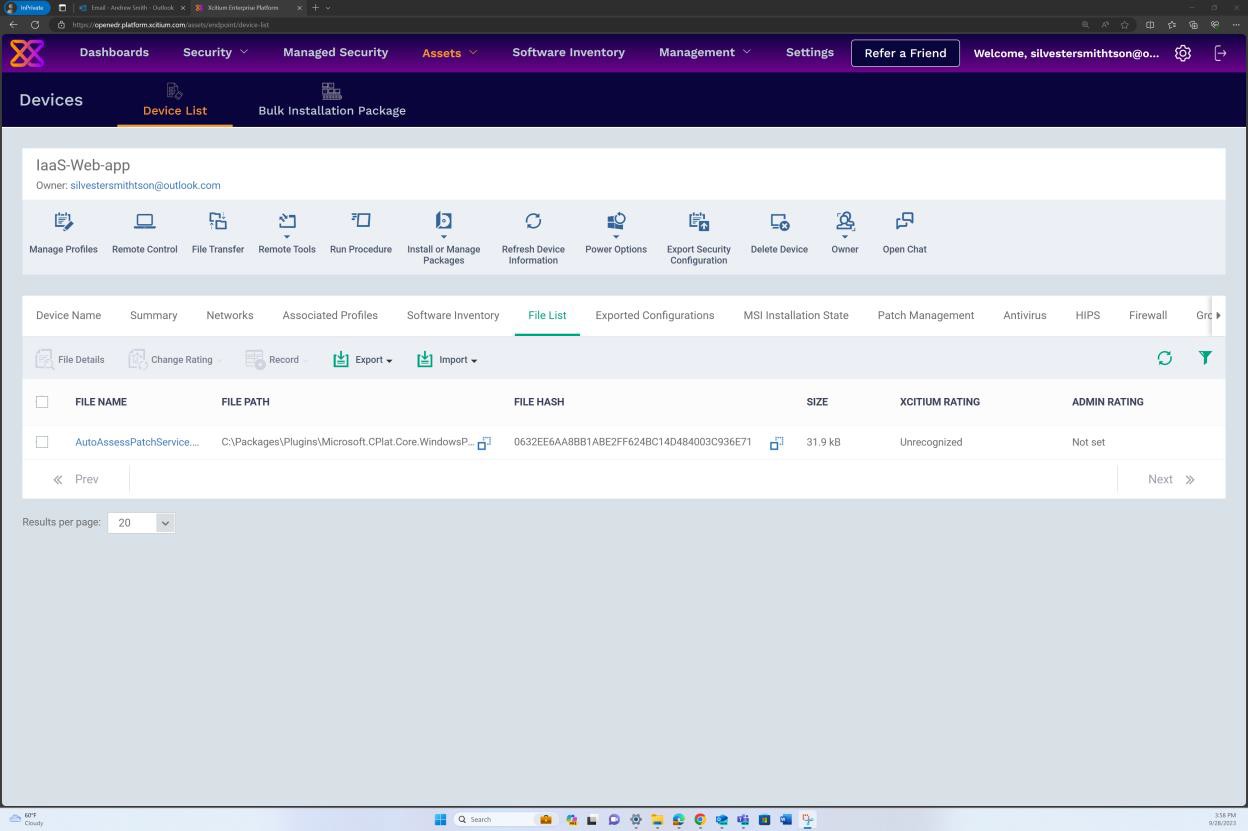

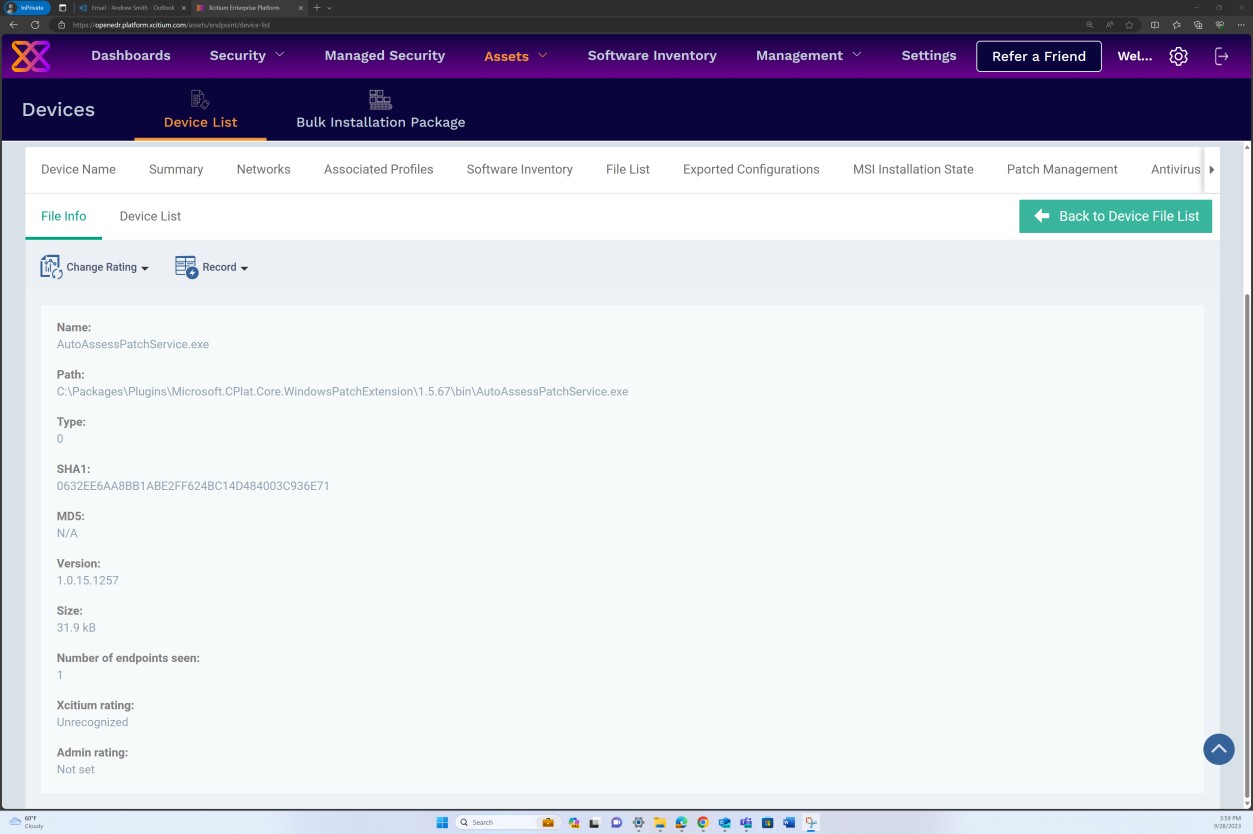

If you are maintaining the patching from any other tools such as Microsoft Intune, Defender for Cloud etc., screenshots can be provided from these tools. The next screenshots from the OpenEDR solution demonstrate that patch management is performed via the OpenEDR portal.

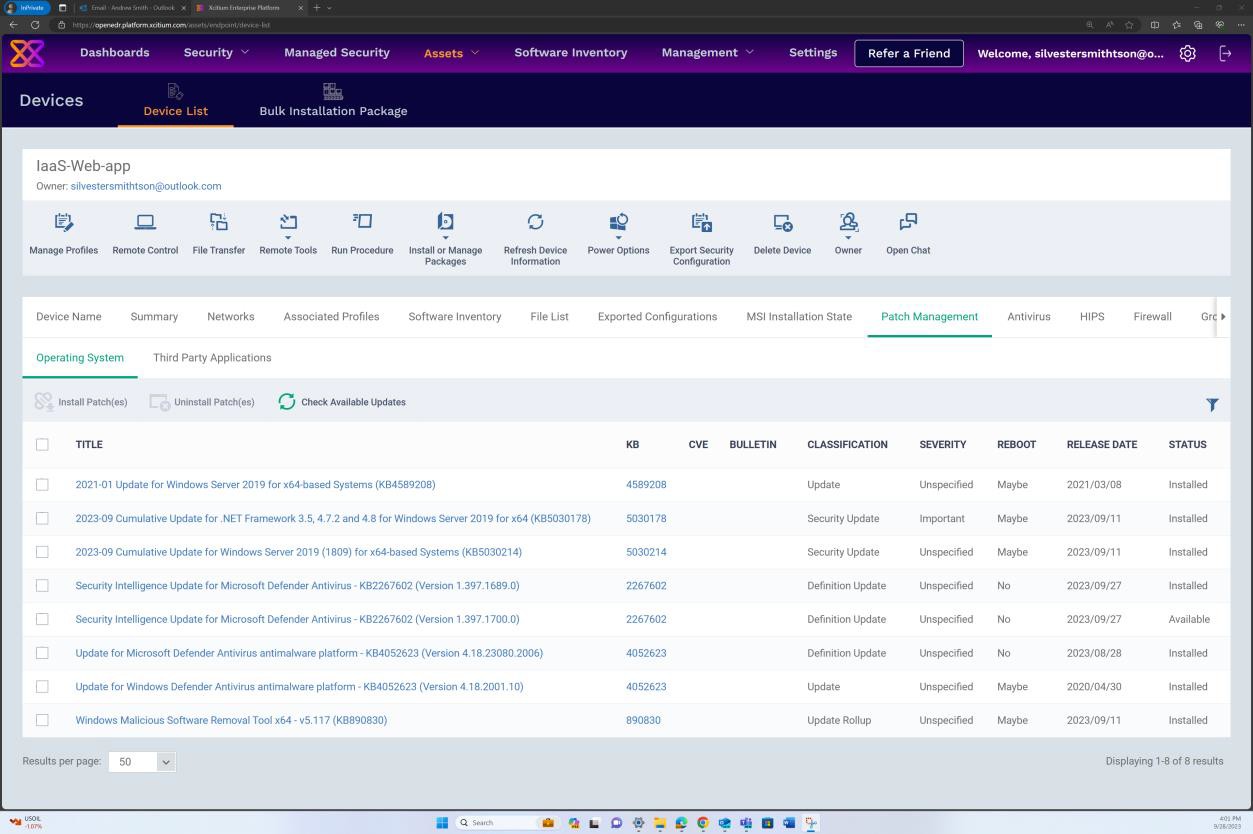

The next screenshot demonstrates that patch management of the in-scope server is done via the OpenEDR platform. The classification and the status are visible below demonstrating that patching occurs.

The next screenshot shows that logs are generated for the patches successfully installed on the server.

Example evidence

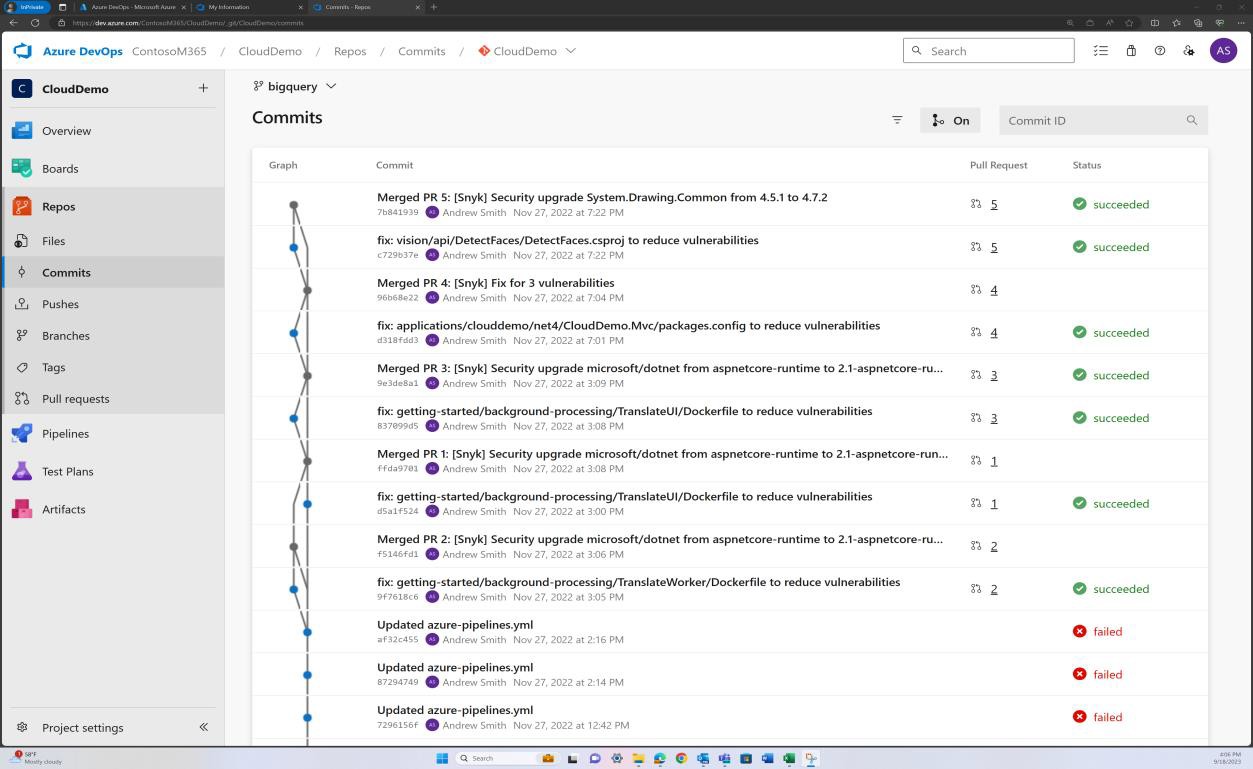

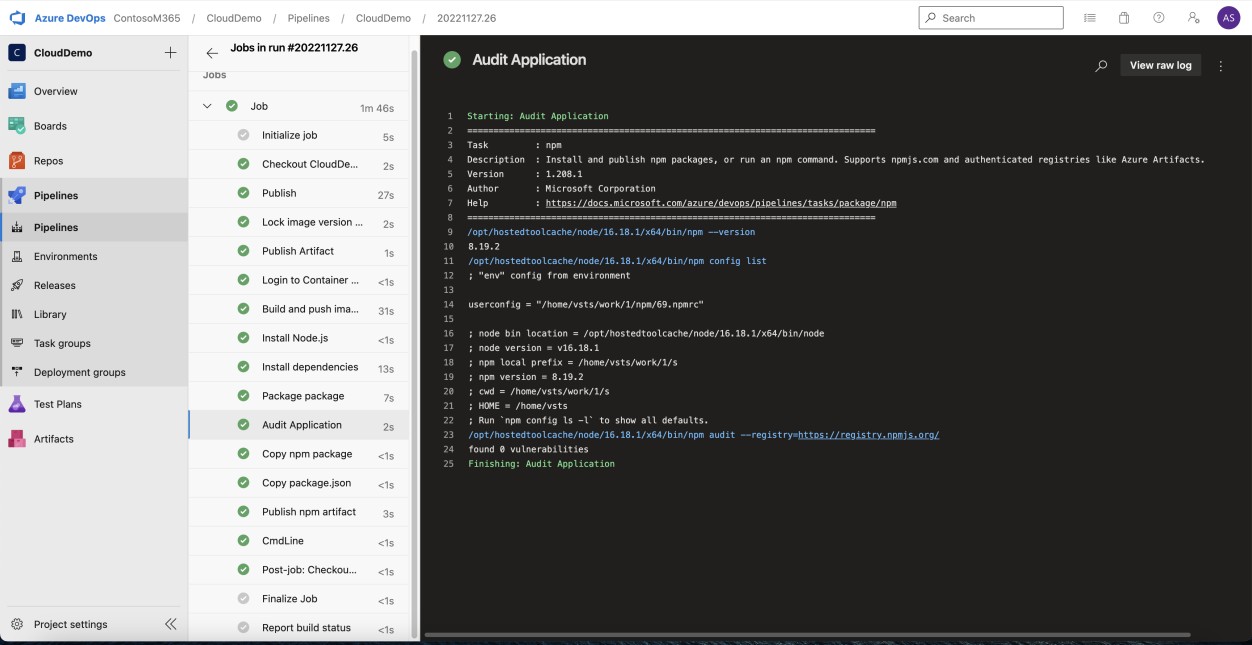

The next screenshot demonstrates that the code base/third party library dependencies are patched via Azure DevOps.

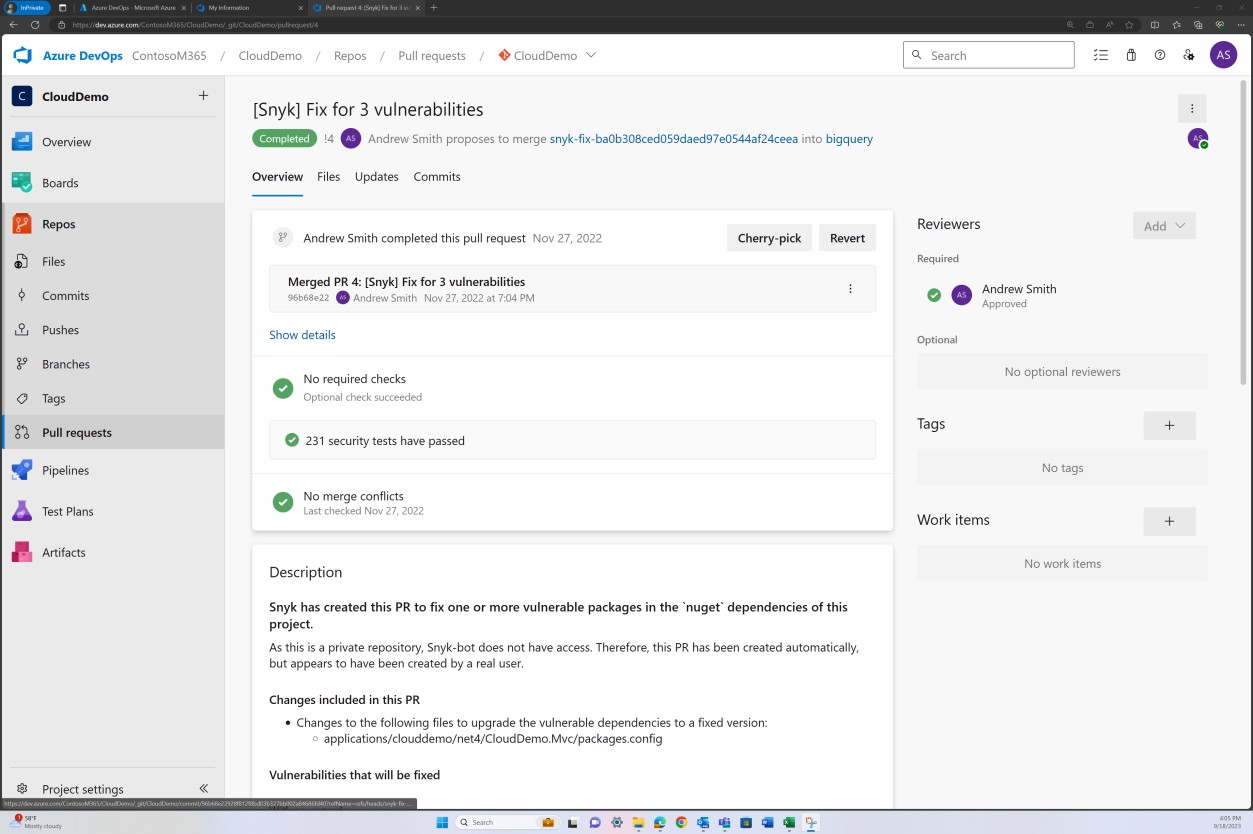

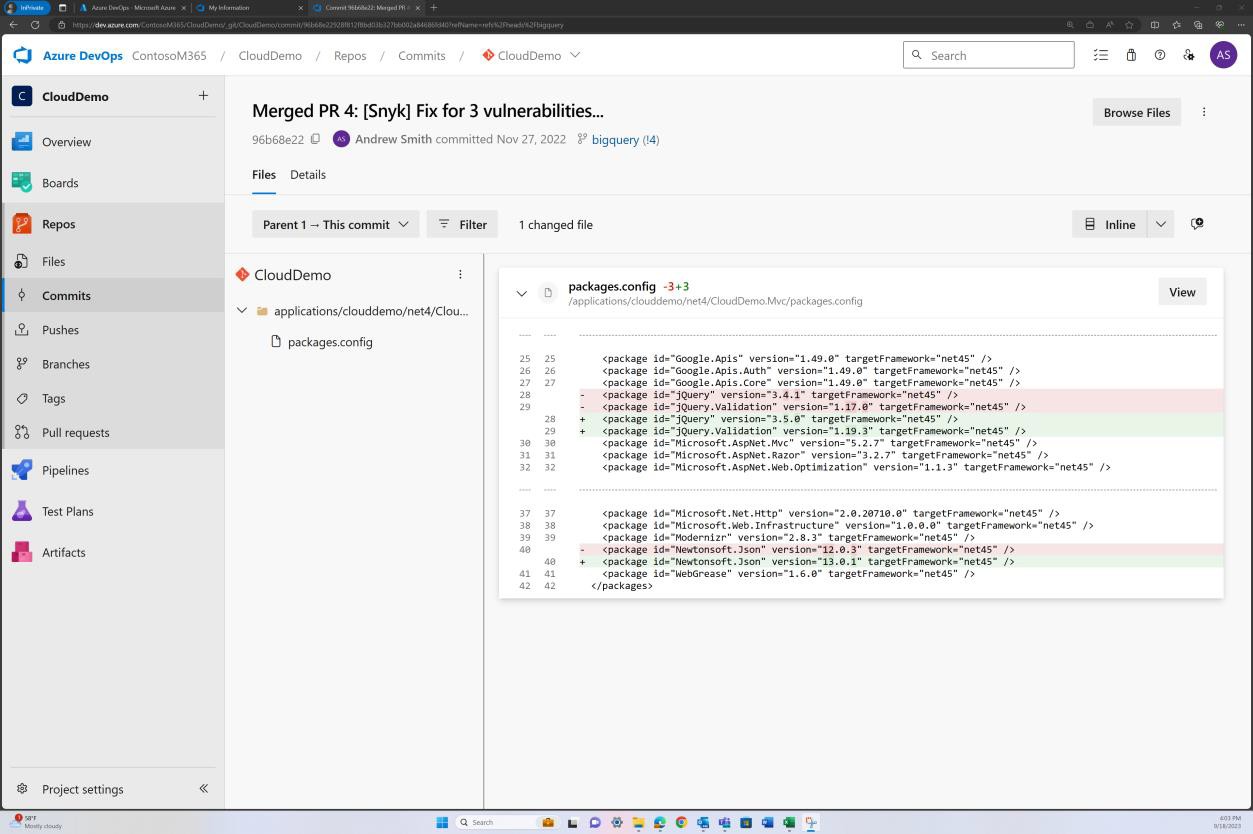

The next screenshot shows that a fix for vulnerabilities discovered by Snyk is being committed to the branch to resolve outdated libraries.

The next screenshot demonstrates that the libraries have been upgraded to supported versions.

Example evidence

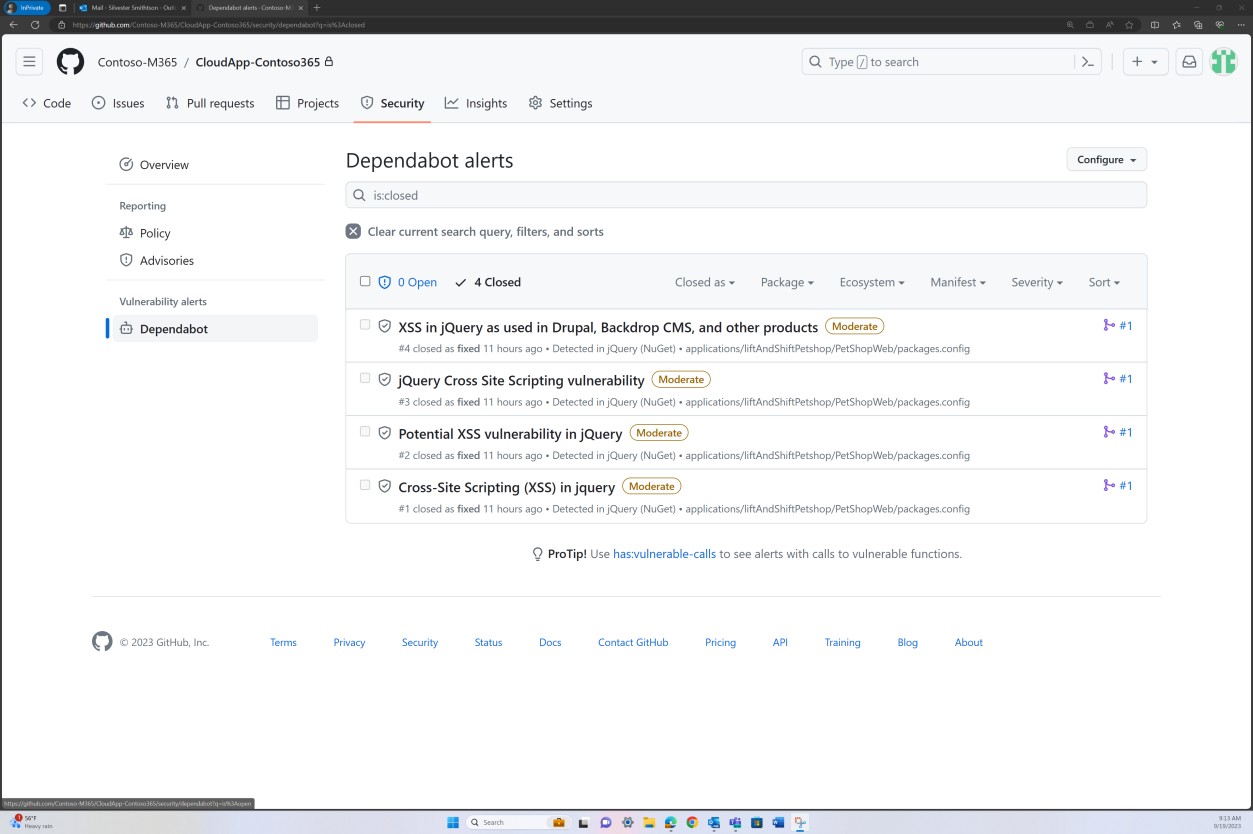

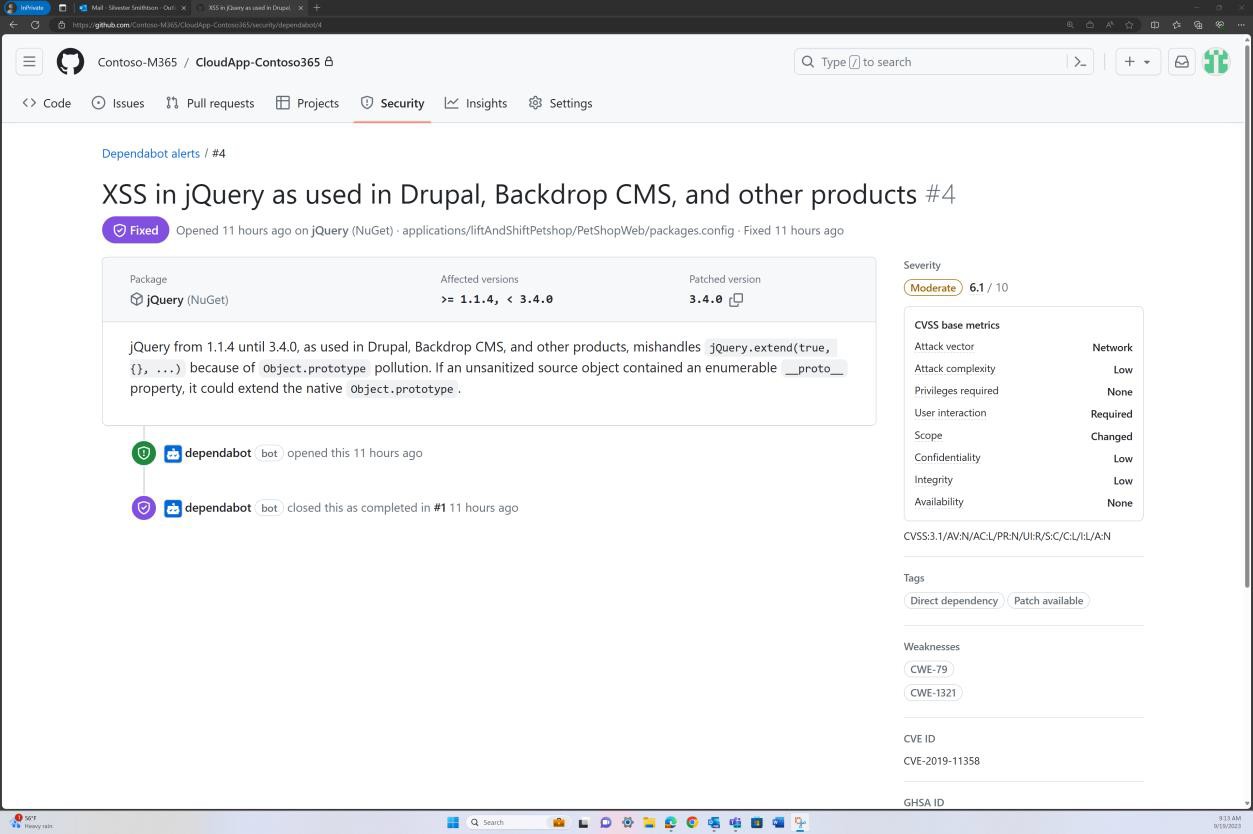

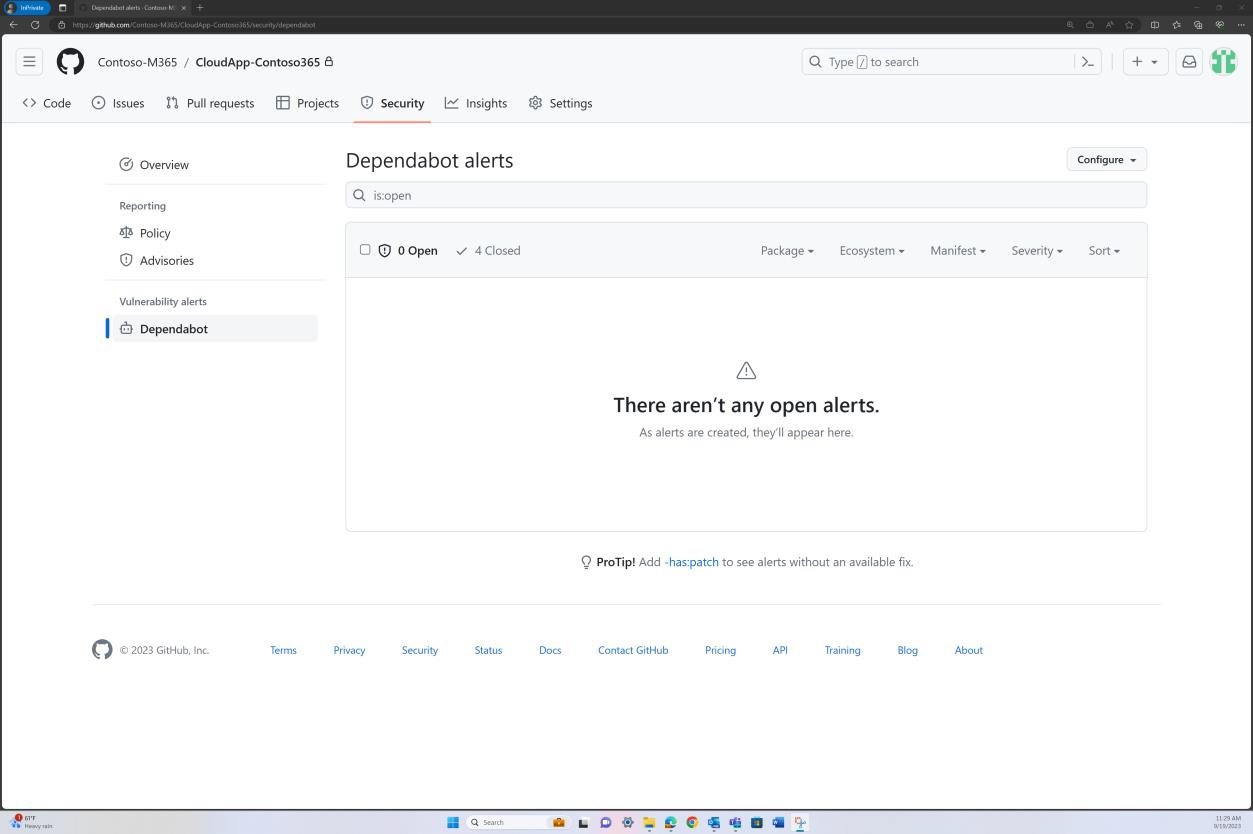

The next screenshots demonstrate that code base patching is maintained via GitHub Dependabot. Closed items demonstrate that patching occurs, and vulnerabilities have been resolved.

Intent: unsupported OS

Software that is not being maintained by vendors will, overtime, suffer from known vulnerabilities that are not fixed. Therefore, the use of unsupported operating systems and software components must not be used within production environments. Where Infrastructure-as-a-Service (IaaS) is deployed, the requirement for this subpoint expands to include both the infrastructure and the code base to ensure that every layer of the technology stack is compliant with the organization’s policy on the use of supported software.

Guidelines: unsupported OS

Provide a screenshot for every device in the sample set chosen by your analyst for you to collect evidence against showing the version of OS running (include the device/server’s name in the screenshot). In addition to this, provide evidence that software components running within the environment are running supported versions of the software. This may be done by providing the output of internal vulnerability scan reports (providing authenticated scanning is included) and/or the output of tools which check third-party libraries, such as Snyk, Trivy or NPM Audit. If running in PaaS only third-party library patching needs to be covered.

Example evidence: unsupported OS

The next screenshot from Azure DevOps NPM audit demonstrates that no unsupported libraries/ dependencies are utilized within the web app.

Note: In the next example a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Example evidence

The next screenshot from GitHub Dependabot demonstrates that no libraries/dependencies are utilized within the web app.

Example evidence

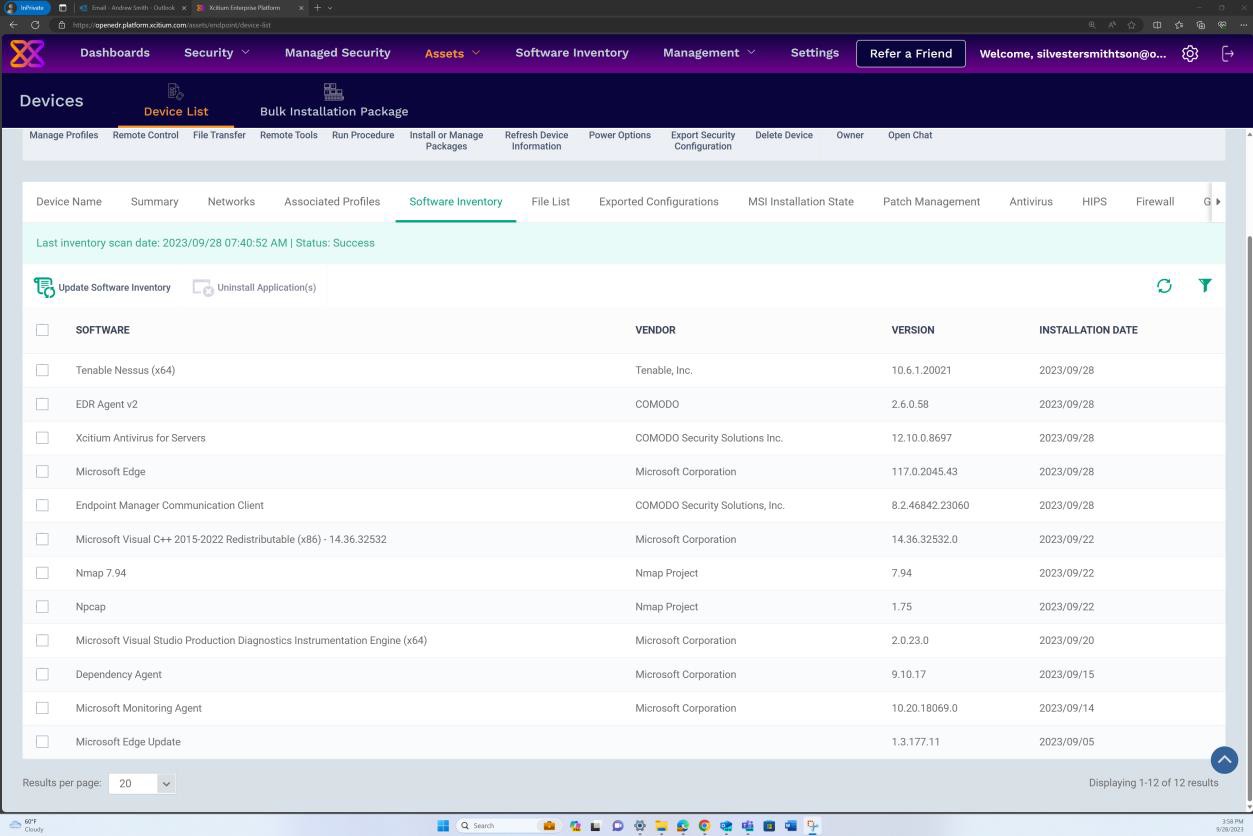

The next screenshot from software inventory for Windows operating system via OpenEDR demonstrates that no unsupported or outdated operating system and software versions were found.

Example evidence

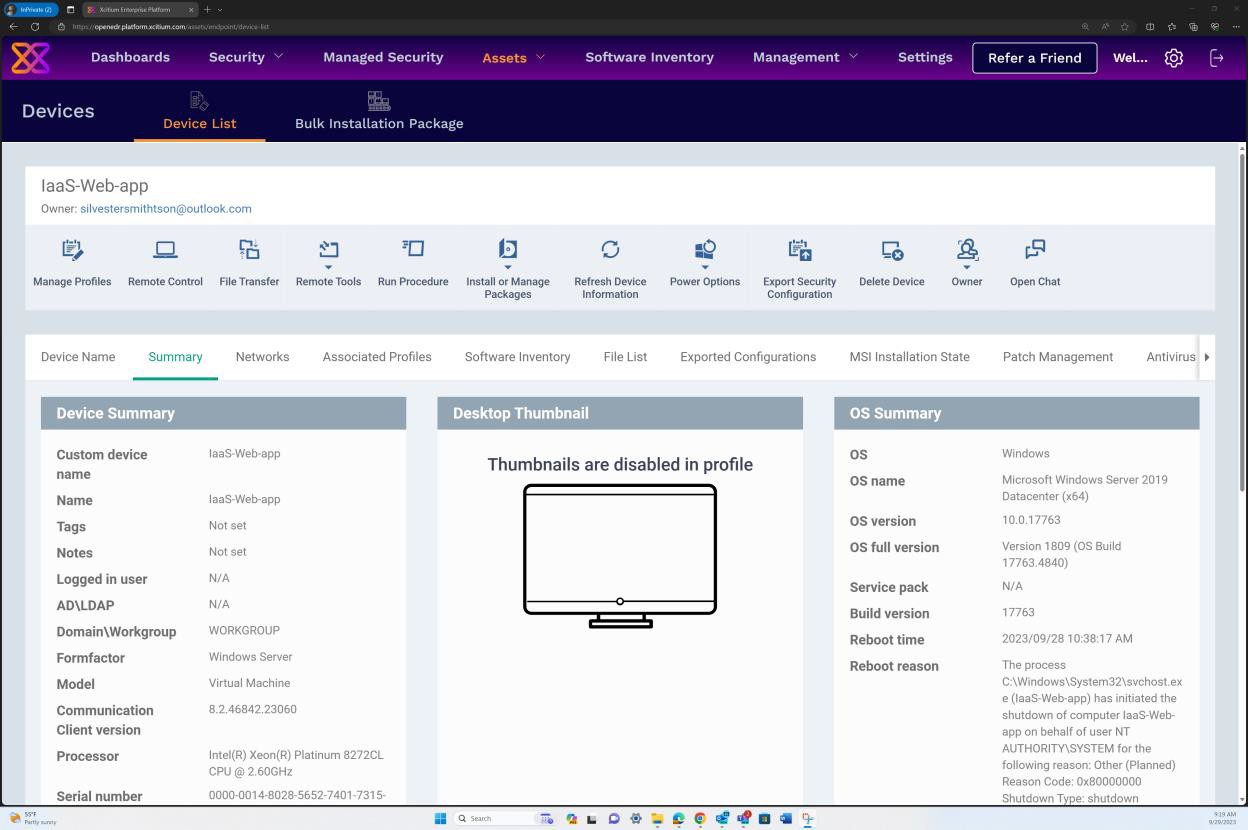

The next screenshot is from OpenEDR under the OS Summary showing Windows Server 2019 Datacenter (x64) and OS full version history including service pack, build version, etc… validating that no unsupported operating system was found.

Example evidence

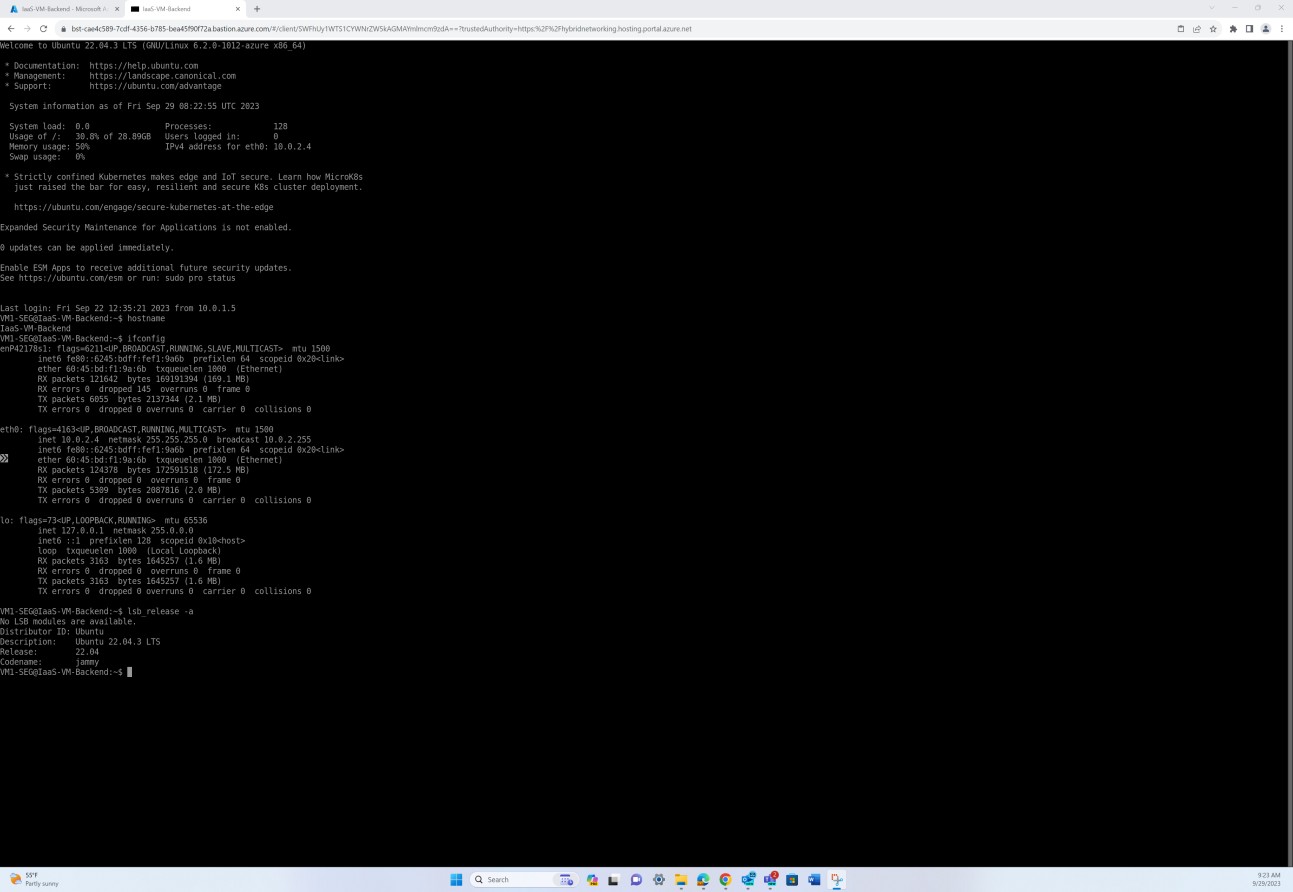

The next screenshot from a Linux operating system server demonstrates all the version details including Distributor ID, Description, Release and Codename validating that no unsupported Linux operating system was found.

Example evidence:

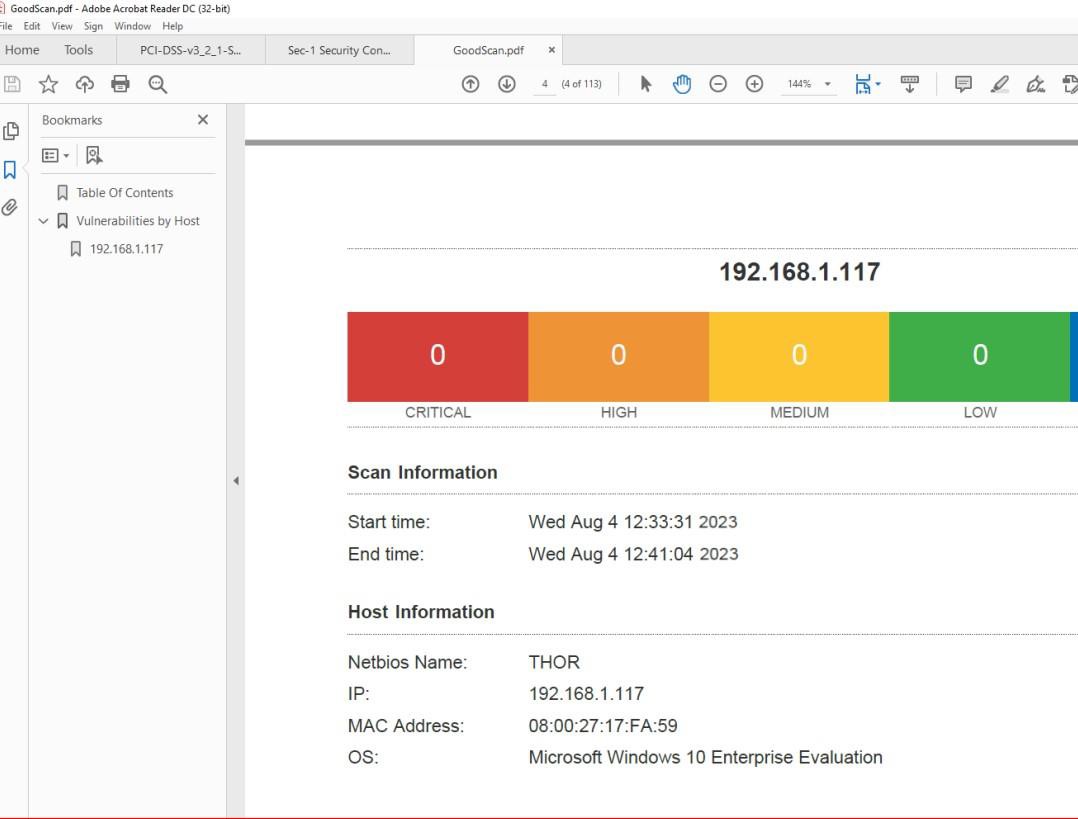

The next screenshot from Nessus vulnerability scan report demonstrates that no unsupported operating system (OS) and software were found on the target machine.

Please Note: In the previous examples a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Vulnerability scanning

Vulnerability scanning looks for possible weaknesses in an organization’s computer system, networks, and web applications to identify holes which could potentially lead to security breaches and the exposure of sensitive data. Vulnerability scanning is often required by industry standards and government regulations, for example, the PCI DSS (Payment Card Industry Data Security Standard).

A report by Security Metric entitled “2020 Security Metrics Guide to PCI DSS Compliance” states that ‘on average it took 166 days from the time an organization was seen to have vulnerabilities for an attacker to compromise the system. Once compromised, attackers had access to sensitive data for an average of 127 days’ therefore this control is aimed at identifying potential security weakness within the in-scope environment.

By introducing regular vulnerability assessments, organizations can detect weaknesses and insecurities within their environments which may provide an entry point for a malicious actor to compromise the environment. Vulnerability scanning can help to identify missing patches or misconfigurations within the environment. By regularly conducting these scans, an organization can provide appropriate remediation to minimize the risk of a compromise due to issues that are commonly picked up by these vulnerability scanning tools.

Control No. 6

Please provide evidence demonstrating that:

Quarterly infrastructure and web application vulnerability scanning is carried out.

Scanning needs to be carried out against the entire public footprint (IPs/URLs) and internal IP ranges if the environment is IaaS, Hybrid or On-prem.

Note: This must include the full scope of the environment.

Intent: vulnerability scanning

This control aims to ensure that the organization conducts vulnerability scanning on a quarterly basis, targeting both its infrastructure and web applications. The scanning must be comprehensive, covering both public footprints such as public IPs and URLs, as well as internal IP ranges. The scope of scanning varies depending on the nature of the organization’s infrastructure:

If an organization implements hybrid, on-premises, or Infrastructure-as-a-Service (IaaS) models, the scanning must encompass both external public IPs/URLs and internal IP ranges.

If an organization implements Platform-as-a-Service (PaaS), the scanning must encompass external public IPs/URLs only.

This control also mandates that the scanning should include the full scope of the environment, thereby leaving no component unchecked. The objective is to identify and evaluate vulnerabilities in all parts of the organization’s technology stack to ensure comprehensive security.

Guidelines: vulnerability scanning

Provide the full scan report(s) for each quarter’s vulnerability scans that have been carried out over the past 12 months. The reports should clearly state the targets to validate that the full public footprint is included, and where applicable, each internal subnet. Provide ALL scan reports for EVERY quarter.

Example evidence: vulnerability scanning

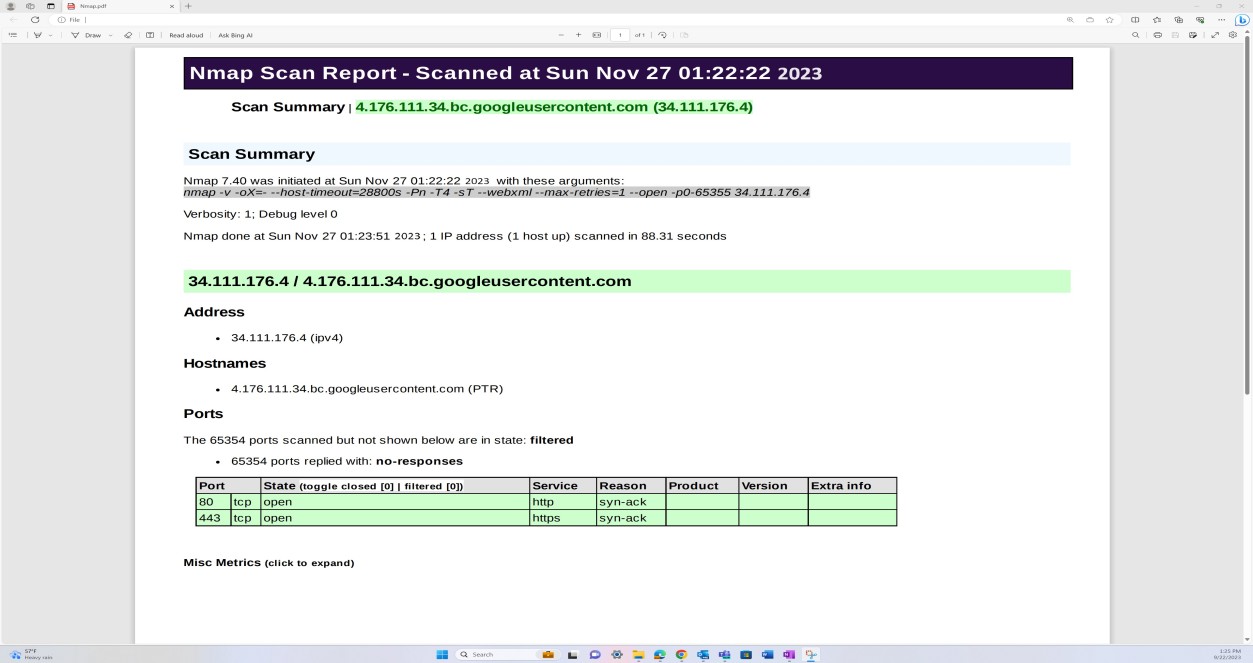

The next screenshot shows a network discovery and a port scan performed via Nmap on the External infrastructure to identify any unsecured open ports.

Note: Nmap on its own cannot be utilized to meet this control as the expectation is that a complete vulnerability scan must be provided. The Nmap port discovery is part of the vulnerability management process exemplified below and is complemented by OpenVAS and OWASP ZAP scans against the external infrastructure.

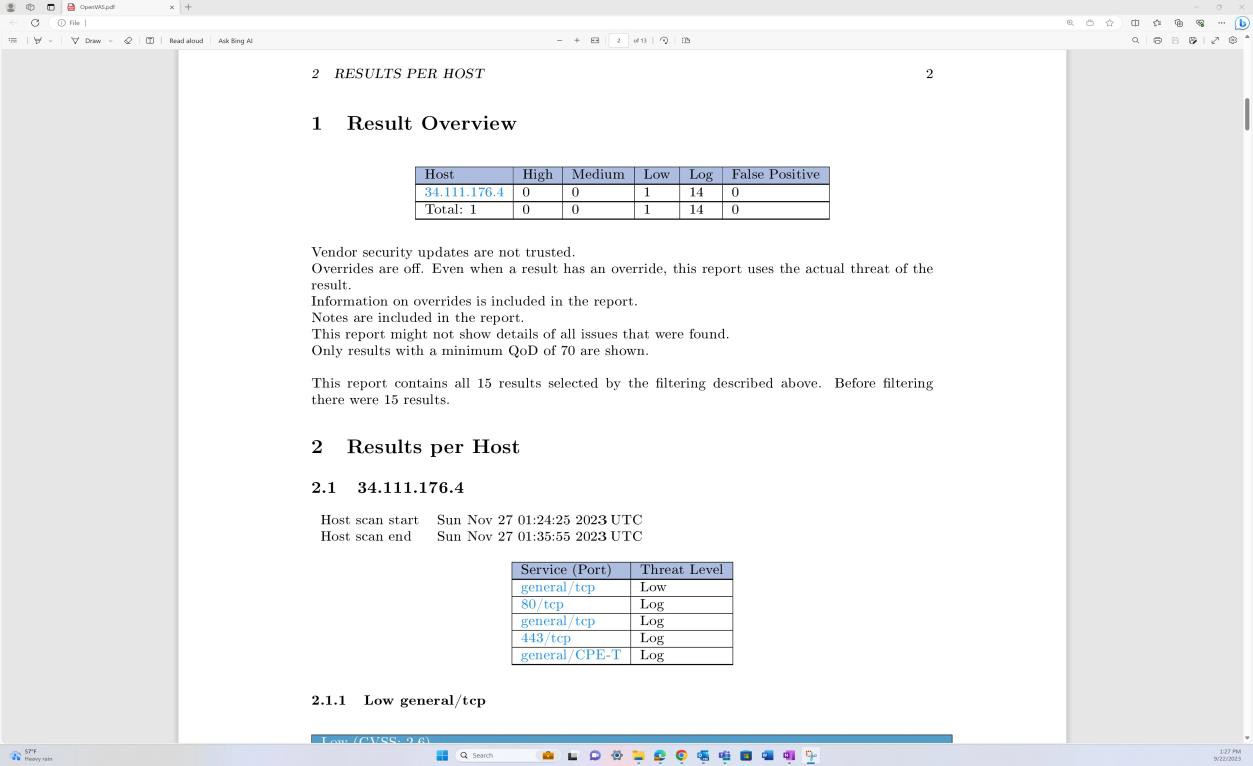

The screenshot shows vulnerability scanning via OpenVAS against the external infrastructure to identify any misconfigurations and outstanding vulnerabilities.

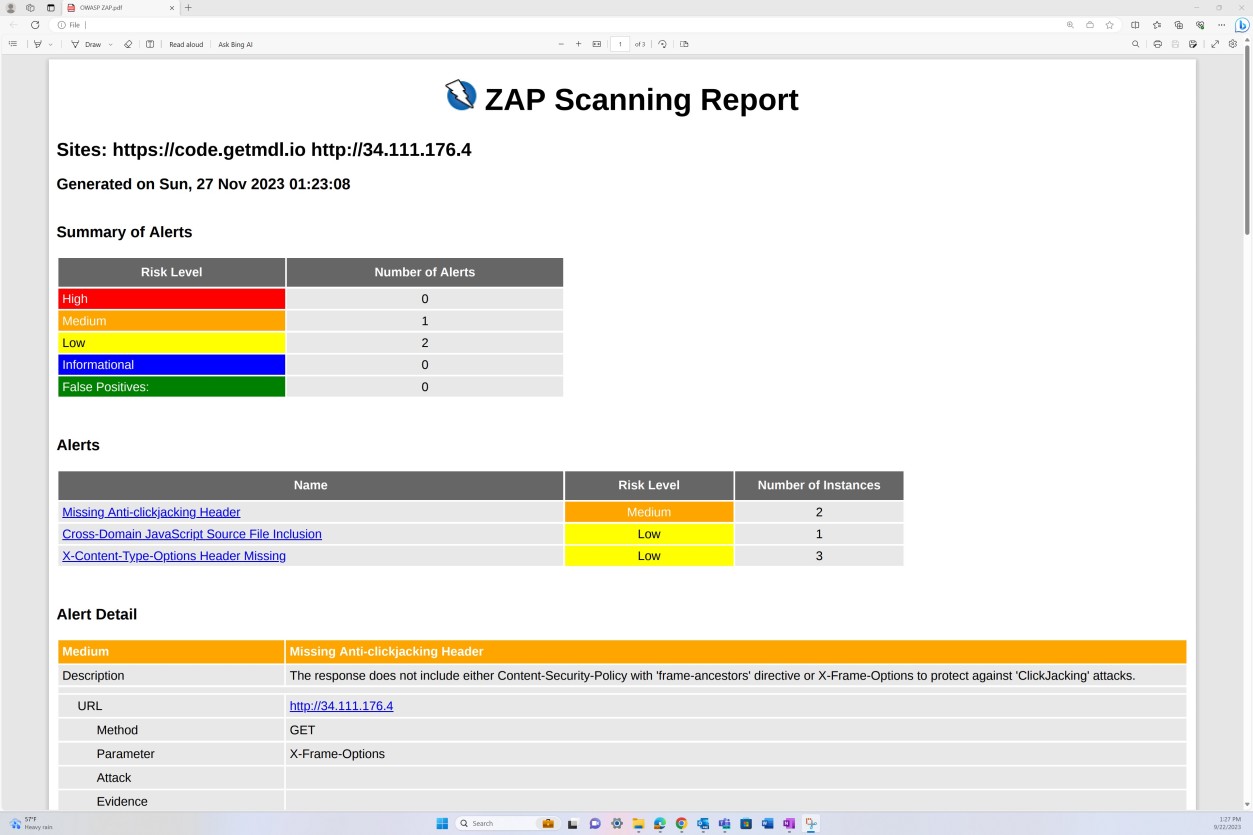

The next screenshot shows the vulnerability scan report from OWASP ZAP demonstrating dynamic application security testing.

Example evidence: vulnerability scanning

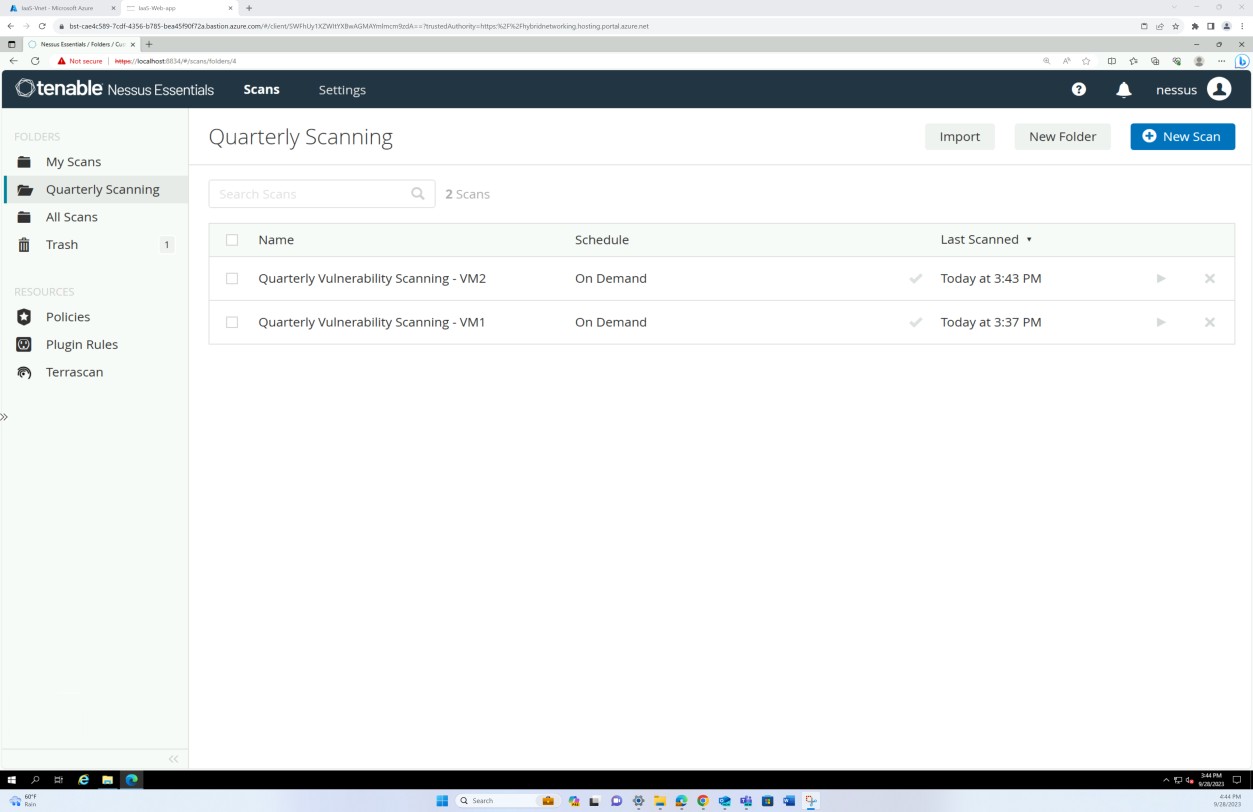

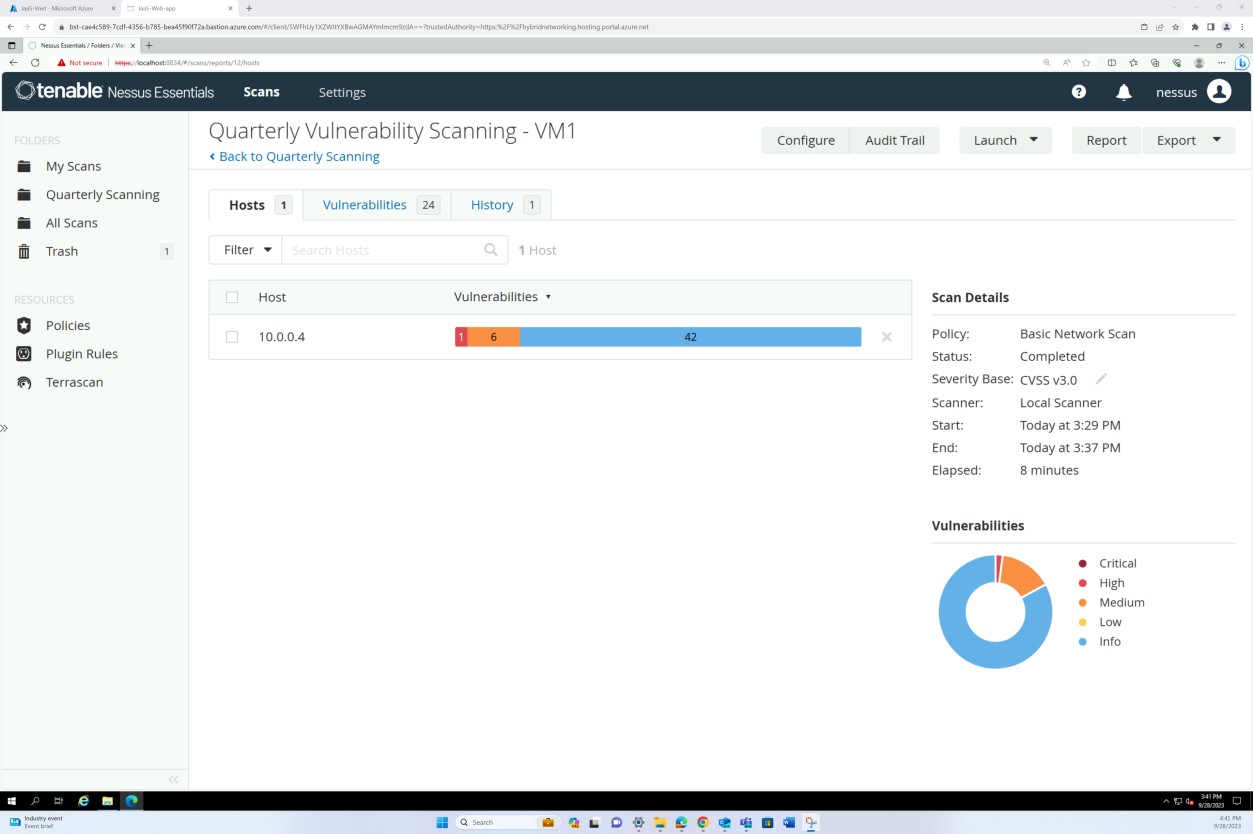

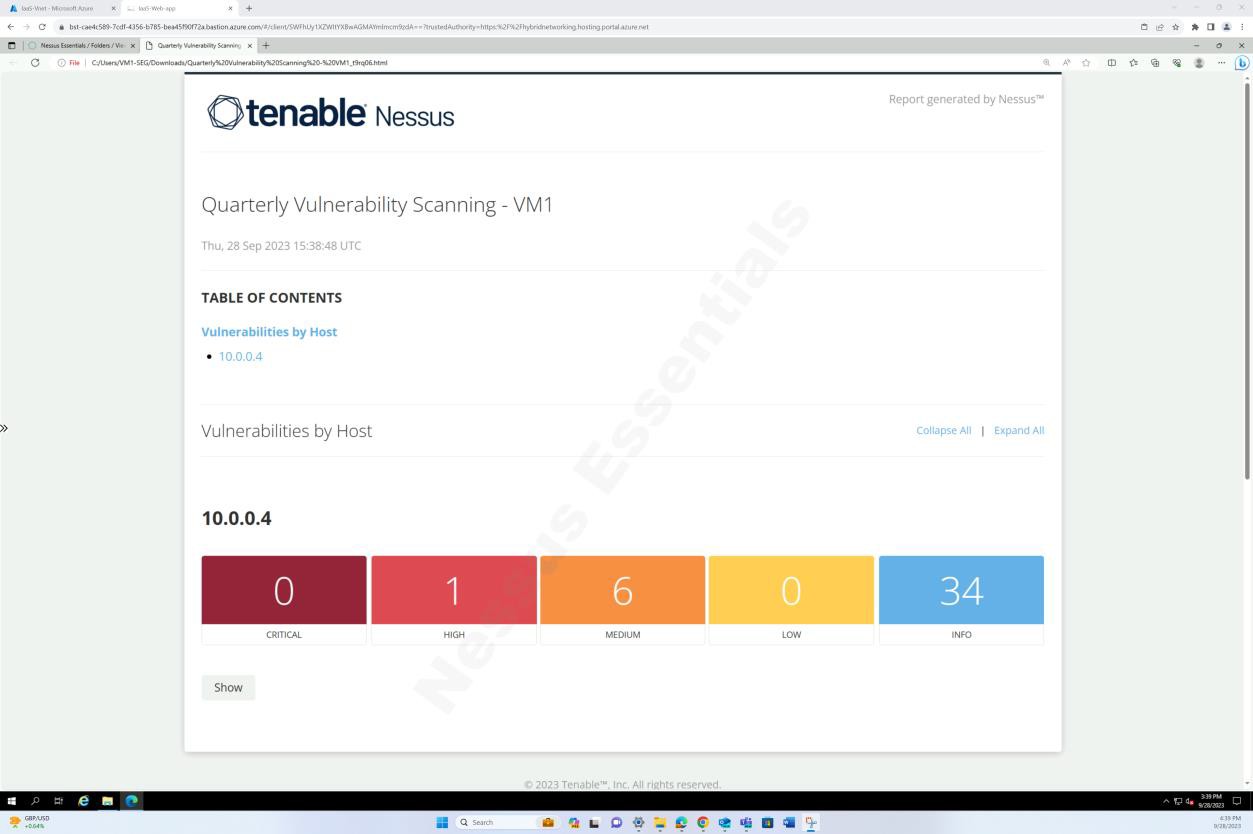

The following screenshots from tenable Nessus Essentials vulnerability scan report demonstrate that internal infrastructure scanning is performed.

The previous screenshots demonstrate the folders setup for quarterly scans against the host VMs.

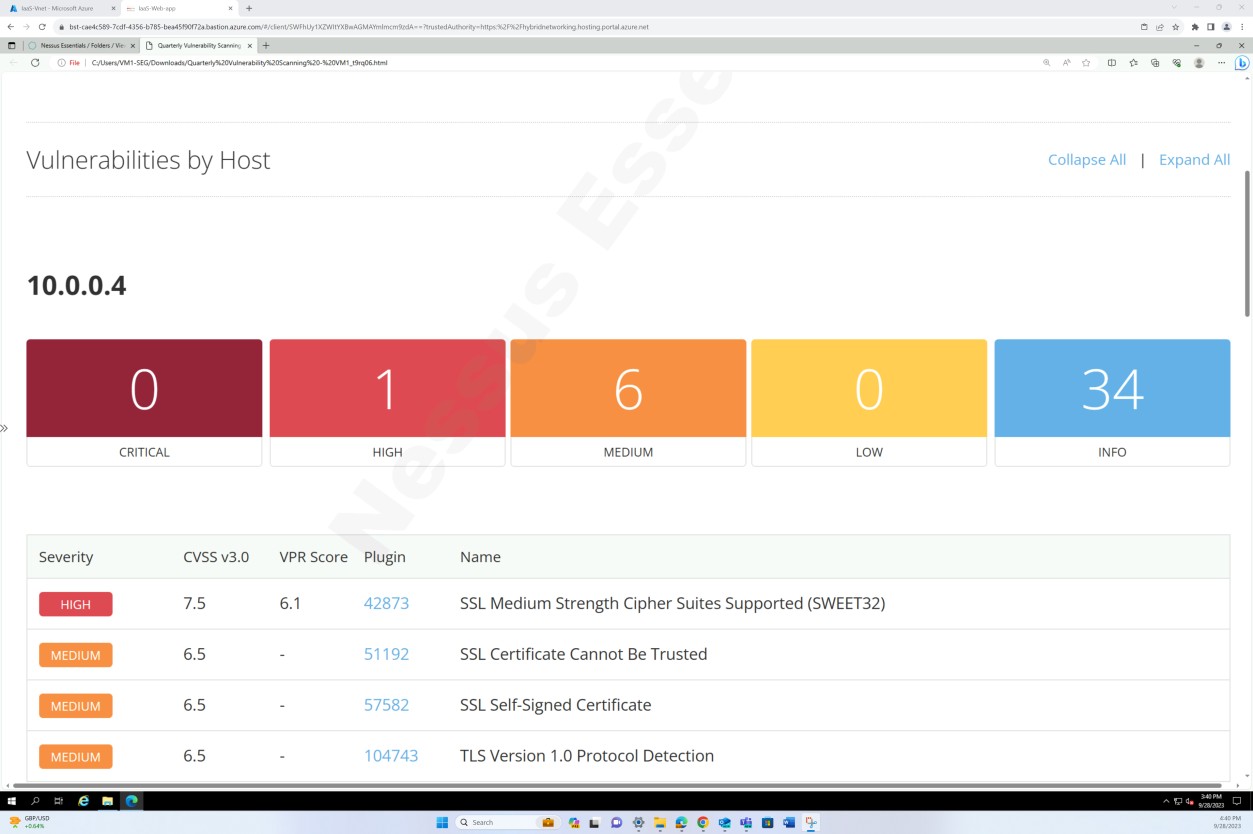

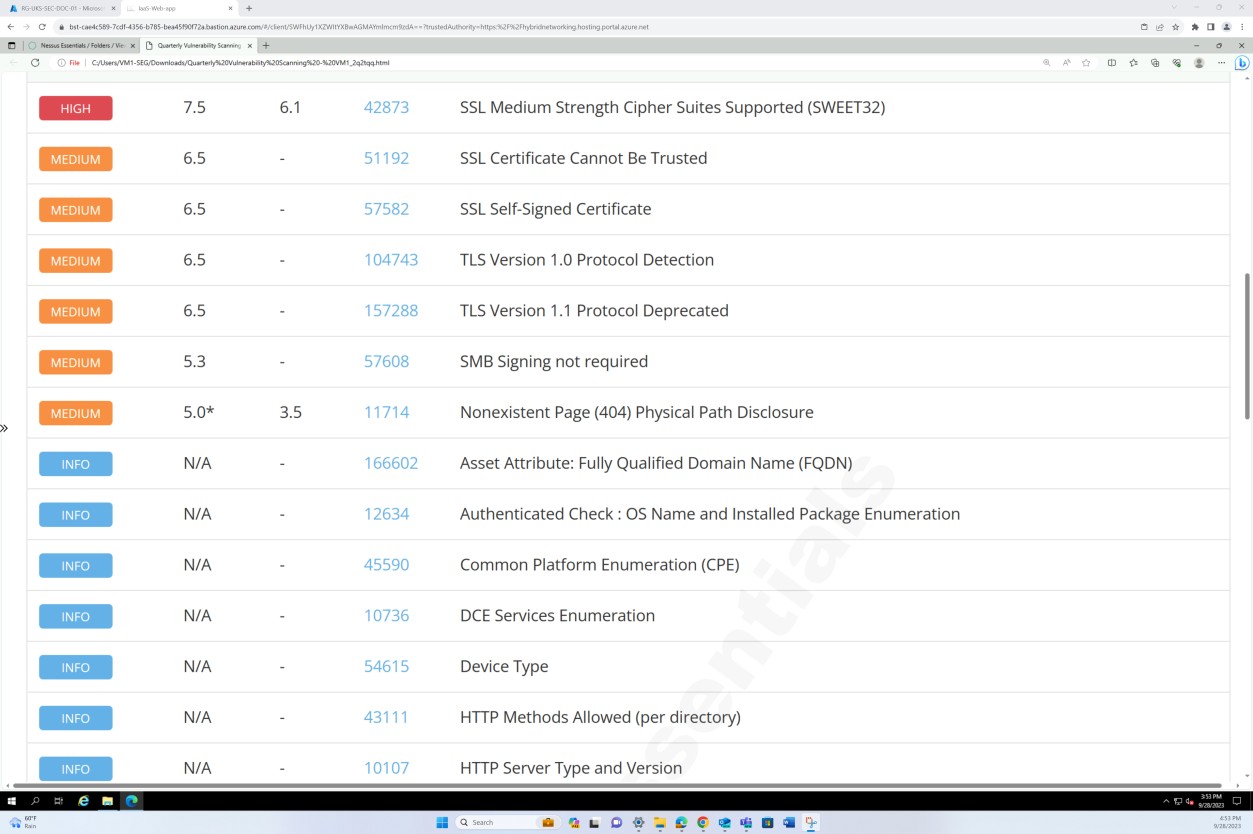

The screenshots above and below show the output of the vulnerability scan report.

The next screenshot shows the continuation of the report covering all issues found.

Control No. 7

Please provide rescan evidence demonstrating that:

- Remediation of all vulnerabilities identified in Control 6 are patched in line with the minimal patching window defined in your policy.

Intent: patching

Failure to identify, manage and remediate vulnerabilities and misconfigurations quickly can increase an organization’s risk of a compromise leading to potential data breaches. Correctly identifying and remediating issues is seen as important for an organization’s overall security posture and environment which is in line with best practices of various security frameworks e.g., ISO 27001 and PCI DSS.

The intent of this control is to ensure that the organization provides credible evidence of rescans, demonstrating that all vulnerabilities identified in a Control 6 have been remediated. The remediation must align with the minimal patching window defined in the organization’s patch management policy.

Guidelines: patching

Provide re-scan reports validating that any vulnerabilities identified in control 6 have been remediated in line with the patching windows defined in control 4 .

Example evidence: patching

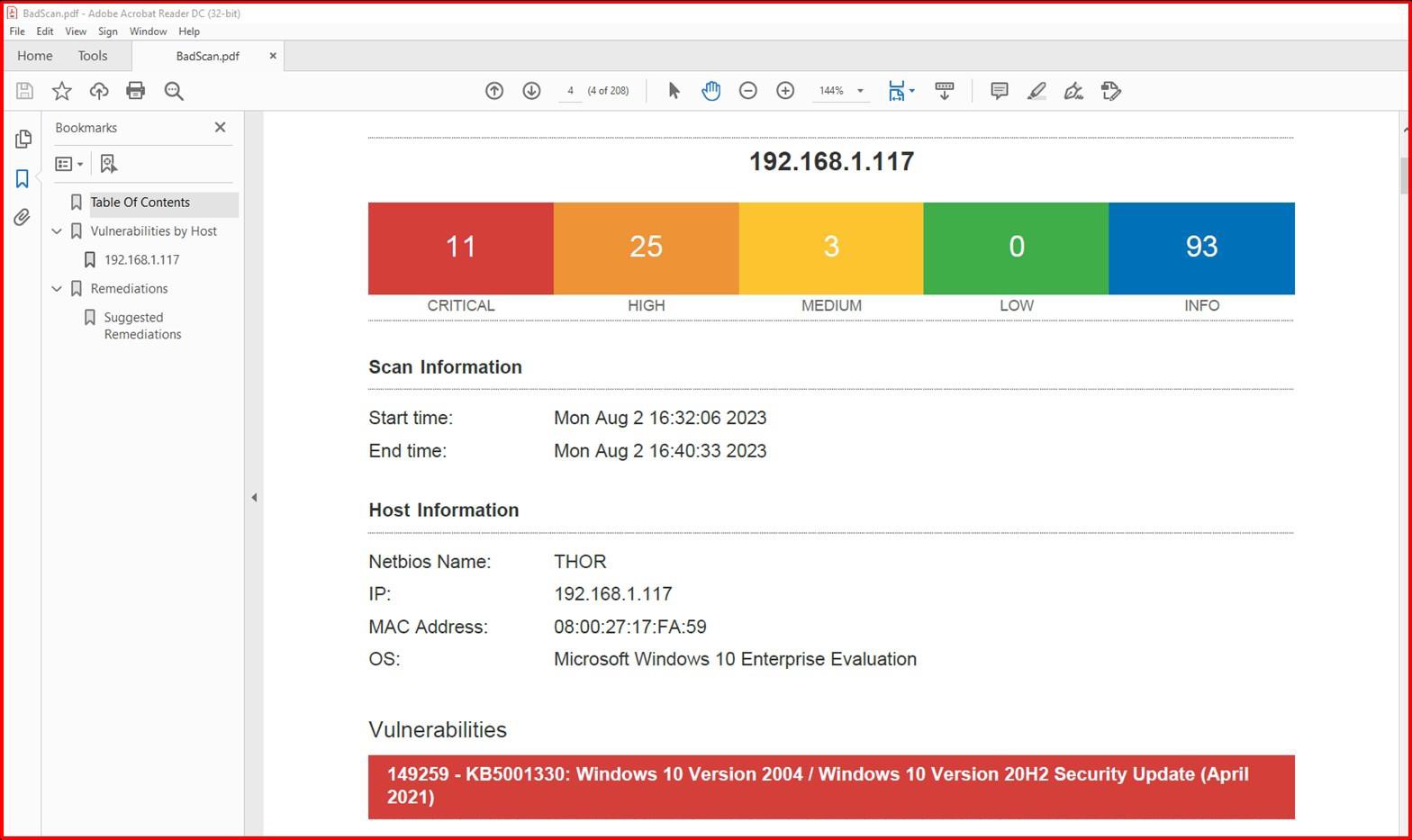

The next screenshot shows a Nessus scan of the in-scope environment (a single machine in this example named Thor) showing vulnerabilities on the 2nd of August 2023.

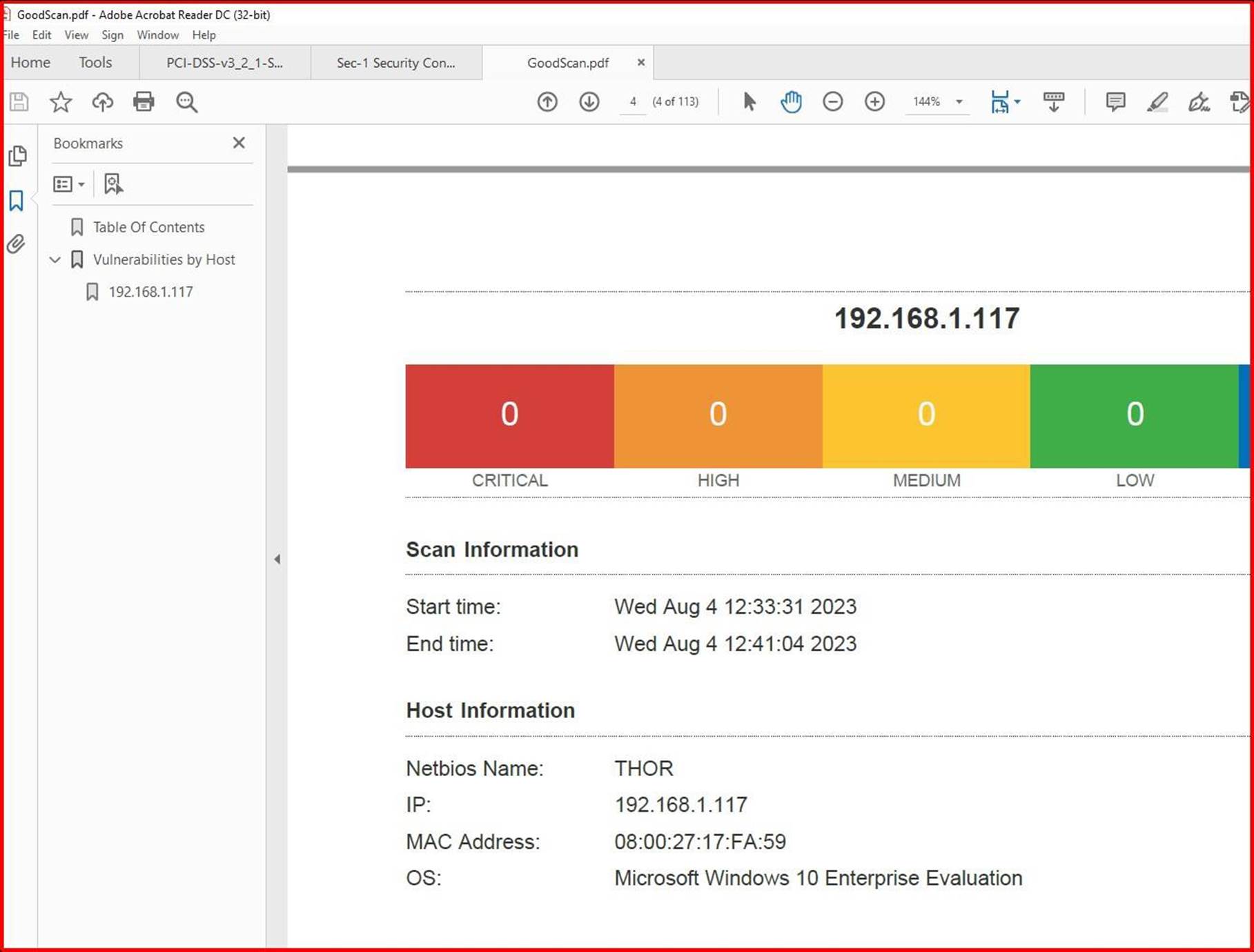

The next screenshot shows that the issues were resolved, 2 days later which is within the patching window defined within the patching policy.

Note: In the previous examples a full screenshot was not used, however ALL ISV submitted

evidence screenshots must be full screenshots showing any URL, logged in user and the system time and date.

Network Security Controls (NSC)

Network security controls are an essential component of cyber security frameworks such as ISO 27001, CIS controls, and NIST Cybersecurity Framework. They help organizations manage risks associated with cyber threats, protect sensitive data from unauthorized access, comply with regulatory requirements, detect, and respond to cyber threats in a timely manner, and ensure business continuity. Effective network security protects organizational assets against a wide range of threats from within or outside the organization.

Control No. 8

Provide demonstrable evidence that:

- Network Security Controls (NSC) are installed on the boundary of the in-scope environment and installed between the perimeter network and internal networks.

AND if Hybrid, On-prem, IaaS also provide evidence that:

- All public access terminates at the perimeter network.

Intent: NSC

This control aims to confirm that Network Security Controls (NSC) are installed at key locations within the organization’s network topology. Specifically, NSCs must be placed on the boundary of the in-scope environment and between the perimeter network and the internal networks. The intent of this control is to confirm that these security mechanisms are correctly situated to maximize their effectiveness in protecting the organization’s digital assets.

Guidelines: NSC

Evidence should be provided to demonstrate that Network Security Controls (NSC) are installed on the boundary and configured between perimeter and internal networks. This can be achieved by providing the screenshots from the configuration settings from the Network Security Controls (NSC) and the scope that it is applied to e.g., a firewall or equivalent technology such as Azure Network Security Groups (NSGs), Azure Front Door etc.

Example evidence: NSC

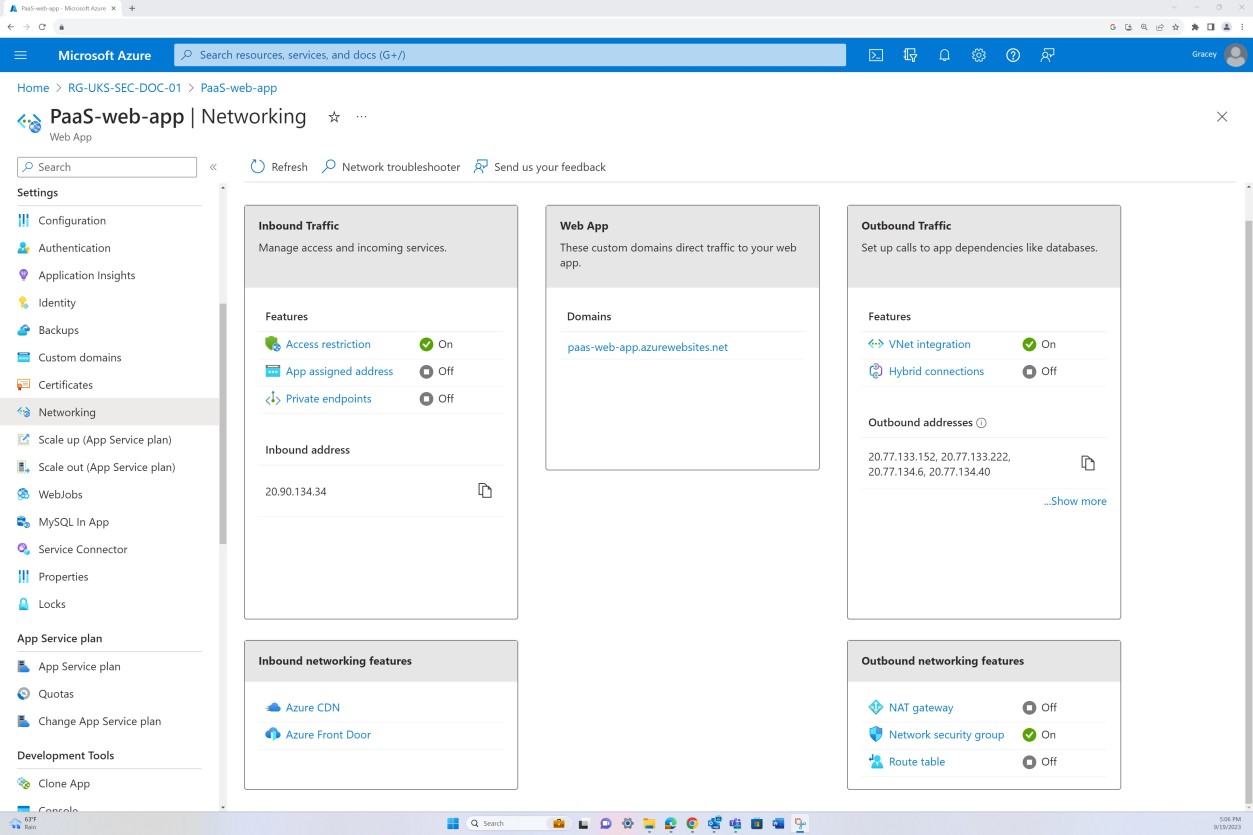

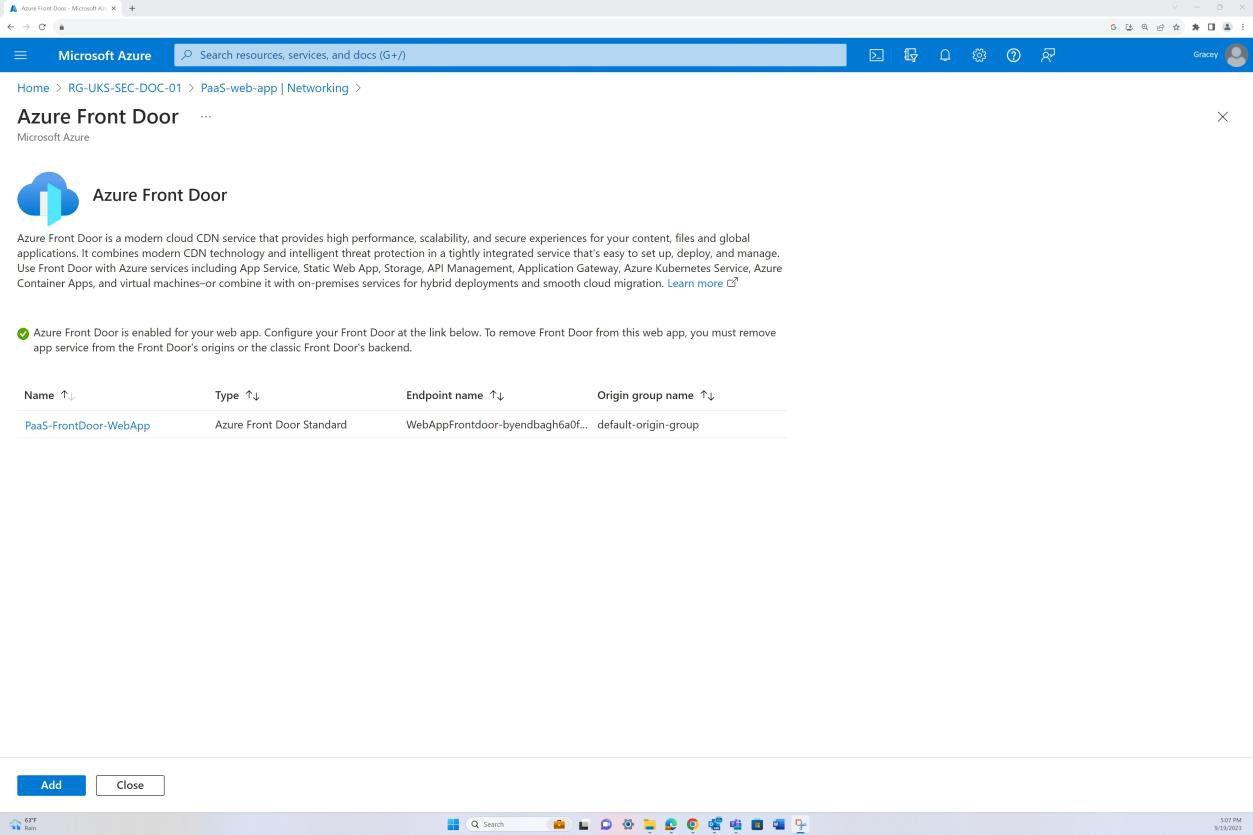

The next screenshot is from the Web app ‘PaaS-web-app’; the networking blade demonstrates that all the inbound traffic is passing through Azure Front Door, whilst all traffic from the application to other Azure resources are routed and filtered via the Azure NSG through VNET integration.

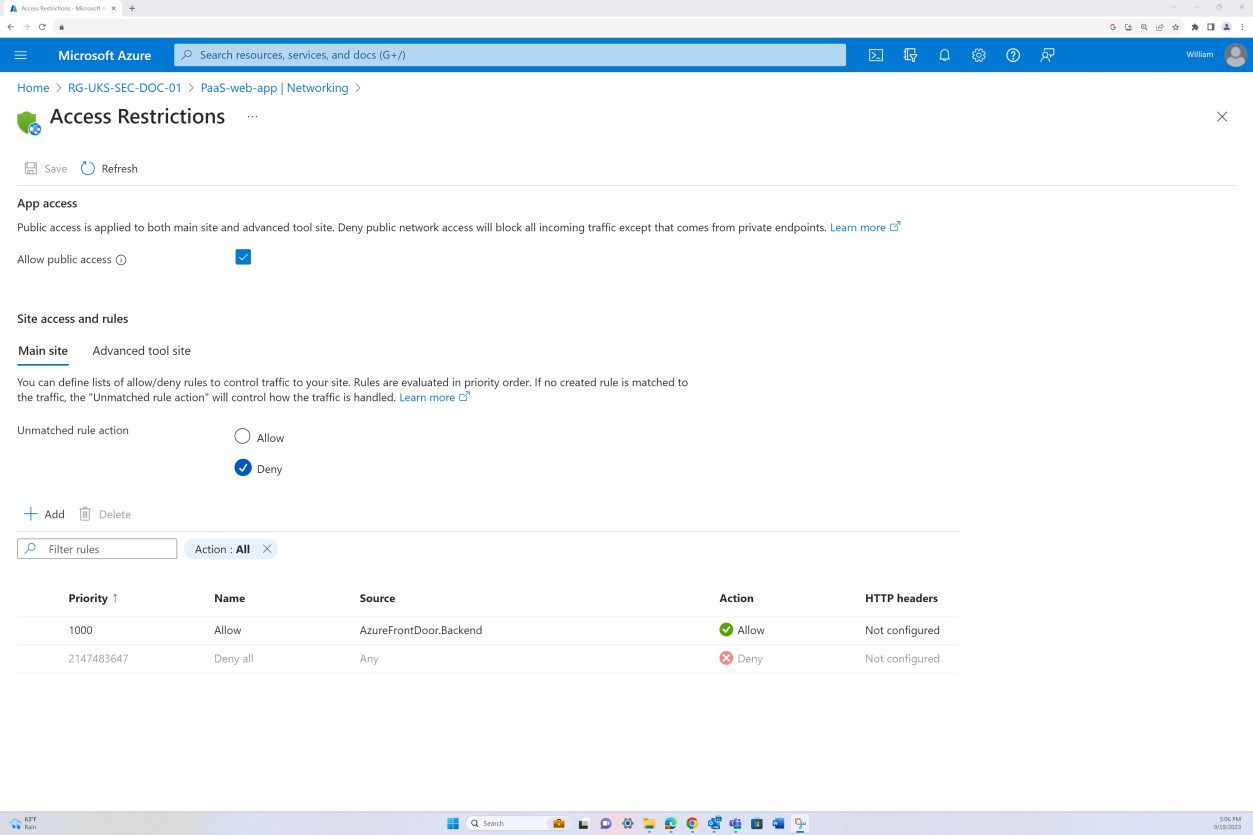

Deny rules within the “Access restrictions” prevent any inbound except from Front Door (FD), traffic is routed through FD before reaching the application.

Example evidence: NSC

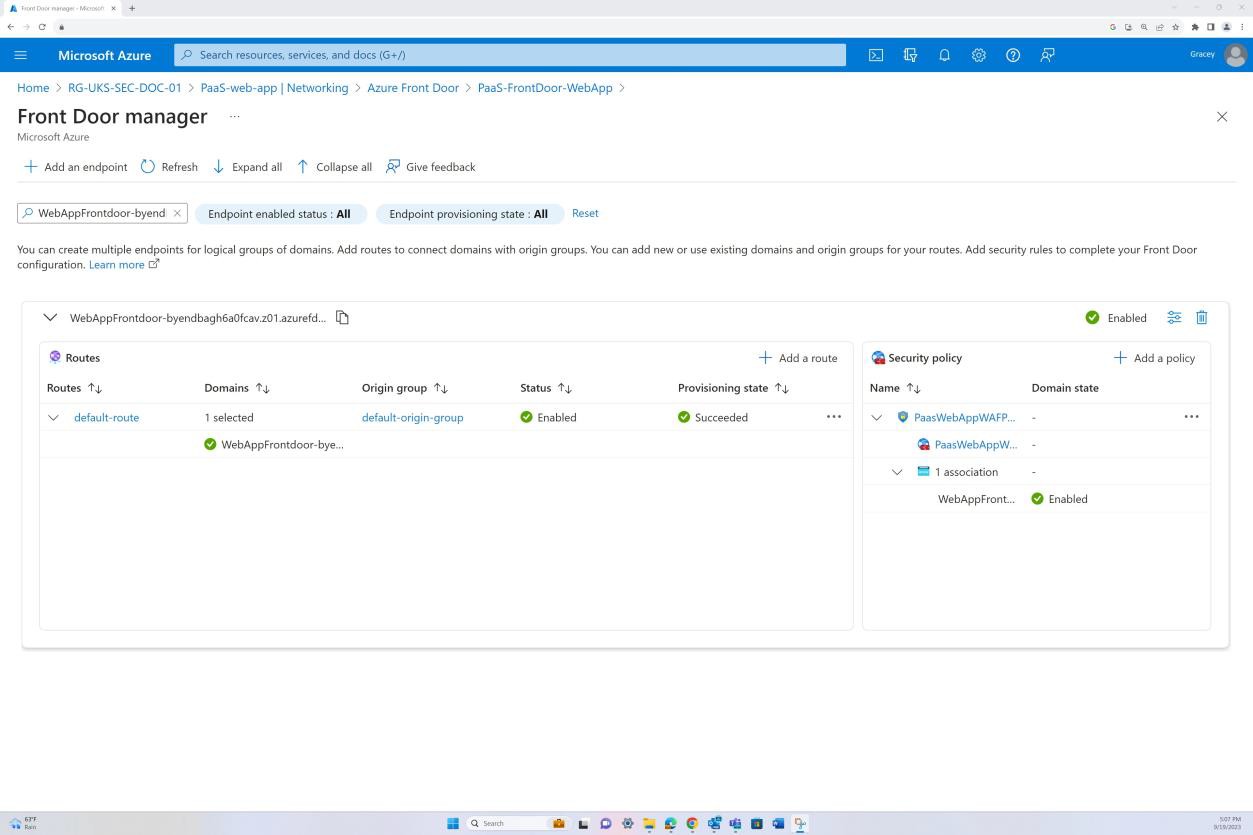

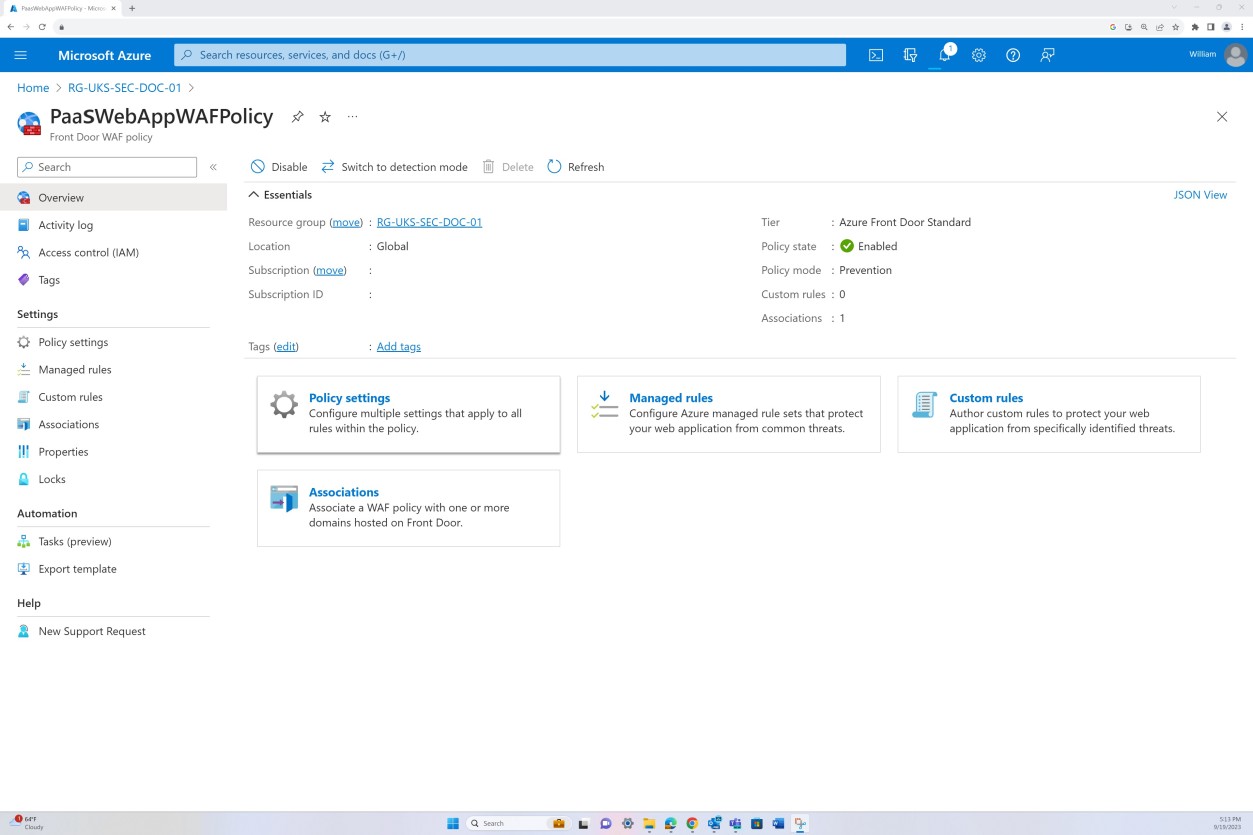

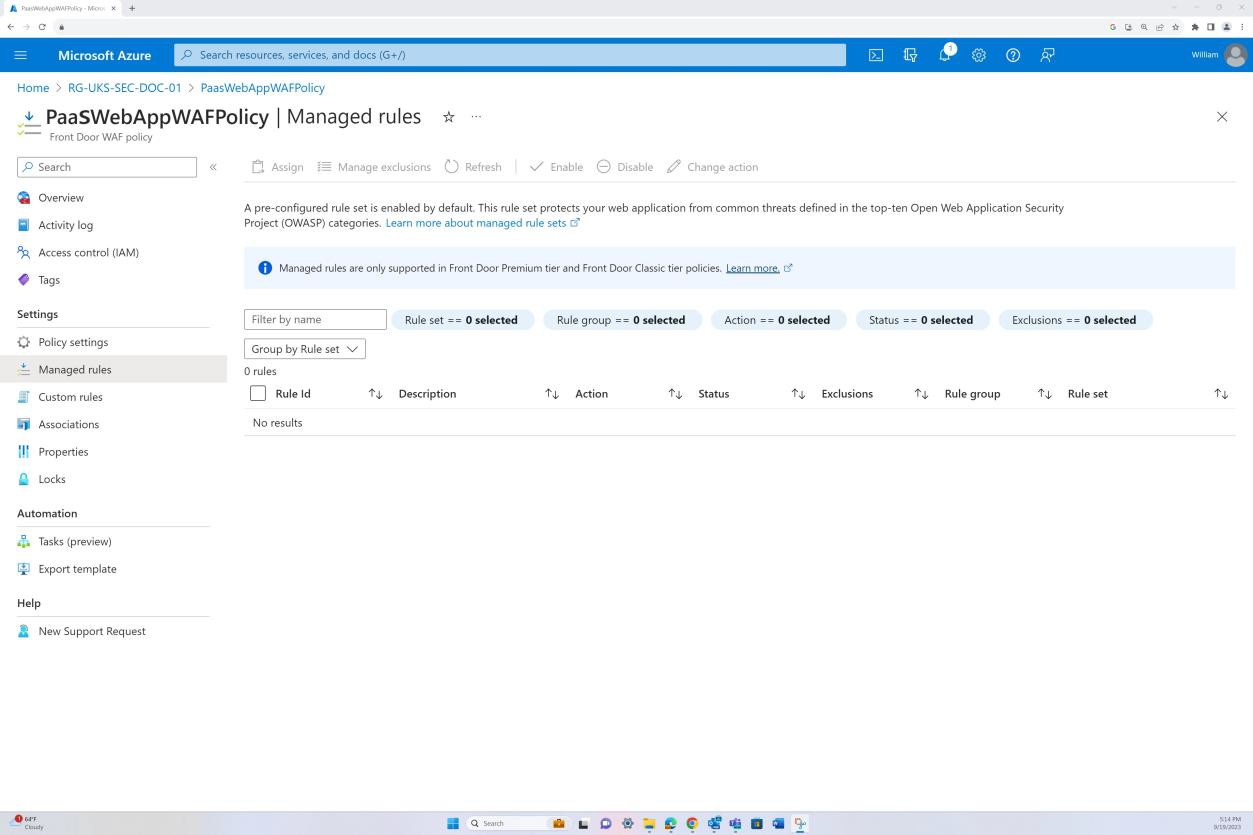

The following screenshot shows Azure Front Door’s default route, and that the traffic is routed through Front Door before reaching the application. WAF policy has also been applied.

Example evidence: NSC

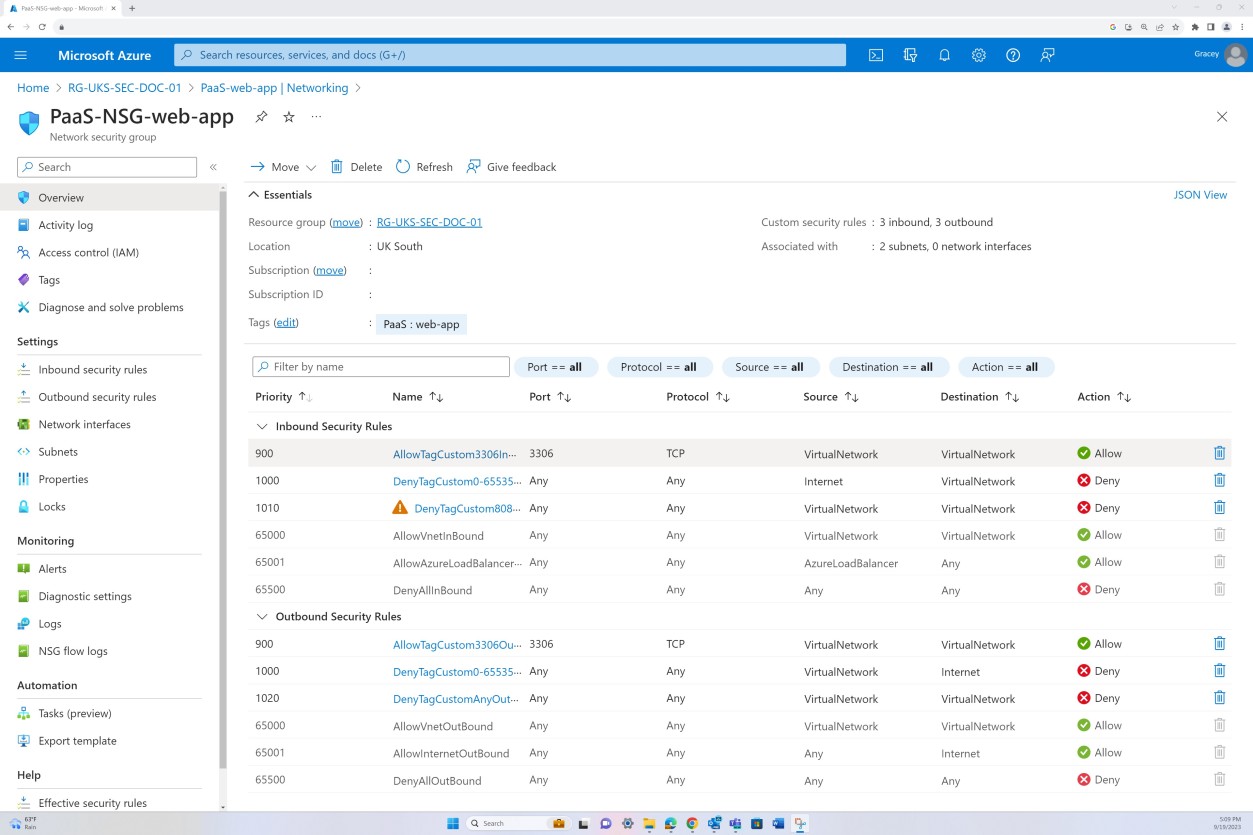

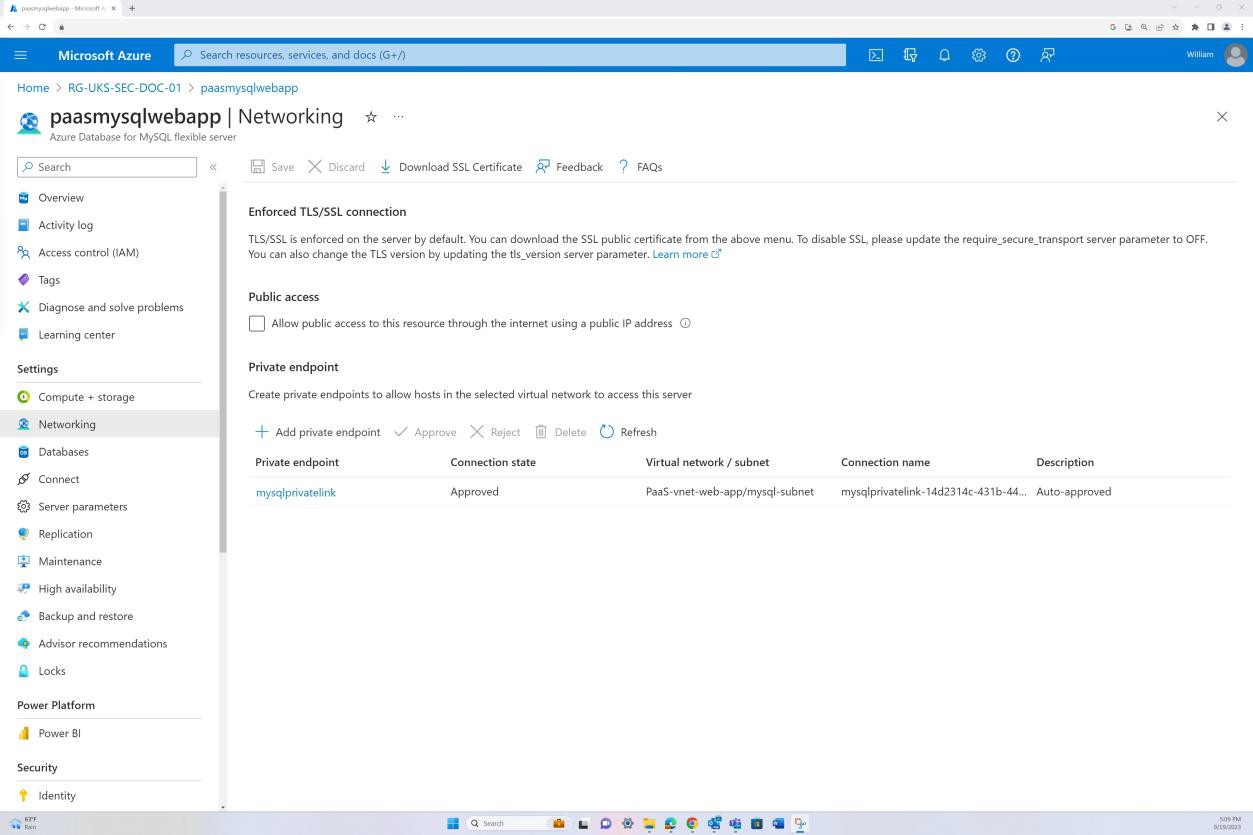

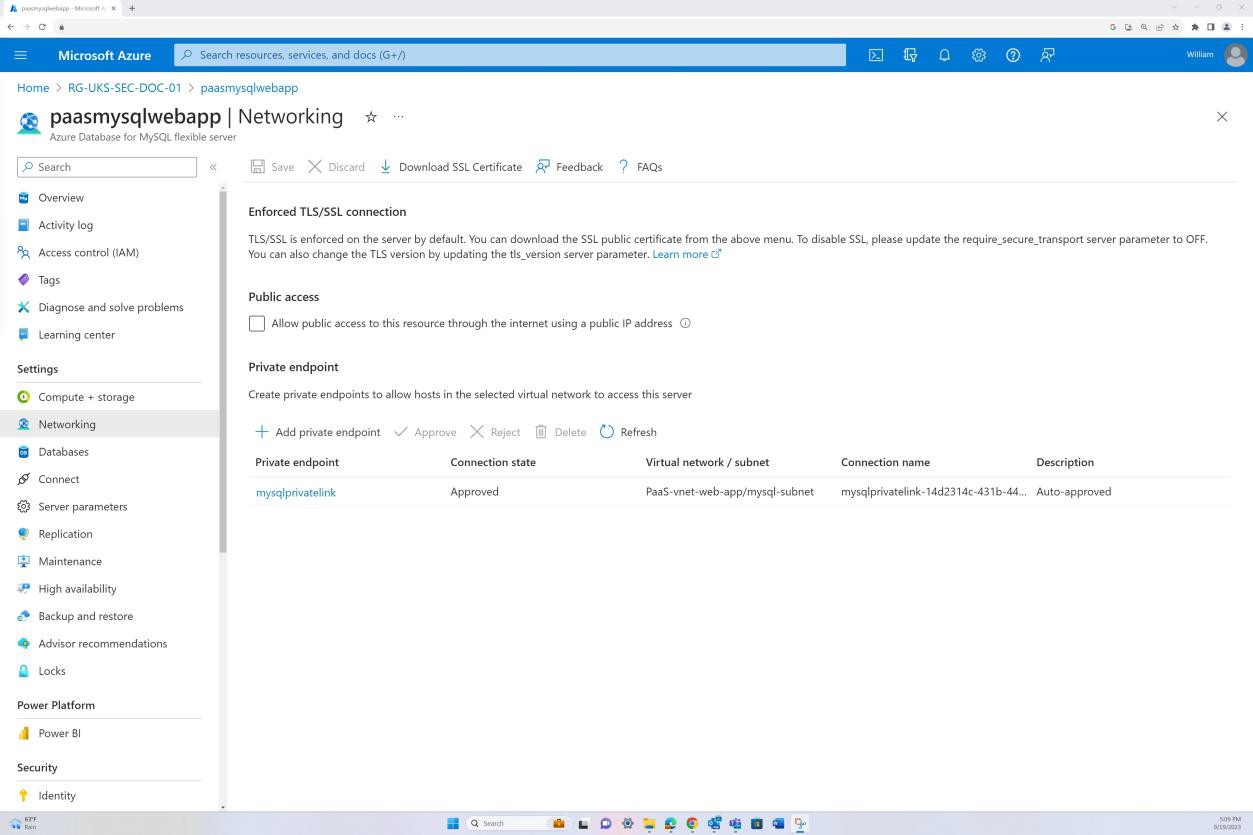

The first screenshot shows an Azure Network Security Group applied at VNET level to filter inbound and outbound traffic. Second screenshot demonstrates that SQL server is not routable over the internet and is integrated via the VNET and through a private link.

This ensures that internal traffic and communication is filtered by the NSG before reaching the SQL server.

Intent**:** hybrid, on-prem, IaaS

This subpoint is essential for organizations that operate hybrid, on-premises, or Infrastructure-as-a- Service (IaaS) models. It seeks to ensure that all public access terminates at the perimeter network, which is crucial for controlling points of entry into the internal network and reducing potential exposure to external threats. Evidence of compliance may include firewall configurations, network access control lists, or other similar documentation that can substantiate the claim that public access does not extend beyond the perimeter network.

Example evidence: hybrid, on-prem, IaaS

The screenshot demonstrates that SQL server is not routable over the internet, and it is integrated via the VNET and through a private link. This ensures only internal traffic is allowed.

Example evidence: hybrid, on-prem, IaaS

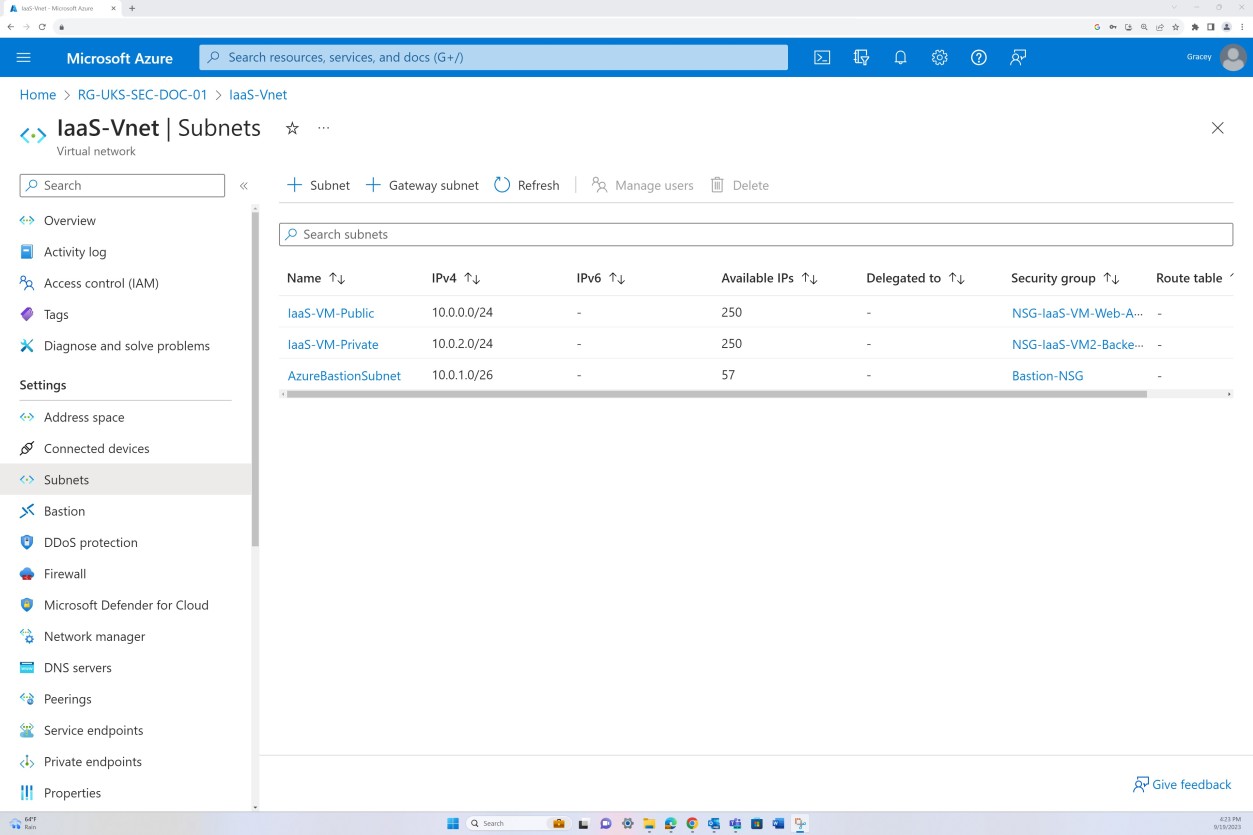

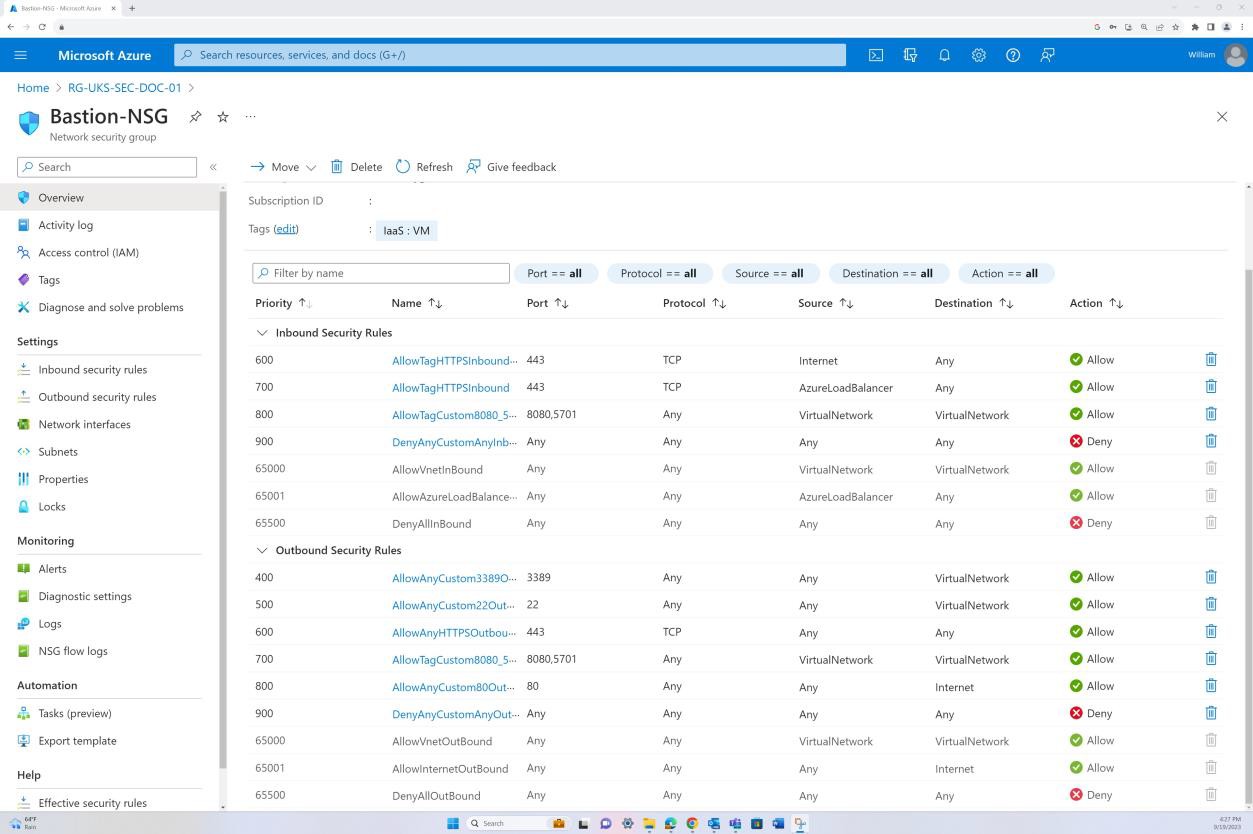

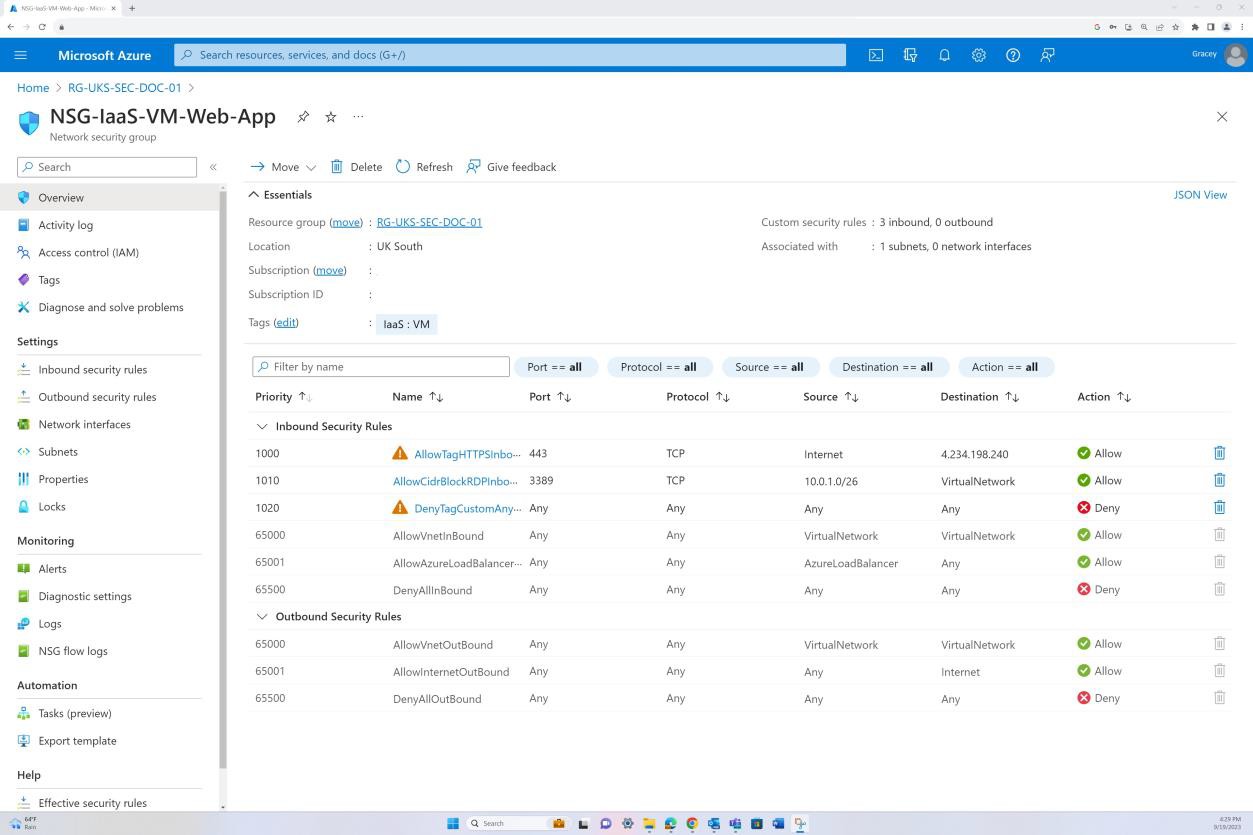

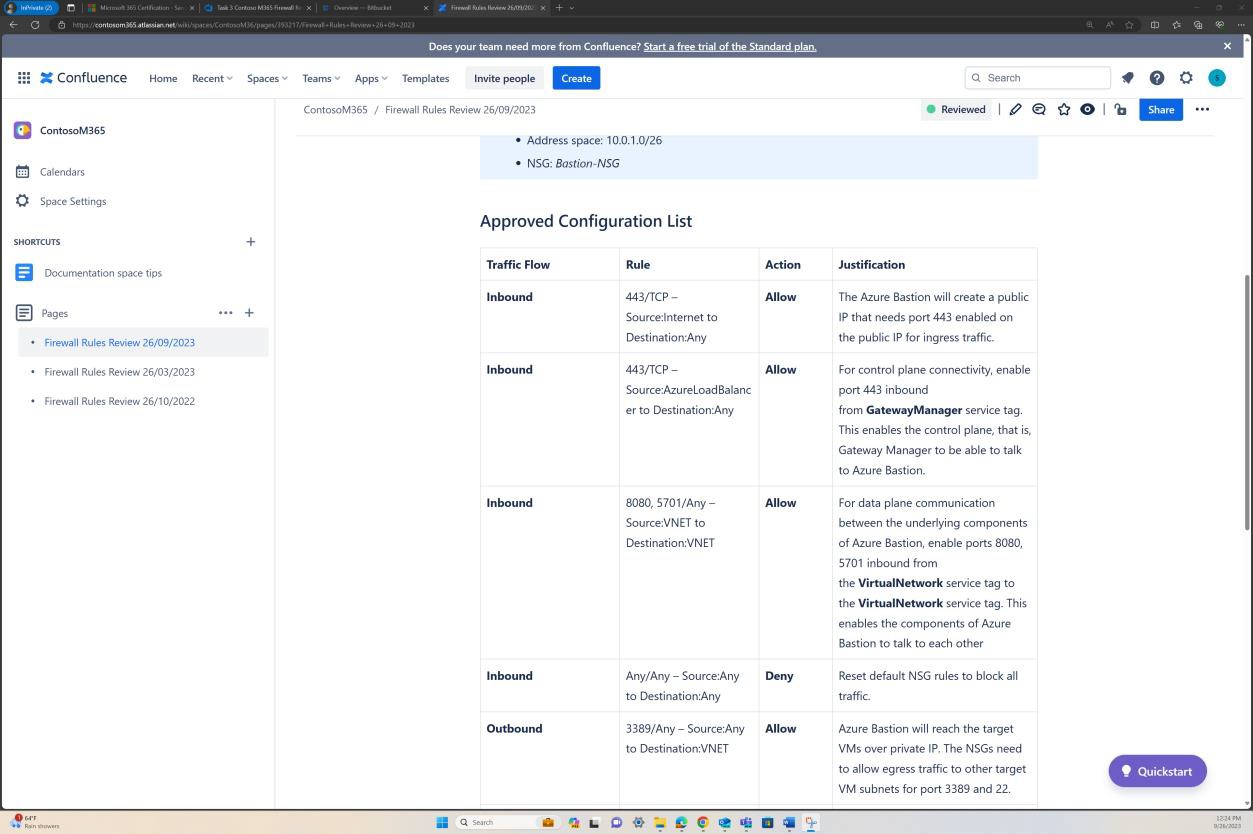

The next screenshots demonstrate that network segmentation is in place within the in-scope virtual network. The VNET as shown following is divided into three subnets, each with an NSG applied.

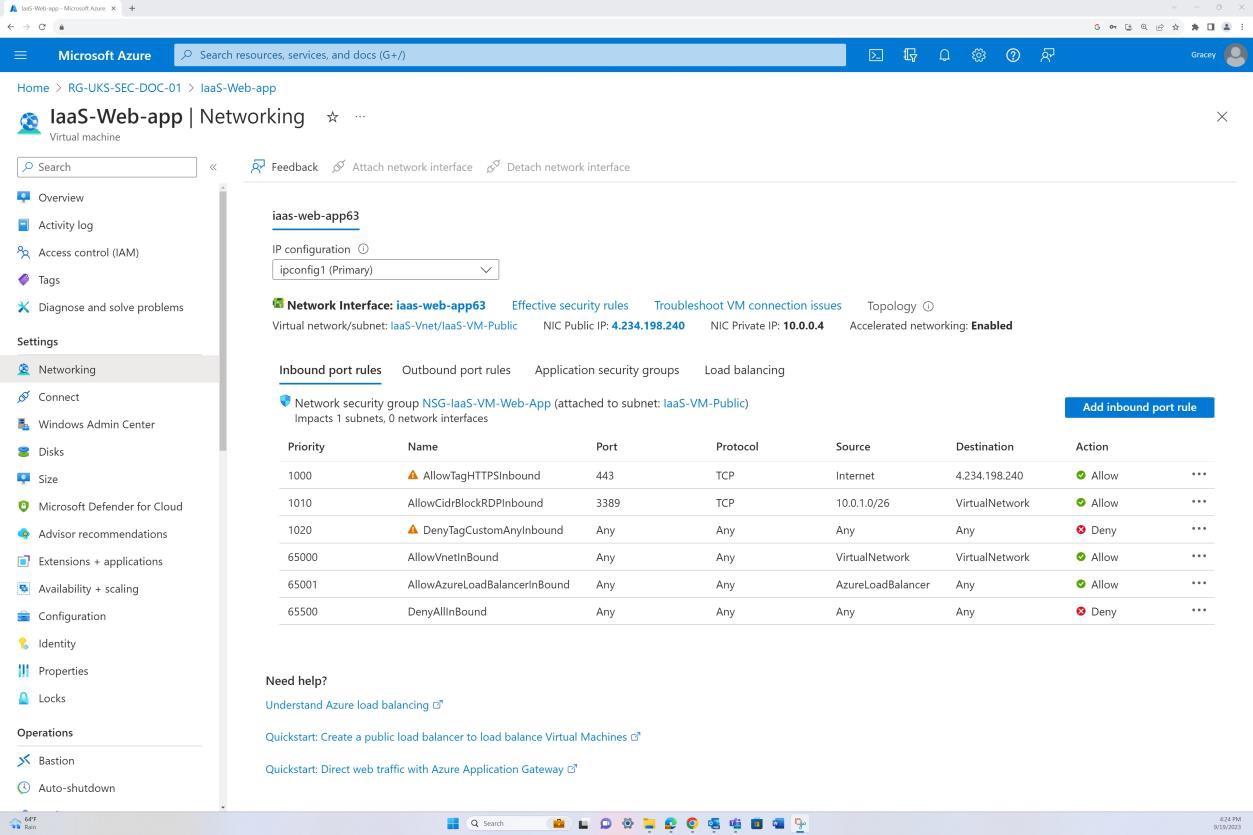

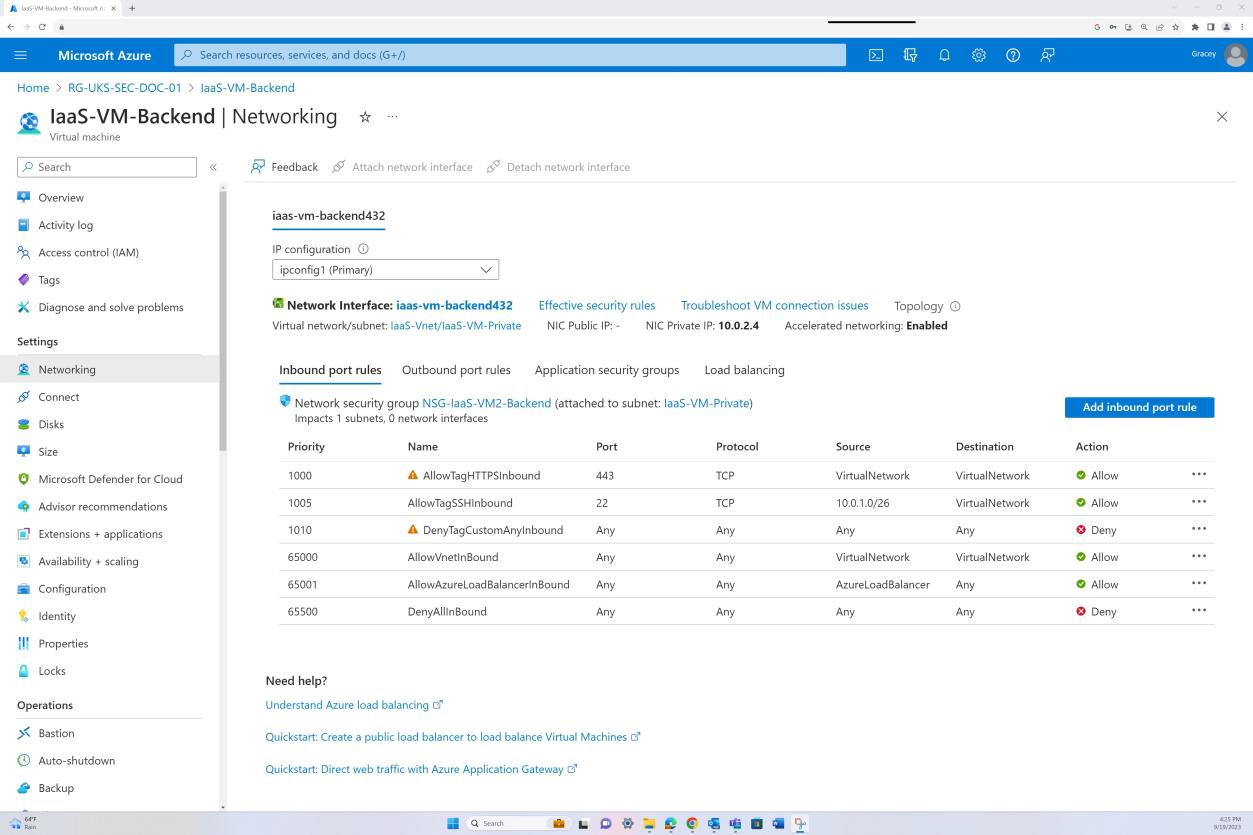

The public subnet acts as the perimeter network. All public traffic is routed through this subnet and filtered via the NSG with specific rules and only explicitly defined traffic is allowed. The backend consists of the private subnet with no public access. All VM access is permitted only through the Bastion Host which has its own NSG applied at subnet level.

The next screenshot shows that traffic is allowed from the internet to a specific IP address only on port 443. Additionally, RDP is allowed only from the Bastion IP range to the virtual network.

The next screenshot demonstrates that the backend is not routable over the internet (this is due to there being no public IP for the NIC) and that the traffic is only allowed to originate from the Virtual Network and Bastion.

The screenshot demonstrates that Azure Bastion host is used to access the virtual machines for maintenance purposes only.

Control No. 9

All Network Security Controls (NSC) are configured to drop traffic not explicitly defined within the rule base.

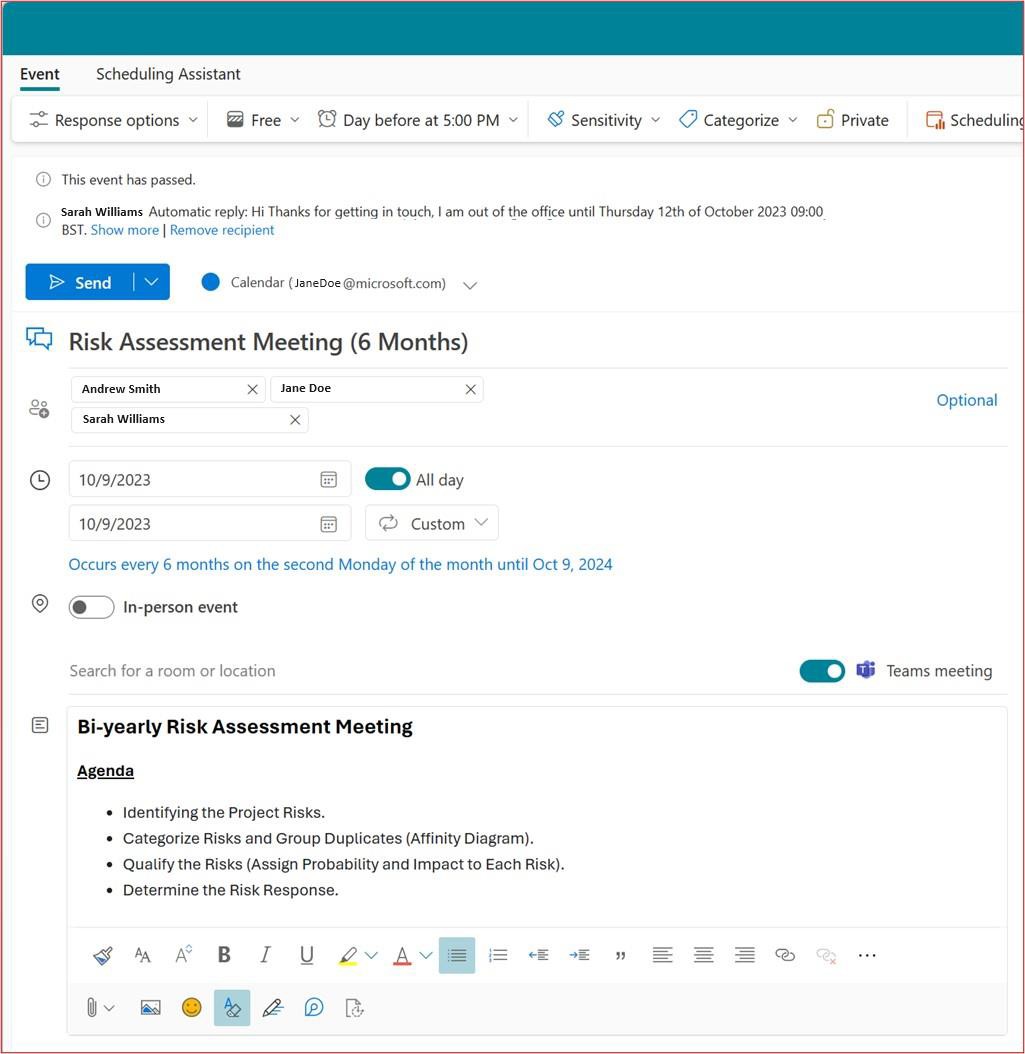

Network Security Controls (NSC) rule reviews are carried out at least every 6 months.

Intent: NSC

This subpoint ensures that all Network Security Controls (NSC) in an organization are configured to drop any network traffic that is not explicitly defined within their rule base. The objective is to enforce the principle of least privilege at the network layer by allowing only authorized traffic while blocking all unspecified or potentially malicious traffic.

Guidelines: NSC

Evidence provided for this could be rule configurations which show the inbound rules and where these rules are terminated; either by routing public IP Addresses to the resources, or by providing the NAT (Network Address Translation) of the inbound traffic.

Example evidence: NSC

The screenshot shows the NSG configuration including the default rules set and a custom Deny:All rule to reset all the NSG’s default rules and ensure all traffic is prohibited. In the additional custom rules the Deny:All rule is explicitly defining the traffic that is allowed.

Example evidence: NSC

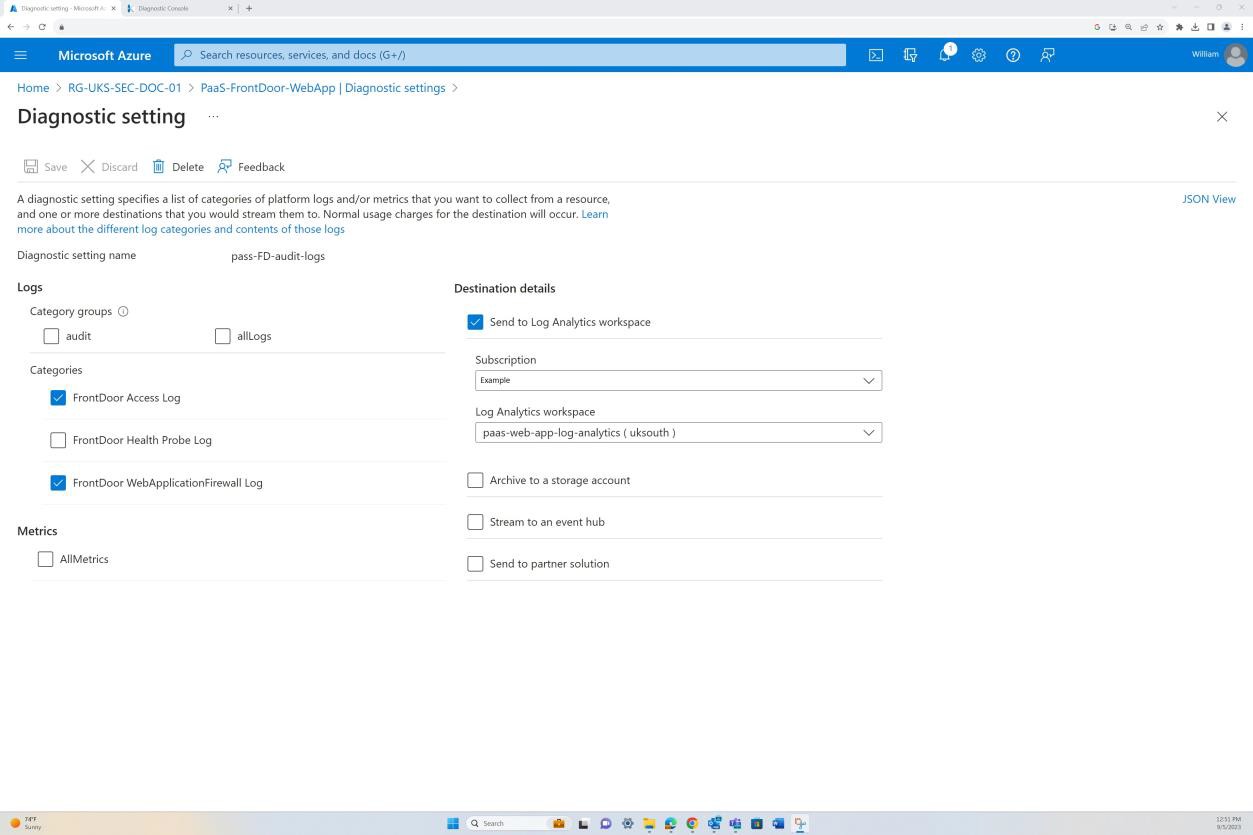

The following screenshots show that Azure Front Door is deployed, all traffic is routed through the Front Door. A WAF Policy in “Prevention Mode” is applied which filters inbound traffic for potential malicious payloads and blocks it.

Intent: NSC

Without regular reviews, Network Security Controls (NSC) may become outdated and ineffective, leaving an organization vulnerable to cyber-attacks. This can result in data breaches, theft of sensitive information, and other cyber security incidents. Regular NSC reviews are essential for managing risks, protecting sensitive data, complying with regulatory requirements, detecting, and responding to cyber threats in a timely manner, and ensuring business continuity. This subpoint requires that Network Security Controls (NSC) undergo rule base reviews at least every six months. Regular reviews are crucial for maintaining the effectiveness and relevance of the NSC configurations, especially in dynamically changing network environments.

Guidelines: NSC

Any evidence provided needs to be able to demonstrate that rule review meetings have been occurring. This can be done by sharing meeting minutes of the NSC review and any additional change control evidence that shows any actions taken from the review. Please ensure that dates are present as the certification analyst reviewing your submission would need to see a minimum of two of these meetings review documents (i.e., every six months).

Example evidence: NSC

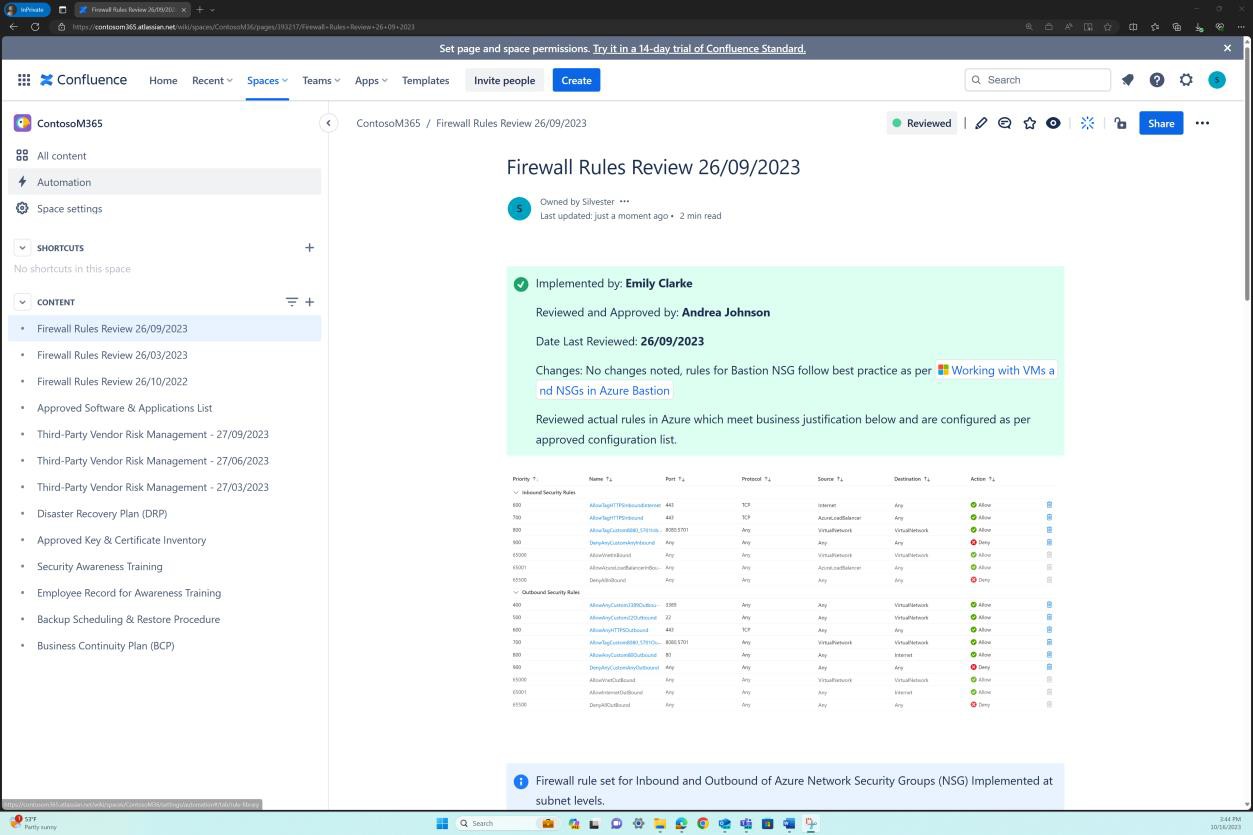

These screenshots demonstrate that six-monthly firewall reviews exist, and the details are maintained in the Confluence Cloud platform.

The next screenshot demonstrates that every rule review has a page created in Confluence. The rule review contains an approved ruleset list outlining the traffic allowed, the port number, protocol, etc. along with the business justification.

Example evidence: NSC

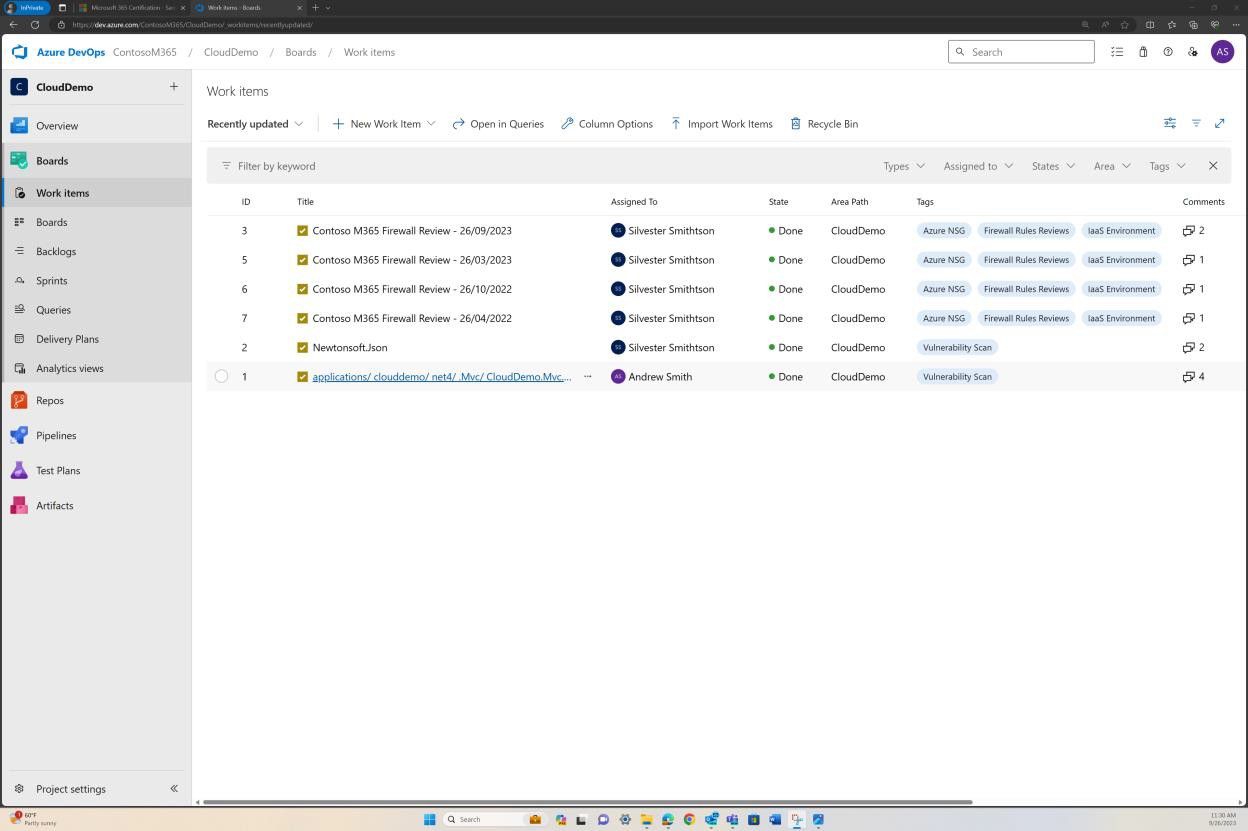

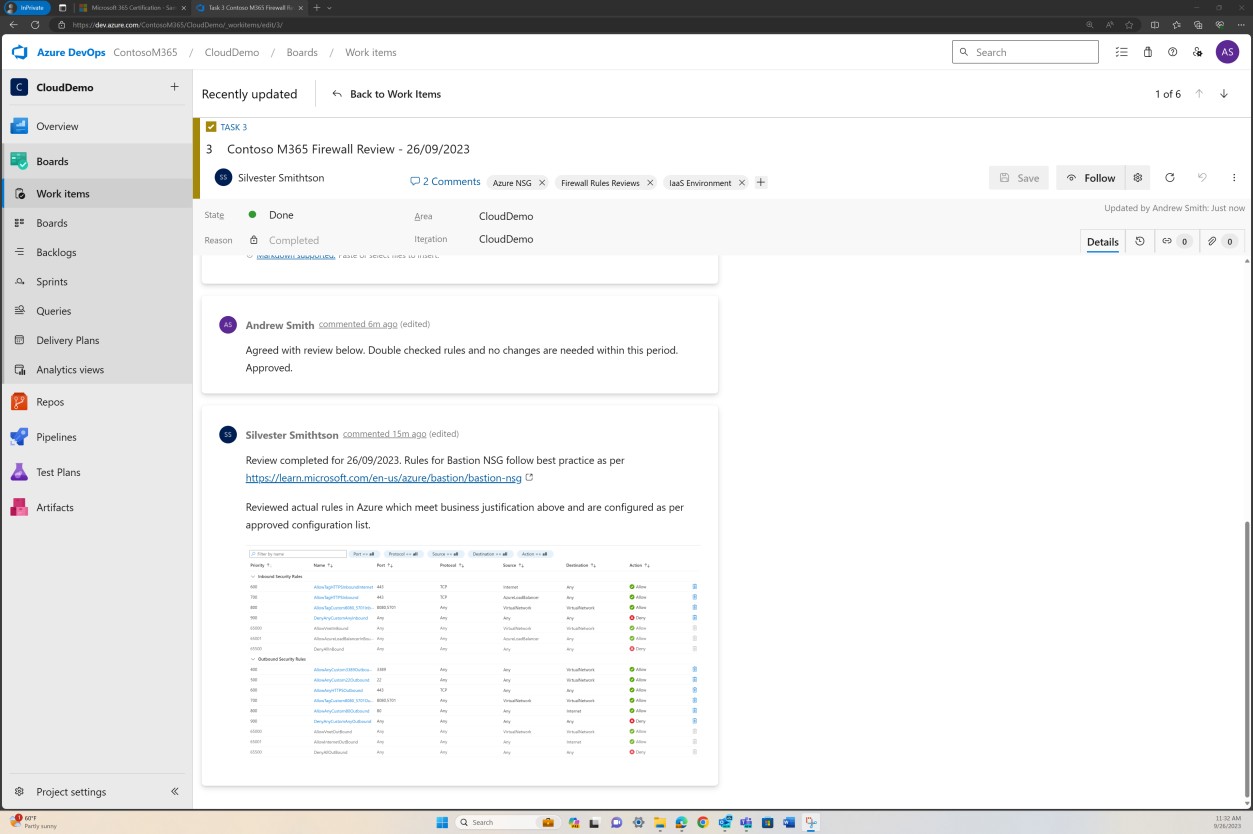

The next screenshot demonstrates an alternative example of six-months rules review being maintained in DevOps.

Example evidence: NSC

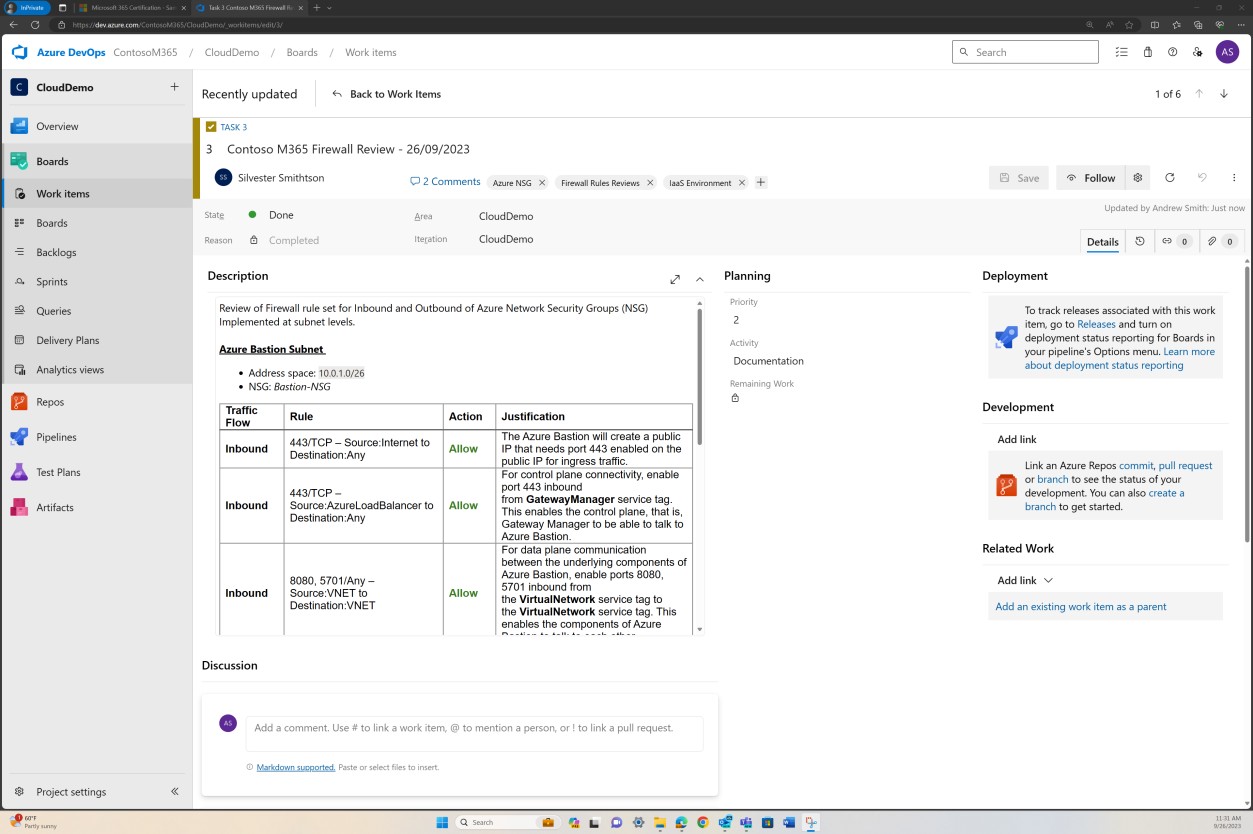

This screenshot demonstrates an example of a rule review being performed and recorded as a ticket in DevOps.

The previous screenshot shows the established documented rule list alongside the business justification, while the next image demonstrates a snapshot of the rules within the ticket from the actual system.

Change control

An established and understood change control process is essential in ensuring that all changes go through a structured process which is repeatable. By ensuring all changes go through a structured process, organizations can ensure changes are effectively managed, peer reviewed and adequately tested before being signed off. This not only helps to minimize the risk of system outages, but also helps to minimize the risk of potential security incidents through improper changes being introduced.

Control No. 10

Please provide evidence demonstrating that:

Any changes introduced to production environments are implemented through documented change requests which contain:

impact of the change.

details of back-out procedures.

testing to be carried out.

review and approval by authorized personnel.

Intent: change control

The intent of this control is to ensure that any requested changes have been carefully considered and documented. This includes assessing the impact of the change on the security of the system/environment, documenting any back-out procedures to aid in the recovery if something goes wrong, and detailing the testing needed to validate the change’s success.

Processes must be implemented which forbid changes to be carried out without proper authorization and sign off. The change needs to be authorized before being implemented and the change needs to be signed off once complete. This ensures that the change requests have been properly reviewed and someone in authority has signed off the change.

Guidelines: change control

Evidence can be provided by sharing screenshots of a sample of change requests demonstrating that the details of impact of the change, back-out procedures, testing are held within the change request.

Example evidence: change control

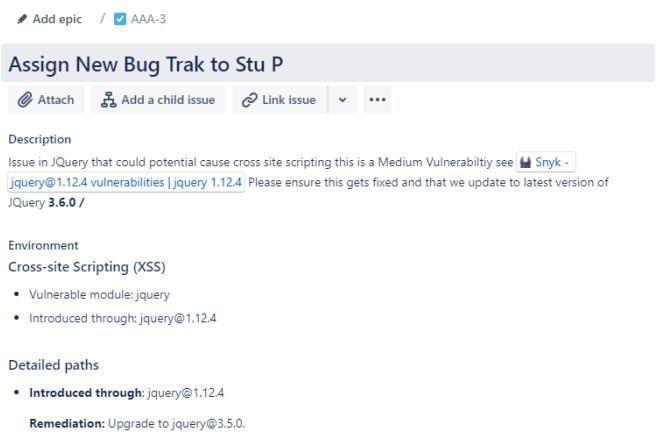

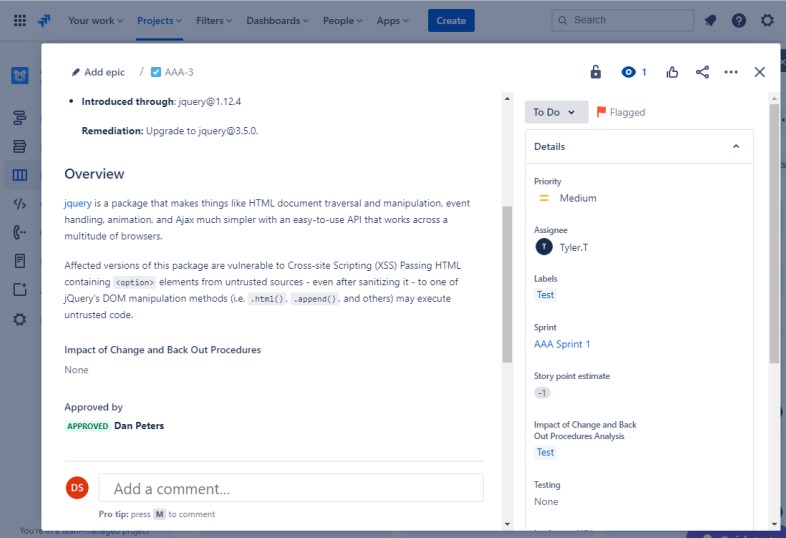

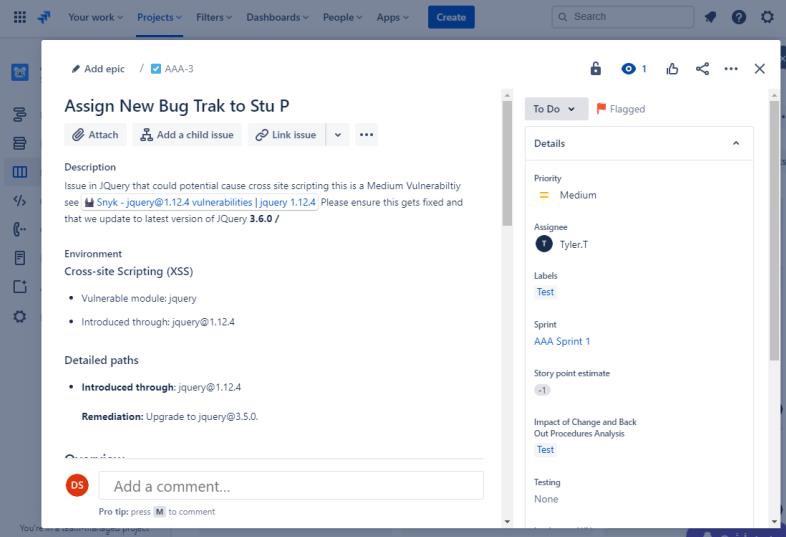

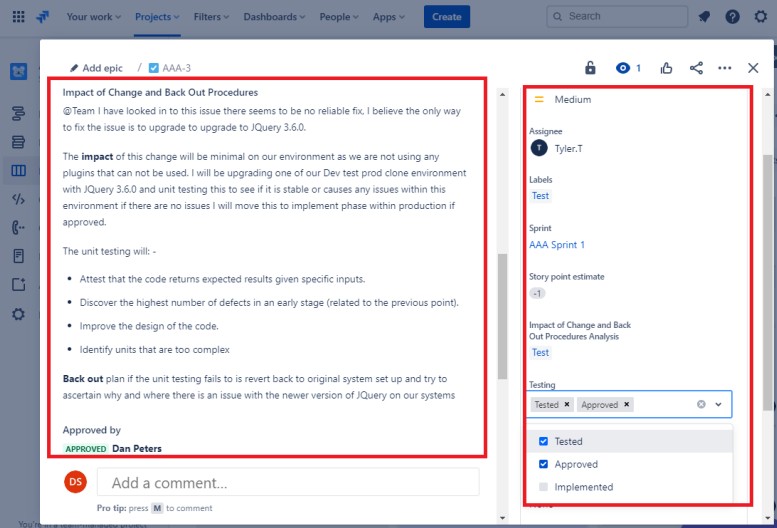

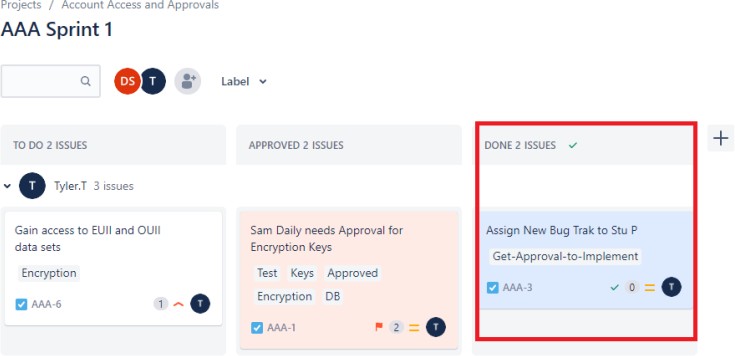

The next screenshot shows a new Cross Site Scripting Vulnerability (XSS) being assigned and document for change request. The tickets below demonstrate the information that has been set or added to the ticket on its journey to being resolved.

The two tickets following show the impact of the change to the system and the back out procedures which may be needed in the event of an issue. The impact of changes and back out procedures have gone through an approval process and have been approved for testing.

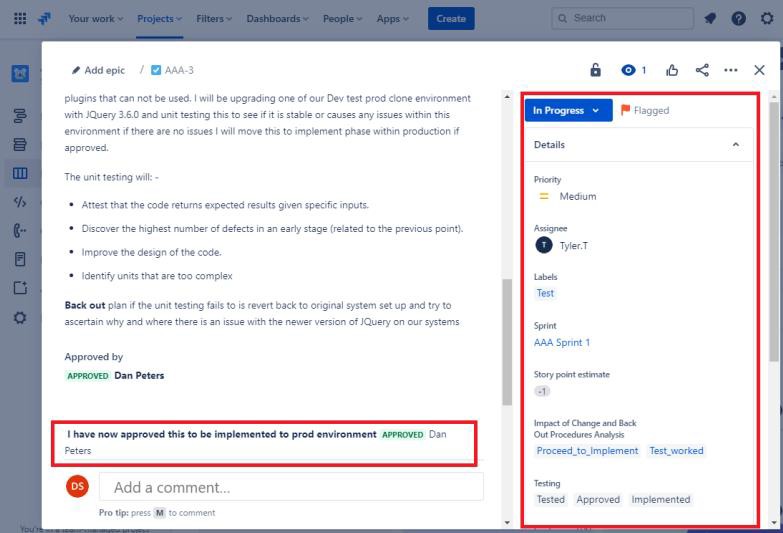

On the following screenshot, the testing of the changes has been approved, and on the right, you see that the changes have now been approved and tested.

Throughout the process note that the person doing the job, the person reporting on it and the person approving the work to be done are different people.

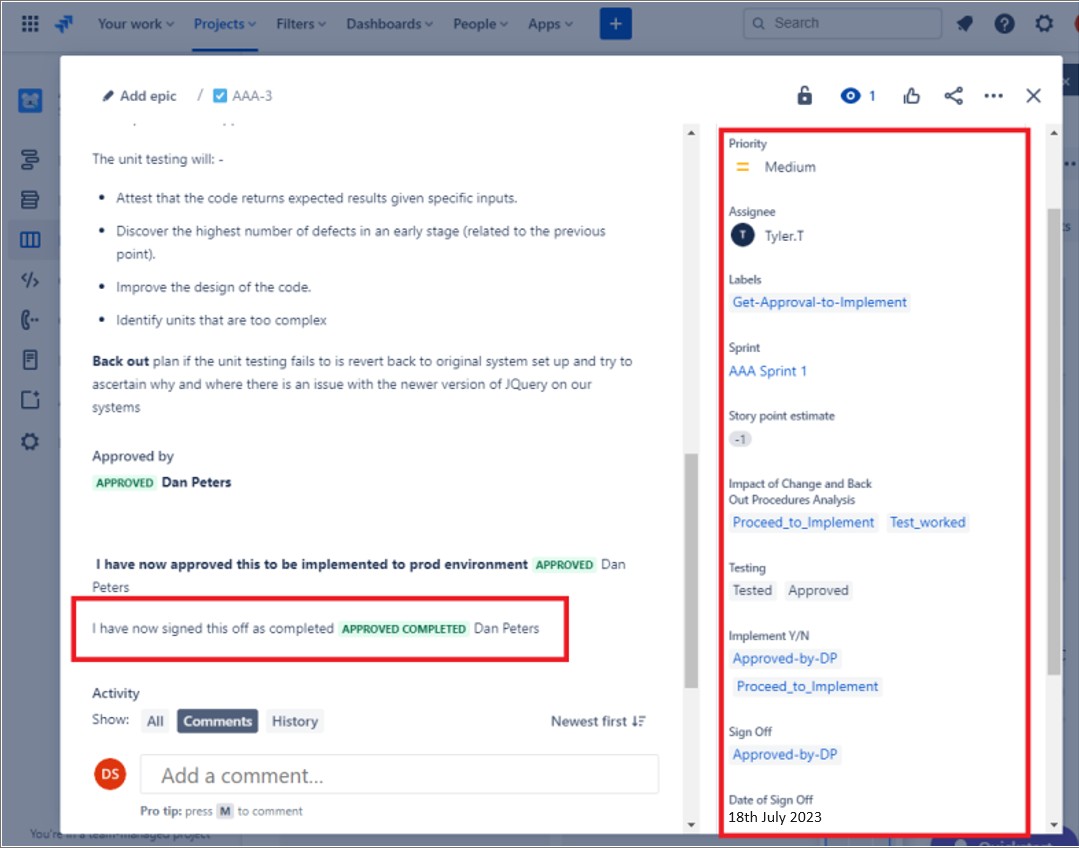

The ticket following shows that the changes have now been approved for implementation to the production environment. The right-hand box shows that the test worked and was successful and that the changes are now been implemented to the Prod Environment.

Example evidence

The next screenshots show an example Jira ticket showing that the change needs to be authorized before being implemented and approved by someone other than the developer/requester. The changes are approved by someone with authority. The right of the screenshot shows that the change has been signed by DP once complete.

In the ticket following the change has been signed off once complete and shows the job completed and closed.

Please Note: - In these examples a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing any URL, logged in user and the system time and date.

Control No. 11

Please provide evidence that:

Separate environments exist so that:

Development and test/staging environments enforce separation of duties from the production environment.

Separation of duties is enforced via access controls.

Sensitive production data is not in use within the development or test/staging environments.

Intent: separate environments

Most organizations’ development/test environments are not configured to the same vigor as the production environments and are therefore less secure. Additionally, testing should not be carried out within the production environment as this can introduce security issues or can be detrimental to service delivery for customers. By maintaining separate environments which enforce a separation of duties, organizations can ensure changes are being applied to the correct environments, thereby, reducing the risk of errors by implementing changes to production environments when it was intended for the development/test environment.

The access controls should be configured such that personnel responsible for development and testing do not have unnecessary access to the production environment, and vice versa. This minimizes the potential for unauthorized changes or data exposure.

Using production data in development/test environments can increase the risk of a compromise and expose the organization to data breaches or unauthorized access. The intent requires that any data used for development or testing should be sanitized, anonymized, or generated specifically for that purpose.

Guidelines: separate environments

Screenshots could be provided which demonstrate different environments being used for development/test environments and production environments. Typically, you would have different people/teams with access to each environment, or where this is not possible, the environments would utilize different authorization services to ensure users cannot mistakenly log into the wrong environment to apply changes.

Example evidence: separate environments

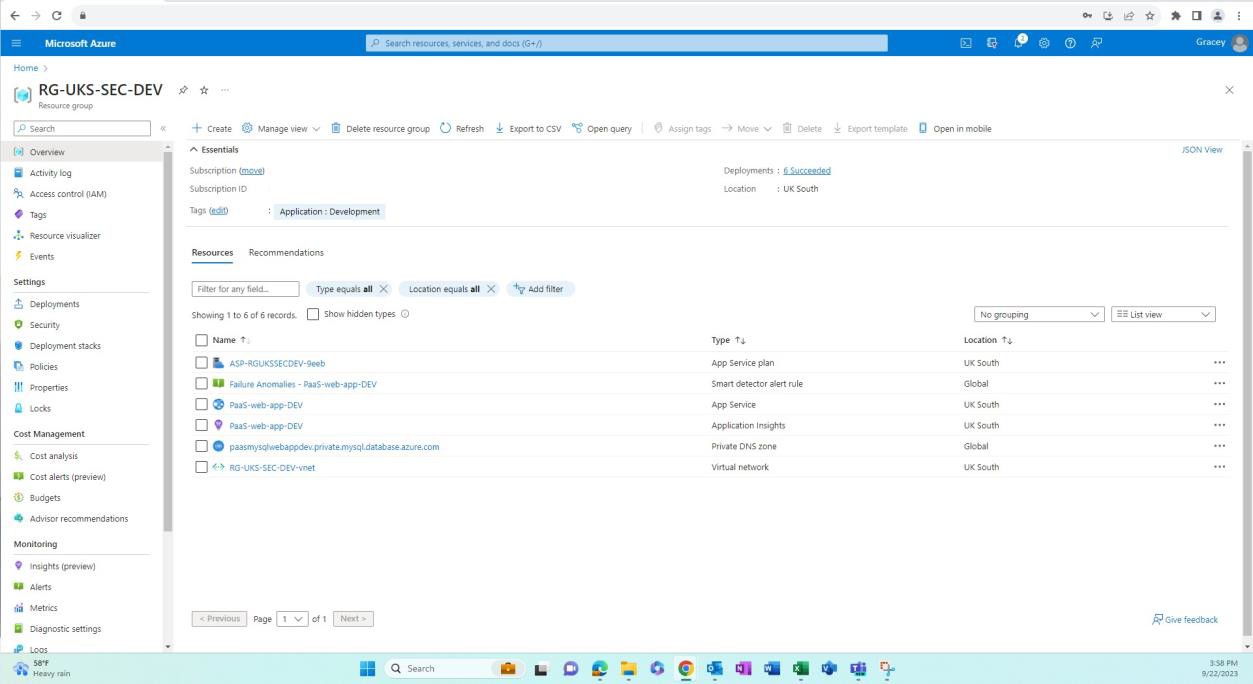

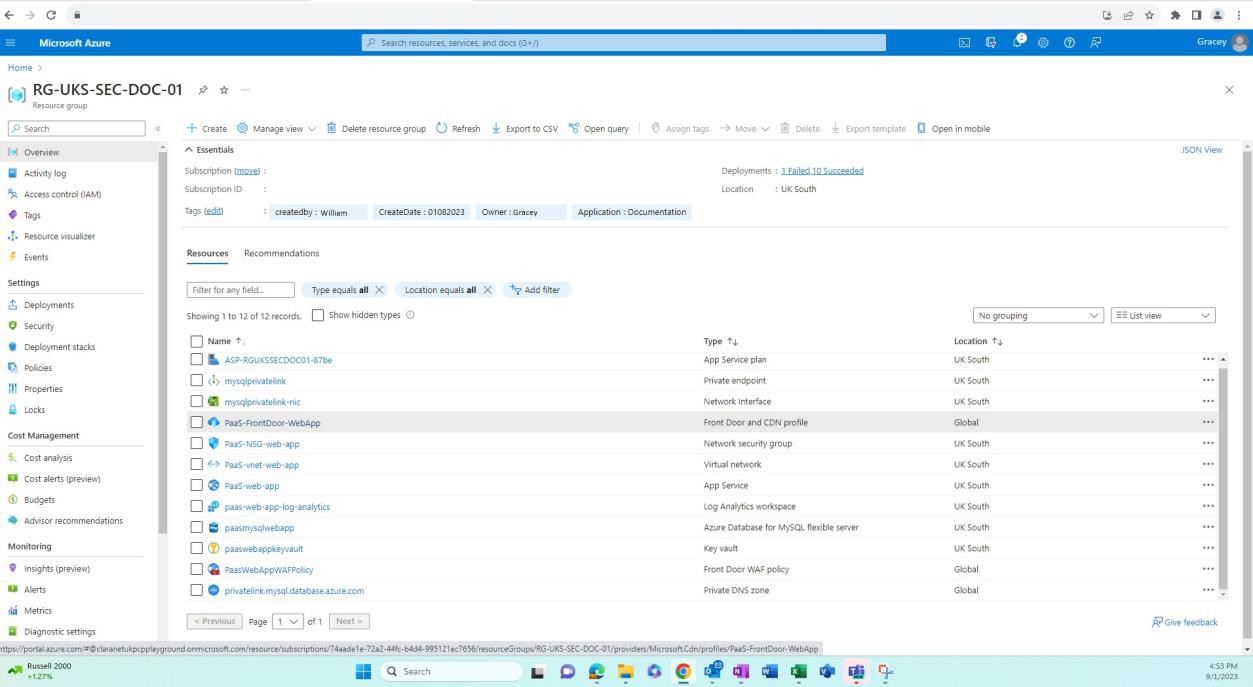

The next screenshots demonstrate for that environments for development/testing are separated from production, this is achieved via Resource Groups in Azure, which is a way to logical group resources into a container. Other ways to achieve the separation could be different Azure Subscriptions, Networking and Subnetting etc.

The following screenshot shows the development environment and the resources within this resource group.

The next screenshot shows the production environment and the resources within this resource group.

Example evidence:

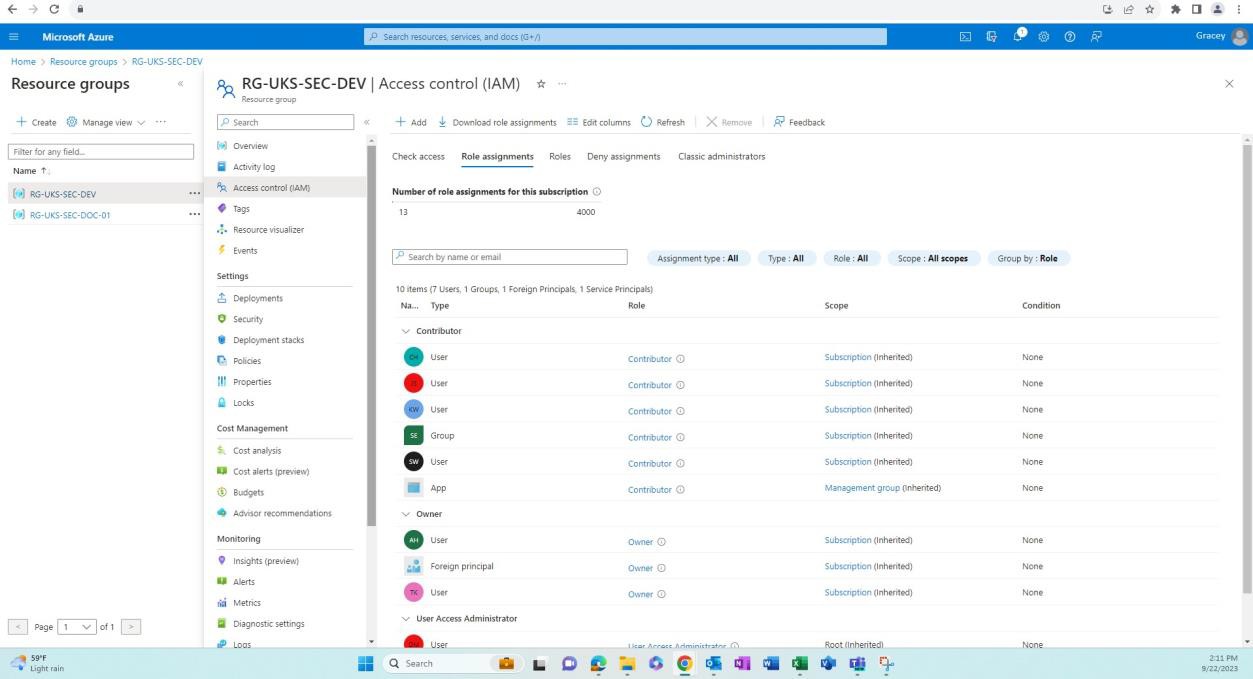

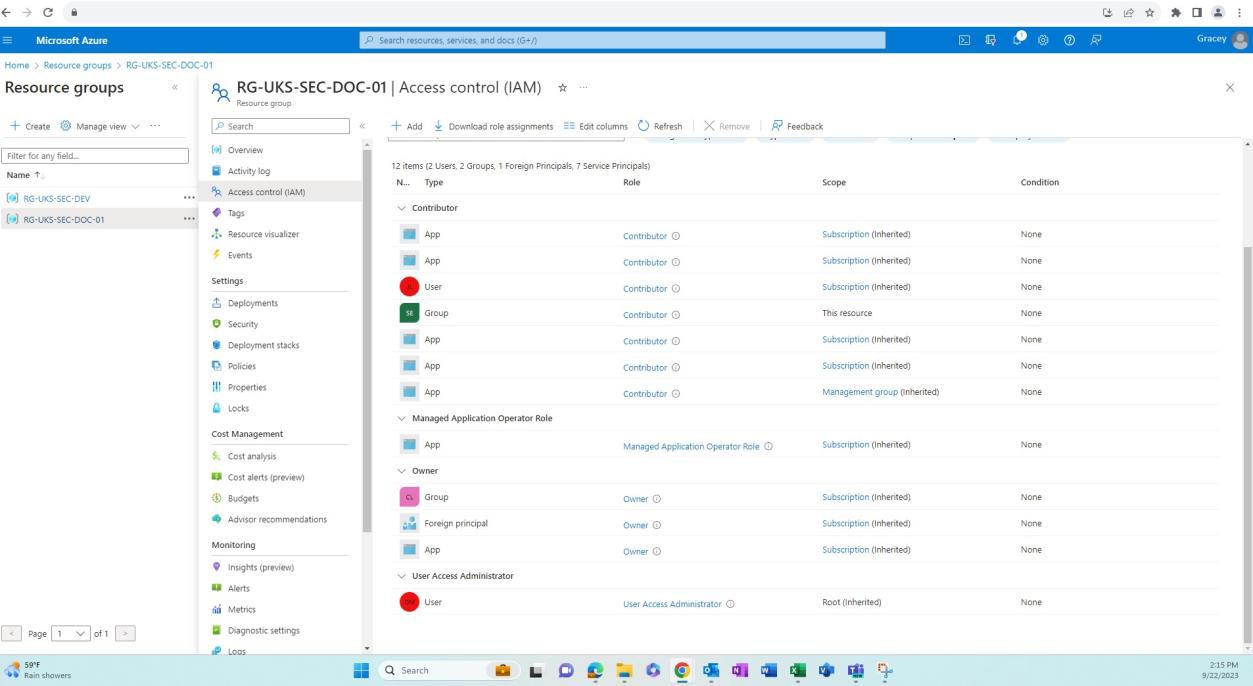

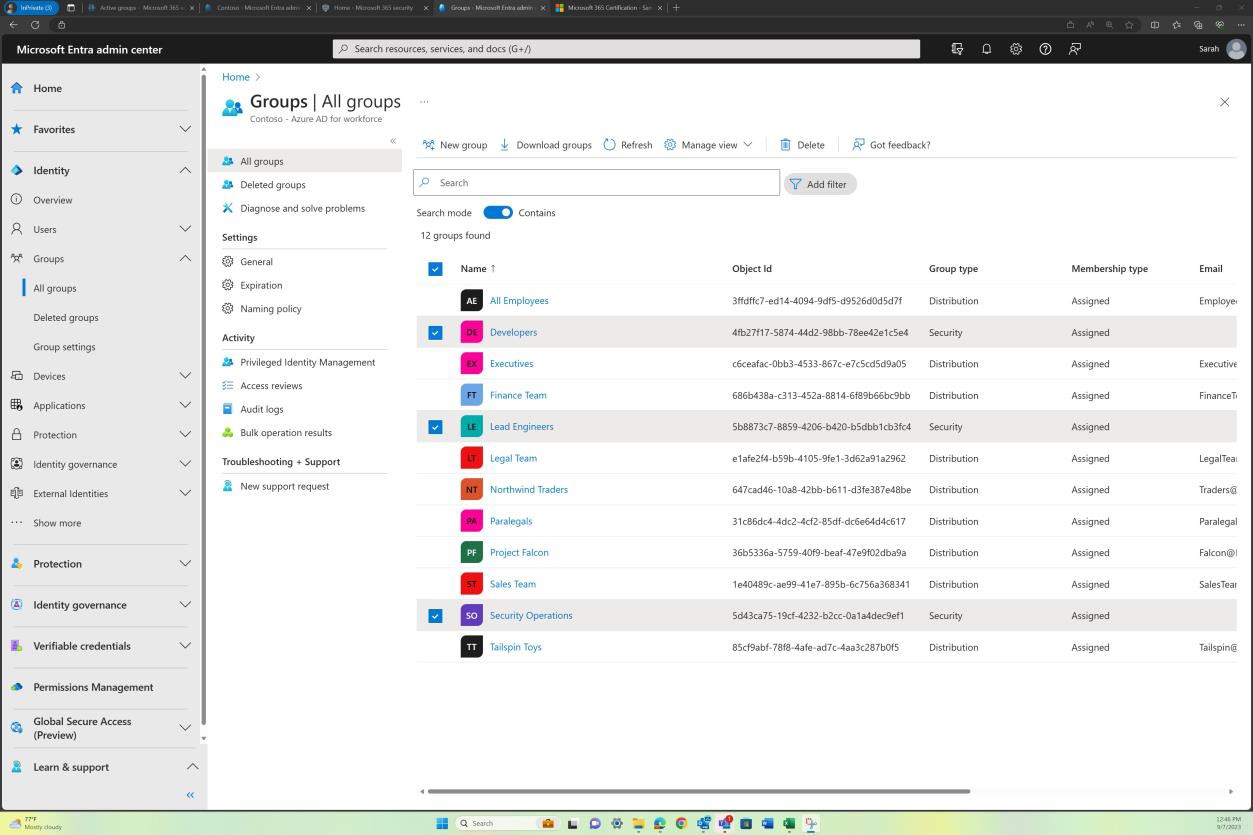

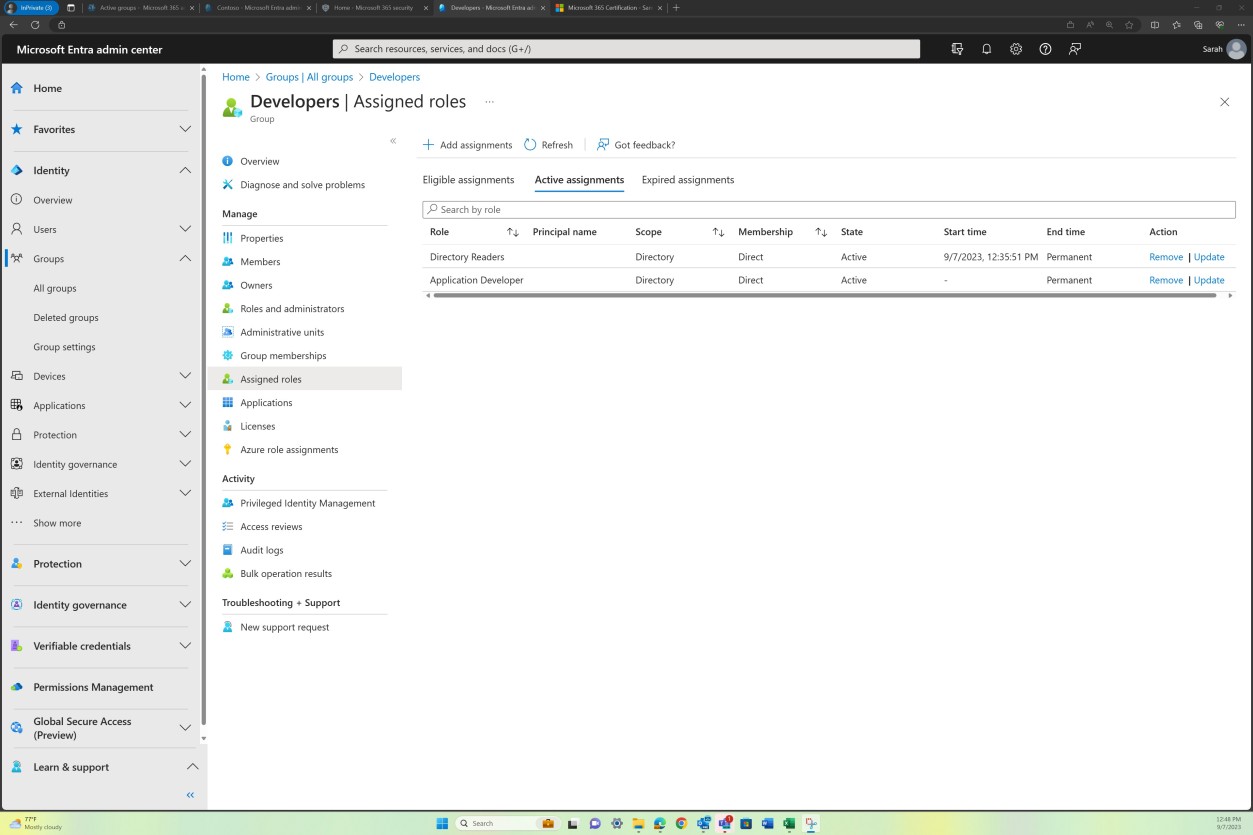

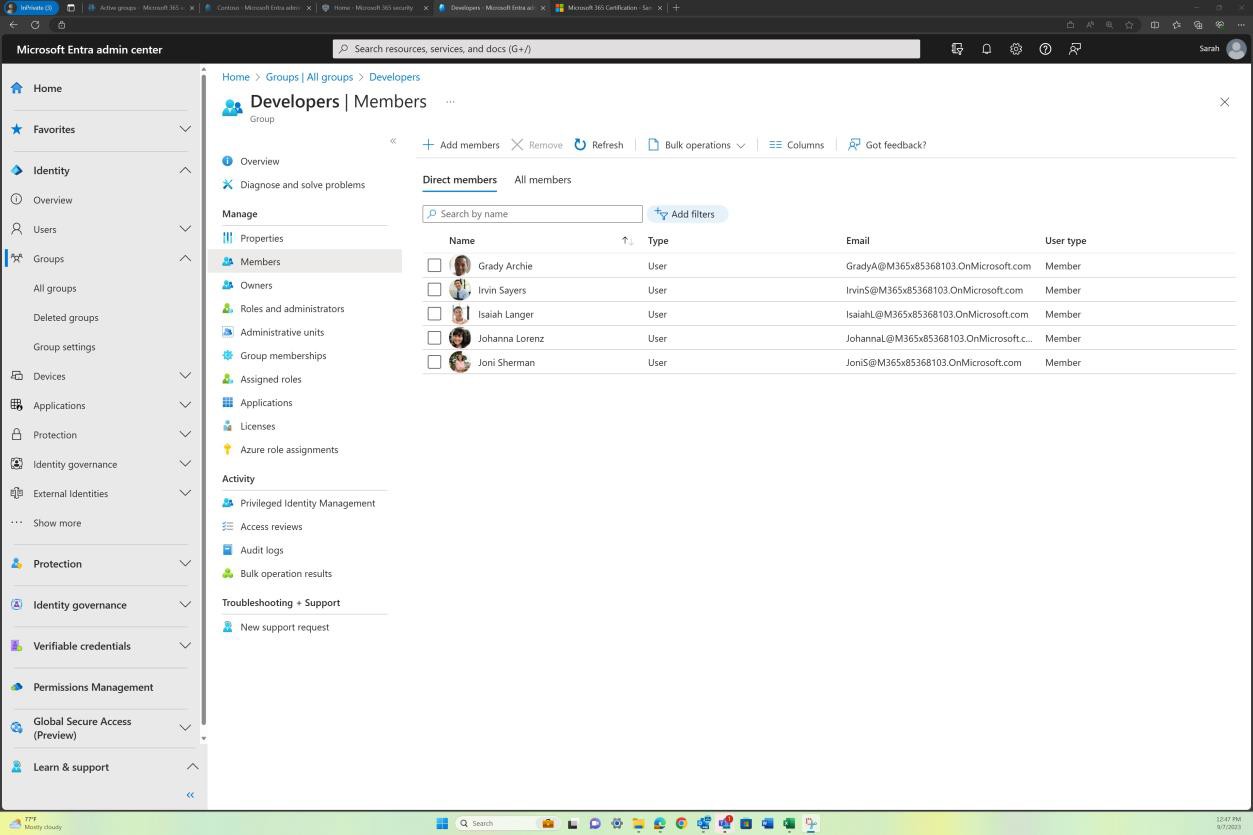

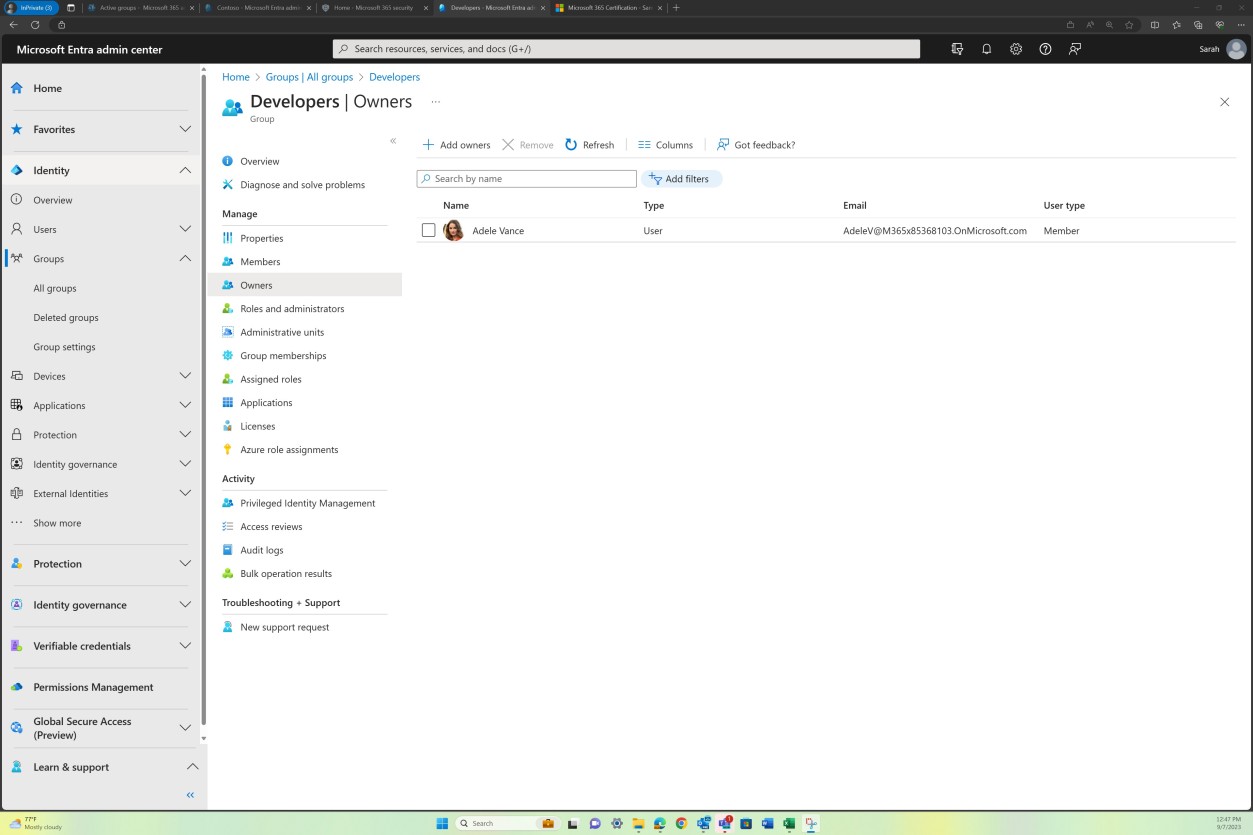

The next screenshots demonstrate that environments for development/testing are separate from the production environment. Adequate separation of environments is achieved via different users/groups with different permissions associated with each environment.

The next screenshot shows the development environment and the users with access to this resource group.

The next screenshot shows the production environment and the users (different from the development environment) that have access to this resource group.

Guidelines:

Evidence can be provided by sharing screenshots of the output of the same SQL query against a production database (redact any sensitive information) and the development/test database. The output of the same commands should produce different data sets. Where files are being stored, viewing the contents of the folders within both environments should also demonstrate different data sets.

Example evidence

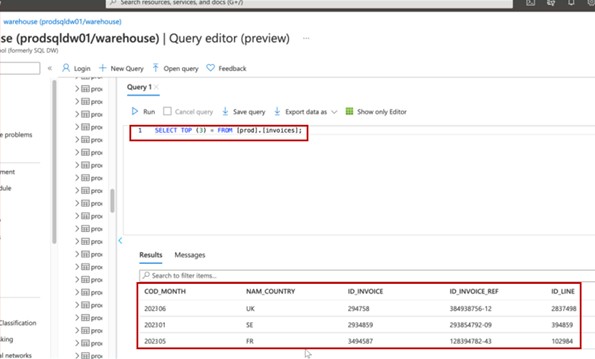

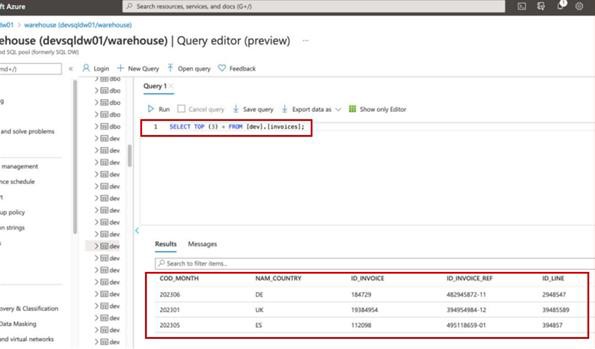

The screenshot shows the top 3 records (for evidence submission, please provide top 20) from the production database.

The next screenshot shows the same query from the Development Database, showing different records.

Note: In this example a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Secure software development/deployment

Organizations involved in software development activities are often faced with competing priorities between security and TTM (Time to Market) pressures, however, implementing security related activities throughout the software development lifecycle (SDLC) can not only save money, but can also save time. When security is left as an afterthought, issues are usually only identified during the test phase of the (DSLC), which can often be more time consuming and costly to fix. The intent of this security section is to ensure secure software development practices are followed to reduce the risk of coding flaws being introduced into the software which is developed. Additionally, this section looks to include some controls to aid in secure deployment of software.

Control No. 12

Please provide evidence demonstrating documentation exists and is maintained that:

supports the development of secure software and includes industry standards and/or best practices for secure coding, such as OWASP Top 10 or SANS Top 25 CWE.

developers undergo relevant secure coding and secure software development training annually.

Intent: secure development

Organizations need to do everything in their power in ensuring software is securely developed and free from vulnerabilities. In a best effort to achieve this, a robust secure software development lifecycle (SDLC) and secure coding best practices should be established to promote secure coding techniques and secure development throughout the whole software development process. The intent is to reduce the number and severity of vulnerabilities in the software.

Coding best practices and techniques exist for all programming languages to ensure code is securely developed. There are external training courses that are designed to teach developers the different types of software vulnerabilities classes and the coding techniques that can be used to stop introducing these vulnerabilities into the software. The intention of this control is also to teach these techniques to all developers and to ensure that these techniques are not forgotten, or newer techniques are learned by carrying this out annually.

Guidelines: secure development

Supply the documented SDLC and/or support documentation which demonstrates that a secure development life cycle is in use and that guidance is provided for all developers to promote secure coding best practice. Take a look at OWASP in SDLC and the OWASP Software Assurance Maturity Model (SAMM).

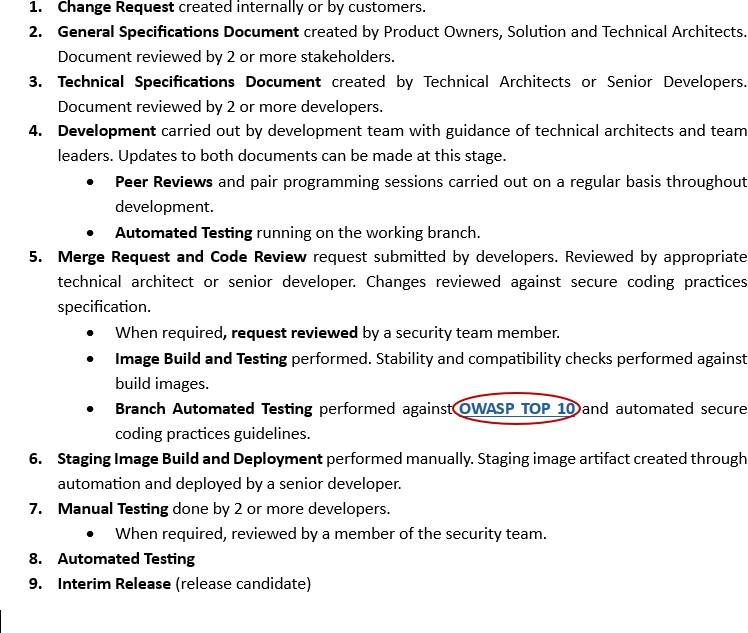

Example evidence: secure development

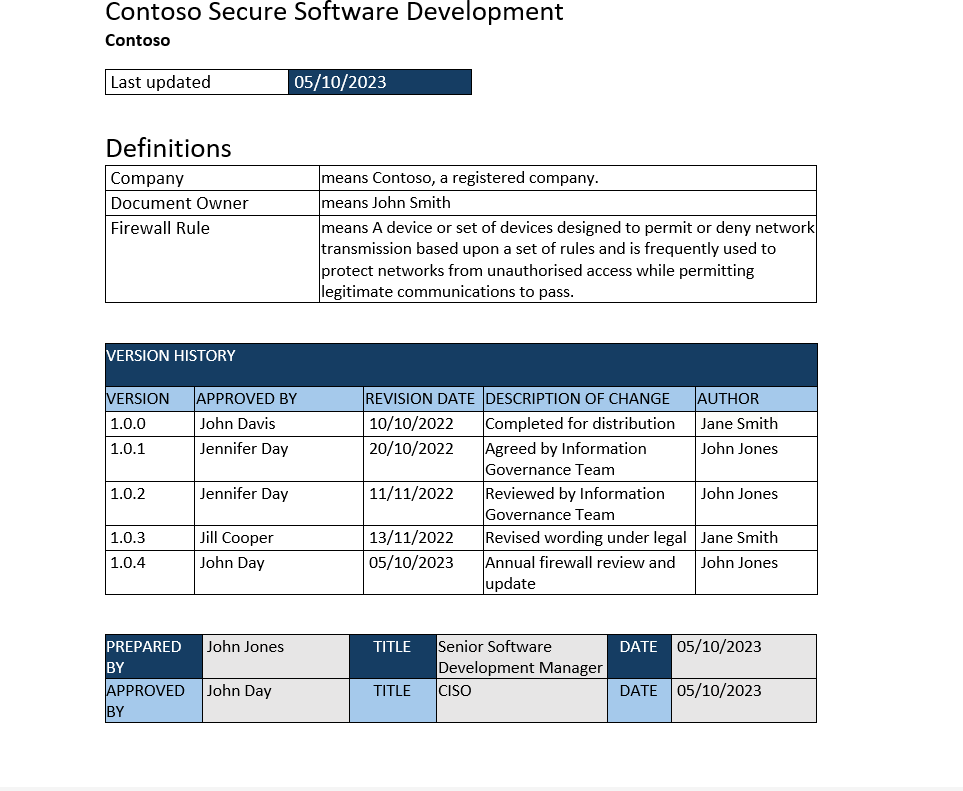

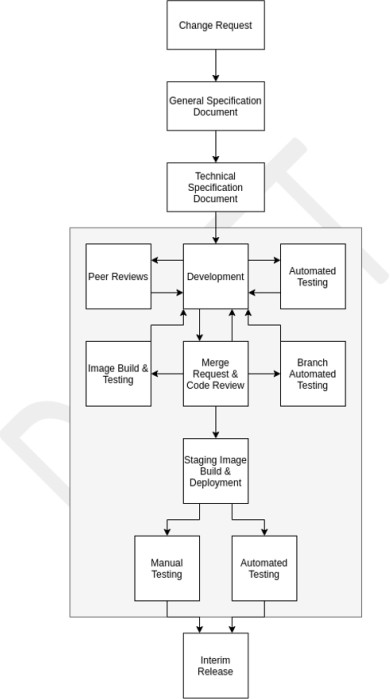

An example of the Secure Software Development policy document is shown below. The following is an extract from Contoso's Secure Software Development Procedure, which demonstrates secure development and coding practices.

Note: In the previous examples full screenshots were not used, however ALL ISV submitted evidence screenshots must be full screenshots showing any URL, logged in user and the system time and date.

Guidelines: secure development training

Provide evidence by way of certificates if training is carried out by an external training company, or by providing screenshots of the training diaries or other artifacts which demonstrates that developers have attended training. If this training is carried out via internal resources, provide evidence of the training material as well.

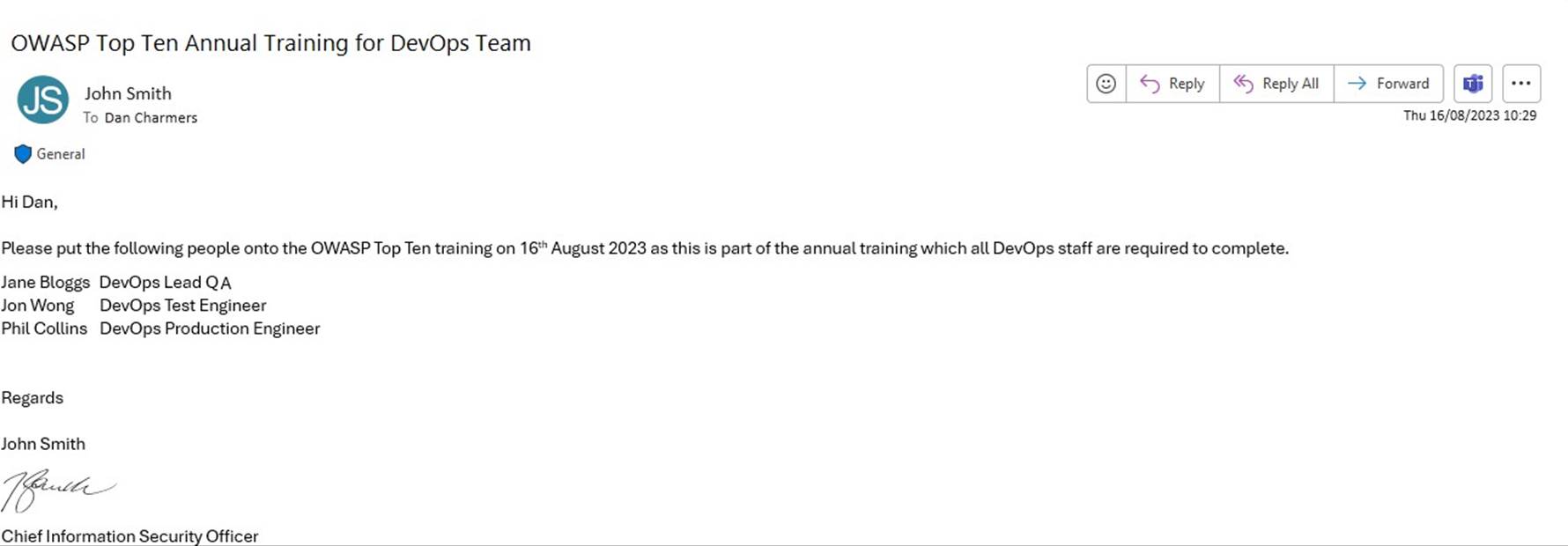

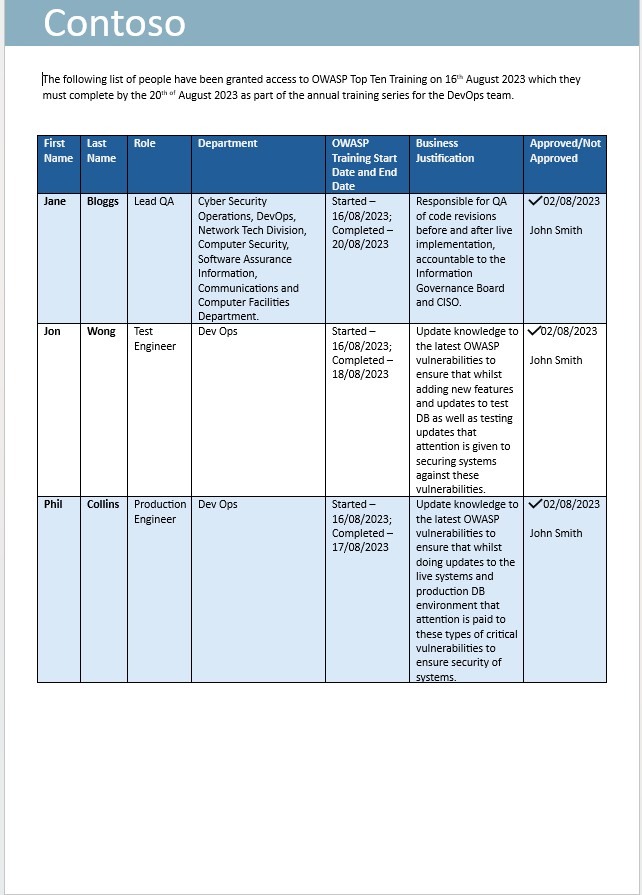

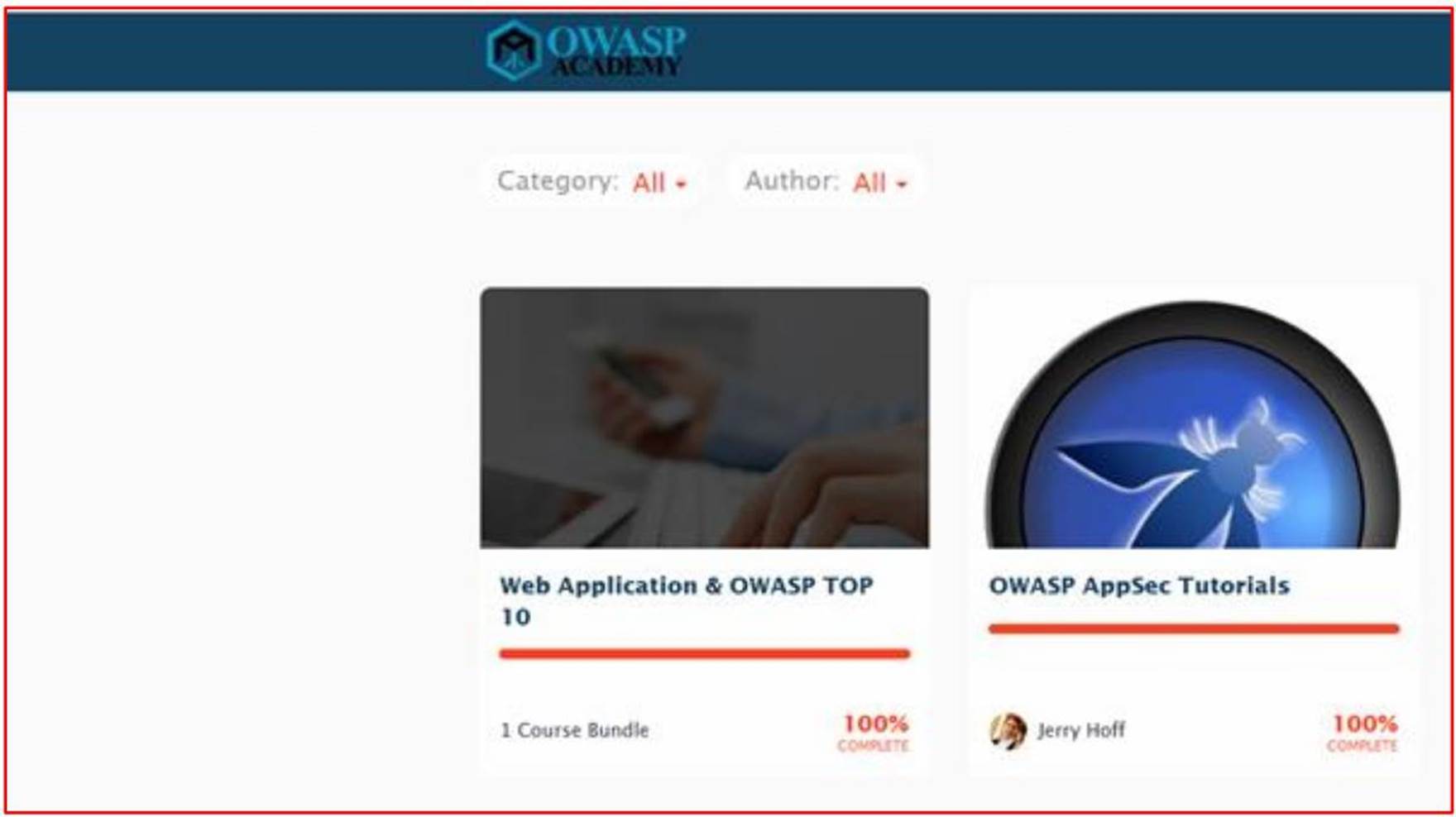

Example evidence: secure development training

The next screenshot is an email requesting staff in the DevOps team to be enrolled into OWASP Top Ten Training Annual Training.

The next screenshot shows that training has been requested with business justification and approval. This is then followed by screenshots taken from the training and a completion record showing that the person has finished the annual training.

Note: In this example a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Control No. 13

Please provide evidence that code repositories are secured so that:

all code changes undergo a review and approval process by a second reviewer prior to being merged with main branch.

appropriate access controls are in place.

all access is enforced through multi-factor authentication (MFA).

all releases made into the production environment(s) are reviewed and approved prior to their deployment.

Intent: code review

The intent with this subpoint is to perform a code review by another developer to help identify any coding mistakes which could introduce a vulnerability in the software. Authorization should be established to ensure code reviews are carried, testing is done, etc. prior to deployment. The authorization step validates that the correct processes have been followed which underpins the SDLC defined in control 12.

The objective is to ensure that all code changes undergo a rigorous review and approval process by a second reviewer before they are merged into the main branch. This dual-approval process serves as a quality control measure, aiming to catch any coding errors, security vulnerabilities, or other issues that could compromise the integrity of the application.

Guidelines: code review

Provide evidence that code undergoes a peer review and must be authorized before it can be applied to the production environment. This evidence may be via an export of change tickets, demonstrating that code reviews have been carried out and the changes authorized, or it could be through code review software such as Crucible

Example evidence: code review

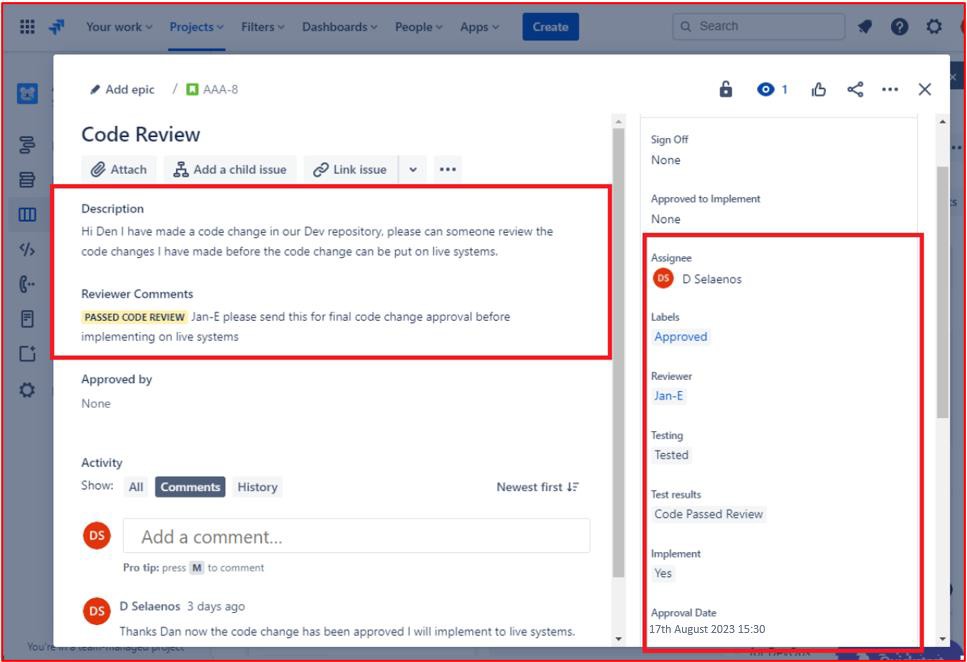

The following is a ticket that shows code changes undergo a review and authorization process by someone other than the original developer. It shows that a code review has been requested by the assignee and will be assigned to someone else for the code review.

The next image shows that the code review was assigned to someone other than the original developer as shown by the highlighted section on the right-hand side of the image. On the lefthand side the code has been reviewed and given a ’PASSED CODE REVIEW’ status by the code reviewer. The ticket must now get approval by a manager before the changes can be put onto live production systems.

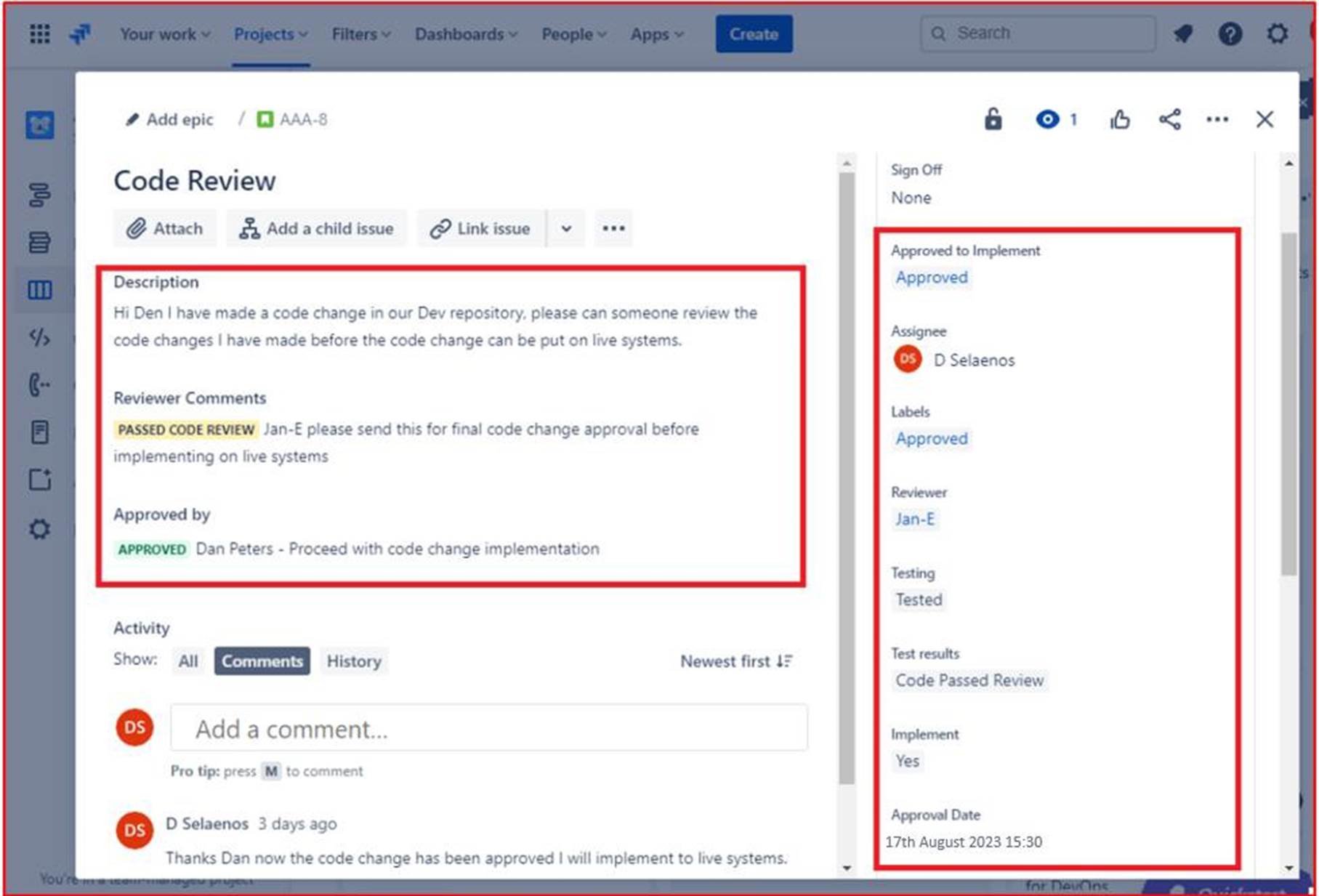

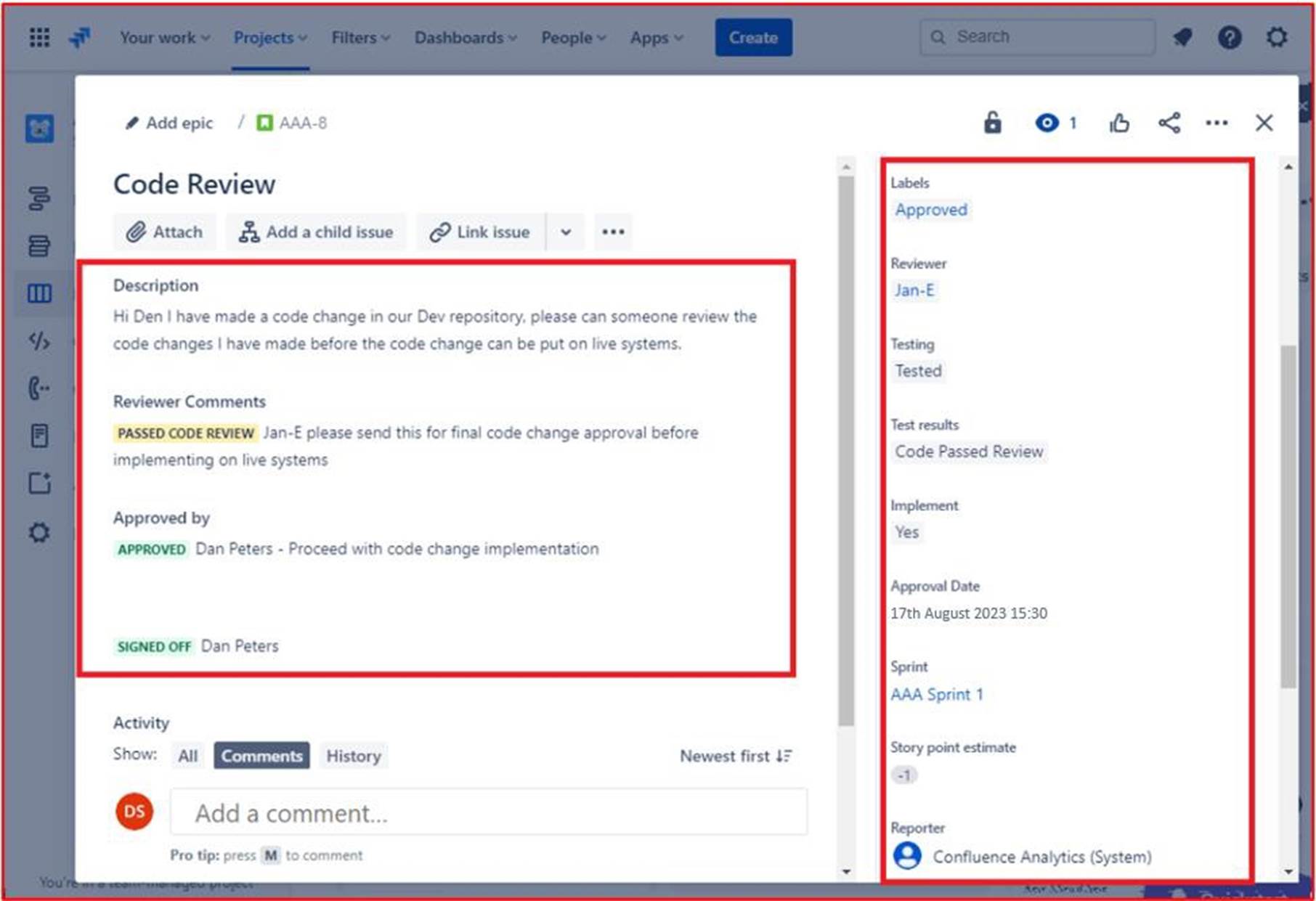

The following image shows that the reviewed code has been given approved to be implemented onto the live production systems. After the code changes have been done the final job gets sign off. Please note that throughout the process there are three people involved, the original developer of the code, the code reviewer, and a manager to give approval and sign off. To meet the criteria for this control, it would be an expectation that your tickets will follow this process.

Note: In this example a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Example evidence: code review

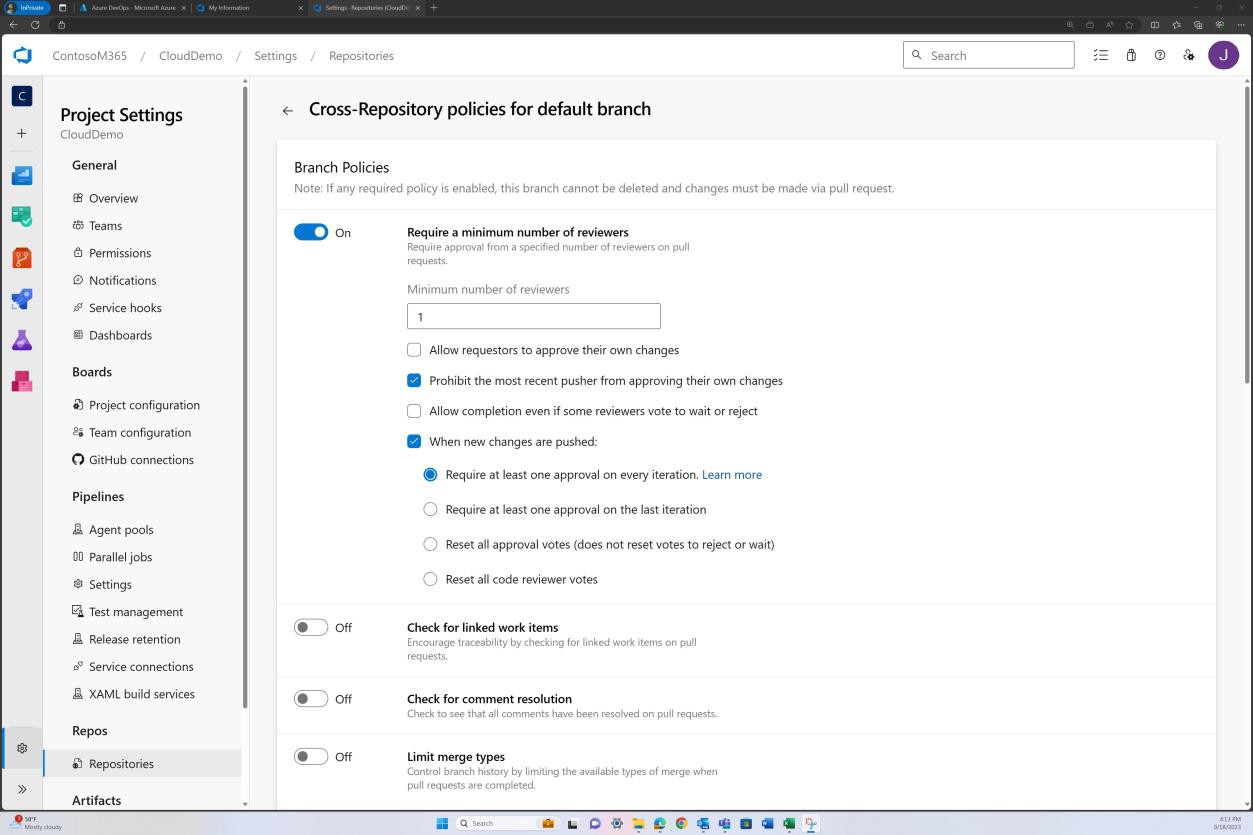

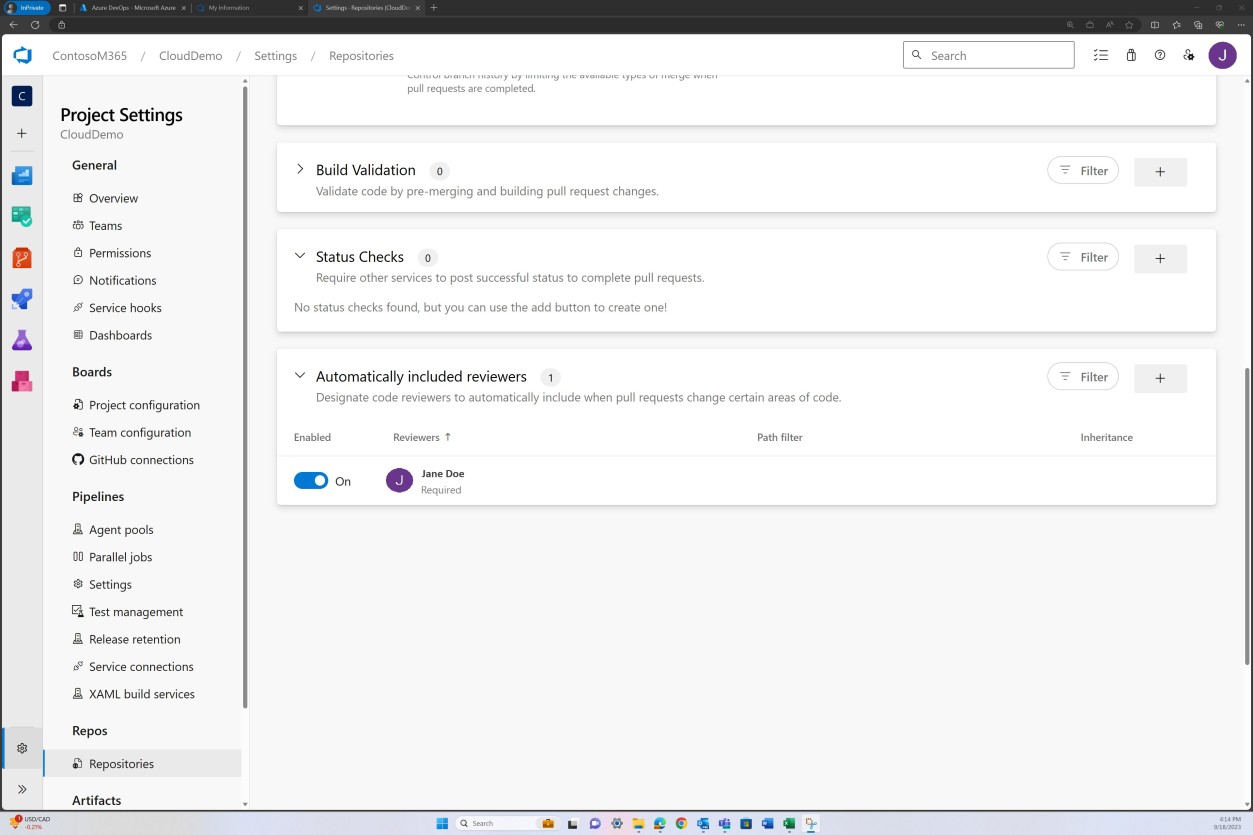

Besides the administrative part of the process shown above, with modern code repositories and platforms additional controls such as branch policy enforcing review can be implemented to ensure merges cannot occur until such review is completed. The example following shows this being achieved in DevOps.

The next screenshot shows that default reviewers are assigned, and review is automatically required.

Example evidence: code review

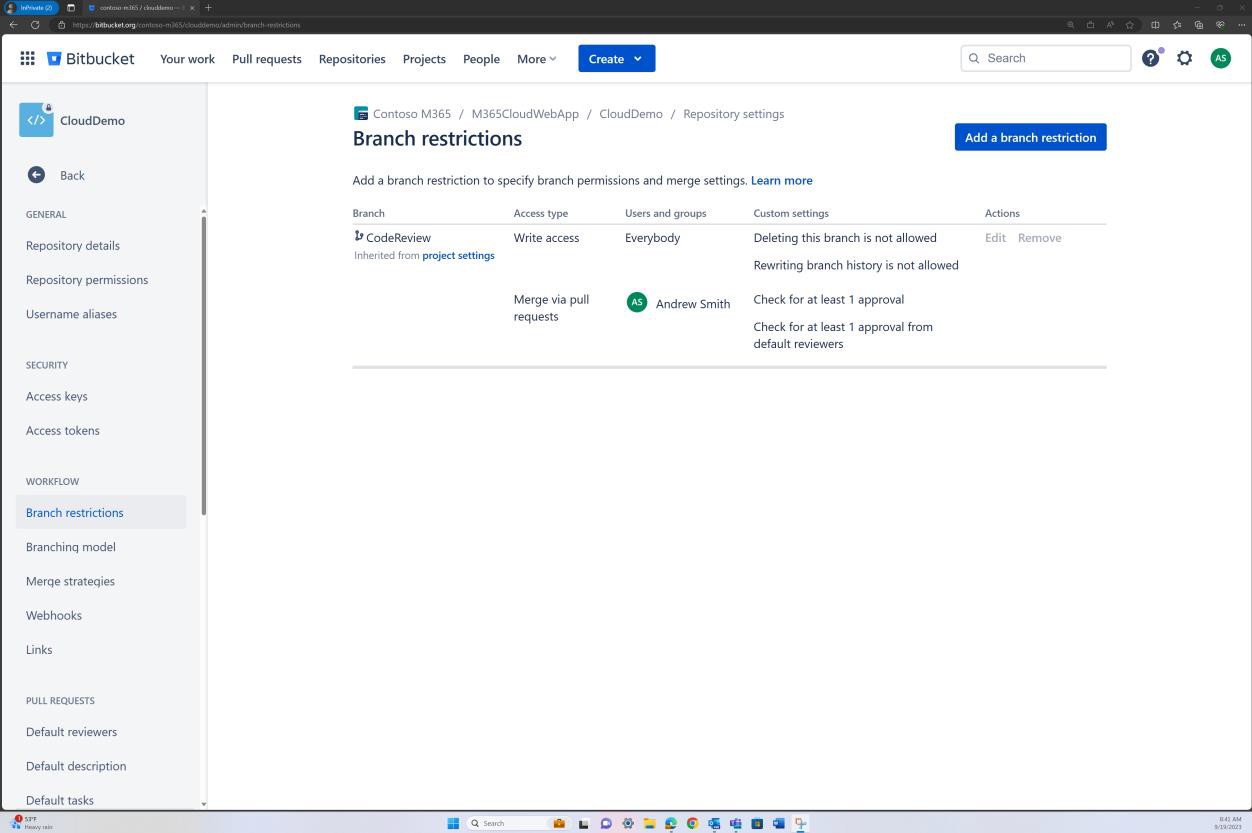

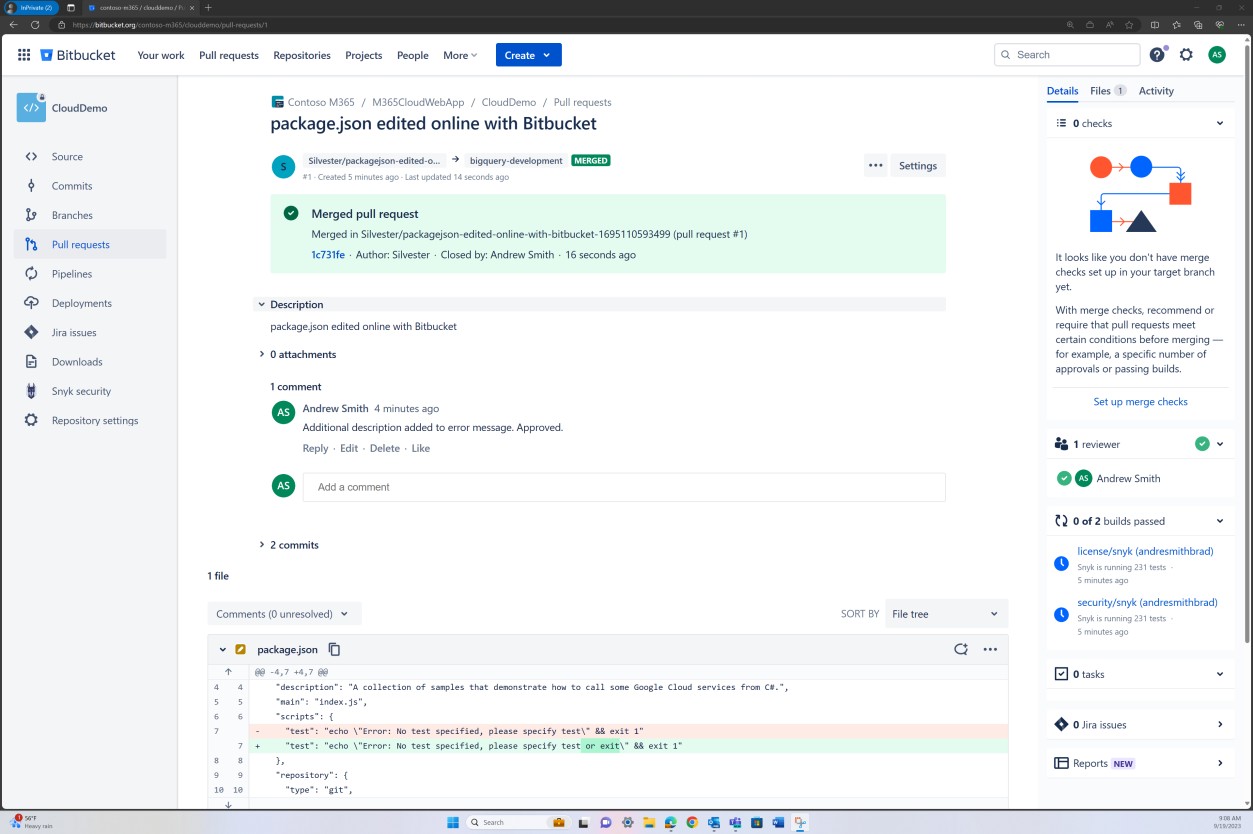

Branch policy enforcing review can also be achieved in Bitbucket.

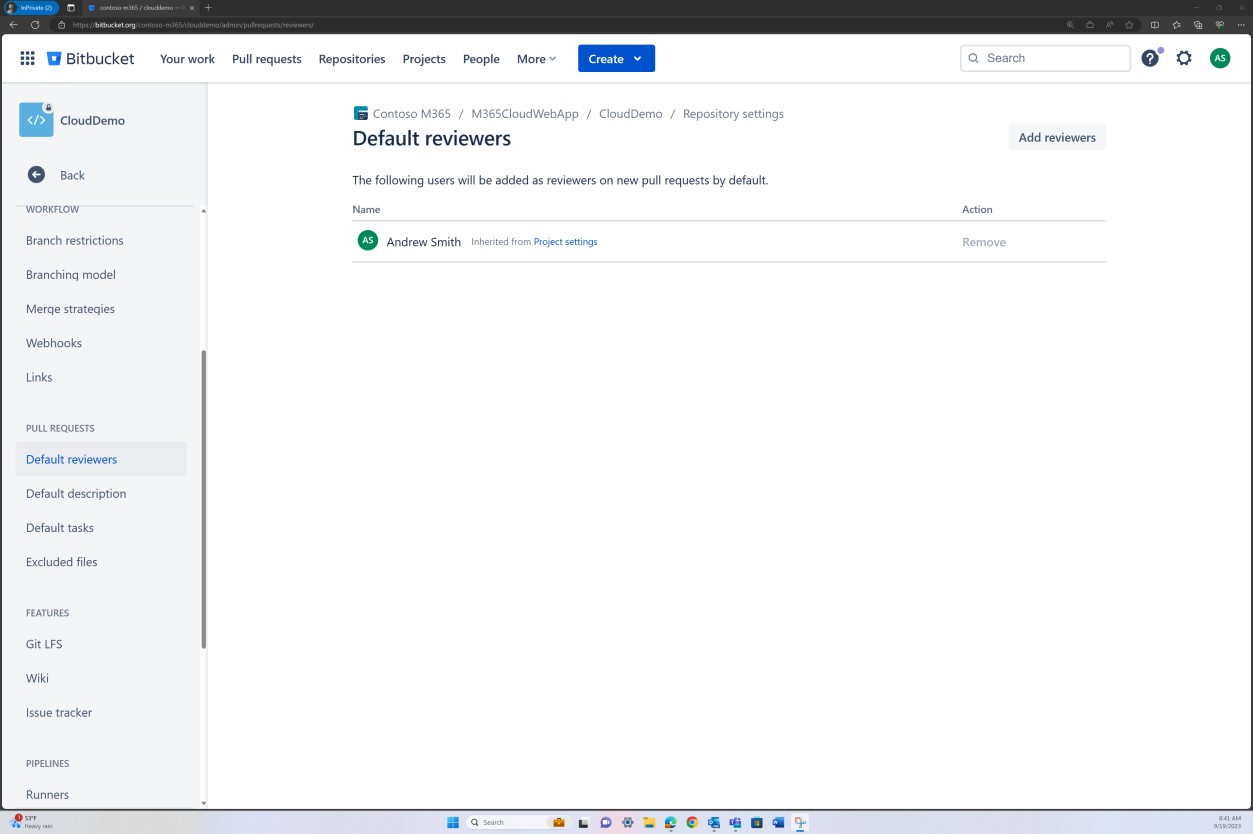

In the next screenshot that a default reviewer is set. This ensures that any merges will require a review from the assigned individual before the change is propagated to the main branch.

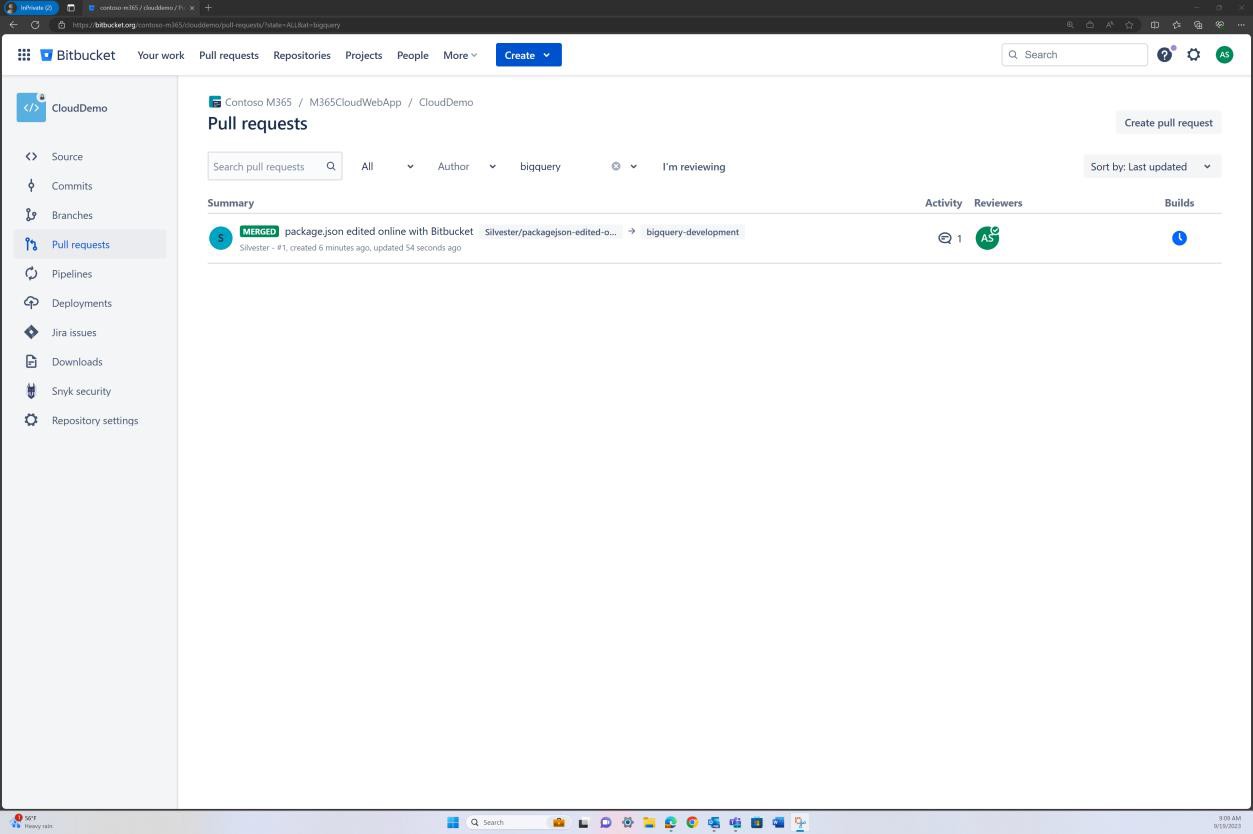

The subsequent two screenshots, demonstrate an example of the configuration settings being applied. As well as a completed pull request, which was initiated by the user Silvester and required approval from the default reviewer Andrew before being merged with the main branch.

Please note that when evidence is supplied the expectation will be for the end-to-end process to be demonstrated. Note: Screenshots should be supplied showing the configuration settings if a branch policy is in place (or some other programmatic method/control), and tickets/records of approval being granted.

Intent: restricted access

Leading on from the previous control, access controls should be implemented to limit access to only individual users who are working on specific projects. By limiting access, you can limit the risk of unauthorized changes being carried out and thereby introducing insecure code changes. A least privileged approach should be taken to protect the code repository.

Guidelines: restricted access

Provide evidence by way of screenshots from the code repository that access is restricted to individuals needed, including different privileges.

Example evidence: restricted access

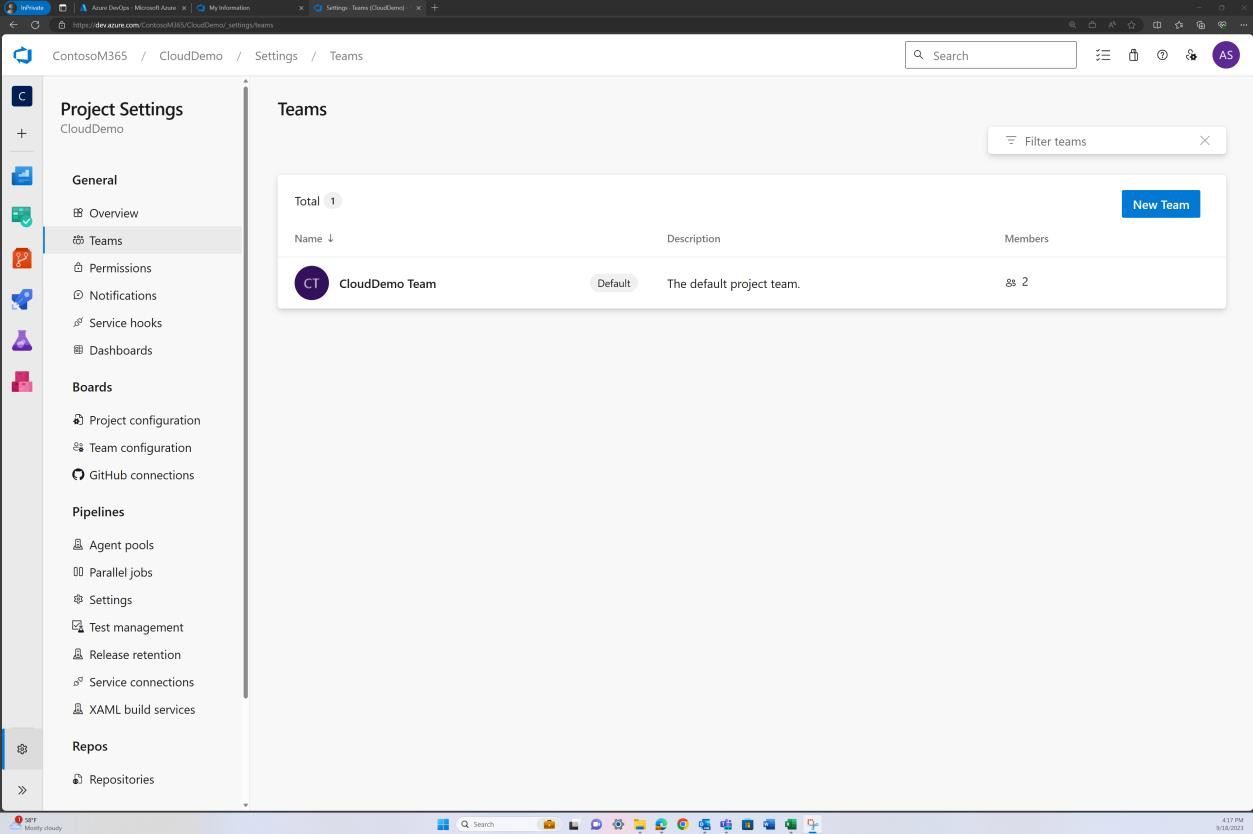

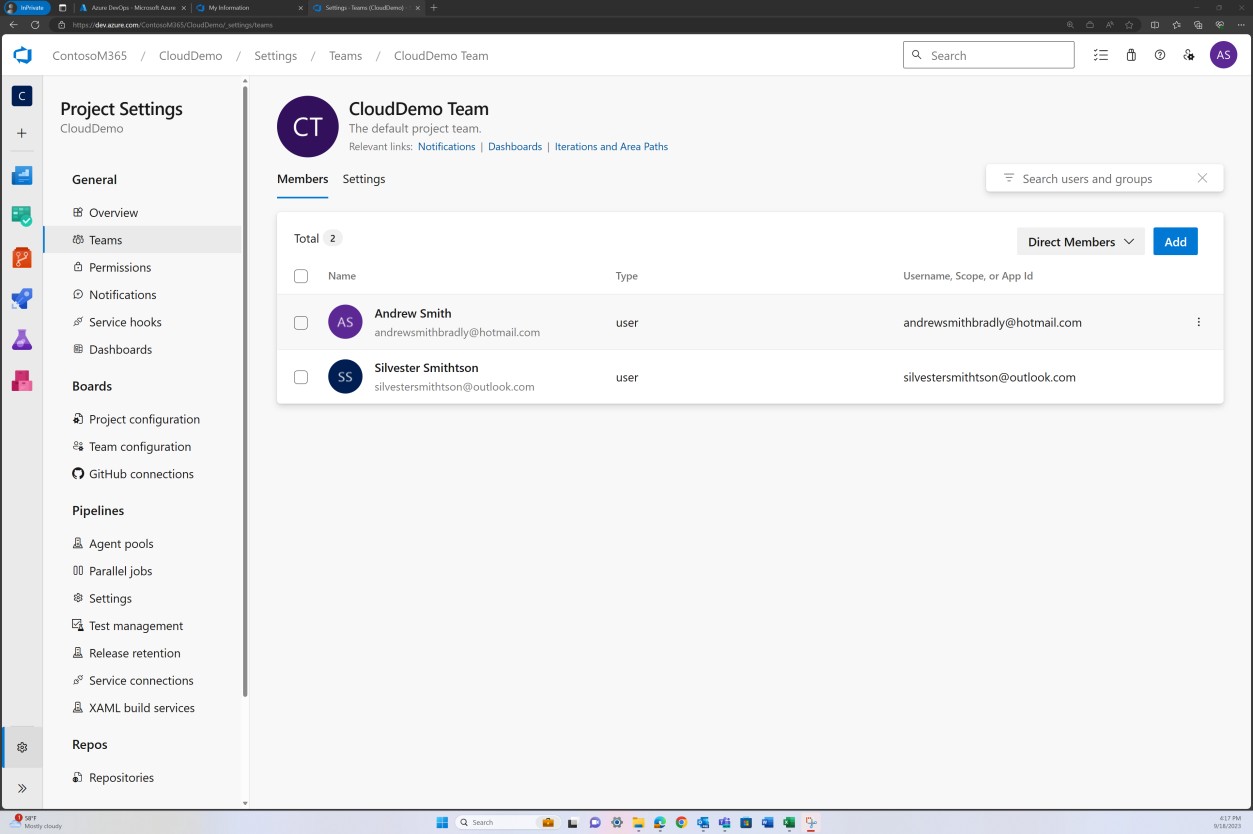

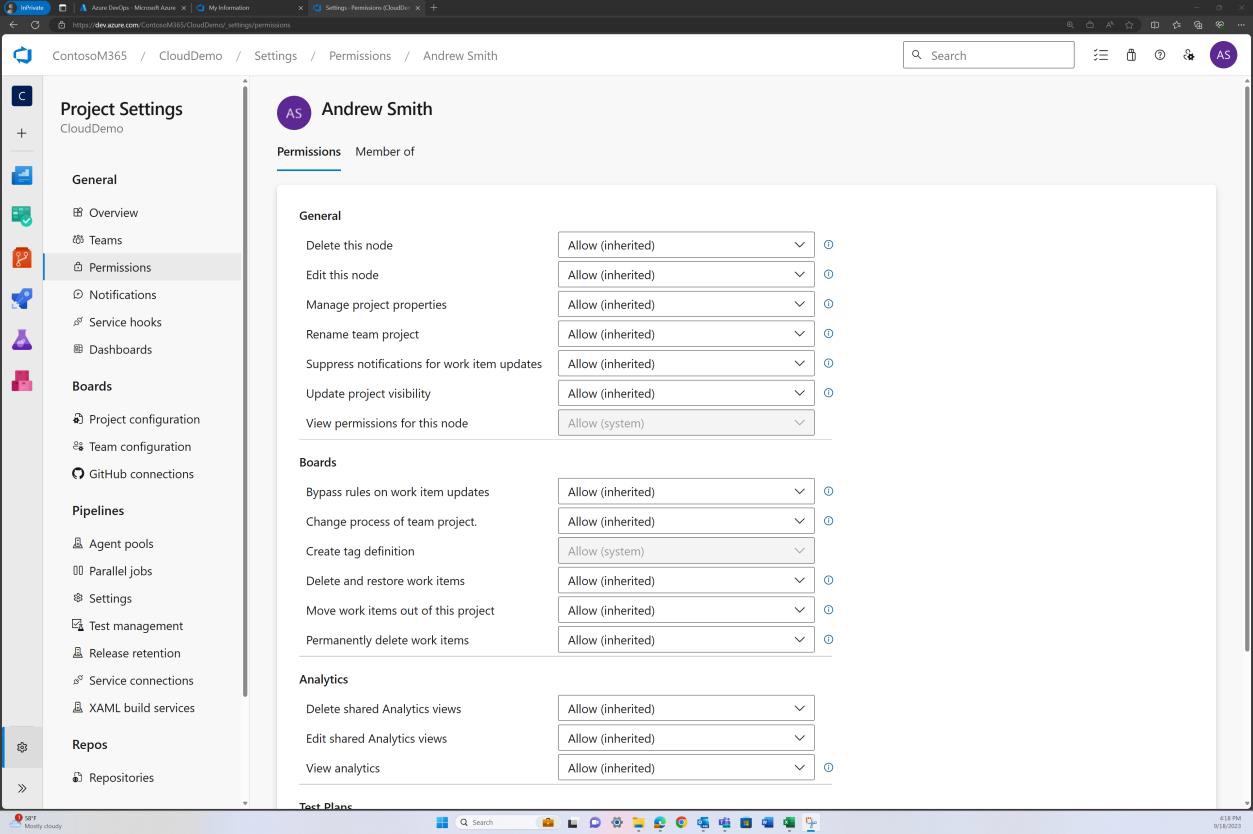

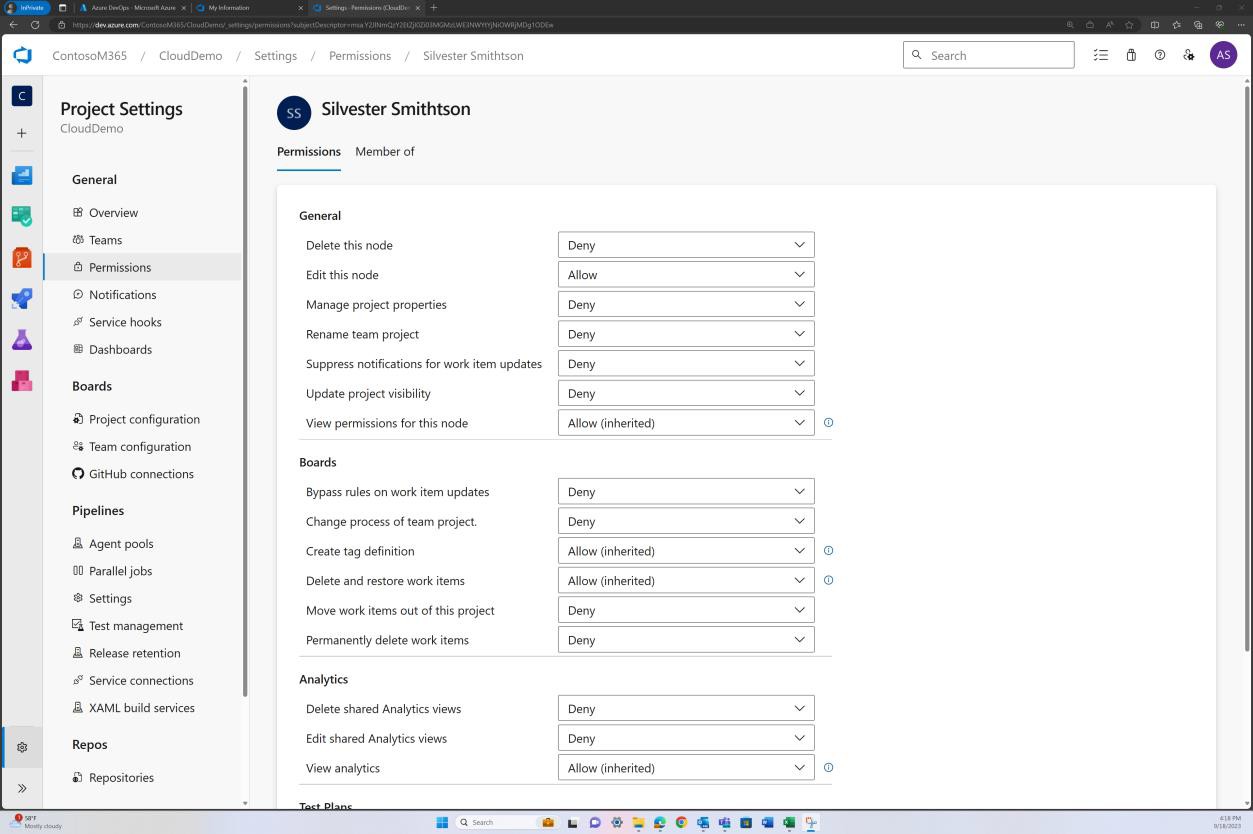

The next screenshots show the access controls that have been implemented in Azure DevOps. The “CloudDemo Team” is shown to have two members, and each member has different permissions.

Note: The next screenshots show an example of the type of evidence and format that would be expected to meet this control. This is by no means extensive and the real-world cases might differ on how access controls are implemented.

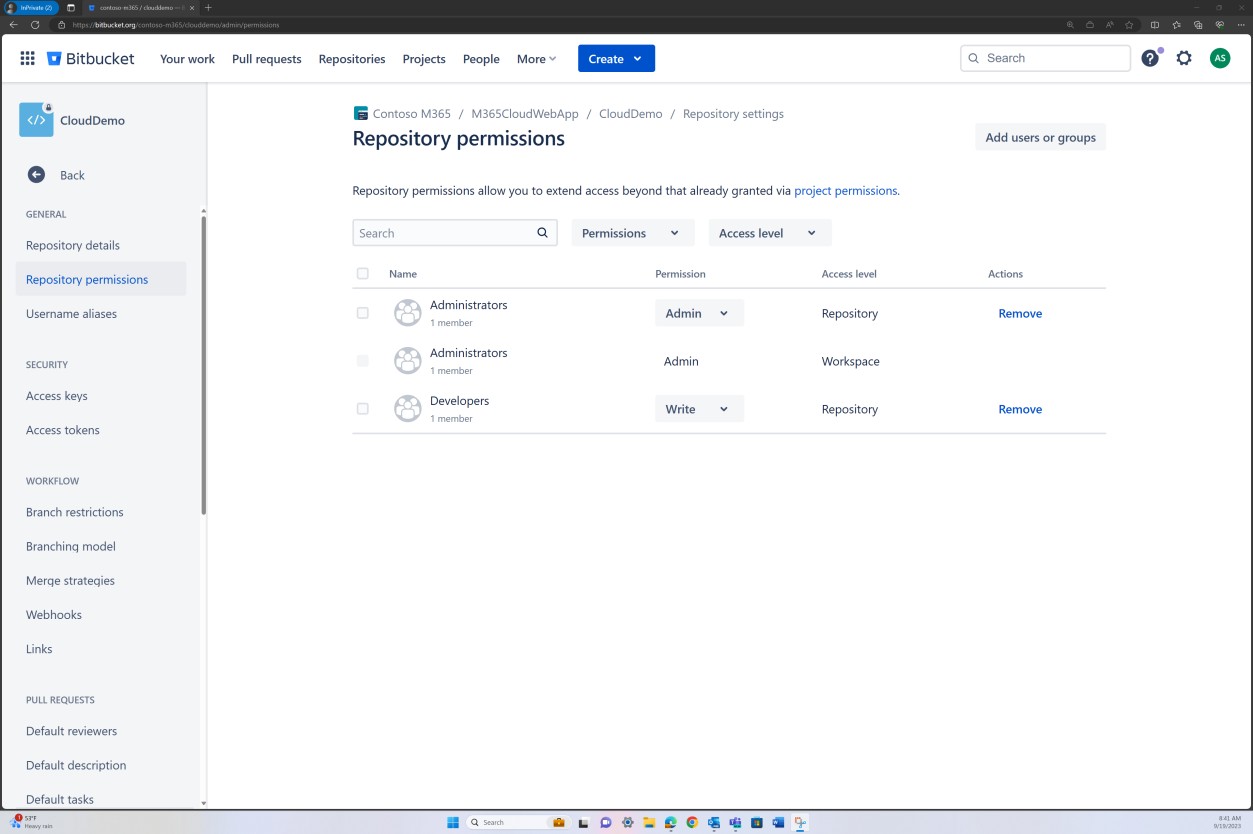

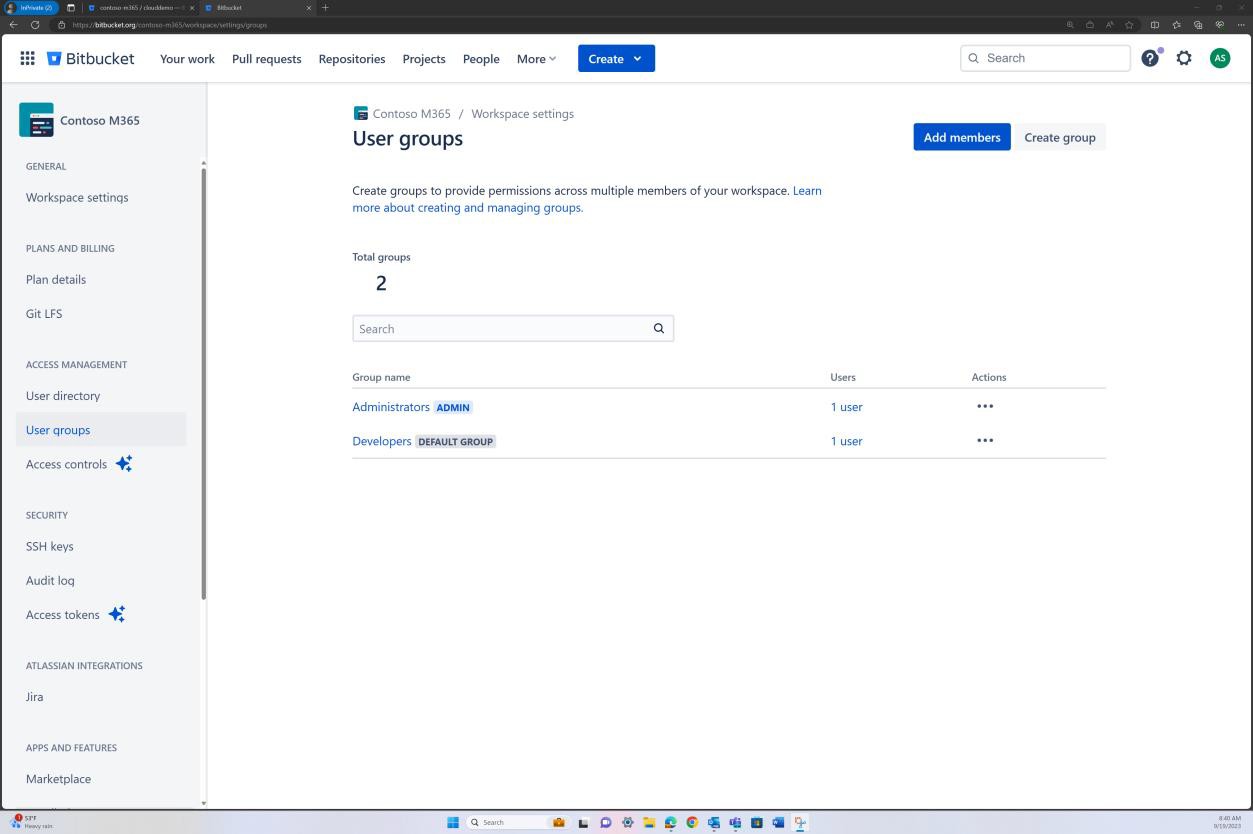

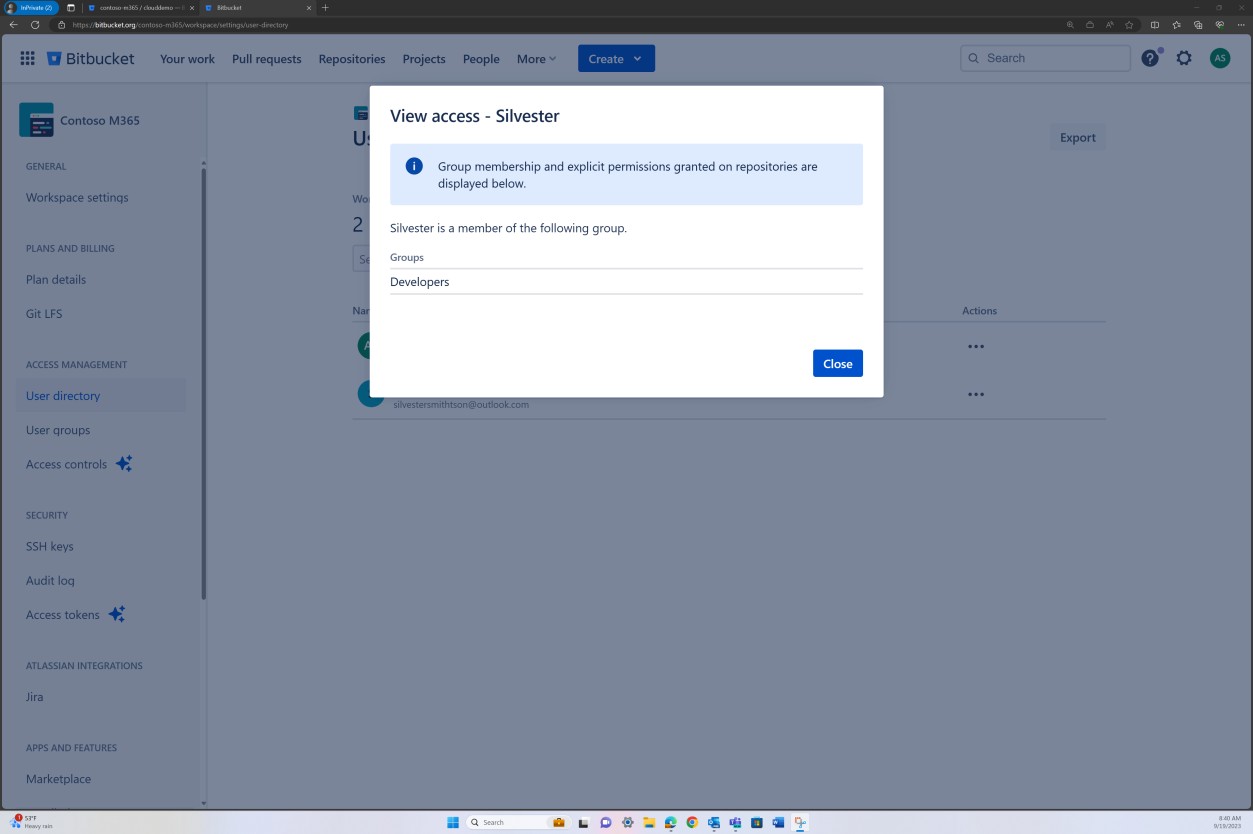

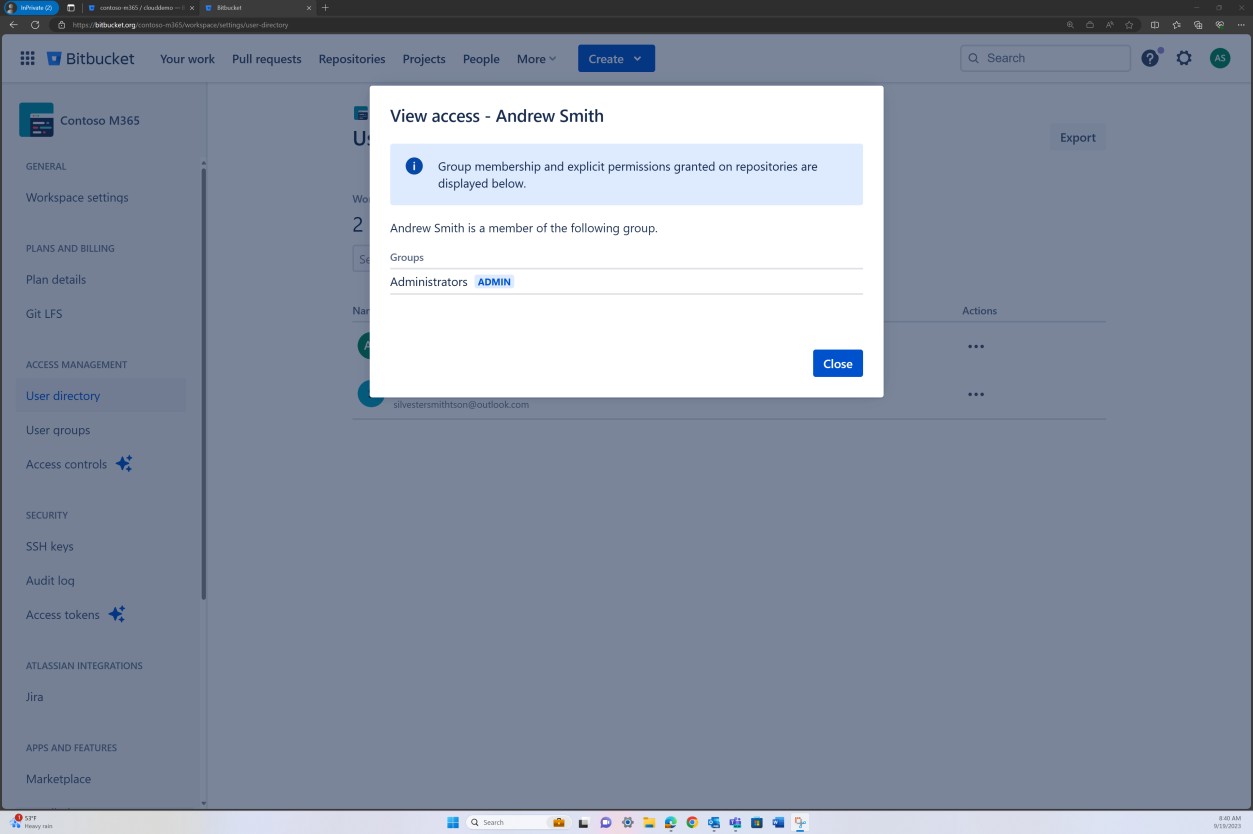

If permissions are set at group level, then evidence of each group and the users of that group must be supplied as shown in the second example for Bitbucket.

Screenshot following shows the members of the “CloudDemo Team”.

The previous image shows that Andrew Smith has significantly higher privileges as the project owner than Silvester below.

Example evidence

In the next screenshot, access controls implemented in Bitbucket is achieved via permissions set at group level. For repository access level there is an “Administrator” group with one user and a “Developer” group with another user.

The next screenshots show that each of the users belongs to a different group and inherently has a different level of permissions. Andrew Smith is the Administrator, and Silvester is part of the Developer group, which only grants him developer privileges.

Intent: MFA

If a threat actor can access and modify a software’s code base, they could introduce vulnerabilities, backdoors, or malicious code into the code base and therefore into the application. There have been several instances of this already, with probably the most publicized being the SolarWinds attack of 2020, where the attackers injected malicious code into a file that was later included in SolarWinds' Orion software updates. More than 18,000 SolarWinds customers installed the malicious updates, with the malware spreading undetected.

The intent of this subpoint is to verify that all access to code repositories is enforced through multi- factor authentication (MFA).

Guidelines: MFA

Provide evidence by way of screenshots from the code repository that ALL users have MFA enabled.

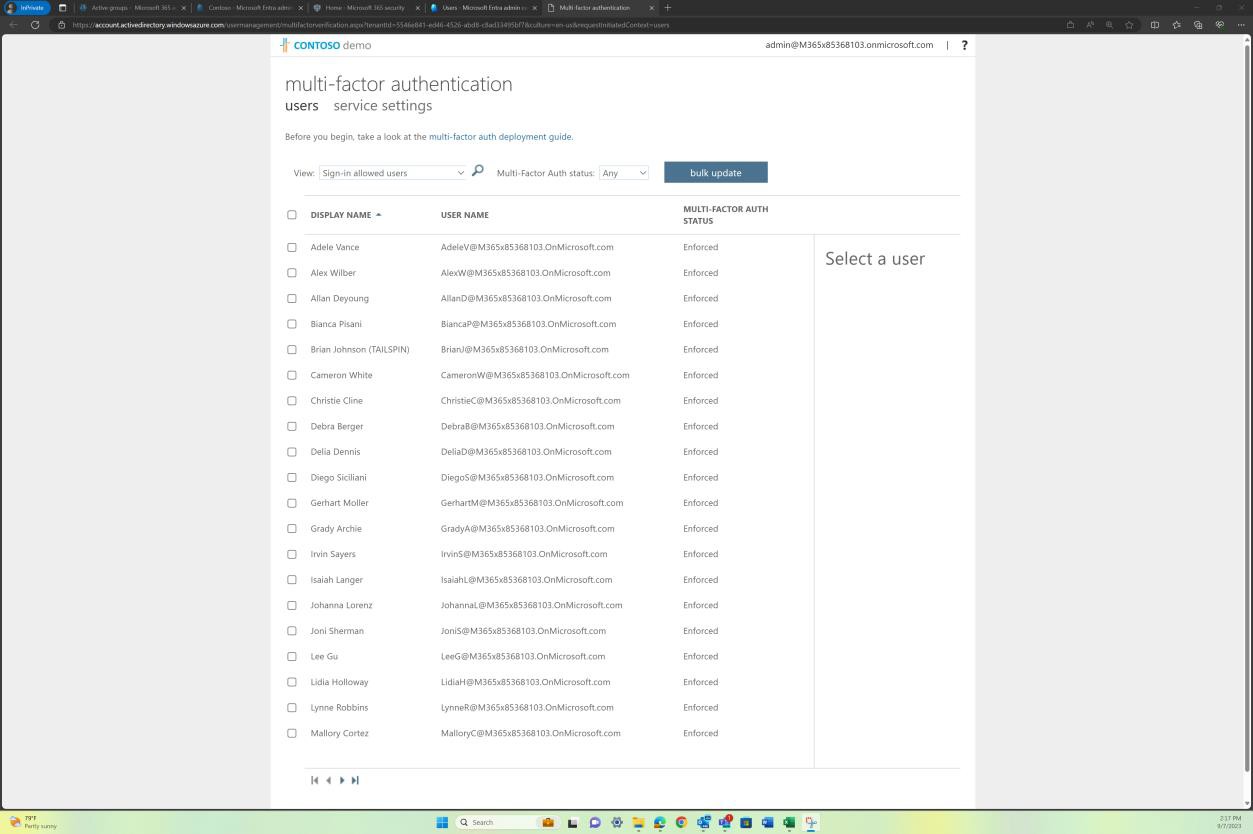

Example evidence: MFA

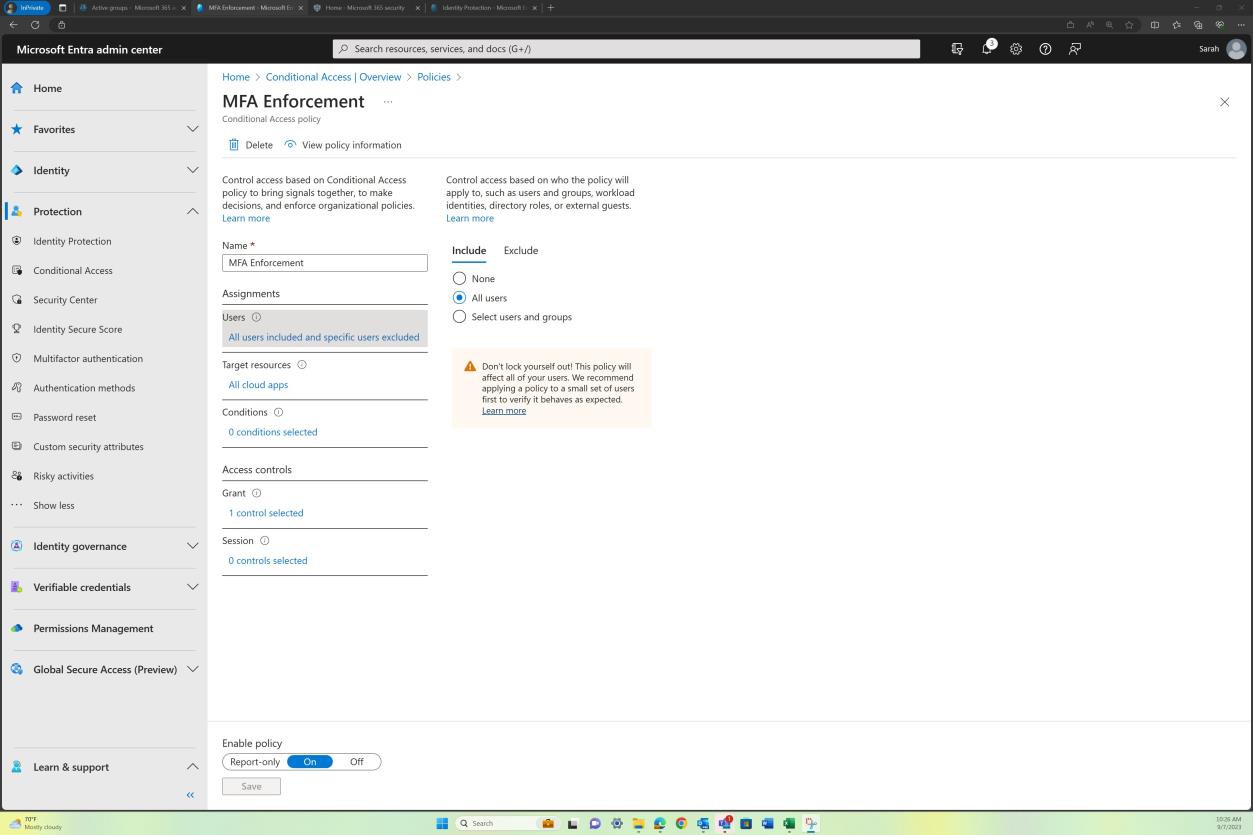

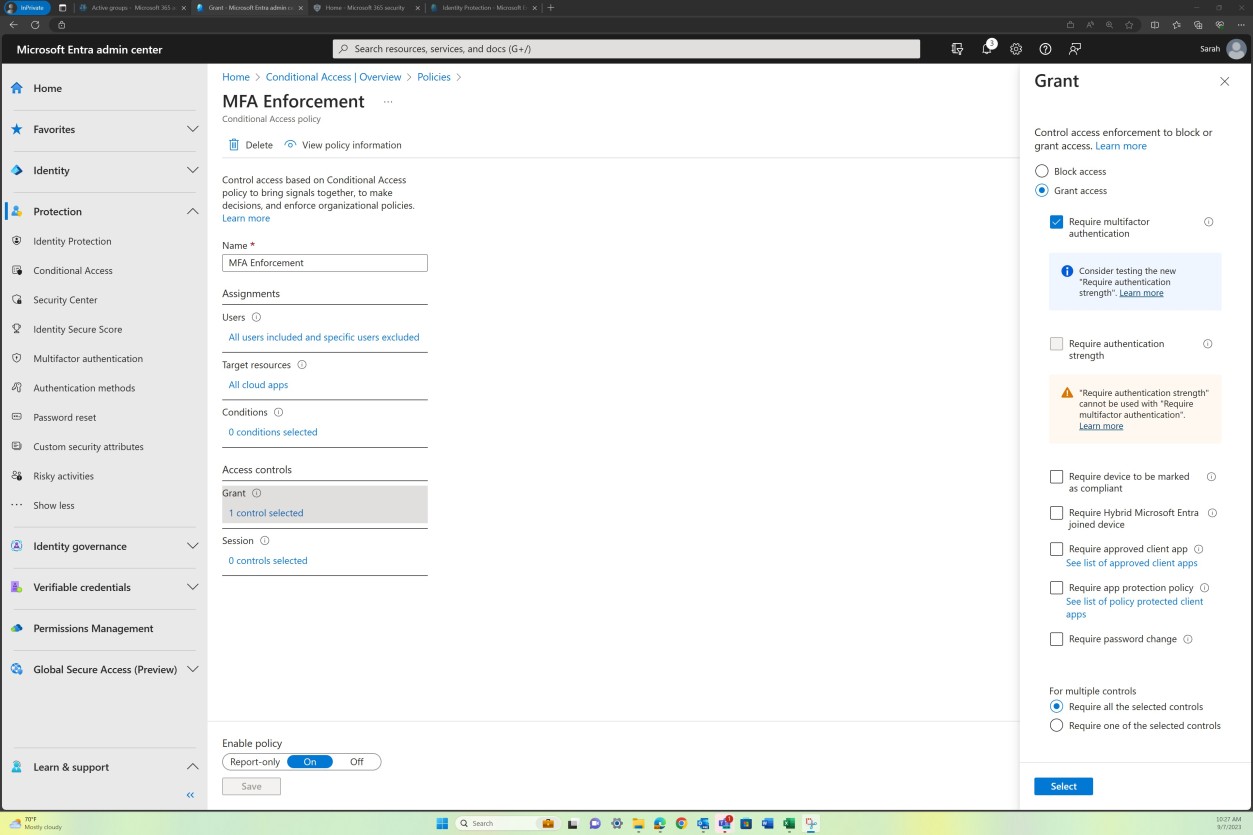

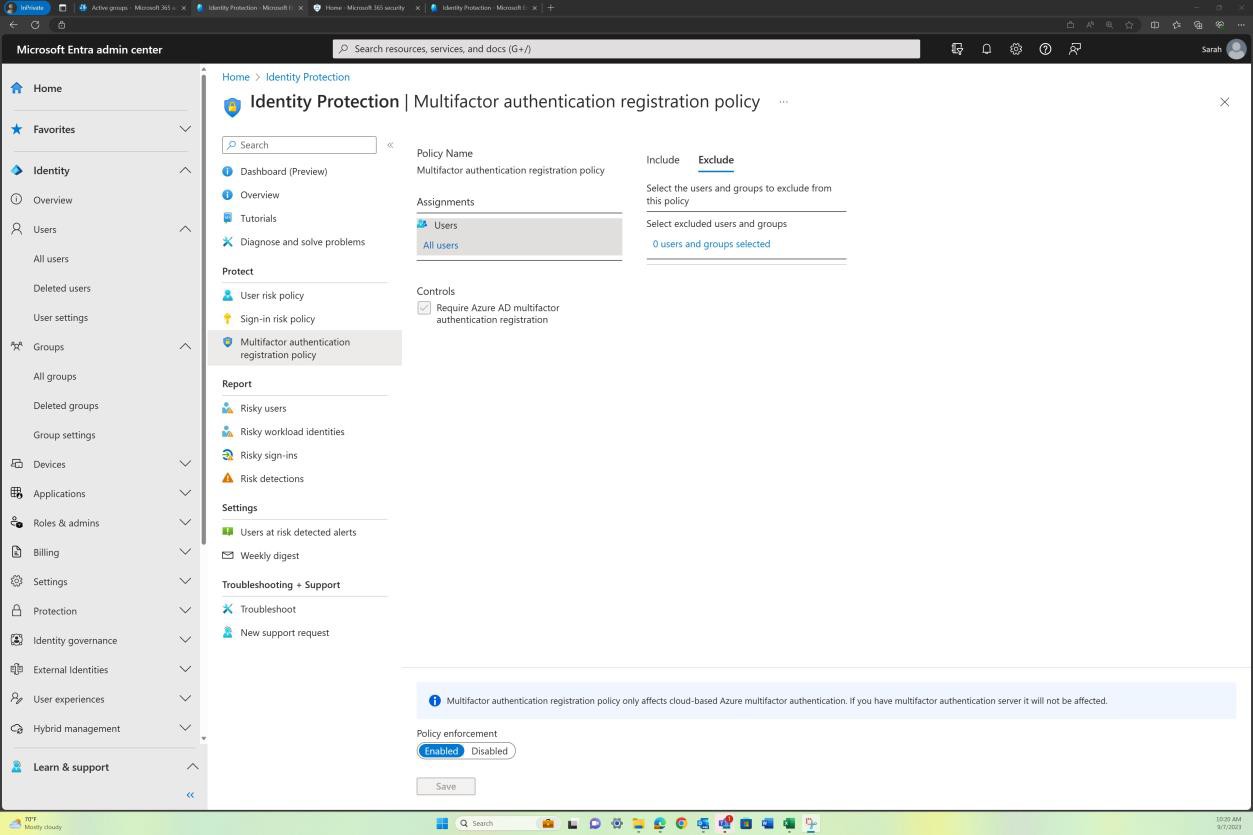

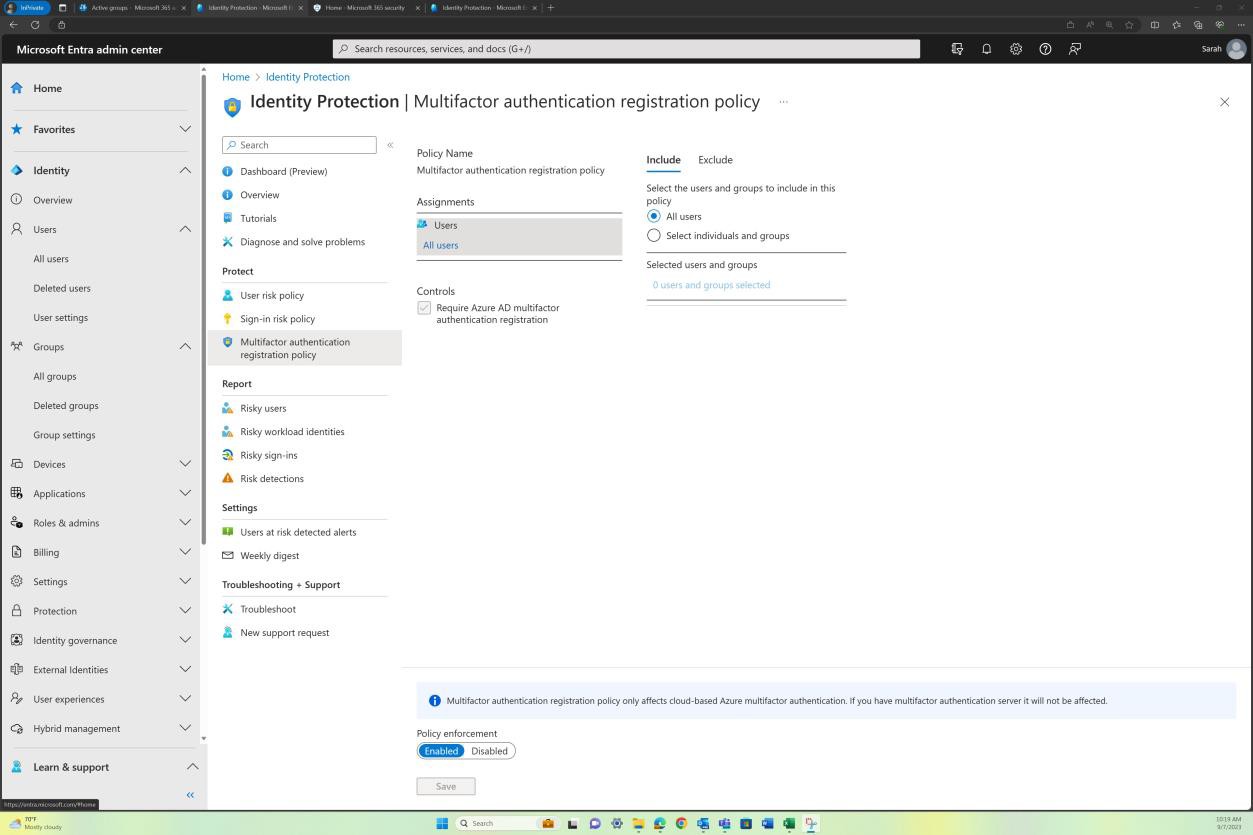

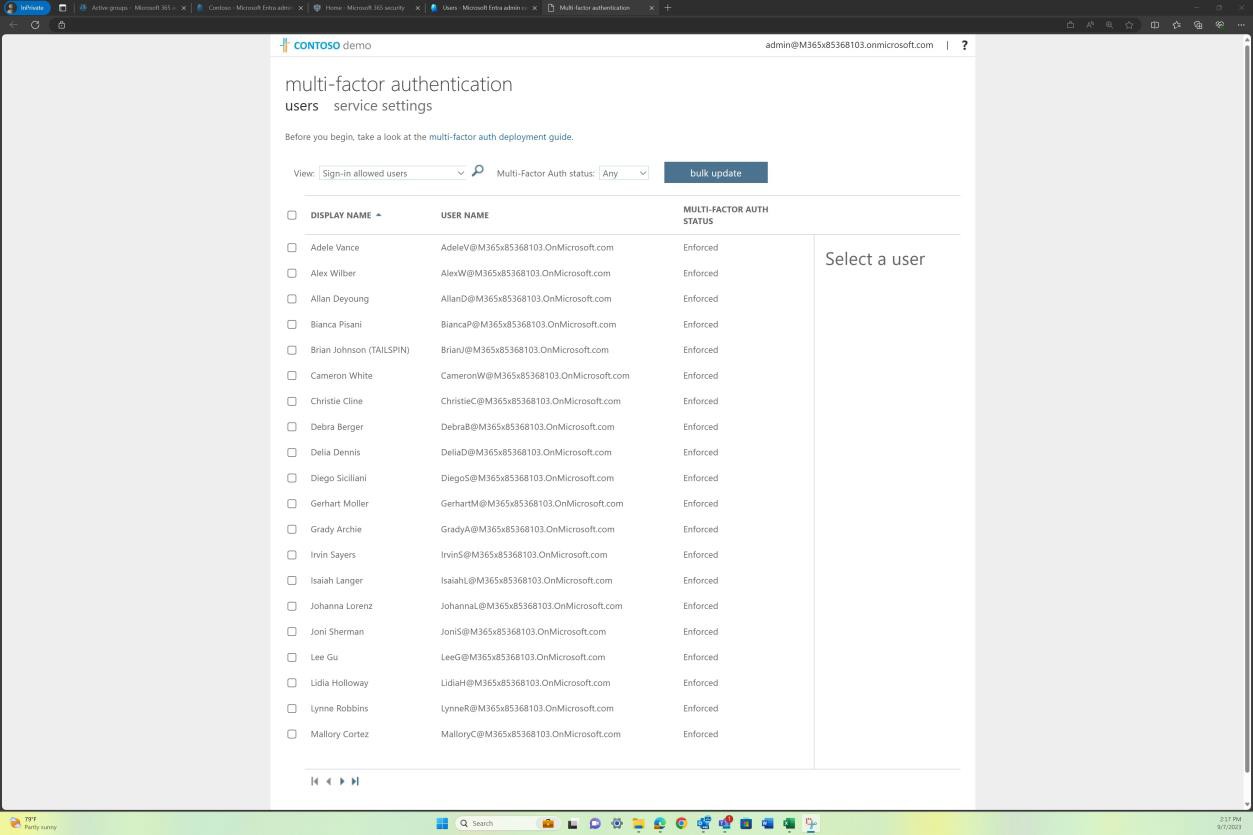

If the code repositories are stored and maintained in Azure DevOps, then depending on how MFA was setup at the tenant level, evidence can be provided from AAD e.g., “Per user MFA”. The next screenshot shows that MFA is enforced for all users in AAD, and this will also apply to Azure DevOps.

Example evidence: MFA

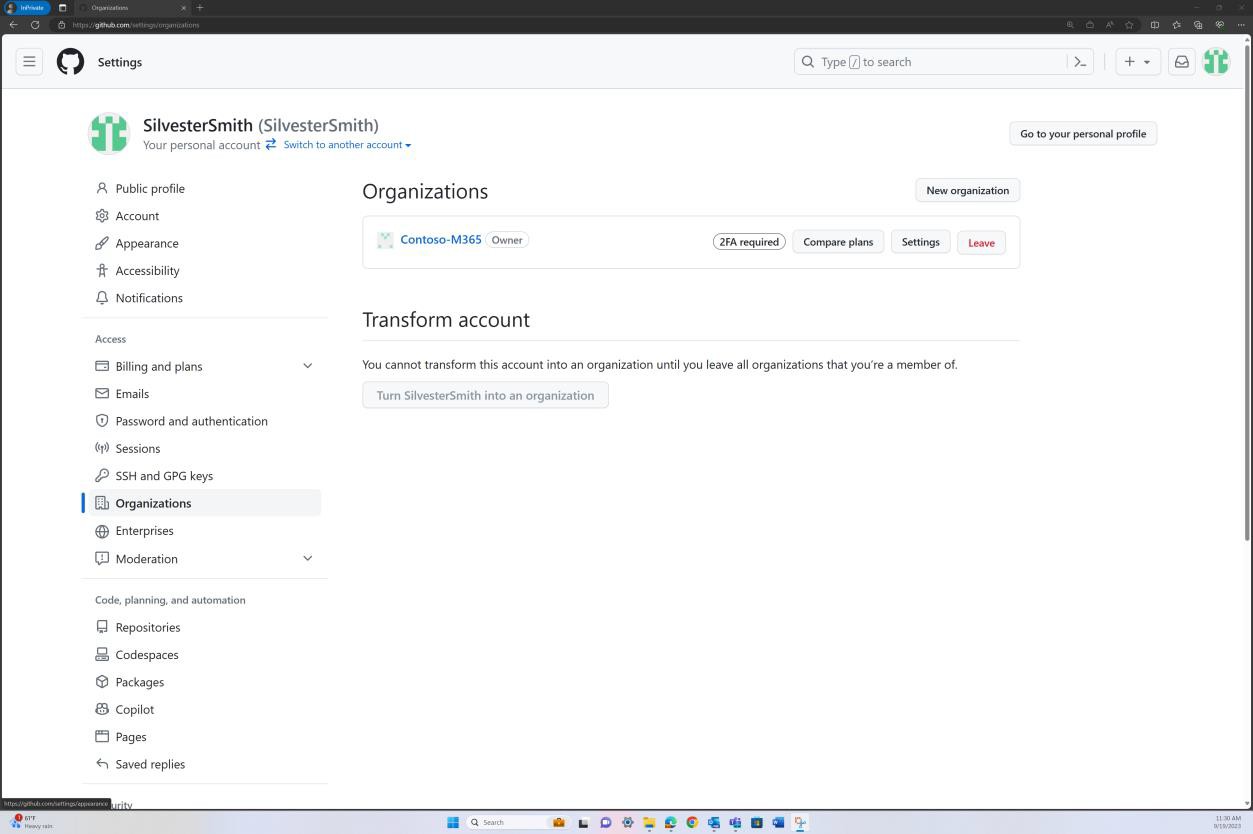

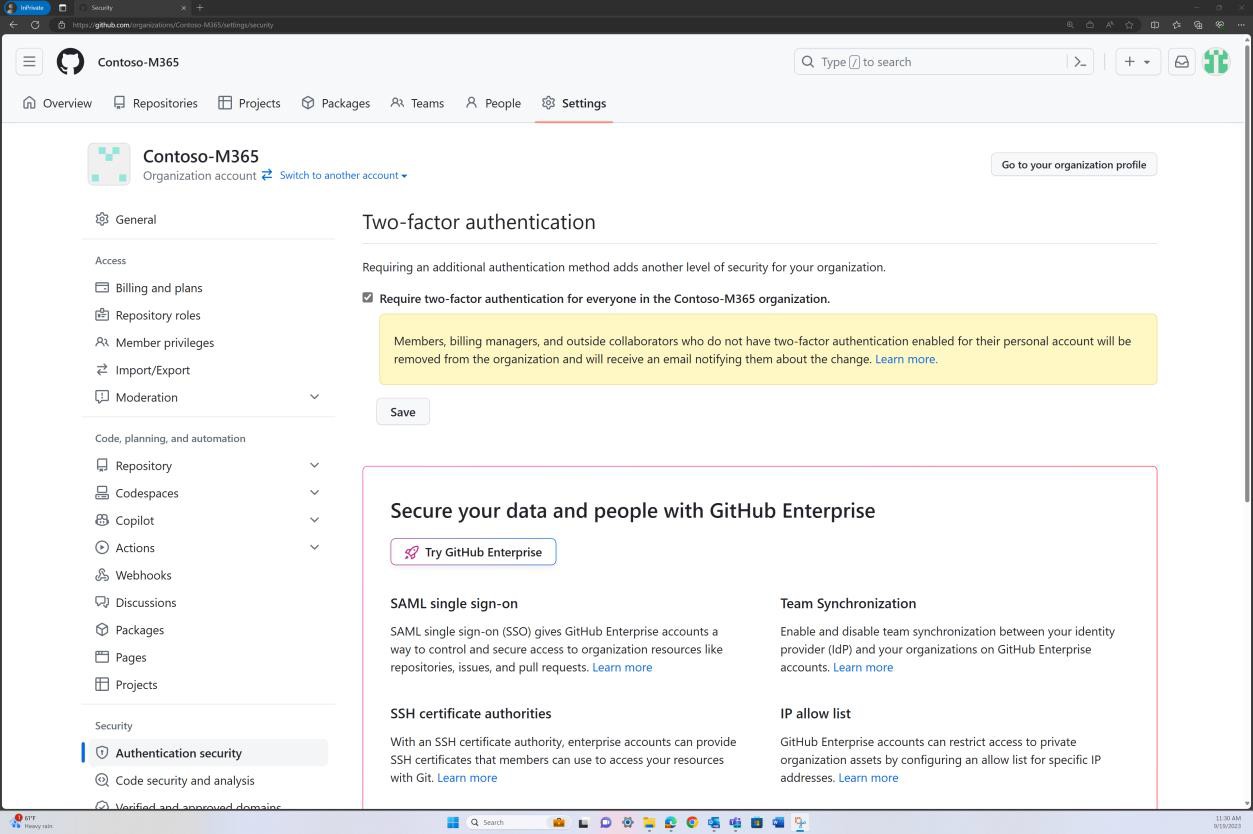

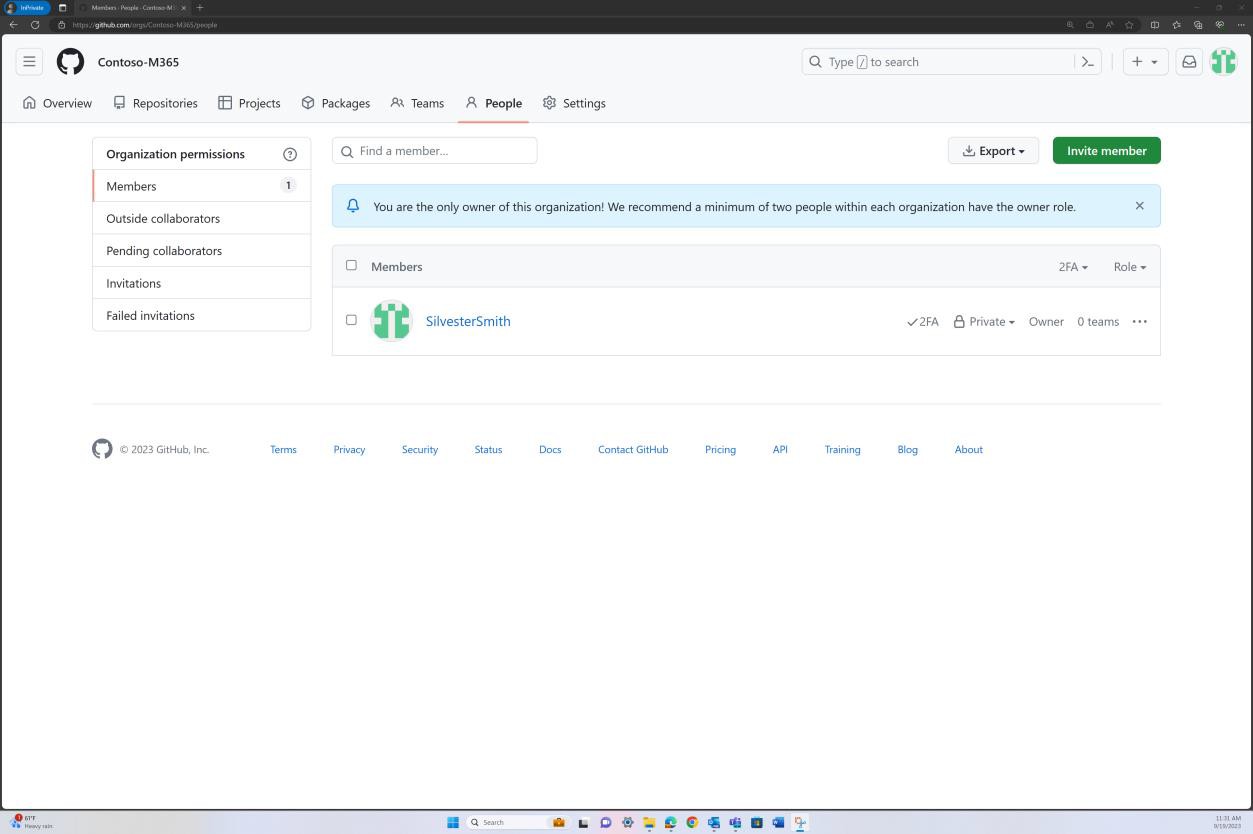

If the organization uses a platform such as GitHub, you can demonstrate that 2FA is enabled by sharing the evidence from the ‘Organization’ account as shown in the next screenshots.

To view whether 2FA is enforced for all members of your organization on GitHub, you can navigate to the organization’s settings tab, as in the next screenshot.

Navigating to the “People” tab in GitHub, it can be established that “2FA” is enabled for all users within the organization as shown in the next screenshot.

Intent: reviews

The intent with this control is to perform a review of the release into a development environment by another developer to help identify any coding mistakes, as well as misconfigurations which could introduce a vulnerability. Authorization should be established to ensure release reviews are carried out, testing is done, etc. prior to deployment in production. The authorization. step can validate that the correct processes have been followed which underpins the SDLC principles.

Guidelines

Provide evidence that all releases from the test/development environment into the production environment are being reviewed by a different person/developer than the initiator. If this is achieved via a Continuous Integration/Continuous Deployment pipeline, then evidence supplied must show (same as with code reviews ) that reviews are enforced.

Example evidence

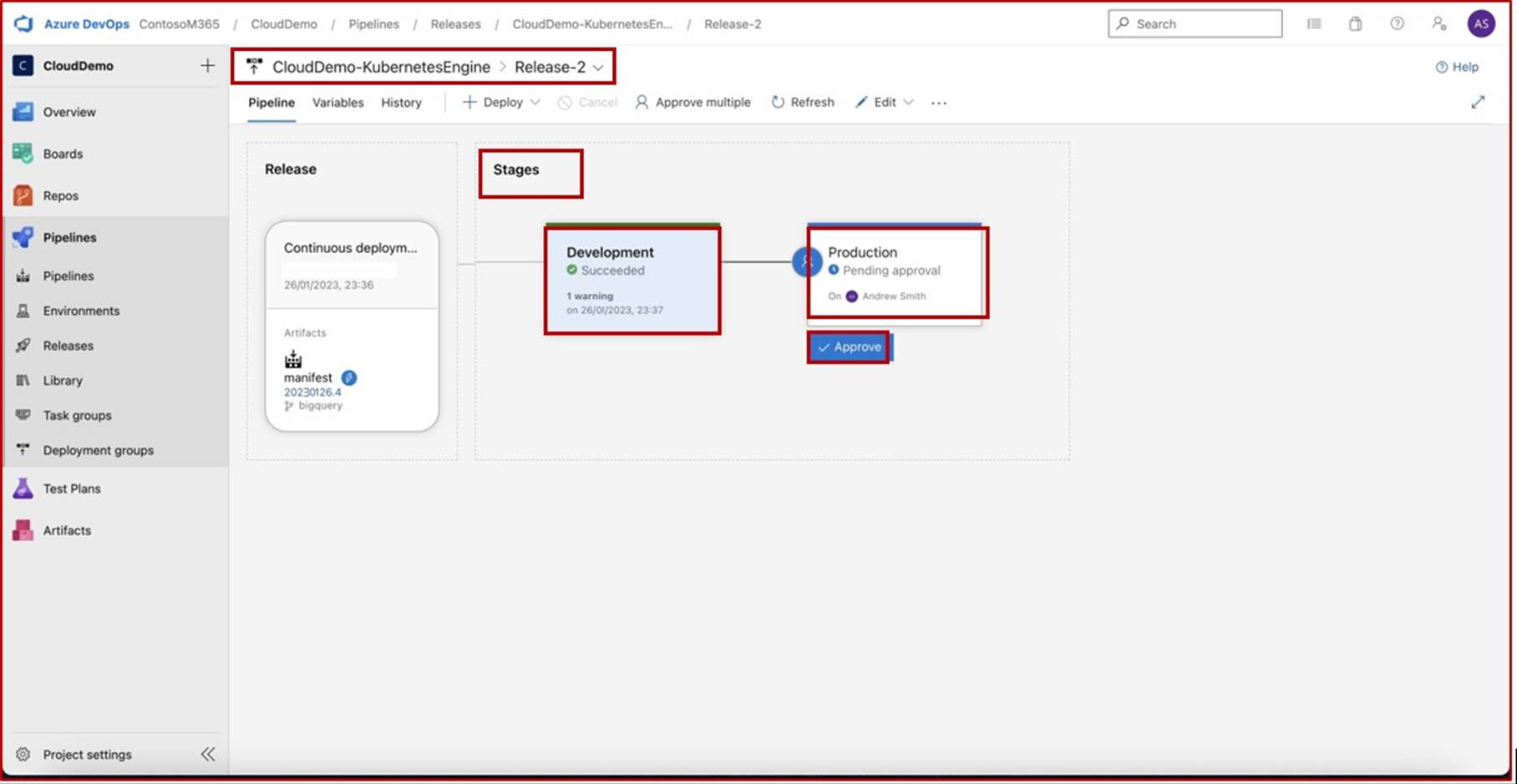

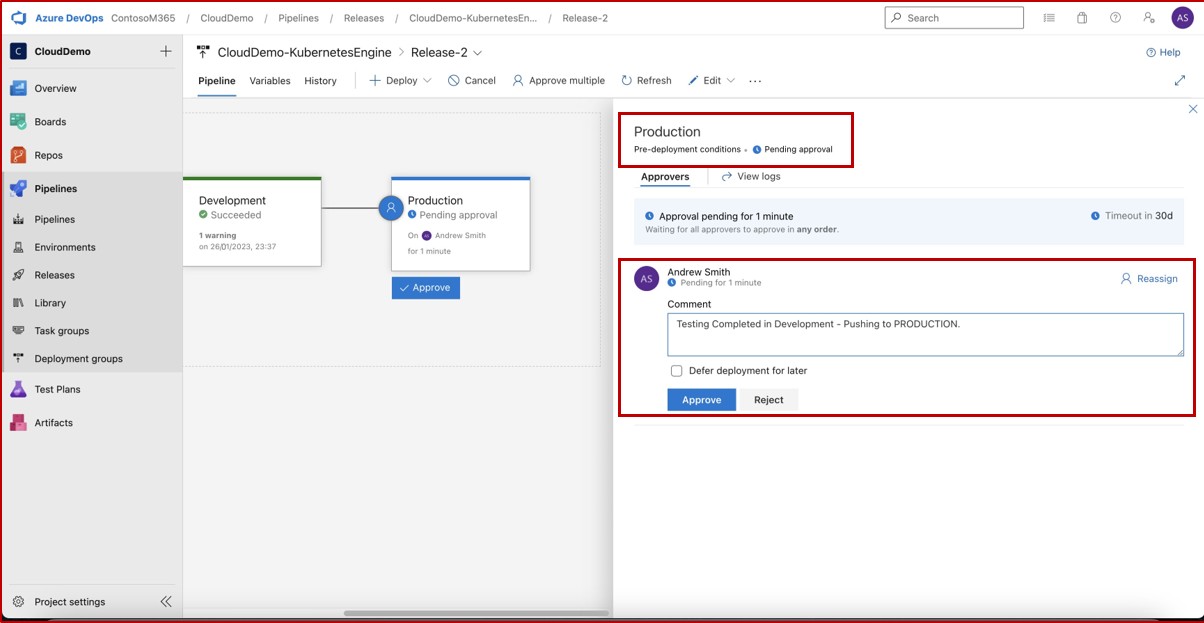

In the next screenshot we can see that a CI/CD pipeline is in use in Azure DevOps, the pipeline contains two stages: Development and Production. A release was triggered and successfully deployed into the Development environment but has not yet propagated into the second stage (Production) and that is pending approval from Andrew Smith.

The expectation is that once deployed to development, security testing occurs by the relevant team and only when the assigned individual with the right authority to review the deployment has performed a secondary review, and is satisfied that all conditions are met, will then grant approval which will allow the release to be made into production.

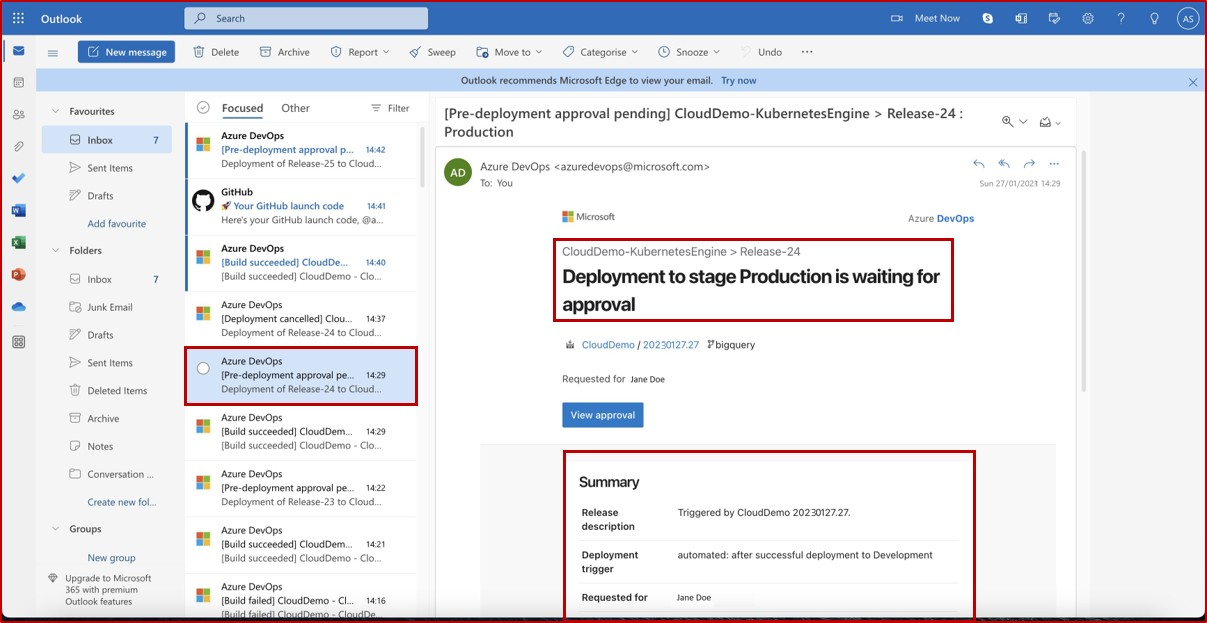

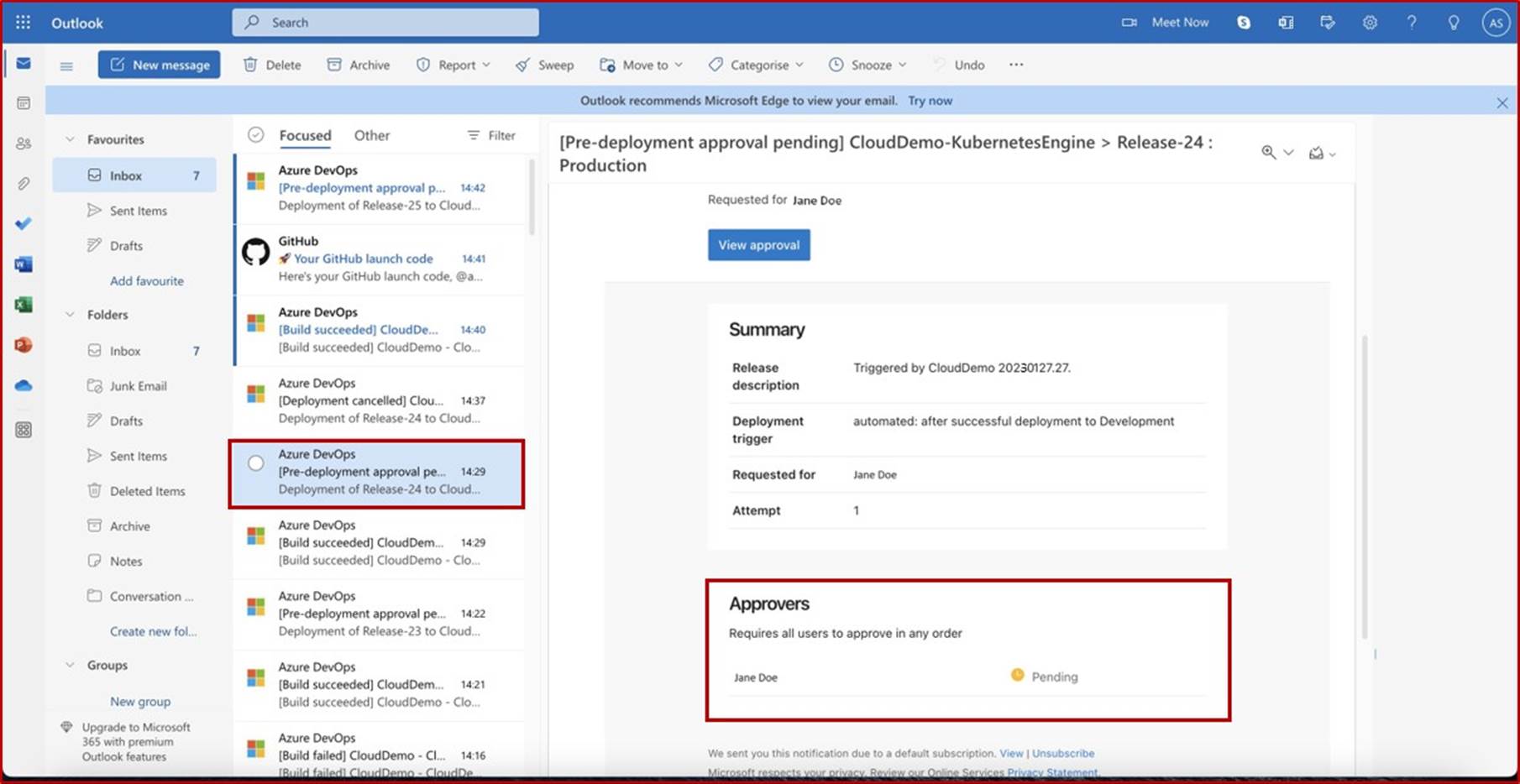

The email alert that would normally be received by the assigned reviewer informing that a pre-deployment condition was triggered and that a review and approval is pending.

Using the email notification, the reviewer can navigate to the release pipeline in DevOps and grant approval. We can see following that comments are added justifying the approval.

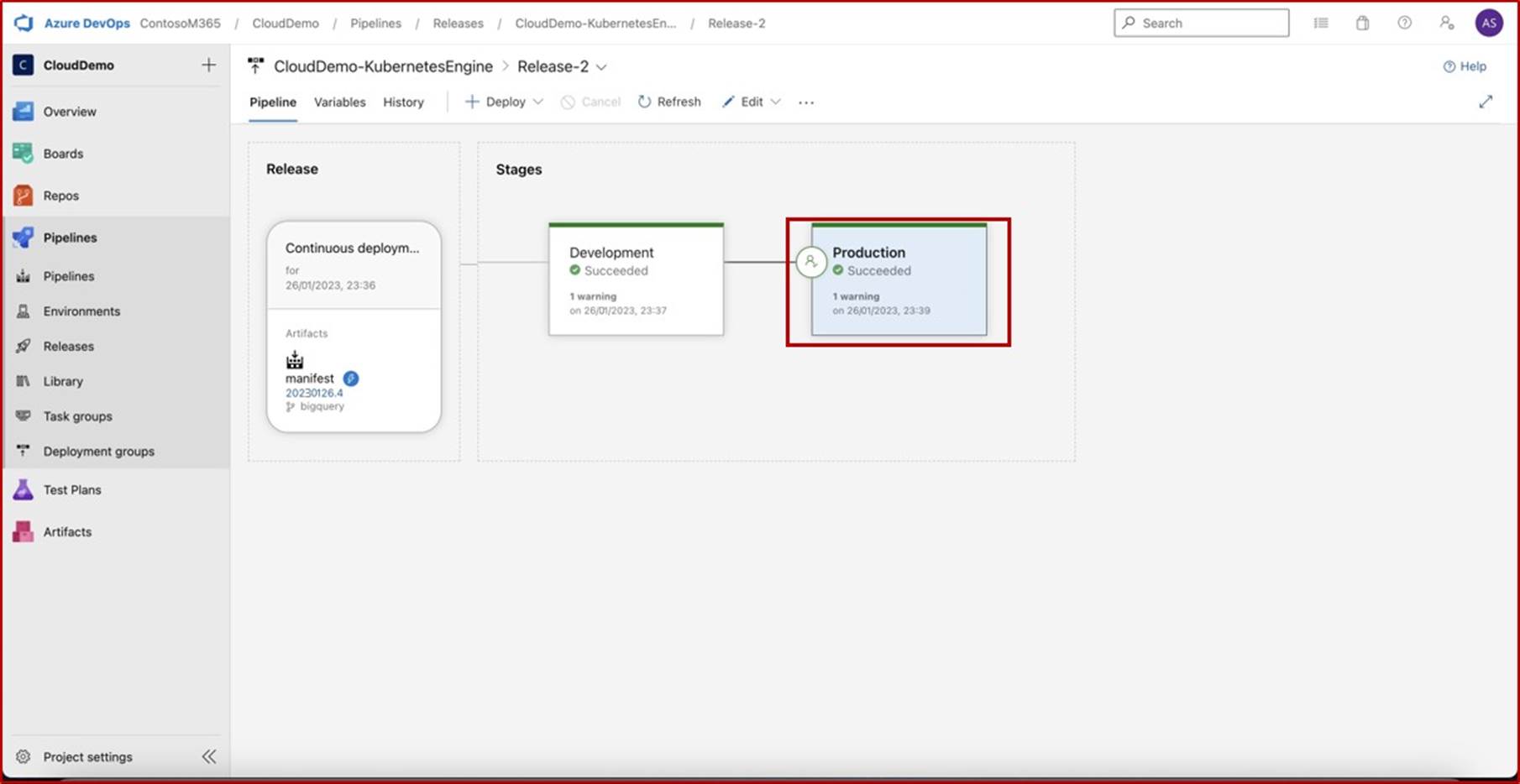

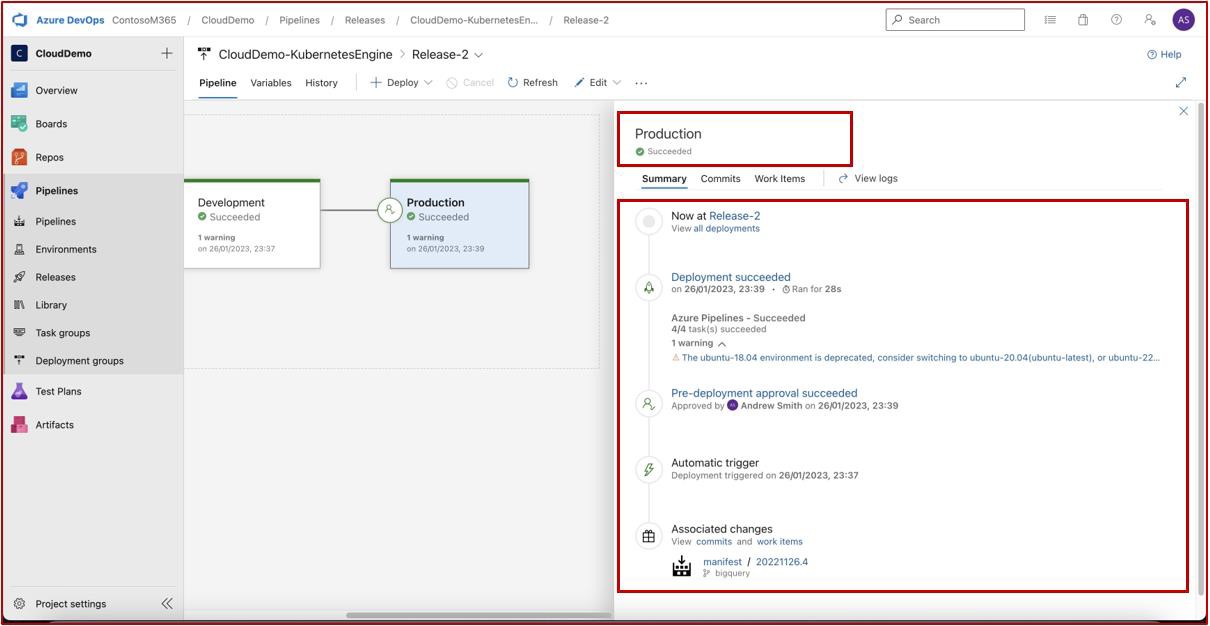

In the second screenshot it is shown that the approval was granted and that the release into production was successful.

The next two screenshots show a sample of the evidence that would be expected.

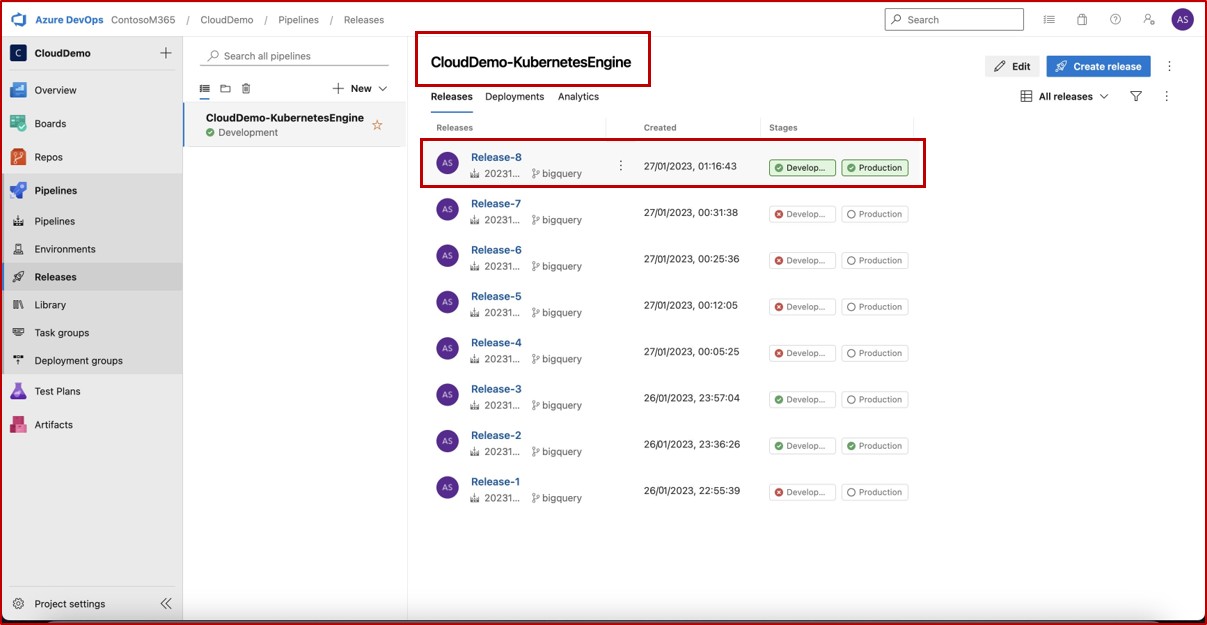

The evidence shows historical releases and that pre-deployment conditions are enforced, and a review and approval are required before deployment can be made to the Production environment.

Next screenshot shows history of releases including the recent release which we can see was successful deployed on both development and production.

Note: In the previous examples a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Account management

Secure account management practices are important as user accounts form the basis of allowing access to information systems, system environments and data. User accounts need to be properly secured as a compromise of the user's credentials can provide not only a foothold into the environment and access to sensitive data but may also provide administrative control over the entire environment or key systems if the user's credentials have administrative privileges.

Control No. 14

Provide evidence that:

Default credentials are either disabled, removed, or changed across the sampled system components.

A process is in place to secure (harden) service accounts and that this process has been followed.

Intent: default credentials

Although this is becoming less popular, there are still instances where threat actors can leverage default and well documented user credentials to compromise production system components. A popular example of this is with Dell iDRAC (Integrated Dell Remote Access Controller). This system can be used to remotely manage a Dell Server, which could be leveraged by a threat actor to gain control over the Server’s operating system. The default credential of root::calvin is documented and can often be leveraged by threat actors to gain access to systems used by organizations. The intent of this control is to ensure that default credentials are either disabled or removed to enhance security.

Guidelines: default credentials

There are various ways in which evidence can be collected to support this control. Screenshots of configured users across all system components can help, i.e., screenshots of the Windows default accounts through command prompt (CMD) and for Linux /etc/shadow and /etc/passwd files will help to demonstrate if accounts have been disabled.

Example evidence: default credentials

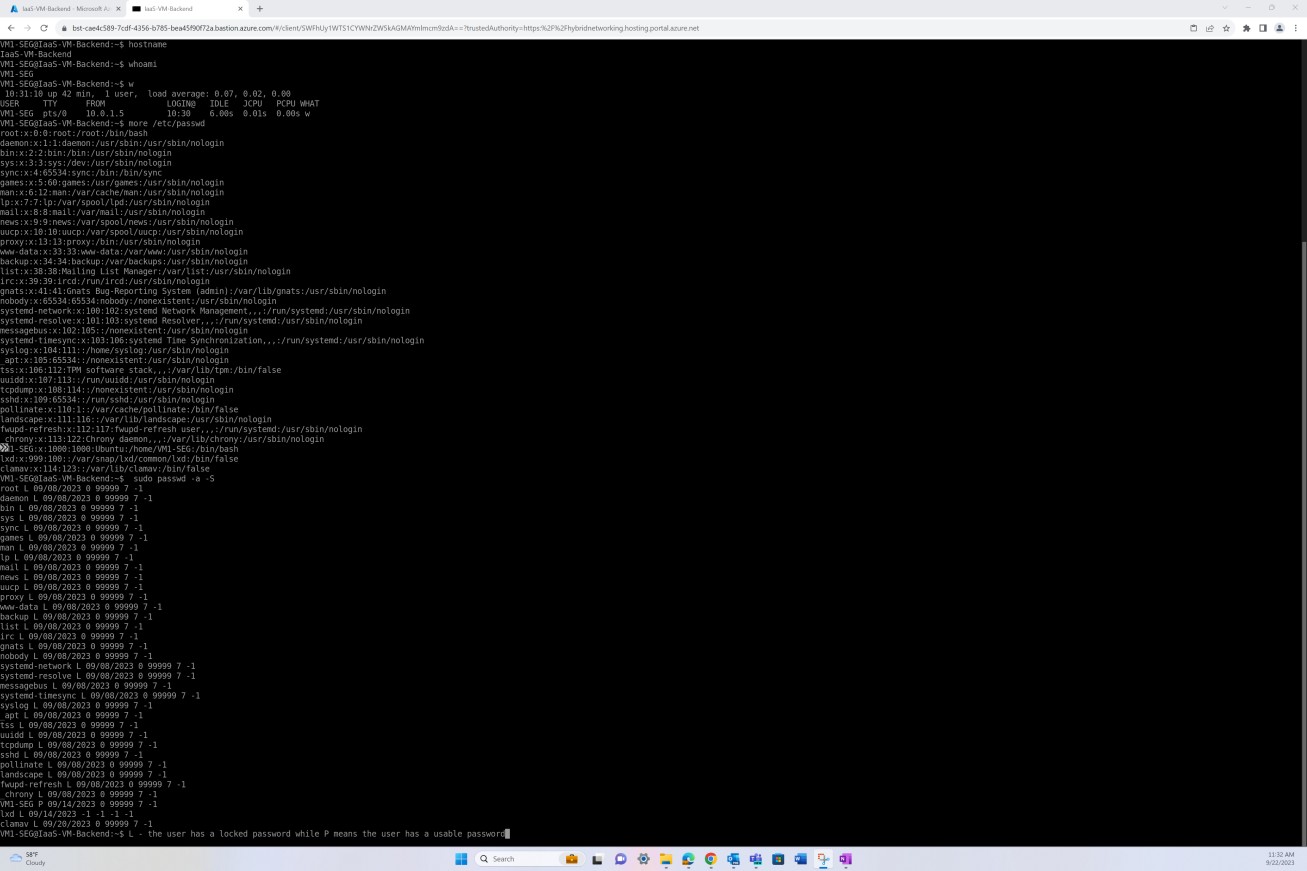

The next screenshot shows the /etc/shadow file to demonstrate that default accounts have a locked password, and the new created/active accounts have a usable password.

Note, that the /etc/shadow file would be needed to demonstrate accounts are truly disabled by observing that the password hash starts with an invalid character such as '!' indicating that the password is unusable. In the next screenshots the “L” means lock the password of the named account. This option disables a password by changing it to a value which matches no possible encrypted value (it adds a! at the beginning of the password). The “P” means it is a usable password.

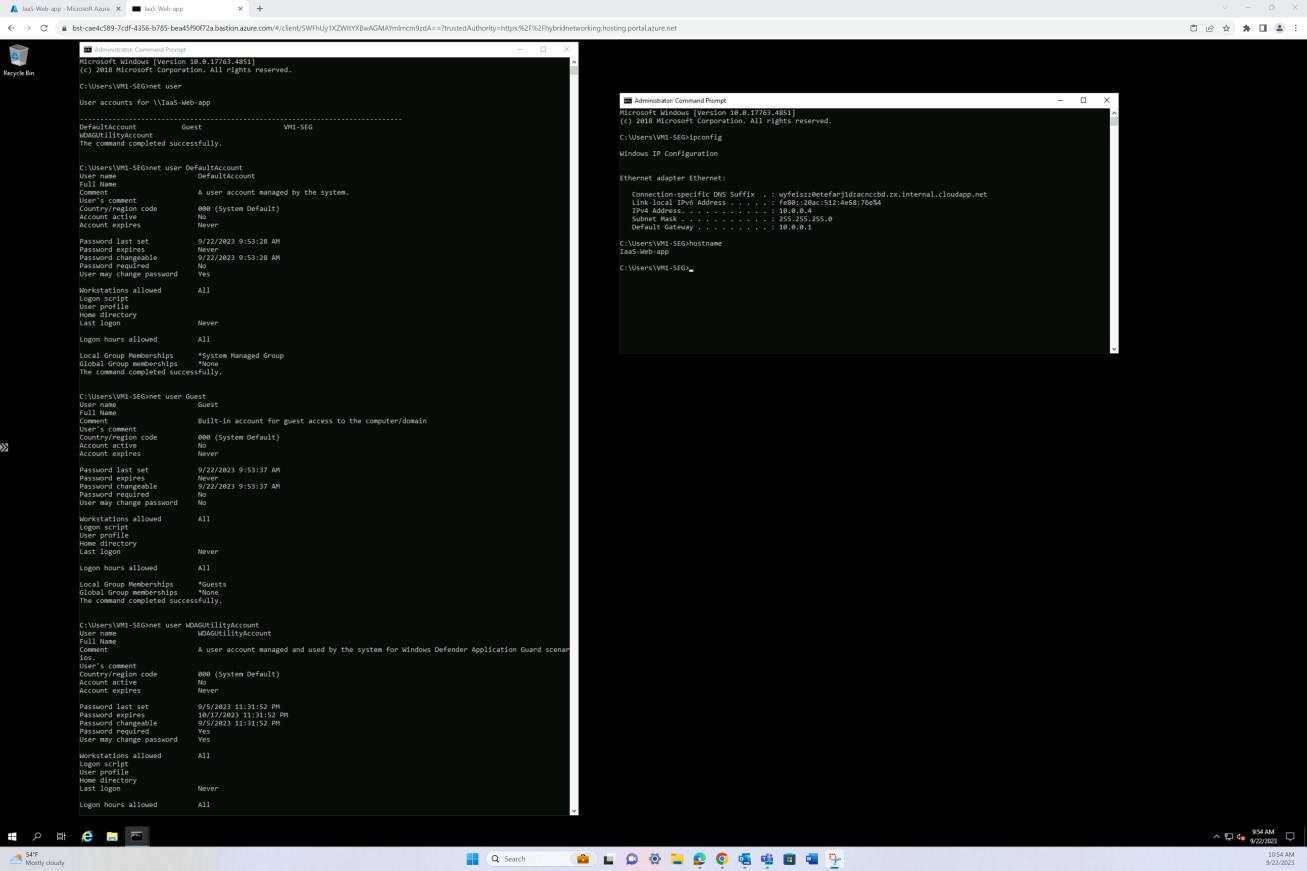

The second screenshot demonstrates that on the Windows server, all default accounts have been disabled. By using the ‘net user’ command on a terminal (CMD), you can list details of each of the existing accounts, observing that all these accounts are not active.

By using the net user command in CMD, you can display all accounts and observe that all default accounts are not active.

Intent: default credentials

Service accounts will often be targeted by threat actors because they are often configured with elevated privileges. These accounts may not follow the standard password policies because expiration of service account passwords often breaks functionality. Therefore, they may be configured with weak passwords or passwords that are reused within the organization. Another potential issue, particularly within a Windows environment, may be that the operating system caches the password hash. This can be a big problem if either:

the service account is configured within a directory service, since this account can be used access across multiple systems with the level of privileges configured, or

the service account is local, the likelihood is that the same account/password will be used across multiple systems within the environment.

The previous problems can lead to a threat actor gaining access to more systems within the environment and can lead to a further elevation of privilege and/or lateral movement. The intent therefore is to ensure that service accounts are properly hardened and secured to help protect them from being taken over by a threat actor, or by limiting the risk should one of these service accounts be compromised. The control requires that a formal process be in place for the hardening of these accounts, which may include restricting permissions, using complex passwords, and regular credential rotation.

Guidelines

There are many guides on the Internet to help harden service accounts. Evidence can be in the form of screenshots which demonstrate how the organization has implemented a secure hardening of the account. A few examples (the expectation is that multiple techniques would be used) include:

Restricting the accounts to a set of computers within Active Directory,

Setting the account so interactive sign in is not permitted,

Setting an extremely complex password,

For Active Directory, enable the "Account is sensitive and can't be delegated" flag.

Example evidence

There are multiple ways to harden a service account that will be dependent upon each individual environment. Following are some of the mechanisms that may be employed:

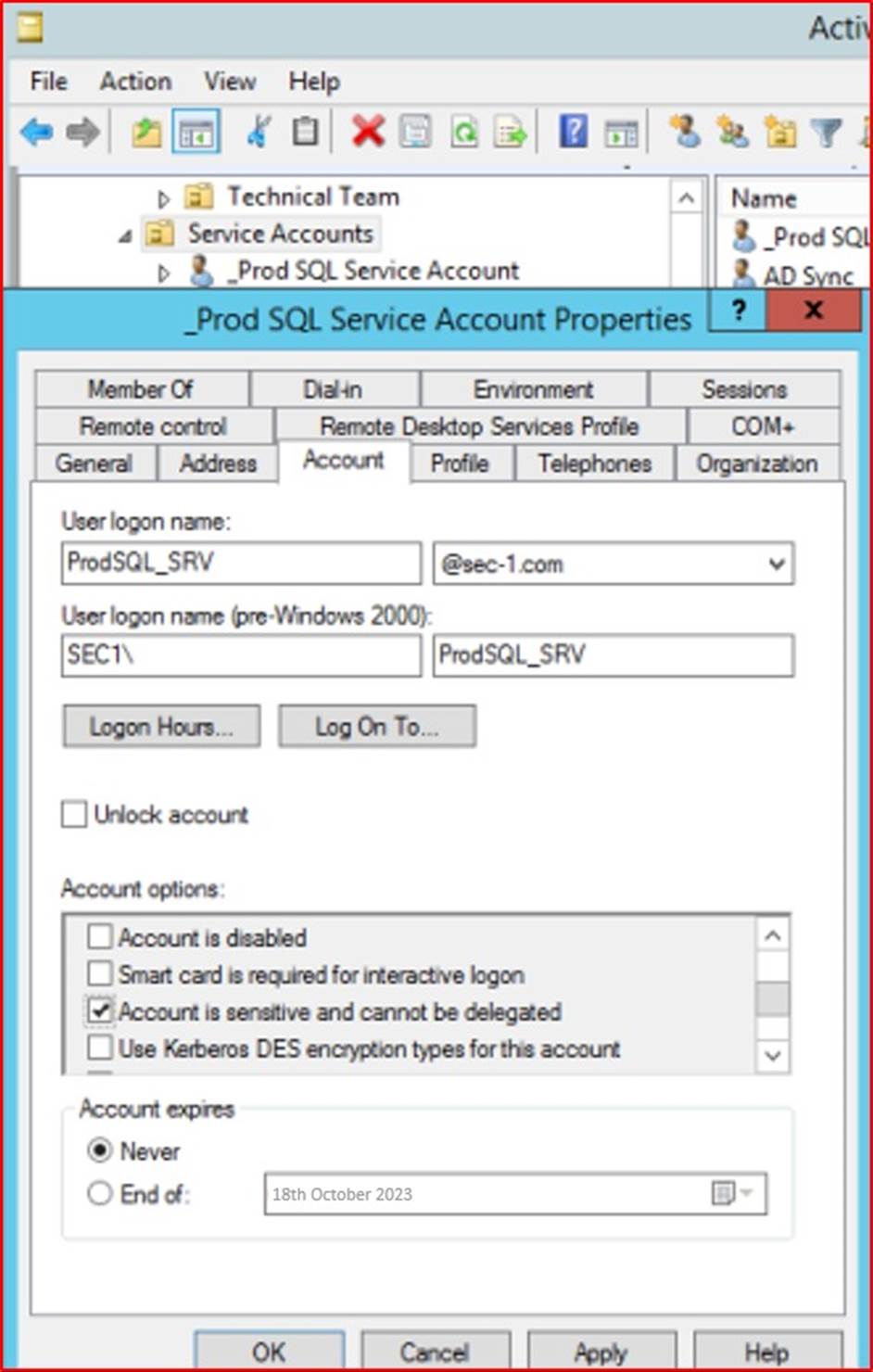

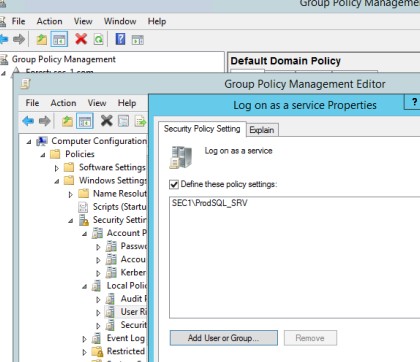

The next screenshot shows the 'Account is sensitive and connect be delegated' option is selected on the service account "_Prod SQL Service Account".

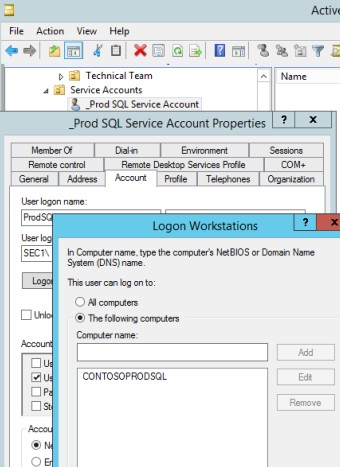

This second screenshot shows that the service account "_Prod SQL Service Account" is locked down to the SQL Server and can only sign in that server.

This next screenshot shows that the service account "_Prod SQL Service Account" is only allowed to sign in as a service.

Note: In the previous examples a full screenshot was not used, however ALL ISV submitted evidence screenshots must be full screenshots showing URL, any logged in user and system time and date.

Control No. 15

Provide evidence that:

Unique user accounts are issued to all users.

User least privilege principles are being followed within the environment.

A strong password/ passphrase policy or other suitable mitigations are in place.

A process is in place and followed at least every three months to either disable or delete accounts not used within 3 months.

Intent: secure service accounts

The intent of this control is accountability. By issuing users with their own unique user accounts, users will be accountable for their actions as user activity can be tracked to an individual user.

Guidelines: secure service accounts

Evidence would be by way of screenshots showing configured user accounts across the in-scope system components which may include servers, code repositories, cloud management platforms, Active Directory, Firewalls, etc.

Example evidence: secure service accounts

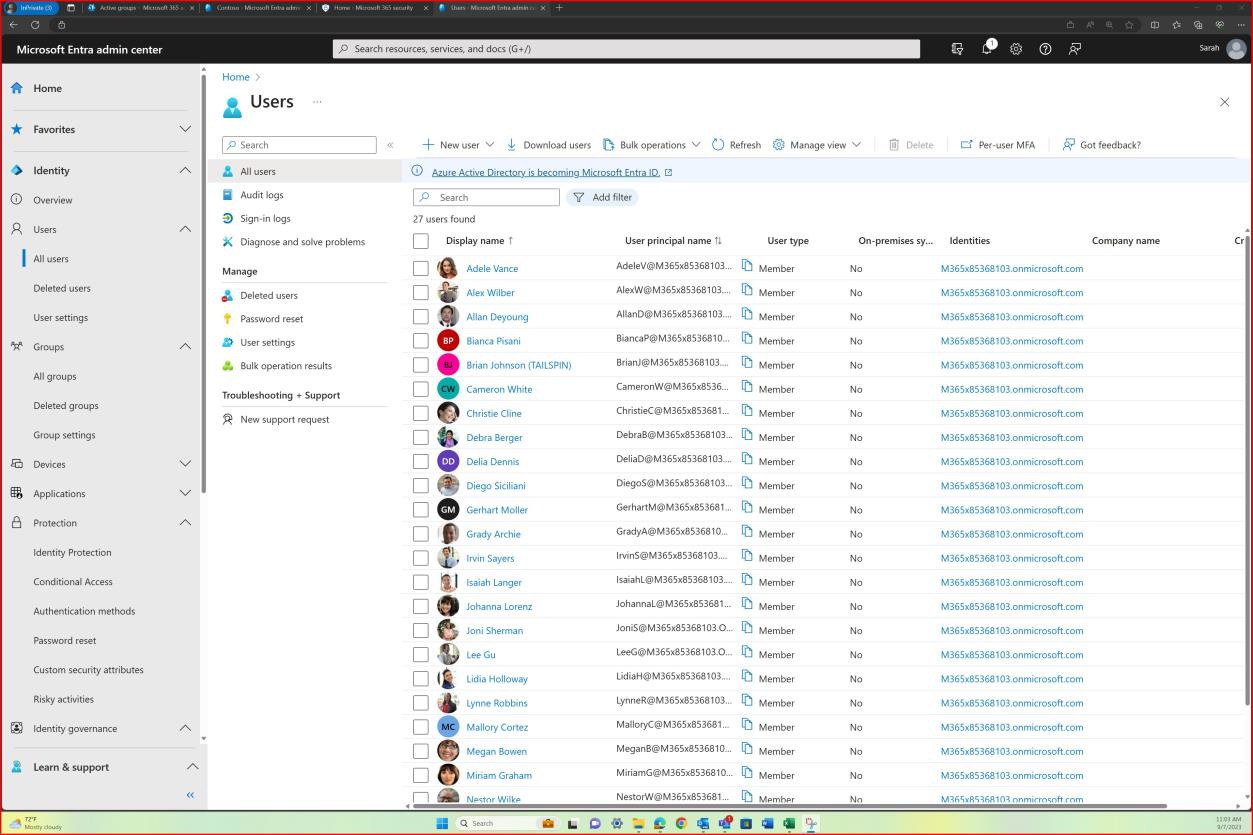

The next screenshot shows user accounts configured for the in-scope Azure environment and that all accounts are unique.

Intent: privileges

Users should only be provided with the privileges necessary to fulfil their job function. This is to limit the risk of a user intentionally or unintentionally accessing data they should not or carrying out a

malicious act. By following this principle, it also reduces the potential attack surface (i.e., privileged accounts) that can be targeted by a malicious threat actor.

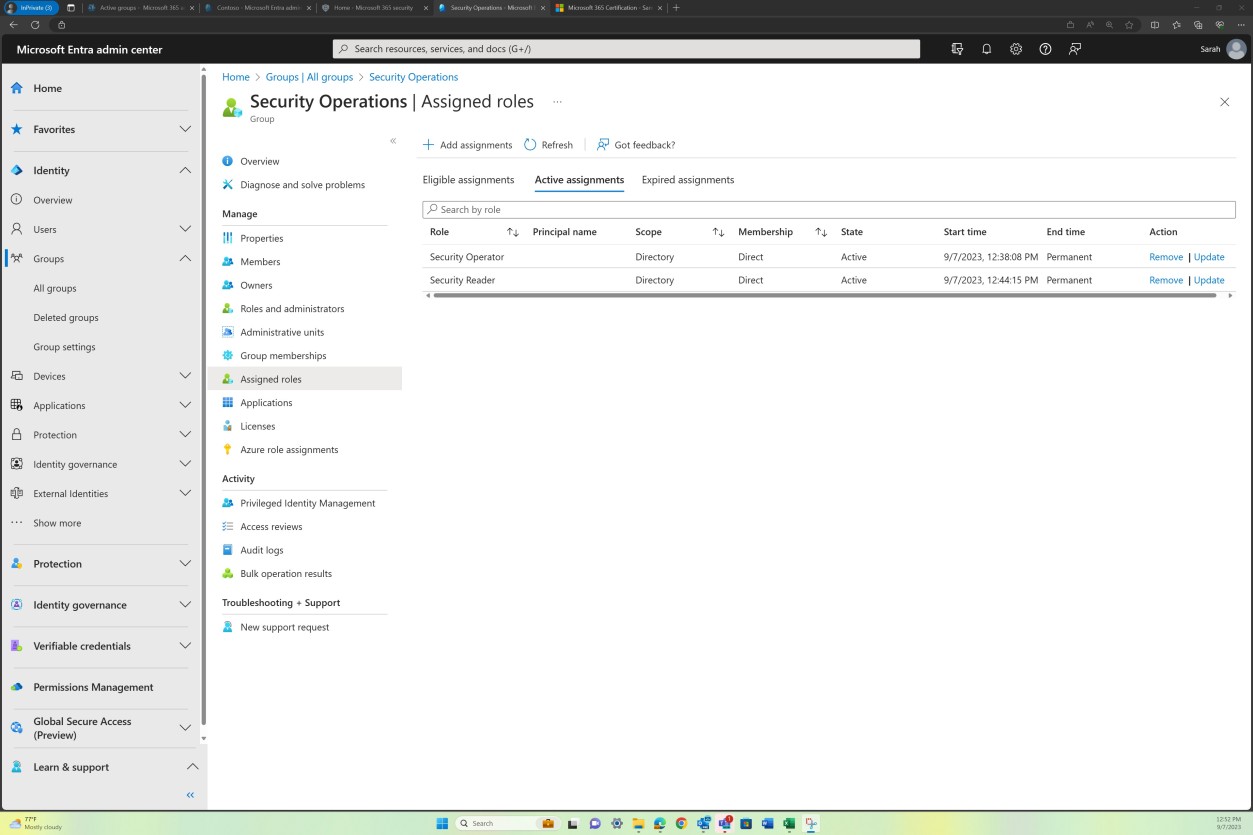

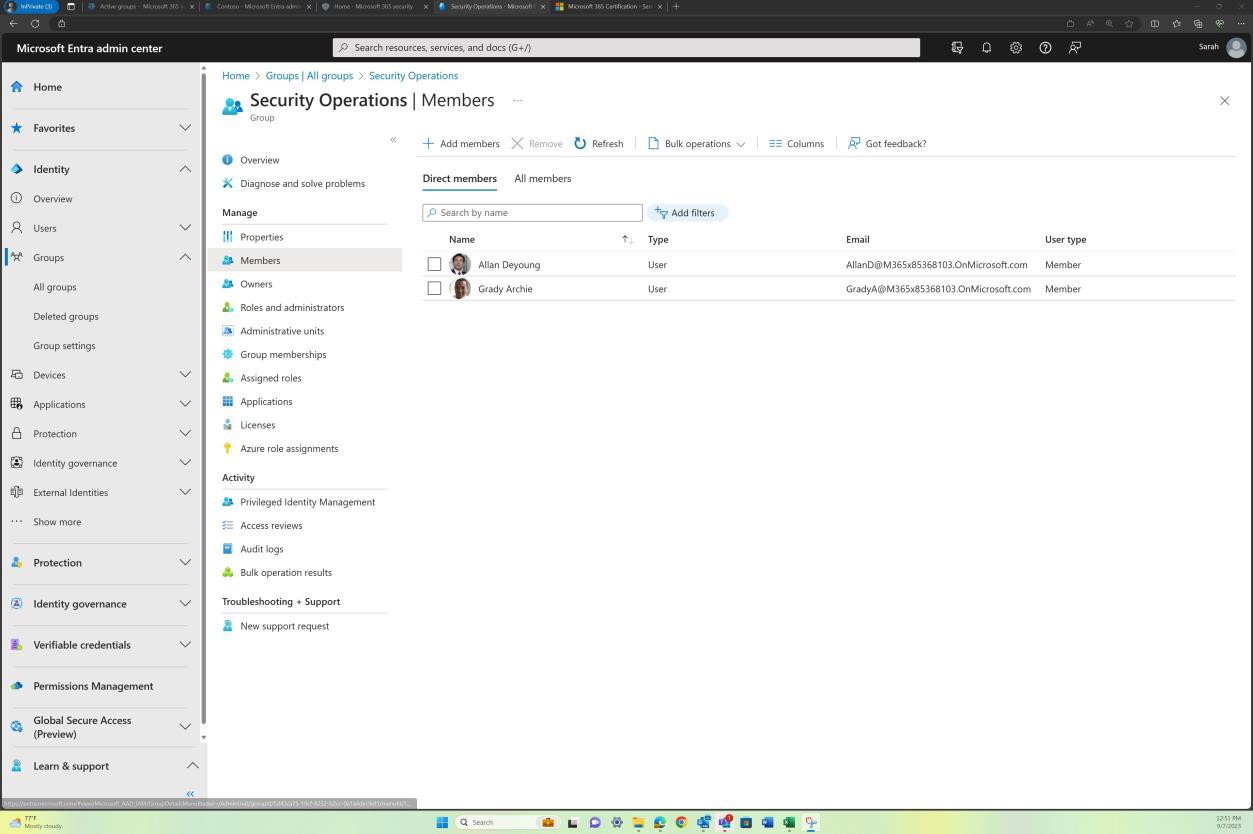

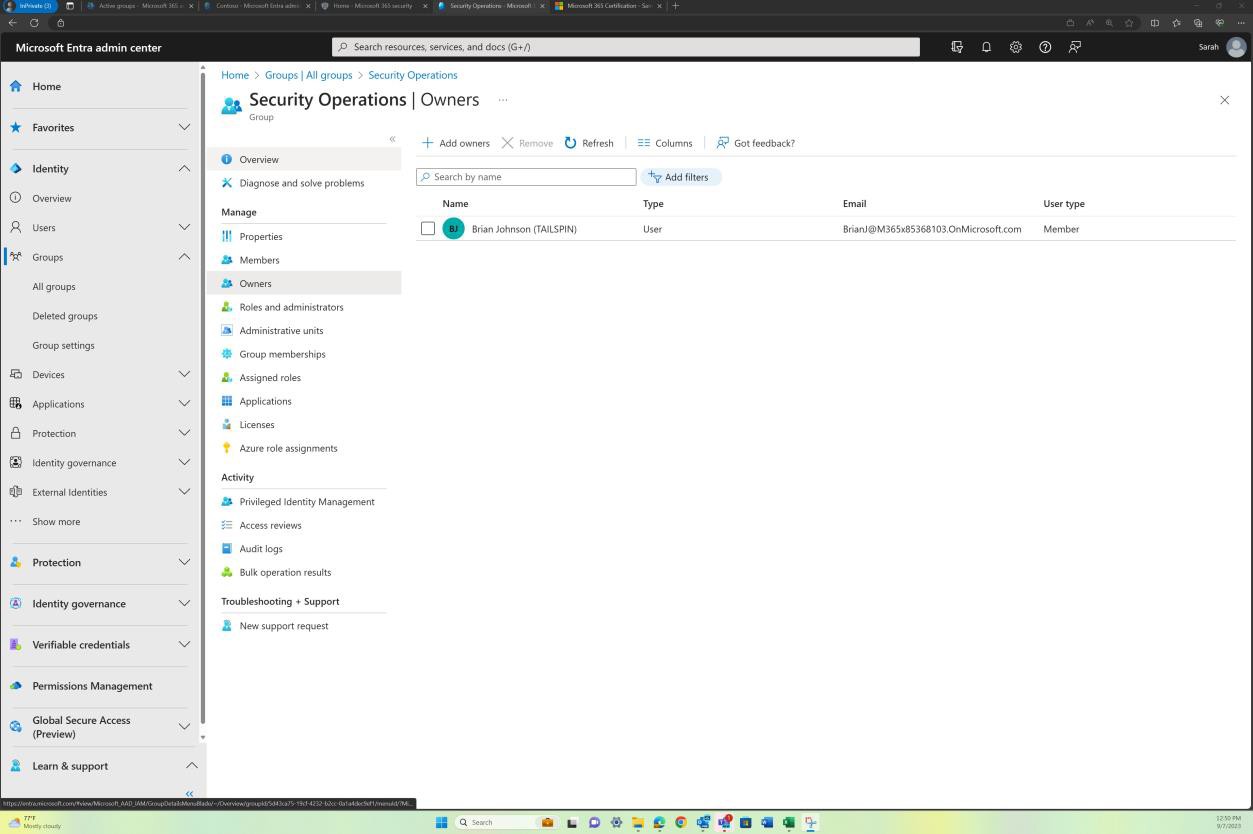

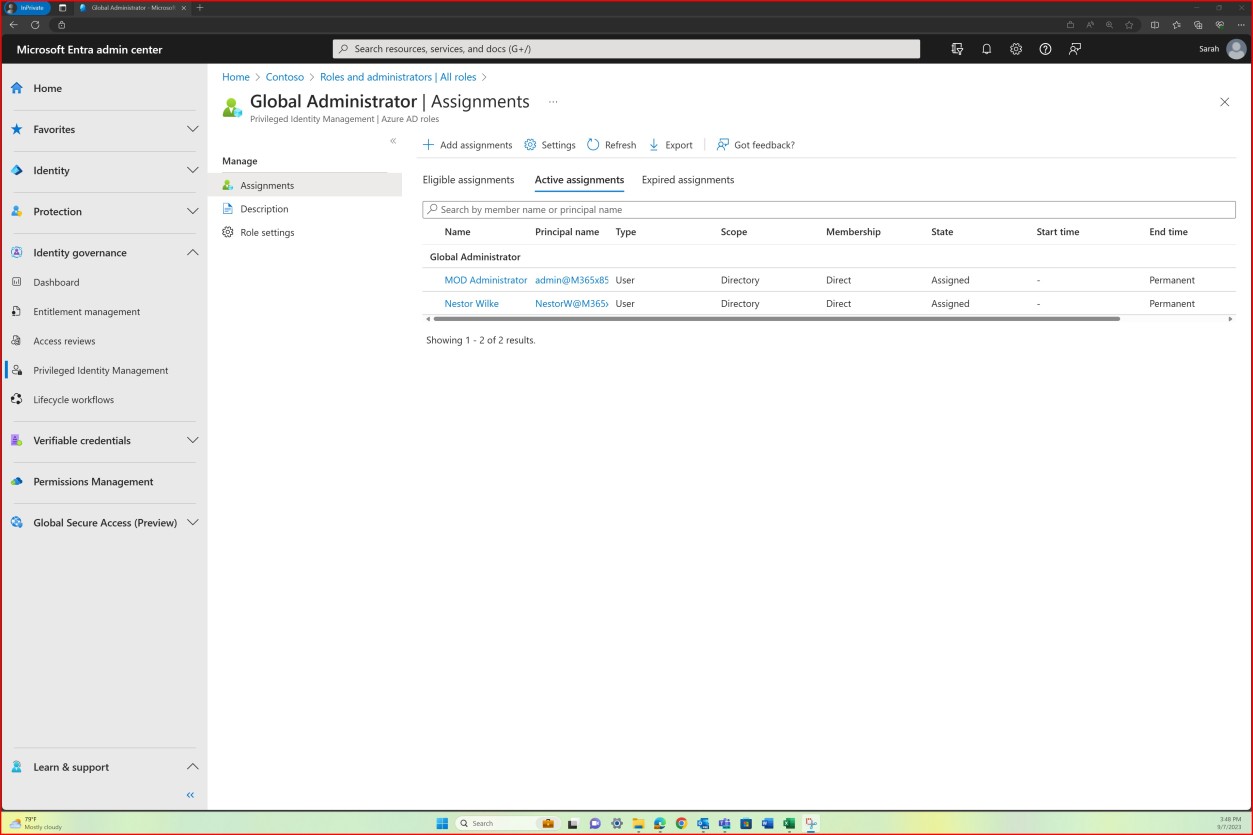

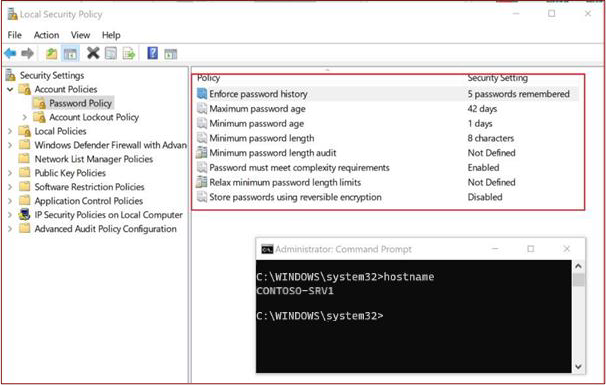

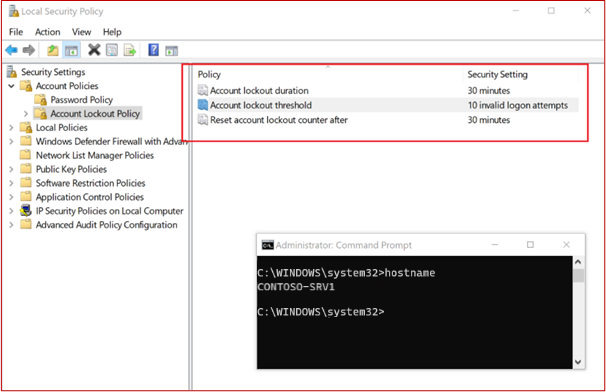

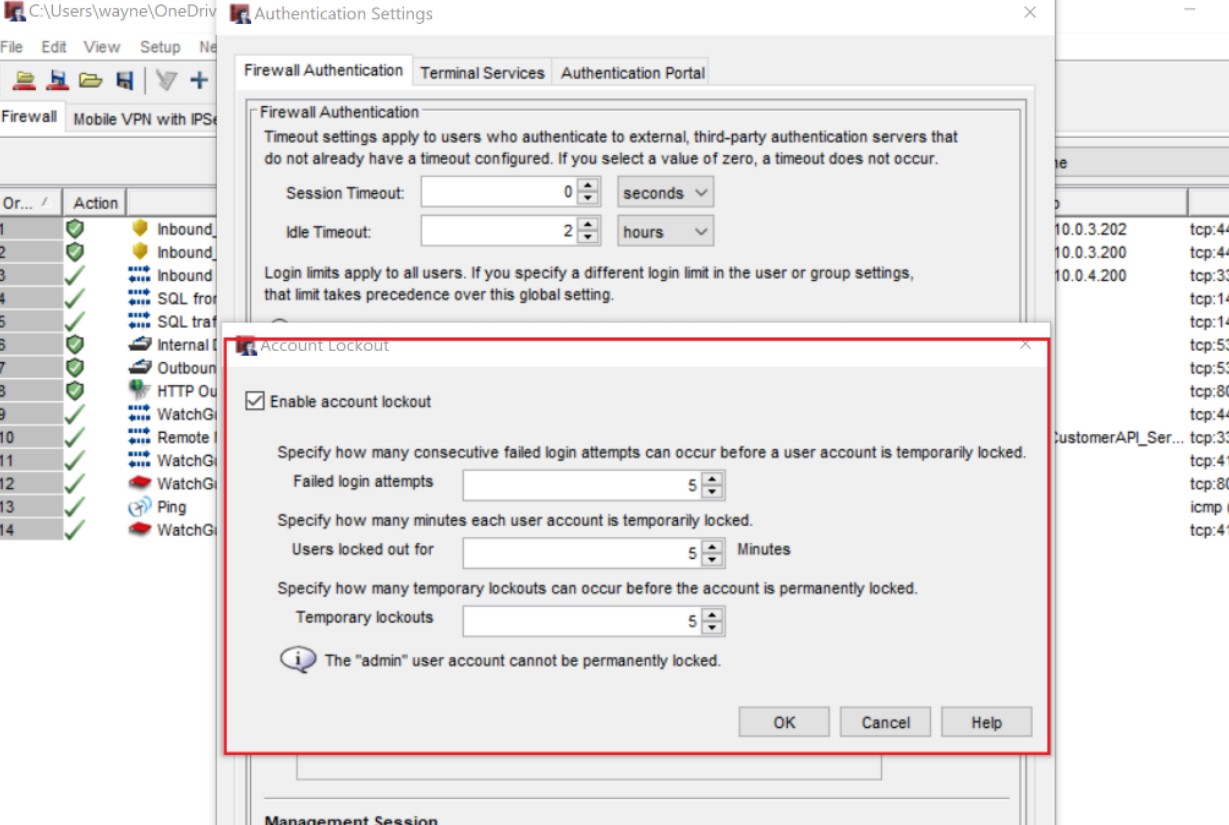

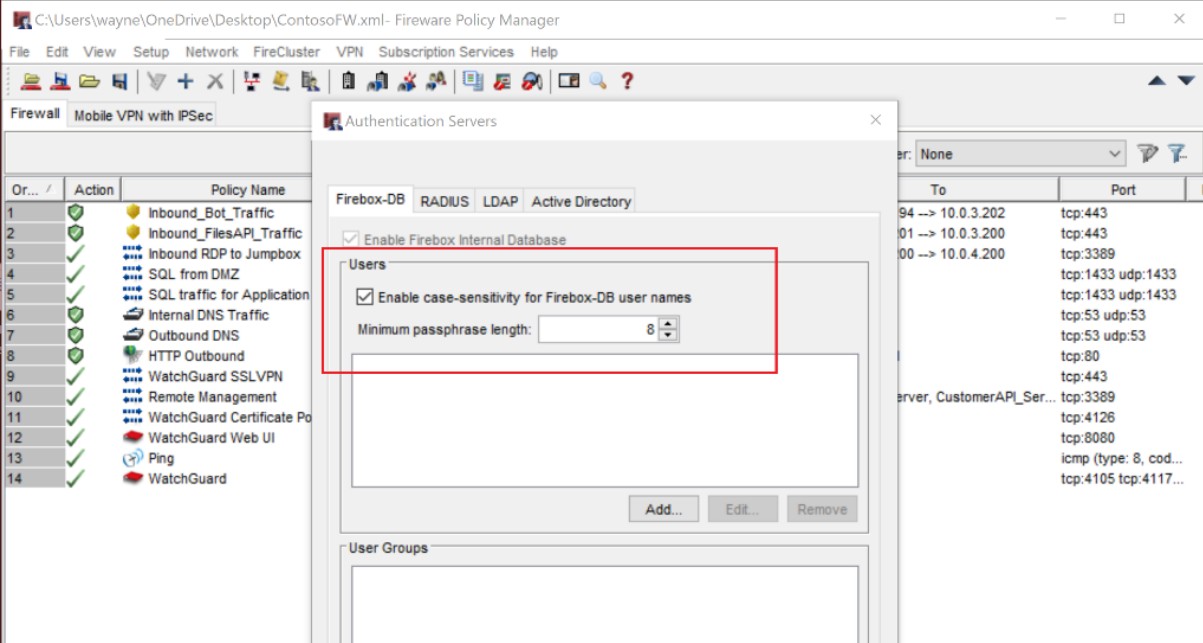

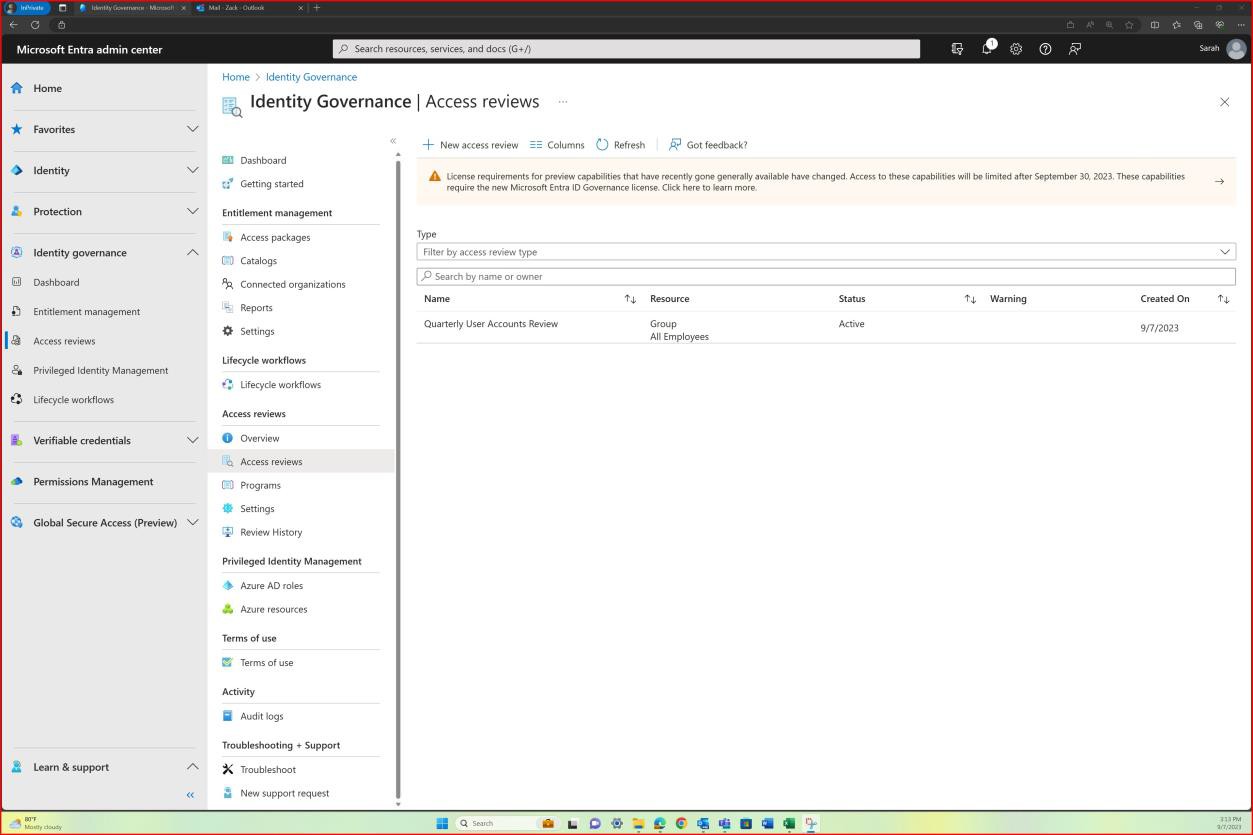

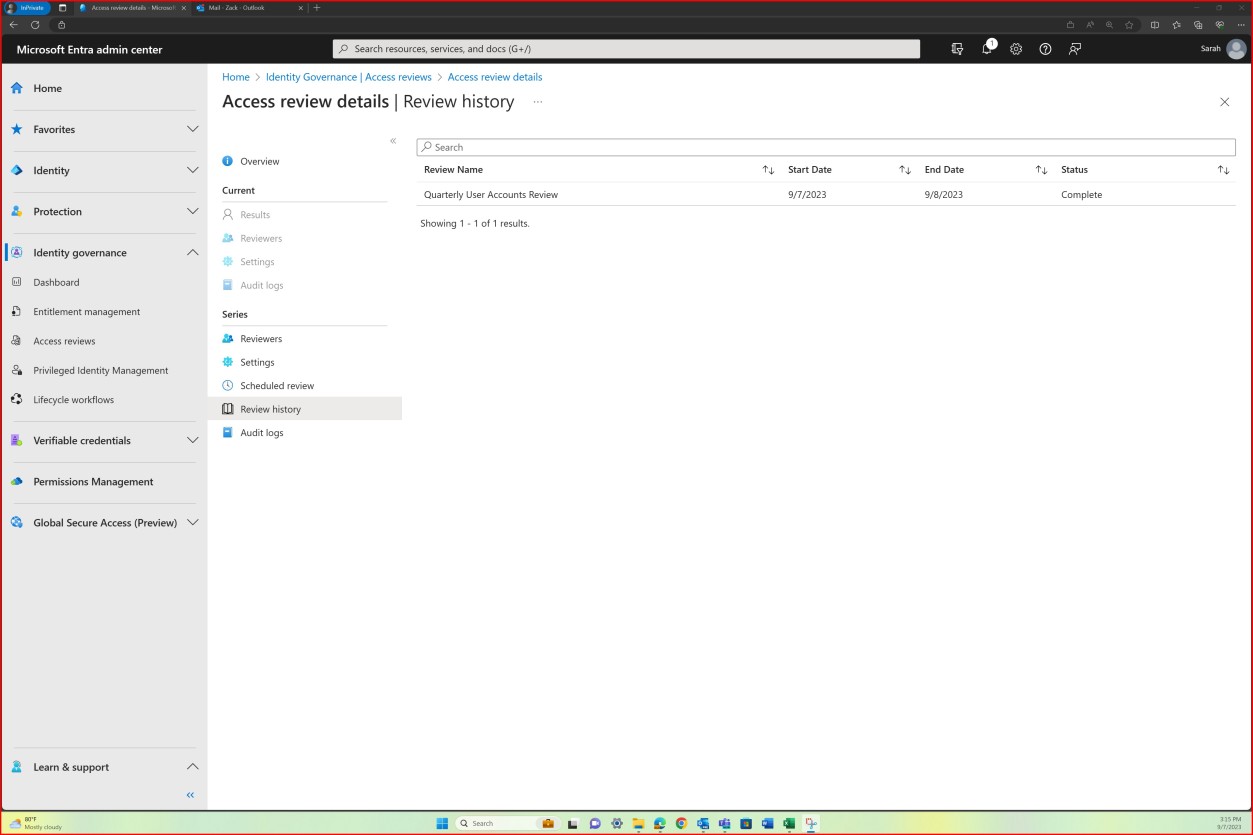

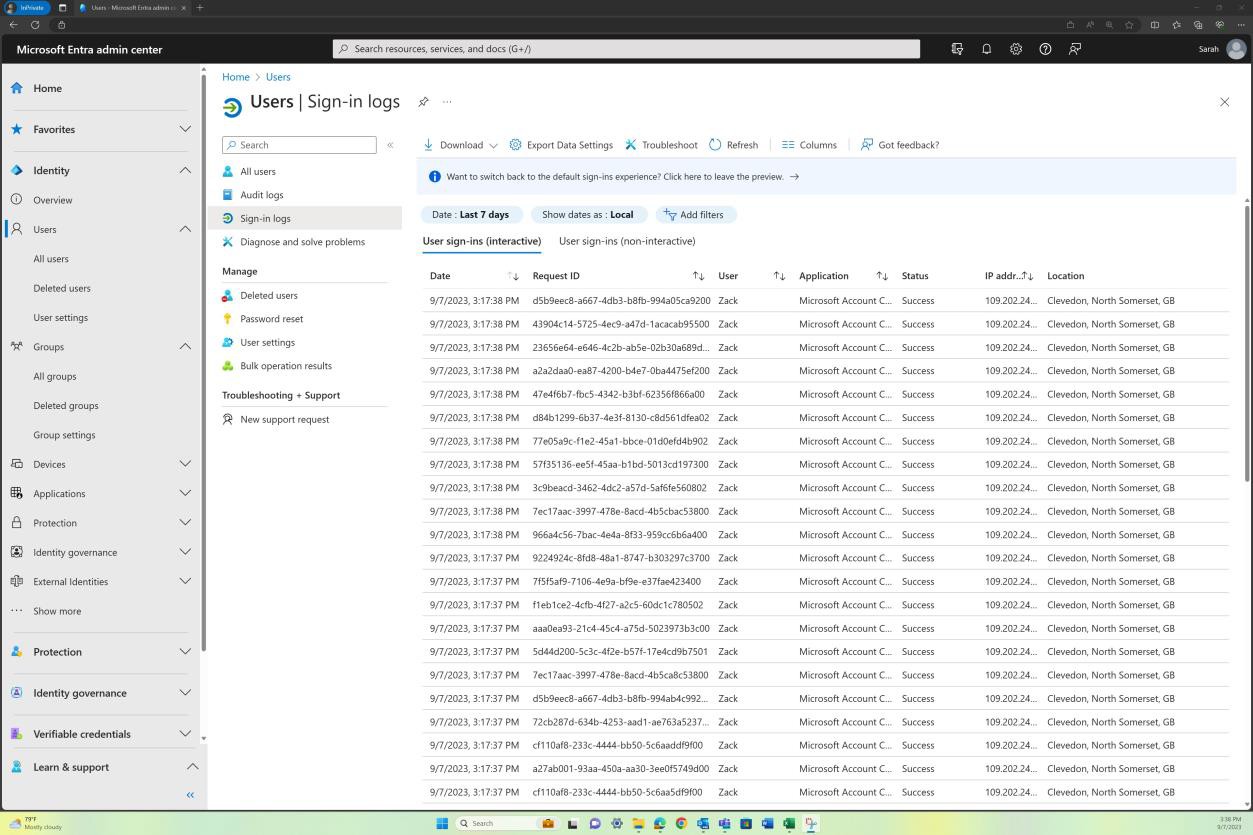

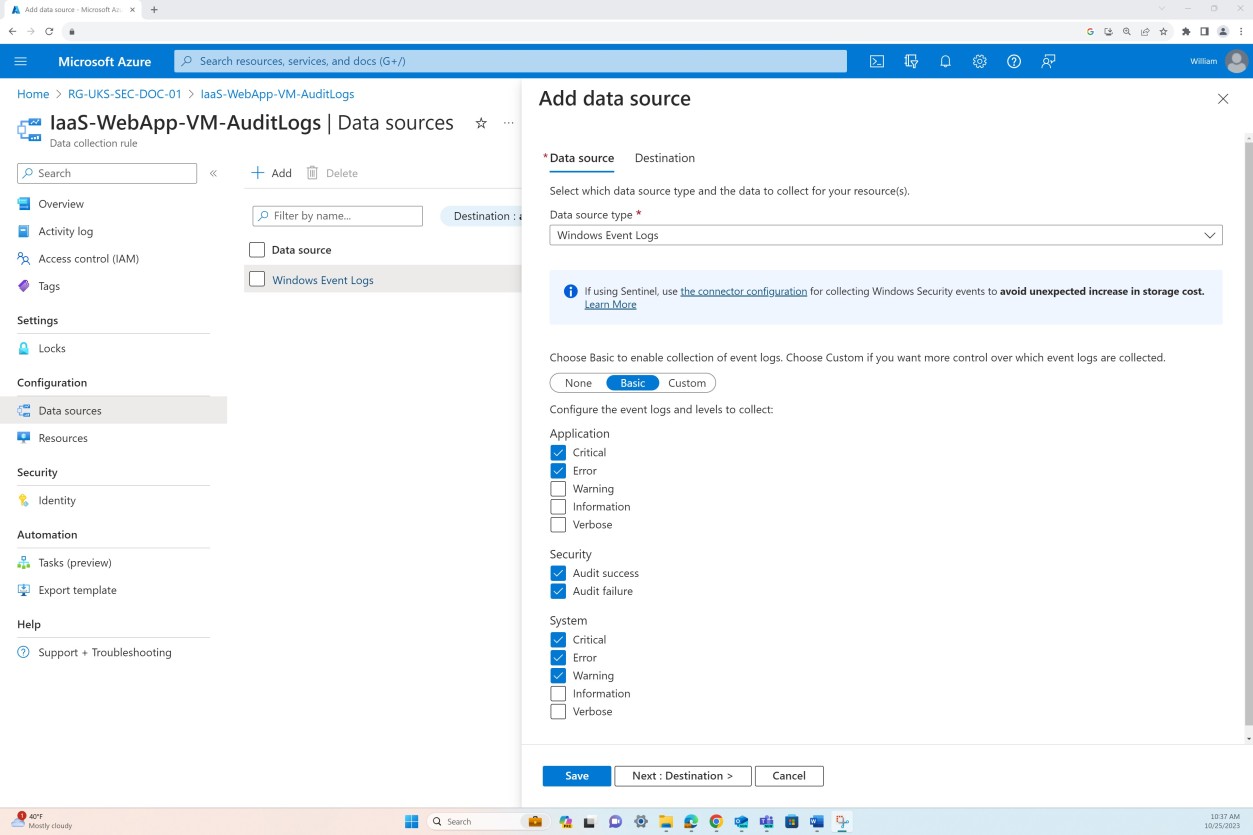

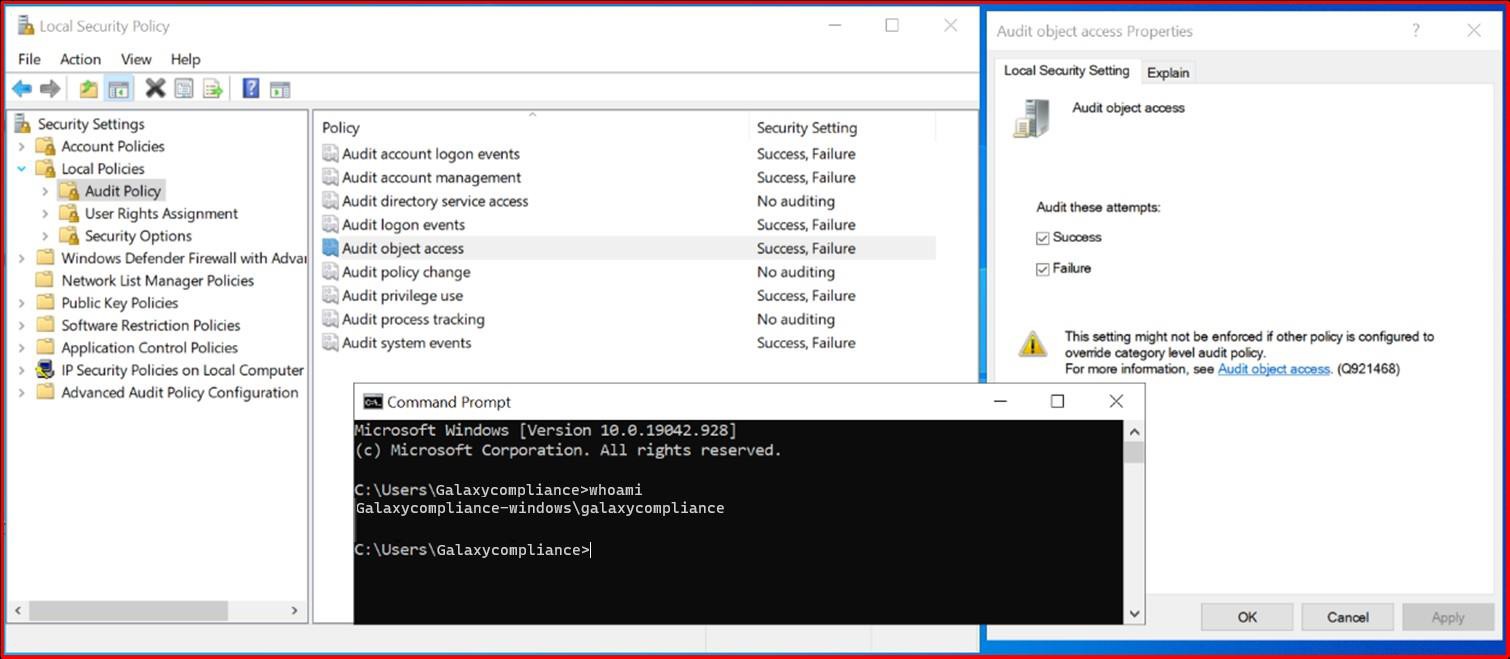

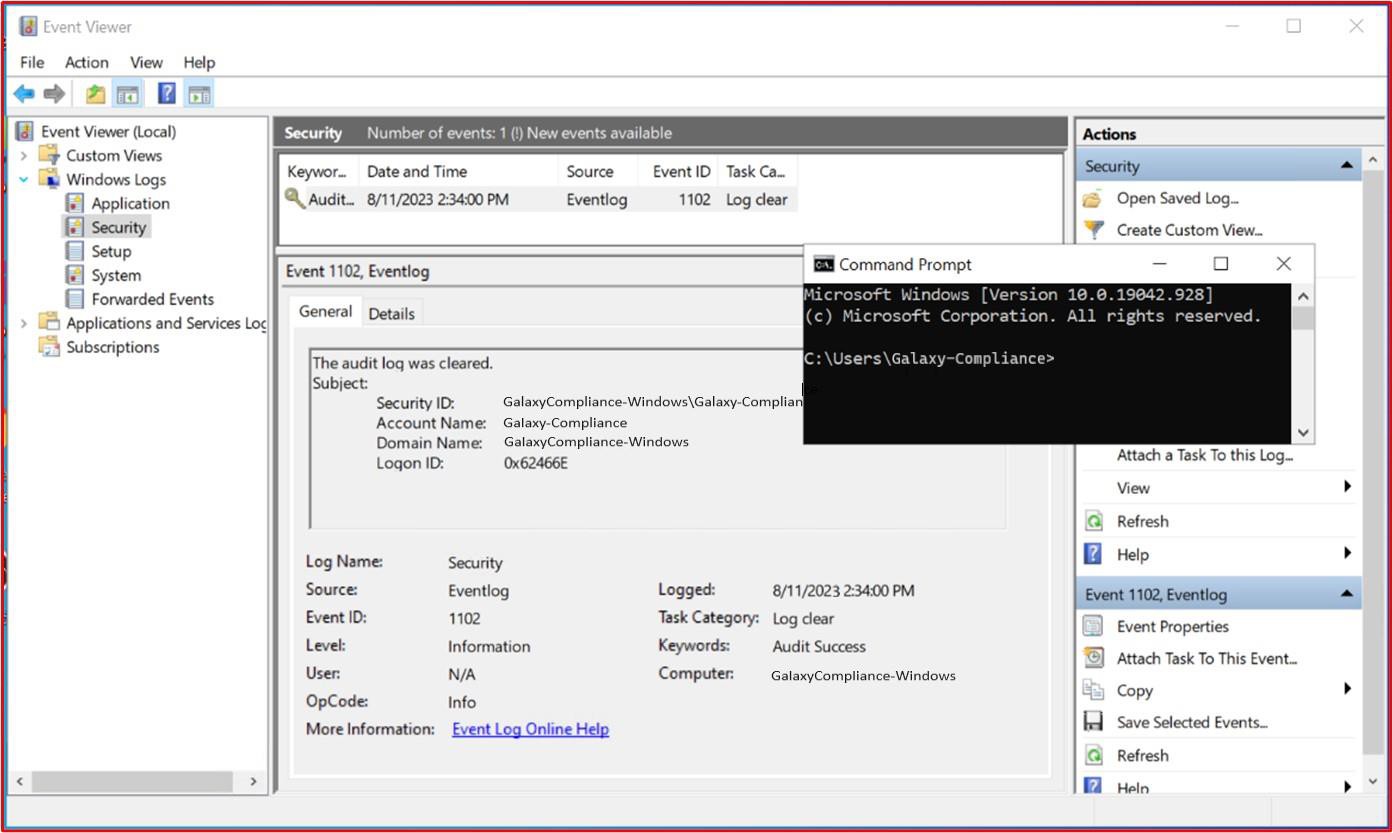

Guidelines: privileges