Index data from Azure Table Storage

In this article, learn how to configure an indexer that imports content from Azure Table Storage and makes it searchable in Azure AI Search. Inputs to the indexer are your entities, in a single table. Output is a search index with searchable content and metadata stored in individual fields.

This article supplements Create an indexer with information that's specific to indexing from Azure Table Storage. It uses the Azure portal and REST APIs to demonstrate a three-part workflow common to all indexers: create a data source, create an index, create an indexer. Data extraction occurs when you submit the Create Indexer request.

Prerequisites

Tables containing text. If you have binary data, consider AI enrichment for image analysis.

Read permissions on Azure Storage. A "full access" connection string includes a key that gives access to the content, but if you're using Azure roles, make sure the search service managed identity has Reader and Data Access permissions.

To work through the examples in this article, you need the Azure portal or a REST client. If you're using the Azure portal, make sure that access to all public networks is enabled. You can also create an Azure Table indexer by using the Azure SDKs.

Try with sample data

Use these instructions to create a table in Azure Storage for testing purposes.

Sign in to the Azure portal, navigate to your storage account, and create a table named hotels.

Download HotelsData_toAzureSearch.csv from GitHub. This file is a subset of the built-in hotels sample dataset. It omits the rooms collection, translated descriptions, and geography coordinates.

In Azure Storage Explorer, sign in to Azure, select your subscription, and then select your storage account.

Open Tables and select hotels.

Select Import on the command bar, and then select the HotelsData_toAzureSearch.csv file.

Accept the defaults. Select Import to load the data.

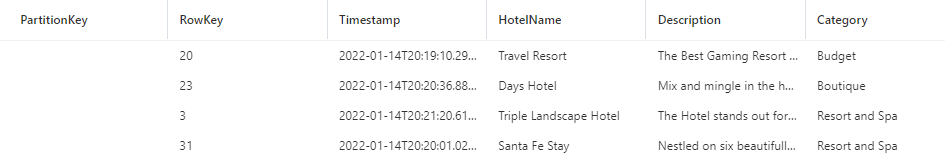

You should have 50 hotel records in the table with an autogenerated partitionKey, rowKey, and timestamp. You can now use this content for indexing in the Azure portal, REST client, or an Azure SDK.

The Description field provides the most verbose content. You should target this field for full text search and optional vector queries.

Use the Azure portal

You can use either the Import data wizard or the Import and vectorize data wizard to automate indexing from a table in Azure Table Storage. The data source configuration is similar for both wizards.

On Connect to your data, select or verify that the data source type is either Azure Table Storage or that the data selection fields prompt for tables.

The data source name refers to the data source connection object in Azure AI Search. If you use the vector wizard, your data source name is autogenerated using a custom prefix specified at the end of the wizard workflow.

Specify the storage account and table name. The query is optional. It's useful if you have specific columns you want to import.

Specify an authentication method, either a managed identity or built-in API key. If you don't specify a managed identity connection, the Azure portal uses the key.

If you configure Azure AI Search to use a managed identity, and you create a role assignment on Azure Storage that grants Reader and Data Access permissions to the identity, your indexer can connect to table storage using Microsoft Entra ID and roles.

For the Import and vectorize data wizard, you can specify options for deletion detection.

Deletion detection requires a pre-existing field in the table that can be used as a soft-delete flag. It should be a Boolean field (you could name it IsDeleted). Specify true as the soft-delete value. In the search index, add a corresponding search field called IsDeleted, set to retrievable and filterable.

Continue with the remaining steps to complete the wizard.

Use the REST APIs

This section demonstrates the REST API calls that create a data source, index, and indexer.

Define the data source

The data source definition specifies the source data to index, credentials, and policies for change detection. A data source is an independent resource that can be used by multiple indexers.

Create or update a data source to set its definition:

    POST https://[service name].search.windows.net/datasources?api-version=2024-07-01
    {
        "name": "my-table-storage-ds",
        "description": null,
        "type": "azuretable",
        "subtype": null,
        "credentials": {
            "connectionString": "DefaultEndpointsProtocol=https;AccountName=<account name>"
        },
        "container": {
            "name": "my-table-in-azure-storage",
            "query": ""
        },
        "dataChangeDetectionPolicy": null,
        "dataDeletionDetectionPolicy": null,
        "encryptionKey": null,
        "identity": null
    }

Set "type" to "azuretable" (required).

Set "credentials" to an Azure Storage connection string. The next section describes the supported formats.

Set "container" to the name of the table.

Optionally, set "query" to a filter on PartitionKey. Setting this property is a best practice that improves performance. If "query" is null, the indexer executes a full table scan, which can result in poor performance if the tables are large.

A data source definition can also include soft deletion policies, if you want the indexer to delete a search document when the source document is flagged for deletion.
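The settings described above can be assembled programmatically. The following Python snippet is a minimal sketch, not an official client: the helper name `build_table_data_source` and its parameters are hypothetical, and the soft-delete policy assumes a Boolean column named IsDeleted exists in the table. It only builds the JSON body; sending it to the service is left to your REST client.

```python
import json


def build_table_data_source(name, connection_string, table_name,
                            partition_filter=None, soft_delete_column=None):
    """Return a data source definition (as a dict) for an Azure Table indexer.

    Hypothetical helper for illustration only.
    """
    container = {"name": table_name}
    if partition_filter:
        # A PartitionKey filter avoids a full table scan on large tables.
        container["query"] = partition_filter

    definition = {
        "name": name,
        "type": "azuretable",  # required for table indexers
        "credentials": {"connectionString": connection_string},
        "container": container,
    }

    if soft_delete_column:
        # Assumes the table has a Boolean column that flags deleted entities.
        definition["dataDeletionDetectionPolicy"] = {
            "@odata.type": "#Microsoft.Azure.Search.SoftDeleteColumnDeletionDetectionPolicy",
            "softDeleteColumnName": soft_delete_column,
            "softDeleteMarkerValue": "true",
        }
    return definition


definition = build_table_data_source(
    name="my-table-storage-ds",
    connection_string="DefaultEndpointsProtocol=https;AccountName=<account name>;AccountKey=<account key>;",
    table_name="my-table-in-azure-storage",
    partition_filter="PartitionKey eq '<partition key value>'",
    soft_delete_column="IsDeleted",
)
print(json.dumps(definition, indent=2))
```

Omitting `partition_filter` leaves "query" out of the definition, which corresponds to the full table scan described earlier.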

Supported credentials and connection strings

Indexers can connect to a table using the following connections.

| Full access storage account connection string |
|---|
| { "connectionString" : "DefaultEndpointsProtocol=https;AccountName=<your storage account>;AccountKey=<your account key>;" } |
| You can get the connection string from the Storage account page in Azure portal by selecting Access keys in the left navigation pane. Make sure to select a full connection string and not just a key. |

| Managed identity connection string |
|---|
| { "connectionString" : "ResourceId=/subscriptions/<your subscription ID>/resourceGroups/<your resource group name>/providers/Microsoft.Storage/storageAccounts/<your storage account name>/;" } |
| This connection string doesn't require an account key, but you must have previously configured a search service to connect using a managed identity. |

| Storage account shared access signature (SAS) connection string |
|---|
| { "connectionString" : "BlobEndpoint=https://<your account>.blob.core.windows.net/;SharedAccessSignature=?sv=2016-05-31&sig=<the signature>&spr=https&se=<the validity end time>&srt=co&ss=b&sp=rl;" } |
| The SAS should have the list and read permissions on tables and entities. |

| Container shared access signature |
|---|
| { "connectionString" : "ContainerSharedAccessUri=https://<your storage account>.blob.core.windows.net/<container name>?sv=2016-05-31&sr=c&sig=<the signature>&se=<the validity end time>&sp=rl;" } |
| The SAS should have the list and read permissions on the container. For more information, see Using Shared Access Signatures. |

Note

If you use SAS credentials, you'll need to update the data source credentials periodically with renewed signatures to prevent their expiration. When SAS credentials expire, the indexer will fail with an error message similar to "Credentials provided in the connection string are invalid or have expired".
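One way to stay ahead of SAS expiration is to read the validity end time (the `se` parameter) from the connection string and flag it for renewal before the indexer starts failing. The following Python snippet is a rough sketch of that check. The function names, the seven-day margin, and the sample account, signature, and dates are all hypothetical, and the snippet assumes the `se` value is an ISO 8601 date or date-time.

```python
import re
from datetime import datetime, timedelta, timezone
from urllib.parse import unquote


def sas_expiry(connection_string):
    """Return the SAS 'se' value as a timezone-aware UTC datetime, or None."""
    match = re.search(r"[?&]se=([^&;]+)", connection_string)
    if match is None:
        return None  # no SAS expiry found (for example, an account key string)
    raw = unquote(match.group(1)).replace("Z", "+00:00")
    expiry = datetime.fromisoformat(raw)
    if expiry.tzinfo is None:
        # Treat date-only or offset-free values as UTC.
        expiry = expiry.replace(tzinfo=timezone.utc)
    return expiry


def needs_renewal(connection_string, now, margin=timedelta(days=7)):
    """True if the SAS expires within the margin (or has already expired)."""
    expiry = sas_expiry(connection_string)
    return expiry is not None and expiry - now <= margin


# Hypothetical connection string with a made-up signature and expiry.
sample = (
    "ContainerSharedAccessUri=https://example.blob.core.windows.net/my-container"
    "?sv=2016-05-31&sr=c&sig=abc123&se=2030-01-15T00%3A00%3A00Z&sp=rl;"
)
check_time = datetime(2030, 1, 10, tzinfo=timezone.utc)
print(sas_expiry(sample), needs_renewal(sample, check_time))
```

A scheduled job could run a check like this and update the data source credentials with a renewed signature when it returns true.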

Partition for improved performance

By default, Azure AI Search uses the following internal query filter to keep track of which source entities have been updated since the last run: Timestamp >= HighWaterMarkValue. Because Azure tables don’t have a secondary index on the Timestamp field, this type of query requires a full table scan and is therefore slow for large tables.

To avoid a full scan, you can use table partitions to narrow the scope of each indexer job.

If your data can naturally be partitioned into several partition ranges, create a data source and a corresponding indexer for each partition range. Each indexer now has to process only a specific partition range, resulting in better query performance. If the data that needs to be indexed has a small number of fixed partitions, even better: each indexer only does a partition scan.

For example, to create a data source for processing a partition range with keys from 000 to 100, use a query like this:

    "container" : { "name" : "my-table", "query" : "PartitionKey ge '000' and PartitionKey lt '100' " }

If your data is partitioned by time (for example, if you create a new partition every day or week), consider the following approach:

In the data source definition, specify a query similar to the following example: (PartitionKey ge <TimeStamp>) and (other filters).

Monitor indexer progress by using Get Indexer Status API, and periodically update the <TimeStamp> condition of the query based on the latest successful high-water-mark value.

With this approach, if you need to trigger a full reindex, reset the data source query in addition to resetting the indexer.
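Both partitioning strategies come down to generating the right "query" string for each data source. The following Python snippet is a minimal sketch under stated assumptions: the helper names and the naming scheme for data sources and indexers are hypothetical, the connection string is a placeholder, and the time-based helper assumes partition keys are sortable date strings such as 2024-05-01.

```python
def partition_range_definitions(table_name, index_name, boundaries):
    """Build one (data source, indexer) pair per partition key range.

    boundaries is a sorted list of partition key strings. Each consecutive
    pair (low, high) becomes the half-open range low <= PartitionKey < high.
    """
    definitions = []
    for low, high in zip(boundaries, boundaries[1:]):
        suffix = f"{low}-{high}".lower()
        data_source = {
            "name": f"{table_name}-ds-{suffix}",
            "type": "azuretable",
            "credentials": {"connectionString": "<your connection string>"},
            "container": {
                "name": table_name,
                "query": f"PartitionKey ge '{low}' and PartitionKey lt '{high}'",
            },
        }
        indexer = {
            "name": f"{table_name}-indexer-{suffix}",
            "dataSourceName": data_source["name"],
            "targetIndexName": index_name,
        }
        definitions.append((data_source, indexer))
    return definitions


def time_partition_query(last_processed_partition, other_filters=None):
    """Build a query for time-partitioned tables (assumes date-like keys)."""
    query = f"(PartitionKey ge '{last_processed_partition}')"
    if other_filters:
        query += f" and ({other_filters})"
    return query


pairs = partition_range_definitions("my-table", "my-search-index", ["000", "100", "200"])
for data_source, indexer in pairs:
    print(indexer["name"], "->", data_source["container"]["query"])
print(time_partition_query("2024-05-01", "Category eq 'Budget'"))
```

Each pair targets the same search index, so the indexers can run independently while each one scans only its own partition range.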

Add search fields to an index

In a search index, add fields to accept the content and metadata of your table entities.

Create or update an index to define search fields that will store content from entities:

    POST https://[service name].search.windows.net/indexes?api-version=2024-07-01
    {
        "name" : "my-search-index",
        "fields": [
            { "name": "Key", "type": "Edm.String", "key": true, "searchable": false },
            { "name": "SomeColumnInMyTable", "type": "Edm.String", "searchable": true }
        ]
    }

Create a document key field ("key": true), but allow the indexer to populate it automatically. A table indexer populates the key field with concatenated partition and row keys from the table. For example, if a row’s PartitionKey is 1 and RowKey is 1_123, then the key value is 11_123. If the partition key is null, just the row key is used.

If you're using the Import data wizard to create the index, the Azure portal infers a "Key" field for the search index and uses an implicit field mapping to connect the source and destination fields. You don't have to add the field yourself, and you don't need to set up a field mapping.

If you're using the REST APIs and you want implicit field mappings, create and name the document key field "Key" in the search index definition as shown in the previous step ({ "name": "Key", "type": "Edm.String", "key": true, "searchable": false }). The indexer populates the Key field automatically, with no field mappings required.

If you don't want a field named "Key" in your search index, add an explicit field mapping in the indexer definition with the field name you want, setting the source field to "Key":

    "fieldMappings" : [ { "sourceFieldName" : "Key", "targetFieldName" : "MyDocumentKeyFieldName" } ]

Now add any other entity fields that you want in your index. For example, if your entities have HotelName, Description, and Category properties, your search index should have fields named HotelName, Description, and Category to receive those values.

Using the same names and compatible data types minimizes the need for field mappings. When names and types are the same, the indexer can determine the data path automatically.
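To make the document key rule concrete, the following Python snippet mimics the concatenation described above. It illustrates the documented behavior and is not the service's implementation; the function names are hypothetical.

```python
def table_document_key(partition_key, row_key):
    """Mimic the documented rule for the table indexer's document key.

    The key is PartitionKey followed by RowKey. If PartitionKey is null
    (None here), only RowKey is used.
    """
    if partition_key is None:
        return row_key
    return f"{partition_key}{row_key}"


def key_field_mapping(target_field_name):
    """Build the explicit mapping needed when the key field isn't named 'Key'."""
    return {"sourceFieldName": "Key", "targetFieldName": target_field_name}


print(table_document_key("1", "1_123"))   # 11_123
print(table_document_key(None, "1_123"))  # 1_123
print(key_field_mapping("MyDocumentKeyFieldName"))
```

Because the two values are joined without a separator, different PartitionKey and RowKey pairs can yield the same string (for example, 1 with 1_123 and 11 with _123), which is worth keeping in mind when designing keys.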

Configure and run the table indexer

Once you have an index and data source, you're ready to create the indexer. Indexer configuration specifies the inputs, parameters, and properties controlling run time behaviors.

Create or update an indexer by giving it a name and referencing the data source and target index:

    POST https://[service name].search.windows.net/indexers?api-version=2024-07-01
    {
        "name" : "my-table-indexer",
        "dataSourceName" : "my-table-storage-ds",
        "targetIndexName" : "my-search-index",
        "disabled": null,
        "schedule": null,
        "parameters" : {
            "batchSize" : null,
            "maxFailedItems" : null,
            "maxFailedItemsPerBatch" : null,
            "configuration" : { }
        },
        "fieldMappings" : [ ],
        "cache": null,
        "encryptionKey": null
    }

Specify field mappings if there are differences in field name or type, or if you need multiple versions of a source field in the search index. The Target field is the name of the field in the search index.

    "fieldMappings" : [ { "sourceFieldName" : "Description", "targetFieldName" : "HotelDescription" } ]

See Create an indexer for more information about other properties.

An indexer runs automatically when it's created. You can prevent this by setting "disabled" to true. To control indexer execution, run an indexer on demand or put it on a schedule.
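If you script indexer creation, you can prepare the request and keep the indexer disabled until you're ready to run it. The following Python snippet is a minimal sketch that uses only the standard library. The function name, the service name `my-service`, and the placeholder admin key are hypothetical. The snippet builds the request but deliberately doesn't send it.

```python
import json
import urllib.request


def create_indexer_request(service_name, api_key, definition, api_version="2024-07-01"):
    """Build (but don't send) a Create Indexer request."""
    url = f"https://{service_name}.search.windows.net/indexers?api-version={api_version}"
    return urllib.request.Request(
        url,
        data=json.dumps(definition).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


indexer_definition = {
    "name": "my-table-indexer",
    "dataSourceName": "my-table-storage-ds",
    "targetIndexName": "my-search-index",
    "disabled": True,  # prevents the automatic run on creation
    "fieldMappings": [
        {"sourceFieldName": "Description", "targetFieldName": "HotelDescription"}
    ],
}

request = create_indexer_request("my-service", "<your admin API key>", indexer_definition)
print(request.get_method(), request.full_url)

# To send the request, uncomment the following lines:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Setting "disabled" back to false (or null) in a later update lets the indexer run on demand or on a schedule.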

Check indexer status

To monitor indexer status and execution history, check the execution history in the Azure portal, or send a Get Indexer Status REST API request.

On the search service page, open Search management > Indexers.

Select an indexer to access configuration and execution history.

Select a specific indexer job to view details, warnings, and errors.

Execution history contains up to 50 of the most recently completed executions, sorted in reverse chronological order so that the latest execution comes first.
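If you retrieve status through the REST API, a short script can condense the execution history into a quick summary. The following Python snippet is a sketch that works on an already-parsed response. The sample payload is made up for illustration, and the property names (executionHistory, status, startTime, itemsProcessed, itemsFailed) reflect an assumption about the Get Indexer Status response shape that you should verify against an actual response from your service.

```python
def summarize_executions(status_response, limit=5):
    """Return (startTime, status, itemsProcessed, itemsFailed) for recent runs."""
    history = status_response.get("executionHistory", [])
    # The service is documented to return newest first; sort defensively anyway.
    # ISO 8601 UTC timestamps in the same format sort correctly as strings.
    ordered = sorted(history, key=lambda run: run.get("startTime", ""), reverse=True)
    return [
        (
            run.get("startTime"),
            run.get("status"),
            run.get("itemsProcessed", 0),
            run.get("itemsFailed", 0),
        )
        for run in ordered[:limit]
    ]


# Hypothetical, hand-written sample that stands in for a parsed JSON response.
sample_status = {
    "status": "running",
    "executionHistory": [
        {"status": "transientFailure", "startTime": "2024-05-01T10:00:00Z",
         "itemsProcessed": 20, "itemsFailed": 3},
        {"status": "success", "startTime": "2024-05-02T10:00:00Z",
         "itemsProcessed": 50, "itemsFailed": 0},
    ],
}

summary = summarize_executions(sample_status)
for start, status, processed, failed in summary:
    print(start, status, processed, failed)
```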

Next steps

Learn more about how to run the indexer, monitor status, or schedule indexer execution. The following articles apply to indexers that pull content from Azure Storage: