How to deploy a pipeline to perform batch scoring with preprocessing

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

In this article, you'll learn how to deploy an inference (or scoring) pipeline under a batch endpoint. The pipeline performs scoring over a registered model while also reusing a preprocessing component from when the model was trained. Reusing the same preprocessing component ensures that the same preprocessing is applied during scoring.

You'll learn to:

- Create a pipeline that reuses existing components from the workspace

- Deploy the pipeline to an endpoint

- Consume predictions generated by the pipeline

About this example

This example shows you how to reuse preprocessing code and the parameters learned during preprocessing before you use your model for inferencing. By reusing the preprocessing code and learned parameters, we can ensure that the same transformations (such as normalization and feature encoding) that were applied to the input data during training are also applied during inferencing. The model used for inference will perform predictions on tabular data from the UCI Heart Disease Data Set.

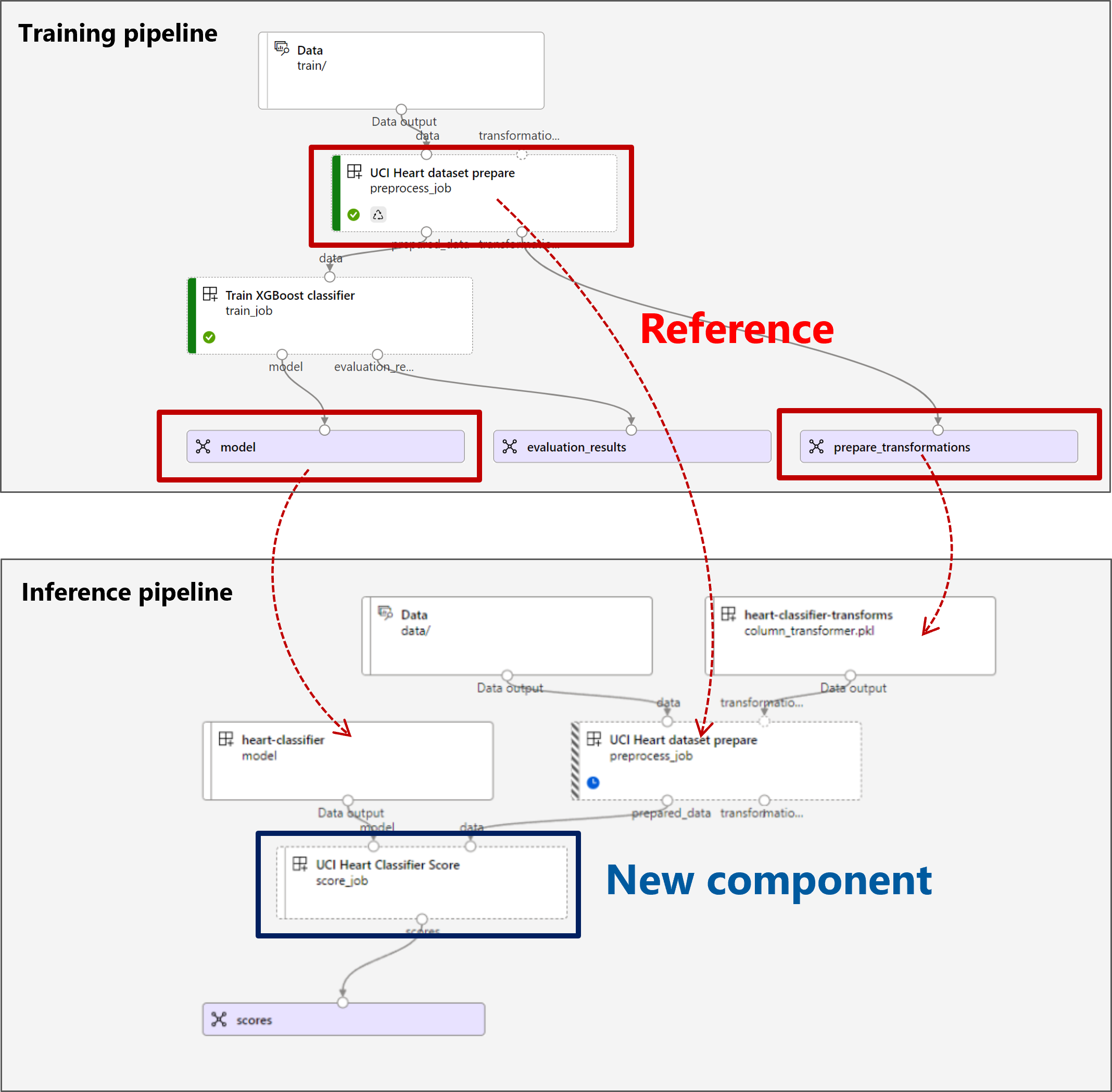

A visualization of the pipeline is as follows:

The example in this article is based on code samples contained in the azureml-examples repository. To run the commands locally without having to copy or paste YAML and other files, use the following commands to clone the repository and go to the folder for your coding language:

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples/cli

The files for this example are in:

cd endpoints/batch/deploy-pipelines/batch-scoring-with-preprocessing

Follow along in Jupyter notebooks

You can follow along with the Python SDK version of this example by opening the sdk-deploy-and-test.ipynb notebook in the cloned repository.

Prerequisites

An Azure subscription. If you don't have an Azure subscription, create a free account before you begin.

An Azure Machine Learning workspace. To create a workspace, see Manage Azure Machine Learning workspaces.

The following permissions in the Azure Machine Learning workspace:

- For creating or managing batch endpoints and deployments: Use an Owner, Contributor, or custom role that has been assigned the Microsoft.MachineLearningServices/workspaces/batchEndpoints/* permissions.

- For creating Azure Resource Manager deployments in the workspace resource group: Use an Owner, Contributor, or custom role that has been assigned the Microsoft.Resources/deployments/write permission in the resource group where the workspace is deployed.

The Azure Machine Learning CLI or the Azure Machine Learning SDK for Python:

Install the Azure CLI, and then run the following command to install the ml extension for Azure Machine Learning:

az extension add -n ml

Pipeline component deployments for batch endpoints are introduced in version 2.7 of the ml extension for the Azure CLI. Use the az extension update --name ml command to get the latest version.

Connect to your workspace

The workspace is the top-level resource for Azure Machine Learning. It provides a centralized place to work with all artifacts you create when you use Azure Machine Learning. In this section, you connect to the workspace where you perform your deployment tasks.

In the following command, enter your subscription ID, workspace name, resource group name, and location:

az account set --subscription <subscription>

az configure --defaults workspace=<workspace> group=<resource-group> location=<location>

Create the inference pipeline

In this section, we'll create all the assets required for our inference pipeline. We'll begin by creating an environment that includes necessary libraries for the pipeline's components. Next, we'll create a compute cluster on which the batch deployment will run. Afterwards, we'll register the components, models, and transformations we need to build our inference pipeline. Finally, we'll build and test the pipeline.

Create the environment

The components in this example will use an environment with the XGBoost and scikit-learn libraries. The environment/conda.yml file contains the environment's configuration:

environment/conda.yml

channels:
  - conda-forge
dependencies:
  - python=3.8.5
  - pip
  - pip:
      - mlflow
      - azureml-mlflow
      - datasets
      - jobtools
      - cloudpickle==1.6.0
      - dask==2023.2.0
      - scikit-learn==1.1.2
      - xgboost==1.3.3
name: mlflow-env

Create the environment as follows:

Define the environment:

environment/xgboost-sklearn-py38.yml

$schema: https://azuremlschemas.azureedge.net/latest/environment.schema.json
name: xgboost-sklearn-py38
image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest
conda_file: conda.yml
description: An environment for models built with XGBoost and Scikit-learn.

Create the environment:

az ml environment create -f environment/xgboost-sklearn-py38.yml

Create a compute cluster

Batch endpoints and deployments run on compute clusters. They can run on any Azure Machine Learning compute cluster that already exists in the workspace. Therefore, multiple batch deployments can share the same compute infrastructure. In this example, we'll work on an Azure Machine Learning compute cluster called batch-cluster. Let's verify that the compute exists on the workspace or create it otherwise.

az ml compute create -n batch-cluster --type amlcompute --min-instances 0 --max-instances 5

Register components and models

We're going to register components, models, and transformations that we need to build our inference pipeline. We can reuse some of these assets for training routines.

Tip

In this tutorial, we'll reuse the model and the preprocessing component from an earlier training pipeline. You can see how they were created by following the example How to deploy a training pipeline with batch endpoints.

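Register the model to use for prediction (replace the placeholder with the path to your model files):

az ml model create --name heart-classifier --type mlflow_model --path <path-to-model>

The registered model wasn't trained directly on input data. Instead, the input data was preprocessed (or transformed) before training, using a prepare component. We'll also need to register this component. Register the prepare component (replace the placeholder with the path to the component's YAML definition):

az ml component create -f <path-to-prepare-component-yaml>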

Tip

After registering the prepare component, you can now reference it from the workspace. For example, azureml:uci_heart_prepare@latest will get the last version of the prepare component.

As part of the data transformations in the prepare component, the input data was normalized to center the predictors and limit their values in the range of [-1, 1]. The transformation parameters were captured in a scikit-learn transformation that we can also register to apply later when we have new data. Register the transformation as follows:
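
Conceptually, the registered transformation stores parameters that were learned once, during training, so that they can be applied unchanged to new data. The following sketch is neither the registration command nor the scikit-learn transformation used by this example. It's a minimal plain-Python illustration, and the function names fit_scaler and apply_scaler, the sample values, and the exact centering and scaling rule are all assumptions made for illustration.

```python
import json


def fit_scaler(values):
    """Learn centering and scaling parameters from training values (illustrative rule)."""
    center = sum(values) / len(values)
    # Largest absolute deviation from the center; fall back to 1.0 for constant columns.
    spread = max(abs(v - center) for v in values) or 1.0
    return {"center": center, "spread": spread}


def apply_scaler(values, params):
    """Apply previously learned parameters without re-learning them."""
    return [(v - params["center"]) / params["spread"] for v in values]


# Training time: learn the parameters from (made-up) training data.
train_age = [29.0, 45.0, 54.0, 61.0, 77.0]
params = fit_scaler(train_age)
scaled_train = apply_scaler(train_age, params)

# Persist the parameters, much as a fitted transformation would be saved and registered.
serialized = json.dumps(params)

# Scoring time: restore the same parameters and apply them to unseen data.
restored = json.loads(serialized)
new_age = [40.0, 63.0]
scaled_new = apply_scaler(new_age, restored)
```

With this rule, the training values land inside [-1, 1]. Unseen values can fall outside that range, which is expected, because the parameters are deliberately not re-learned at scoring time.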

We'll perform inferencing for the registered model, using another component named score that computes the predictions for a given model. We'll reference the component directly from its definition.

Tip

Best practice would be to register the component and reference it from the pipeline. However, in this example, we're going to reference the component directly from its definition to help you see which components are reused from the training pipeline and which ones are new.

Build the pipeline

Now it's time to bind all the elements together. The inference pipeline we'll deploy has two components (steps):

- preprocess_job: This step reads the input data and returns the prepared data and the applied transformations. The step receives two inputs:

  - data: A folder containing the input data to score.

  - transformations: (Optional) Path to the transformations that will be applied, if available. When provided, the transformations are read from the model that is indicated at the path. However, if the path isn't provided, then the transformations will be learned from the input data. For inferencing, though, you can't learn the transformation parameters (in this example, the normalization coefficients) from the input data because you need to use the same parameter values that were learned during training. Since this input is optional, the preprocess_job component can be used during training and scoring.

- score_job: This step will perform inferencing on the transformed data, using the input model. Notice that the component uses an MLflow model to perform inference. Finally, the scores are written back in the same format as they were read.
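
The optional transformations input described above is what lets one component serve both training and scoring. The following minimal sketch shows that control flow in plain Python. The functions preprocess and score, the single "mean" parameter, and the toy model are hypothetical stand-ins, not the actual component code.

```python
def preprocess(rows, transformations=None):
    """Return (prepared_rows, transformations).

    When `transformations` is None (training), the parameters are learned from
    `rows`. Otherwise (scoring), the provided parameters are reused unchanged.
    """
    if transformations is None:
        transformations = {"mean": sum(rows) / len(rows)}
    prepared = [r - transformations["mean"] for r in rows]
    return prepared, transformations


def score(prepared, model):
    """Apply a model (any callable) to each prepared row."""
    return [model(x) for x in prepared]


# Training: no transformations are passed, so they are learned from the data.
train_prepared, learned = preprocess([50.0, 60.0, 70.0])

# Scoring: the learned transformations are passed in and reused as-is.
new_prepared, used = preprocess([65.0], transformations=learned)
predictions = score(new_prepared, model=lambda x: int(x > 0))
```

In the pipeline, the same idea is expressed by binding the transformations input of preprocess_job to the registered heart-classifier-transforms asset.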

The pipeline configuration is defined in the pipeline.yml file:

pipeline.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponent.schema.json
type: pipeline
name: batch_scoring_uci_heart
display_name: Batch Scoring for UCI heart
description: This pipeline demonstrates how to make batch inference using a model from the Heart Disease Data Set problem, where pre and post processing is required as steps. The pre and post processing steps can be components reusable from the training pipeline.
inputs:
  input_data:
    type: uri_folder
  score_mode:
    type: string
    default: append
outputs:
  scores:
    type: uri_folder
    mode: upload
jobs:
  preprocess_job:
    type: command
    component: azureml:uci_heart_prepare@latest
    inputs:
      data: ${{parent.inputs.input_data}}
      transformations:
        path: azureml:heart-classifier-transforms@latest
        type: custom_model
    outputs:
      prepared_data:
  score_job:
    type: command
    component: components/score/score.yml
    inputs:
      data: ${{parent.jobs.preprocess_job.outputs.prepared_data}}
      model:
        path: azureml:heart-classifier@latest
        type: mlflow_model
      score_mode: ${{parent.inputs.score_mode}}
    outputs:
      scores:
        mode: upload
        path: ${{parent.outputs.scores}}
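
The ${{parent...}} expressions in pipeline.yml are what connect the pipeline's inputs to each step and one step's outputs to the next. As a purely conceptual aid, the sketch below resolves such expressions against a nested dictionary. It isn't how Azure Machine Learning implements bindings. The resolve function, the BINDING pattern, and the sample values are assumptions made for illustration.

```python
import re

# Matches expressions of the form ${{parent.<dotted.path>}}.
BINDING = re.compile(r"^\$\{\{parent\.(.+)\}\}$")


def resolve(expression, pipeline):
    """Look up a ${{parent.<dotted.path>}} expression in a nested dict."""
    match = BINDING.match(expression)
    if match is None:
        return expression  # A literal value, not a binding.
    node = pipeline
    for key in match.group(1).split("."):
        node = node[key]
    return node


# A toy stand-in for the pipeline's runtime state.
pipeline = {
    "inputs": {"input_data": "data/unlabeled", "score_mode": "append"},
    "jobs": {
        "preprocess_job": {"outputs": {"prepared_data": "<prepared data folder>"}}
    },
}

score_mode = resolve("${{parent.inputs.score_mode}}", pipeline)
score_input = resolve(
    "${{parent.jobs.preprocess_job.outputs.prepared_data}}", pipeline
)
```

Read this way, score_job receives its data from the prepared_data output of preprocess_job, and its score_mode from the pipeline-level input of the same name.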

A visualization of the pipeline is as follows:

Test the pipeline

Let's test the pipeline with some sample data. To do that, we'll create a job using the pipeline and the batch-cluster compute cluster created previously.

The following pipeline-job.yml file contains the configuration for the pipeline job:

pipeline-job.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineJob.schema.json
type: pipeline
display_name: uci-classifier-score-job
description: |-
  This pipeline demonstrates how to make batch inference using a model from the Heart \
  Disease Data Set problem, where pre and post processing is required as steps. The \
  pre and post processing steps can be components reused from the training pipeline.
compute: batch-cluster
component: pipeline.yml
inputs:
  input_data:
    type: uri_folder
  score_mode: append
outputs:
  scores:
    mode: upload

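Create the test job. Because pipeline-job.yml doesn't specify a path for input_data, set it when you create the job:

az ml job create -f pipeline-job.yml --set inputs.input_data.path=data/unlabeled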

Create a batch endpoint

Provide a name for the endpoint. A batch endpoint's name must be unique within each region because the name is used to construct the invocation URI. To ensure uniqueness, append trailing characters of your choice to the name specified in the following code.

ENDPOINT_NAME="uci-classifier-score"
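
If you'd rather generate the trailing characters than pick them by hand, a small helper can do it. The following is a minimal sketch. The helper name unique_endpoint_name and the suffix length of five characters are arbitrary choices made for illustration, and a random suffix only makes a name collision unlikely, not impossible.

```python
import random
import string


def unique_endpoint_name(base, suffix_length=5):
    """Append a random lowercase alphanumeric suffix to a base endpoint name."""
    allowed = string.ascii_lowercase + string.digits
    suffix = "".join(random.choice(allowed) for _ in range(suffix_length))
    return f"{base}-{suffix}"


endpoint_name = unique_endpoint_name("uci-classifier-score")
print(endpoint_name)
```

You can then use the printed value as the endpoint name in the steps that follow.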

Configure the endpoint:

The endpoint.yml file contains the endpoint's configuration.

endpoint.yml

$schema: https://azuremlschemas.azureedge.net/latest/batchEndpoint.schema.json
name: uci-classifier-score
description: Batch scoring endpoint of the Heart Disease Data Set prediction task.
auth_mode: aad_token

Create the endpoint:

az ml batch-endpoint create --name $ENDPOINT_NAME -f endpoint.yml

Query the endpoint URI:

az ml batch-endpoint show --name $ENDPOINT_NAME

Deploy the pipeline component

To deploy the pipeline component, we have to create a batch deployment. A deployment is a set of resources required for hosting the asset that does the actual work.

Configure the deployment

The deployment.yml file contains the deployment's configuration. You can check the full batch endpoint YAML schema for extra properties.

deployment.yml

$schema: https://azuremlschemas.azureedge.net/latest/pipelineComponentBatchDeployment.schema.json
name: uci-classifier-prepros-xgb
endpoint_name: uci-classifier-batch
type: pipeline
component: pipeline.yml
settings:
  continue_on_step_failure: false
  default_compute: batch-cluster

Create the deployment

Run the following code to create a batch deployment under the batch endpoint and set it as the default deployment.

az ml batch-deployment create --endpoint $ENDPOINT_NAME -f deployment.yml --set-default

Tip

Notice the use of the --set-default flag to indicate that this new deployment is now the default.

Your deployment is ready for use.

Test the deployment

Once the deployment is created, it's ready to receive jobs. Follow these steps to test it:

Our deployment requires that we indicate one data input and one literal input.

The inputs.yml file contains the definition for the input data asset:

inputs.yml

inputs:
  input_data:
    type: uri_folder
    path: data/unlabeled
  score_mode:
    type: string
    default: append
outputs:
  scores:
    type: uri_folder
    mode: upload

Tip

To learn more about how to indicate inputs, see Create jobs and input data for batch endpoints.

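You can invoke the default deployment as follows. The command stores the name of the resulting job in the JOB_NAME variable:

JOB_NAME=$(az ml batch-endpoint invoke -n $ENDPOINT_NAME --file inputs.yml --query name -o tsv)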

You can monitor the progress of the job and stream the logs using:

az ml job stream -n $JOB_NAME

Access job output

Once the job is completed, we can access its output. This job contains only one output named scores:

You can download the associated results using az ml job download.

az ml job download --name $JOB_NAME --output-name scores

Read the scored data:

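import glob

import pandas as pd

# Collect every CSV file in the downloaded "scores" output folder.
output_files = glob.glob("named-outputs/scores/*.csv")

# Combine all files into a single DataFrame.
score = pd.concat((pd.read_csv(f) for f in output_files))

score

The output looks as follows: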

| age | sex | ... | thal | prediction |
|---|---|---|---|---|
| 0.9338 | 1 | ... | 2 | 0 |
| 1.3782 | 1 | ... | 3 | 1 |
| 1.3782 | 1 | ... | 4 | 0 |
| -1.954 | 1 | ... | 3 | 0 |

The output contains the predictions plus the data that was provided to the score component, which was preprocessed. For example, the column age has been normalized, and the column thal contains the original encoding values. In practice, you probably want to output only the predictions and then concatenate them with the original values. This work has been left to the reader.
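
One possible way to attach the predictions to the original values is sketched below with pandas. It assumes that the scored rows come back in the same order and with the same row count as the original input, which you should verify for your own data. Both data frames are made-up stand-ins, not actual records from the dataset or the job output.

```python
import pandas as pd

# Hypothetical original (unprocessed) input rows, in the order they were scored.
original = pd.DataFrame(
    {"age": [63, 67, 41], "thal": ["fixed", "normal", "reversible"]}
)

# Hypothetical scored output: preprocessed features plus the prediction column.
scored = pd.DataFrame(
    {"age": [0.93, 1.38, -1.95], "thal": [2, 3, 4], "prediction": [0, 1, 0]}
)

# Keep only the prediction column and attach it to the original rows by position.
result = pd.concat(
    [
        original.reset_index(drop=True),
        scored[["prediction"]].reset_index(drop=True),
    ],
    axis=1,
)
```

Resetting both indexes makes the concatenation purely positional, so a mismatch in row order would silently misalign predictions. Carrying a row identifier through the pipeline and merging on it would be a more robust design.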

Clean up resources

Once you're done, delete the associated resources from the workspace:

Run the following code to delete the batch endpoint and its underlying deployment. --yes is used to confirm the deletion.

az ml batch-endpoint delete -n $ENDPOINT_NAME --yes

(Optional) Delete compute, unless you plan to reuse your compute cluster with later deployments.