Configure dataflow endpoints for local storage

Important

This page includes instructions for managing Azure IoT Operations components by using Kubernetes deployment manifests, a capability that is in preview. It has several limitations and shouldn't be used for production workloads.

See the Supplemental Terms of Use for Microsoft Azure Previews for legal terms that apply to Azure features that are in beta, preview, or otherwise not yet released into general availability.

To send data to local storage in Azure IoT Operations, configure a dataflow endpoint. The endpoint configuration specifies the PersistentVolumeClaim (PVC) that the data is written to.

Prerequisites

- An instance of Azure IoT Operations

- A PersistentVolumeClaim (PVC)

Create a local storage dataflow endpoint

Use the local storage option to send data to a locally available persistent volume. From that volume, you can upload data by using Azure Container Storage enabled by Azure Arc edge volumes.

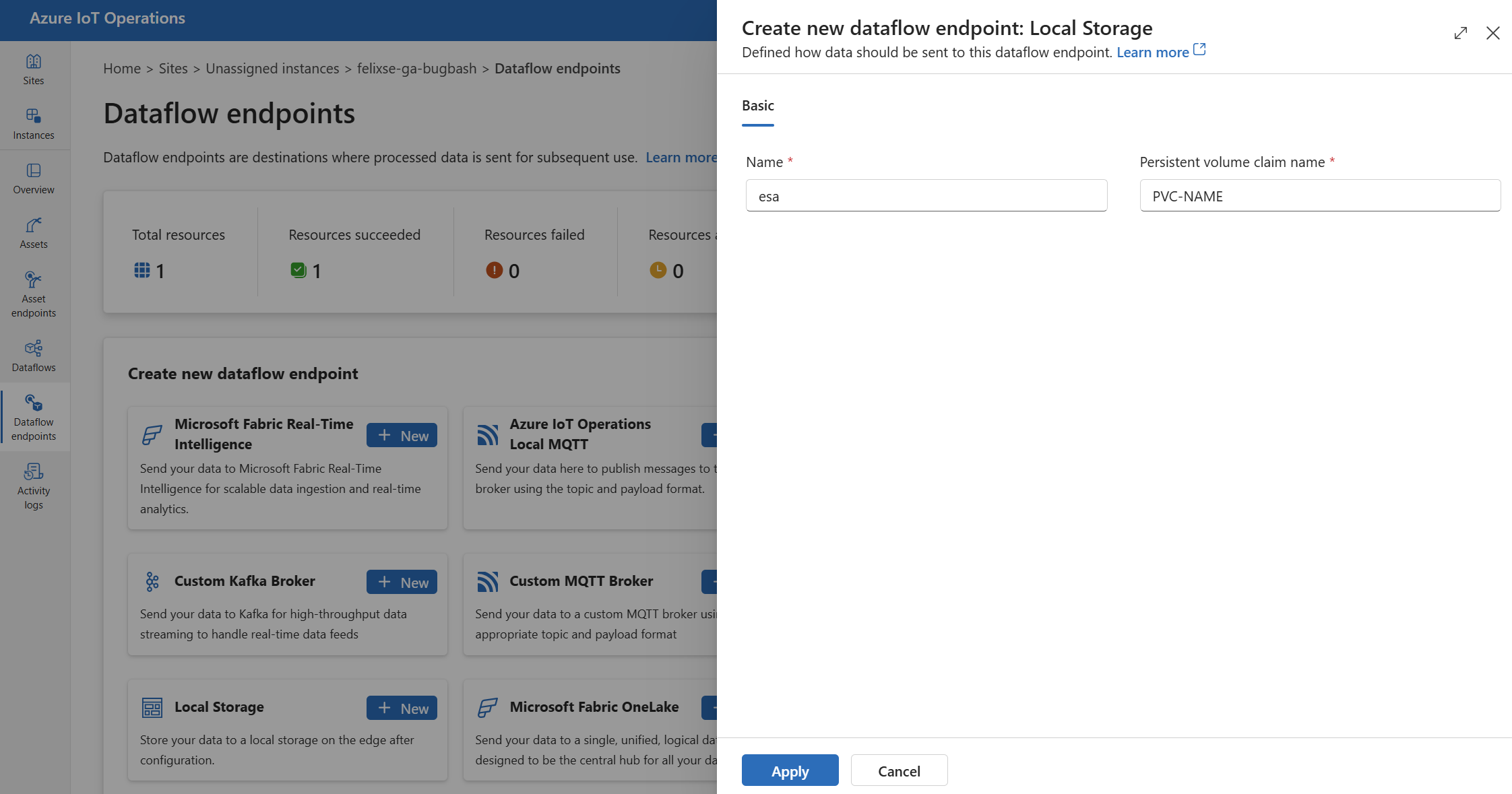

In the operations experience, select the Dataflow endpoints tab.

Under Create new dataflow endpoint, select Local Storage > New.

Enter the following settings for the endpoint:

| Setting | Description |
| --- | --- |
| Name | The name of the dataflow endpoint. |
| Persistent volume claim name | The name of the PersistentVolumeClaim (PVC) to use for local storage. |

Select Apply to provision the endpoint.

The PersistentVolumeClaim (PVC) must be in the same namespace as the DataflowEndpoint.
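If you manage the endpoint with a Kubernetes manifest instead of the operations experience, the same two settings might be expressed roughly as follows. This is a minimal sketch, not a verified manifest: `persistentVolumeClaimRef` is the field named on this page, while the `apiVersion`, the `endpointType` value, the `localStorageSettings` key, the namespace, and both placeholder names are assumptions to check against the DataflowEndpoint resource definition installed in your cluster.

```yaml
# Sketch of a local storage dataflow endpoint.
# apiVersion, endpointType, and localStorageSettings are assumed names;
# confirm them against the DataflowEndpoint CRD in your cluster.
apiVersion: connectivity.iotoperations.azure.com/v1
kind: DataflowEndpoint
metadata:
  name: <ENDPOINT_NAME>            # placeholder
  namespace: azure-iot-operations  # assumed; must be the namespace that holds the PVC
spec:
  endpointType: LocalStorage
  localStorageSettings:
    # Name of an existing PVC in the same namespace as this endpoint.
    persistentVolumeClaimRef: <PVC_NAME>
```

Keeping `metadata.namespace` identical to the PVC's namespace is what satisfies the same-namespace requirement stated above.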

Supported serialization formats

The only supported serialization format is Parquet.
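In a manifest-based dataflow, the serialization format is typically chosen in the transformation stage of the dataflow rather than on the endpoint. The fragment below is a sketch of how Parquet might be selected. The operation type, the `builtInTransformationSettings` key, the `serializationFormat` and `schemaRef` field names, and the schema placeholder are all assumptions, so verify them against the Dataflow resource definition in your cluster.

```yaml
# Fragment of a Dataflow spec, not a complete manifest.
# All field names here are assumed; verify against your Dataflow CRD.
spec:
  operations:
    - operationType: BuiltInTransformation
      builtInTransformationSettings:
        serializationFormat: Parquet   # the only format supported for local storage
        schemaRef: <SCHEMA_REFERENCE>  # placeholder for the schema that describes the output
```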

Use Azure Container Storage enabled by Azure Arc (ACSA)

You can use the local storage dataflow endpoint together with Azure Container Storage enabled by Azure Arc to store data locally or send data to a cloud destination.

Local shared volume

To write to a local shared volume, first create a PersistentVolumeClaim (PVC) according to the instructions from Local Shared Edge Volumes.
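For orientation, a PVC for a shared volume generally has the shape shown below. The `apiVersion`, `kind`, `accessModes`, and `resources` fields are standard Kubernetes. The claim name, namespace, requested size, and especially the storage class name are placeholders or assumptions, so take the actual values from the Local Shared Edge Volumes instructions.

```yaml
# Sketch of a PersistentVolumeClaim for a local shared volume.
# Names, size, and storage class are placeholders; use the values
# given in the Local Shared Edge Volumes instructions.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: <PVC_NAME>
  namespace: azure-iot-operations  # assumed; must match the DataflowEndpoint namespace
spec:
  accessModes:
    - ReadWriteMany                # shared volumes are usually mounted by several pods
  resources:
    requests:
      storage: 2Gi                 # example size only
  storageClassName: <STORAGE_CLASS_NAME>
```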

Then, when configuring your local storage dataflow endpoint, input the PVC name under persistentVolumeClaimRef.

Cloud ingest

To write your data to the cloud, follow the instructions in Cloud Ingest Edge Volumes configuration to create a PVC and attach a subvolume for your desired cloud destination.

Important

Create the subvolume after you create the PVC. If the subvolume is missing, the dataflow fails to start and the logs show a "read-only file system" error.

Then, when configuring your local storage dataflow endpoint, input the PVC name under persistentVolumeClaimRef.

Finally, when you create the dataflow, the data destination parameter must match the spec.path parameter you created for your subvolume during configuration.
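The relationship between the dataflow destination and the subvolume path can be sketched as follows. This fragment assumes that the destination stage of a Dataflow manifest uses `destinationSettings` with `endpointRef` and `dataDestination` fields. Those names, and the endpoint name shown, are illustrative assumptions rather than confirmed schema.

```yaml
# Fragment of a Dataflow spec, not a complete manifest.
# Field names are assumed; verify against your Dataflow CRD.
spec:
  operations:
    - operationType: Destination
      destinationSettings:
        endpointRef: <LOCAL_STORAGE_ENDPOINT_NAME>  # the local storage dataflow endpoint
        # Must be identical to the spec.path value set on the subvolume.
        dataDestination: <SUBVOLUME_PATH>
```

If the two values differ, the dataflow writes outside the directory that the subvolume watches, so the data isn't picked up for cloud ingest.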

Next steps

To learn more about dataflows, see Create a dataflow.