Built-in Health Safeguards

Important

This feature is currently in Public Preview.

Healthcare agent service with generative AI provides built-in Health Safeguards for building copilot experiences that fit healthcare’s unique requirements and needs. These include:

- Chat safeguards include customizable AI-related disclaimers that are incorporated into the chat experience presented to users, collection of end-user feedback, engagement analysis through built-in dedicated reporting, and healthcare-adapted abuse monitoring, among other things.

- Clinical safeguards include healthcare-adapted filters and quality checks that allow verification of the clinical evidence associated with answers, identification of hallucinations and omissions in generative answers, enforcement of credible sources, and more.

- Compliance safeguards include built-in Data Subject Rights (DSRs), pre-built consent management, out-of-the-box audit trails, and more.

Healthcare Chat Safeguards

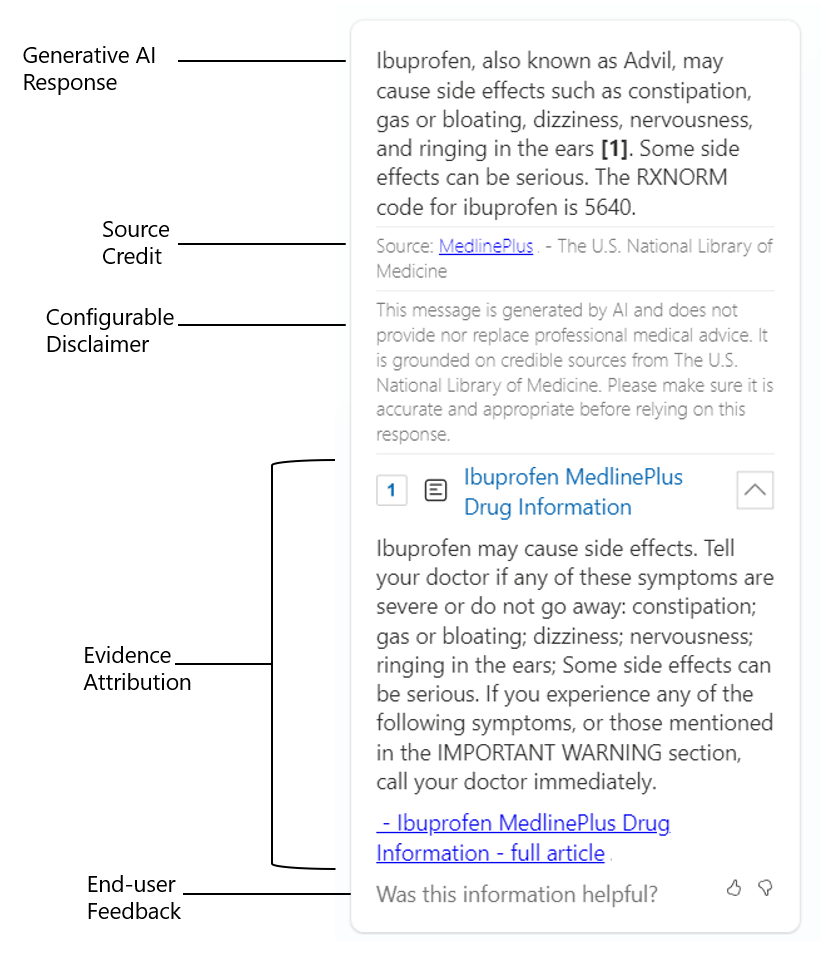

Every answer generated by a large language model (LLM) is shown through our Healthcare Chat Safeguards. The chat safeguards consist of 7 different elements, including:

- Generative AI response: the generated answer coming from the LLM.

- Source Credit: The source of the information. This can be one of our built-in credible sources, such as CDC, FDA, or MSD Manuals, among others. It can also be a combination of multiple credible sources.

- Configurable Disclaimer: The required disclaimer. It can be configured for customer sources (documents/websites) but is not configurable for our credible sources.

- Evidence Attribution: The source information that is used to augment the generative AI response.

If the evidence is based on documents, the relevant section text will be shown when expanded.

If the evidence is based on websites, the relevant snippet and URL from the webpage will be shown when expanded.

- End-user Feedback: Feedback mechanism for the end-user to provide feedback on the generated response.
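To make the relationship between these visible elements concrete, the following is a minimal sketch of how they could be modeled as a data structure. It is not the service's API: the class names `Evidence` and `SafeguardedAnswer`, their fields, and the helper `expanded_evidence` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Evidence:
    # Hypothetical shape: a document section or a website snippet.
    source_type: str            # "document" or "website"
    text: str                   # relevant section text or snippet
    url: Optional[str] = None   # only expected for website evidence


@dataclass
class SafeguardedAnswer:
    # Hypothetical container for the visible elements listed above.
    response: str                   # generative AI response
    source_credit: List[str]        # one or more sources
    disclaimer: str                 # configurable for customer sources only
    evidence: List[Evidence] = field(default_factory=list)
    feedback: Optional[str] = None  # end-user feedback, if any was given


def expanded_evidence(ev: Evidence) -> str:
    """Return the text an expanded evidence entry would show."""
    if ev.source_type == "website":
        # Website evidence shows the snippet together with its URL.
        return f"{ev.text} ({ev.url})"
    # Document evidence shows the relevant section text.
    return ev.text
```

In this sketch, the document/website distinction described above is the only branching logic: document evidence expands to its section text, while website evidence expands to the snippet plus its URL.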

Next to these visible Chat Safeguards, there is also support for:

- Health-adapted abuse monitoring: Abuse monitoring in the healthcare agent service is a crucial feature that ensures the bot recognizes and blocks problematic prompts and responses in real time. This is achieved by leveraging the Azure Content Filtering mechanism in combination with our Safeguards.

- Credible Fallback Enforcement: As a customer, you can leverage the Generative Credible Fallback feature. This feature contains information that has been grounded on a set of reliable sources designed to provide trustworthy healthcare answers. Each piece of evidence has its specific credible source associated with it to ensure the accuracy and dependability of the information provided.

Clinical Safeguards

Clinical Safeguards in the healthcare agent service ensure the quality and accuracy of AI-generated content in the healthcare domain. These safeguards are designed to address the unique complexities and regulations of healthcare, providing a suite of measures tailored for this field. Every answer is checked against several safeguards that are managed by the platform. In the public preview, we have enabled the following safeguards:

- Evidence verification: Assesses the clinical entailment of answers against the provided evidence. This ensures that the evidence actually answers the user's question and verifies its relevance, existence, and authenticity, thereby preventing fabricated links. Additionally, the evidence is ranked by its significance within the context of the query.

- Provenance: Verifies that a relation exists between the generated output text and the input text, and establishes meaningful links between them.

- Hallucination detection: Identifies and rectifies hallucinations based on an original input text and a transformed generative AI answer.

- Clinical Code verification: Checks the authenticity of clinical coding in AI-generated text, preventing the fabrication of clinical information.

All the Clinical Safeguards are seamlessly integrated via the Chat Safeguards.
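As an illustration of the idea behind clinical code verification, the sketch below flags code-like tokens in a generated answer that do not appear in a known code set. This is an assumption-laden toy, not the platform's implementation: the function `find_unverified_codes`, the simplified ICD-10-like pattern, and the caller-supplied `known_codes` set are all hypothetical.

```python
import re
from typing import List, Set

# Illustrative pattern only: one uppercase letter, two digits, and an
# optional ".x" suffix of up to four digits. Real coding systems are
# more varied than this.
CODE_PATTERN = re.compile(r"\b[A-Z]\d{2}(?:\.\d{1,4})?\b")


def find_unverified_codes(answer: str, known_codes: Set[str]) -> List[str]:
    """Return code-like tokens in the answer that are not in known_codes."""
    found = CODE_PATTERN.findall(answer)
    return [code for code in found if code not in known_codes]
```

Any token returned by this function would be a candidate fabricated code. A real check would rely on an authoritative code system rather than a hand-built set.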

Compliance Safeguards

The healthcare agent service is a platform that has over 50 global and regional certifications, governed by Azure Global Compliance.

Next to these certifications, the platform also provides different Compliance Safeguards, such as:

- Audit Trails: The audit trails page contains all of the audit logs of the healthcare agent service.

- Consent Management: End users are prompted to explicitly consent to terms when they begin a new interaction with your bot.

- Conversation Data Retention: Customers can opt in to automatic deletion of conversation log data after a chosen retention period (one day, two days, ... up to 180 days).
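The retention rule described above can be sketched as a simple age check. This is a minimal illustration under stated assumptions, not the service's actual deletion logic: the function `is_expired` and the constants are hypothetical, and the 1 to 180 day bounds are taken from the range mentioned above.

```python
from datetime import datetime, timedelta, timezone

# Bounds taken from the retention range described above (assumed inclusive).
MIN_RETENTION_DAYS = 1
MAX_RETENTION_DAYS = 180


def is_expired(logged_at: datetime, retention_days: int, now: datetime) -> bool:
    """Return True if a conversation log is older than the retention period."""
    if not MIN_RETENTION_DAYS <= retention_days <= MAX_RETENTION_DAYS:
        raise ValueError("retention_days must be between 1 and 180")
    return now - logged_at > timedelta(days=retention_days)
```

For example, a log written on 1 January and checked on 10 January would count as expired under a 7-day retention period but not under a 30-day one.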