Prepare data for Foundation Model Fine-tuning

Important

This feature is in Public Preview in the following regions: centralus, eastus, eastus2, northcentralus, and westus.

This article describes the accepted training and evaluation data file formats for Foundation Model Fine-tuning (now part of Mosaic AI Model Training).

Notebook: Data validation for training runs

The following notebook shows how to validate your data. It is designed to run independently before you begin training. It validates that your data is in the correct format for Foundation Model Fine-tuning and includes code to help you estimate costs during the training run by tokenizing your raw dataset.

Validate data for training runs notebook

Prepare data for chat completion

For chat completion tasks, chat-formatted data must be in a .jsonl file, where each line is a separate JSON object representing a single chat session. Each chat session is represented as a JSON object with a single key, messages, that maps to an array of message objects. To train on chat data, provide the task_type = 'CHAT_COMPLETION' when you create your training run.

Messages in chat format are automatically formatted according to the model’s chat template, so there is no need to add special chat tokens to manually signal the beginning or end of a chat turn. An example of a model that uses a custom chat template is Meta Llama 3.1 8B Instruct.

Each message object in the array represents a single message in the conversation and has the following structure:

role: A string indicating the author of the message. Possible values aresystem,user, andassistant. If the role issystem, it must be the first chat in the messages list. There must be at least one message with the roleassistant, and any messages after the (optional) system prompt must alternate roles between user/assistant. There must not be two adjacent messages with the same role. The last message in themessagesarray must have the roleassistant.content: A string containing the text of the message.

Note

Mistral models do not accept system roles in their data formats.

The following is a chat-formatted data example:

{

"messages": [

{ "role": "system", "content": "A conversation between a user and a helpful assistant." },

{ "role": "user", "content": "Hi there. What's the capital of the moon?" },

{

"role": "assistant",

"content": "This question doesn't make sense as nobody currently lives on the moon, meaning it would have no government or political institutions. Furthermore, international treaties prohibit any nation from asserting sovereignty over the moon and other celestial bodies."

}

]

}

Prepare data for continued pre-training

For continued pre-training tasks, the training data is your unstructured text data. The training data must be in a Unity Catalog volume containing .txt files. Each .txt file is treated as a single sample. If your .txt files are in a Unity Catalog volume folder, those files are also obtained for your training data. Any non-txt files in the volume are ignored. See Upload files to a Unity Catalog volume.

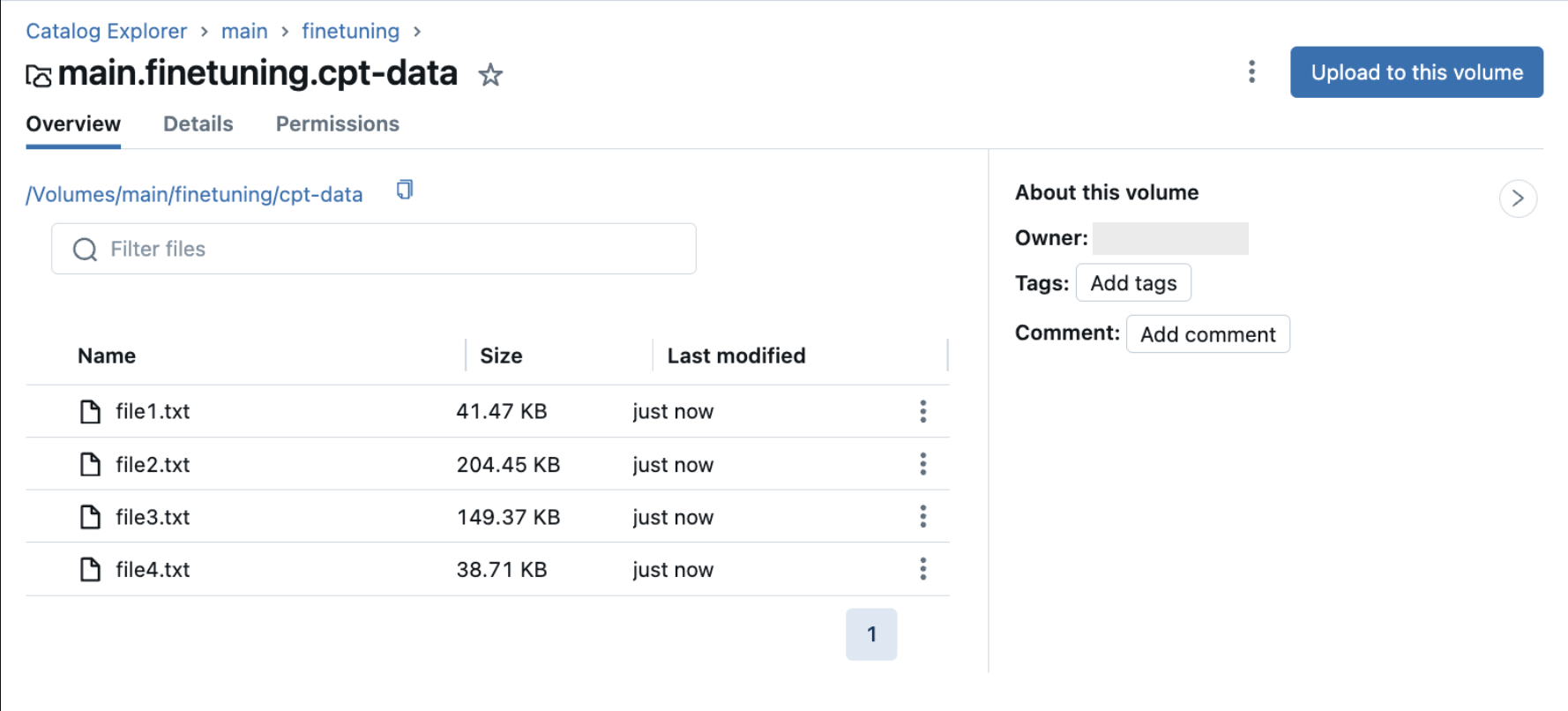

The following image shows example .txt files in a Unity Catalog volume. To use this data in your continued pre-training run configuration, set train_data_path = "dbfs:/Volumes/main/finetuning/cpt-data" and set task_type = 'CONTINUED_PRETRAIN'.

Format data yourself

Warning

The guidance in this section is not recommended, but available for scenarios where custom data formatting is required.

Databricks strongly recommends using chat-formatted data so the proper formatting is automatically applied to your data based on the model you are using.

Foundation Model Fine-tuning allows you to do data formatting yourself. Any data formatting must be applied when training and serving your model. To train your model using your formatted data, set task_type = 'INSTRUCTION_FINETUNE' when you create your training run.

The training and evaluation data must be in one of the following schemas:

Prompt and response pairs.

{ "prompt": "your-custom-prompt", "response": "your-custom-response" }Prompt and completion pairs.

{ "prompt": "your-custom-prompt", "completion": "your-custom-response" }

Important

Prompt-response and prompt-completion are not templated, so any model-specific templating, such as Mistral’s instruct formatting must be performed as a preprocessing step.

Supported data formats

The following are supported data formats:

A Unity Catalog Volume with a

.jsonlfile. The training data must be in JSONL format, where each line is a valid JSON object. The following example shows a prompt and response pair example:{ "prompt": "What is Databricks?", "response": "Databricks is a cloud-based data engineering platform that provides a fast, easy, and collaborative way to process large-scale data." }A Delta table that adheres to one of the accepted schemas mentioned above. For Delta tables, you must provide a

data_prep_cluster_idparameter for data processing. See Configure a training run.A public Hugging Face dataset.

If you use a public Hugging Face dataset as your training data, specify the full path with the split, for example,

mosaicml/instruct-v3/train and mosaicml/instruct-v3/test. This accounts for datasets that have different split schemas. Nested datasets from Hugging Face are not supported.For a more extensive example, see the

mosaicml/dolly_hhrlhfdataset on Hugging Face.The following example rows of data are from the

mosaicml/dolly_hhrlhfdataset.{"prompt": "Below is an instruction that describes a task. Write a response that appropriately completes the request. ### Instruction: what is Databricks? ### Response: ","response": "Databricks is a cloud-based data engineering platform that provides a fast, easy, and collaborative way to process large-scale data."} {"prompt": "Below is an instruction that describes a task. Write a response that appropriately completes the request. ### Instruction: Van Halen famously banned what color M&Ms in their rider? ### Response: ","response": "Brown."}