Apply AI on data using Azure Databricks AI Functions

Important

This feature is in Public Preview.

This article describes Azure Databricks AI Functions and the supported functions.

What are AI Functions?

AI Functions are built-in functions that you can use to apply AI, such as text translation or sentiment analysis, to data stored on Databricks. You can run them from anywhere on Databricks, including Databricks SQL, notebooks, Delta Live Tables, and Workflows.

AI Functions are simple to use, fast, and scalable. Analysts can use them to apply data intelligence to their proprietary data, while data scientists and machine learning engineers can use them to build production-grade batch pipelines.

AI Functions provide general purpose and task-specific functions.

- ai_query is a general-purpose function that allows you to apply any type of AI model to your data. See General purpose function: ai_query.

- Task-specific functions provide high-level AI capabilities for tasks like summarizing text and translation. These task-specific functions are powered by state-of-the-art generative AI models that are hosted and managed by Databricks. See Task-specific AI functions for supported functions and models.

General purpose function: ai_query

The ai_query() function allows you to apply any AI model to data for both generative AI and classical ML tasks, including extracting information, summarizing content, identifying fraud, and forecasting revenue.

The following table summarizes the supported model types, the associated models and their requirements.

| Type | Supported models | Requirements |
|---|---|---|
| Databricks-hosted foundation models | These models are made available using Foundation Model APIs. | Requires no endpoint provisioning or configuration. |
| Fine-tuned foundation models | Fine-tuned foundation models deployed on Mosaic AI Model Serving | Requires you to create a provisioned throughput endpoint in Model Serving. See Batch inference using custom models or fine-tuned foundation models. |
| Foundation models hosted outside of Databricks | Models made available using external models. See Access foundation models hosted outside of Databricks. | Requires you to create an external model serving endpoint. |
| Custom traditional ML and DL models | Any traditional ML or DL model, such as scikit-learn, xgboost, or PyTorch | Requires you to create a custom model serving endpoint. |

Use ai_query with foundation models

The following example demonstrates how to use ai_query with a foundation model hosted by Databricks. For syntax details and parameters, see ai_query function.

```sql
-- The instruction is prepended to each row's text column before it is sent to the model
SELECT text, ai_query(
    "databricks-meta-llama-3-3-70b-instruct",
    "Summarize the given text comprehensively, covering key points and main ideas concisely while retaining relevant details and examples. Ensure clarity and accuracy without unnecessary repetition or omissions: " || text
  ) AS summary
FROM uc_catalog.schema.table;
```

Use ai_query with traditional ML models

ai_query supports traditional ML models, including fully custom ones. These models must be deployed on Model Serving endpoints. For syntax details and parameters, see ai_query function.

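```sql
SELECT text, ai_query(
    endpoint => "spam-classification",
    -- Build the request struct that is sent to the serving endpoint
    request => named_struct(
      "timestamp", timestamp,
      "sender", from_number,
      "text", text),
    -- Interpret the endpoint's response as a BOOLEAN
    returnType => "BOOLEAN") AS is_spam
FROM catalog.schema.inbox_messages
LIMIT 10;
```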
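When the request struct has many fields, writing the named_struct call by hand gets error-prone. The following is a minimal sketch, assuming you build the expression in Python and pass it to DataFrame.selectExpr or spark.sql. The helpers sql_lit, named_struct_expr, and ai_query_custom_expr are hypothetical, not part of any Databricks API; the endpoint name, field mapping, and return type are copied from the SQL example above.

```python
from typing import Mapping


def sql_lit(value: str) -> str:
    """Hypothetical helper: quote a Python string as a Spark SQL string literal."""
    # Escape backslashes first, then single quotes, using Spark SQL backslash escapes.
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def named_struct_expr(fields: Mapping[str, str]) -> str:
    """Hypothetical helper: build a named_struct(...) call.

    Keys become the struct's field names (emitted as string literals);
    values are column names or SQL expressions and are inserted unchanged.
    """
    parts = [f"{sql_lit(name)}, {column}" for name, column in fields.items()]
    return "named_struct(" + ", ".join(parts) + ")"


def ai_query_custom_expr(endpoint: str, fields: Mapping[str, str],
                         return_type: str, alias: str) -> str:
    """Hypothetical helper: build an ai_query(...) call for a custom endpoint."""
    return (
        f"ai_query(endpoint => {sql_lit(endpoint)}, "
        f"request => {named_struct_expr(fields)}, "
        f"returnType => {sql_lit(return_type)}) AS {alias}"
    )


# Same endpoint, request fields, and return type as the SQL example.
expr = ai_query_custom_expr(
    endpoint="spam-classification",
    fields={"timestamp": "timestamp", "sender": "from_number", "text": "text"},
    return_type="BOOLEAN",
    alias="is_spam",
)
# In a notebook, assuming df has the columns timestamp, from_number, and text:
# df_spam = df.selectExpr("text", expr)
```

Keeping the field mapping in a Python dictionary makes it easier to keep the request in sync with the input schema your served model expects.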

Task-specific AI functions

Task-specific functions are each scoped to a single task, so you can use them to automate routine work such as simple summaries and quick translations. These functions invoke a state-of-the-art generative AI model maintained by Databricks and do not require any customization.

See Analyze customer reviews using AI Functions for an example.

The following table lists the supported functions and the task each one performs.

| Function | Description |
|---|---|
| ai_analyze_sentiment | Perform sentiment analysis on input text using a state-of-the-art generative AI model. |
| ai_classify | Classify input text according to labels you provide using a state-of-the-art generative AI model. |
| ai_extract | Extract entities specified by labels from text using a state-of-the-art generative AI model. |
| ai_fix_grammar | Correct grammatical errors in text using a state-of-the-art generative AI model. |
| ai_gen | Answer the user-provided prompt using a state-of-the-art generative AI model. |
| ai_mask | Mask specified entities in text using a state-of-the-art generative AI model. |
| ai_similarity | Compare two strings and compute the semantic similarity score using a state-of-the-art generative AI model. |
| ai_summarize | Generate a summary of text using SQL and a state-of-the-art generative AI model. |
| ai_translate | Translate text to a specified target language using a state-of-the-art generative AI model. |
| ai_forecast | Forecast data up to a specified horizon. This table-valued function is designed to extrapolate time series data into the future. |
| vector_search | Search and query a Mosaic AI Vector Search index using a state-of-the-art generative AI model. |
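Several of the text functions above take the text as their first argument, and some also take a list of labels or a target language. As a minimal sketch, the following Python builds selectExpr strings that apply a few of these tasks in one pass over a DataFrame. The helpers sql_lit, sql_array, and task_exprs, the text column, and the example labels are assumptions for illustration; check each function's reference page for its exact arguments.

```python
from typing import Sequence


def sql_lit(value: str) -> str:
    """Hypothetical helper: quote a Python string as a Spark SQL string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def sql_array(values: Sequence[str]) -> str:
    """Hypothetical helper: render strings as a Spark SQL ARRAY(...) literal."""
    return "ARRAY(" + ", ".join(sql_lit(v) for v in values) + ")"


def task_exprs(column: str, labels: Sequence[str], target_lang: str) -> list[str]:
    """Hypothetical helper: one selectExpr string per task-specific function."""
    return [
        column,  # keep the original text next to the results
        f"ai_analyze_sentiment({column}) AS sentiment",
        f"ai_classify({column}, {sql_array(labels)}) AS category",
        f"ai_translate({column}, {sql_lit(target_lang)}) AS translated",
        f"ai_summarize({column}) AS summary",
    ]


exprs = task_exprs("text", ["billing", "shipping", "product"], "es")
# In a notebook, assuming df has a string column named text:
# df_tasks = df.selectExpr(*exprs)
```

Each expression is evaluated separately for every row, so every task you add increases the inference work for the query.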

Use AI Functions in existing Python workflows

AI Functions can be easily integrated into existing Python workflows.

The following example writes the output of ai_query to an output table:

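```python
# Assumes df is an existing DataFrame with a string column named text.
df_out = df.selectExpr(
    "ai_query('databricks-meta-llama-3-3-70b-instruct', "
    "CONCAT('Please provide a summary of the following text: ', text), "
    "modelParameters => named_struct('max_tokens', 100, 'temperature', 0.7)) AS summary"
)

# Write the results to a table, replacing any existing contents.
df_out.write.mode("overwrite").saveAsTable("output_table")
```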

The following example writes the summarized text to a table:

```python
# Summarize each row's text column, then save the results as a table.
df_summary = df.selectExpr("ai_summarize(text) AS summary")
df_summary.write.mode("overwrite").saveAsTable("summarized_table")
```
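If you reuse one prompt across several jobs, a small helper can keep the string quoting and the modelParameters struct in one place instead of a long hand-written expression. This is a minimal sketch; sql_lit, model_params_expr, and ai_query_prompt_expr are hypothetical helpers, and only numeric parameters such as max_tokens and temperature (taken from the first example in this section) are handled.

```python
from typing import Mapping, Optional, Union

Number = Union[int, float]


def sql_lit(value: str) -> str:
    """Hypothetical helper: quote a Python string as a Spark SQL string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def model_params_expr(params: Mapping[str, Number]) -> str:
    """Hypothetical helper: render numeric model parameters as named_struct(...)."""
    parts = [f"{sql_lit(name)}, {value!r}" for name, value in params.items()]
    return "named_struct(" + ", ".join(parts) + ")"


def ai_query_prompt_expr(endpoint: str, instruction: str, column: str,
                         alias: str,
                         params: Optional[Mapping[str, Number]] = None) -> str:
    """Hypothetical helper: ai_query(...) that prefixes each row's text with a prompt."""
    args = [sql_lit(endpoint), f"CONCAT({sql_lit(instruction)}, {column})"]
    if params:
        # Passed as a named argument, as in the selectExpr example above.
        args.append(f"modelParameters => {model_params_expr(params)}")
    return f"ai_query({', '.join(args)}) AS {alias}"


expr = ai_query_prompt_expr(
    endpoint="databricks-meta-llama-3-3-70b-instruct",
    instruction="Please provide a summary of the following text: ",
    column="text",
    alias="summary",
    params={"max_tokens": 100, "temperature": 0.7},
)
# In a notebook, assuming df has a string column named text:
# df_out = df.selectExpr(expr)
```

With these arguments the helper produces the same expression as the first example in this section, so you can change the prompt or parameters without re-editing the SQL string.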

Use AI Functions in production workflows

For large-scale batch inference, ai_query can be integrated with production workflows, such as Databricks Workflows and Structured Streaming. This enables production-grade processing at scale. See Perform batch LLM inference using AI Functions for more details.

Monitor AI Functions progress

To see how many inferences have completed or failed, and to troubleshoot performance, you can monitor the progress of AI Functions using the query profile feature.

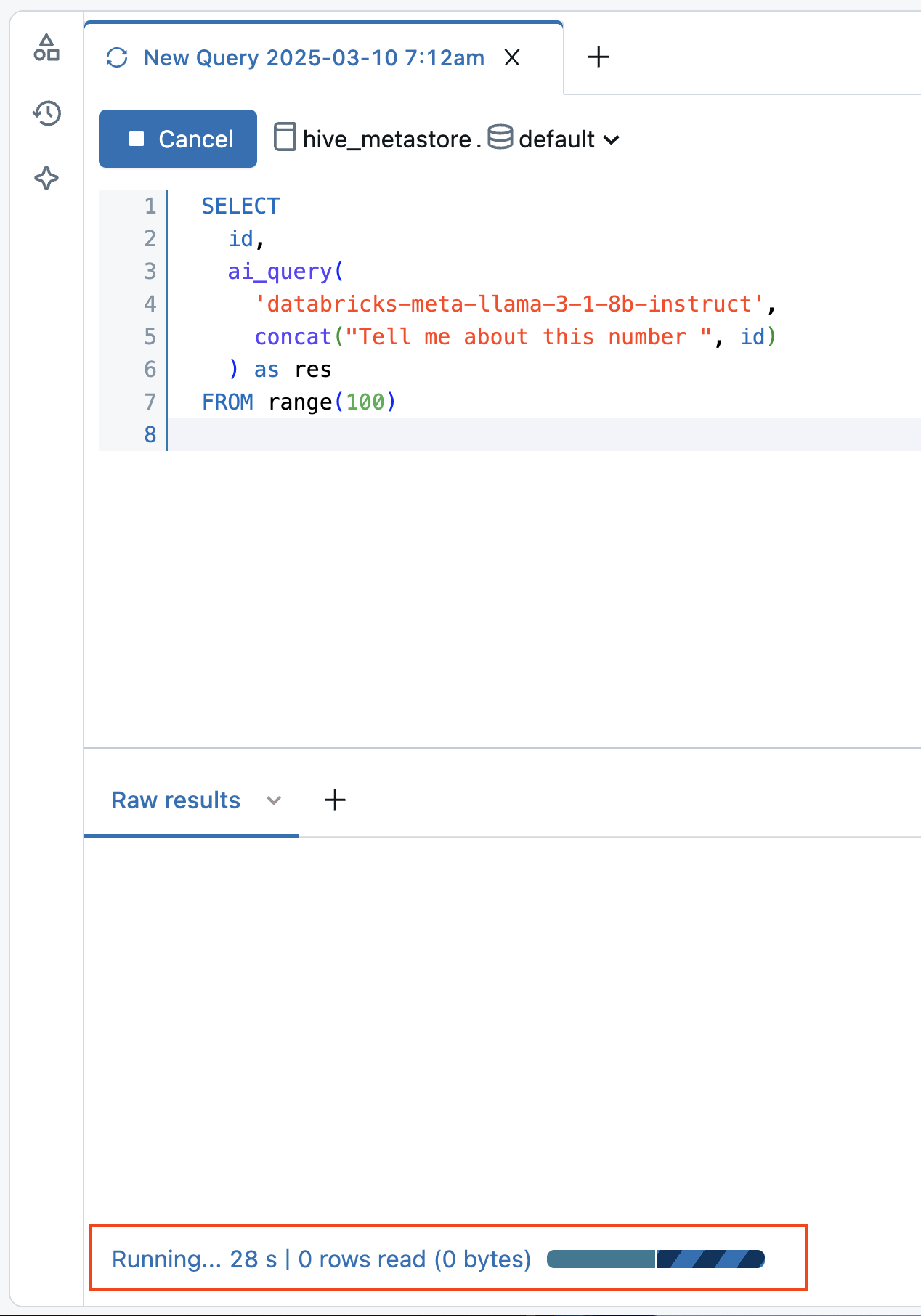

Complete the following steps in the SQL editor query window of your workspace:

- Select the Running--- link at the bottom of the Raw results window. The performance window appears on the right.

- Click See query profile to view performance details.

- Click AI Query to see metrics for that query, including the number of completed and failed inferences and the total time the request took to complete.