Introduction to generative AI apps on Databricks

Mosaic AI is a code-first platform for building, evaluating, deploying, and monitoring generative AI applications (gen AI apps). It integrates with popular open-source frameworks, while adding enterprise-grade governance, observability, and operational tooling, collectively known as LLMOps.

Open source support in Mosaic AI

Mosaic AI complements but doesn't replace existing open-source gen AI libraries and SDKs, such as:

- OpenAI

- LangChain

- LangGraph

- AutoGen

- LlamaIndex

- CrewAI

- Semantic Kernel

- DSPy

By providing built-in logging, monitoring, robust infrastructure, and secure deployment options, Mosaic AI helps you use these frameworks to build high-quality gen AI apps at enterprise scale. Importantly, whether you build and host your gen AI app on Databricks or elsewhere, you can still use Mosaic AI evaluation and monitoring capabilities. For teams that prefer more granular, direct control, Mosaic AI also supports custom gen AI apps built from the ground up using only Python, with no framework required.

What are gen AI apps?

A gen AI app is an application that uses generative AI models (such as LLMs, image generation models, and text-to-speech models) to create new outputs, automate complex tasks, or engage in intelligent interactions based on user input. While gen AI apps can use various models, this guide concentrates on applications powered by LLMs.

While LLM-powered gen AI apps can be built in different ways, they generally fall under one of two architectural patterns:

| | Type 1: Monolithic LLM + prompt | Type 2 (recommended): Agent system |
|---|---|---|
| What is it? | A single LLM with carefully designed prompts. | Multiple interacting components (LLM calls, retrievers, API calls) orchestrated together - ranging from simple chains to sophisticated multi-agent systems. |
| Example use case | Content classification: Using an LLM to categorize customer support tickets into predefined topics. | Intelligent assistant: Combining document retrieval, multiple LLM calls, and external APIs to research, analyze, and generate comprehensive reports. |
| Best for | Simple, focused tasks, quick prototypes, and clear, well-defined prompts. | Complex workflows, tasks requiring multiple capabilities, and tasks requiring reflection on previous steps. |
| Key benefits | Simpler implementation, faster development, and lower operational complexity. | More reliable and maintainable, better control and flexibility, easier to test and verify, and component-level optimization. |
| Limitations | Less flexible, harder to optimize, and limited functionality. | More complex implementation, more initial setup, and needs component coordination. |

For most enterprise use cases, Databricks recommends an agent system. By breaking systems into smaller, well-defined components, developers can better manage complexity while maintaining high levels of control and compliance required for enterprise applications.

Mosaic AI provides tooling and capabilities that work for both monolithic systems and agent systems, and the rest of this documentation covers building both types of gen AI apps.

To read more about the theory behind agent systems versus monolithic models, see blog posts from the Databricks founders:

- AI agent systems: Modular Engineering for Reliable Enterprise AI Applications

- The Shift from Models to Compound AI Systems

What is an agent system?

An agent system is an AI-driven system that can autonomously perceive, decide, and act in an environment to achieve goals. Unlike a standalone LLM that only produces an output when prompted, an agent system possesses a degree of agency. Modern LLM-based agent systems use an LLM as the “brain” to interpret context, reason about what to do next, and issue actions such as API calls, retrieval mechanisms, and tool invocations to carry out tasks.

An agent system is simply a system with an LLM at its core. That system:

- Receives user requests or messages from another agent.

- Reasons about how to proceed: which data to fetch, which logic to apply, which tools to call, or whether to request more input from the user.

- Executes a plan and possibly calls multiple tools or delegates to sub-agents.

- Returns an answer or prompts the user for additional clarification.
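The four steps above can be sketched as a small loop. This is only an illustration under stated assumptions: `stub_llm`, `Decision`, `TOOLS`, and the `lookup` tool are hypothetical stand-ins, and a real system would replace `stub_llm` with an actual model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Decision:
    """What the (stubbed) model wants to do next."""
    action: str                      # "call_tool", "ask_user", or "answer"
    tool: str = ""
    args: dict = field(default_factory=dict)
    text: str = ""


def stub_llm(request: str, observations: list[str]) -> Decision:
    """Hypothetical stand-in for an LLM call that reasons about the next step."""
    if not request.strip():
        return Decision(action="ask_user", text="What can I help you with?")
    if not observations:
        return Decision(action="call_tool", tool="lookup", args={"query": request})
    return Decision(action="answer", text=f"Based on {observations[-1]}: done.")


# Hypothetical tool registry; each tool is a plain function.
TOOLS: dict[str, Callable[..., str]] = {
    "lookup": lambda query: f"record for {query}",
}


def run_agent(request: str, max_steps: int = 5) -> str:
    observations: list[str] = []                  # state carried between steps
    for _ in range(max_steps):
        decision = stub_llm(request, observations)            # reason
        if decision.action == "call_tool":                    # execute
            observations.append(TOOLS[decision.tool](**decision.args))
            continue
        return decision.text                      # answer or ask for clarification
    return "Sorry, I could not complete the request."
```

The `max_steps` bound is a design choice of this sketch: it keeps the loop from running indefinitely if the model never settles on an answer.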

By bridging general intelligence (the LLM’s pretrained capabilities) and data intelligence (the specialized knowledge and APIs specific to your business), agent systems enable high-impact enterprise use cases such as advanced customer service flows, data-rich analytics bots, and multi-agent orchestration for complex operational tasks.

What can an agent system do?

An agent system can:

- Dynamically plan actions

- Carry state from one step to the next

- Adjust its strategy based on new information, without continuous human intervention

Where a standalone LLM might output a travel itinerary when asked, an agent system could retrieve customer information and actually go and book the flights by leveraging tools and APIs autonomously. By combining “general intelligence” from the LLM with “data intelligence” (domain-specific data or APIs), agent systems can tackle sophisticated enterprise use cases that a single static model would struggle to solve.

Agency is a continuum; the more freedom you provide models to control the behavior of the system, the more agentic the application becomes. In practice, most production systems carefully constrain the agent’s autonomy to ensure compliance and predictability, for example by requiring human approval for risky actions.
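One way to constrain autonomy is to gate risky actions behind an approval callback. The sketch below is illustrative only; the action names in `RISKY_ACTIONS` and the `approve` callback are assumptions, not part of any Mosaic AI API.

```python
from typing import Callable

# Hypothetical action names that should never run without sign-off.
RISKY_ACTIONS = {"issue_refund", "delete_account"}


def execute_action(
    action: str,
    run: Callable[[], str],
    approve: Callable[[str], bool],
) -> str:
    """Run the action directly unless it is risky and a human declines it."""
    if action in RISKY_ACTIONS and not approve(action):
        return f"'{action}' was not approved; escalating to a human."
    return run()
```

Widening or narrowing `RISKY_ACTIONS` moves the application along the continuum: an empty set gives the model full control, while listing every action makes each step human-approved.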

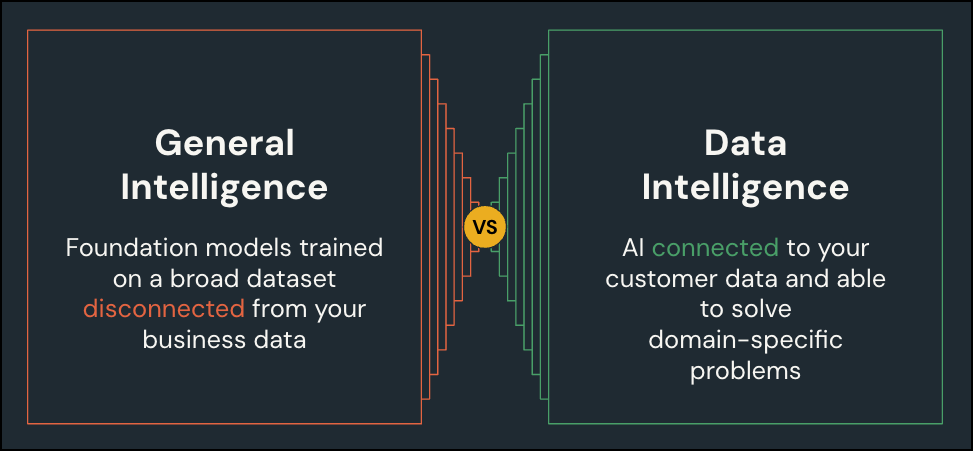

General intelligence vs. data intelligence

- General intelligence: Refers to what the LLM inherently knows from broad pretraining on diverse text. This is useful for language fluency and general reasoning.

- Data intelligence: Refers to your organization’s domain-specific data and APIs. This might include customer records, product information, knowledge bases, or documents that reflect your unique business environment.

Agent systems blend these two perspectives: They start with an LLM’s broad, generic knowledge, then bring in real-time or domain-specific data to answer detailed questions or perform specialized actions.

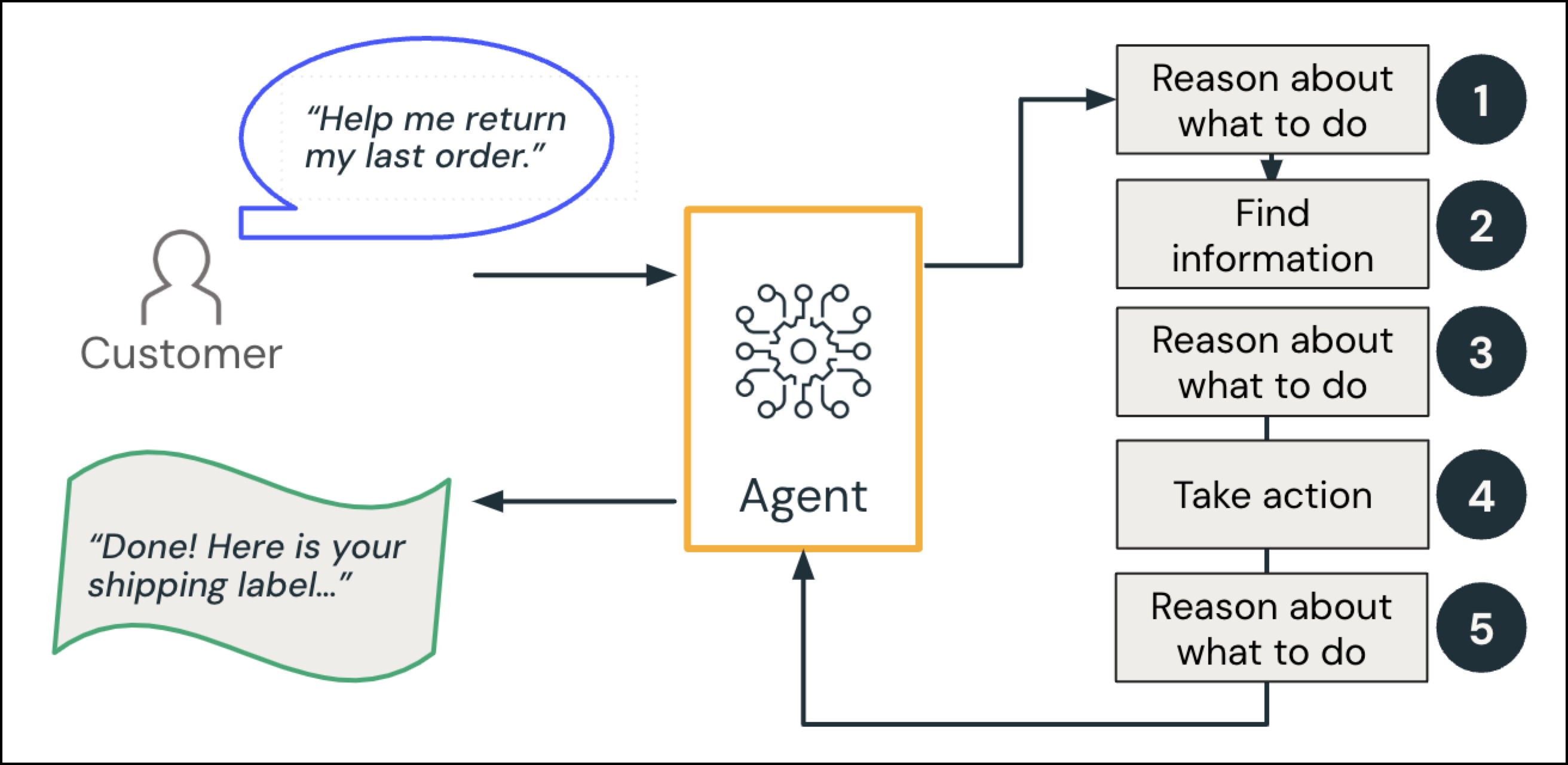

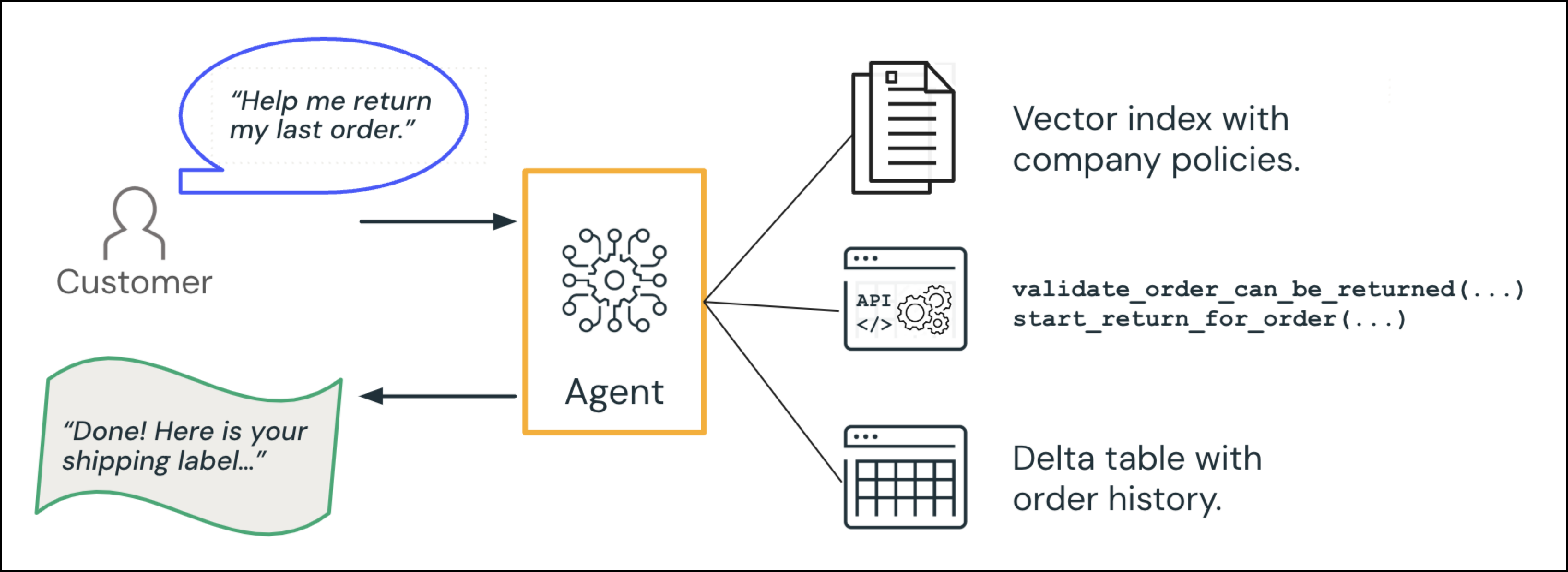

Example agent system

Consider a call center scenario between a customer and a gen AI agent:

The customer makes a request: “Can you help me return my last order?”

- Reason and plan: Given the intent of the query, the agent “plans”: “Look up the user’s recent order and check our return policy.”

- Find information (data intelligence): The agent queries the order database to retrieve the relevant order and references a policy document.

- Reason: The agent checks whether that order fits within the return window.

- Optional human-in-the-loop: The agent checks an additional rule: If the item is a certain category or outside the normal return window, escalate to a human.

- Action: The agent triggers the return process and generates a shipping label.

- Reason: The agent generates a response to the customer.

The AI agent responds to the customer: “Done! Here is your shipping label…”

In a human call center context, these steps are second nature. In an agent system context, the LLM “reasons” while the system calls on specialized tools or data sources to fill in the details.
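The return scenario can be sketched in code to show where data lookups, reasoning, and actions sit. Everything here is a hypothetical illustration: the 30-day `RETURN_WINDOW`, the `ESCALATE_CATEGORIES` rule, the in-memory `ORDERS` table, and the label format are assumptions, and the reasoning steps that an LLM would perform are replaced by plain Python conditions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

RETURN_WINDOW = timedelta(days=30)        # assumed policy value
ESCALATE_CATEGORIES = {"electronics"}     # assumed escalation rule


@dataclass
class Order:
    order_id: str
    category: str
    delivered_on: date


# Stand-in for the order database (data intelligence).
ORDERS = {
    "alice": Order("A-100", "books", date(2024, 5, 1)),
    "bob": Order("B-200", "electronics", date(2024, 5, 1)),
}


def handle_return(customer: str, today: date) -> str:
    order = ORDERS.get(customer)                              # find information
    if order is None:
        return "I could not find a recent order for you."
    in_window = today - order.delivered_on <= RETURN_WINDOW   # reason
    if order.category in ESCALATE_CATEGORIES or not in_window:
        # Optional human-in-the-loop step.
        return f"Order {order.order_id} needs review; connecting you to a human agent."
    label = f"LABEL-{order.order_id}"                         # action
    return f"Done! Here is your shipping label: {label}"
```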

Levels of complexity: From LLMs to agent systems

When building any LLM-powered application, start simple. A solution that gets you 80% of the way there often involves a single LLM call enhanced by retrieval or carefully engineered prompts. Introduce more complex agentic behaviors (like dynamic tool-calling or multi-agent orchestration) only when you truly need them for better flexibility or model-driven decisions. Deterministic chains offer predictable, rule-based flows for well-defined tasks, while more agentic approaches come at the cost of extra complexity and potential latency.

You might encounter several levels of complexity when building AI systems:

- LLMs (LLM + Prompt)

  - A standalone LLM that responds to text prompts using its pretrained knowledge.

  - Good for simple or generic queries, but often disconnected from your real-world business data.

- Hard-coded agent system (“Chain”)

  - You orchestrate using deterministic, pre-defined steps (e.g., always retrieve from a vector store, always combine the result with the user’s question, then call the LLM).

  - The logic is fixed, and the LLM does not decide which tool to call next.

- Tool-calling agent system

  - The LLM automatically selects and calls “tools” at runtime.

  - This approach supports dynamic, context-aware decisions about which tools to invoke, such as a CRM database or a Slack-posting API.

- Multi-agent systems

  - Multiple specialized agents, each with its own function or domain.

  - A coordinator (sometimes an AI supervisor, sometimes rule-based) decides which agent to invoke at each step.

  - Agents can hand tasks off to each other while preserving the overall conversation flow.

Mosaic AI Agent Framework is agnostic to these patterns, making it easy to start simple and evolve toward higher levels of automation and autonomy as your application requirements grow.
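To make two of these levels concrete, the following sketch contrasts a hard-coded chain with a rule-based coordinator over two specialized agents. It is not Mosaic AI Agent Framework code: `retrieve`, `stub_llm`, `billing_agent`, `returns_agent`, and the keyword routing rule are hypothetical stand-ins chosen for illustration.

```python
def retrieve(question: str) -> str:
    """Stand-in for a vector store lookup."""
    return "Returns are accepted within 30 days."


def stub_llm(prompt: str) -> str:
    """Stand-in for an LLM call."""
    return f"ANSWER[{prompt}]"


def chain(question: str) -> str:
    # Hard-coded chain: always retrieve, always combine, then call the LLM.
    context = retrieve(question)
    return stub_llm(f"{context} | {question}")


def billing_agent(message: str) -> str:
    """Specialized agent for billing questions (stubbed)."""
    return f"billing: {message}"


def returns_agent(message: str) -> str:
    """Specialized agent for returns; internally it reuses the chain."""
    return chain(message)


def coordinator(message: str) -> str:
    # Rule-based routing; an AI supervisor could make this choice instead.
    if "invoice" in message.lower():
        return billing_agent(message)
    return returns_agent(message)
```

Because `returns_agent` simply wraps `chain`, the sketch also shows how a simple component can later be placed under a coordinator without being rewritten.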

Tools in an agent system

In the context of an agent system, tools are single-interaction functions that an LLM may invoke to accomplish a well-defined task. The AI model typically generates parameters for each tool call, and the tool provides a straightforward input-output interaction. There is no multi-turn memory on the tool side.

Some common tool categories include:

- Tools that retrieve or analyze data

  - Vector retrieval tools: Query a vector index to locate the most relevant text chunks.

  - Structured retrieval tools: Query Delta tables or use APIs to retrieve structured information.

  - Web search tool: Search the internet or an internal web corpus.

  - Classic ML models: Tools that invoke ML models to perform classification or regression predictions, for example, a scikit-learn or XGBoost model.

  - Gen AI models: Tools that perform specialized generation such as code generation or image generation and return the results.

- Tools that modify the state of an external system

  - API calling tool: CRM endpoints, internal services, or other third-party integrations for tasks such as “update shipping status”.

  - Code execution tool: Runs user-supplied (or in some cases LLM-generated) code in a sandbox.

  - Slack or email integration: Posts a message or sends a notification.

- Tools that execute logic or perform a specific task

  - Code executor tool: Runs user-supplied or LLM-generated code in a sandbox, for example, Python scripts.

To learn more about Mosaic AI agent tools, see AI agent tools.

Key characteristics of tools

Tools in an agent system:

- Perform a single, well-defined operation.

- Do not maintain ongoing context beyond that one invocation.

- Allow the agent system to reach external data or services that the LLM cannot directly access.
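A tool with these characteristics can be as small as one stateless function plus a description the model uses to generate arguments. The sketch below is an assumption-laden illustration: `get_shipping_status`, its in-memory data, and the JSON Schema style `TOOL_SPEC` layout are hypothetical and do not represent a specific Mosaic AI or vendor format.

```python
import json


def get_shipping_status(order_id: str) -> dict:
    """Single, well-defined operation with no memory between calls."""
    statuses = {"A-100": "shipped"}   # stand-in for an external system
    return {"order_id": order_id, "status": statuses.get(order_id, "unknown")}


# Hypothetical description handed to the model so it can generate arguments.
TOOL_SPEC = {
    "name": "get_shipping_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def invoke_tool(arguments_json: str) -> str:
    """Parse model-generated arguments, call the tool, and return JSON text."""
    args = json.loads(arguments_json)
    return json.dumps(get_shipping_status(**args))
```

For example, `invoke_tool('{"order_id": "A-100"}')` returns a JSON string describing the order as shipped, and a second call with the same arguments behaves identically because nothing is remembered between invocations.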

Tool error handling and safety

Because each tool call is an external operation (for example, calling an API), the system should handle failures gracefully, such as time-outs, malformed responses, or invalid inputs. In production, you might limit the number of allowed tool calls, return a fallback response if all tool calls fail, and apply guardrails so that the agent system does not repeatedly attempt the same failing action.
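A minimal sketch of these safeguards follows, assuming the agent has produced a list of planned tool calls. The function name `call_tools_safely`, the `FALLBACK` message, and the default limit of three calls are hypothetical choices for illustration.

```python
from typing import Callable

FALLBACK = "Sorry, I could not complete that request right now."


def call_tools_safely(
    planned_calls: list[tuple[str, dict]],
    tools: dict[str, Callable[..., str]],
    max_calls: int = 3,
) -> str:
    """Try planned tool calls in order, with a call limit and a fallback."""
    failed: set[tuple[str, str]] = set()
    calls_made = 0
    for name, args in planned_calls:
        key = (name, repr(sorted(args.items())))
        if key in failed:
            continue          # guardrail: do not retry the same failing call
        if calls_made >= max_calls:
            break             # limit on the number of allowed tool calls
        calls_made += 1
        try:
            result = tools[name](**args)
            if not isinstance(result, str) or not result:
                raise ValueError("malformed tool response")
            return result
        except (KeyError, TypeError, ValueError, TimeoutError):
            # Unknown tool, invalid inputs, malformed response, or time-out.
            failed.add(key)
    return FALLBACK           # fallback response if all tool calls fail
```

Returning on the first successful result is a simplification; a real agent would usually feed the result back to the model and continue reasoning.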