Create and manage shares for Delta Sharing

This article explains how to create and manage shares for Delta Sharing.

A share is a securable object in Unity Catalog that you can use for sharing the following data assets with one or more recipients:

- Tables and table partitions

- Views, including dynamic views that restrict access at the row and column level

- Volumes

- Notebooks

- AI models

If you share an entire schema (database), the recipient can access all of the tables, views, models, and volumes in the schema at the moment you share it, along with any data and AI assets that are added to the schema in the future.

A share can contain data and AI assets from only one Unity Catalog metastore. You can add or remove data and AI assets from a share at any time.

For more information, see Shares, providers, and recipients.

Requirements

To create a share, you must:

- Be a metastore admin or have the CREATE SHARE privilege for the Unity Catalog metastore where the data you want to share is registered.

- Create the share using an Azure Databricks workspace that has that Unity Catalog metastore attached.

To add tables or views to a share, you must:

- Be the share owner.

- Have the USE CATALOG and USE SCHEMA privilege on the catalog and schema that contain the table or view, or ownership of the catalog or schema.

- Have the SELECT privilege on the table or view. You must keep that privilege in order for the table or view to continue to be shared. If you lose it, the recipient cannot access the table or view through the share. Databricks therefore recommends that you use a group as the share owner.

To add volumes to a share, you must:

- Be the share owner.

- Have the USE CATALOG and USE SCHEMA privilege on the catalog and schema that contain the volume, or ownership of the catalog or schema.

- Have the READ VOLUME privilege on the volume. You must keep that privilege in order for the volume to continue to be shared. If you lose it, the recipient cannot access the volume through the share. Databricks therefore recommends that you use a group as the share owner.

To add models to a share, you must:

- Be the share owner.

- Have the USE CATALOG and USE SCHEMA privilege on the catalog and schema that contain the model, or ownership of the catalog or schema.

- Have the EXECUTE privilege on the model. You must keep that privilege in order for the model to continue to be shared. If you lose it, the recipient cannot access the model through the share. Databricks therefore recommends that you use a group as the share owner.

To share an entire schema, you must:

- Be the share owner and the schema owner, or have USE SCHEMA.

- Have SELECT on the schema to share tables.

- Have READ VOLUME on the schema to share volumes.

To add notebook files to a share, you must be the share owner and have CAN READ permission on the notebook.

To grant recipient access to a share, you must be one of these:

- Metastore admin.

- User with delegated permissions or ownership on both the share and the recipient objects ((USE SHARE + SET SHARE PERMISSION) or share owner) AND (USE RECIPIENT or recipient owner).

To view shares, you must be one of these:

- A metastore admin (can view all)

- A user with the USE SHARE privilege (can view all)

- The share object owner

Compute requirements:

- If you use a Databricks notebook to create the share, your compute resource must use Databricks Runtime 11.3 LTS or above and have a standard or dedicated access mode (formerly shared and single user).

- If you use SQL statements to add a schema to a share (or update or remove a schema), you must use a SQL warehouse or compute running Databricks Runtime 13.3 LTS or above. Doing the same using Catalog Explorer has no compute requirements.

Create a share object

To create a share, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or the CREATE SHARE SQL command in an Azure Databricks notebook or the Databricks SQL query editor.

Permissions required: Metastore admin or user with the CREATE SHARE privilege for the metastore.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, click the Share data button.

On the Create share page, enter the share Name and an optional comment.

Click Save and continue.

You can continue to add data assets, or you can stop and come back later.

On the Add data assets tab, select the tables, volumes, views, and models you want to share.

For detailed instructions, see Add tables to a share, Add views to a share, Add volumes to a share, and Add models to a share.

Click Save and continue.

On the Add notebooks tab, select the notebooks you want to share.

For detailed instructions, see Add notebook files to a share.

Click Save and continue.

On the Add recipients tab, select the recipients you want to share with.

For detailed instructions, see Manage access to Delta Sharing data shares (for providers).

Click Share data to share the data with the recipients.

SQL

Run the following command in a notebook or the Databricks SQL query editor:

CREATE SHARE [IF NOT EXISTS] <share-name>

[COMMENT "<comment>"];

Now you can add tables, volumes, views, and models to the share.

For detailed instructions, see Add tables to a share, Add views to a share, Add volumes to a share, and Add models to a share.

CLI

Run the following command using the Databricks CLI.

databricks shares create <share-name>

You can use --comment to add a comment or --json to add assets to the share. For details, see the sections that follow.

Now you can add tables, volumes, views, and models to the share.

For detailed instructions, see Add tables to a share, Add views to a share, Add volumes to a share, and Add models to a share.

Add tables to a share

To add tables to a share, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or SQL commands in an Azure Databricks notebook or the Databricks SQL query editor.

Note

Table comments, column comments, and primary key constraints are included in shares that are shared with a recipient using Databricks-to-Databricks sharing on or after July 25, 2024. If you want to start sharing comments and constraints through a share that was shared with a recipient before the release date, you must revoke and re-grant recipient access to trigger comment and constraint sharing.

Permissions required: Owner of the share object, USE CATALOG and USE SCHEMA on the catalog and schema that contain the table, and the SELECT privilege on the table. You must maintain the SELECT privilege for as long as you want to share the table. For more information, see Requirements.

Note

If you are a workspace admin and you inherited the USE SCHEMA and USE CATALOG permissions on the schema and catalog that contain the table from the workspace admin group, then you cannot add the table to a share. You must first grant yourself the USE SCHEMA and USE CATALOG permissions on the schema and catalog.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to add a table to and click its name.

Click Manage assets > Add data assets.

On the Add tables page, select either an entire schema (database) or individual tables and views.

To select a table or view, first select the catalog, then the schema that contains the table or view, then the table or view itself.

You can search for tables by name, column name, or comment using workspace search. See Search for workspace objects.

To select a schema, first select the catalog and then the schema.

For detailed information about sharing schemas, see Add schemas to a share.

History: Share the table history to allow recipients to perform time travel queries or read the table with Spark Structured Streaming. For Databricks-to-Databricks shares, the table’s Delta log is also shared to improve performance. See Improve table read performance with history sharing. History sharing requires Databricks Runtime 12.2 LTS or above.

Note

If, in addition to doing time travel queries and streaming reads, you want your customers to be able to query a table’s change data feed (CDF) using the table_changes() function, you must enable CDF on the table before you share it WITH HISTORY.

(Optional) Click Advanced table options to specify the following options. Alias and partitions are not available if you select an entire schema. Table history is included by default if you select an entire schema.

- Alias: An alternate table name to make the table name more readable. The alias is the table name that the recipient sees and must use in queries. Recipients cannot use the actual table name if an alias is specified.

- Partition: Share only part of the table. For example, (column = 'value'). See Specify table partitions to share and Use recipient properties to do partition filtering.

Click Save.

SQL

Run the following command in a notebook or the Databricks SQL query editor to add a table:

ALTER SHARE <share-name> ADD TABLE <catalog-name>.<schema-name>.<table-name> [COMMENT "<comment>"]

[PARTITION(<clause>)] [AS <alias>]

[WITH HISTORY | WITHOUT HISTORY];

Run the following to add an entire schema. The ADD SCHEMA command requires a SQL warehouse or compute running Databricks Runtime 13.3 LTS or above. For detailed information about sharing schemas, see Add schemas to a share.

ALTER SHARE <share-name> ADD SCHEMA <catalog-name>.<schema-name>

[COMMENT "<comment>"];

Options include the following. PARTITION and AS <alias> are not available if you select an entire schema.

- PARTITION(<clause>): If you want to share only part of the table, you can specify a partition. For example, (column = 'value'). See Specify table partitions to share and Use recipient properties to do partition filtering.

- AS <alias>: An alternate table name, or alias, to make the table name more readable. The alias is the table name that the recipient sees and must use in queries. Recipients cannot use the actual table name if an alias is specified. Use the format <schema-name>.<table-name>.

- WITH HISTORY or WITHOUT HISTORY: When WITH HISTORY is specified, share the table with full history, allowing recipients to perform time travel queries and streaming reads. For Databricks-to-Databricks shares, history sharing also shares the table’s Delta log to improve performance. The default behavior for table sharing is WITH HISTORY if your compute is running Databricks Runtime 16.2 or above and WITHOUT HISTORY for earlier Databricks Runtime versions. For schema sharing, the default is WITH HISTORY regardless of Databricks Runtime version. WITH HISTORY and WITHOUT HISTORY require Databricks Runtime 12.2 LTS or above. See also Improve table read performance with history sharing.

Note

If, in addition to doing time travel queries and streaming reads, you want your customers to be able to query a table’s change data feed (CDF) using the table_changes() function, you must enable CDF on the table before you share it WITH HISTORY.

For more information about ALTER SHARE options, see ALTER SHARE.

CLI

To add a table, run the following command using the Databricks CLI.

databricks shares update <share-name> \
  --json '{
    "updates": [
      {
        "action": "ADD",
        "data_object": {
          "name": "<table-full-name>",
          "data_object_type": "TABLE",
          "shared_as": "<table-alias>"
        }
      }
    ]
  }'

To add a schema, run the following Databricks CLI command:

databricks shares update <share-name> \
  --json '{
    "updates": [
      {
        "action": "ADD",
        "data_object": {
          "name": "<schema-full-name>",
          "data_object_type": "SCHEMA"
        }
      }
    ]
  }'

Note

For tables, and only tables, you can omit "data_object_type".

To learn about the options listed in this example, view the instructions on the SQL tab.

To learn about additional parameters, run databricks shares update --help or see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.
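If you script these updates, it can help to generate the payload rather than edit it by hand. The following is a minimal sketch, not an official helper: the function name add_object_payload and the object names (main.sales.orders, sales.orders_v) are hypothetical, and it uses only the JSON fields shown in the commands above. It assumes the alias field can be left out when you do not want an alias.

```shell
# Hypothetical helper: prints the --json payload for adding one object to a share.
#   $1 = full object name, for example main.sales.orders (made-up name)
#   $2 = data object type, for example TABLE or SCHEMA
#   $3 = optional alias in <schema-name>.<table-name> form
add_object_payload() {
  alias_field=""
  if [ -n "${3:-}" ]; then
    # Only emit "shared_as" when an alias was passed.
    alias_field=", \"shared_as\": \"$3\""
  fi
  printf '{"updates": [{"action": "ADD", "data_object": {"name": "%s", "data_object_type": "%s"%s}}]}\n' \
    "$1" "$2" "$alias_field"
}

# Print the payload so that you can review it before passing it to the CLI.
add_object_payload "main.sales.orders" "TABLE" "sales.orders_v"
```

To apply the result, pass the output as the --json value, for example: databricks shares update <share-name> --json "$(add_object_payload main.sales.orders TABLE sales.orders_v)".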

For information about removing tables from a share, see Update shares.

Specify table partitions to share

To share only part of a table when you add the table to a share, you can provide a partition specification. You can specify partitions when you add a table to a share or update a share, using Catalog Explorer, the Databricks Unity Catalog CLI, or SQL commands in an Azure Databricks notebook or the Databricks SQL query editor. See Add tables to a share and Update shares.

Basic example

The following SQL example shares part of the data in the inventory table, partitioned by the year, month, and date columns:

- Data for the year 2021.

- Data for December 2020.

- Data for December 25, 2019.

ALTER SHARE share_name

ADD TABLE inventory

PARTITION (year = "2021"),

(year = "2020", month = "Dec"),

(year = "2019", month = "Dec", date = "2019-12-25");
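If you use the Databricks CLI instead of SQL, the same three partitions can likely be expressed in the --json payload. The following sketch assumes that the data object accepts a partitions array whose entries hold values with name, op, and value fields. These field names are taken from memory of the REST API reference and are not shown elsewhere in this article, so verify them against PATCH /api/2.1/unity-catalog/shares/ before use. The helper name pv and the full table name main.default.inventory are hypothetical.

```shell
# Sketch only: the field names under "partitions" are assumptions to verify
# against the REST API reference.

# Prints one partition value: column name, EQUAL operator, literal value.
pv() {
  printf '{"name": "%s", "op": "EQUAL", "value": "%s"}' "$1" "$2"
}

# Three partitions that mirror the SQL example: all of 2021, December 2020,
# and December 25, 2019. main.default.inventory is a made-up full name.
payload=$(cat <<EOF
{
  "updates": [
    {
      "action": "ADD",
      "data_object": {
        "name": "main.default.inventory",
        "data_object_type": "TABLE",
        "partitions": [
          {"values": [$(pv year 2021)]},
          {"values": [$(pv year 2020), $(pv month Dec)]},
          {"values": [$(pv year 2019), $(pv month Dec), $(pv date 2019-12-25)]}
        ]
      }
    }
  ]
}
EOF
)

# Print the payload for review instead of sending it.
printf '%s\n' "$payload"
```

Each entry in the partitions array corresponds to one parenthesized group in the SQL statement, and the values inside an entry are combined the same way as the comma-separated conditions inside a group.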

Use recipient properties to do partition filtering

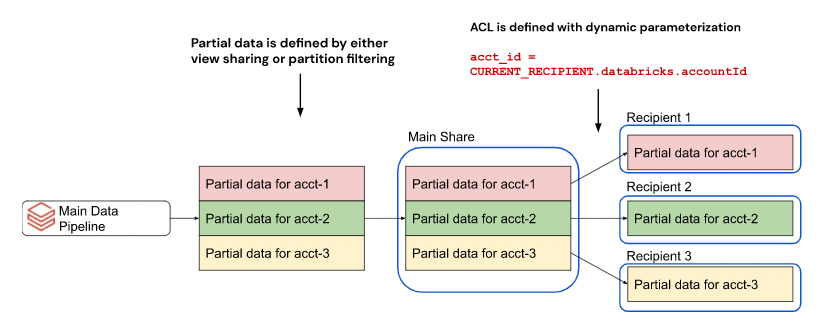

You can share a table partition that matches data recipient properties, also known as parameterized partition sharing.

Default properties include:

- databricks.accountId: The Azure Databricks account that a data recipient belongs to (Databricks-to-Databricks sharing only).

- databricks.metastoreId: The Unity Catalog metastore that a data recipient belongs to (Databricks-to-Databricks sharing only).

- databricks.name: The name of the data recipient.

You can create any custom property you like when you create or update a recipient.

Filtering by recipient property enables you to share the same tables, using the same share, across multiple Databricks accounts, workspaces, and users while maintaining data boundaries between them.

For example, if your tables include an Azure Databricks account ID column, you can create a single share with table partitions defined by Azure Databricks account ID. When you share, Delta Sharing dynamically delivers to each recipient only the data associated with their Azure Databricks account.

Without the ability to dynamically partition by property, you would have to create a separate share for each recipient.

To specify a partition that filters by recipient properties when you create or update a share, you can use Catalog Explorer or the CURRENT_RECIPIENT SQL function in an Azure Databricks notebook or the Databricks SQL query editor:

Note

Recipient properties are available on Databricks Runtime 12.2 and above.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to update and click its name.

Click Manage assets > Add data assets.

On the Add tables page, select the catalog and database that contain the table, then select the table.

If you aren’t sure which catalog and database contain the table, you can search for it by name, column name, or comment using workspace search. See Search for workspace objects.

(Optional) Click Advanced table options to add Partition specifications.

On the Add partition to a table dialog, add the property-based partition specification using the following syntax:

(<column-name> = CURRENT_RECIPIENT().<property-key>)

For example,

(country = CURRENT_RECIPIENT().'country')

Click Save.

SQL

Run the following command in a notebook or the Databricks SQL query editor:

ALTER SHARE <share-name> ADD TABLE <catalog-name>.<schema-name>.<table-name>

PARTITION (<column-name> = CURRENT_RECIPIENT().<property-key>);

For example,

ALTER SHARE acme ADD TABLE acme.default.some_table

PARTITION (country = CURRENT_RECIPIENT().'country');
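For CLI-based automation, a property-based partition can probably be written as a partition value that names a recipient property key instead of a literal value. This is a sketch under that assumption: the recipient_property_key field name comes from memory of the REST API reference, not from this article, and the function name property_partition is hypothetical. Verify the field against PATCH /api/2.1/unity-catalog/shares/ before relying on it.

```shell
# Sketch only: "recipient_property_key" is an assumed field name.
# Prints a partition that matches a column against a recipient property.
#   $1 = partition column name
#   $2 = recipient property key
property_partition() {
  printf '{"values": [{"name": "%s", "op": "EQUAL", "recipient_property_key": "%s"}]}\n' "$1" "$2"
}

# The column "country" filtered by the recipient property "country".
property_partition country country
```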

Add tables with deletion vectors or column mapping to a share

Important

This feature is in Public Preview.

Deletion vectors are a storage optimization feature that you can enable on Delta tables. See What are deletion vectors?.

Azure Databricks also supports column mapping for Delta tables. See Rename and drop columns with Delta Lake column mapping.

To share a table with deletion vectors or column mapping, you must share it with history. See Add tables to a share.

When you share a table with deletion vectors or column mapping, recipients can query the table using a SQL warehouse, compute running Databricks Runtime 14.1 or above, or compute running open source delta-sharing-spark 3.1 or above. See Read tables with deletion vectors or column mapping enabled.

Add views to a share

Important

This feature is in Public Preview.

Views are read-only objects created from one or more tables or other views. A view can be created from tables and other views that are contained in multiple schemas and catalogs in a Unity Catalog metastore. See Create and manage views.

This section describes how to add views to a share using Catalog Explorer, Databricks CLI, or SQL commands in an Azure Databricks notebook or the Databricks SQL query editor. If you prefer to use the Unity Catalog REST API, see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

Permissions required: Owner of the share object, USE CATALOG and USE SCHEMA on the catalog and schema that contain the view, and SELECT on the view. You must maintain the SELECT privilege for as long as you want to share the view. For more information, see Requirements.

Additional requirements:

- You must enable Serverless compute for workflows, notebooks, and DLT in the account where view sharing is set up. See Enable serverless compute.

- Shareable views must be defined on Delta tables or other shareable views.

- You cannot share views that reference shared tables or shared views.

- You must use a SQL warehouse or compute running Databricks Runtime 13.3 LTS or above when you add a view to a share.

- For requirements and limitations on recipient usage of views, see Read shared views.

To add views to a share:

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to add a view to and click its name.

Click Manage assets > Add data assets.

On the Add tables page, search or browse for the view that you want to share and select it.

(Optional) Click Advanced table options to specify an Alias, or alternate view name, to make the view name more readable. The alias is the name that the recipient sees and must use in queries. Recipients cannot use the actual view name if an alias is specified.

Click Save.

SQL

Run the following command in a notebook or the Databricks SQL query editor:

ALTER SHARE <share-name> ADD VIEW <catalog-name>.<schema-name>.<view-name>

[COMMENT "<comment>"]

[AS <alias>];

Options include:

- AS <alias>: An alternate view name, or alias, to make the view name more readable. The alias is the view name that the recipient sees and must use in queries. Recipients cannot use the actual view name if an alias is specified. Use the format <schema-name>.<view-name>.

- COMMENT "<comment>": Comments appear in the Catalog Explorer UI and when you list and display view details using SQL statements.

For more information about ALTER SHARE options, see ALTER SHARE.

CLI

Run the following Databricks CLI command:

databricks shares update <share-name> \
  --json '{
    "updates": [
      {
        "action": "ADD",
        "data_object": {
          "name": "<view-full-name>",
          "data_object_type": "VIEW",
          "shared_as": "<view-alias>"
        }
      }
    ]
  }'

"shared_as": "<view-alias>" is optional and provides an alternate view name, or alias, to make the view name more readable. The alias is the view name that the recipient sees and must use in queries. Recipients cannot use the actual view name if an alias is specified. Use the format <schema-name>.<view-name>.

To learn about additional parameters, run databricks shares update --help or see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

For information about removing views from a share, see Update shares.

Add dynamic views to a share to filter rows and columns

Important

This feature is in Public Preview.

You can use dynamic views to configure fine-grained access control to table data, including:

- Security at the level of columns or rows.

- Data masking.

When you create a dynamic view that uses the CURRENT_RECIPIENT() function, you can limit recipient access according to properties that you specify in the recipient definition.

This section provides examples of restricting recipient access to table data at both the row and column level using a dynamic view.

Requirements

- Databricks Runtime version: The CURRENT_RECIPIENT function is supported in Databricks Runtime 14.2 and above.

- Permissions:

  - To create a view, you must be the owner of the share object, have USE CATALOG and USE SCHEMA on the catalog and schema that contain the view, along with SELECT on the view. You must maintain the SELECT privilege for as long as you want to share the view.

  - To set properties on a recipient, you must be the owner of the recipient object.

- Limitations: All limitations for view sharing, including restriction to Databricks-to-Databricks sharing, plus the following:

  - When a provider shares a view that uses the CURRENT_RECIPIENT function, the provider can’t query the view directly because of the sharing context. To test such a dynamic view, the provider must share the view with themselves and query the view as a recipient.

  - Providers cannot create a view that references a dynamic view.

Set a recipient property

In these examples, the table to be shared has a column named country, and only recipients with a matching country property can view certain rows or columns.

You can set recipient properties using Catalog Explorer or SQL commands in an Azure Databricks notebook or the SQL query editor.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Recipients tab, find the recipient you want to add the properties to and click its name.

Click Edit properties.

On the Edit recipient properties dialog, enter the column name as a key (in this case country) and the value you want to filter by as the value (for example, CA).

Click Save.

SQL

To set the property on the recipient, use ALTER RECIPIENT. In this example, the country property is set to CA.

ALTER RECIPIENT recipient1 SET PROPERTIES ('country' = 'CA');
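If you have many recipients, you might generate these statements from a list instead of typing each one. The following sketch prints one ALTER RECIPIENT statement per input line, using the same syntax as the statement above. The function name recipient_property_sql, the recipient:country input format, and the recipient names and country codes are all hypothetical.

```shell
# Prints one ALTER RECIPIENT statement per "recipient:country" pair read
# from standard input. Blank lines are skipped.
recipient_property_sql() {
  while IFS=: read -r recipient country; do
    [ -n "$recipient" ] || continue
    printf "ALTER RECIPIENT %s SET PROPERTIES ('country' = '%s');\n" "$recipient" "$country"
  done
}

# Made-up recipients and country values.
recipient_property_sql <<EOF
recipient1:CA
recipient2:US
EOF
```

You can paste the printed statements into a notebook or the SQL query editor after reviewing them.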

Create a dynamic view with row-level permission for recipients

In this example, only recipients with a matching country property can view certain rows.

CREATE VIEW my_catalog.default.view1 AS

SELECT * FROM my_catalog.default.my_table

WHERE country = CURRENT_RECIPIENT('country');

Another option is for the data provider to maintain a separate mapping table that maps fact table fields to recipient properties, allowing recipient properties and fact table fields to be decoupled for greater flexibility.

Create a dynamic view with column-level permission for recipients

In this example, only recipients that match the country property can view certain columns. Others see the data returned as REDACTED:

CREATE VIEW my_catalog.default.view2 AS

SELECT

CASE

WHEN CURRENT_RECIPIENT('country') = 'US' THEN pii

ELSE 'REDACTED'

END AS pii

FROM my_catalog.default.my_table;

Share the dynamic view with a recipient

To share the dynamic view with a recipient, use the same SQL commands or UI procedure as you would for a standard view. See Add views to a share.

Add volumes to a share

Volumes are Unity Catalog objects that represent a logical volume of storage in a cloud object storage location. They are intended primarily to provide governance over non-tabular data assets. See What are Unity Catalog volumes?.

This section describes how to add volumes to a share using Catalog Explorer, the Databricks CLI, or SQL commands in an Azure Databricks notebook or SQL query editor. If you prefer to use the Unity Catalog REST API, see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

Note

Volume comments are included in shares that are shared with a recipient using Databricks-to-Databricks sharing on or after July 25, 2024. If you want to start sharing comments through a share that was shared with a recipient before the release date, you must revoke and re-grant recipient access to trigger comment sharing.

Permissions required: Owner of the share object, USE CATALOG and USE SCHEMA on the catalog and schema that contain the volume, and READ VOLUME on the volume. You must maintain the READ VOLUME privilege for as long as you want to share the volume. For more information, see Requirements.

Additional requirements:

- Volume sharing is supported only in Databricks-to-Databricks sharing.

- You must use a SQL warehouse on version 2023.50 or above or a compute resource on Databricks Runtime 14.1 or above when you add a volume to a share.

- If the volume storage on the provider side has custom network configurations (such as a firewall or private link), then the provider must ensure that the recipient’s control plane and data plane addresses are properly allowlisted to be able to connect to the storage location of the volume.

To add volumes to a share:

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to add a volume to and click its name.

Click Manage assets > Edit assets.

On the Edit assets page, search or browse for the volume that you want to share and select it.

Alternatively, you can select the entire schema that contains the volume. See Add schemas to a share.

(Optional) Click Advanced options to specify an alternate volume name, or Alias, to make the volume name more readable.

Aliases are not available if you select an entire schema.

The alias is the name that the recipient sees and must use in queries. Recipients cannot use the actual volume name if an alias is specified.

Click Save.

SQL

Run the following command in a notebook or the Databricks SQL query editor:

ALTER SHARE <share-name> ADD VOLUME <catalog-name>.<schema-name>.<volume-name>

[COMMENT "<comment>"]

[AS <alias>];

Options include:

- AS <alias>: An alternate volume name, or alias, to make the volume name more readable. The alias is the volume name that the recipient sees and must use in queries. Recipients cannot use the actual volume name if an alias is specified. Use the format <schema-name>.<volume-name>.

- COMMENT "<comment>": Comments appear in the Catalog Explorer UI and when you list and display volume details using SQL statements.

For more information about ALTER SHARE options, see ALTER SHARE.

CLI

Run the following command using Databricks CLI 0.210 or above:

databricks shares update <share-name> \
  --json '{
    "updates": [
      {
        "action": "ADD",
        "data_object": {
          "name": "<volume-full-name>",
          "data_object_type": "VOLUME",
          "string_shared_as": "<volume-alias>"
        }
      }
    ]
  }'

"string_shared_as": "<volume-alias>" is optional and provides an alternate volume name, or alias, to make the volume name more readable. The alias is the volume name that the recipient sees and must use in queries. Recipients cannot use the actual volume name if an alias is specified. Use the format <schema-name>.<volume-name>.

To learn about additional parameters, run databricks shares update --help or see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

For information about removing volumes from a share, see Update shares.

Add models to a share

This section describes how to add models to a share using Catalog Explorer, the Databricks CLI, or SQL commands in an Azure Databricks notebook or SQL query editor. If you prefer to use the Unity Catalog REST API, see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

Note

Model comments and model version comments are included in shares that are shared using Databricks-to-Databricks sharing.

Permissions required: Owner of the share object, USE CATALOG and USE SCHEMA on the catalog and schema that contain the model, and EXECUTE on the model. You must maintain the EXECUTE privilege for as long as you want to share the model. For more information, see Requirements.

Additional requirements:

- Model sharing is supported only in Databricks-to-Databricks sharing.

- You must use a SQL warehouse on version 2023.50 or above or a compute resource on Databricks Runtime 14.0 or above when you add a model to a share.

To add models to a share:

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to add a model to and click its name.

Click Manage assets > Edit assets.

On the Edit assets page, search or browse for the model that you want to share and select it.

Alternatively, you can select the entire schema that contains the model. See Add schemas to a share.

(Optional) Click Advanced options to specify an alternate model name, or Alias, to make the model name more readable.

Aliases are not available if you select an entire schema.

The alias is the name that the recipient sees and must use in queries. Recipients cannot use the actual model name if an alias is specified.

Click Save.

SQL

Run the following command in a notebook or the Databricks SQL query editor:

ALTER SHARE <share-name> ADD MODEL <catalog-name>.<schema-name>.<model-name>

[COMMENT "<comment>"]

[AS <alias>];

Options include:

- AS <alias>: An alternate model name, or alias, to make the model name more readable. The alias is the model name that the recipient sees and must use in queries. Recipients cannot use the actual model name if an alias is specified. Use the format <schema-name>.<model-name>.

- COMMENT "<comment>": Comments appear in the Catalog Explorer UI and when you list and display model details using SQL statements.

For more information about ALTER SHARE options, see ALTER SHARE.

CLI

Run the following command using Databricks CLI 0.210 or above:

databricks shares update <share-name> \
  --json '{
    "updates": [
      {
        "action": "ADD",
        "data_object": {
          "name": "<model-full-name>",
          "data_object_type": "MODEL",
          "string_shared_as": "<model-alias>"
        }
      }
    ]
  }'

"string_shared_as": "<model-alias>" is optional and provides an alternate model name, or alias, to make the model name more readable. The alias is the model name that the recipient sees and must use in queries. Recipients cannot use the actual model name if an alias is specified. Use the format <schema-name>.<model-name>.

To learn about additional parameters, run databricks shares update --help or see PATCH /api/2.1/unity-catalog/shares/ in the REST API reference.

For information about removing models from a share, see Update shares.

Add schemas to a share

When you add an entire schema to a share, your recipients have access not only to all of the data assets in the schema at the time that you create the share, but also to any assets that are added to the schema over time. This includes all tables, views, and volumes in the schema. Tables shared this way always include full history.

Adding, updating, or removing a schema using SQL requires a SQL warehouse or compute running Databricks Runtime 13.3 LTS or above. Doing the same using Catalog Explorer has no compute requirements.

Permissions required: Owner of the share object and owner of the schema (or a user with USE SCHEMA and SELECT privileges on the schema).

To add a schema to a share, follow the instructions in Add tables to a share, paying attention to the content that specifies how to add a schema.

Table aliases, partitions, and volume aliases are not available if you select an entire schema. If you have created aliases or partitions for any assets in the schema, these are removed when you add the entire schema to the share.

If you want to specify advanced options for a table or volume that you are sharing using schema sharing, you must share the table or volume using SQL and give the table or volume an alias with a different schema name.

Add notebook files to a share

Use Catalog Explorer to add a notebook file to a share.

Permissions required: Owner of the share object and CAN READ permission on the notebook you want to share.

- In your Azure Databricks workspace, click Catalog.

- On the Quick access page, click the Delta Sharing > button.

- On the Shared by me tab, find the share you want to add a notebook to and click its name.

- Click Manage assets and select Add notebook file.

- On the Add notebook file page, click the file icon to browse for the notebook you want to share.

- Click the file you want to share and click Select.

- (Optional) Specify a user-friendly alias for the file in the Share as field. This is the identifier that recipients will see.

- Under Storage location, enter the external location in cloud storage where you want to store the notebook. You can specify a subpath under the defined external location. If you don’t specify an external location, the notebook will be stored in the metastore-level storage location (or “metastore root location”). If no root location is defined for the metastore, you must enter an external location here. See Add managed storage to an existing metastore.

- Click Save.

The shared notebook file now appears in the Notebook files list on the Assets tab.

Remove notebook files from shares

To remove a notebook file from a share:

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share that includes the notebook, and click the share name.

On the Assets tab, find the notebook file you want to remove from the share.

Click the kebab menu to the right of the row, and select Delete notebook file.

On the confirmation dialog, click Delete.

Update notebook files in shares

To update a notebook that you have already shared, you must re-add it, giving it a new alias in the Share as field. Databricks recommends that you use a name that indicates the notebook’s revised status, such as <old-name>-update-1. You may need to notify the recipient of the change. The recipient must select and clone the new notebook to take advantage of your update.
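If you re-share a notebook more than once, a consistent naming scheme helps recipients tell revisions apart. The sketch below extends the <old-name>-update-1 suggestion by incrementing the trailing number on later updates. That incrementing behavior and the function name next_update_alias are assumptions made for illustration, not something Databricks prescribes.

```python
import re


def next_update_alias(current_alias: str) -> str:
    """Return the next alias in an '<old-name>-update-N' sequence.

    Hypothetical helper: appends '-update-1' to a plain name, or
    increments N when the alias already ends in '-update-N'.
    """
    match = re.fullmatch(r"(.+)-update-(\d+)", current_alias)
    if match:
        base, revision = match.group(1), int(match.group(2))
        return f"{base}-update-{revision + 1}"
    return f"{current_alias}-update-1"


# Hypothetical notebook aliases, for illustration only.
first = next_update_alias("sales-report")
second = next_update_alias(first)
print(first)
print(second)
```

Enter the resulting value in the Share as field when you re-add the notebook.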

Grant recipients access to a share

To grant share access to recipients, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or the GRANT ON SHARE SQL command in an Azure Databricks notebook or the Databricks SQL query editor.

Permissions required: One of the following:

- Metastore admin.

- Delegated permissions or ownership on both the share and the recipient objects: ((USE SHARE + SET SHARE PERMISSION) or share owner) AND (USE RECIPIENT or recipient owner).

For instructions, see Manage access to Delta Sharing data shares (for providers). This article also explains how to revoke a recipient’s access to a share.

View shares and share details

To view a list of shares or details about a share, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or SQL commands in an Azure Databricks notebook or the Databricks SQL query editor.

Permissions required: The list of shares returned depends on your role and permissions. Metastore admins and users with the USE SHARE privilege see all shares. Otherwise, you can view only the shares for which you are the share object owner.

Details include:

- The share’s owner, creator, creation timestamp, updater, updated timestamp, and comments.

- Data assets in the share.

- Recipients with access to the share.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

Open the Shares tab to view a list of shares.

View share details on the Details tab.

SQL

To view a list of shares, run the following command in a notebook or the Databricks SQL query editor. Optionally, replace <pattern> with a LIKE predicate.

SHOW SHARES [LIKE <pattern>];

To view details about a specific share, run the following command.

DESCRIBE SHARE <share-name>;

To view details about all tables, views, and volumes in a share, run the following command.

SHOW ALL IN SHARE <share-name>;

CLI

To view a list of shares, run the following command using the Databricks CLI.

databricks shares list

To view details about a specific share, run the following command.

databricks shares get <share-name>

View the recipients who have permissions on a share

To view the list of recipients who have been granted access to a share, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or the SHOW GRANTS ON SHARE SQL command in an Azure Databricks notebook or the Databricks SQL query editor.

Permissions required: Metastore admin, USE SHARE privilege, or share object owner.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find and select the share.

Go to the Recipients tab to view the list of recipients who can access the share.

SQL

Run the following command in a notebook or the Databricks SQL query editor.

SHOW GRANTS ON SHARE <share-name>;

CLI

Run the following command using the Databricks CLI.

databricks shares share-permissions <share-name>

Update shares

In addition to adding tables, views, volumes, and notebooks to a share, you can:

- Rename a share.

- Remove tables, views, volumes, and schemas from a share.

- Add or update a comment on a share.

- Enable or disable access to a table’s history data, allowing recipients to perform time travel queries or streaming reads of the table.

- Add, update, or remove partition definitions.

- Change the share owner.

To make these updates to shares, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or SQL commands in an Azure Databricks notebook or the Databricks SQL query editor. You cannot use Catalog Explorer to rename the share.

Permissions required: To update the share owner, you must be one of the following: a metastore admin, the owner of the share object, or a user with both the USE SHARE and SET SHARE PERMISSION privileges. To update the share name, you must be both the share owner and a metastore admin (or a user with the CREATE SHARE privilege). To update any other share properties, you must be the share owner.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to update and click its name.

On the share details page, do the following:

- Click the edit icon next to the Owner or Comment field to update these values.

- Click the kebab menu button in an asset row to remove it.

- Click Manage assets > Edit assets to update all other properties:

- To remove an asset, clear the checkbox next to the asset.

- To add, update, or remove partition definitions, click Advanced options.

SQL

Run the following commands in a notebook or the Databricks SQL query editor.

Rename a share:

ALTER SHARE <share-name> RENAME TO <new-share-name>;

Remove tables from a share:

ALTER SHARE <share-name> REMOVE TABLE <table-name>;

Remove volumes from a share:

ALTER SHARE <share-name> REMOVE VOLUME <volume-name>;

Add or update a comment on a share:

COMMENT ON SHARE <share-name> IS '<comment>';

Add or modify partitions for a table in a share:

ALTER SHARE <share-name> ADD TABLE <table-name> PARTITION(<clause>);

Change share owner:

ALTER SHARE <share-name> OWNER TO '<principal>'

-- Principal must be an account-level user email address or group name.

Enable history sharing for a table:

ALTER SHARE <share-name> ADD TABLE <table-name> WITH HISTORY;

For details about ALTER SHARE parameters, see ALTER SHARE.

CLI

Run the following commands using the Databricks CLI.

Rename a share:

databricks shares update <share-name> --name <new-share-name>

Remove tables from a share:

databricks shares update <share-name> \

--json '{

"updates": [

{

"action": "REMOVE",

"data_object": {

"name": "<table-full-name>",

"data_object_type": "TABLE",

"shared_as": "<table-alias>"

}

}

]

}'

Remove volumes from a share (using Databricks CLI 0.210 or above):

databricks shares update <share-name> \

--json '{

"updates": [

{

"action": "REMOVE",

"data_object": {

"name": "<volume-full-name>",

"data_object_type": "VOLUME",

"string_shared_as": "<volume-alias>"

}

}

]

}'

Note

Use the name property if there is no alias for the volume. Use string_shared_as if there is an alias.
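The two REMOVE examples above differ only in the data object type and in the alias field name: shared_as for tables and string_shared_as for volumes. The following Python sketch builds either payload from one helper. The function name build_remove_update, the ALIAS_KEYS mapping, and the sample object names are hypothetical; the field names are copied from the CLI examples in this article, and the alias field is included only when an alias is supplied.

```python
import json

# Alias field name per data object type, as shown in the CLI examples above.
ALIAS_KEYS = {"TABLE": "shared_as", "VOLUME": "string_shared_as"}


def build_remove_update(full_name, data_object_type, alias=None):
    """Build the JSON body for removing one table or volume from a share.

    Hypothetical helper for illustration; not part of the Databricks CLI.
    """
    data_object = {"name": full_name, "data_object_type": data_object_type}
    if alias is not None:
        # Only include the alias field when the object was shared with one.
        data_object[ALIAS_KEYS[data_object_type]] = alias
    return {"updates": [{"action": "REMOVE", "data_object": data_object}]}


# Hypothetical object names, for illustration only.
table_payload = build_remove_update(
    "main.sales.orders", "TABLE", alias="sales.orders_public"
)
volume_payload = build_remove_update("main.sales.raw_files", "VOLUME")
print(json.dumps(table_payload, indent=2))
print(json.dumps(volume_payload, indent=2))
```

Each printed document can be supplied as the --json argument to databricks shares update <share-name>.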

Add or update a comment on a share:

databricks shares update <share-name> --comment '<comment>'

Change share owner:

databricks shares update <share-name> --owner '<principal>'

Principal must be an account-level user email address or group name.

Delete a share

To delete a share, you can use Catalog Explorer, the Databricks Unity Catalog CLI, or the DROP SHARE SQL command in an Azure Databricks notebook or the Databricks SQL query editor.

When you delete a share, recipients can no longer access the shared data.

Permissions required: Share object owner.

Catalog Explorer

In your Azure Databricks workspace, click Catalog.

At the top of the Catalog pane, click the gear icon and select Delta Sharing.

Alternatively, from the Quick access page, click the Delta Sharing > button.

On the Shared by me tab, find the share you want to delete and click its name.

Click the kebab menu and select Delete.

On the confirmation dialog, click Delete.

SQL

Run the following command in a notebook or the Databricks SQL query editor.

DROP SHARE [IF EXISTS] <share-name>;

CLI

Run the following command using the Databricks CLI.

databricks shares delete <share-name>