DatabricksIQ trust and safety

Databricks understands the importance of your data and the trust you place in us when you use our platform and DatabricksIQ-powered features. Databricks is committed to the highest standards of data protection, and has implemented rigorous measures to ensure information you submit to DatabricksIQ-powered features is protected.

- Your data remains confidential.

- Databricks does not train generative foundation models with data you submit to these features, and Databricks does not use this data to generate suggestions displayed for other customers.

- Our model partners do not retain data you submit through these features, even for abuse monitoring. Our Partner-powered AI assistive features use zero data retention endpoints from our model partners.

- Databricks protects you from harmful output. Databricks uses Azure OpenAI content filtering to protect users from harmful content. In addition, Databricks has run an extensive evaluation with thousands of simulated user interactions to confirm that the protections against harmful content, jailbreaks, insecure code generation, and use of third-party copyrighted content are effective.

- Databricks uses only the data necessary to provide the service. Data is sent only when you interact with DatabricksIQ-powered features. To help return more relevant results, Databricks sends your prompt, relevant table metadata and values, errors, and input code or queries. Databricks does not send other row-level data to third-party models.

- Data is protected in transit. All traffic between Databricks and model partners is encrypted in transit with industry standard TLS encryption.

- Databricks offers data residency controls. DatabricksIQ-powered features are Designated Services and comply with data residency boundaries. For more details, see Databricks Geos: Data residency and Databricks Designated Services.

For more information about Databricks Assistant privacy, see Privacy and security.

Features governed by the Partner-powered AI assistive features setting

Partner-powered AI refers to the Azure OpenAI service. The following table lists the features governed by the Partner-powered AI assistive features setting:

| Feature | Where is the model hosted? | Controlled by Partner-powered AI setting? |
|---|---|---|
| Databricks Assistant chat | Azure OpenAI service | Yes |
| Quick fix | Azure OpenAI service | Yes |
| AI-generated UC comments | Compliance security profile (CSP) workspaces: Azure OpenAI service | Yes, for all CSP workspaces. |
| AI/BI dashboard AI-assisted visualizations and companion Genie spaces | Azure OpenAI service | Yes |
| Genie | Azure OpenAI service | Yes |
| Databricks Inline Assistant | Azure OpenAI service | Yes |
| Databricks Assistant Autocomplete | Databricks-hosted model | No |
| Intelligent search | Azure OpenAI service | Yes |

Use a Databricks-hosted model

Important

This feature is in Public Preview.

Learn about using a Databricks-hosted model to power DatabricksIQ features that would otherwise be powered by Azure OpenAI. This section explains how it works.

How it works

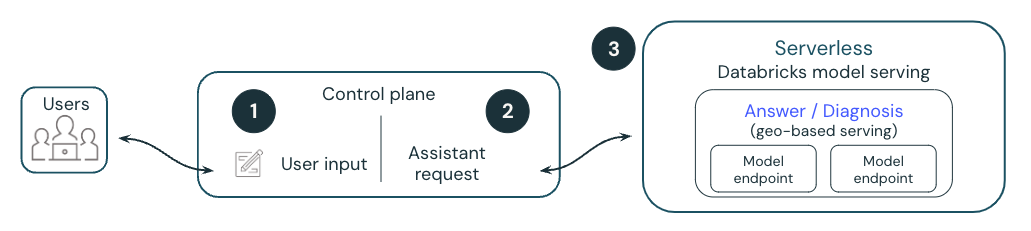

The following diagram provides an overview of how a Databricks-hosted model powers DatabricksIQ features such as Quick Fix.

- A user executes a cell, which results in an error.

- Databricks attaches metadata to a request and sends it to a Databricks-hosted large language model (LLM). All data is encrypted at rest. Customers can use a customer-managed key (CMK).

- The Databricks-hosted model responds with suggested code edits to fix the error, which are displayed to the user.
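
The three steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not the actual Databricks implementation: `QuickFixRequest`, `run_cell`, `stub_hosted_model`, and `quick_fix` are hypothetical names, and the model is replaced by a local stub that returns a canned suggestion.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class QuickFixRequest:
    """Hypothetical request shape; not the actual Databricks payload."""
    code: str
    error: str
    table_metadata: dict = field(default_factory=dict)


def run_cell(code: str) -> Optional[str]:
    """Execute a cell and return the error message, or None on success."""
    try:
        exec(code, {})
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def stub_hosted_model(request: QuickFixRequest) -> str:
    """Stand-in for the hosted LLM: returns a canned suggested edit."""
    if "NameError" in request.error:
        return "# Suggested fix: define the missing name before using it\n" + request.code
    return request.code


def quick_fix(code: str, table_metadata: Optional[dict] = None) -> Optional[str]:
    error = run_cell(code)                      # Step 1: the cell runs and fails
    if error is None:
        return None                             # Nothing to fix
    request = QuickFixRequest(code, error, table_metadata or {})  # Step 2: attach metadata
    return stub_hosted_model(request)           # Step 3: return the suggested edit


suggestion = quick_fix("print(total)")
print(suggestion)
```

In this sketch, a request is built only when a cell actually fails, which mirrors the point that data is sent only when you interact with a DatabricksIQ-powered feature.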

This feature is in public preview and is subject to change. Reach out to your representative to ask which DatabricksIQ features can be supported by a Databricks-hosted model.

Databricks-hosted models

When DatabricksIQ features use Databricks-hosted models, they use Meta Llama 3 or other models that are also available for commercial use. Meta Llama 3 is licensed under the Meta Llama 3 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved.

FAQ about Databricks-hosted models for Assistant

Can I have my own private model serving instance?

Not at this time. This preview uses Model Serving endpoints that are managed and secured by Databricks. The Model Serving endpoints are stateless, are protected through multiple layers of isolation, and implement the following security controls to protect your data:

- Every customer request to Model Serving is logically isolated, authenticated, and authorized.

- Mosaic AI Model Serving encrypts all data at rest (AES-256) and in transit (TLS 1.2+).
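
To make the "TLS 1.2+" requirement concrete, the following sketch shows how a generic Python client can refuse connections below TLS 1.2 using the standard `ssl` module. It illustrates the kind of control described above from the client side; it is not how Databricks configures its own endpoints.

```python
import ssl

# Start from Python's secure defaults (certificate and hostname verification on).
context = ssl.create_default_context()

# Refuse any protocol version older than TLS 1.2.
context.minimum_version = ssl.TLSVersion.TLSv1_2
```

A context configured this way can be passed to standard-library clients such as `urllib.request.urlopen(url, context=context)`.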

Does the metadata sent to the models respect the user’s Unity Catalog permissions?

Yes, all of the data sent to the model respects the user’s Unity Catalog permissions. For example, it does not send metadata relating to tables that the user does not have permission to see.
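
As a rough illustration of this behavior, the sketch below filters table metadata by a user's grants before anything is added to a model request. The `grants` mapping, the table names, and `visible_table_metadata` are hypothetical and greatly simplify how Unity Catalog permissions actually work.

```python
def visible_table_metadata(user: str, tables: dict, grants: dict) -> dict:
    """Return metadata only for tables the user is allowed to see.

    `grants` maps a table name to the set of users who can see it
    (a hypothetical, simplified permission model).
    """
    return {
        name: meta
        for name, meta in tables.items()
        if user in grants.get(name, set())
    }


tables = {
    "main.sales.orders": {"columns": ["order_id", "amount"]},
    "main.hr.salaries": {"columns": ["employee_id", "salary"]},
}
grants = {
    "main.sales.orders": {"alice", "bob"},
    "main.hr.salaries": {"bob"},
}

# Only tables alice can see are eligible to be sent as context.
alice_context = visible_table_metadata("alice", tables, grants)
print(alice_context)
```

Tables with no grant entry are treated as not visible, so the filter fails closed.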

Where is the data stored?

The Databricks Assistant chat history is stored in the control plane database, along with the notebook. The control plane database is encrypted with AES-256, and customers who require control over the encryption key can use the customer-managed key (CMK) feature.

Note

- Like other workspace objects, the retention period for Databricks Assistant chat history is scoped to the lifecycle of the object itself. If a user deletes the notebook, the notebook and any associated chat history are deleted in 30 days.

- If the notebook is exported, the chat history is not exported with it.

- Notebook and query chat histories aren't available to other users or admins, even if the query or notebook is shared.
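
The 30-day window in the note above is simple date arithmetic. The sketch below assumes the window is counted in calendar days from the day the notebook is deleted; `RETENTION_DAYS` and `purge_date` are illustrative names, not part of any Databricks API.

```python
from datetime import date, timedelta

RETENTION_DAYS = 30  # From the note above; assumed to be calendar days


def purge_date(deleted_on: date) -> date:
    """Return the date on which a deleted notebook and its chat history are removed."""
    return deleted_on + timedelta(days=RETENTION_DAYS)


print(purge_date(date(2024, 3, 1)))  # prints 2024-03-31
```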

Can I bring my own API key for my model or host my own models?

Not at this time. The Databricks Assistant is fully managed and hosted by Databricks. Assistant functionality is heavily dependent on model serving features (for example, function calling), performance, and quality. Databricks continuously evaluates new models for the best performance and may update the model in future versions of this feature.

Who owns the output data? If Assistant generates code, who owns that IP?

The customer owns their own output.

Opt out of using Databricks-hosted models

To opt out of using Databricks-hosted models:

- Click your username in the top bar of the Databricks workspace.

- From the menu, select Previews.

- Turn off Use Assistant with Databricks-hosted models.

To learn more about managing previews, see Manage Azure Databricks Previews.