Add a node on Azure Local

Applies to: Azure Local 2311.2 and later

This article describes how to manage capacity by adding a node (often called scale-out) to your Azure Local instance. In this article, each server is referred to as a node.

About add nodes

You can easily scale the compute and storage at the same time on Azure Local by adding nodes to an existing system. Your Azure Local instance supports a maximum of 16 nodes.

Each new physical node that you add to your system must closely match the rest of the nodes in terms of CPU type, memory, number of drives, and the type and size of the drives.

You can dynamically scale your Azure Local instance from 1 to 16 nodes. In response to the scaling, the orchestrator (also known as Lifecycle Manager) adjusts the drive resiliency, the network configuration (including on-premises agents such as orchestrator agents), and the Arc registration. Dynamic scaling may require the network architecture to change from connected without a switch to connected via a network switch.

Important

- In this release, you can add only one node at a time. You can, however, add multiple nodes sequentially so that the storage pool is rebalanced only once.

- It is not possible to permanently remove a node from a system.

Add node workflow

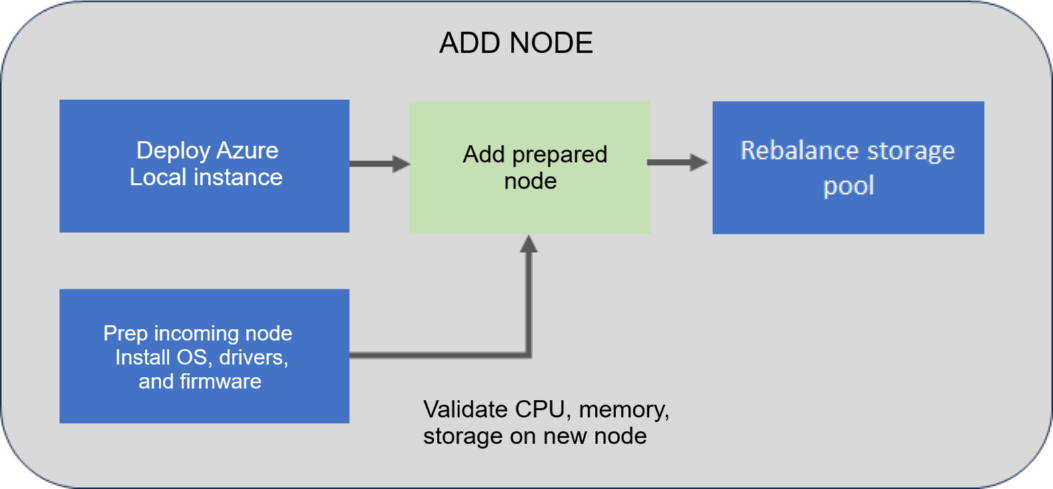

The following flow diagram shows the overall process to add a node:

To add a node, follow these high-level steps:

- Install the operating system, drivers, and firmware on the new node that you plan to add. For more information, see Install OS.

- Add the prepared node via the Add-Server PowerShell cmdlet.

- When adding a node to the system, the system validates that the new incoming node meets the CPU, memory, and storage (drives) requirements before it actually adds the node.

- Once the node is added, the system is validated again to ensure that it's functioning normally. Next, the storage pool is automatically rebalanced. Storage rebalance is a low-priority task that doesn't impact actual workloads. The rebalance can run for multiple days, depending on the number of nodes and the storage used.

Note

If you deployed your Azure Local instance using custom storage IPs, you must manually assign IPs to the storage network adapters after the node is added.

Supported scenarios

For adding a node, the following scale-out scenarios are supported:

| Start scenario | Target scenario | Resiliency settings | Storage network architecture | Witness settings |
|---|---|---|---|---|
| Single-node | Two-node system | Two-way mirror | Configured with and without a switch | Witness required for target scenario. |
| Two-node system | Three-node system | Three-way mirror | Configured with a switch only | Witness optional for target scenario. |
| Three-node system | N-node system | Three-way mirror | Switch only | Witness optional for target scenario. |

When upgrading a system from two to three nodes, the storage resiliency level is changed from a two-way mirror to a three-way mirror.
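
The supported scenarios can be expressed as a small lookup, which may be handy in a planning script. The following is a minimal sketch only: `Get-ScaleOutTarget` is a hypothetical helper that encodes the table above, it is not part of Azure Local or the orchestrator, and the upper bound of 15 simply reflects the 16-node maximum stated earlier.

```powershell
# Hypothetical helper: encodes the supported scale-out table from this article.
# It does not query or change the system.
function Get-ScaleOutTarget {
    param(
        [Parameter(Mandatory)]
        [ValidateRange(1, 15)]   # a 16-node system can't grow further
        [int]$CurrentNodeCount
    )

    if ($CurrentNodeCount -eq 1) {
        # Single-node to two-node system
        $resiliency = 'Two-way mirror'
        $network    = 'With or without a switch'
        $witness    = 'Required'
    }
    else {
        # Two-node to three-node, and three-node to N-node systems
        $resiliency = 'Three-way mirror'
        $network    = 'Switch only'
        $witness    = 'Optional'
    }

    [pscustomobject]@{
        TargetNodeCount = $CurrentNodeCount + 1
        Resiliency      = $resiliency
        StorageNetwork  = $network
        Witness         = $witness
    }
}

# Example: what to expect when growing a two-node system
Get-ScaleOutTarget -CurrentNodeCount 2
```

The helper only restates the table; the orchestrator remains the source of truth for what a given system supports.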

Resiliency settings

In this release, the add node operation doesn't perform specific tasks on workload volumes created after the deployment.

For the add node operation, the resiliency settings are updated for the required infrastructure volumes and for the workload volumes created during the deployment. The settings remain unchanged for other workload volumes that you created after the deployment, because the intended resiliency of these volumes isn't known and you might want a two-way mirror volume regardless of the system scale.

However, the default resiliency settings are updated at the storage pool level, so any new workload volumes that you create after the node is added inherit the updated resiliency settings.
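
Because volumes created after the deployment keep their original settings, it can be useful to list the ones that are still two-way mirrors after a scale-out. The following is a minimal sketch: `Select-TwoWayMirrorVolume` is a hypothetical filter, not an Azure Local cmdlet. It relies only on the `ResiliencySettingName` and `NumberOfDataCopies` properties that `Get-VirtualDisk` objects expose.

```powershell
# Hypothetical filter: passes through mirror volumes with fewer than
# three data copies and drops everything else.
function Select-TwoWayMirrorVolume {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [object]$VirtualDisk
    )

    process {
        if ($VirtualDisk.ResiliencySettingName -eq 'Mirror' -and
            $VirtualDisk.NumberOfDataCopies -lt 3) {
            $VirtualDisk
        }
    }
}

# Usage on a node of the system (read-only):
# Get-VirtualDisk | Select-TwoWayMirrorVolume |
#     Select-Object FriendlyName, ResiliencySettingName, NumberOfDataCopies
```

The filter only reports. Whether a listed volume should stay a two-way mirror is a decision for the volume owner.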

Hardware requirements

When adding a node, the system validates the hardware of the new, incoming node and ensures that the node meets the hardware requirements before it's added to the system.

| Component | Compliance check |
|---|---|
| CPU | Validate the new node has the same number of or more CPU cores. If the CPU cores on the incoming node don't meet this requirement, a warning is presented. The operation is however allowed. |
| Memory | Validate the new node has the same amount of or more memory installed. If the memory on the incoming node doesn't meet this requirement, a warning is presented. The operation is however allowed. |
| Drives | Validate that the new node has the same number of data drives available for Storage Spaces Direct. If the number of drives on the incoming node doesn't meet this requirement, an error is reported and the operation is blocked. |
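
The difference between a warning and a blocking error in the table above can be illustrated with a short model. The following is a sketch under stated assumptions: `Test-NodeHardware` and the property names `CpuCores`, `MemoryGB`, and `DataDrives` are hypothetical, and the orchestrator's real validation isn't shown in this article and may check more than this.

```powershell
# Hypothetical model of the compliance checks in the table above.
# It compares two plain objects; it does not inspect real hardware.
function Test-NodeHardware {
    param(
        [Parameter(Mandatory)][pscustomobject]$Existing,   # baseline from a current node
        [Parameter(Mandatory)][pscustomobject]$Incoming    # node being added
    )

    $warnings = @()
    $errors   = @()

    # Fewer CPU cores: warning only, the operation is still allowed
    if ($Incoming.CpuCores -lt $Existing.CpuCores) {
        $warnings += "CPU: $($Incoming.CpuCores) cores, existing nodes have $($Existing.CpuCores)."
    }

    # Less memory: warning only, the operation is still allowed
    if ($Incoming.MemoryGB -lt $Existing.MemoryGB) {
        $warnings += "Memory: $($Incoming.MemoryGB) GB, existing nodes have $($Existing.MemoryGB) GB."
    }

    # Different data drive count: error, the operation is blocked
    if ($Incoming.DataDrives -ne $Existing.DataDrives) {
        $errors += "Drives: $($Incoming.DataDrives) data drives, existing nodes have $($Existing.DataDrives)."
    }

    [pscustomobject]@{
        Allowed  = ($errors.Count -eq 0)
        Warnings = $warnings
        Errors   = $errors
    }
}

# Example with made-up values: less memory warns, fewer drives blocks
$existing = [pscustomobject]@{ CpuCores = 32; MemoryGB = 512; DataDrives = 8 }
$incoming = [pscustomobject]@{ CpuCores = 32; MemoryGB = 256; DataDrives = 6 }
Test-NodeHardware -Existing $existing -Incoming $incoming
```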

Prerequisites

Before you add a node, complete the hardware and software prerequisites.

Hardware prerequisites

Make sure to complete the following prerequisites:

- The first step is to acquire new Azure Local hardware from your original OEM. Always refer to your OEM-provided documentation when adding new node hardware for use in your system.

- Place the new physical node in the predetermined location, for example, a rack, and cable it appropriately.

- Enable and adjust physical switch ports as applicable in your network environment.

Software prerequisites

Make sure to complete the following prerequisites:

- AzureStackLCMUser is active in Active Directory. For more information, see Prepare the Active Directory.

- Signed in as AzureStackLCMUser or another user with equivalent permissions.

- Credentials for the AzureStackLCMUser haven't changed.

Add a node

This section describes how to add a node using PowerShell, monitor the status of the Add-Server operation, and troubleshoot any issues.

Add a node using PowerShell

Make sure that you have reviewed and completed the prerequisites.

On the new node that you plan to add, follow these steps.

Install the operating system and required drivers on the new node that you plan to add. Follow the steps in Install the Azure Stack HCI Operating System, version 23H2.

Register the node with Arc. Follow the steps in Register with Arc and set up permissions.

Note

You must use the same parameters as the existing node to register with Arc. For example: Resource Group name, Region, Subscription, and Tenant.

Assign the following permissions to the newly added nodes:

- Azure Local Device Management Role

- Key Vault Secrets User

For more information, see Assign permissions to the node.

On a node that already exists on your system, follow these steps:

Sign in with the domain user credentials (AzureStackLCMUser or another user with equivalent permissions) that you provided during the deployment of the system.

(Optional) Before you add the node, make sure to get an updated authentication token. Run the following command:

Update-AuthenticationToken

If you are running a version prior to 2405.3, you must run the following command on the new node to clean up conflicting files:

Get-ChildItem -Path "$env:SystemDrive\NugetStore" -Exclude Microsoft.AzureStack.Solution.LCMControllerWinService*,Microsoft.AzureStack.Role.Deployment.Service* | Remove-Item -Recurse -Force

Run the following command to add the new incoming node using a local administrator credential for the new node:

$HostIpv4 = "<IPv4 for the new node>"
$Cred = Get-Credential
Add-Server -Name "<Name of the new node>" -HostIpv4 $HostIpv4 -LocalAdminCredential $Cred

Make a note of the operation ID as output by the Add-Server command. You use this operation ID later to monitor the progress of the Add-Server operation.
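
Before running Add-Server, it can help to confirm that the IP placeholder was replaced with a well-formed IPv4 address. The following is a minimal sketch: `Test-HostIpv4` is a hypothetical helper, not part of Azure Local. It checks only the format of the string and doesn't test whether the node is reachable.

```powershell
# Hypothetical pre-flight check for the value passed to -HostIpv4.
function Test-HostIpv4 {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string]$Address
    )

    $parsed = $null
    if (-not [System.Net.IPAddress]::TryParse($Address, [ref]$parsed)) {
        return $false
    }

    # Accept only IPv4 in canonical dotted-quad form. TryParse also accepts
    # shorthand such as "10.1", which is rarely what was intended.
    ($parsed.AddressFamily -eq [System.Net.Sockets.AddressFamily]::InterNetwork) -and
        ($parsed.ToString() -eq $Address)
}

# Usage before Add-Server:
# if (-not (Test-HostIpv4 -Address $HostIpv4)) {
#     throw "HostIpv4 '$HostIpv4' isn't a valid IPv4 address."
# }
```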

Monitor operation progress

To monitor the progress of the add node operation, follow these steps:

Run the following cmdlet and provide the operation ID from the previous step.

$ID = "<Operation ID>"
Start-MonitoringActionplanInstanceToComplete -actionPlanInstanceID $ID

After the operation is complete, the background storage rebalancing job will continue to run. Wait for the storage rebalance job to complete. To verify the progress of this storage rebalancing job, use the following cmdlet:

Get-VirtualDisk | Get-StorageJob

If the storage rebalance job is complete, the cmdlet won't return an output.
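
Because the rebalance can run for a long time, you might prefer to poll for it instead of rerunning the cmdlet by hand. The following is a minimal sketch: `Wait-StorageRebalance` and its parameters are hypothetical. The default query is the command from this article, and `Name`, `JobState`, and `PercentComplete` are properties of the objects that `Get-StorageJob` returns.

```powershell
# Hypothetical helper: polls until the storage job query returns nothing.
# -GetJobs is a parameter only so the loop can be tried without a cluster.
function Wait-StorageRebalance {
    param(
        [scriptblock]$GetJobs = { Get-VirtualDisk | Get-StorageJob },
        [int]$PollSeconds = 300,
        [int]$MaxPolls = 0        # 0 means keep polling until the jobs finish
    )

    $polls = 0
    while ($true) {
        $jobs = @(& $GetJobs)
        if ($jobs.Count -eq 0) {
            return $true          # no jobs left: rebalance is complete
        }

        $polls++
        if ($MaxPolls -gt 0 -and $polls -ge $MaxPolls) {
            return $false         # gave up while jobs were still running
        }

        foreach ($job in $jobs) {
            Write-Host ("{0}: {1} ({2}% complete)" -f $job.Name, $job.JobState, $job.PercentComplete)
        }
        Start-Sleep -Seconds $PollSeconds
    }
}

# Usage on a node of the system:
# Wait-StorageRebalance -PollSeconds 600
```

A long polling interval is a reasonable default here, since the article describes the rebalance as a low-priority task that can take days.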

The newly added node shows in the Azure portal in your Azure Local instance list after several hours. To force the node to show up in Azure portal, run the following command:

Sync-AzureStackHCI

Recovery scenarios

The following table lists the recovery scenarios and the recommended mitigation steps for adding a node:

| Scenario description | Mitigation | Supported? |
|---|---|---|
| Added a new node out of band without using the orchestrator. | Remove the added node. Use the orchestrator to add the node. | No |
| Added a new node with orchestrator and the operation failed. | To complete the operation, investigate the failure. Rerun the failed operation using Add-Server -Rerun. | Yes |
| Added a new node with orchestrator. The operation succeeded partially but had to start with a fresh operating system install. | In this scenario, orchestrator has already updated its knowledge store with the new node. Use the repair node scenario. | Yes |

Troubleshoot issues

If you experience failures or errors while adding a node, you can capture the output of the failures in a log file. On a node that already exists on your system, follow these steps:

Sign in with the domain user credentials that you provided during the deployment of the system.

Capture the issue in the log files.

Get-ActionPlanInstance -ActionPlanInstanceID $ID | Out-File log.txt

To rerun the failed operation, use the following cmdlet:

Add-Server -Rerun

Next steps

- Learn more about how to Repair a node.