Migrate your workload from Service Fabric to AKS

Many organizations move to containerized apps as part of a push toward adopting modern app development, maintenance best practices, and cloud-native architectures. Because technologies continue to evolve, organizations must evaluate the many containerized app platforms that are available in the public cloud.

There's no one-size-fits-all solution for apps, but organizations often find that Azure Kubernetes Service (AKS) meets the requirements for many of their containerized applications. AKS is a managed Kubernetes service that simplifies application deployments via Kubernetes by managing the control plane to provide core services for application workloads. Many organizations use AKS as their primary infrastructure platform and transition workloads that are hosted on other platforms to AKS.

This article describes how to migrate containerized apps from Azure Service Fabric to AKS. The article assumes that you're familiar with Service Fabric but are interested in learning how its features and functionality compare to those of AKS. The article also provides best practices for you to consider during migration.

Comparing AKS to Service Fabric

To start, review Choose an Azure compute service to see how Service Fabric and AKS compare with other Azure compute services. This section highlights notable similarities and differences that are relevant to migration.

Both Service Fabric and AKS are container orchestrators. Service Fabric provides support for several programming models, but AKS only supports containers.

Programming models: Service Fabric supports multiple ways to write and manage your services, including Linux and Windows containers, Reliable Services, Reliable Actors, ASP.NET Core, and guest executables.

Containers on AKS: AKS only supports containerization with Windows and Linux containers that run on the container runtime containerd, which is managed automatically.

Both Service Fabric and AKS provide integrations with other Azure services, including Azure Pipelines, Azure Monitor, Azure Key Vault, and Microsoft Entra ID.

Key differences

When you deploy a traditional Service Fabric cluster, instead of a managed cluster, you need to explicitly define a cluster resource together with many supporting resources in your Azure Resource Manager templates (ARM templates) or Bicep modules. These resources include a virtual machine scale set for each cluster node type, network security groups, and load balancers. It's your responsibility to make sure that these resources are correctly configured. In contrast, the encapsulation model for Service Fabric managed clusters consists of a single managed cluster resource. All underlying resources for the cluster are abstracted away and managed by Azure.

AKS simplifies the deployment of a managed Kubernetes cluster in Azure by offloading the operational overhead to Azure. Because AKS is a hosted Kubernetes service, Azure handles critical tasks like infrastructure health monitoring and maintenance. Azure manages the Kubernetes control plane, so you only need to manage and maintain the agent nodes.

To move your workload from Service Fabric to AKS, you need to understand the differences in the underlying infrastructure so that you can confidently migrate your containerized applications. The following table compares the capabilities and features of the two hosting platforms.

| Capability or component | Service Fabric | AKS |
|---|---|---|
| Non-containerized applications | Yes | No |
| Linux and Windows containers | Yes | Yes |
| Azure-managed control plane | No | Yes |
| Support for both stateless and stateful workloads | Yes | Yes |
| Worker node placement | Virtual Machine Scale Sets, customer configured | Virtual Machine Scale Sets, Azure managed |
| Configuration manifest | XML | YAML |
| Azure Monitor integration | Yes | Yes |
| Native support for Reliable Services and Reliable Actor pattern | Yes | No |
| WCF-based communication stack for Reliable Services | Yes | No |
| Persistent storage | Azure Files volume driver | Support for various storage systems, like managed disks, Azure Files, and Azure Blob Storage via CSI Storage classes, persistent volume, and persistent volume claims |
| Networking modes | Azure Virtual Network integration | Support for multiple network plug-ins (Azure CNI, kubenet, BYOCNI), network policies (Azure, Calico), and ingress controllers (Application Gateway Ingress Controller, NGINX, and more) |
| Ingress controllers | A reverse proxy that's built in to Service Fabric. It helps microservices that run in a Service Fabric cluster discover and communicate with other services that have HTTP endpoints. You can also use Traefik on Service Fabric | Managed NGINX ingress controller, BYO ingress open source and commercial controllers that use platform-managed public or internal load balancers, like NGINX ingress controller, and Application Gateway Ingress Controller |

Note

If you use Windows containers on Service Fabric, we recommend that you use them on AKS to make your migration easier.

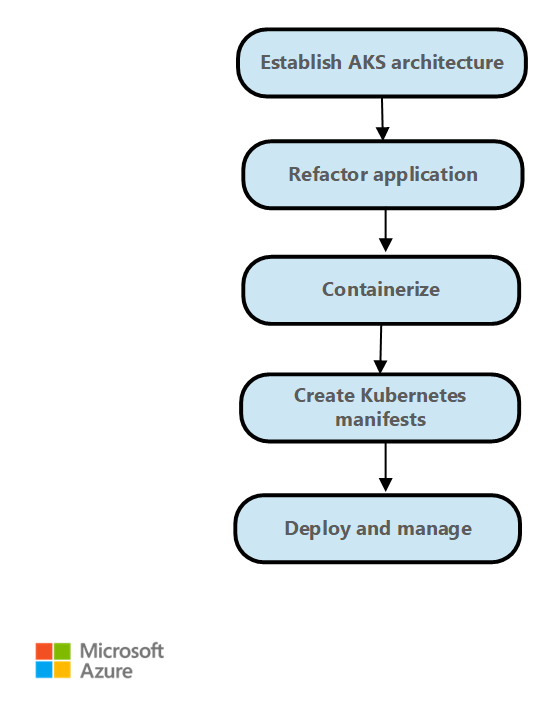

Migration steps

In general, the migration steps from Service Fabric to AKS are as follows.

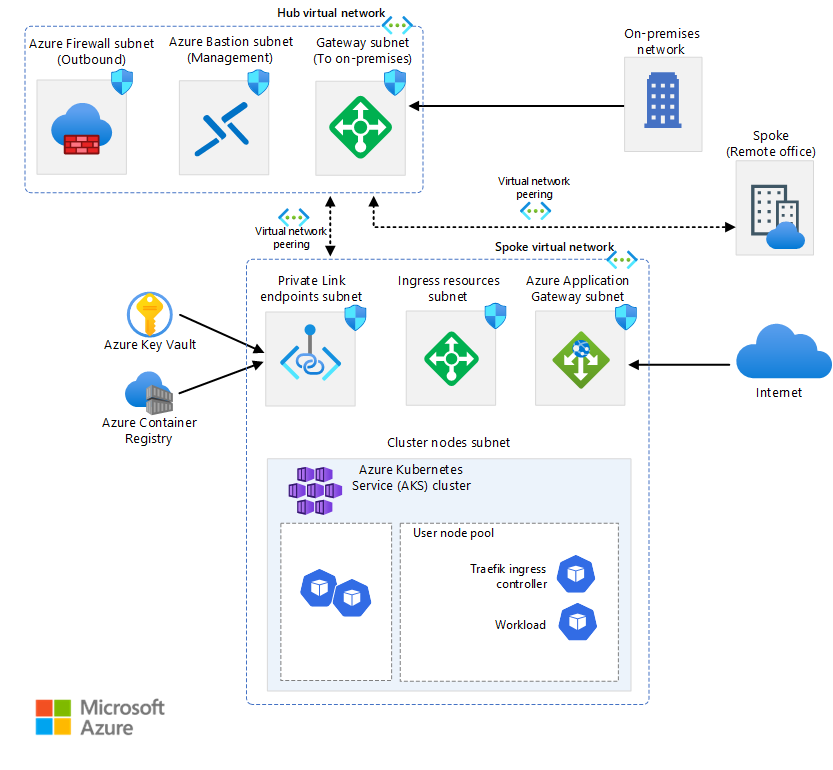

Establish deployment architecture and create the AKS cluster. AKS provides various options to configure the cluster access, node and pod scalability, network access and configuration, and more. For more information about a typical deployment architecture, see Example architecture. The AKS baseline architecture provides cluster deployment and monitoring guidelines as well. AKS construction provides quickstart templates for deploying your AKS cluster based on business and technical requirements.

Rearchitect the Service Fabric application. If you use programming models such as Reliable Services or Reliable Actors, or if you use other Service Fabric specific constructs, you might need to rearchitect your application. To implement state management when you migrate from Reliable Services, use Distributed Application Runtime (Dapr). Kubernetes provides patterns and examples for migrating from Reliable Actors.

Package the application as containers. Visual Studio provides options to generate the Dockerfile and package the application as containers. Push the container images that you create to Azure Container Registry.

Rewrite Service Fabric configuration XML files as Kubernetes YAML files. You deploy the application to AKS using YAML files or as a package by using Helm charts. For more information, see Application and service manifest.

Deploy the application to the AKS cluster.

Shift traffic to the AKS cluster based on your deployment strategies, and monitor the application's behavior, availability, and performance.

Example architecture

AKS and Azure provide flexibility to configure your environment to fit your business needs. AKS is well integrated with other Azure services. The AKS baseline architecture is an example.

As a starting point, familiarize yourself with some key Kubernetes concepts and then review some example architectures:

Note

When you migrate a workload from Service Fabric to AKS, you can replace Service Fabric Reliable Actors with the Dapr actors building block. You can replace Service Fabric Reliable Collections with the Dapr state management building block.

Dapr provides APIs that simplify microservice connectivity. For more information, see Introduction to Dapr. We recommend that you install Dapr as an AKS extension.
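
As a rough illustration of what replacing Reliable Collections with the Dapr state management building block can look like, the following sketch builds the HTTP requests that an application would send to its Dapr sidecar. It assumes the sidecar listens on port 3500 and that a state store component named `statestore` exists; both names are placeholders for this example, not values from your cluster. The requests are built but not sent, so the snippet runs without a sidecar.

```python
import json
import urllib.request

# Assumptions for this sketch: the sidecar's HTTP port and the state store
# component name are illustrative placeholders.
DAPR_BASE_URL = "http://localhost:3500"
STATE_STORE = "statestore"


def build_save_state_request(key: str, value: object) -> urllib.request.Request:
    """Build (but do not send) a request that saves one key/value pair."""
    body = json.dumps([{"key": key, "value": value}]).encode("utf-8")
    return urllib.request.Request(
        url=f"{DAPR_BASE_URL}/v1.0/state/{STATE_STORE}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_get_state_request(key: str) -> urllib.request.Request:
    """Build (but do not send) a request that reads one key."""
    return urllib.request.Request(
        url=f"{DAPR_BASE_URL}/v1.0/state/{STATE_STORE}/{key}",
        method="GET",
    )


if __name__ == "__main__":
    request = build_save_state_request("order-42", {"status": "shipped"})
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # In a pod that has a Dapr sidecar, urllib.request.urlopen(request)
    # would send the request to the sidecar.
```

Because the application talks only to the sidecar, the backing store can be swapped in the Dapr component configuration without changing this code.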

Application and service manifest

Service Fabric and AKS have different application and service manifest file types and constructs. Service Fabric uses XML files for application and service definition. AKS uses the Kubernetes YAML file manifest to define Kubernetes objects. There are no tools that are specifically intended to migrate a Service Fabric XML file to a Kubernetes YAML file. However, you can learn about how YAML files work on Kubernetes by reviewing the following resources.

Kubernetes documentation: Understanding Kubernetes Objects.

AKS documentation for Windows nodes/applications: Create a Windows Server container on an AKS cluster by using the Azure CLI.
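
To make the mapping between the two manifest formats more concrete, here is a minimal sketch that reads the container image and endpoint port from a simplified Service Fabric service manifest and emits an equivalent Kubernetes Deployment. It is an illustration of how the concepts line up, not a migration tool. The XML sample, the `contoso.azurecr.io` image, and the function name are hypothetical, and a real manifest contains settings that this sketch ignores. The output is JSON, which `kubectl` accepts in addition to YAML.

```python
import json
import xml.etree.ElementTree as ET

FABRIC_NS = {"sf": "http://schemas.microsoft.com/2011/01/fabric"}

# Hypothetical, heavily simplified service manifest for a containerized service.
SERVICE_MANIFEST_XML = """\
<ServiceManifest Name="FrontEndPkg" Version="1.0.0"
                 xmlns="http://schemas.microsoft.com/2011/01/fabric">
  <CodePackage Name="Code" Version="1.0.0">
    <EntryPoint>
      <ContainerHost>
        <ImageName>contoso.azurecr.io/frontend:1.0.0</ImageName>
      </ContainerHost>
    </EntryPoint>
  </CodePackage>
  <Resources>
    <Endpoints>
      <Endpoint Name="FrontEndEndpoint" Port="8080" />
    </Endpoints>
  </Resources>
</ServiceManifest>
"""


def deployment_from_service_manifest(xml_text: str, app_name: str, replicas: int) -> dict:
    """Map the image and endpoint ports of a service manifest to a Deployment."""
    root = ET.fromstring(xml_text)
    image = root.find(".//sf:ContainerHost/sf:ImageName", FABRIC_NS).text.strip()
    ports = [
        {"containerPort": int(endpoint.get("Port"))}
        for endpoint in root.findall(".//sf:Endpoint", FABRIC_NS)
        if endpoint.get("Port")
    ]
    labels = {"app": app_name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app_name, "labels": labels},
        "spec": {
            # The instance count lives in the application manifest in Service
            # Fabric, so this sketch takes it as a parameter.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": app_name, "image": image, "ports": ports}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_from_service_manifest(SERVICE_MANIFEST_XML, "frontend", 3)
    print(json.dumps(manifest, indent=2))
```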

You can use Helm to define parameterized YAML manifests and create generic templates by replacing static, hardcoded values with placeholders that you can replace with custom values that are supplied at deployment time. The resulting templates that contain the custom values are rendered as valid manifests for Kubernetes.
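
The idea of replacing hardcoded values with placeholders can be sketched in a few lines. Helm itself uses Go templates and a `values.yaml` file; the following example only imitates that idea with Python's `string.Template`, so the `$name` placeholder syntax, the default values, and the `render` function are assumptions made for illustration.

```python
from string import Template

# A manifest with placeholders instead of hardcoded values.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")

# Defaults play the role that values.yaml plays in a Helm chart.
DEFAULT_VALUES = {
    "name": "frontend",
    "replicas": 2,
    "image": "contoso.azurecr.io/frontend:1.0.0",  # hypothetical image
}


def render(overrides=None) -> str:
    """Merge deployment-time overrides into the defaults and render the manifest."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return DEPLOYMENT_TEMPLATE.substitute(values)


if __name__ == "__main__":
    # Comparable in spirit to passing an override at deployment time.
    print(render({"replicas": 5}))
```

The same template can then serve several environments, with only the override values differing between them.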

Kustomize introduces a template-free way to customize application configuration that simplifies the use of off-the-shelf applications. You can use Kustomize together with Helm or as an alternative to Helm.

For more information about Helm and Kustomize, see the following resources:

- Helm documentation

- Artifact Hub

- Kustomize documentation

- Overview of a kustomization file

- Declarative management of Kubernetes objects using Kustomize

Networking

AKS provides two options for the underlying network:

Bring your own Azure virtual network to provision the AKS cluster nodes into a subnet from a virtual network that you provide.

Let the AKS resource provider create a new Azure virtual network for you in the node resource group that contains all the Azure resources that a cluster uses.

If you choose the second option, Azure manages the virtual network.

Service Fabric doesn't provide a choice of network plug-ins. If you use AKS, you need to choose one of the following:

kubenet. If you use kubenet, nodes get an IP address from the Azure virtual network subnet. Pods receive an IP address from an address space that's logically different from that of the Azure virtual network subnet of the nodes. Network address translation (NAT) is then configured so that the pods can reach resources on the Azure virtual network. The source IP address of the traffic is translated via NAT to the node's primary IP address. This approach significantly reduces the number of IP addresses that you need to reserve in your network space for pods to use.

Azure CNI. If you use Azure Container Networking Interface (CNI), every pod gets an IP address from the subnet and can be accessed directly. These IP addresses must be unique across your network space, and must be planned in advance. Each node has a configuration parameter for the maximum number of pods that it supports. You then reserve the equivalent number of IP addresses for each node. This approach requires more planning and often leads to IP address exhaustion or the need to rebuild clusters in a larger subnet as your application demands grow. You can configure the maximum number of pods that are deployable to a node when you create the cluster or when you create new node pools.

Azure CNI Overlay networking. If you use Azure CNI Overlay, the cluster nodes are deployed into an Azure Virtual Network subnet. Pods are assigned IP addresses from a private CIDR that is logically different from the address space of the virtual network that hosts the nodes. Pod and node traffic within the cluster uses an overlay network. NAT uses the node's IP address to reach resources outside the cluster. This solution saves a significant number of virtual network IP addresses and helps you seamlessly scale your cluster to large sizes. An added advantage is that you can reuse the private CIDR in different AKS clusters, which extends the IP space that's available for containerized applications in AKS.

Azure CNI Powered by Cilium. Azure CNI Powered by Cilium combines the robust control plane of Azure CNI with the data plane of Cilium to help provide high-performance networking and enhanced security.

Bring your own CNI plug-in. Kubernetes doesn't provide a network interface system by default. This functionality is provided by network plug-ins. AKS provides several supported CNI plug-ins. For more information about supported plug-ins, see Network concepts for applications in AKS.
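
The difference in IP address planning between these plug-ins is easier to see with numbers. The following sketch estimates how many virtual network addresses a node subnet needs. It assumes that Azure CNI reserves one address per node plus one per potential pod, that kubenet and Azure CNI Overlay need only one address per node, that one extra node is added during upgrades, and that Azure reserves five addresses in every subnet. The plug-in labels and function names are made up for this example, so check the current AKS guidance before you size a production subnet.

```python
AZURE_RESERVED_IPS_PER_SUBNET = 5  # Azure reserves five addresses in every subnet.


def required_ips(nodes: int, max_pods_per_node: int, plugin: str, surge_nodes: int = 1) -> int:
    """Estimate the virtual network IP addresses that the node subnet must provide."""
    total_nodes = nodes + surge_nodes  # leave room for the extra node used during upgrades
    if plugin == "azure-cni":
        # One address for the node plus one for every pod it might host.
        return total_nodes * (1 + max_pods_per_node)
    if plugin in ("kubenet", "azure-cni-overlay"):
        # Pods use a separate address space, so only nodes consume subnet addresses.
        return total_nodes
    raise ValueError(f"unknown plug-in label: {plugin}")


def smallest_subnet_prefix(ip_count: int) -> int:
    """Return the longest IPv4 prefix length whose subnet still fits ip_count addresses."""
    needed = ip_count + AZURE_RESERVED_IPS_PER_SUBNET
    host_bits = (needed - 1).bit_length()  # smallest n such that 2**n >= needed
    return 32 - host_bits


if __name__ == "__main__":
    for plugin in ("azure-cni", "kubenet"):
        ips = required_ips(nodes=50, max_pods_per_node=30, plugin=plugin)
        print(f"{plugin}: {ips} addresses, subnet /{smallest_subnet_prefix(ips)}")
    # azure-cni: 1581 addresses, subnet /21
    # kubenet: 51 addresses, subnet /26
```

For a 50-node cluster with 30 pods per node, the estimate is a /21 subnet for Azure CNI and a /26 subnet for kubenet, which illustrates why the pod-per-address model requires more planning.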

Windows containers currently support only the Azure CNI plug-in. Various choices for network policies and ingress controllers are available.

In AKS, you can use Kubernetes network policies to segregate and help secure intra-service communications by controlling which components can communicate with each other. By default, all pods in a Kubernetes cluster can send and receive traffic without limitations. To improve security, you can use Azure network policies or Calico network policies to define rules that control the traffic flow between microservices.
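
As an example of such a rule, the following sketch builds a Kubernetes `NetworkPolicy` that lets only pods with one label reach pods with another label on a single TCP port. The `app` label key, the application names, and the helper function are hypothetical. The manifest is printed as JSON, which `kubectl` accepts in addition to YAML.

```python
import json


def allow_only_from(target_app: str, allowed_app: str, port: int, namespace: str = "default") -> dict:
    """Build a NetworkPolicy that admits ingress to target_app only from allowed_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"{target_app}-allow-{allowed_app}",
            "namespace": namespace,
        },
        "spec": {
            # The pods that the policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # The only pods that may connect, and the only port they may use.
                    "from": [{"podSelector": {"matchLabels": {"app": allowed_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = allow_only_from(target_app="backend", allowed_app="frontend", port=8080)
    print(json.dumps(policy, indent=2))
```

After a policy selects a pod for ingress, traffic that doesn't match one of its rules is denied, so other pods in the namespace can no longer reach the protected pods.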

If you want to use Azure Network Policy Manager, you must use the Azure CNI plug-in. You can use Calico network policies with either the Azure CNI plug-in or the kubenet CNI plug-in. The use of Azure Network Policy Manager for Windows nodes is supported only on Windows Server 2022. For more information, see Secure traffic between pods using network policies in AKS. For more information about AKS networking, see Networking in AKS.

In Kubernetes, an ingress controller acts as a service proxy and intermediary between a service and the outside world, allowing external traffic to access the service. The service proxy typically provides various functionalities like TLS termination, path-based request routing, load balancing, and security features like authentication and authorization. Ingress controllers also provide another layer of abstraction and control for routing external traffic to Kubernetes services based on HTTP and HTTPS rules. This layer provides more fine-grained control over traffic flow and traffic management.
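
The following sketch shows how TLS termination and path-based routing are typically declared in a Kubernetes `Ingress` resource. The host name, secret name, and service names are hypothetical, and the ingress class name is an assumption that depends on which controller you install, so treat it as a placeholder.

```python
import json


def build_ingress(name: str, host: str, tls_secret: str, routes: dict,
                  ingress_class: str = "nginx") -> dict:
    """Build an Ingress that terminates TLS for host and routes by path prefix.

    routes maps a path prefix to a (service name, service port) tuple.
    """
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumption: replace with the class that your ingress controller registers.
            "ingressClassName": ingress_class,
            # The controller terminates TLS by using the certificate in this secret.
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    ingress = build_ingress(
        name="shop",
        host="shop.contoso.com",
        tls_secret="shop-tls",
        routes={"/api": ("orders-api", 80), "/": ("storefront", 80)},
    )
    print(json.dumps(ingress, indent=2))
```

In this example, requests under `/api` go to one service and all other requests go to another, which is roughly the role that the built-in reverse proxy plays for HTTP endpoints in Service Fabric.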

In AKS, there are multiple options to deploy, run, and operate an ingress controller. One option is the Application Gateway Ingress Controller, which allows you to use Azure Application Gateway as the ingress controller for TLS termination, path-based routing, and as a web application firewall. Another option is the managed NGINX ingress controller, which provides the Azure-managed option for the widely used NGINX ingress controller. Traefik ingress controller is another popular ingress controller for Kubernetes.

Each of these ingress controllers has strengths and weaknesses. To decide which one to use, consider the requirements of the application and the environment. Be sure that you use the latest release of Helm and have access to the appropriate Helm repository when you install an unmanaged ingress controller.

Persistent storage

Both Service Fabric and AKS have mechanisms to provide persistent storage to containerized applications. In Service Fabric, the Azure Files volume driver, which is a Docker volume plug-in, provides Azure Files volumes for Linux and Windows containers. It's packaged as a Service Fabric application that you can deploy to a Service Fabric cluster to provide volumes for other Service Fabric containerized applications within the cluster. For more information, see Azure Files volume driver for Service Fabric.

Applications that run in AKS might need to store and retrieve data from a persistent file storage system. AKS integrates with Azure storage services like Azure managed disks, Azure Files, Blob Storage, and Azure NetApp Files (ANF). It also integrates with non-Microsoft storage systems like Rook and GlusterFS via Container Storage Interface (CSI) drivers.

CSI is a standard for exposing block and file storage systems to containerized workloads on Kubernetes. Non-Microsoft storage providers that use CSI can write, deploy, and update plug-ins to expose new storage systems in Kubernetes, or to improve existing ones, without needing to change the core Kubernetes code and wait for its release cycles.

The CSI storage driver support on AKS allows you to natively use these Azure storage services:

Azure Disks. You can use Azure Disks to create a Kubernetes DataDisk resource. Disks can use Azure premium storage that's backed by high-performance SSDs, or Azure standard storage that's backed by standard HDDs or SSDs. For most production and development workloads, use premium storage. Azure Disks are mounted as ReadWriteOnce and are only available to one node in AKS. For storage volumes that multiple pods can access simultaneously, use Azure Files.

In contrast, Service Fabric supports the creation of a cluster or a node type that uses managed disks, but not applications that dynamically create attached managed disks via a declarative approach. For more information, see Deploy a Service Fabric cluster node type with managed data disks.

Azure Files. You can use Azure Files to mount an SMB 3.0 or 3.1 share backed by an Azure storage account to pods. With Azure Files, you can share data across multiple nodes and pods. Azure Files can use Azure standard storage backed by standard HDDs or Azure premium storage backed by high-performance SSDs.

Service Fabric provides an Azure Files volume driver as a Docker volume plug-in that provides Azure Files volumes for Docker containers. Service Fabric provides one version of the driver for Windows clusters and one for Linux clusters.

Blob Storage. You can use Blob Storage to mount blob storage (or object storage) as a file system into a container or pod. Blob storage enables an AKS cluster to support applications that work with large unstructured datasets, like log file data, images or documents, and HPC workloads. If you ingest data into Azure Data Lake Storage, you can directly mount the storage and use it in AKS without configuring another interim file system. Service Fabric doesn't support any mechanism for mounting blob storage in declarative mode.
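
The declarative approach that distinguishes AKS here can be sketched with persistent volume claims. The following example builds one claim for a disk that a single node mounts and one claim for a file share that many pods can mount. The storage class names `managed-csi-premium` and `azurefile-csi` are assumed to be classes that AKS creates by default. Run `kubectl get storageclass` to confirm what your cluster offers. The claim names and sizes are hypothetical.

```python
import json

VALID_ACCESS_MODES = {"ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany", "ReadWriteOncePod"}


def persistent_volume_claim(name: str, storage_class: str, access_mode: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that asks a storage class to provision a volume."""
    if access_mode not in VALID_ACCESS_MODES:
        raise ValueError(f"unsupported access mode: {access_mode}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Azure Disks: block storage that one node mounts at a time.
    disk_claim = persistent_volume_claim(
        "orders-db-data", "managed-csi-premium", "ReadWriteOnce", "64Gi")
    # Azure Files: a share that multiple nodes and pods can mount simultaneously.
    share_claim = persistent_volume_claim(
        "shared-uploads", "azurefile-csi", "ReadWriteMany", "100Gi")
    for claim in (disk_claim, share_claim):
        print(json.dumps(claim, indent=2))
```

When a pod references one of these claims, the CSI driver behind the storage class provisions the disk or share on demand.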

For more information about storage options, see Storage in AKS.

Application and cluster monitoring

Both Service Fabric and AKS provide native integration with Azure Monitor and its services, like Log Analytics. Monitoring and diagnostics are critical to develop, test, and deploy workloads in any cloud environment. Monitoring includes infrastructure and application monitoring.

For example, you can track how your applications are used, the actions that the Service Fabric platform takes, your resource utilization via performance counters, and the overall health of your cluster. You can use this information to diagnose and correct problems and prevent them from occurring in the future. For more information, see Monitoring and diagnostics for Service Fabric. When you host and operate containerized applications in a Service Fabric cluster, you need to set up the container monitoring solution to view container events and logs.

However, AKS has built-in integration with Azure Monitor and Container Insights, which is designed to monitor the performance of containerized workloads deployed to the cloud. Container Insights provides performance visibility by collecting memory and processor metrics from controllers, nodes, and containers that are available in Kubernetes through the Metrics API.

After you enable monitoring from Kubernetes clusters, metrics and container logs are automatically collected via a containerized version of the Log Analytics agent for Linux. Metrics are sent to the metrics database in Azure Monitor. Log data is sent to your Log Analytics workspace. This allows you to get monitoring and telemetry data for both the AKS cluster and the containerized applications that run on top of it. For more information, see Monitor AKS with Azure Monitor.

As an alternative or companion solution to Container Insights, you can configure your AKS cluster to collect metrics in Azure Monitor managed service for Prometheus. You can use this configuration to collect and analyze metrics at scale by using a Prometheus-compatible monitoring solution, which is based on the Prometheus project. The fully managed service allows you to use the Prometheus query language (PromQL) to analyze the performance of monitored infrastructure and workloads. It also lets you receive alerts without needing to operate the underlying infrastructure.

Azure Monitor managed service for Prometheus is a component of Azure Monitor Metrics. It provides more flexibility in the types of metric data that you can collect and analyze by using Azure Monitor. Prometheus metrics share some features with platform and custom metrics, but they have extra features to better support open-source tools like PromQL and Grafana.

You can configure the Azure Monitor managed service for Prometheus as a data source for both Azure Managed Grafana and self-hosted Grafana, which can run on an Azure virtual machine. For more information, see Use Azure Monitor managed service for Prometheus as data source for Grafana using managed system identity.

Add-ons for AKS

When you migrate from Service Fabric to AKS, you should consider using add-ons and extensions. AKS provides extra supported functionality for your clusters via add-ons and extensions like Kubernetes Event-driven Autoscaling (KEDA) and GitOps Flux v2. Many more integrations provided by open-source projects and third parties are commonly used with AKS. The AKS support policy doesn't cover open-source and non-Microsoft integrations. For more information, see Add-ons, extensions, and other integrations with AKS.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal authors:

Ally Ford | Product Manager II

Paolo Salvatori | Principal Customer Engineer

Brandon Smith | Program Manager II

Other contributors:

Mick Alberts | Technical Writer

Ayobami Ayodeji | Senior Program Manager

Moumita Dey Verma | Senior Cloud Solutions Architect

Francis Simy Nazareth | Senior Technology Specialist


Next steps

- AKS news: AKS release notes, the AKS Roadmap, and Azure updates

- Using Windows containers to containerize existing applications

- Frequently asked questions about AKS