This article describes a many models architecture that uses Azure Machine Learning and compute clusters. The architecture is versatile enough for situations that require complex setup.

A companion article, Many models machine learning at scale in Azure with Spark, uses Apache Spark in either Azure Databricks or Azure Synapse Analytics.

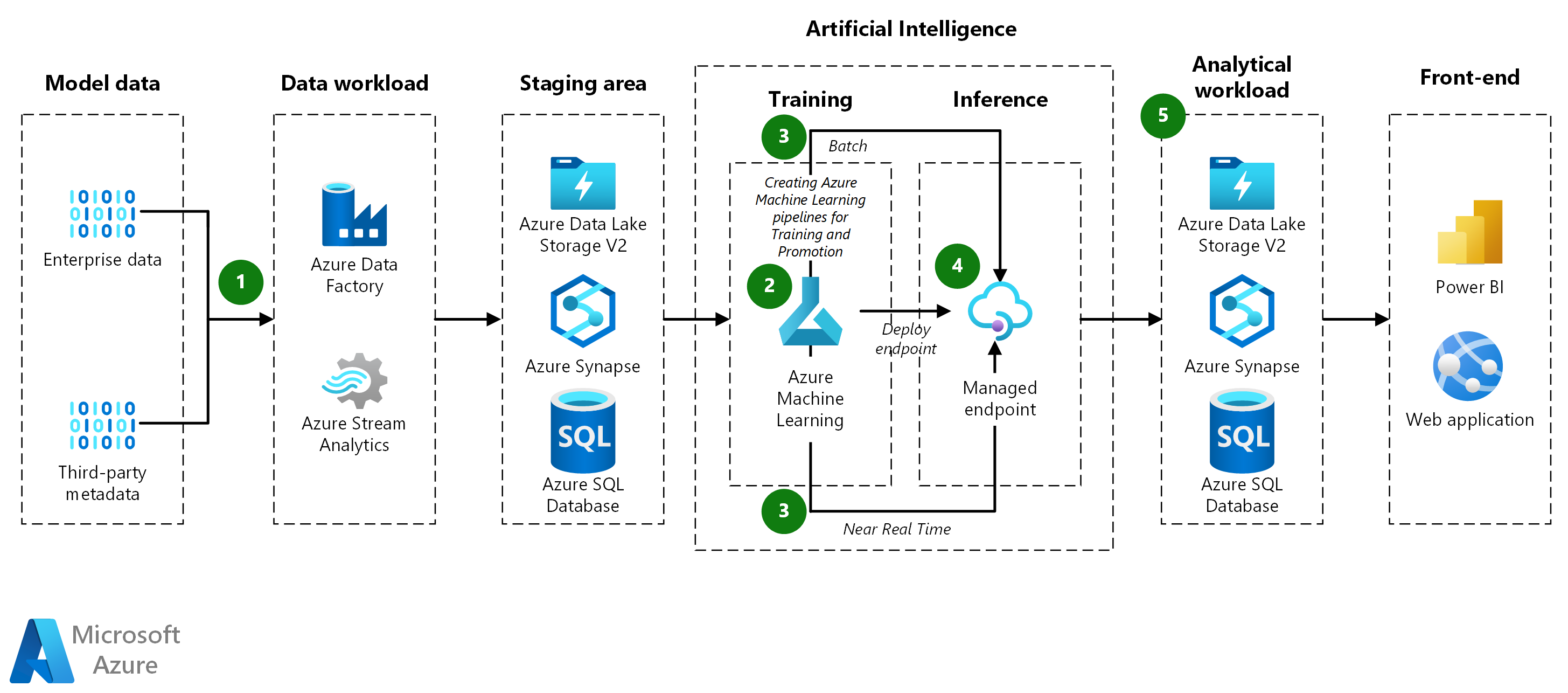

Architecture

Download a Visio file of this architecture.

Workflow

- Data ingestion: Azure Data Factory pulls data from a source database and copies it to Azure Data Lake Storage. It then stores it in a Machine Learning datastore as a tabular dataset.

- Model-training pipeline:

  - Prepare data: The training pipeline pulls the data from the datastore and transforms it further, as needed. It also groups the data into datasets for training the models.

  - Train models: The pipeline trains models for all the datasets that were created during data preparation. It uses the ParallelRunStep class to train multiple models in parallel. After a model is trained, the pipeline registers it into Machine Learning along with the testing metrics.

- Model-promotion pipeline:

  - Evaluate models: The promotion pipeline evaluates the trained models before moving them to production. A DevOps pipeline applies business logic to determine whether a model meets the criteria for deployment. For example, it might check that the model's accuracy on the testing data is over 80 percent.

  - Register models: The promotion pipeline registers the qualifying models in the production Machine Learning workspace.

- Model batch-scoring pipeline:

  - Prepare data: The batch-scoring pipeline pulls data from the datastore and further transforms each file as needed. It also groups the data into datasets for scoring.

  - Score models: The pipeline uses the ParallelRunStep class to score multiple datasets in parallel. It finds the appropriate model for each dataset in Machine Learning by searching the model tags. Then it downloads the model and uses it to score the dataset. It uses the DataTransferStep class to write the results back to Azure Data Lake Storage, and then passes the predictions from Azure Data Lake Storage to Synapse SQL for serving.

- Real-time scoring: Azure Kubernetes Service (AKS) can do real-time scoring if needed. Because of the large number of models, they should be loaded on demand, not pre-loaded.

- Results:

  - Predictions: The batch-scoring pipeline saves predictions to Synapse SQL.

  - Metrics: Power BI connects to the model predictions to retrieve and aggregate results for presentation.
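The evaluation step in the model-promotion pipeline can be pictured as a simple gate. The following is a minimal sketch only, assuming accuracy is the sole criterion and the threshold is the 80 percent used in the example above. The `TrainedModel` type, the model names, and the accuracy values are hypothetical and aren't part of Azure Machine Learning or the solution accelerator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainedModel:
    name: str             # hypothetical model name, one per dataset
    test_accuracy: float  # accuracy measured on the testing data (0.0 to 1.0)


def select_for_promotion(models, min_accuracy=0.80):
    """Return the models whose testing accuracy is over the threshold."""
    return [m for m in models if m.test_accuracy > min_accuracy]


# Illustrative values only; real metrics come from the training pipeline.
candidates = [
    TrainedModel("store1_itemA", 0.91),
    TrainedModel("store1_itemB", 0.74),
    TrainedModel("store2_itemA", 0.80),
]

promoted = select_for_promotion(candidates)
print([m.name for m in promoted])
```

In this sketch, a model at exactly the threshold isn't promoted, because the check is "over 80 percent." Real business logic might combine several metrics or compare against the model that's currently in production.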

Components

- Azure Machine Learning is an enterprise-grade machine learning service for building and deploying models quickly. It provides users at all skill levels with a low-code designer, automated ML (AutoML), and a hosted Jupyter notebook environment that supports various IDEs.

- Azure Databricks is a cloud-based data-engineering tool that's based on Apache Spark. It can process and transform massive quantities of data and explore it by using machine learning models. You can write jobs in R, Python, Java, Scala, and Spark SQL.

- Azure Synapse Analytics is an analytics service that unifies data integration, enterprise data warehousing, and big data analytics.

- Synapse SQL is a distributed query system for T-SQL that enables data warehousing and data virtualization scenarios and extends T-SQL to address streaming and machine learning scenarios. It offers both serverless and dedicated resource models.

- Azure Data Lake Storage is a massively scalable and secure storage service for high-performance analytics workloads.

- Azure Kubernetes Service (AKS) is a fully managed Kubernetes service for deploying and managing containerized applications. AKS simplifies deployment of a managed AKS cluster in Azure by offloading the operational overhead to Azure.

- Azure DevOps is a set of developer services that provide comprehensive application and infrastructure lifecycle management. DevOps includes work tracking, source control, build and CI/CD, package management, and testing solutions.

- Microsoft Power BI is a collection of software services, apps, and connectors that work together to turn unrelated sources of data into coherent, visually immersive, and interactive insights.

Alternatives

- The source data can come from any database.

- You can use a managed online endpoint or AKS to deploy real-time inferencing.

Scenario details

Many machine learning problems are too complex for a single machine learning model to solve. Whether the task is predicting sales for every item of every store or modeling maintenance for hundreds of oil wells, having a model for each instance might improve results. This many models pattern is common across a wide variety of industries and has many real-world use cases. With Azure Machine Learning, an end-to-end many models pipeline can include model training, batch-inferencing deployment, and real-time deployment.

A many models solution requires a different dataset for every model during training and scoring. For instance, if the task is to predict sales for every item of every store, every dataset will be for a unique item-store combination.
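As an illustration of one dataset per item-store combination, the following sketch groups rows by a partition key by using only the Python standard library. The column names (`store`, `item`, `week`, `units`) and the sample rows are assumptions made for the example. At production scale, this grouping would more likely be done in Spark or Synapse SQL.

```python
from collections import defaultdict


def partition_by_key(rows, key_columns):
    """Group rows into one dataset per unique combination of key columns."""
    partitions = defaultdict(list)
    for row in rows:
        key = tuple(row[column] for column in key_columns)
        partitions[key].append(row)
    return dict(partitions)


# Hypothetical sales records; a real pipeline reads these from the datastore.
sales = [
    {"store": "S1", "item": "apple", "week": 1, "units": 30},
    {"store": "S1", "item": "apple", "week": 2, "units": 28},
    {"store": "S1", "item": "pear", "week": 1, "units": 12},
    {"store": "S2", "item": "apple", "week": 1, "units": 45},
]

datasets = partition_by_key(sales, ("store", "item"))
for key, rows in datasets.items():
    print(key, len(rows))
```

Each key in `datasets` corresponds to one model to train, and later to one dataset to score with that model.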

Potential use cases

- Retail: A grocery store chain needs to create a separate revenue forecast model for each store and item, totaling over 1,000 models per store.

- Supply chain: For each combination of warehouse and product, a distribution company needs to optimize inventory.

- Restaurants: A chain with thousands of franchises needs to forecast the demand for each.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

- Data partitions: Partitioning the data is the key to implementing the many models pattern. If you want one model per store, a dataset comprises all the data for one store, and there are as many datasets as there are stores. If you want to model products by store, there's a dataset for every combination of product and store. Depending on the source data format, it might be easy to partition the data, or it might require extensive data shuffling and transformation. Spark and Synapse SQL scale very well for such tasks, but Python pandas doesn't, because it runs on only one node and in a single process.

- Model management: The training and scoring pipelines identify and invoke the right model for each dataset. To do this, they calculate tags that characterize the dataset, and then use the tags to find the matching model. The tags identify the data partition key and the model version, and might also provide other information.

- Choosing the right architecture:

  - Spark is appropriate when your training pipeline has complex data transformation and grouping requirements. It provides flexible splitting and grouping techniques to group data by combinations of characteristics, such as product-store or location-product. The results can be placed in a Spark DataFrame for use in subsequent steps.

  - When your machine learning training and scoring algorithms are straightforward, you might be able to partition data with libraries such as scikit-learn. In such cases, you might not need Spark, so you can avoid the complexities that can arise when you set up Azure Synapse or Azure Databricks.

  - When the training datasets are already created (for example, they're in separate files or in separate rows or columns), you don't need Spark for complex data transformations.

  - The Machine Learning and compute clusters solution provides great versatility for situations that require complex setup. For example, you can make use of a custom Docker container, or download files, or download pre-trained models. Computer vision and natural language processing (NLP) deep learning are examples of applications that might require such versatility.

- Spark training and scoring: When you use the Spark architecture, you can use the Spark pandas function API for parallel training and scoring.

- Separate model repos: To protect the deployed models, consider storing them in their own repository that the training and testing pipelines don't touch.

- ParallelRunStep class: The Python ParallelRunStep class is a powerful option for running many models training and inferencing. It can partition your data in a variety of ways, and then apply your machine learning script to elements of the partition in parallel. As with other forms of Machine Learning training, you can specify a custom training environment with access to Python Package Index (PyPI) packages, or a more advanced custom Docker environment for configurations that require more than standard PyPI. There are many CPUs and GPUs to choose from.

- Online inferencing: If a pipeline loads and caches all models at the start, the models might exhaust the container's memory. Therefore, load the models on demand in the run method, even though it might increase latency slightly.
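The model management and online inferencing considerations can be combined in one sketch: find the model whose tags match the partition key, then load it on demand inside `run` and keep only a bounded number of models in memory. This is an illustration under stated assumptions, not the solution's implementation. The in-memory `REGISTRY`, the tag names, the model names, and the placeholder model are all hypothetical stand-ins for a real registry lookup and a real model download.

```python
from functools import lru_cache

# Hypothetical in-memory stand-in for the model registry. Each entry carries
# tags for the data partition key (store, item) and the model version.
REGISTRY = [
    {"name": "sales_S1_apple_v1", "tags": {"store": "S1", "item": "apple", "version": "1"}},
    {"name": "sales_S1_apple_v2", "tags": {"store": "S1", "item": "apple", "version": "2"}},
    {"name": "sales_S2_pear_v1", "tags": {"store": "S2", "item": "pear", "version": "1"}},
]


def find_model_name(store, item):
    """Return the newest registered model whose tags match the partition key."""
    matches = [
        m for m in REGISTRY
        if m["tags"]["store"] == store and m["tags"]["item"] == item
    ]
    if not matches:
        raise KeyError(f"no model registered for store={store!r}, item={item!r}")
    return max(matches, key=lambda m: int(m["tags"]["version"]))["name"]


@lru_cache(maxsize=2)  # small bound so cached models can't exhaust memory
def load_model(name):
    """Simulate downloading and deserializing a model (assumed, not a real loader)."""
    # Placeholder "model": predicts the mean of the input features.
    return lambda features: sum(features) / len(features)


def run(request):
    """Score one request, loading the matching model on demand."""
    name = find_model_name(request["store"], request["item"])
    model = load_model(name)
    return {"model": name, "prediction": model(request["features"])}


first = run({"store": "S1", "item": "apple", "features": [10, 20, 30]})
second = run({"store": "S1", "item": "apple", "features": [40, 50]})
print(first, second, load_model.cache_info())
```

The first request pays the load cost, and the second request for the same partition reuses the cached model. The cache size is a trade-off between latency and memory. The value of 2 here is arbitrary.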

Cost Optimization

Cost Optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Design review checklist for Cost Optimization.

To better understand the cost of running this scenario on Azure, use the pricing calculator. Good starting assumptions are:

- The serving models are trained daily to keep them current.

- For a dataset of 40 million rows with 10 thousand combinations of store and product, training on an Azure Databricks cluster that's provisioned with 12 VMs that use Ls16_v2 instances takes about 30 minutes.

- Batch scoring with the same set of data takes about 20 minutes.

- You can use Machine Learning to deploy real-time inferencing. Depending on your request volume, choose an appropriate VM type and cluster size.

- An AKS cluster autoscales as needed, with an average of two nodes active over the month.

To see how pricing differs for your use case, change the variables to match your expected data size and serving load requirements. For larger or smaller training data sizes, increase or decrease the size of the Azure Databricks cluster. To handle more concurrent users during model serving, increase the AKS cluster size.
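The starting assumptions above translate into monthly compute hours with simple arithmetic. The following sketch assumes a 30-day month, one training run and one batch-scoring run per day, and batch scoring on the same 12-VM cluster as training. The article doesn't state the scoring cluster size, so that part is an assumption. The sketch deliberately leaves out prices. Multiply the resulting hours by the rates that the pricing calculator shows for your region and VM type.

```python
def monthly_vm_hours(vm_count, minutes_per_run, runs_per_day=1, days_per_month=30):
    """Total VM-hours per month for a job that runs on vm_count VMs."""
    return vm_count * minutes_per_run * runs_per_day * days_per_month / 60


# Daily training: 12 VMs for about 30 minutes.
training_hours = monthly_vm_hours(vm_count=12, minutes_per_run=30)

# Daily batch scoring: about 20 minutes, assumed to run on the same 12 VMs.
scoring_hours = monthly_vm_hours(vm_count=12, minutes_per_run=20)

# AKS serving: two nodes active on average, around the clock.
aks_node_hours = 2 * 24 * 30

print(training_hours, scoring_hours, aks_node_hours)
```

With these inputs, training accounts for 180 VM-hours, batch scoring for 120 VM-hours, and AKS serving for 1,440 node-hours per month. Changing the cluster size or the run duration scales the estimate linearly.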

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal author:

- James Nguyen | Principal Cloud Solution Architect

Next steps

- Configure a Kubernetes cluster for Azure Machine Learning

- Many Models Solution Accelerator GitHub repository

- ParallelRunStep Class

- DataTransferStep Class

- Connect to storage services on Azure

- What is Azure Synapse Analytics?

- Deploy a model to an Azure Kubernetes Service cluster