Upgrade an Azure Kubernetes Service (AKS) cluster

Part of the AKS cluster lifecycle involves performing periodic upgrades to the latest Kubernetes version. Applying the latest security releases and upgrades keeps your cluster secure and gives you access to the latest features. This article shows you how to check for and apply upgrades to your AKS cluster.

Kubernetes version upgrades

When you upgrade a supported AKS cluster, you can't skip Kubernetes minor versions. You must perform all upgrades sequentially by minor version number. For example, upgrades between 1.14.x -> 1.15.x or 1.15.x -> 1.16.x are allowed, but 1.14.x -> 1.16.x isn't. You can skip minor versions only when upgrading from an unsupported version back to a supported version. For example, you can perform an upgrade from an unsupported 1.10.x to a supported 1.12.x if available.

When you perform an upgrade from an unsupported version that skips two or more minor versions, the upgrade has no guarantee of functionality and is excluded from the service-level agreements and limited warranty. If your version is out of date, we recommend you recreate your cluster instead.
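
The sequential rule for supported versions can be sketched as a small shell check. This is only an illustration: is_sequential_upgrade is a hypothetical helper, not an Azure CLI command, and it assumes versions are written as major.minor or major.minor.patch. Downgrades fall outside the rule described here, and this sketch simply rejects them.

```shell
# Hypothetical helper (not part of the Azure CLI): succeeds when moving from
# version $1 to version $2 stays on the same minor version or advances by
# exactly one minor version within the same major version.
is_sequential_upgrade() {
  local cur_minor tgt_minor step
  # Strip the major part, then everything after the minor part.
  cur_minor=${1#*.}; cur_minor=${cur_minor%%.*}
  tgt_minor=${2#*.}; tgt_minor=${tgt_minor%%.*}
  # The major versions must match.
  [ "${1%%.*}" = "${2%%.*}" ] || return 1
  step=$(( tgt_minor - cur_minor ))
  [ "$step" -ge 0 ] && [ "$step" -le 1 ]
}

is_sequential_upgrade 1.14.8 1.15.5 && echo "1.14.8 -> 1.15.5: allowed"
is_sequential_upgrade 1.14.8 1.16.0 || echo "1.14.8 -> 1.16.0: skips a minor version"
```

The check doesn't know which versions AKS currently supports, so it can't model the exception for clusters on an unsupported version.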

Before you begin

- If you're using the Azure CLI, this article requires Azure CLI version 2.34.1 or later. Run az --version to find the version. If you need to install or upgrade, see Install Azure CLI.

- If you're using Azure PowerShell, this article requires Azure PowerShell version 5.9.0 or later. Run Get-InstalledModule -Name Az to find the version. If you need to install or upgrade, see Install Azure PowerShell.

- Performing upgrade operations requires the Microsoft.ContainerService/managedClusters/agentPools/write RBAC role. For more on Azure RBAC roles, see the Azure resource provider operations.

- Starting with Kubernetes version 1.30 and LTS version 1.27, beta APIs are disabled by default when you upgrade to those versions.

Warning

An AKS cluster upgrade triggers a cordon and drain of your nodes. If you have a low compute quota available, the upgrade might fail. For more information, see increase quotas.

Check for available AKS cluster upgrades

Note

To stay up to date with AKS fixes, releases, and updates, see the AKS release tracker.

Check which Kubernetes releases are available for your cluster using the az aks get-upgrades command.

    az aks get-upgrades --resource-group myResourceGroup --name myAKSCluster --output table

The following example output shows the current version as 1.26.6 and lists the available versions under upgrades:

    {
      "agentPoolProfiles": null,
      "controlPlaneProfile": {
        "kubernetesVersion": "1.26.6",
        ...
        "upgrades": [
          {
            "isPreview": null,
            "kubernetesVersion": "1.27.1"
          },
          {
            "isPreview": null,
            "kubernetesVersion": "1.27.3"
          }
        ]
      },
      ...
    }

Troubleshoot AKS cluster upgrade error messages

The following example output means the appservice-kube extension isn't compatible with your Azure CLI version (a minimum of version 2.34.1 is required):

The 'appservice-kube' extension is not compatible with this version of the CLI.

You have CLI core version 2.0.81 and this extension requires a min of 2.34.1.

Table output unavailable. Use the --query option to specify an appropriate query. Use --debug for more info.

If you receive this output, you need to update your Azure CLI version. The az upgrade command was added in version 2.11.0 and doesn't work with versions prior to 2.11.0. You can update older versions by reinstalling Azure CLI as described in Install the Azure CLI. If your Azure CLI version is 2.11.0 or later, run az upgrade to upgrade Azure CLI to the latest version.
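
The version thresholds in this section can be expressed as a short decision helper. This is a sketch under stated assumptions: version_ge and cli_update_action are hypothetical names, the version string is supplied by you (for example, read from the az --version output), and the comparison relies on sort -V from GNU coreutils.

```shell
# Hypothetical helper: succeeds when dotted version $1 is at least $2.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: prints the update path for Azure CLI version $1,
# using the 2.34.1 and 2.11.0 thresholds stated in this article.
cli_update_action() {
  if version_ge "$1" 2.34.1; then
    echo "ok"
  elif version_ge "$1" 2.11.0; then
    echo "run az upgrade"
  else
    echo "reinstall Azure CLI"
  fi
}

# The CLI core version from the example error message.
cli_update_action 2.0.81
```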

If your Azure CLI is updated and you receive the following example output, it means that no upgrades are available:

ERROR: Table output unavailable. Use the --query option to specify an appropriate query. Use --debug for more info.

If no upgrades are available, create a new cluster with a supported version of Kubernetes and migrate your workloads from the existing cluster to the new cluster. AKS doesn't support upgrading a cluster to a newer Kubernetes version when az aks get-upgrades shows that no upgrades are available.
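
The error text suggests the --query option. As a minimal sketch, the wrapper below asks for just the version strings, following the field names in the example JSON output shown earlier. list_available_upgrades is a hypothetical name, and the assumption that an empty result means no upgrades are available should be confirmed against your own cluster.

```shell
# Hypothetical wrapper: prints one available Kubernetes version per line for
# resource group $1 and cluster $2. The query path follows the example JSON
# output in this article (controlPlaneProfile -> upgrades -> kubernetesVersion).
list_available_upgrades() {
  az aks get-upgrades \
    --resource-group "$1" \
    --name "$2" \
    --query "controlPlaneProfile.upgrades[].kubernetesVersion" \
    --output tsv
}

# Example call (requires a signed-in Azure CLI session):
# list_available_upgrades myResourceGroup myAKSCluster
```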

Upgrade an AKS cluster

During the cluster upgrade process, AKS performs the following operations:

- Add a new buffer node (or as many nodes as configured in max surge) to the cluster that runs the specified Kubernetes version.

- Cordon and drain one of the old nodes to minimize disruption to running applications. If you're using max surge, it cordons and drains as many nodes at the same time as the number of buffer nodes specified.

- For long running pods, you can configure the node drain time-out, which allows for custom wait time on the eviction of pods and graceful termination per node. If not specified, the default is 30 minutes. Minimum allowed time-out value is 5 minutes. The maximum limit for drain time-out is 24 hours.

- When the old node is fully drained, it's reimaged to receive the new version and becomes the buffer node for the following node to be upgraded.

- Optionally, you can set a soak time, which is a wait between draining a node and proceeding to reimage it and move on to the next node. A short interval allows you to complete other tasks, such as checking application health from a Grafana dashboard during the upgrade process. We recommend keeping the soak time as close to 0 minutes as reasonably possible, because a higher soak time increases how long it takes to discover an issue. The minimum soak time value is 0 minutes, with a maximum of 30 minutes. If not specified, the default value is 0 minutes.

- This process repeats until all nodes in the cluster are upgraded.

- At the end of the process, the last buffer node is deleted, maintaining the existing agent node count and zone balance.

Note

If no patch is specified, the cluster automatically upgrades to the specified minor version's latest GA patch. For example, setting --kubernetes-version to 1.28 results in the cluster upgrading to 1.28.9.

For more information, see Supported Kubernetes minor version upgrades in AKS.

Upgrade your cluster using the az aks upgrade command.

    az aks upgrade \
        --resource-group myResourceGroup \
        --name myAKSCluster \
        --kubernetes-version <KUBERNETES_VERSION>

Confirm the upgrade was successful using the az aks show command.

    az aks show --resource-group myResourceGroup --name myAKSCluster --output table

The following example output shows that the cluster now runs 1.27.3:

    Name          Location    ResourceGroup    KubernetesVersion    ProvisioningState    Fqdn
    ------------  ----------  ---------------  -------------------  -------------------  ----------------------------------------------
    myAKSCluster  eastus      myResourceGroup  1.27.3               Succeeded            myakscluster-dns-379cbbb9.hcp.eastus.azmk8s.io

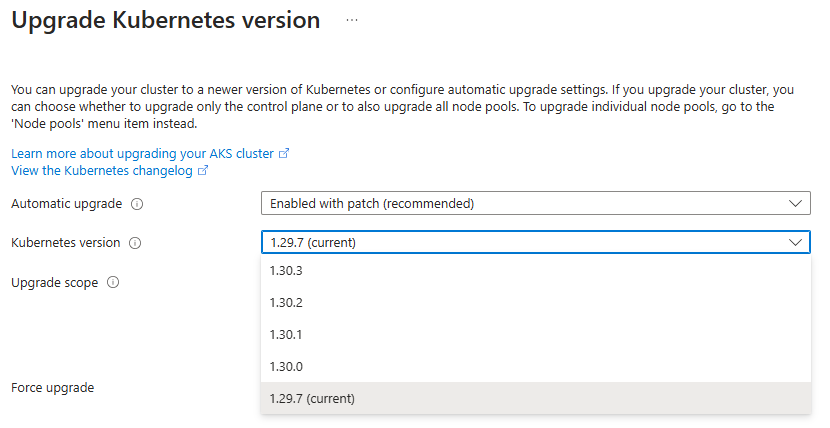

Set auto-upgrade channel

You can set an auto-upgrade channel on your cluster. For more information, see Auto-upgrading an AKS cluster.

Customize node surge upgrade

Important

Node surges require subscription quota for the requested max surge count for each upgrade operation. For example, a cluster that has five node pools, each with a count of four nodes, has a total of 20 nodes. If each node pool has a max surge value of 50%, additional compute and IP quota of 10 nodes (2 nodes * 5 pools) is required to complete the upgrade.

The max surge setting on a node pool is persistent. Subsequent Kubernetes upgrades or node version upgrades will use this setting. You can change the max surge value for your node pools at any time. For production node pools, we recommend a max-surge setting of 33%.

If you're using Azure CNI, validate there are available IPs in the subnet to satisfy IP requirements of Azure CNI.

AKS configures upgrades to surge with one extra node by default. A default value of one for the max surge setting enables AKS to minimize workload disruption by creating an extra node before it cordons and drains an older-versioned node. You can customize the max surge value per node pool. When you increase the max surge value, the upgrade process completes faster, but you might experience disruptions during the upgrade process.

For example, a max surge value of 100% provides the fastest possible upgrade process, but also causes all nodes in the node pool to be drained simultaneously. You might want to use a higher value such as this for testing environments. For production node pools, we recommend a max surge setting of 33%.

AKS accepts both integer values and percentage values for max surge. An integer such as 5 indicates five extra nodes to surge. A value of 50% indicates a surge value of half the current node count in the pool. Max surge percent values can be a minimum of 1% and a maximum of 100%. A percent value is rounded up to the nearest node count. If the max surge value is higher than the number of nodes to be upgraded, the number of nodes to be upgraded is used for the max surge value. During an upgrade, the max surge value can be a minimum of 1 and a maximum equal to the number of nodes in your node pool. You can set a larger value, but the number of nodes used for max surge never exceeds the number of nodes in the pool at the time of upgrade.
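
The rounding and capping rules above can be checked with a little shell arithmetic. The sketch below is illustrative only: surge_nodes is a hypothetical helper that follows the rules as stated in this section, not code taken from AKS. The loop reproduces the quota example of five node pools with four nodes each and a max surge of 50%.

```shell
# Hypothetical helper: prints the number of surge nodes for a pool with
# $1 nodes and a max surge setting of $2 (an integer such as 5, or a
# percentage such as 33%).
surge_nodes() {
  local count setting pct surge
  count=$1
  setting=$2
  case "$setting" in
    *%)
      pct=${setting%\%}
      # Integer ceiling of count * pct / 100: percentages round up.
      surge=$(( (count * pct + 99) / 100 ))
      ;;
    *)
      surge=$setting
      ;;
  esac
  # Never surge more nodes than the pool contains.
  if [ "$surge" -gt "$count" ]; then surge=$count; fi
  # Always surge at least one node.
  if [ "$surge" -lt 1 ]; then surge=1; fi
  echo "$surge"
}

# Quota example: five pools of four nodes each, max surge 50%.
total=0
for pool in 1 2 3 4 5; do
  total=$(( total + $(surge_nodes 4 50%) ))
done
echo "Extra nodes of quota needed: $total"
```

With the recommended production setting of 33%, a ten-node pool surges four nodes at a time, because 3.3 rounds up.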

Set max surge value

Set max surge values for new or existing node pools using the az aks nodepool add or az aks nodepool update command.

    # Set max surge for a new node pool
    az aks nodepool add --name mynodepool --resource-group MyResourceGroup --cluster-name MyManagedCluster --max-surge 33%

    # Update max surge for an existing node pool
    az aks nodepool update --name mynodepool --resource-group MyResourceGroup --cluster-name MyManagedCluster --max-surge 5

Set node drain timeout value

At times, you may have a long-running workload on a certain pod that can't be rescheduled to another node during runtime, for example, a memory-intensive stateful workload that must finish running. In these cases, you can configure a node drain time-out that AKS respects in the upgrade workflow. If no node drain time-out value is specified, the default is 30 minutes. The minimum allowed drain time-out value is 5 minutes, and the maximum is 24 hours.

If the drain time-out value elapses and pods are still running, the upgrade operation is stopped. Any subsequent PUT operation resumes the stopped upgrade. For long-running pods, we also recommend configuring terminationGracePeriodSeconds (https://kubernetes.io/docs/concepts/containers/container-lifecycle-hooks/).

Set node drain time-out for new or existing node pools using the az aks nodepool add or az aks nodepool update command. The value is specified in minutes with the --drain-timeout parameter.

    # Set drain time-out for a new node pool
    az aks nodepool add --name mynodepool --resource-group MyResourceGroup --cluster-name MyManagedCluster --drain-timeout 100

    # Update drain time-out for an existing node pool
    az aks nodepool update --name mynodepool --resource-group MyResourceGroup --cluster-name MyManagedCluster --drain-timeout 45

Set node soak time value

To allow for a duration of time to wait between draining a node and proceeding to reimage it and move on to the next node, you can set the soak time to a value between 0 and 30 minutes. If no node soak time value is specified, the default is 0 minutes.

Set node soak time for new or existing node pools using the az aks nodepool add, az aks nodepool update, or az aks nodepool upgrade command.

    # Set node soak time for a new node pool
    az aks nodepool add --name MyNodePool --resource-group MyResourceGroup --cluster-name MyManagedCluster --node-soak-duration 10

    # Update node soak time for an existing node pool
    az aks nodepool update --name MyNodePool --resource-group MyResourceGroup --cluster-name MyManagedCluster --max-surge 33% --node-soak-duration 5

    # Set node soak time when upgrading an existing node pool
    az aks nodepool upgrade --name MyNodePool --resource-group MyResourceGroup --cluster-name MyManagedCluster --max-surge 33% --node-soak-duration 20
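
Before passing values to these commands, it can help to check them against the limits stated in this article: 5 minutes to 24 hours for the drain time-out, and 0 to 30 minutes for the soak time. The helpers below are a hypothetical sketch of such a check, assuming both values are whole numbers of minutes. They aren't part of the Azure CLI.

```shell
# Hypothetical helper: succeeds when $1 is a whole number between $2 and $3.
in_range() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge "$2" ] && [ "$1" -le "$3" ]
}

# Limits taken from this article, in minutes.
valid_drain_timeout() { in_range "$1" 5 1440; }   # 5 minutes to 24 hours
valid_soak_duration() { in_range "$1" 0 30; }     # 0 to 30 minutes

valid_drain_timeout 100 && echo "Drain time-out 100: within limits"
valid_soak_duration 45  || echo "Soak time 45: outside 0-30 minutes"
```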

Upgrading through significant version drift

When upgrading from an unsupported version of Kubernetes to a supported version, it's important to test your workloads on the target version. While AKS makes every effort to upgrade your control plane and data plane, there is no guarantee your workloads will continue to work. If your workloads rely on deprecated Kubernetes APIs, or the platform has introduced breaking changes or behaviors (documented in the AKS release notes), these issues need to be resolved.

In these situations, we recommend testing your workloads on the new version and resolving any version issues before doing an in-place upgrade of your cluster.

A common pattern in this situation is to carry out a blue/green deployment of your modified workloads to a new cluster that is on a supported Kubernetes version, and route requests to the new cluster.

View upgrade events

View upgrade events using the kubectl get events command.

    kubectl get events

The following example output shows some of the above events listed during an upgrade:

    ...
    default 2m1s Normal Drain node/aks-nodepool1-96663640-vmss000001 Draining node: [aks-nodepool1-96663640-vmss000001]
    ...
    default 1m45s Normal Upgrade node/aks-nodepool1-96663640-vmss000001 Soak duration 5m0s after draining node: aks-nodepool1-96663640-vmss000001
    ...
    default 9m22s Normal Surge node/aks-nodepool1-96663640-vmss000002 Created a surge node [aks-nodepool1-96663640-vmss000002 nodepool1] for agentpool nodepool1
    ...
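
On a busy cluster, upgrade events are mixed in with many others. As a rough sketch, the filter below keeps only rows whose reason is Drain, Surge, or Upgrade, the three reasons that appear in the sample output. upgrade_events is a hypothetical helper, and it assumes the namespace is the first column, as in the sample. If your output has no namespace column, the reason is in the third field instead. The sample rows are shortened, and the Pulled row is made up to show a non-matching event.

```shell
# Hypothetical filter: reads `kubectl get events` output on stdin and keeps
# rows whose fourth column (the reason, when the namespace column is present)
# is Drain, Surge, or Upgrade.
upgrade_events() {
  awk '$4 == "Drain" || $4 == "Surge" || $4 == "Upgrade"'
}

# Shortened sample rows plus one made-up, unrelated event.
printf '%s\n' \
  'default 2m1s Normal Drain node/aks-nodepool1-96663640-vmss000001 Draining node' \
  'default 5m Normal Pulled pod/web-0 Container image already present' \
  'default 9m22s Normal Surge node/aks-nodepool1-96663640-vmss000002 Created a surge node' \
  | upgrade_events
```

Against a live cluster, the same filter can be fed from kubectl get events --all-namespaces, which includes the namespace column the filter expects.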

Next steps

To learn how to configure automatic upgrades, see Configure automatic upgrades for an AKS cluster.

For a detailed discussion of upgrade best practices and other considerations, see AKS patch and upgrade guidance.
