How to evaluate generative AI models and applications with Azure AI Foundry

To thoroughly assess the performance of your generative AI models and applications against a substantial dataset, you can start an evaluation run. During the run, your model or application is tested with the given dataset, and its performance is measured quantitatively with both mathematically based metrics and AI-assisted metrics. The run gives you insight into the application's capabilities and limitations.

To carry out this evaluation, you can utilize the evaluation functionality in Azure AI Foundry portal, a comprehensive platform that offers tools and features for assessing the performance and safety of your generative AI model. In Azure AI Foundry portal, you're able to log, view, and analyze detailed evaluation metrics.

In this article, you learn to create an evaluation run against a model, a test dataset, or a flow with built-in evaluation metrics from the Azure AI Foundry UI. For greater flexibility, you can establish a custom evaluation flow and employ the custom evaluation feature. You can also use the custom evaluation feature if your only objective is to conduct a batch run without any evaluation.

Prerequisites

To run an evaluation with AI-assisted metrics, you need to have the following ready:

- A test dataset in one of these formats: csv or jsonl.

- An Azure OpenAI connection. A deployment of one of these models: GPT 3.5 models, GPT 4 models, or Davinci models. Required only when you run AI-assisted quality evaluation.
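As a rough illustration of the jsonl format, the sketch below serializes a few rows as one JSON object per line and parses them back. The column names (query, context, response, ground_truth) and the helper names are assumptions chosen for this example; the portal lets you map your own column names to evaluation inputs later in the wizard.

```python
import json

# Illustrative rows; the column names are assumptions, not a required schema.
SAMPLE_ROWS = [
    {
        "query": "What is the capital of France?",
        "context": "France is a country in Western Europe. Its capital is Paris.",
        "response": "The capital of France is Paris.",
        "ground_truth": "Paris",
    },
    {
        "query": "How many days are in a leap year?",
        "context": "A leap year has one extra day, February 29.",
        "response": "A leap year has 366 days.",
        "ground_truth": "366",
    },
]


def dumps_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


def parse_jsonl(text):
    """Parse JSON Lines text back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def save_jsonl(path, records):
    """Write records to a .jsonl file that can be uploaded as a test dataset."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(dumps_jsonl(records))
```

Calling `save_jsonl("test_dataset.jsonl", SAMPLE_ROWS)` would produce a file with two lines, one per row.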

Create an evaluation with built-in evaluation metrics

An evaluation run allows you to generate metric outputs for each data row in your test dataset. You can choose one or more evaluation metrics to assess the output from different aspects. You can create an evaluation run from the evaluation, model catalog or prompt flow pages in Azure AI Foundry portal. Then an evaluation creation wizard appears to guide you through the process of setting up an evaluation run.

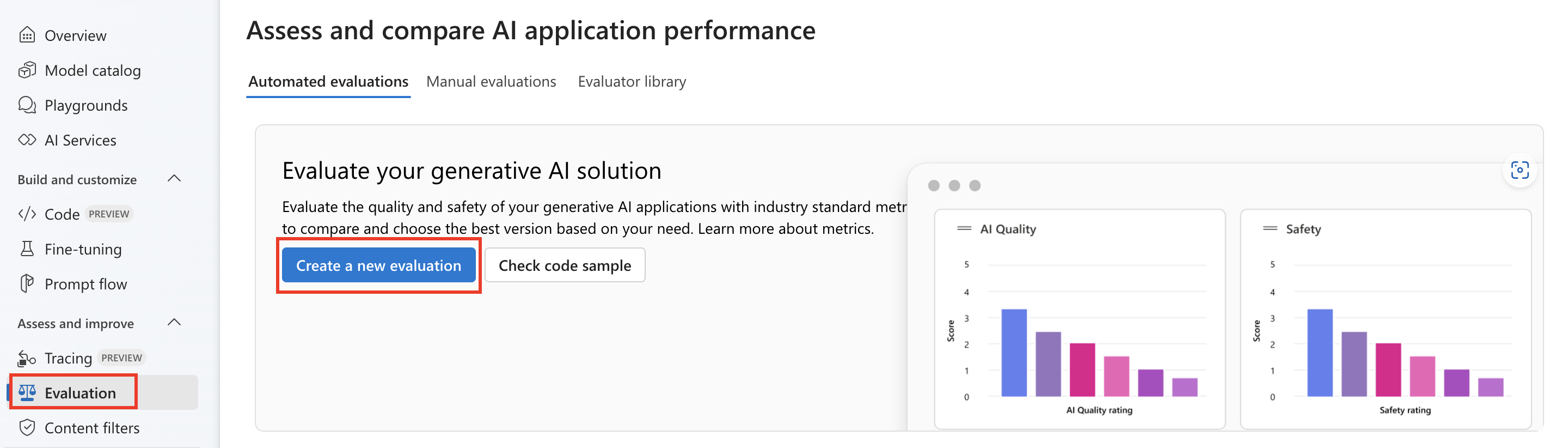

From the evaluate page

From the collapsible left menu, select Evaluation > + Create a new evaluation.

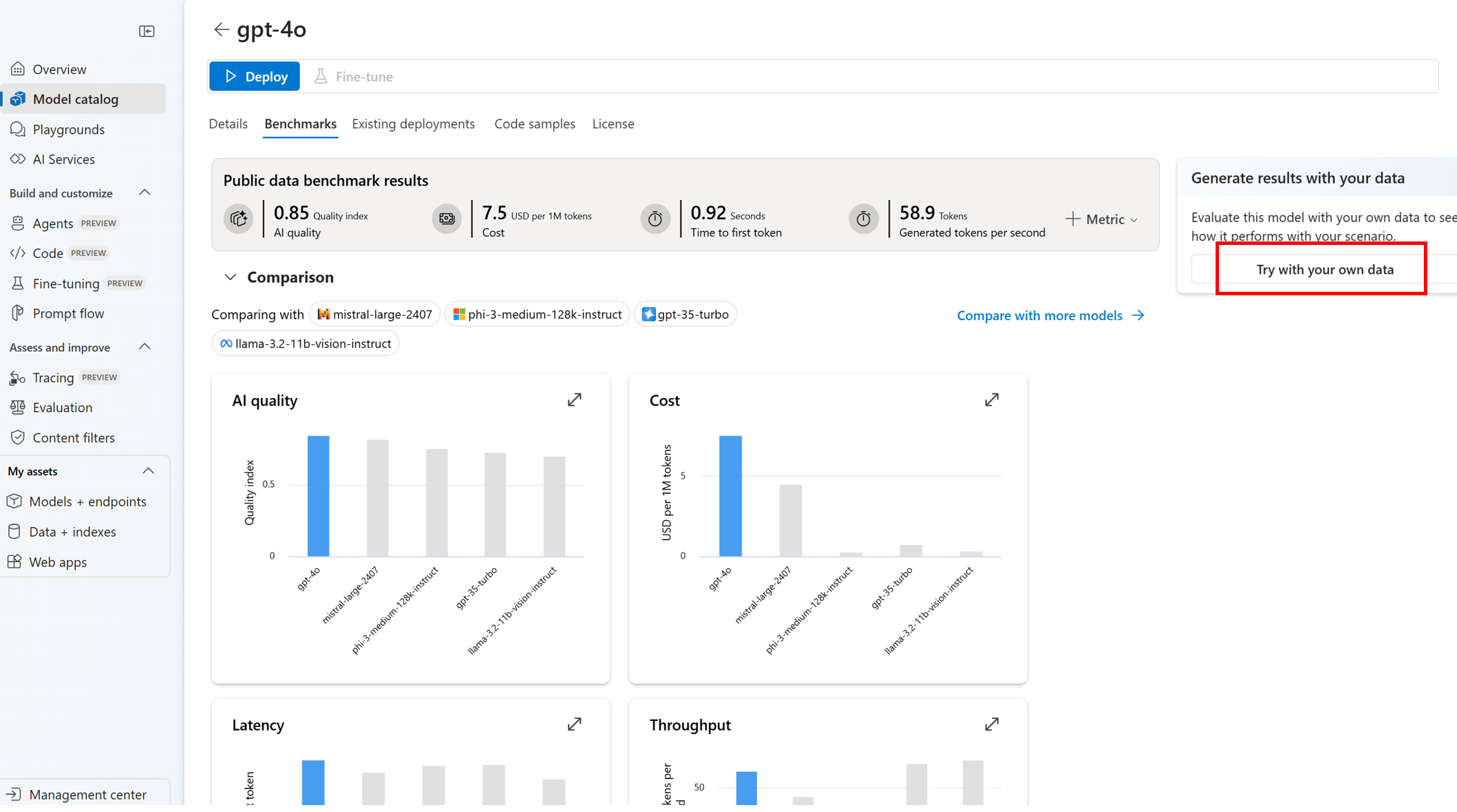

From the model catalog page

From the collapsible left menu, select Model catalog > go to specific model > navigate to the benchmark tab > Try with your own data. This opens the model evaluation panel for you to create an evaluation run against your selected model.

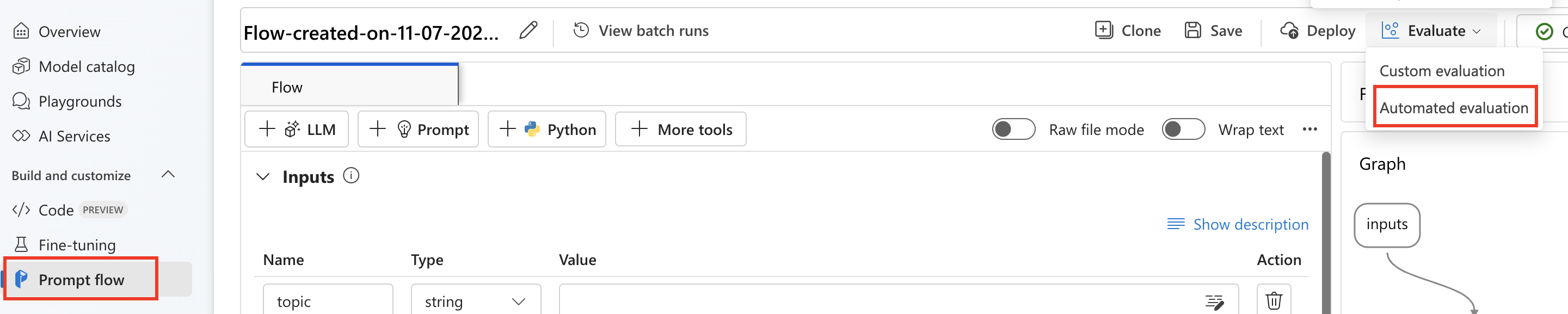

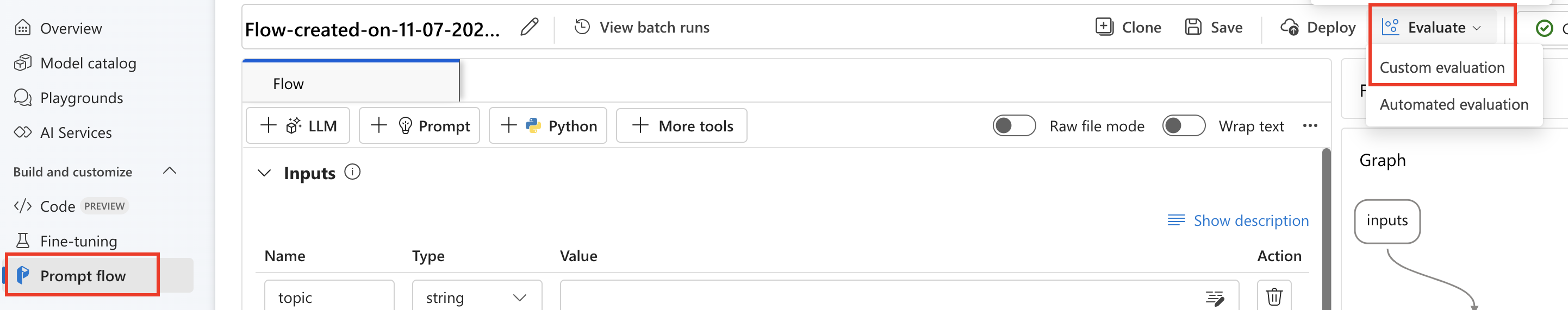

From the flow page

From the collapsible left menu, select Prompt flow > Evaluate > Automated evaluation.

Evaluation target

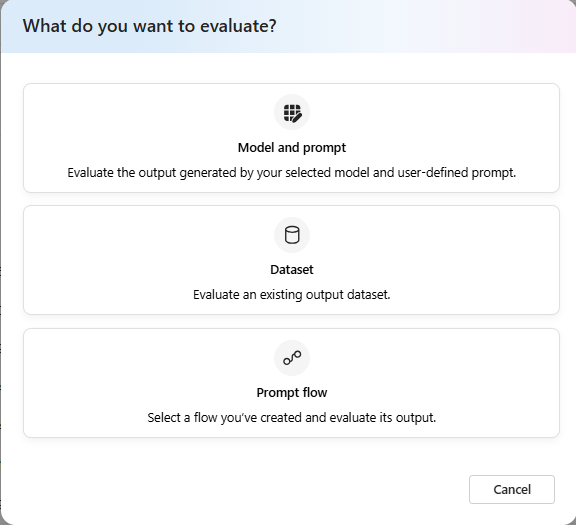

When you start an evaluation from the evaluate page, you first need to decide on the evaluation target. Specifying the appropriate target lets us tailor the evaluation to the specific nature of your application, ensuring accurate and relevant metrics. We support three types of evaluation target:

- Model and prompt: You want to evaluate the output generated by your selected model and user-defined prompt.

- Dataset: You already have your model generated outputs in a test dataset.

- Prompt flow: You have created a flow, and you want to evaluate the output from the flow.

Dataset or prompt flow evaluation

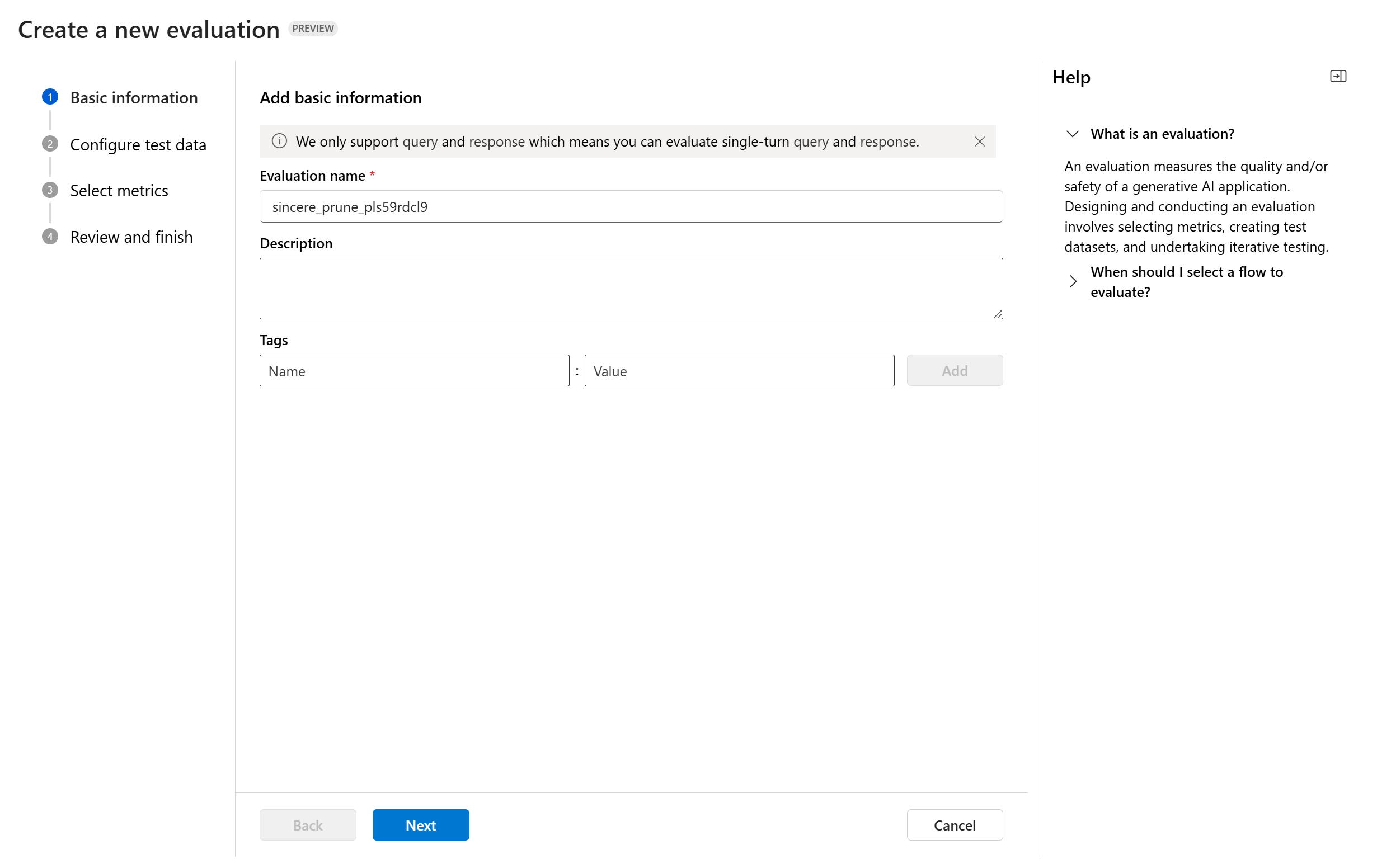

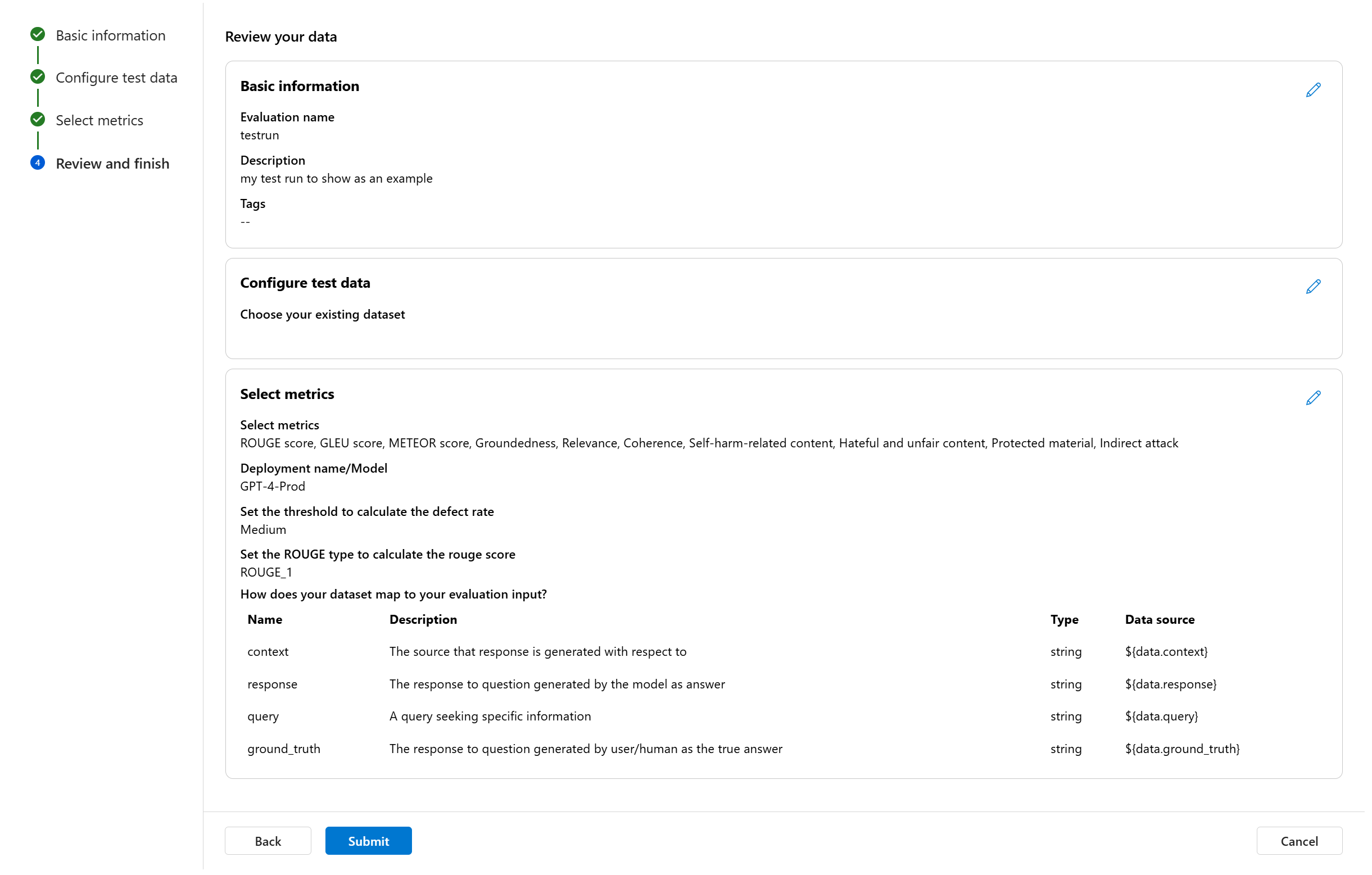

When you enter the evaluation creation wizard, you can provide an optional name for your evaluation run. We currently offer support for the query and response scenario, which is designed for applications that involve answering user queries and providing responses with or without context information.

You can optionally add descriptions and tags to evaluation runs for improved organization, context, and ease of retrieval.

You can also use the help panel to check the FAQs and guide yourself through the wizard.

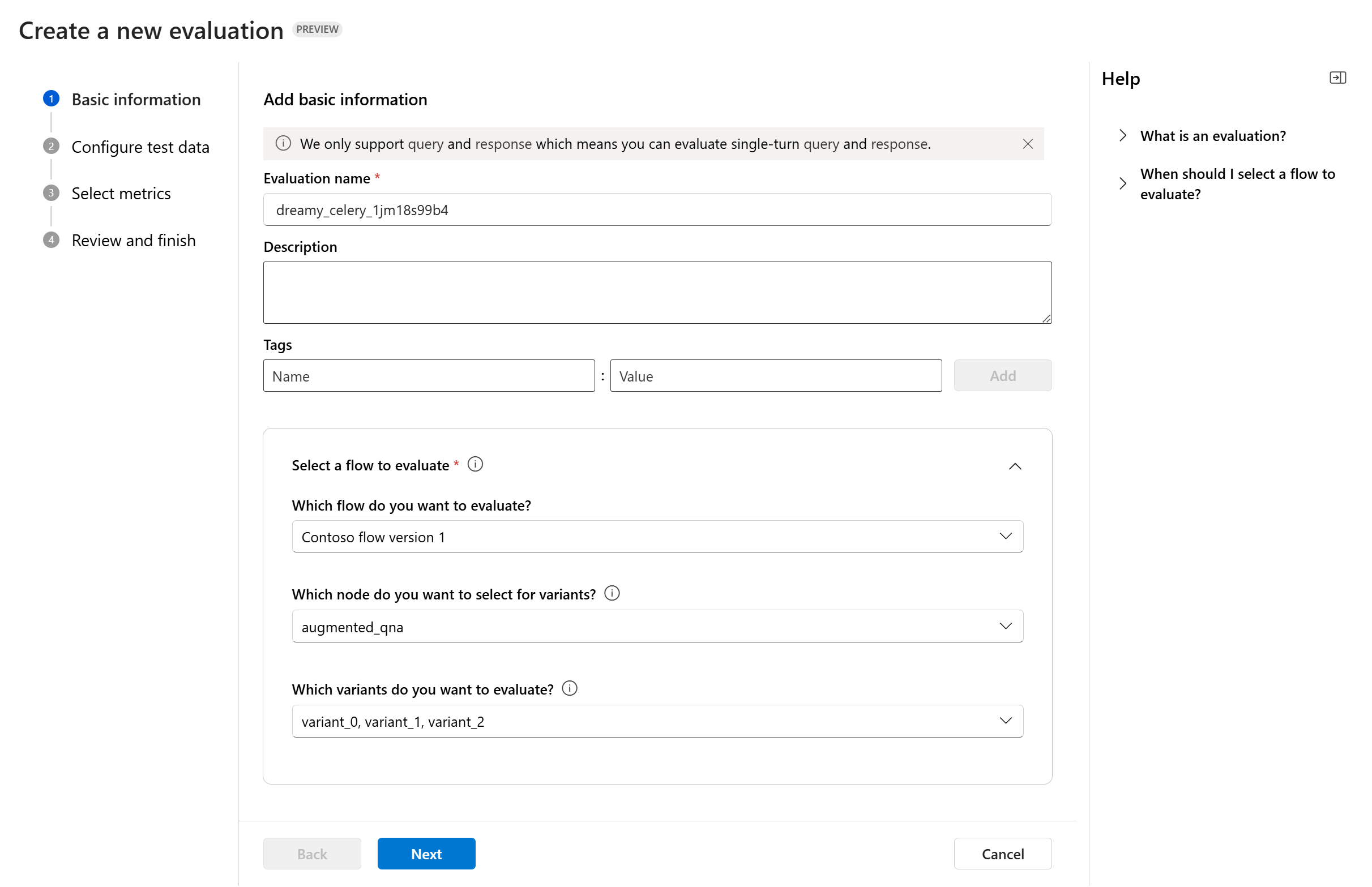

If you're evaluating a prompt flow, you can select the flow to evaluate. If you initiate the evaluation from the Flow page, we'll automatically select your flow to evaluate. If you intend to evaluate another flow, you can select a different one. It's important to note that within a flow, you might have multiple nodes, each of which could have its own set of variants. In such cases, you must specify the node and the variants you wish to assess during the evaluation process.

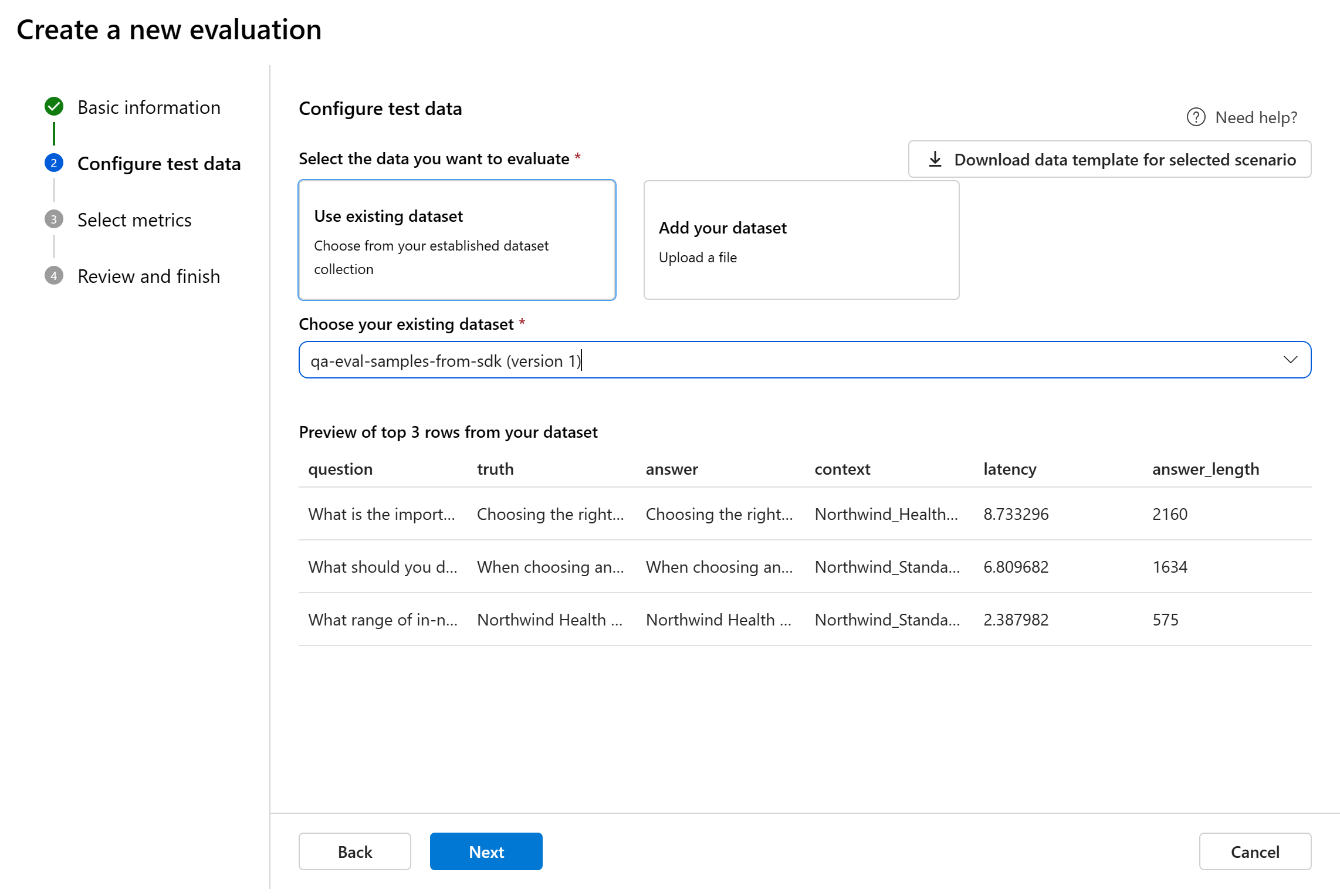

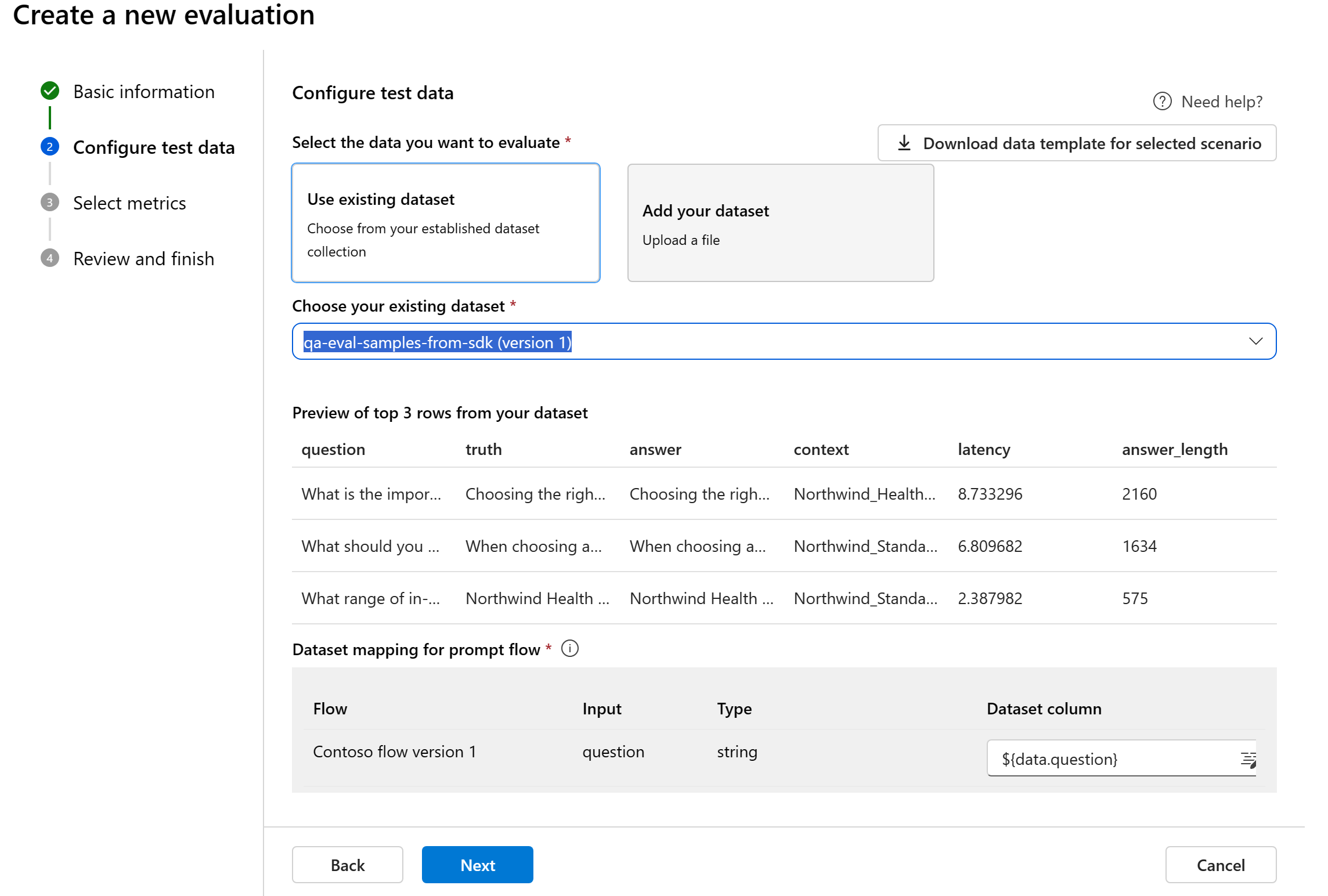

Configure test data

You can select from pre-existing datasets or upload a new dataset specifically to evaluate. The test dataset needs to have the model generated outputs to be used for evaluation if there's no flow selected in the previous step.

Choose existing dataset: You can choose the test dataset from your established dataset collection.

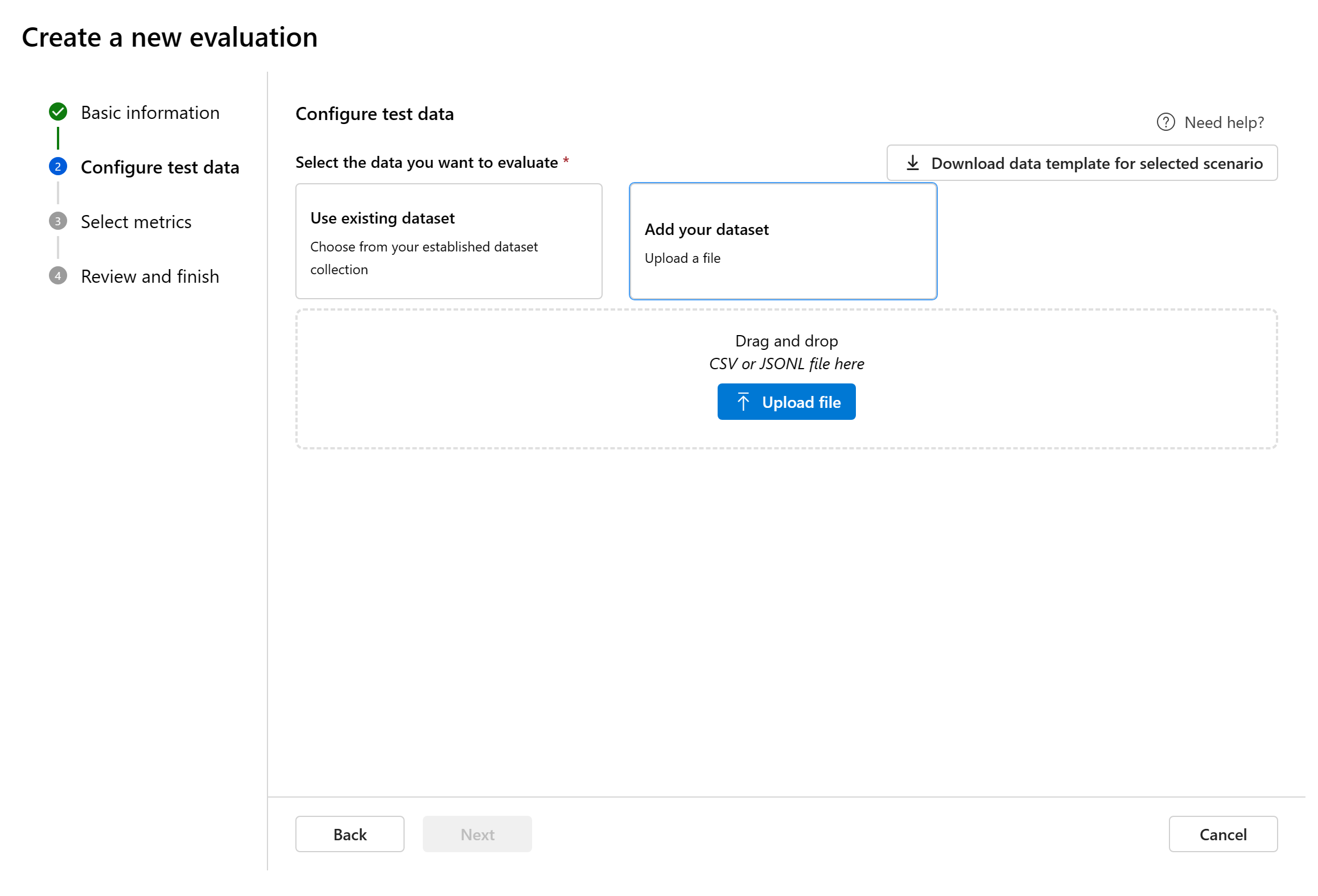

Add new dataset: You can upload files from your local storage. We only support .csv and .jsonl file formats.

Data mapping for flow: If you select a flow to evaluate, ensure that your data columns are configured to align with the required inputs for the flow to execute a batch run, generating output for assessment. The evaluation will then be conducted using the output from the flow. Then, configure the data mapping for evaluation inputs in the next step.

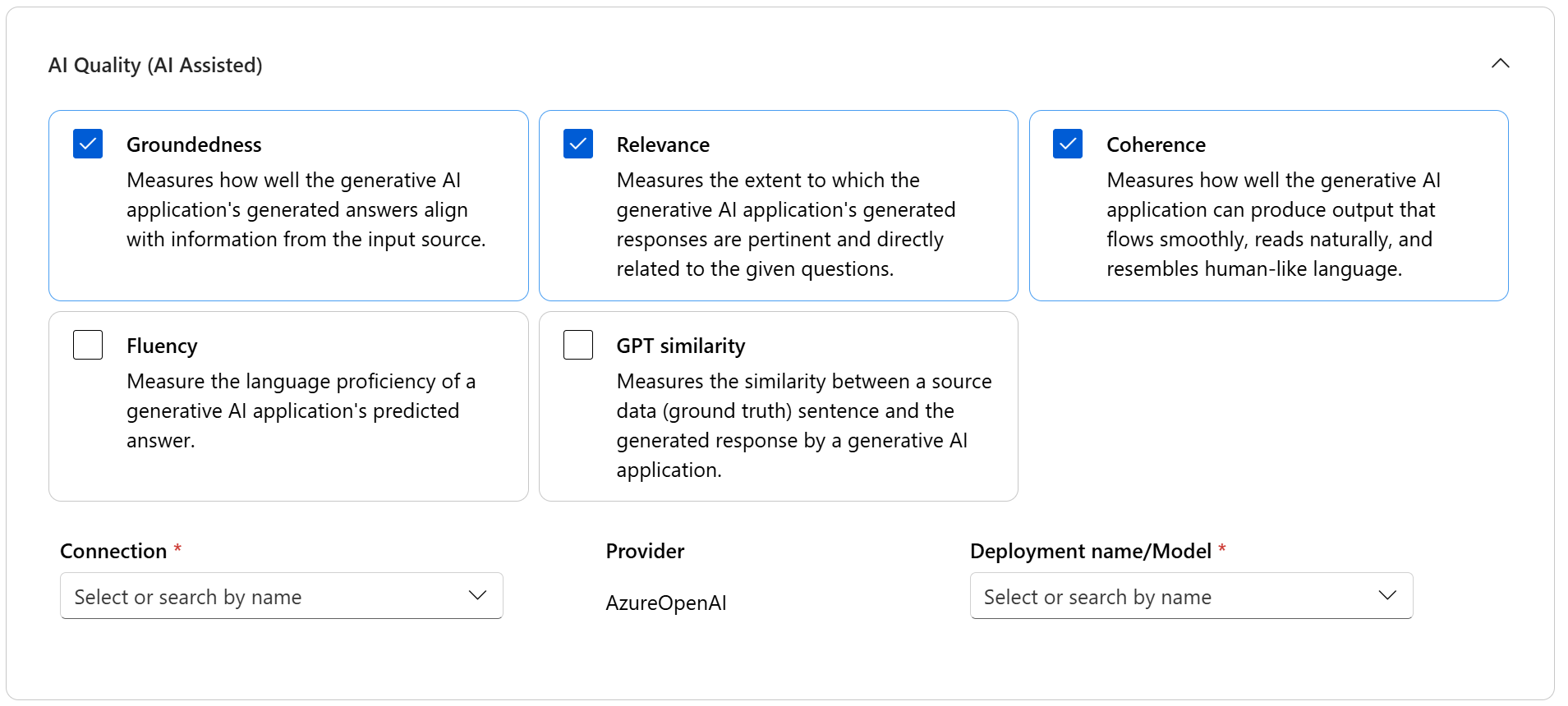

Select metrics

We support three types of metrics curated by Microsoft to facilitate a comprehensive evaluation of your application:

- AI quality (AI assisted): These metrics evaluate the overall quality and coherence of the generated content. They require a model deployment to act as the judge.

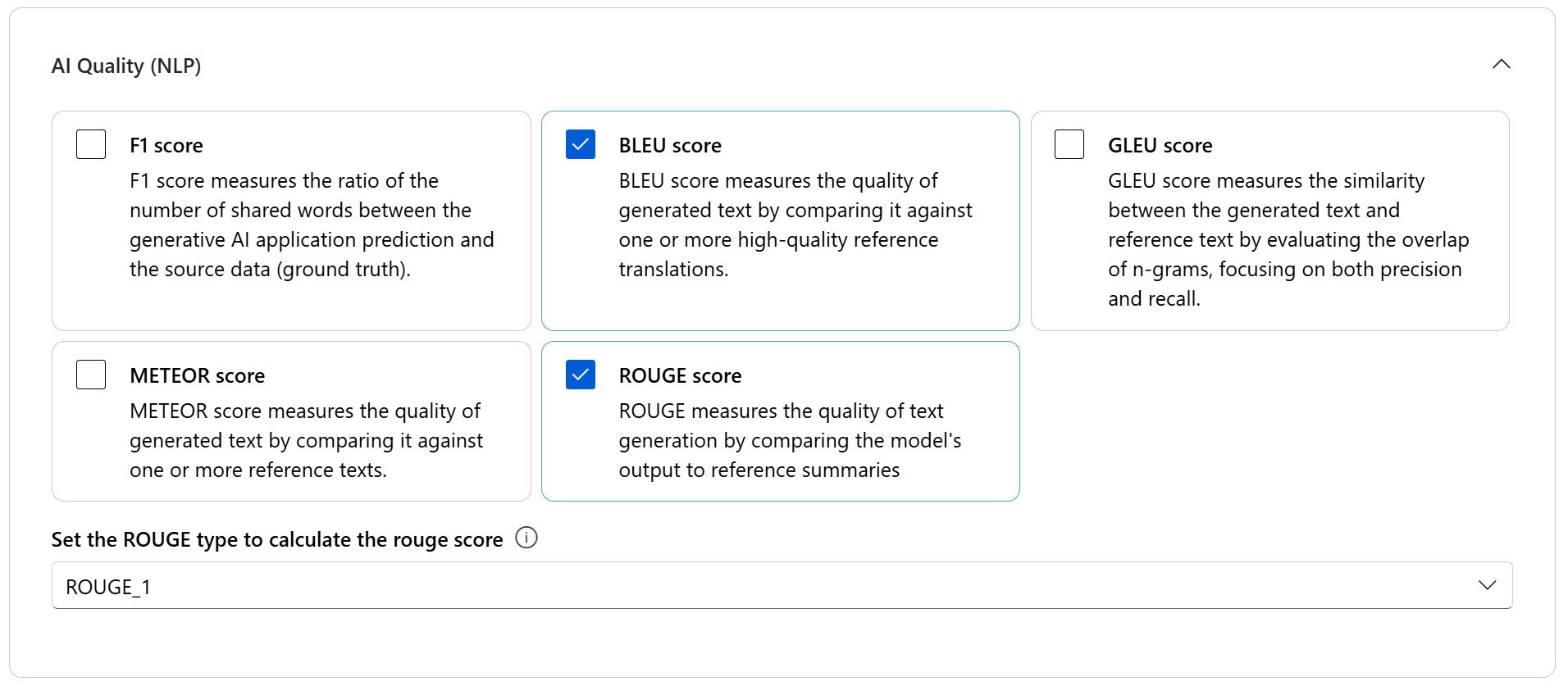

- AI quality (NLP): These NLP metrics are mathematically based, and they also evaluate the overall quality of the generated content. They often require ground truth data, but they don't require a model deployment as the judge.

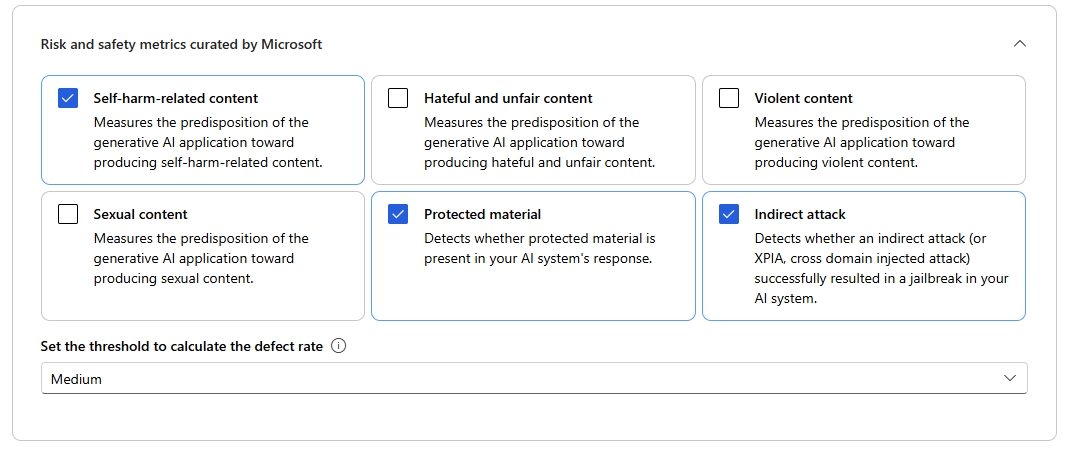

- Risk and safety metrics: These metrics focus on identifying potential content risks and ensuring the safety of the generated content.

You can refer to the table for the complete list of metrics we offer support for in each scenario. For more in-depth information on each metric definition and how it's calculated, see Evaluation and monitoring metrics.

| AI quality (AI assisted) | AI quality (NLP) | Risk and safety metrics |
|---|---|---|
| Groundedness, Relevance, Coherence, Fluency, GPT similarity | F1 score, ROUGE score, BLEU score, GLEU score, METEOR score | Self-harm-related content, Hateful and unfair content, Violent content, Sexual content, Protected material, Indirect attack |

When running AI-assisted quality evaluation, you must specify a GPT model for the calculation process. Choose an Azure OpenAI connection and a deployment of a GPT-3.5, GPT-4, or Davinci model for our calculations.

AI Quality (NLP) metrics are mathematically based measurements that assess your application's performance. They often require ground truth data for calculation. ROUGE is a family of metrics. You can select the ROUGE type to calculate the scores. Various types of ROUGE metrics offer ways to evaluate the quality of text generation. ROUGE-N measures the overlap of n-grams between the candidate and reference texts.
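To make the n-gram overlap idea concrete, here is a minimal sketch of ROUGE-N. It assumes lowercased whitespace tokenization and clipped n-gram counts, and it is not the implementation the portal uses; the function names are chosen for this example only.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """Return ROUGE-N precision, recall, and F1 for two strings.

    Assumption: simple lowercased whitespace tokenization. Real
    implementations may tokenize and stem differently.
    """
    cand = ngram_counts(candidate.lower().split(), n)
    ref = ngram_counts(reference.lower().split(), n)

    # Counter intersection keeps the minimum count of each shared n-gram,
    # so a repeated n-gram is only credited as often as it appears in both.
    overlap = sum((cand & ref).values())
    cand_total = sum(cand.values())
    ref_total = sum(ref.values())

    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    candidate = "the cat sat on the mat"
    reference = "the cat is on the mat"
    print(rouge_n(candidate, reference, n=1))
    print(rouge_n(candidate, reference, n=2))
```

In this example, five of the six candidate unigrams and three of the five candidate bigrams also occur in the reference, so the ROUGE-2 scores are lower than the ROUGE-1 scores.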

For risk and safety metrics, you don't need to provide a connection and deployment. The Azure AI Foundry portal safety evaluations back-end service provisions a GPT-4 model that can generate content risk severity scores and reasoning to enable you to evaluate your application for content harms.

You can set the threshold used to calculate the defect rate for the content harm metrics (self-harm-related content, hateful and unfair content, violent content, sexual content). The defect rate is the percentage of instances whose severity level (Very low, Low, Medium, or High) is above the threshold. By default, we set the threshold as "Medium".

For protected material and indirect attack, the defect rate is calculated by taking a percentage of instances where the output is 'true' (Defect Rate = (#trues / #instances) × 100).
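The two defect rate calculations can be sketched as follows. This is only an illustration of the arithmetic described above, not the service's code. The text says "above a threshold" without stating whether the threshold level itself counts as a defect, so the sketch defaults to a strict comparison and exposes a hypothetical `inclusive` flag for the other reading.

```python
# Severity levels in increasing order, as listed in the article.
SEVERITY_ORDER = ["Very low", "Low", "Medium", "High"]


def severity_defect_rate(severities, threshold="Medium", inclusive=False):
    """Percentage of instances whose severity exceeds the threshold.

    Assumption: inclusive=False means strictly above the threshold;
    set inclusive=True to also count instances at the threshold level.
    """
    if not severities:
        return 0.0
    rank = {level: i for i, level in enumerate(SEVERITY_ORDER)}
    cutoff = rank[threshold]
    if inclusive:
        defects = sum(1 for s in severities if rank[s] >= cutoff)
    else:
        defects = sum(1 for s in severities if rank[s] > cutoff)
    return 100 * defects / len(severities)


def boolean_defect_rate(flags):
    """Defect Rate = (#trues / #instances) x 100, for true/false outputs."""
    if not flags:
        return 0.0
    return 100 * sum(1 for f in flags if f) / len(flags)


if __name__ == "__main__":
    violent = ["Very low", "Low", "Medium", "High", "High"]
    print(severity_defect_rate(violent))                  # strictly above Medium
    print(severity_defect_rate(violent, inclusive=True))  # Medium and above
    print(boolean_defect_rate([True, False, False, True]))
```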

Note

AI-assisted risk and safety metrics are hosted by the Azure AI Foundry safety evaluations back-end service and are only available in the following regions: East US 2, France Central, UK South, Sweden Central.

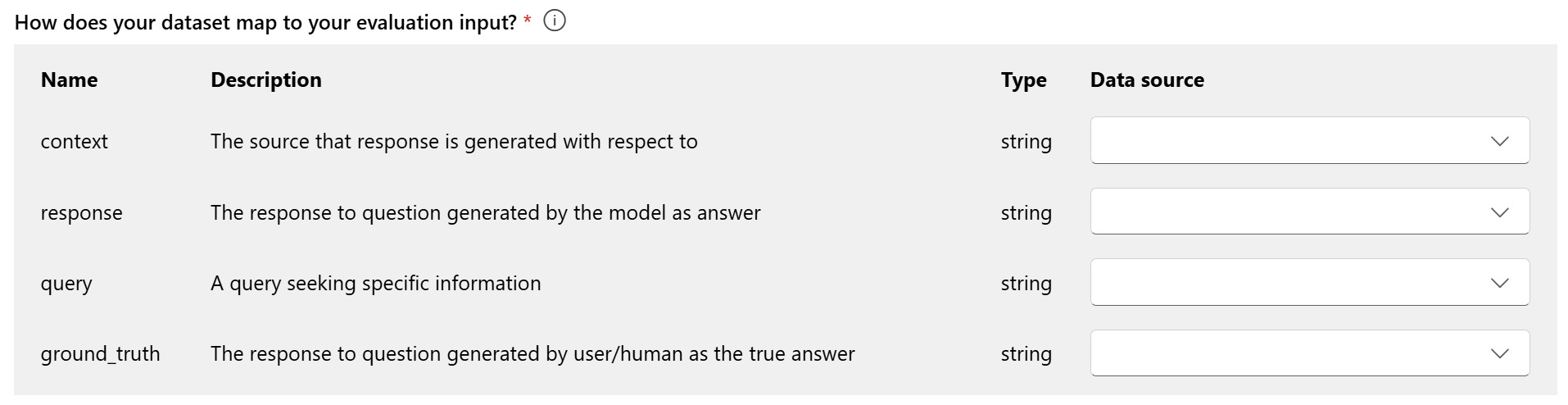

Data mapping for evaluation: You must specify which data columns in your dataset correspond with inputs needed in the evaluation. Different evaluation metrics demand distinct types of data inputs for accurate calculations.

Note

If you are evaluating from data, "response" should map to the response column in your dataset ${data.response}. If you are evaluating from flow, "response" should come from flow output ${run.outputs.response}.

For guidance on the specific data mapping requirements for each metric, refer to the information provided in the table:

Query and response metric requirements

| Metric | Query | Response | Context | Ground truth |
|---|---|---|---|---|
| Groundedness | Required: Str | Required: Str | Required: Str | N/A |
| Coherence | Required: Str | Required: Str | N/A | N/A |
| Fluency | Required: Str | Required: Str | N/A | N/A |
| Relevance | Required: Str | Required: Str | Required: Str | N/A |
| GPT-similarity | Required: Str | Required: Str | N/A | Required: Str |
| F1 score | N/A | Required: Str | N/A | Required: Str |
| BLEU score | N/A | Required: Str | N/A | Required: Str |
| GLEU score | N/A | Required: Str | N/A | Required: Str |
| METEOR score | N/A | Required: Str | N/A | Required: Str |
| ROUGE score | N/A | Required: Str | N/A | Required: Str |
| Self-harm-related content | Required: Str | Required: Str | N/A | N/A |
| Hateful and unfair content | Required: Str | Required: Str | N/A | N/A |
| Violent content | Required: Str | Required: Str | N/A | N/A |
| Sexual content | Required: Str | Required: Str | N/A | N/A |
| Protected material | Required: Str | Required: Str | N/A | N/A |
| Indirect attack | Required: Str | Required: Str | N/A | N/A |

- Query: a query seeking specific information.

- Response: the response to query generated by the model.

- Context: the source that response is generated with respect to (that is, grounding documents)...

- Ground truth: the response to query generated by user/human as the true answer.
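Before submitting a run, it can help to check a planned data mapping against the requirements table above. The sketch below encodes that table as a dictionary and reports which inputs are still missing for a metric. The input keys (query, response, context, ground_truth), the example column names, and the helper functions are assumptions made for this illustration and are not part of any Azure SDK.

```python
# Required evaluation inputs per metric, transcribed from the table above.
_QR = {"query", "response"}
_QRC = {"query", "response", "context"}
_R_GT = {"response", "ground_truth"}

REQUIRED_INPUTS = {
    "Groundedness": _QRC,
    "Coherence": _QR,
    "Fluency": _QR,
    "Relevance": _QRC,
    "GPT-similarity": {"query", "response", "ground_truth"},
    "F1 score": _R_GT,
    "BLEU score": _R_GT,
    "GLEU score": _R_GT,
    "METEOR score": _R_GT,
    "ROUGE score": _R_GT,
    "Self-harm-related content": _QR,
    "Hateful and unfair content": _QR,
    "Violent content": _QR,
    "Sexual content": _QR,
    "Protected material": _QR,
    "Indirect attack": _QR,
}


def missing_inputs(metric, mapping):
    """Return the required inputs of a metric that the mapping lacks.

    mapping: dict of evaluation input name -> dataset column name.
    """
    return REQUIRED_INPUTS[metric] - set(mapping)


def runnable_metrics(mapping):
    """List the metrics whose required inputs are all mapped."""
    mapped = set(mapping)
    return [m for m, required in REQUIRED_INPUTS.items() if required <= mapped]


if __name__ == "__main__":
    # Hypothetical dataset with only question and answer columns.
    mapping = {"query": "question", "response": "answer"}
    print(missing_inputs("Groundedness", mapping))  # context is not mapped
    print(runnable_metrics(mapping))
```

With only query and response mapped, the context-dependent and ground-truth-dependent metrics are excluded, which matches the N/A and Required cells in the table.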

Review and finish

After completing all the necessary configurations, you can review and proceed to select 'Submit' to submit the evaluation run.

Model and prompt evaluation

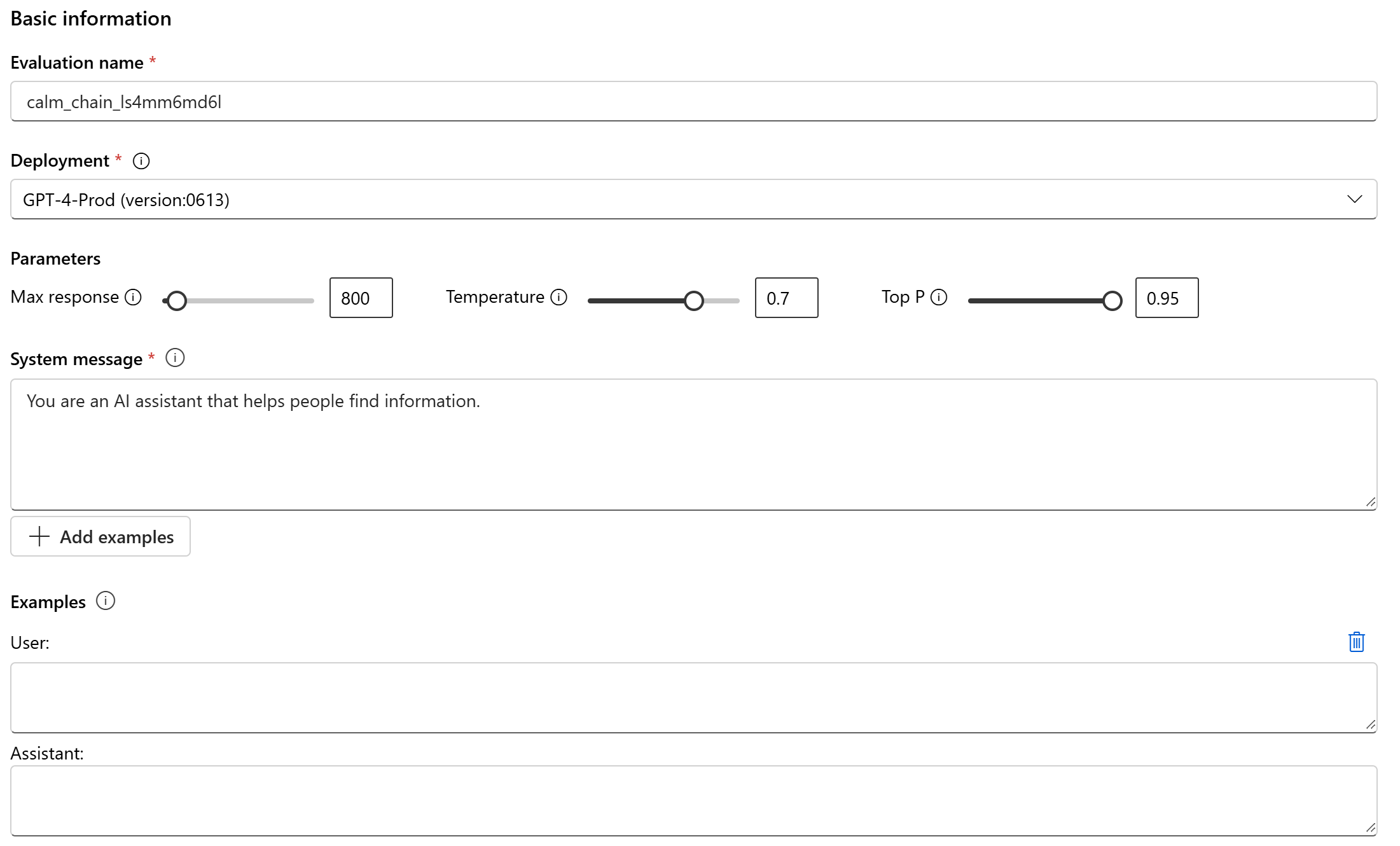

To create a new evaluation for your selected model deployment and defined prompt, use the simplified model evaluation panel. This streamlined interface allows you to configure and initiate evaluations within a single, consolidated panel.

Basic information

To start, you can set up the name for your evaluation run. Then select the model deployment you want to evaluate. We support both Azure OpenAI models and other open models compatible with Model-as-a-Service (MaaS), such as Meta Llama and Phi-3 family models. Optionally, you can adjust the model parameters like max response, temperature, and top P based on your need.

In the System message text box, provide the prompt for your scenario. For more information on how to craft your prompt, see the prompt catalog. You can add examples to show the model what responses you want. The model tries to mimic any responses you add here, so make sure they match the rules you laid out in the system message.

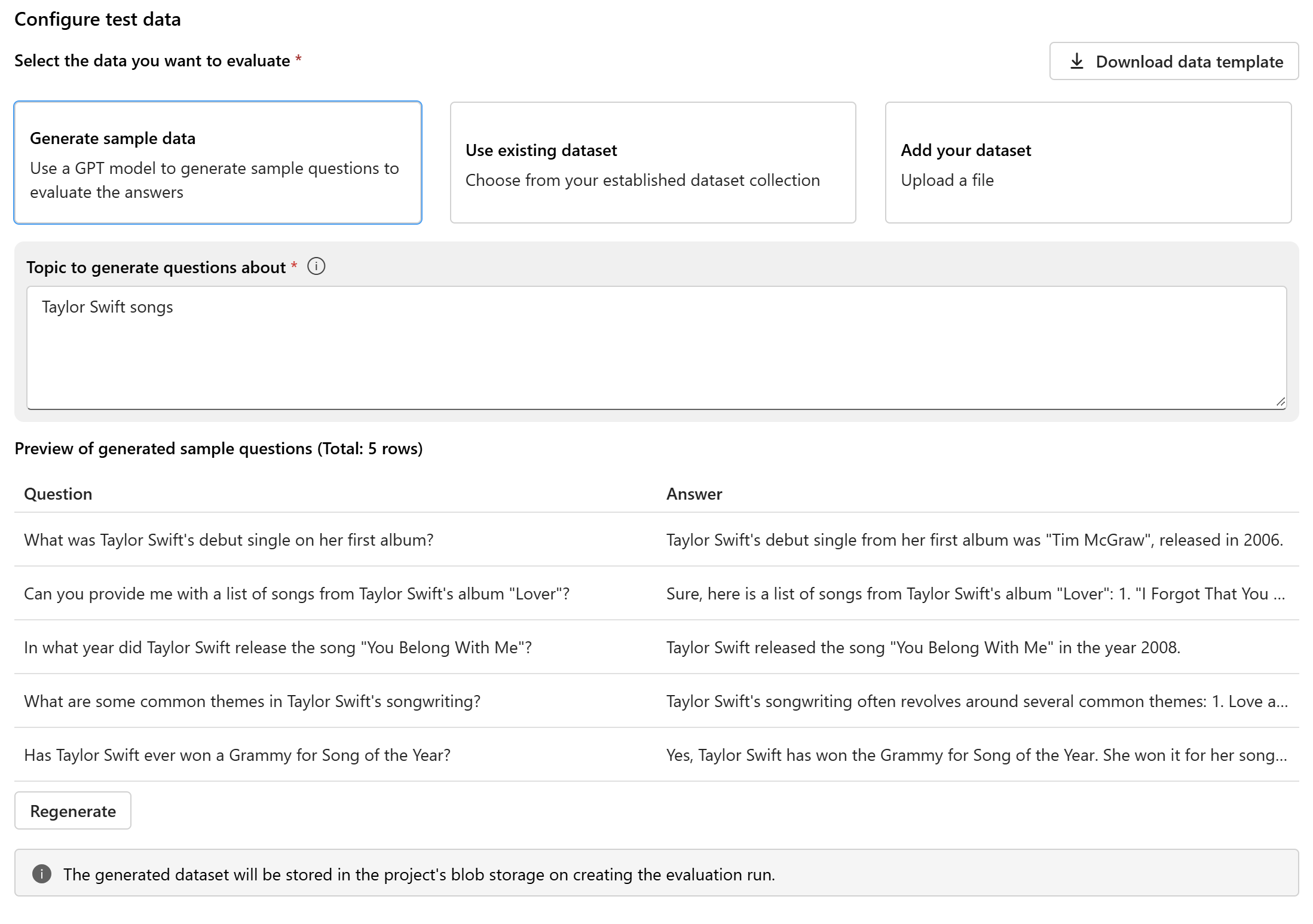

Configure test data

After configuring the model and prompt, set up the test dataset that will be used for evaluation. This dataset will be sent to the model to generate responses for assessment. You have three options for configuring your test data:

- Generate sample data

- Use existing dataset

- Add your dataset

If you don't have a dataset readily available and would like to run an evaluation with a small sample, you can select the option to use a GPT model to generate sample questions based on your chosen topic. The topic helps tailor the generated content to your area of interest. The queries and responses will be generated in real time, and you have the option to regenerate them as needed.

Note

The generated dataset will be saved to the project’s blob storage once the evaluation run is created.

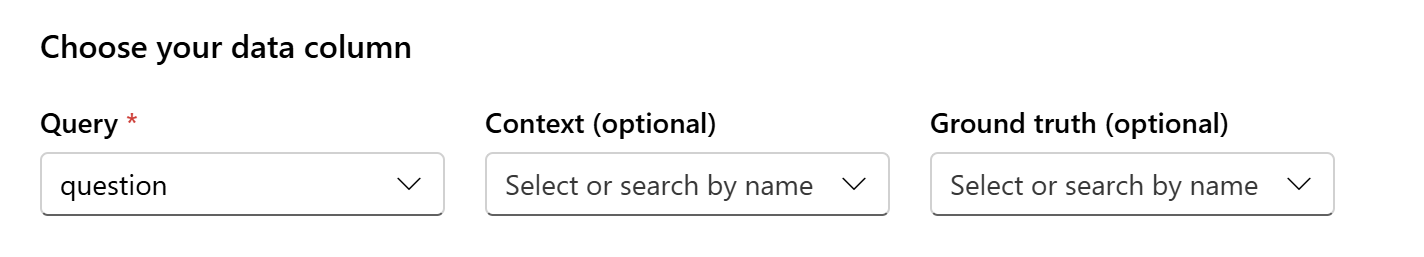

Data mapping

If you choose to use an existing dataset or upload a new dataset, you'll need to map your dataset’s columns to the required fields for evaluation. During evaluation, the model’s response will be assessed against key inputs such as:

- Query: required for all metrics

- Context: optional

- Ground Truth: optional, required for AI quality (NLP) metrics

These mappings ensure accurate alignment between your data and the evaluation criteria.

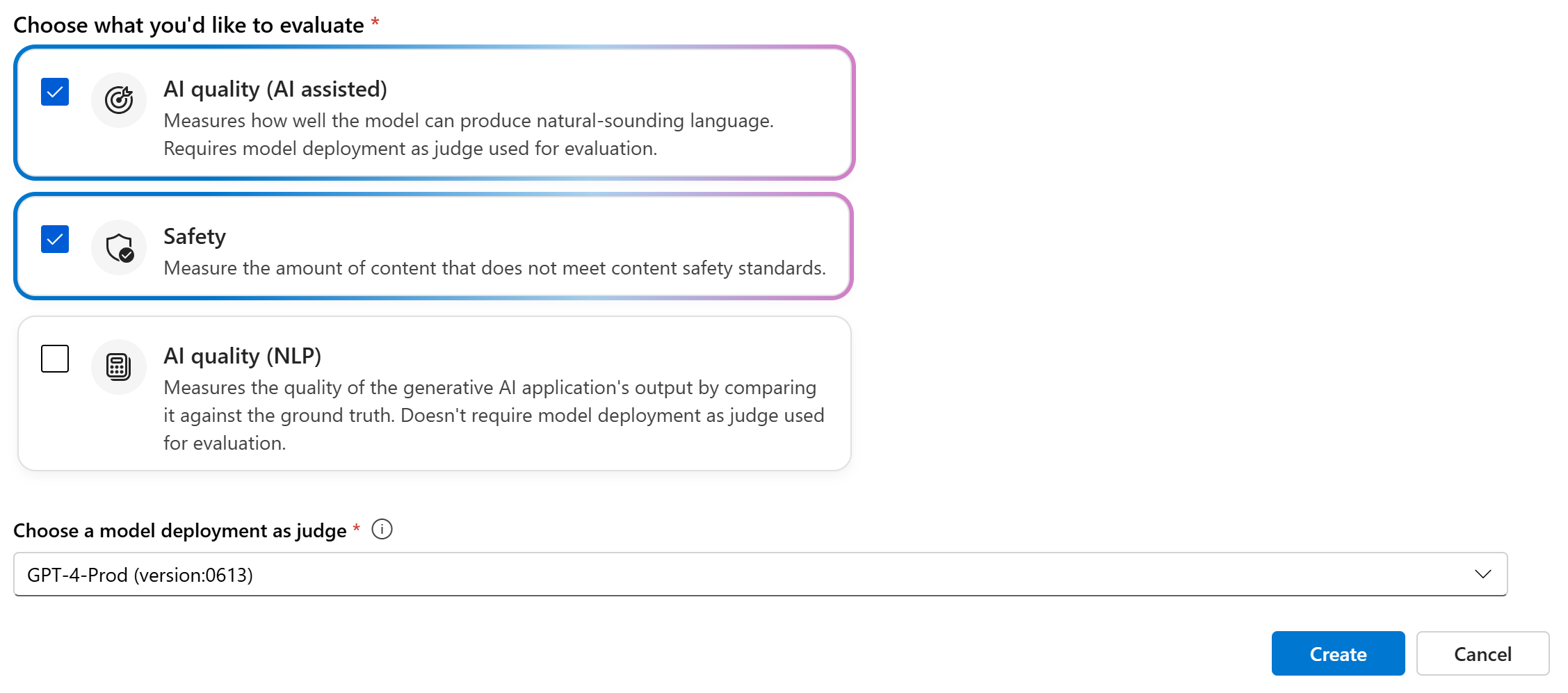

Choose evaluation metrics

The last step is to select what you’d like to evaluate. Instead of selecting individual metrics and having to familiarize yourself with all the options available, we simplify the process by allowing you to select metric categories that best meet your needs. When you choose a category, all relevant metrics within that category will be calculated based on the data columns you provided in the previous step. Once you select the metric categories, you can select “Create” to submit the evaluation run and go to the evaluation page to see the results.

We support three categories:

- AI quality (AI assisted): You need to provide an Azure OpenAI model deployment as the judge to calculate the AI assisted metrics.

- AI quality (NLP)

- Safety

| AI quality (AI assisted) | AI quality (NLP) | Safety |
|---|---|---|
| Groundedness (requires context), Relevance (requires context), Coherence, Fluency | F1 score, ROUGE score, BLEU score, GLEU score, METEOR score | Self-harm-related content, Hateful and unfair content, Violent content, Sexual content, Protected material, Indirect attack |

Create an evaluation with custom evaluation flow

You can develop your own evaluation methods:

From the flow page: From the collapsible left menu, select Prompt flow > Evaluate > Custom evaluation.

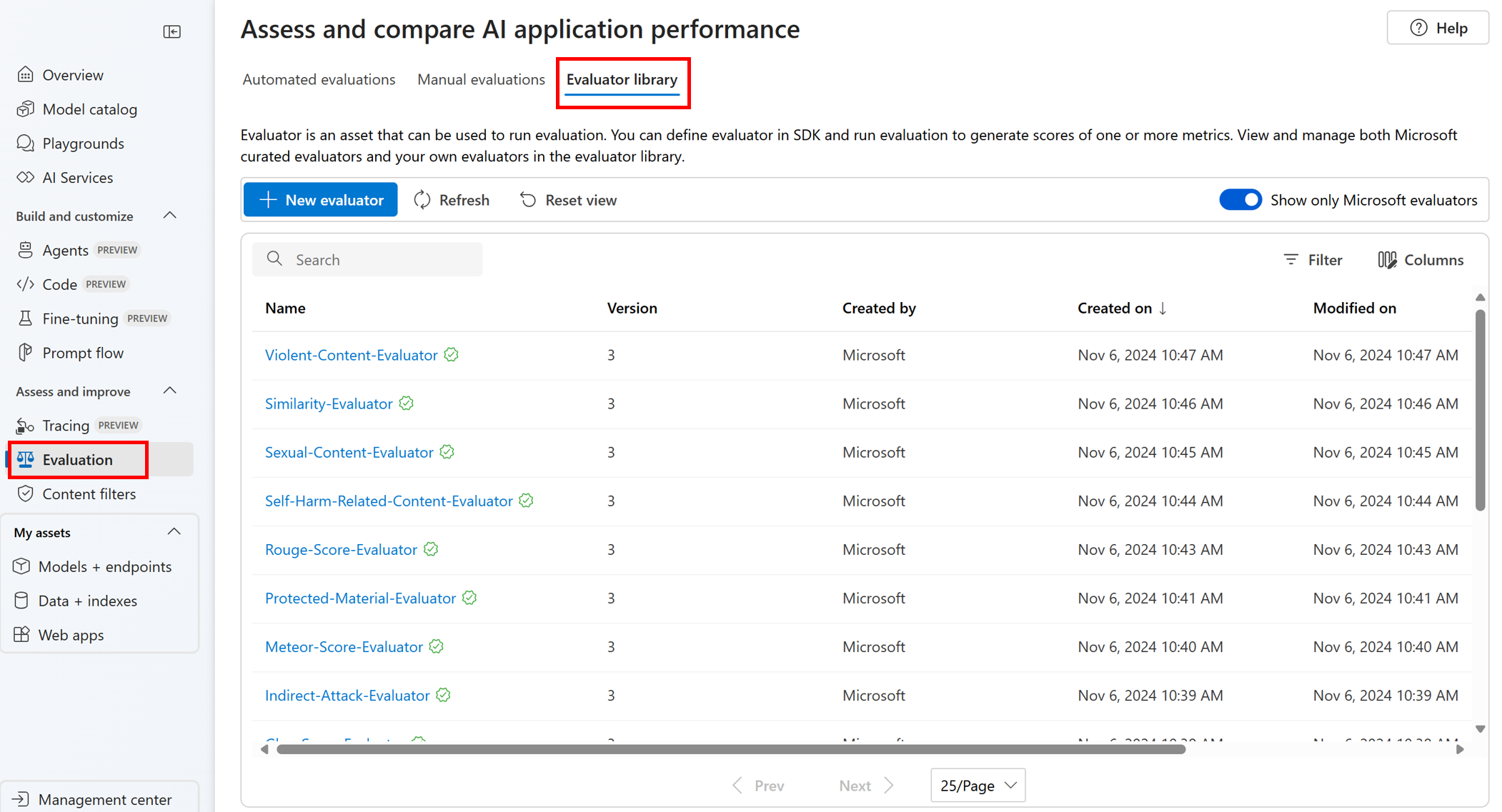

View and manage the evaluators in the evaluator library

The evaluator library is a centralized place that allows you to see the details and status of your evaluators. You can view and manage Microsoft curated evaluators.

Tip

You can use custom evaluators via the prompt flow SDK. For more information, see Evaluate with the prompt flow SDK.

The evaluator library also enables version management. You can compare different versions of your work, restore previous versions if needed, and collaborate with others more easily.

To use the evaluator library in Azure AI Foundry portal, go to your project's Evaluation page and select the Evaluator library tab.

You can select the evaluator name to see more details. You can see the name, description, and parameters, and check any files associated with the evaluator. Here are some examples of Microsoft curated evaluators:

- For performance and quality evaluators curated by Microsoft, you can view the annotation prompt on the details page. You can adapt these prompts to your own use case by changing the parameters or criteria according to your data and objectives with the Azure AI Evaluation SDK. For example, you can select Groundedness-Evaluator and check the Prompty file showing how we calculate the metric.

- For risk and safety evaluators curated by Microsoft, you can see the definition of the metrics. For example, you can select the Self-Harm-Related-Content-Evaluator and learn what it means and how Microsoft determines the various severity levels for this safety metric.

Next steps

Learn more about how to evaluate your generative AI applications: