Develop applications with LlamaIndex and Azure AI Foundry

In this article, you learn how to use LlamaIndex with models deployed from the Azure AI model catalog in Azure AI Foundry portal.

Models deployed to Azure AI Foundry can be used with LlamaIndex in two ways:

Using the Azure AI model inference API: All models deployed to Azure AI Foundry support the Azure AI model inference API, which offers a common set of functionalities that can be used for most of the models in the catalog. Because the API is the same for all models, switching from one to another is as simple as changing the model deployment being used. No further changes are required in the code. When working with LlamaIndex, install the extensions llama-index-llms-azure-inference and llama-index-embeddings-azure-inference.

Using the model's provider-specific API: Some models, like OpenAI, Cohere, or Mistral, offer their own set of APIs and extensions for LlamaIndex. Those extensions may include specific functionalities that the model supports, so they're suitable if you want to take advantage of them. When working with llama-index, install the extension specific to the model you want to use, like llama-index-llms-openai or llama-index-llms-cohere.

In this example, we are working with the Azure AI model inference API.

Prerequisites

To run this tutorial, you need:

An Azure AI project as explained at Create a project in Azure AI Foundry portal.

A model supporting the Azure AI model inference API deployed. In this example, we use a Mistral-Large deployment, but you can use any model of your preference. To use embeddings capabilities in LlamaIndex, you need an embedding model like cohere-embed-v3-multilingual.

You can follow the instructions at Deploy models as serverless APIs.

Python 3.8 or later installed, including pip.

LlamaIndex installed. You can do it with:

pip install llama-index

In this example, we are working with the Azure AI model inference API, hence we install the following packages:

pip install -U llama-index-llms-azure-inference
pip install -U llama-index-embeddings-azure-inference

Important

Using the Azure AI model inference service requires version 0.2.4 for llama-index-llms-azure-inference or llama-index-embeddings-azure-inference.
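To confirm that the installed integration packages satisfy the version requirement, a check along the following lines may help. This is a minimal sketch that uses only the Python standard library; the helper names (parse_version, meets_minimum, check_packages) are hypothetical, and the version parsing assumes plain dotted numeric versions, stopping at the first non-numeric part.

```python
from importlib import metadata

MINIMUM_VERSION = "0.2.4"  # minimum version stated in this article
PACKAGES = (
    "llama-index-llms-azure-inference",
    "llama-index-embeddings-azure-inference",
)


def parse_version(version: str) -> tuple:
    """Turn '0.2.4' into (0, 2, 4); stops at the first non-numeric part."""
    parts = []
    for part in version.split("."):
        if not part.isdigit():
            break
        parts.append(int(part))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str = MINIMUM_VERSION) -> bool:
    """Return True when the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)


def check_packages() -> dict:
    """Map each package name to True, False, or None (not installed)."""
    results = {}
    for name in PACKAGES:
        try:
            results[name] = meets_minimum(metadata.version(name))
        except metadata.PackageNotFoundError:
            results[name] = None
    return results


if __name__ == "__main__":
    for name, ok in check_packages().items():
        print(name, "->", ok)
```

A result of None means the package isn't installed in the current environment; False means it's installed but older than the minimum.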

Configure the environment

To use LLMs deployed in Azure AI Foundry portal, you need the endpoint and credentials to connect to them. Follow these steps to get the information you need from the model you want to use:

Go to the Azure AI Foundry portal.

Open the project where the model is deployed, if it isn't already open.

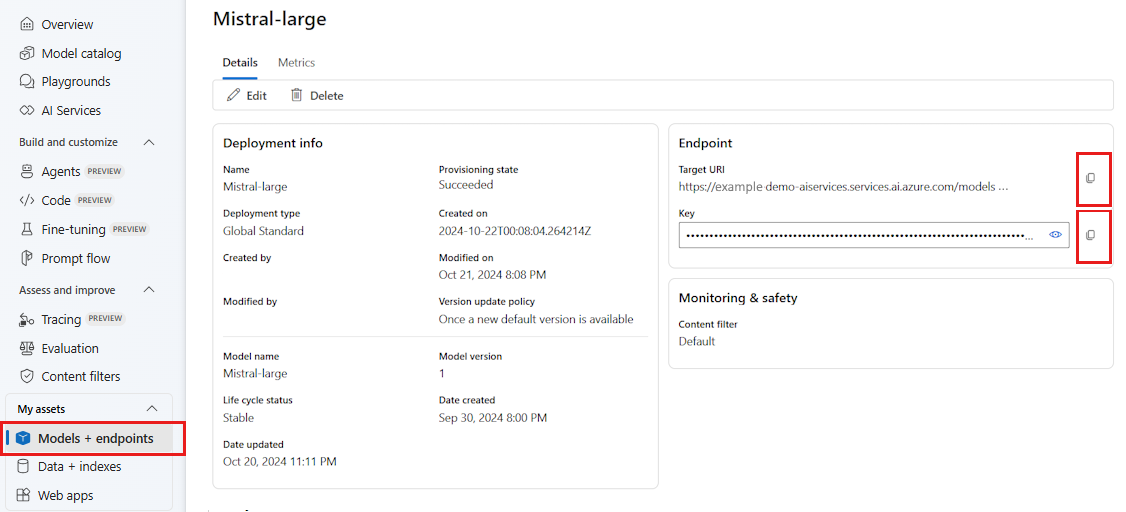

Go to Models + endpoints and select the model you deployed as indicated in the prerequisites.

Copy the endpoint URL and the key.

Tip

If your model was deployed with Microsoft Entra ID support, you don't need a key.

In this scenario, we placed both the endpoint URL and key in the following environment variables:

export AZURE_INFERENCE_ENDPOINT="<your-model-endpoint-goes-here>"

export AZURE_INFERENCE_CREDENTIAL="<your-key-goes-here>"
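Before creating a client, it can help to confirm that the variables are visible to Python. The following is a minimal sketch and not part of the LlamaIndex integration; the helper name read_inference_settings is hypothetical. It treats the key as optional, since a deployment with Microsoft Entra ID support doesn't need one.

```python
import os
from typing import Mapping, Optional, Tuple


def read_inference_settings(
    env: Optional[Mapping[str, str]] = None,
) -> Tuple[str, Optional[str]]:
    """Return (endpoint, key) read from the environment.

    The key is None when AZURE_INFERENCE_CREDENTIAL is unset or empty,
    for example when Microsoft Entra ID is used instead of a key.
    """
    env = os.environ if env is None else env

    endpoint = env.get("AZURE_INFERENCE_ENDPOINT", "").strip()
    if not endpoint:
        raise RuntimeError("AZURE_INFERENCE_ENDPOINT is not set")

    key = env.get("AZURE_INFERENCE_CREDENTIAL", "").strip() or None
    return endpoint, key
```

Calling read_inference_settings() with no arguments reads from os.environ; passing a dictionary instead makes the helper easy to exercise without touching the real environment.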

Once configured, create a client to connect to the endpoint.

import os

from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
)

Tip

If your model deployment is hosted in Azure OpenAI service or Azure AI Services resource, configure the client as indicated at Azure OpenAI models and Azure AI model inference service.

If your endpoint serves more than one model, as with the Azure AI model inference service or GitHub Models, you have to specify the model_name parameter:

import os

from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
    model_name="mistral-large-2407",
)

Alternatively, if your endpoint supports Microsoft Entra ID, you can use the following code to create the client:

import os

from azure.identity import DefaultAzureCredential
from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=DefaultAzureCredential(),
)

Note

When using Microsoft Entra ID, make sure that the endpoint was deployed with that authentication method and that you have the required permissions to invoke it.

If you plan to make asynchronous calls, it's a best practice to use the asynchronous version of the credentials:

import os

from azure.identity.aio import (
    DefaultAzureCredential as DefaultAzureCredentialAsync,
)
from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=DefaultAzureCredentialAsync(),
)

Azure OpenAI models and Azure AI model inference service

If you are using Azure OpenAI service or Azure AI model inference service, ensure you have at least version 0.2.4 of the LlamaIndex integration. Use the api_version parameter if you need to select a specific API version.

For the Azure AI model inference service, you need to pass the model_name parameter:

import os

from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint="https://<resource>.services.ai.azure.com/models",
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
    model_name="mistral-large-2407",
)

For Azure OpenAI service:

import os

from llama_index.llms.azure_inference import AzureAICompletionsModel

llm = AzureAICompletionsModel(
    endpoint="https://<resource>.openai.azure.com/openai/deployments/<deployment-name>",
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
    api_version="2024-05-01-preview",
)

Tip

Check which API version your deployment uses. Using a wrong api_version, or one not supported by the model, results in a ResourceNotFound exception.
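The two endpoint formats used in this section differ only in host and path, so it can be convenient to build them from the resource and deployment names. The following is a small sketch; the function names are hypothetical, and the values "my-resource" and "my-deployment" are placeholders rather than real resources.

```python
def model_inference_endpoint(resource: str) -> str:
    """Endpoint format for the Azure AI model inference service."""
    return f"https://{resource}.services.ai.azure.com/models"


def azure_openai_endpoint(resource: str, deployment: str) -> str:
    """Endpoint format for a deployment in Azure OpenAI service."""
    return (
        f"https://{resource}.openai.azure.com"
        f"/openai/deployments/{deployment}"
    )


# Placeholder names for illustration only.
print(model_inference_endpoint("my-resource"))
print(azure_openai_endpoint("my-resource", "my-deployment"))
```

Either result can be passed as the endpoint argument when creating the client. For the model inference service, model_name is still required.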

Inference parameters

You can configure how inference is performed for all the operations that use this client by setting extra parameters. This avoids having to specify them on each call you make to the model.

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
    temperature=0.0,
    model_kwargs={"top_p": 1.0},
)

For parameters that aren't supported in the Azure AI model inference API (reference) but are available in the underlying model, you can use the model_extras argument. In the following example, the parameter safe_prompt, only available for Mistral models, is passed.

llm = AzureAICompletionsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
    temperature=0.0,
    model_kwargs={"model_extras": {"safe_prompt": True}},
)

Use LLM models

You can use the client directly or configure the models used by your code in LlamaIndex (see Configure the models used by your code). To use the model directly, use the chat method for chat instruction models:

from llama_index.core.llms import ChatMessage

messages = [
    ChatMessage(
        role="system", content="You are a pirate with colorful personality."
    ),
    ChatMessage(role="user", content="Hello"),
]

response = llm.chat(messages)
print(response)

You can also stream the outputs:

response = llm.stream_chat(messages)
for r in response:
    print(r.delta, end="")
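When streaming, you often want the full text as well as the incremental output. The sketch below collects the deltas into one string. To stay runnable without an endpoint, it uses a stand-in class, FakeChunk, which is hypothetical and models only the delta attribute. The helper name collect_stream is also an assumption, and treating a missing delta as empty text is a defensive choice rather than documented behavior.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class FakeChunk:
    """Stand-in for a streamed chat chunk; only `delta` is modeled."""

    delta: Optional[str]


def collect_stream(chunks: Iterable, echo: bool = True) -> str:
    """Print each delta as it arrives and return the concatenated text."""
    parts = []
    for chunk in chunks:
        piece = chunk.delta or ""  # treat a missing delta as empty text
        if echo:
            print(piece, end="", flush=True)
        parts.append(piece)
    if echo:
        print()  # finish the line once the stream ends
    return "".join(parts)


# Simulated stream; with a real client, pass llm.stream_chat(messages).
fake_stream = [FakeChunk("Ahoy"), FakeChunk(None), FakeChunk(", matey!")]
full_text = collect_stream(fake_stream, echo=False)
```

With a configured client, the call would be collect_stream(llm.stream_chat(messages)).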

The complete method is still available for models of type chat-completions. In those cases, your input text is converted to a message with role="user".

Use embeddings models

In the same way you create an LLM client, you can connect to an embeddings model. In the following example, we set the environment variables to point to an embeddings model:

export AZURE_INFERENCE_ENDPOINT="<your-model-endpoint-goes-here>"

export AZURE_INFERENCE_CREDENTIAL="<your-key-goes-here>"

Then create the client:

import os

from llama_index.embeddings.azure_inference import AzureAIEmbeddingsModel

embed_model = AzureAIEmbeddingsModel(
    endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
    credential=os.environ["AZURE_INFERENCE_CREDENTIAL"],
)

The following example shows a simple test to verify it works:

from llama_index.core.schema import TextNode

nodes = [
    TextNode(
        text="Before college the two main things I worked on, "
        "outside of school, were writing and programming."
    )
]

response = embed_model(nodes=nodes)
print(response[0].embedding)
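Printing the raw vector confirms that the call succeeded, but says little about whether the embeddings are meaningful. One common sanity check is to embed two texts and compare the resulting vectors with cosine similarity. The following is a minimal standard-library sketch; the function name and the sample vectors are illustrative and are not output from any model.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Illustrative vectors only; real embeddings have many more dimensions.
same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
```

With real embeddings, you would pass the embedding attribute of two embedded nodes. Texts with related meaning are generally expected to score higher than unrelated ones.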

Configure the models used by your code

You can use the LLM or embeddings model client individually in the code you develop with LlamaIndex or you can configure the entire session using the Settings options. Configuring the session has the advantage of all your code using the same models for all the operations.

from llama_index.core import Settings

Settings.llm = llm
Settings.embed_model = embed_model

However, there are scenarios where you want to use a general model for most of the operations but a specific one for a given task. In those cases, it's useful to set the LLM or embedding model you are using for each LlamaIndex construct. In the following example, we set a specific model:

from llama_index.core.evaluation import RelevancyEvaluator

relevancy_evaluator = RelevancyEvaluator(llm=llm)

In general, you use a combination of both strategies.