Install and run Docker containers for LUIS

Important

LUIS will be retired on October 1st 2025 and starting April 1st 2023 you will not be able to create new LUIS resources. We recommend migrating your LUIS applications to conversational language understanding to benefit from continued product support and multilingual capabilities.

Note

The container image location has recently changed. Read this article to see the updated location for this container.

Containers enable you to use LUIS in your own environment. Containers are great for specific security and data governance requirements. In this article you'll learn how to download, install, and run a LUIS container.

The Language Understanding (LUIS) container loads your trained or published Language Understanding model. As a LUIS app, the docker container provides access to the query predictions from the container's API endpoints. You can collect query logs from the container and upload them back to the Language Understanding app to improve the app's prediction accuracy.

If you don't have an Azure subscription, create a free account before you begin.

Prerequisites

To run the LUIS container, note the following prerequisites:

- Docker installed on a host computer. Docker must be configured to allow the containers to connect with and send billing data to Azure.

- On Windows, Docker must also be configured to support Linux containers.

- You should have a basic understanding of Docker concepts.

- A LUIS resource with the free (F0) or standard (S) pricing tier.

- A trained or published app packaged as a mounted input to the container with its associated App ID. You can get the packaged file from the LUIS portal or the Authoring APIs. If you get the LUIS packaged app from the Authoring APIs, you also need your Authoring Key.

Gather required parameters

Three primary parameters for all Azure AI containers are required. The Microsoft Software License Terms must be present with a value of accept. An Endpoint URI and API key are also needed.

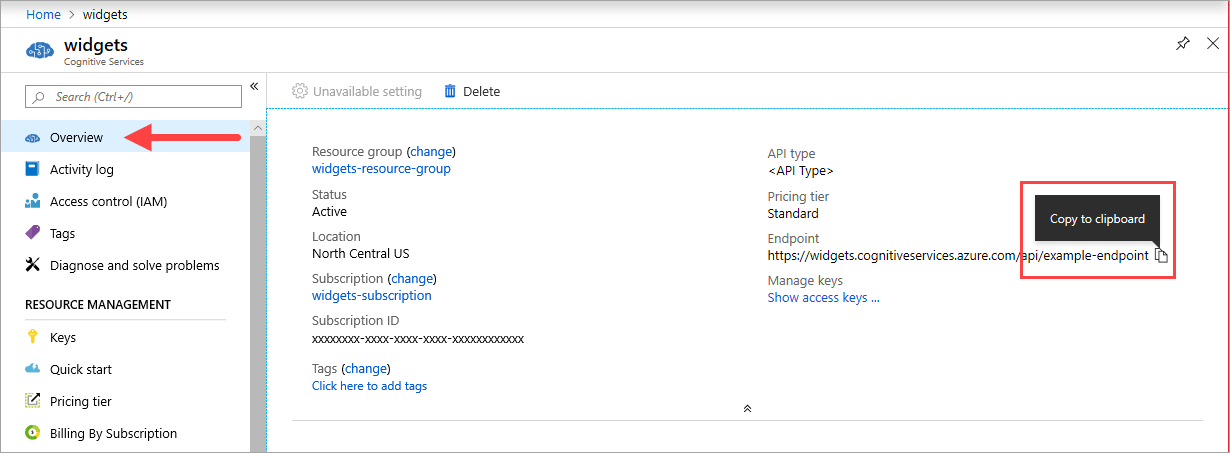

Endpoint URI

The {ENDPOINT_URI} value is available on the Azure portal Overview page of the corresponding Azure AI services resource. Go to the Overview page, hover over the endpoint, and a Copy to clipboard icon appears. Copy and use the endpoint where needed.

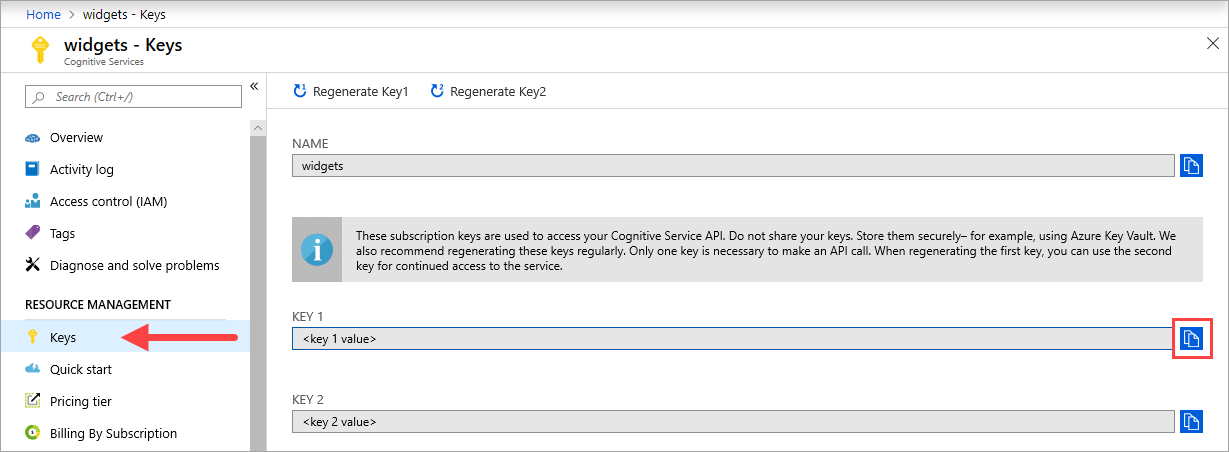

Keys

The {API_KEY} value is used to start the container and is available on the Azure portal's Keys page of the corresponding Azure AI services resource. Go to the Keys page, and select the Copy to clipboard icon.

Important

These subscription keys are used to access your Azure AI services API. Don't share your keys. Store them securely. For example, use Azure Key Vault. We also recommend that you regenerate these keys regularly. Only one key is necessary to make an API call. When you regenerate the first key, you can use the second key for continued access to the service.

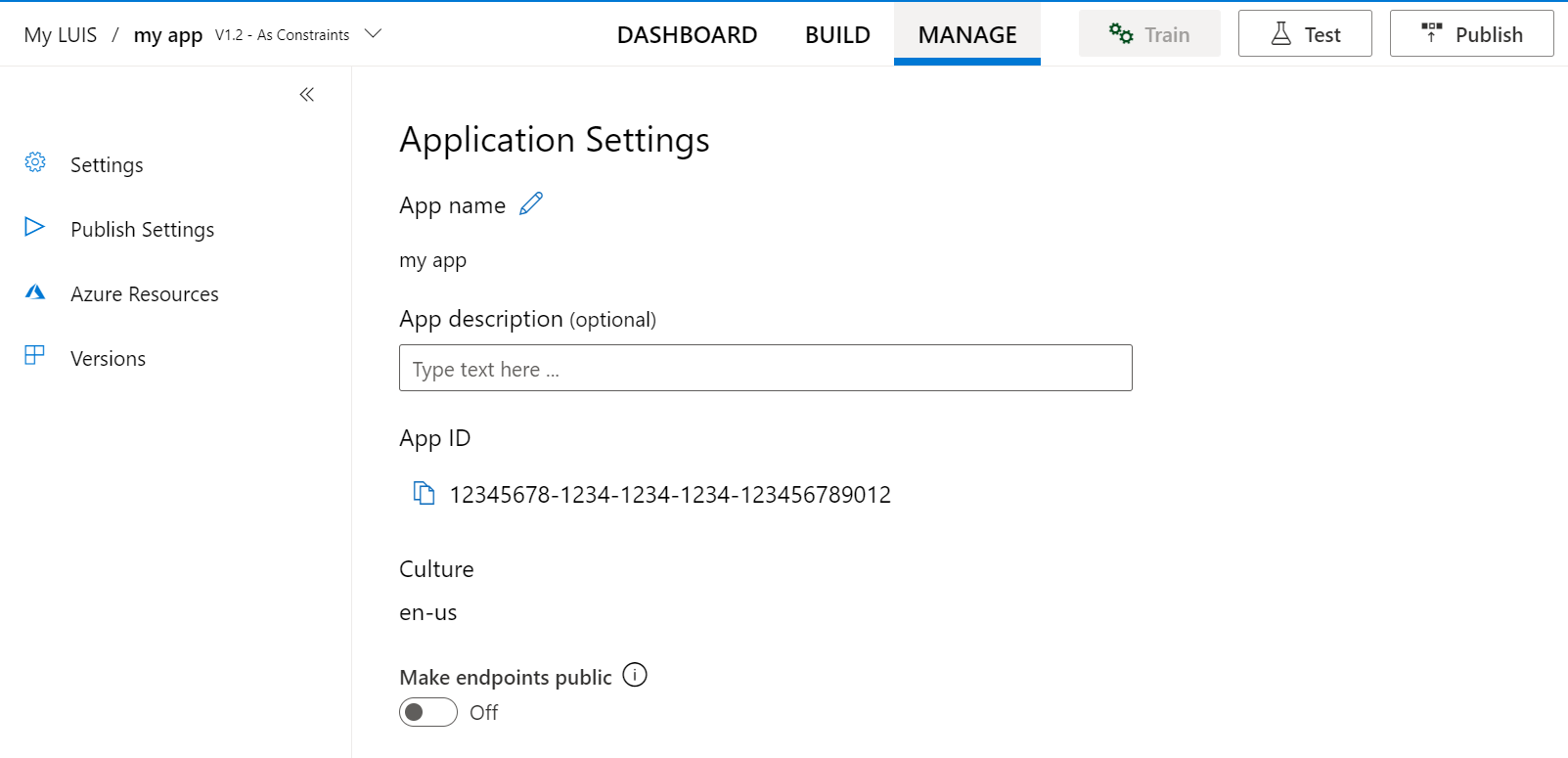

App ID {APP_ID}

This ID is used to select the app. You can find the app ID in the LUIS portal by clicking Manage at the top of the screen for your app, and then Settings.

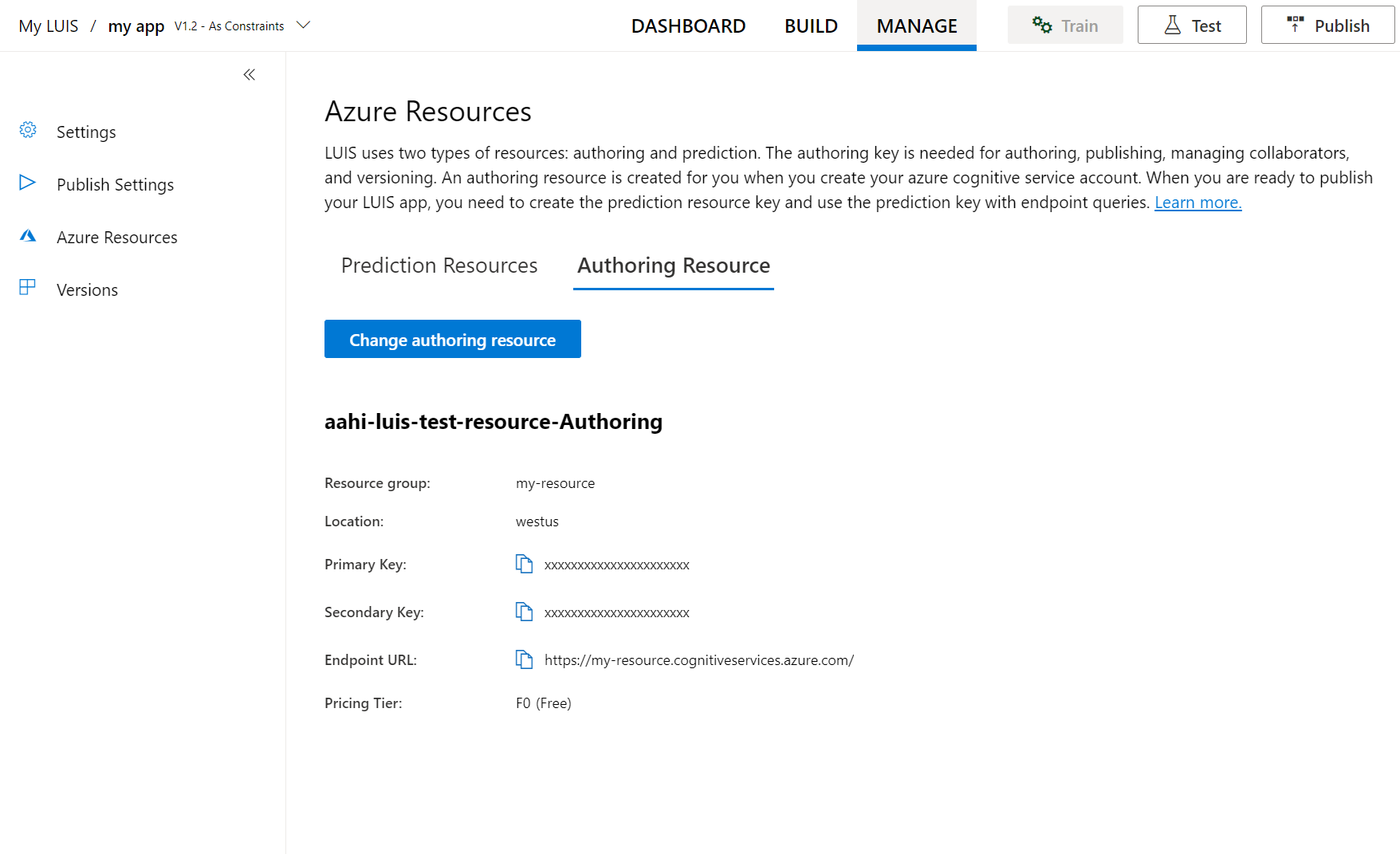

Authoring key {AUTHORING_KEY}

This key is used to get the packaged app from the LUIS service in the cloud and upload the query logs back to the cloud. You will need your authoring key if you export your app using the REST API, described later in the article.

You can get your authoring key from the LUIS portal by clicking Manage at the top of the screen for your app, and then Azure Resources.

Authoring APIs for package file

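The Authoring APIs for packaged apps are described later in this article, in Export published app's package from API and Export versioned app's package from API.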

The host computer

The host is an x64-based computer that runs the Docker container. It can be a computer on your premises or a Docker hosting service in Azure, such as:

- Azure Kubernetes Service.

- Azure Container Instances.

- A Kubernetes cluster deployed to Azure Stack. For more information, see Deploy Kubernetes to Azure Stack.

Container requirements and recommendations

The following table lists minimum and recommended values for the container host. Your requirements may change depending on traffic volume.

| Container | Minimum | Recommended | TPS (Minimum, Maximum) |

|---|---|---|---|

| LUIS | 1 core, 2-GB memory | 1 core, 4-GB memory | 20, 40 |

- Each core must be at least 2.6 gigahertz (GHz) or faster.

- TPS - transactions per second

Core and memory correspond to the --cpus and --memory settings, which are used as part of the docker run command.

Get the container image with docker pull

The LUIS container image can be found on the mcr.microsoft.com container registry syndicate. It resides within the azure-cognitive-services/language repository and is named luis. The fully qualified container image name is mcr.microsoft.com/azure-cognitive-services/language/luis.

To use the latest version of the container, you can use the latest tag. You can also find a full list of tags on the MCR.

Use the docker pull command to download a container image from the mcr.microsoft.com/azure-cognitive-services/language/luis repository:

docker pull mcr.microsoft.com/azure-cognitive-services/language/luis:latest

For a full description of available tags, such as latest used in the preceding command, see LUIS on Docker Hub.

Tip

You can use the docker images command to list your downloaded container images. For example, the following command lists the ID, repository, and tag of each downloaded container image, formatted as a table:

docker images --format "table {{.ID}}\t{{.Repository}}\t{{.Tag}}"

IMAGE ID REPOSITORY TAG

<image-id> <repository-path/name> <tag-name>

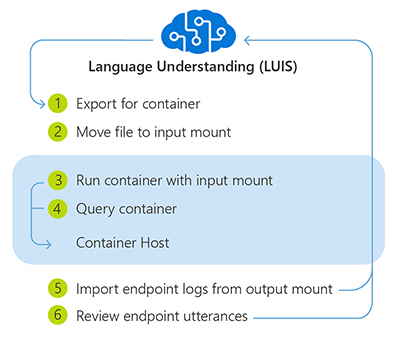

How to use the container

Once the container is on the host computer, use the following process to work with the container.

- Export package for container from LUIS portal or LUIS APIs.

- Move package file into the required input directory on the host computer. Do not rename, alter, overwrite, or decompress the LUIS package file.

- Run the container, with the required input mount and billing settings. More examples of the docker run command are available.

- Query the container's prediction endpoint.

- When you are done with the container, import the endpoint logs from the output mount in the LUIS portal and stop the container.

- Use LUIS portal's active learning on the Review endpoint utterances page to improve the app.

The app running in the container can't be altered. To change the app in the container, change it in the LUIS service by using the LUIS portal or the LUIS authoring APIs. Then train or publish the app (or both), download a new package, and run the container again.

The LUIS app inside the container can't be exported back to the LUIS service. Only the query logs can be uploaded.

Export packaged app from LUIS

The LUIS container requires a trained or published LUIS app to answer prediction queries of user utterances. In order to get the LUIS app, use either the trained or published package API.

The default location is the input subdirectory in relation to where you run the docker run command.

Place the package file in a directory and reference this directory as the input mount when you run the docker container.

Package types

The input mount directory can contain the Production, Staging, and Versioned models of the app simultaneously. All the packages are mounted.

| Package Type | Query Endpoint API | Query availability | Package filename format |

|---|---|---|---|

| Versioned | GET, POST | Container only | {APP_ID}_v{APP_VERSION}.gz |

| Staging | GET, POST | Azure and container | {APP_ID}_STAGING.gz |

| Production | GET, POST | Azure and container | {APP_ID}_PRODUCTION.gz |

Important

Do not rename, alter, overwrite, or decompress the LUIS package files.
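As a minimal sketch of the naming rules in the preceding table, the following shell functions derive the filename expected for each package type and check that the file is present in the input directory. The function names luis_package_name and luis_package_present are hypothetical helpers for illustration, not part of LUIS or Docker.

```shell
# Hypothetical helpers: build the expected package filename from the formats
# in the table ({APP_ID}_PRODUCTION.gz, {APP_ID}_STAGING.gz,
# {APP_ID}_v{APP_VERSION}.gz).

# Usage: luis_package_name APP_ID (production|staging|APP_VERSION)
luis_package_name() {
  local app_id="$1" kind="$2"
  case "$kind" in
    production|PRODUCTION) echo "${app_id}_PRODUCTION.gz" ;;
    staging|STAGING)       echo "${app_id}_STAGING.gz" ;;
    *)                     echo "${app_id}_v${kind}.gz" ;;  # anything else is treated as a version
  esac
}

# Usage: luis_package_present INPUT_DIR APP_ID (production|staging|APP_VERSION)
# Succeeds only if a regular file with the expected name is in INPUT_DIR.
luis_package_present() {
  [ -f "$1/$(luis_package_name "$2" "$3")" ]
}
```

A check like this can run before docker run to catch a renamed or decompressed package early, for example: luis_package_present ./input "$APP_ID" production.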

Packaging prerequisites

Before packaging a LUIS application, you must have the following:

| Packaging Requirements | Details |

|---|---|

| Azure AI services resource instance | Supported regions include West US (westus), West Europe (westeurope), and Australia East (australiaeast). |

| Trained or published LUIS app | With no unsupported dependencies. |

| Access to the host computer's file system | The host computer must allow an input mount. |

Export app package from LUIS portal

The LUIS Azure portal provides the ability to export the trained or published app's package.

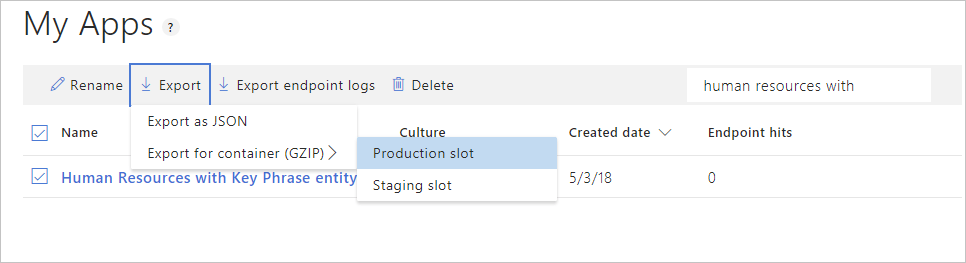

Export published app's package from LUIS portal

The published app's package is available from the My Apps list page.

- Sign on to the LUIS Azure portal.

- Select the checkbox to the left of the app name in the list.

- Select the Export item from the contextual toolbar above the list.

- Select Export for container (GZIP).

- Select the environment of Production slot or Staging slot.

- The package is downloaded from the browser.

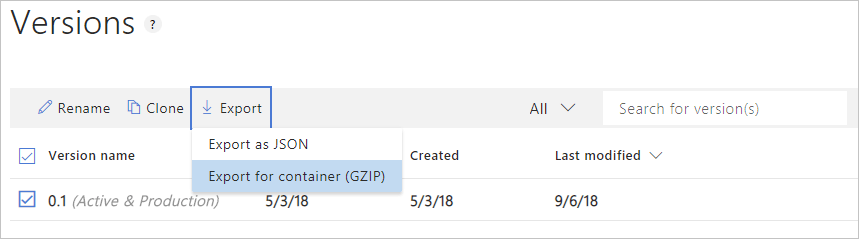

Export versioned app's package from LUIS portal

The versioned app's package is available from the Versions list page.

- Sign on to the LUIS Azure portal.

- Select the app in the list.

- Select Manage in the app's navigation bar.

- Select Versions in the left navigation bar.

- Select the checkbox to the left of the version name in the list.

- Select the Export item from the contextual toolbar above the list.

- Select Export for container (GZIP).

- The package is downloaded from the browser.

Export published app's package from API

Use the following REST API method to package a LUIS app that you've already published. Substitute your own values for the placeholders in the API call, using the table below the HTTP specification.

GET /luis/api/v2.0/package/{APP_ID}/slot/{SLOT_NAME}/gzip HTTP/1.1

Host: {AZURE_REGION}.api.cognitive.microsoft.com

Ocp-Apim-Subscription-Key: {AUTHORING_KEY}

| Placeholder | Value |

|---|---|

| {APP_ID} | The application ID of the published LUIS app. |

| {SLOT_NAME} | The environment of the published LUIS app. Use one of the following values: PRODUCTION, STAGING |

| {AUTHORING_KEY} | The authoring key of the LUIS account for the published LUIS app. You can get your authoring key from the User Settings page on the LUIS portal. |

| {AZURE_REGION} | The appropriate Azure region: westus (West US), westeurope (West Europe), or australiaeast (Australia East) |

To download the published package, refer to the API documentation here. If successfully downloaded, the response is a LUIS package file. Save the file in the storage location specified for the input mount of the container.

Export versioned app's package from API

Use the following REST API method to package a LUIS application that you've already trained. Substitute your own values for the placeholders in the API call, using the table below the HTTP specification.

GET /luis/api/v2.0/package/{APP_ID}/versions/{APP_VERSION}/gzip HTTP/1.1

Host: {AZURE_REGION}.api.cognitive.microsoft.com

Ocp-Apim-Subscription-Key: {AUTHORING_KEY}

| Placeholder | Value |

|---|---|

| {APP_ID} | The application ID of the trained LUIS app. |

| {APP_VERSION} | The application version of the trained LUIS app. |

| {AUTHORING_KEY} | The authoring key of the LUIS account for the published LUIS app. You can get your authoring key from the User Settings page on the LUIS portal. |

| {AZURE_REGION} | The appropriate Azure region: westus (West US), westeurope (West Europe), or australiaeast (Australia East) |

To download the versioned package, refer to the API documentation here. If successfully downloaded, the response is a LUIS package file. Save the file in the storage location specified for the input mount of the container.
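The two HTTP specifications above could be called with curl roughly as follows. This is a sketch under stated assumptions: the helper names luis_package_url and download_luis_package are hypothetical, the https scheme is assumed (the specification lists only the Host header), and the output filename is your choice.

```shell
# Sketch only: hypothetical helpers built from the routes and the
# Ocp-Apim-Subscription-Key header shown in the HTTP specifications.
# Assumption: the host is reached over https.

# Usage: luis_package_url AZURE_REGION APP_ID (slot SLOT_NAME | versions APP_VERSION)
luis_package_url() {
  local base="https://$1.api.cognitive.microsoft.com/luis/api/v2.0/package/$2"
  case "$3" in
    slot)     echo "$base/slot/$4/gzip" ;;
    versions) echo "$base/versions/$4/gzip" ;;
    *)        echo "expected 'slot' or 'versions', got '$3'" >&2; return 1 ;;
  esac
}

# Usage: download_luis_package URL AUTHORING_KEY OUTPUT_FILE
# --fail makes curl exit non-zero on an HTTP error instead of saving the error body.
download_luis_package() {
  curl --fail --silent --show-error \
    --header "Ocp-Apim-Subscription-Key: $2" \
    --output "$3" "$1"
}

# Example (not executed here):
#   url=$(luis_package_url westus "$APP_ID" slot PRODUCTION)
#   download_luis_package "$url" "$AUTHORING_KEY" "input/${APP_ID}_PRODUCTION.gz"
```

Saving the response under the filename format from the Package types table, directly in the input mount directory, is a choice made in this sketch so that the file never has to be renamed afterwards.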

Run the container with docker run

Use the docker run command to run the container. Refer to Gather required parameters for details on how to get the {ENDPOINT_URI} and {API_KEY} values.

Examples of the docker run command are available.

docker run --rm -it -p 5000:5000 ^

--memory 4g ^

--cpus 2 ^

--mount type=bind,src=c:\input,target=/input ^

--mount type=bind,src=c:\output\,target=/output ^

mcr.microsoft.com/azure-cognitive-services/language/luis ^

Eula=accept ^

Billing={ENDPOINT_URI} ^

ApiKey={API_KEY}

- This example uses the directory off the C: drive to avoid any permission conflicts on Windows. If you need to use a specific directory as the input directory, you might need to grant the docker service permission.

- Do not change the order of the arguments unless you are familiar with docker containers.

- If you are using a different operating system, use the correct console/terminal, folder syntax for mounts, and line continuation character for your system. These examples assume a Windows console with a line continuation character ^. Because the container is a Linux operating system, the target mount uses a Linux-style folder syntax.

This command:

- Runs a container from the LUIS container image

- Loads LUIS app from input mount at C:\input, located on container host

- Allocates two CPU cores and 4 gigabytes (GB) of memory

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container

- Saves container and LUIS logs to output mount at C:\output, located on container host

- Automatically removes the container after it exits. The container image is still available on the host computer.

More examples of the docker run command are available.
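For a Linux or macOS host, the Windows example above might translate to the following shell sketch, which uses the backslash as the line continuation character and POSIX-style host paths. The function name run_luis_container and the DOCKER override variable are assumptions made for illustration; the options and their order mirror the Windows command.

```shell
# Sketch of the same run on a Linux or macOS host.
# Usage: run_luis_container INPUT_DIR OUTPUT_DIR ENDPOINT_URI API_KEY
# INPUT_DIR and OUTPUT_DIR must be absolute paths to existing host
# directories, because --mount type=bind does not create them.
run_luis_container() {
  # Hypothetical convenience: set DOCKER="echo docker" to print the
  # command instead of running it.
  ${DOCKER:-docker} run --rm -it -p 5000:5000 \
    --memory 4g \
    --cpus 2 \
    --mount "type=bind,src=$1,target=/input" \
    --mount "type=bind,src=$2,target=/output" \
    mcr.microsoft.com/azure-cognitive-services/language/luis \
    Eula=accept \
    "Billing=$3" \
    "ApiKey=$4"
}
```

Quoting the mount and billing arguments keeps host paths and keys intact if they contain characters the shell would otherwise split or expand.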

Important

The Eula, Billing, and ApiKey options must be specified to run the container; otherwise, the container won't start. For more information, see Billing.

The ApiKey value is the Key from the Azure Resources page in the LUIS portal and is also available on the Azure AI services resource keys page.

Run multiple containers on the same host

If you intend to run multiple containers with exposed ports, make sure to run each container with a different exposed port. For example, run the first container on port 5000 and the second container on port 5001.
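The port rule above can be sketched as a small helper that produces the -p flag for each container on a host. The name luis_port_flag is hypothetical; the sketch assumes every container listens on port 5000 internally, as in the examples in this article, so only the host side of the mapping changes.

```shell
# Hypothetical helper: print the -p flag for the Nth container on a host
# (N starts at 0). The host port changes; the container port stays 5000.
# Usage: luis_port_flag N
luis_port_flag() {
  printf '%s\n' "-p $((5000 + $1)):5000"
}

# Example (not executed here): the second container is published on 5001.
#   docker run --rm -it $(luis_port_flag 1) ... Eula=accept Billing=... ApiKey=...
```

Queries to the second container would then go to http://localhost:5001 instead of http://localhost:5000.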

You can run this container and a different Azure AI services container on the same host. You can also run multiple instances of the same Azure AI services container.

Endpoint APIs supported by the container

Both V2 and V3 versions of the API are available with the container.

Query the container's prediction endpoint

The container provides REST-based query prediction endpoint APIs. Endpoints for published (staging or production) apps have a different route than endpoints for versioned apps.

Use the host, http://localhost:5000, for container APIs.

| Package type | HTTP verb | Route | Query parameters |
|---|---|---|---|
| Published | GET, POST | /luis/v3.0/apps/{appId}/slots/{slotName}/predict? or /luis/prediction/v3.0/apps/{appId}/slots/{slotName}/predict? | query={query}[&verbose][&log][&show-all-intents] |
| Versioned | GET, POST | /luis/v3.0/apps/{appId}/versions/{versionId}/predict? or /luis/prediction/v3.0/apps/{appId}/versions/{versionId}/predict | query={query}[&verbose][&log][&show-all-intents] |

The query parameters configure how and what is returned in the query response:

| Query parameter | Type | Purpose |
|---|---|---|
| query | string | The user's utterance. |
| verbose | boolean | A boolean value indicating whether to return all the metadata for the predicted models. Default is false. |
| log | boolean | Logs queries, which can be used later for active learning. Default is false. |
| show-all-intents | boolean | A boolean value indicating whether to return all the intents or the top scoring intent only. Default is false. |

Query the LUIS app

To query a published app in a slot, use a CURL command such as the following:

curl -G \

-d verbose=false \

-d log=true \

--data-urlencode "query=turn the lights on" \

"http://localhost:5000/luis/v3.0/apps/{APP_ID}/slots/production/predict"

To make queries to the Staging environment, replace production in the route with staging:

http://localhost:5000/luis/v3.0/apps/{APP_ID}/slots/staging/predict

To query a versioned model, use the following API:

curl -G \

-d verbose=false \

-d log=false \

--data-urlencode "query=turn the lights on" \

"http://localhost:5000/luis/v3.0/apps/{APP_ID}/versions/{APP_VERSION}/predict"

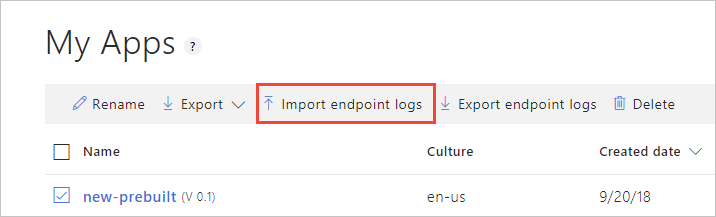

Import the endpoint logs for active learning

If an output mount is specified for the LUIS container, app query log files are saved in the output directory. The app query log contains the query, response, and timestamps for each prediction query submitted to the LUIS container.

The following location shows the nested directory structure for the container's log files, where {INSTANCE_ID} is the container ID.

/output/luis/{INSTANCE_ID}/
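To find the log files on the host before importing them, a sketch like the following lists every file under the luis subdirectory of the output mount. The helper name list_luis_logs is hypothetical, and the argument is whatever host directory you bound to /output.

```shell
# Hypothetical helper: list all files under OUTPUT_DIR/luis, which holds one
# subdirectory per container instance ({INSTANCE_ID}).
# Usage: list_luis_logs OUTPUT_DIR
list_luis_logs() {
  find "$1/luis" -type f | sort
}
```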

From the LUIS portal, select your app, then select Import endpoint logs to upload these logs.

After the log is uploaded, review the endpoint utterances in the LUIS portal.

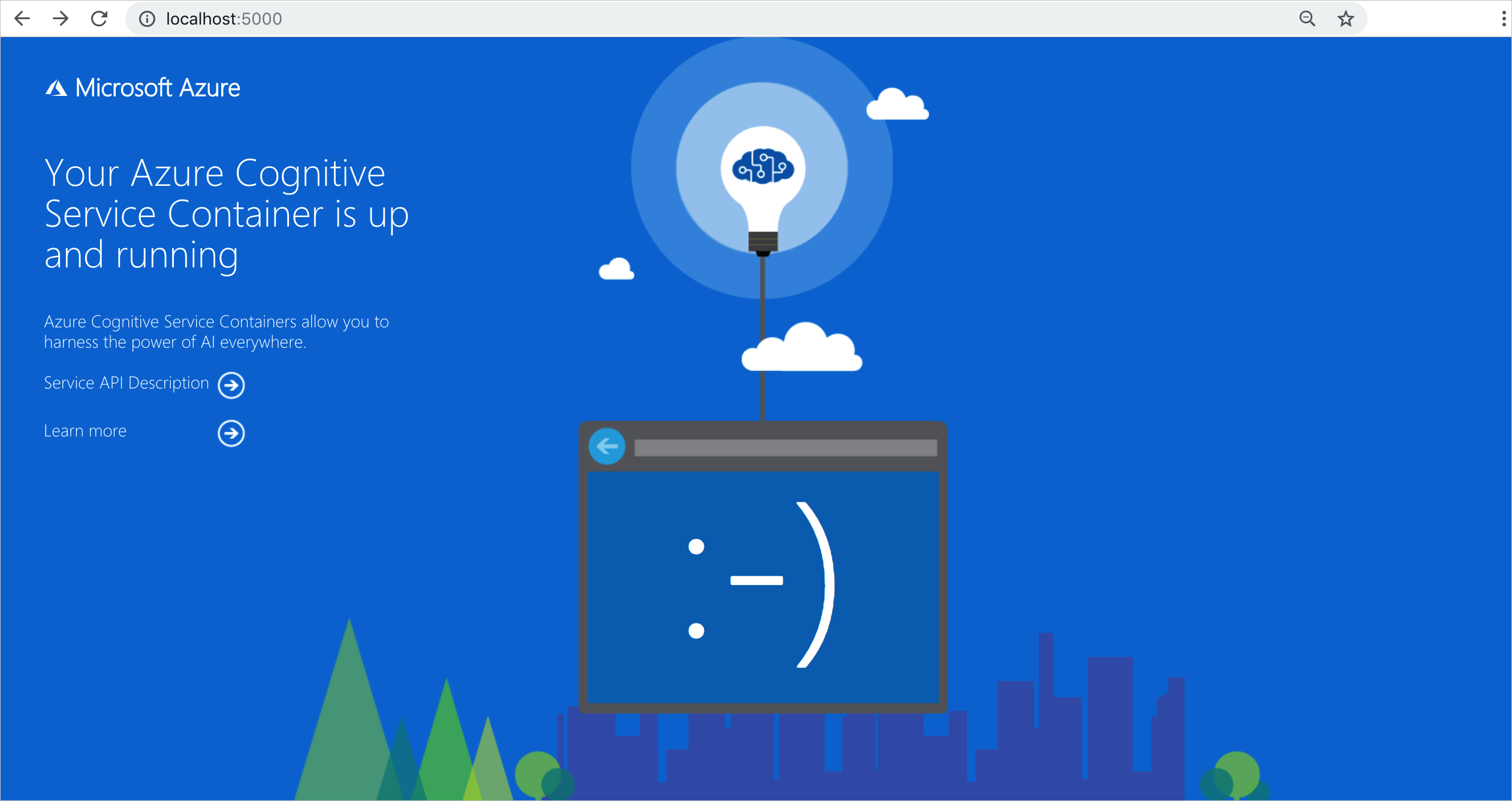

Validate that a container is running

There are several ways to validate that the container is running. Locate the External IP address and exposed port of the container in question, and open your favorite web browser. Use the various request URLs that follow to validate the container is running. The example request URLs listed here are http://localhost:5000, but your specific container might vary. Make sure to rely on your container's External IP address and exposed port.

| Request URL | Purpose |
|---|---|
| http://localhost:5000/ | The container provides a home page. |
| http://localhost:5000/ready | Requested with GET, this URL provides a verification that the container is ready to accept a query against the model. This request can be used for Kubernetes liveness and readiness probes. |
| http://localhost:5000/status | Also requested with GET, this URL verifies if the api-key used to start the container is valid without causing an endpoint query. This request can be used for Kubernetes liveness and readiness probes. |
| http://localhost:5000/swagger | The container provides a full set of documentation for the endpoints and a Try it out feature. With this feature, you can enter your settings into a web-based HTML form and make the query without having to write any code. After the query returns, an example CURL command is provided to demonstrate the HTTP headers and body format that's required. |
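The /ready URL can also be polled from a script, for example to delay queries until the model is loaded. The following is a minimal sketch: wait_for_luis is a hypothetical helper name, and it assumes that a container that is not ready either refuses the connection or returns an HTTP error status.

```shell
# Sketch: poll BASE_URL/ready until the container answers successfully.
# Usage: wait_for_luis BASE_URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_luis() {
  local base_url="$1" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail turns HTTP error statuses into a non-zero exit code.
    if curl --fail --silent --output /dev/null "$base_url/ready"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "container at $base_url not ready after $attempts attempts" >&2
  return 1
}

# Example (not executed here):
#   wait_for_luis http://localhost:5000 && echo "ready for queries"
```

The same loop could target /status instead to confirm that the key used to start the container is valid.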

Run the container disconnected from the internet

To use this container disconnected from the internet, you must first request access by filling out an application and purchasing a commitment plan. See Use Docker containers in disconnected environments for more information.

If you have been approved to run the container disconnected from the internet, the following example shows the formatting of the docker run command you'll use, with placeholder values. Replace these placeholder values with your own values.

The DownloadLicense=True parameter in your docker run command will download a license file that will enable your Docker container to run when it isn't connected to the internet. It also contains an expiration date, after which the license file will be invalid to run the container. You can only use a license file with the appropriate container that you've been approved for. For example, you can't use a license file for a speech to text container with a Document Intelligence container.

| Placeholder | Value | Format or example |
|---|---|---|
| {IMAGE} | The container image you want to use. | mcr.microsoft.com/azure-cognitive-services/form-recognizer/invoice |
| {LICENSE_MOUNT} | The path where the license will be downloaded and mounted. | /host/license:/path/to/license/directory |
| {ENDPOINT_URI} | The endpoint for authenticating your service request. You can find it on your resource's Key and endpoint page, on the Azure portal. | https://<your-custom-subdomain>.cognitiveservices.azure.com |
| {API_KEY} | The key for your Azure AI services resource. You can find it on your resource's Key and endpoint page, on the Azure portal. | xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx |
| {CONTAINER_LICENSE_DIRECTORY} | Location of the license folder on the container's local filesystem. | /path/to/license/directory |

docker run --rm -it -p 5000:5000 \

-v {LICENSE_MOUNT} \

{IMAGE} \

eula=accept \

billing={ENDPOINT_URI} \

apikey={API_KEY} \

DownloadLicense=True \

Mounts:License={CONTAINER_LICENSE_DIRECTORY}

Once the license file has been downloaded, you can run the container in a disconnected environment. The following example shows the formatting of the docker run command you'll use, with placeholder values. Replace these placeholder values with your own values.

Wherever the container is run, the license file must be mounted to the container and the location of the license folder on the container's local filesystem must be specified with Mounts:License=. An output mount must also be specified so that billing usage records can be written.

| Placeholder | Value | Format or example |
|---|---|---|
| {IMAGE} | The container image you want to use. | mcr.microsoft.com/azure-cognitive-services/form-recognizer/invoice |
| {MEMORY_SIZE} | The appropriate size of memory to allocate for your container. | 4g |
| {NUMBER_CPUS} | The appropriate number of CPUs to allocate for your container. | 4 |
| {LICENSE_MOUNT} | The path where the license will be located and mounted. | /host/license:/path/to/license/directory |
| {OUTPUT_PATH} | The output path for logging usage records. | /host/output:/path/to/output/directory |
| {CONTAINER_LICENSE_DIRECTORY} | Location of the license folder on the container's local filesystem. | /path/to/license/directory |
| {CONTAINER_OUTPUT_DIRECTORY} | Location of the output folder on the container's local filesystem. | /path/to/output/directory |

docker run --rm -it -p 5000:5000 --memory {MEMORY_SIZE} --cpus {NUMBER_CPUS} \

-v {LICENSE_MOUNT} \

-v {OUTPUT_PATH} \

{IMAGE} \

eula=accept \

Mounts:License={CONTAINER_LICENSE_DIRECTORY} \

Mounts:Output={CONTAINER_OUTPUT_DIRECTORY}

Stop the container

To shut down the container, in the command-line environment where the container is running, press Ctrl+C.

Troubleshooting

If you run the container with an output mount and logging enabled, the container generates log files that are helpful to troubleshoot issues that happen while starting or running the container.

Tip

For more troubleshooting information and guidance, see Azure AI containers frequently asked questions (FAQ).

If you're having trouble running an Azure AI services container, you can try using the Microsoft diagnostics container. Use this container to diagnose common errors in your deployment environment that might prevent Azure AI containers from functioning as expected.

To get the container, use the following docker pull command:

docker pull mcr.microsoft.com/azure-cognitive-services/diagnostic

Then run the container. Replace {ENDPOINT_URI} with your endpoint, and replace {API_KEY} with the key for your resource:

docker run --rm mcr.microsoft.com/azure-cognitive-services/diagnostic \

eula=accept \

Billing={ENDPOINT_URI} \

ApiKey={API_KEY}

The container will test for network connectivity to the billing endpoint.

Billing

The LUIS container sends billing information to Azure, using an Azure AI services resource on your Azure account.

Queries to the container are billed at the pricing tier of the Azure resource that's used for the ApiKey parameter.

Azure AI services containers aren't licensed to run without being connected to the metering or billing endpoint. You must enable the containers to communicate billing information with the billing endpoint at all times. Azure AI services containers don't send customer data, such as the image or text that's being analyzed, to Microsoft.

Connect to Azure

The container needs the billing argument values to run. These values allow the container to connect to the billing endpoint. The container reports usage about every 10 to 15 minutes. If the container doesn't connect to Azure within the allowed time window, the container continues to run but doesn't serve queries until the billing endpoint is restored. The connection is attempted 10 times at the same time interval of 10 to 15 minutes. If it can't connect to the billing endpoint within the 10 tries, the container stops serving requests. See the Azure AI services container FAQ for an example of the information sent to Microsoft for billing.

Billing arguments

The docker run command will start the container when all three of the following options are provided with valid values:

| Option | Description |
|---|---|
| ApiKey | The API key of the Azure AI services resource that's used to track billing information. The value of this option must be set to an API key for the provisioned resource that's specified in Billing. |
| Billing | The endpoint of the Azure AI services resource that's used to track billing information. The value of this option must be set to the endpoint URI of a provisioned Azure resource. |
| Eula | Indicates that you accepted the license for the container. The value of this option must be set to accept. |

For more information about these options, see Configure containers.

Summary

In this article, you learned concepts and workflow for downloading, installing, and running Language Understanding (LUIS) containers. In summary:

- Language Understanding (LUIS) provides one Linux container for Docker that serves endpoint query predictions of utterances.

- Container images are downloaded from the Microsoft Container Registry (MCR).

- Container images run in Docker.

- You can use REST API to query the container endpoints by specifying the host URI of the container.

- You must specify billing information when instantiating a container.

Important

Azure AI containers are not licensed to run without being connected to Azure for metering. Customers need to enable the containers to communicate billing information with the metering service at all times. Azure AI containers do not send customer data (for example, the image or text that is being analyzed) to Microsoft.

Next steps

- Review Configure containers for configuration settings.

- See LUIS container limitations for known capability restrictions.

- Refer to Troubleshooting to resolve issues related to LUIS functionality.

- Use more Azure AI containers