Quickstart: Create a new agent

Azure AI Agent Service allows you to create AI agents tailored to your needs through custom instructions, augmented by advanced tools such as code interpreter and custom functions.

| Reference documentation | Samples | Library source code | Package (NuGet) |

Prerequisites

- An Azure subscription - Create one for free.

- The latest version of .NET

- Make sure you have the Azure AI Developer RBAC role assigned at the appropriate level.

- Install the Azure CLI and the machine learning extension. If you have the CLI already installed, make sure it's updated to the latest version.

Set up your Azure AI Hub and Agent project

This section shows you how to set up the resources required to get started with Azure AI Agent Service:

- Create an Azure AI Hub to set up your app environment and Azure resources.

- Create an Azure AI project under your Hub. The project creates an endpoint for your app to call and sets up app services to access resources in your tenant.

- Connect an Azure OpenAI resource or an Azure AI Services resource.

Choose Basic or Standard Agent Setup

Basic Setup: Agents use multitenant search and storage resources fully managed by Microsoft. You don't have visibility or control over these underlying Azure resources.

Standard Setup: Agents use customer-owned, single-tenant search and storage resources. With this setup, you have full control and visibility over these resources, but you incur costs based on your usage.

[Optional] Model selection in autodeploy template

You can customize the model used by your agent by editing the model parameters in the autodeploy template. To deploy a different model, you need to update at least the modelName and modelVersion parameters.

By default, the deployment template is configured with the following values:

| Model Parameter | Default Value |
|---|---|
| modelName | gpt-4o-mini |
| modelFormat | OpenAI (for Azure OpenAI) |
| modelVersion | 2024-07-18 |
| modelSkuName | GlobalStandard |
| modelLocation | eastus |

Important

Don't change the modelFormat parameter.

The templates only support deployment of Azure OpenAI models. See which Azure OpenAI models are supported in the Azure AI Agent Service model support documentation.
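To make the parameter overrides concrete, here is a minimal Python sketch that merges overrides into the default values from the table and wraps them in the `{"value": ...}` shape used by ARM parameters files. The helper name `model_parameters`, the example model and version, and the assumption that your template reads parameters in this shape are all illustrative; check the template you deploy for the format it actually expects.

```python
import json

# Defaults copied from the table above.
DEFAULT_MODEL_PARAMETERS = {
    "modelName": "gpt-4o-mini",
    "modelFormat": "OpenAI",
    "modelVersion": "2024-07-18",
    "modelSkuName": "GlobalStandard",
    "modelLocation": "eastus",
}


def model_parameters(**overrides):
    """Hypothetical helper: merge overrides into the defaults and return
    entries in the {"name": {"value": ...}} shape of a parameters file."""
    unknown = set(overrides) - set(DEFAULT_MODEL_PARAMETERS)
    if unknown:
        raise KeyError(f"Unknown model parameters: {sorted(unknown)}")
    # The templates only deploy Azure OpenAI models, so modelFormat stays fixed.
    if overrides.get("modelFormat", "OpenAI") != "OpenAI":
        raise ValueError("Don't change the modelFormat parameter.")
    # A different model needs at least modelName and modelVersion together.
    if "modelName" in overrides and "modelVersion" not in overrides:
        raise ValueError("Set modelVersion together with modelName.")
    merged = {**DEFAULT_MODEL_PARAMETERS, **overrides}
    return {name: {"value": value} for name, value in merged.items()}


if __name__ == "__main__":
    # Example values only; use a model and version supported by the service.
    params = model_parameters(modelName="gpt-4o", modelVersion="2024-08-06")
    print(json.dumps({"parameters": params}, indent=2))
```

Keeping the defaults in one dictionary makes it easy to see which values were changed and keeps modelFormat fixed.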

[Optional] Use your own resources during agent setup

Note

If you use an existing AI Services or Azure OpenAI resource, no model will be deployed. You can deploy a model to the resource after the agent setup is complete.

Use an existing AI Services, Azure OpenAI, AI Search, or Azure Blob Storage resource by providing its full ARM resource ID in the parameters file:

- aiServiceAccountResourceId

- aiSearchServiceResourceId

- aiStorageAccountResourceId

To use an existing Azure OpenAI resource, update the aiServiceAccountResourceId and aiServiceKind parameters in the parameters file. Set aiServiceKind to AzureOpenAI.

For more information, see how to use your own resources.
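As a rough sketch of the parameters described above, the following Python checks that each value looks like a full ARM resource ID before collecting it, and sets aiServiceKind when the AI Services account is an Azure OpenAI resource. The function name, the regular expression, and the sample IDs are assumptions made for this example; only the parameter names come from the text above.

```python
import re

# General shape of a full ARM resource ID:
# /subscriptions/<id>/resourceGroups/<group>/providers/<namespace>/<type>/<name>
ARM_ID_PATTERN = re.compile(
    r"^/subscriptions/[^/]+/resourceGroups/[^/]+/providers/[^/]+/[^/]+/[^/]+$",
    re.IGNORECASE,
)


def existing_resource_parameters(ai_service_id=None, ai_search_id=None,
                                 ai_storage_id=None, is_azure_openai=False):
    """Hypothetical helper: collect the resource ID parameters to set."""
    candidates = {
        "aiServiceAccountResourceId": ai_service_id,
        "aiSearchServiceResourceId": ai_search_id,
        "aiStorageAccountResourceId": ai_storage_id,
    }
    params = {}
    for name, resource_id in candidates.items():
        if resource_id is None:
            continue  # not provided, so a new resource is created instead
        if not ARM_ID_PATTERN.match(resource_id):
            raise ValueError(f"{name} is not a full ARM resource ID: {resource_id!r}")
        params[name] = resource_id
    if is_azure_openai:
        if "aiServiceAccountResourceId" not in params:
            raise ValueError("aiServiceKind requires aiServiceAccountResourceId.")
        params["aiServiceKind"] = "AzureOpenAI"
    return params


if __name__ == "__main__":
    # Sample ID for illustration only.
    openai_id = (
        "/subscriptions/12345678-abcd-1234-9fc6-62780b3d3e05"
        "/resourceGroups/my-resource-group"
        "/providers/Microsoft.CognitiveServices/accounts/my-openai-resource"
    )
    print(existing_resource_parameters(ai_service_id=openai_id, is_azure_openai=True))
```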

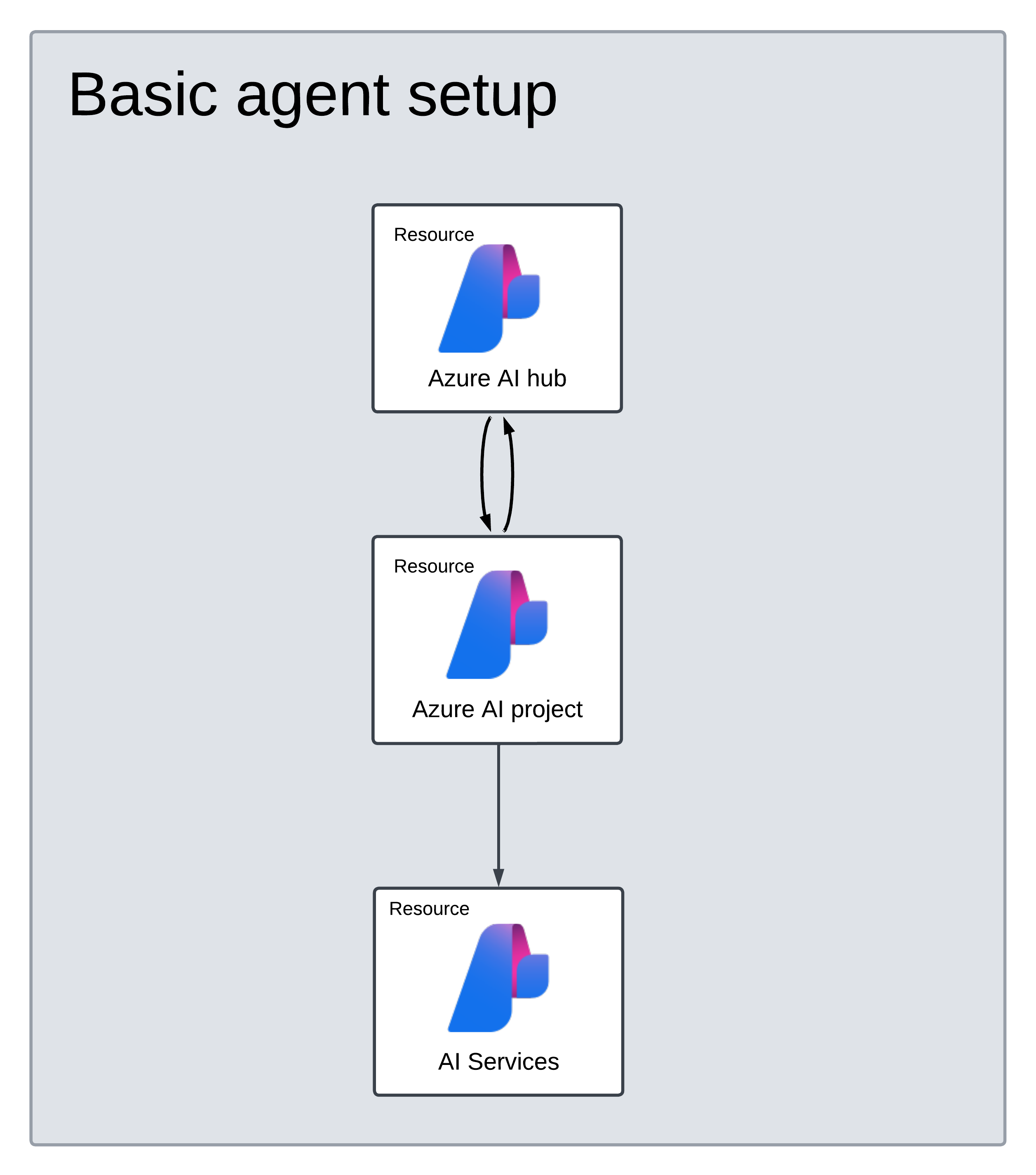

Basic agent setup resource architecture

Resources for the AI hub, AI project, and AI Services are created for you. A storage account is created because it's a required resource for hubs, but this storage account isn't used by agents. The AI Services account is connected to your project and hub, and a gpt-4o-mini model is deployed in the eastus region. A Microsoft-managed key vault, storage account, and search resource are used by default.

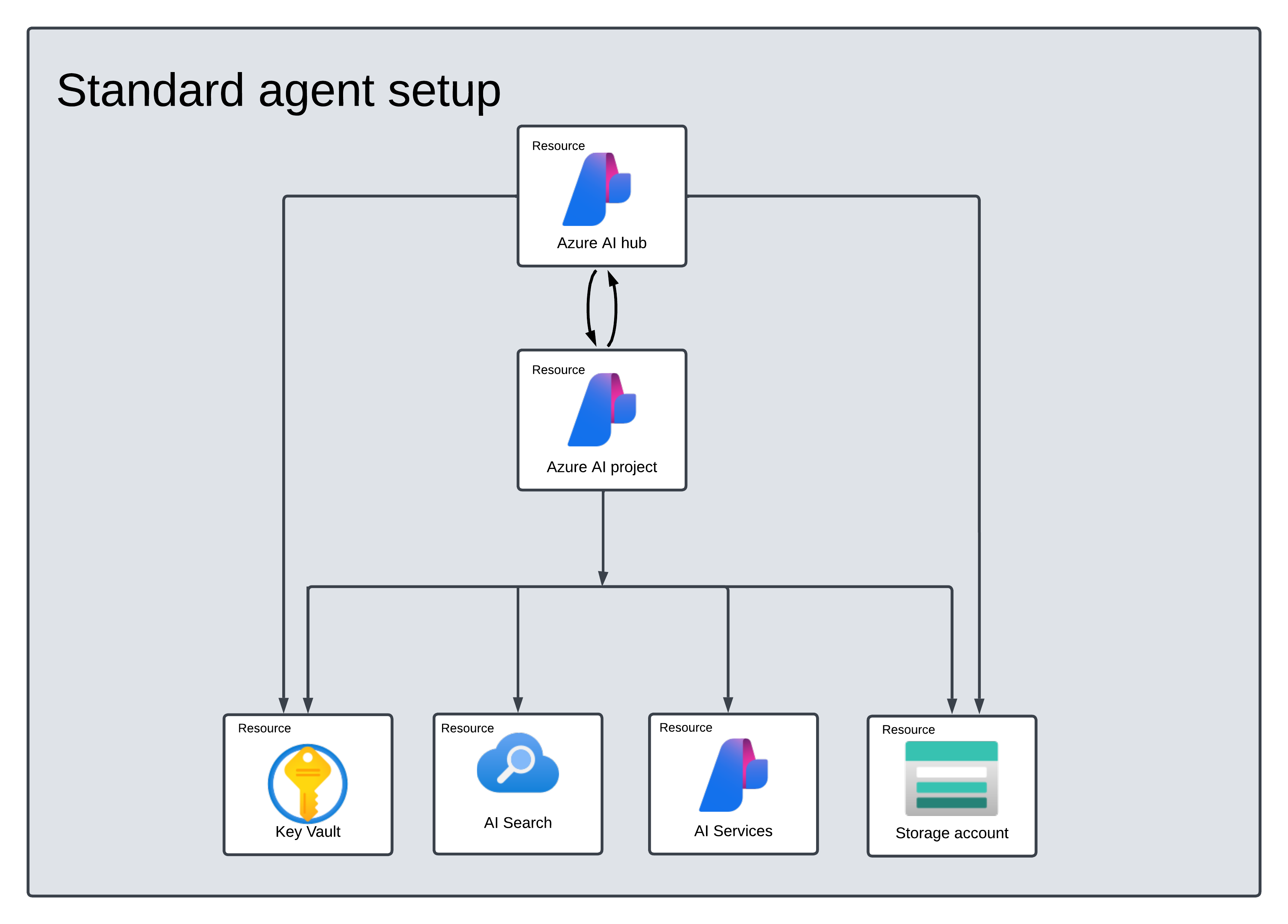

Standard agent setup resource architecture

Resources for the AI hub, AI project, key vault, storage account, AI Services, and AI Search are created for you. The AI Services, AI Search, key vault, and storage account are connected to your project and hub. A gpt-4o-mini model is deployed in the eastus region using the Azure OpenAI endpoint for your resource.

Configure and run an agent

| Component | Description |
|---|---|
| Agent | Custom AI that uses AI models in conjunction with tools. |
| Tool | Tools extend an agent’s ability to respond reliably and accurately during a conversation, for example by connecting to user-defined knowledge bases to ground the model or by enabling web search to provide current information. |
| Thread | A conversation session between an agent and a user. Threads store Messages and automatically handle truncation to fit content into a model’s context. |
| Message | A message created by an agent or a user. Messages can include text, images, and other files. Messages are stored as a list on the Thread. |
| Run | Activation of an agent to begin running based on the contents of a Thread. The agent uses its configuration and the Thread’s Messages to perform tasks by calling models and tools. As part of a Run, the agent appends Messages to the Thread. |
| Run Step | A detailed list of steps the agent took as part of a Run. An agent can call tools or create Messages during its run. Examining Run Steps allows you to understand how the agent is getting to its results. |
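To show how the components in the table relate to each other, here is a toy in-memory model in Python. It is not the service or any SDK: the class and field names are assumptions chosen to mirror the table, and the `respond` callable merely stands in for the model and tools an agent would call.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class Thread:
    # A Thread stores its Messages as a list.
    messages: List[Message] = field(default_factory=list)


@dataclass
class Agent:
    name: str
    instructions: str
    # Stand-in for the model and tools: maps the thread's messages to a reply.
    respond: Callable[[List[Message]], str]


@dataclass
class Run:
    agent: Agent
    thread: Thread
    status: str = "queued"
    steps: List[str] = field(default_factory=list)  # Run Steps, oldest first

    def execute(self) -> None:
        """Activate the agent on the thread and append its reply."""
        self.status = "in_progress"
        reply = self.agent.respond(self.thread.messages)
        self.steps.append("message_creation")
        self.thread.messages.append(Message("assistant", reply))
        self.status = "completed"


if __name__ == "__main__":
    echo = Agent("echo", "Repeat the user.", lambda msgs: f"You said: {msgs[-1].content}")
    thread = Thread([Message("user", "Hello")])
    run = Run(echo, thread)
    run.execute()
    print(run.status, [m.content for m in thread.messages])
```

The point of the sketch is the ownership: a Thread holds Messages, and a Run ties one Agent to one Thread and records its own steps.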

Install the .NET package in your project. For example, if you're using the .NET CLI, run the following command:

dotnet add package Azure.AI.Projects --version 1.0.0-beta.1

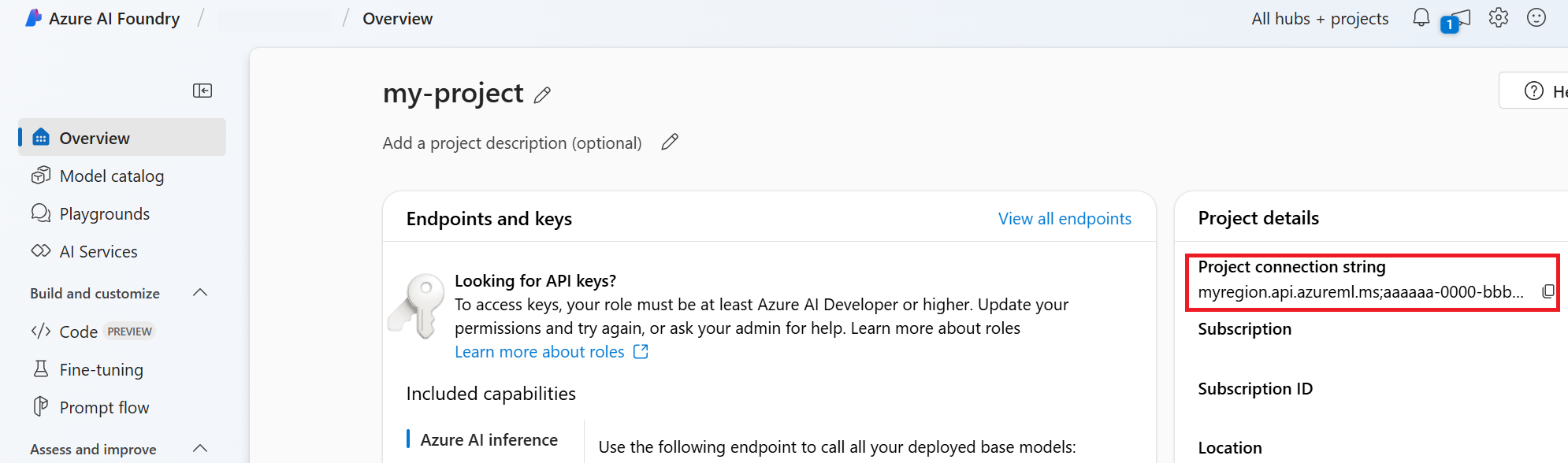

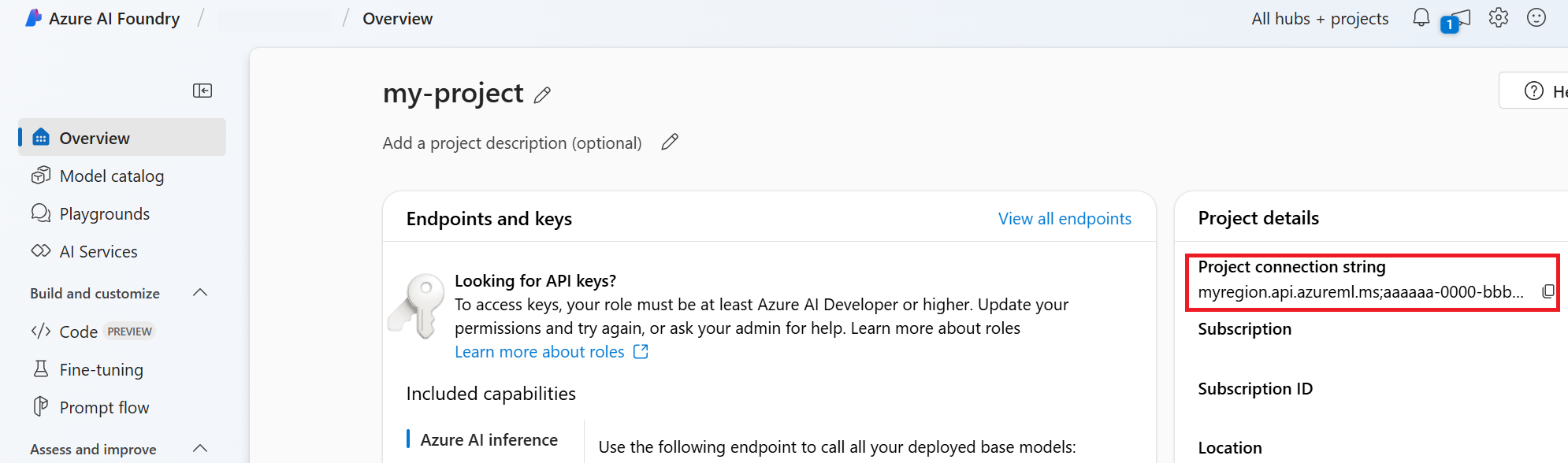

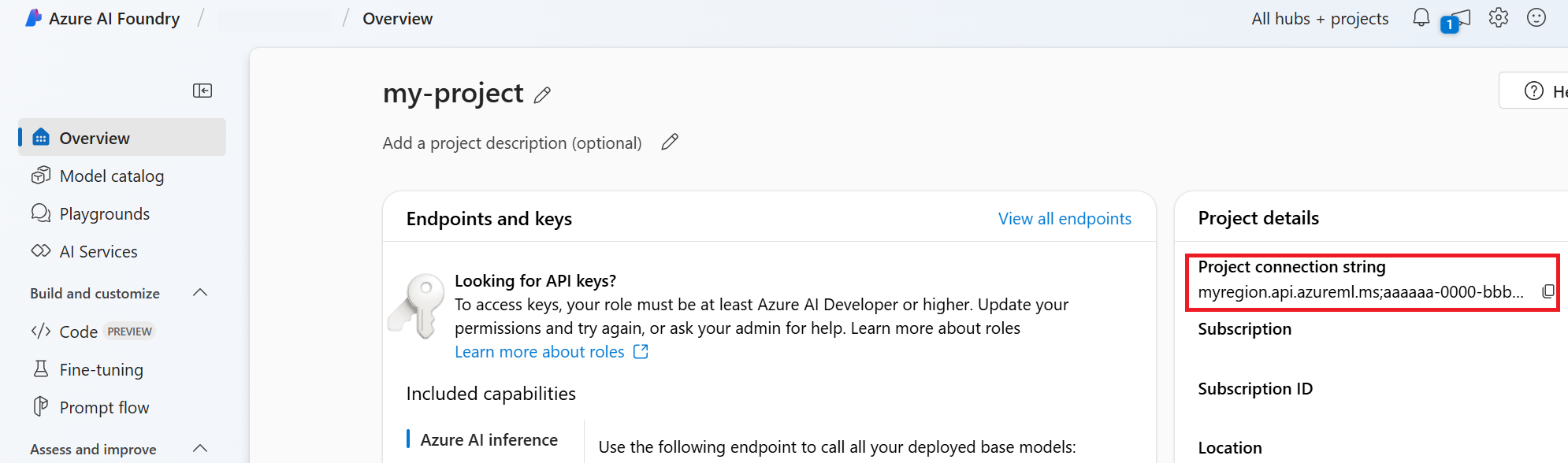

Use the following code to create and run an agent. To run this code, you will need to create a connection string using information from your project. This string is in the format:

<HostName>;<AzureSubscriptionId>;<ResourceGroup>;<ProjectName>

Tip

You can also find your connection string in the overview for your project in the Azure AI Foundry portal, under Project details > Project connection string.

HostName can be found by navigating to your discovery_url and removing the leading https:// and trailing /discovery. To find your discovery_url, run this CLI command:

az ml workspace show -n {project_name} --resource-group {resource_group_name} --query discovery_url

For example, your connection string may look something like:

eastus.api.azureml.ms;12345678-abcd-1234-9fc6-62780b3d3e05;my-resource-group;my-project-name

Set this connection string as an environment variable named PROJECT_CONNECTION_STRING.
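The steps above can be sketched in a few lines of Python: strip the discovery URL down to HostName, then join the four parts with semicolons. The function names and the sample discovery URL are assumptions made for this example and aren't part of any Azure SDK. The sketch also strips surrounding quotes, on the assumption that the CLI's default JSON output prints the value quoted.

```python
def host_name_from_discovery_url(discovery_url: str) -> str:
    """Remove the leading 'https://' and trailing '/discovery'."""
    host = discovery_url.strip().strip('"')  # tolerate a quoted JSON string
    if host.startswith("https://"):
        host = host[len("https://"):]
    if host.endswith("/discovery"):
        host = host[: -len("/discovery")]
    return host


def build_connection_string(discovery_url: str, subscription_id: str,
                            resource_group: str, project_name: str) -> str:
    """Join the parts as <HostName>;<AzureSubscriptionId>;<ResourceGroup>;<ProjectName>."""
    parts = [
        host_name_from_discovery_url(discovery_url),
        subscription_id,
        resource_group,
        project_name,
    ]
    for part in parts:
        # An empty part or an embedded ';' would corrupt the four-field format.
        if not part or ";" in part:
            raise ValueError(f"Invalid connection string part: {part!r}")
    return ";".join(parts)


if __name__ == "__main__":
    print(build_connection_string(
        "https://eastus.api.azureml.ms/discovery",  # sample discovery_url
        "12345678-abcd-1234-9fc6-62780b3d3e05",
        "my-resource-group",
        "my-project-name",
    ))
```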

// Copyright (c) Microsoft Corporation. All rights reserved.
// Licensed under the MIT License.

#nullable disable

using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Azure.Identity; // provides DefaultAzureCredential (Azure.Identity package)
using NUnit.Framework;

namespace Azure.AI.Projects.Tests;

public partial class Sample_Agent_Basics
{
    [Test]
    public async Task BasicExample()
    {
        // Read the connection string from the PROJECT_CONNECTION_STRING environment variable.
        var connectionString = Environment.GetEnvironmentVariable("PROJECT_CONNECTION_STRING");
        AgentsClient client = new AgentsClient(connectionString, new DefaultAzureCredential());

        // Step 1: Create an agent
        #region Snippet:OverviewCreateAgent
        Response<Agent> agentResponse = await client.CreateAgentAsync(
            model: "gpt-4o-mini", // must match a model deployed in your project
            name: "Math Tutor",
            instructions: "You are a personal math tutor. Write and run code to answer math questions.",
            tools: new List<ToolDefinition> { new CodeInterpreterToolDefinition() });
        Agent agent = agentResponse.Value;
        #endregion

        // Intermission: agent should now be listed
        Response<PageableList<Agent>> agentListResponse = await client.GetAgentsAsync();

        // Step 2: Create a thread
        #region Snippet:OverviewCreateThread
        Response<AgentThread> threadResponse = await client.CreateThreadAsync();
        AgentThread thread = threadResponse.Value;
        #endregion

        // Step 3: Add a message to a thread
        #region Snippet:OverviewCreateMessage
        Response<ThreadMessage> messageResponse = await client.CreateMessageAsync(
            thread.Id,
            MessageRole.User,
            "I need to solve the equation `3x + 11 = 14`. Can you help me?");
        ThreadMessage message = messageResponse.Value;
        #endregion

        // Intermission: message is now correlated with thread
        // Intermission: listing messages will retrieve the message just added
        Response<PageableList<ThreadMessage>> messagesListResponse = await client.GetMessagesAsync(thread.Id);
        Assert.That(messagesListResponse.Value.Data[0].Id == message.Id);

        // Step 4: Run the agent
        #region Snippet:OverviewCreateRun
        Response<ThreadRun> runResponse = await client.CreateRunAsync(
            thread.Id,
            agent.Id,
            additionalInstructions: "Please address the user as Jane Doe. The user has a premium account.");
        ThreadRun run = runResponse.Value;
        #endregion

        // Step 5: Poll until the run is no longer queued or in progress
        #region Snippet:OverviewWaitForRun
        do
        {
            await Task.Delay(TimeSpan.FromMilliseconds(500));
            runResponse = await client.GetRunAsync(thread.Id, runResponse.Value.Id);
        }
        while (runResponse.Value.Status == RunStatus.Queued
            || runResponse.Value.Status == RunStatus.InProgress);
        #endregion

        // Step 6: Print the messages, including the agent's reply
        #region Snippet:OverviewListUpdatedMessages
        Response<PageableList<ThreadMessage>> afterRunMessagesResponse
            = await client.GetMessagesAsync(thread.Id);
        IReadOnlyList<ThreadMessage> messages = afterRunMessagesResponse.Value.Data;

        // Note: messages iterate from newest to oldest, with messages[0] being the most recent
        foreach (ThreadMessage threadMessage in messages)
        {
            Console.Write($"{threadMessage.CreatedAt:yyyy-MM-dd HH:mm:ss} - {threadMessage.Role,10}: ");
            foreach (MessageContent contentItem in threadMessage.ContentItems)
            {
                if (contentItem is MessageTextContent textItem)
                {
                    Console.Write(textItem.Text);
                }
                else if (contentItem is MessageImageFileContent imageFileItem)
                {
                    Console.Write($"<image from ID: {imageFileItem.FileId}>");
                }
                Console.WriteLine();
            }
        }
        #endregion
    }
}

| Reference documentation | Samples | Library source code | Package (PyPI) |

Prerequisites

- An Azure subscription - Create one for free.

- Python 3.8 or later

- Make sure you have the Azure AI Developer RBAC role assigned at the appropriate level.

- Install the Azure CLI and the machine learning extension. If you have the CLI already installed, make sure it's updated to the latest version.

Configure and run an agent

Run the following commands to install the Python packages:

pip install azure-ai-projects

pip install azure-identity

Use the following code to create and run an agent. To run this code, you will need to create a connection string using information from your project. This string is in the format:

<HostName>;<AzureSubscriptionId>;<ResourceGroup>;<ProjectName>

Tip

You can also find your connection string in the overview for your project in the Azure AI Foundry portal, under Project details > Project connection string.

HostName can be found by navigating to your discovery_url and removing the leading https:// and trailing /discovery. To find your discovery_url, run this CLI command:

az ml workspace show -n {project_name} --resource-group {resource_group_name} --query discovery_url

For example, your connection string may look something like:

eastus.api.azureml.ms;12345678-abcd-1234-9fc6-62780b3d3e05;my-resource-group;my-project-name

Set this connection string as an environment variable named PROJECT_CONNECTION_STRING.

import os
from pathlib import Path

from azure.ai.projects import AIProjectClient
from azure.ai.projects.models import CodeInterpreterTool
from azure.identity import DefaultAzureCredential

# Create an Azure AI Client from a connection string, copied from your Azure AI Foundry project.
# At the moment, it should be in the format "<HostName>;<AzureSubscriptionId>;<ResourceGroup>;<ProjectName>"
# HostName can be found by navigating to your discovery_url and removing the leading "https://" and trailing "/discovery"
# To find your discovery_url, run the CLI command: az ml workspace show -n {project_name} --resource-group {resource_group_name} --query discovery_url
# Project Connection example: eastus.api.azureml.ms;12345678-abcd-1234-9fc6-62780b3d3e05;my-resource-group;my-project-name
# Sign in to your Azure subscription with the Azure CLI and set PROJECT_CONNECTION_STRING before running.

project_client = AIProjectClient.from_connection_string(
    credential=DefaultAzureCredential(), conn_str=os.environ["PROJECT_CONNECTION_STRING"]
)

with project_client:
    # Create an instance of the CodeInterpreterTool
    code_interpreter = CodeInterpreterTool()

    # The CodeInterpreterTool needs to be included in creation of the agent
    agent = project_client.agents.create_agent(
        model="gpt-4o-mini",
        name="my-agent",
        instructions="You are a helpful agent",
        tools=code_interpreter.definitions,
        tool_resources=code_interpreter.resources,
    )
    print(f"Created agent, agent ID: {agent.id}")

    # Create a thread
    thread = project_client.agents.create_thread()
    print(f"Created thread, thread ID: {thread.id}")

    # Create a message
    message = project_client.agents.create_message(
        thread_id=thread.id,
        role="user",
        content="Could you please create a bar chart for the operating profit using the following data and provide the file to me? Company A: $1.2 million, Company B: $2.5 million, Company C: $3.0 million, Company D: $1.8 million",
    )
    print(f"Created message, message ID: {message.id}")

    # Run the agent and wait for the run to finish
    run = project_client.agents.create_and_process_run(thread_id=thread.id, assistant_id=agent.id)
    print(f"Run finished with status: {run.status}")

    if run.status == "failed":
        # If the error is "Rate limit is exceeded.", request more quota
        print(f"Run failed: {run.last_error}")

    # Get messages from the thread
    messages = project_client.agents.list_messages(thread_id=thread.id)
    print(f"Messages: {messages}")

    # Get the last text message sent by the assistant
    last_msg = messages.get_last_text_message_by_sender("assistant")
    if last_msg:
        print(f"Last Message: {last_msg.text.value}")

    # Save each image the agent generated (the bar chart) to a local file
    for image_content in messages.image_contents:
        print(f"Image File ID: {image_content.image_file.file_id}")
        file_name = f"{image_content.image_file.file_id}_image_file.png"
        project_client.agents.save_file(file_id=image_content.image_file.file_id, file_name=file_name)
        print(f"Saved image file to: {Path.cwd() / file_name}")

    # Print the file path annotations from the messages and save each referenced file
    for file_path_annotation in messages.file_path_annotations:
        print("File Paths:")
        print(f"Type: {file_path_annotation.type}")
        print(f"Text: {file_path_annotation.text}")
        print(f"File ID: {file_path_annotation.file_path.file_id}")
        print(f"Start Index: {file_path_annotation.start_index}")
        print(f"End Index: {file_path_annotation.end_index}")
        project_client.agents.save_file(file_id=file_path_annotation.file_path.file_id, file_name=Path(file_path_annotation.text).name)

    # Delete the agent once done
    project_client.agents.delete_agent(agent.id)
    print("Deleted agent")
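The sample derives local file names in two ways: from the image file ID, and from the last path segment of a file path annotation's text. The sketch below isolates that naming logic so it can be checked without calling the service. The helper names, the sample file ID, and the `sandbox:/mnt/data/...` annotation text are assumptions for illustration; the real values come from the messages your run returns.

```python
from pathlib import PurePosixPath


def image_file_name(file_id: str) -> str:
    """Local name used for a generated image, keyed by its file ID."""
    return f"{file_id}_image_file.png"


def annotation_file_name(annotation_text: str) -> str:
    """Keep only the final path segment of a file path annotation's text."""
    name = PurePosixPath(annotation_text).name
    if not name:
        raise ValueError(f"No file name in annotation text: {annotation_text!r}")
    return name


if __name__ == "__main__":
    print(image_file_name("assistant-abc123"))                      # hypothetical file ID
    print(annotation_file_name("sandbox:/mnt/data/bar_chart.png"))  # hypothetical annotation text
```

Using only the final segment keeps the saved file in the current working directory rather than recreating the remote directory structure.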

| Reference documentation | Library source code | Package (PyPI) |

Prerequisites

- An Azure subscription - Create one for free.

- Python 3.8 or later

- Make sure you have the Azure AI Developer RBAC role assigned at the appropriate level.

- You need the Cognitive Services OpenAI User role assigned to use the Azure AI Services resource.

- Install the Azure CLI and the machine learning extension. If you have the CLI already installed, make sure it's updated to the latest version.

Configure and run an agent

Run the following commands to install the Python packages:

pip install azure-ai-projects

pip install azure-identity

pip install openai

Use the following code to create and run an agent. To run this code, you will need to create a connection string using information from your project. This string is in the format:

<HostName>;<AzureSubscriptionId>;<ResourceGroup>;<ProjectName>

Tip

You can also find your connection string in the overview for your project in the Azure AI Foundry portal, under Project details > Project connection string.

HostName can be found by navigating to your discovery_url and removing the leading https:// and trailing /discovery. To find your discovery_url, run this CLI command:

az ml workspace show -n {project_name} --resource-group {resource_group_name} --query discovery_url

For example, your connection string may look something like:

eastus.api.azureml.ms;12345678-abcd-1234-9fc6-62780b3d3e05;my-resource-group;my-project-name

Set this connection string as an environment variable named PROJECT_CONNECTION_STRING.

import os
import time

from azure.ai.projects import AIProjectClient
from azure.identity import DefaultAzureCredential
from openai import AzureOpenAI

with AIProjectClient.from_connection_string(
    credential=DefaultAzureCredential(),
    conn_str=os.environ["PROJECT_CONNECTION_STRING"],
) as project_client:

    # Explicit type hinting for IntelliSense
    client: AzureOpenAI = project_client.inference.get_azure_openai_client(
        # Read from the AZURE_OPENAI_API_VERSION environment variable.
        # The latest API version is 2024-10-01-preview
        api_version=os.environ.get("AZURE_OPENAI_API_VERSION"),
    )

    with client:
        agent = client.beta.assistants.create(
            model="gpt-4o-mini", name="my-agent", instructions="You are a helpful agent"
        )
        print(f"Created agent, agent ID: {agent.id}")

        thread = client.beta.threads.create()
        print(f"Created thread, thread ID: {thread.id}")

        message = client.beta.threads.messages.create(thread_id=thread.id, role="user", content="Hello, tell me a joke")
        print(f"Created message, message ID: {message.id}")

        run = client.beta.threads.runs.create(thread_id=thread.id, assistant_id=agent.id)

        # Poll the run while its status is queued or in progress.
        # This agent has no tools, so "requires_action" isn't expected; polling on it
        # would never finish because nothing here submits tool outputs.
        while run.status in ("queued", "in_progress"):
            time.sleep(1)  # Wait for a second
            run = client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
            print(f"Run status: {run.status}")

        # Read the thread's messages, including the agent's reply
        messages = client.beta.threads.messages.list(thread_id=thread.id)
        print(f"Messages: {messages}")

        # Delete the agent once done
        client.beta.assistants.delete(agent.id)
        print("Deleted agent")
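A polling loop like the one in this sample can wait indefinitely if a run never leaves an active state. The following sketch shows one way to bound the wait with a timeout. The helper name `wait_for_run` and its parameters are assumptions for illustration, and the status source is passed in as a callable so the logic can be exercised without calling the service.

```python
import time
from typing import Callable

# Statuses that mean the run is still working (taken from the polling loop above).
ACTIVE_STATUSES = ("queued", "in_progress")


def wait_for_run(get_status: Callable[[], str],
                 poll_interval: float = 1.0,
                 timeout: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> str:
    """Poll get_status() until it leaves the active statuses or the timeout passes.

    Returns the first non-active status; raises TimeoutError otherwise.
    """
    deadline = clock() + timeout
    status = get_status()
    while status in ACTIVE_STATUSES:
        if clock() >= deadline:
            raise TimeoutError(f"Run still {status!r} after {timeout} seconds")
        sleep(poll_interval)
        status = get_status()
    return status


if __name__ == "__main__":
    # Simulated status sequence standing in for repeated retrieve calls.
    fake_statuses = iter(["queued", "in_progress", "in_progress", "completed"])
    print(wait_for_run(lambda: next(fake_statuses), poll_interval=0.01))
```

With the client from the sample, `get_status` could be `lambda: client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id).status`.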