Azure + Bing Maps: Accessing spatial data with Entity Framework

This is the fifth article in our "Bring the clouds together: Azure + Bing Maps"

series. You can find a preview of live demonstration on

https://sqlazurebingmap.cloudapp.net/. For a list of articles in the series,

please refer to

Introduction

In our

previous post, we introduced how to work with spatial data in SQL Azure.

This post will focus on how to access spatial data in a .NET application,

and a few considerations you must take when working with SQL Azure.

Before reading, we assume you have a basic understanding of the

following technologies:

ADO.NET Entity Framework (EF). If you're new to EF, please refer to

the MSDN

tutorial to get started. You can also find a bunch of getting started

video tutorials on the Silverlight web site, such asthis one. This post targets users who know how to work with Visual

Studio tools to generate models, but may not understand more advanced topics

such as working with stored procedures.Spatial data. If you don't understand spatial data, please read the

A SQL Azure account if you want to use SQL Azure as the data store.

Entity Framework and SQL Azure

Today, the simplest solution to access a database from a .NET application is

using ADO.NET Entity Framework (EF). EF is able to generate an object model

automatically from the relational database model. Thus it allows you to work

with objects in an object oriented programming language (such as C#), and use

LINQ to query the objects, rather than dealing with SQL connections and commands

manually. EF increases developers productivity tremendously, compared to

traditional data accessing frameworks like ADO.NET DataSet. EF is also a

built-in provider for WCF Data Services.

Entity Framework is fully supported in SQL Azure. If you're using Visual Studio

2010, you can generate the object model from a SQL Azure database just like

generating from a SQL Server database.

Unfortunately, at the moment EF only supports relational data. It doesn't

support spatial data, be it in SQL Azure or a local SQL Server.

Make Entity Framework work with spatial data

There're several workarounds to make Entity Framework work with spatial data. A

common approach is described in

Julie's post. She creates a view which converts the geography type to a

varbinary(max) type. Recall SQL Azure stores geography data in its binary

format. So this conversion is straightforward. Entity Framework supports binary

data type, so now we can create an object model that maps the binary CLR type to

the geography SQL type.

We will take the same approach here. Let's create a view:

CREATE

VIEW [dbo].[TravelView]

AS

SELECT

PartitionKey,

RowKey,

Place,

CAST(GeoLocation AS

varbinary(MAX))

AS GeoLocation, Time

FROM

dbo.Travel

Then we will be able to generate an Entity Framework model. As you can see from

the below screenshot, the GeoLocation property has been correctly included in

the model. Its type is Binary. Also note with Visual Studio 2010, you can

generate EF models from SQL Azure, just like generating models from local SQL

Server.

Add custom properties

Keep in mind EF represents the data access layer. Very often data access objects

are not exactly the same as business objects. Usually, you need to create a

business object class, and then convert the EF object to the business object.

However, if your business logic is simple (like the travel application we're

building), you can also simply add custom properties to the EF class.

To do so, you need to create a partial class. In this case, let's create a

partial class for the Travel class.

[DataServiceKey(new string[] {

"PartitionKey",

"RowKey" })]

[IgnoreProperties(new string[] {

"EntityState",

"EntityKey", "GeoLocation" })]

public partial

class Travel

: EntityObject

The DataServiceKey and IgnoreProperties attributes are used by WCF Data

Services, which will be covered in the next post. For now, we add three custom

properties to the class: GeoLocationText, Latitude, and Longitude. Even though

we store the data as spatial data, our clients may still think latitude and

longitude is easier to understand. So we expose those two properties, as well as

the WKT representation of the spatial data (GeoLocationText).

Those properties are not automatically populated by EF when querying the

database. We need to populate them manually. To do so, implement the partial

method OnGeoLocationChanging, which will be invoked whenever the value of

GeoLocation property changes. The GeoLocation property is the binary

representation of the spatial data, which corresponds to the database view's

GeoLocation column.

partial void

OnGeoLocationChanging(global::System.Byte[] value)

{

if (value !=

null)

{

using (MemoryStream

ms = new

MemoryStream(value))

{

using (BinaryReader

reader = new

BinaryReader(ms))

{

SqlGeography sqlGeography = new

SqlGeography();

sqlGeography.Read(reader);

this.GeoLocationText = new

string(sqlGeography.STAsText().Value);

this.Latitude =

sqlGeography.Lat.Value;

this.Longitude = sqlGeography.Long.Value;

}

}

}

}

By the way, if you're not familiar with partial class and partial method, please

read

https://msdn.microsoft.com/en-us/library/wa80x488.aspx.

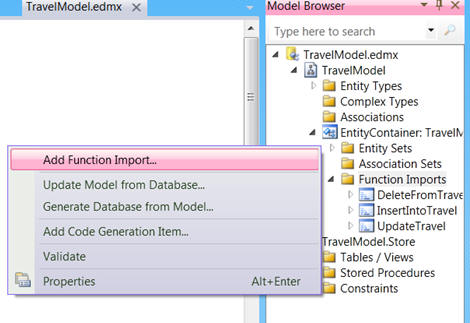

Using stored procedures with Entity Framework

As described in the last post, when working with spatial data, it is

recommended to handle insert/update/delete operations using stored

procedures. To work with stored procedures in EF, first you need to

import them to the model. This task is easy. Simply right click

"Function Imports" in the Model Browser, and choose "Add Function

Import". Then follow the wizard:

Entity Framework supports two kinds of stored procedures: CRUD stored procedures

and general stored procedures. For CRUD stored procedures, you need to

map them to the Insert/Update/Delete Functions in the "Mapping Details"

window. Then they will be invoked automatically when the default

implementation of SaveChanges is called.

For general stored procedures, you don't need to map them. But you need

to invoke them manually, just as invoke a normal method on the EF

object. Please refer to

https://msdn.microsoft.com/en-us/library/bb896279.aspx for more information.

Note while the above screenshot shows how to map a stored

procedure to CRUD functions, our sample actually does not use this

feature. The CRUD stored procedures have a limitation: It cannot use

custom properties like GeoLocationText. All parameters must be

properties defined in the model's conceptual models. That also means

they must appear in the storage model (that is, must be actual columns

in the database), because all properties in the conceptual model must be

mapped to properties in the storage model. Well, if you don't know what

is conceptual model and storage model, just keep in mind that CRUD

stored procedures have to use properties you defined in the EF designer.

Custom properties added in the partial class cannot be used. You can

read more from

https://msdn.microsoft.com/en-us/library/bb399232.aspx.

Override SaveChanges

Since we have to pass our custom property GeoLocationText to the stored

procedures, we have to treat them as general stored procedures, and manually

invoke the methods. The code should be written in, of course, the

ObjectContext's SaveChanges method:

public override

int SaveChanges(SaveOptions

options)

{

int returnValue = 0;

var EnsureConnectionMethod =

typeof(ObjectContext).GetMethod("EnsureConnection",

BindingFlags.Instance | BindingFlags.NonPublic);

EnsureConnectionMethod.Invoke(this,

null);

foreach (ObjectStateEntry

ose in this.ObjectStateManager.GetObjectStateEntries(EntityState.Added))

{

Travel travel = ose.Entity

as Travel;

if (travel !=

null)

{

RetryPolicy retryPolicy =

new RetryPolicy();

retryPolicy.Task = new

Action(() =>

{

this.InsertIntoTravel(travel.PartitionKey, travel.Place,

travel.GeoLocationText, travel.Time);

});

retryPolicy.Execute();

returnValue++;

}

}

foreach (ObjectStateEntry

ose in this.ObjectStateManager.GetObjectStateEntries(EntityState.Modified))

{

Travel travel = ose.Entity

as Travel;

if (travel !=

null)

{

RetryPolicy retryPolicy =

new RetryPolicy();

retryPolicy.Task = new

Action(() =>

{

this.UpdateTravel(travel.PartitionKey, travel.RowKey, travel.Place,

travel.GeoLocationText, travel.Time);

});

retryPolicy.Execute();

returnValue++;

}

}

foreach (ObjectStateEntry

ose in this.ObjectStateManager.GetObjectStateEntries(EntityState.Deleted))

{

Travel travel = ose.Entity

as Travel;

if (travel !=

null)

{

RetryPolicy retryPolicy =

new RetryPolicy();

retryPolicy.Task = new

Action(() =>

{

this.DeleteFromTravel(travel.PartitionKey, travel.RowKey);

});

retryPolicy.Execute();

returnValue++;

}

}

var ReleaseConnectionMethod =

typeof(ObjectContext).GetMethod("ReleaseConnection",

BindingFlags.Instance |

BindingFlags.NonPublic);

ReleaseConnectionMethod.Invoke(this,

null);

return returnValue;

}

Ignore the connection management and retry policy for now. When writing custom

logic for SaveChanges, you can take advantage of the

ObjectStateManager.GetObjectStateEntries method. Entity Framework keeps change

tracking automatically, and the GetObjectStateEntries method allows you to

obtain all added entities, all modified entities, as well as all deleted

entities. Thus you can invoke the corresponding CRUD methods.

The return value of the SaveChanges method is defined as how many rows are

affected. While many applications (including our sample) do not rely on this

feature, it is recommended to implement the return value as it's defined, to

avoid confusion.

Finally, note we do not invoke base.SaveChanges, to avoid the pre-defined logic

that tries to modify the database using generated SQL rather than stored

procedures.

Add a retry logic

So far, everything we described apply to both SQL Azure and local SQL Server.

This is good, since the code works fine both locally and in the cloud. However,

in SQL Azure, an operation is not guaranteed to always success. It may fail due

to unexpected reasons, such as network issues. But our SLA ensures if you try

the same operation again after 10 seconds, it should work fine. Actually in most

cases, if a connection problem occurs, wait 5 seconds and retry should be more

than enough. Note the SLA only covers service issues. If the problem is in your

application (such as forgetting to close connections), there's no SLA.

Anyway, it is very important to write a proper retry logic when working with any

storage service, especially cloud storage like SQL Azure. Fortunately, this is a

simple task. Please refer to the following code:

public class

RetryPolicy

{

public int

RetryNumber { get; set;

}

public

TimeSpan WaitTime { get;

set; }

public Action

Task { get; set;

}

public RetryPolicy()

{

this.RetryNumber = 3;

}

public RetryPolicy(int retryNumber)

{

this.RetryNumber = retryNumber;

this.WaitTime =

TimeSpan.FromSeconds(5d);

}

public void

Execute()

{

for (int

i = 0; i < this.RetryNumber; i++)

{

try

{

this.Task();

break;

}

catch (SqlException

ex)

{

if (i == this.RetryNumber

- 1)

{

throw new

SqlExceptionWithRetry(

"Maximum retry reached. Still unable to

process the request. See inner exception for more details.",

ex);

throw ex;

}

Thread.Sleep(this.WaitTime);

}

}

}

}

public class

SqlExceptionWithRetry :

Exception

{

public SqlExceptionWithRetry(string message,

SqlException innerException)

: base(message, innerException)

{

}

}

Here we have a property whose type is Action. This is actually a delegate which

performs the actual logic. For those who's not familiar with the Action

delegate, please refer to

https://msdn.microsoft.com/en-us/library/018hxwa8.aspx.

We try to execute the action. If it succeeds, then break the loop. If it fails,

we wait for a few seconds and try again, until the maximum retry number is

reached. Usually the maximum retry number should not be reached. If you do reach

it, either your application has a bug which results in an uncover able problem,

or the SQL Azure service is down and you can submit a ticket to see if you can

get refund.

To use the retry logic, simply write:

RetryPolicy retryPolicy =

new RetryPolicy();

retryPolicy.Task =

new Action(()

=>

{

this.InsertIntoTravel(travel.PartitionKey, travel.Place,

travel.GeoLocationText, travel.Time);

Additional considerations

Before we close this post, we'd like to point out two additional considerations

when working with SQL Azure.

First, it is recommended to enable MARS by setting MultipleActiveResultSets to

True in the connection string. This enables one connection to work on multiple

result sets, which is required by certain features of EF. Please refer to

https://blogs.msdn.com/b/adonet/archive/2010/06/09/remember-to-re-enable-mars-in-your-sql-azure-based-ef-apps.aspx

for more information.

Second, in the above SaveChanges code, since we're not invoking

base.SaveChanges, the connections will not be taken care of automatically. We

must make sure we open and close connections at proper time, to avoid service

throttling. EF internally uses the EnsureConnection and ReleaseConnection

methods, which takes care of open/close connections, as well a few others. So it

is a good idea to leverage those built-in methods. Unfortunately they're marked

as internal, which cannot be accessed directly in our own application. So we

have to use reflection to invoke them.

Conclusion

This post discussed how to access spatial data using Entity Framework, as well

as a few considerations you must take when working with SQL Azure. The next post

will discuss how to expose the data to the world using WCF Data Services.

Comments

- Anonymous

August 22, 2011

The comment has been removed