Modernizing "Did my dad influence me?" - Part 1

In 2013 I decided to investigate how much influence my dad had on my musical taste; you can read about it here. At that point I was doing a lot of Power BI work, so I decided to build it all in Power BI. Fast forward a few years and I was working with @nathan_gs to move some of the data manipulation to Spark as an exercise.

Enter 2018, where VS Code, containers, .NET Core and Azure Databricks are the popular new kids on the block. So I started moving the solution to this new set of technologies. A small disclaimer: I am not a full-time developer, so feel free to contribute to the code if you can improve it ;-)

In Part 1, let's focus on the basics: getting the LastFM API data into Azure Storage for later processing, using .NET Core and Docker.

Prerequisites:

I decided to go for .NET Core and Visual Studio Code, which are both cross-platform and open source. If you don't know Visual Studio Code yet, you should really give it a try; it was even voted the default IDE in the Ubuntu Desktop Applications Survey.

You can find my code on GitHub and the container image on Docker Hub. I'm also using WSL (Windows Subsystem for Linux) on Windows 10. I'm running Debian, but other distributions such as Ubuntu, Kali Linux, openSUSE and SLES are also available.

There are a couple of NuGet packages I'm using in the project. If you're wondering how to add these to a project, you can do it from within VS Code using the Terminal window, which lets you run bash or PowerShell commands right inside your IDE. Adding a package is as simple as running the following command:

```shell
dotnet add package Newtonsoft.Json
```

Reading the data from the API is simple: all you need is an API key from the LastFM website. I'm only using the user.getRecentTracks API, which returns the list of tracks you listened to, including the metadata I need. It takes a page size parameter, so I first determine the number of pages the API will return.
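To see the paging idea outside of .NET, here is a minimal shell sketch using curl and jq; it is not the author's implementation. It assumes `LASTFM_KEY` and `LASTFM_USER` as hypothetical variable names, writes each page to a `tracks-N.json` file, and only calls the API when both variables are set. It requests page 1 with the `limit` parameter, reads the page count the API reports, and then fetches the remaining pages.

```shell
#!/usr/bin/env bash
# Sketch: page through Last.fm's user.getRecentTracks with curl and jq.
# Assumptions (not taken from the original project): the LASTFM_KEY and
# LASTFM_USER variable names, the tracks-N.json file names, and that curl
# and jq are installed.

API_ROOT="https://ws.audioscrobbler.com/2.0/"
PAGE_SIZE=200  # the "limit" parameter; 200 is the maximum page size

# Print the request URL for one page of a user's recent tracks.
# Arguments: user, API key, page number (the user name is not URL-encoded).
recent_tracks_url() {
  printf '%s?method=user.getrecenttracks&user=%s&api_key=%s&format=json&limit=%s&page=%s\n' \
    "$API_ROOT" "$1" "$2" "$PAGE_SIZE" "$3"
}

# Number of pages needed for a scrobble total at a given page size
# (integer ceiling division).
page_count() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# Only call the API when credentials are provided.
if [ -n "${LASTFM_KEY:-}" ] && [ -n "${LASTFM_USER:-}" ]; then
  curl -sf "$(recent_tracks_url "$LASTFM_USER" "$LASTFM_KEY" 1)" > tracks-1.json
  # The response reports the page count in recenttracks["@attr"].totalPages.
  pages=$(jq -r '.recenttracks["@attr"].totalPages' tracks-1.json)
  p=2
  while [ "$p" -le "$pages" ]; do
    curl -sf "$(recent_tracks_url "$LASTFM_USER" "$LASTFM_KEY" "$p")" > "tracks-$p.json"
    p=$((p + 1))
  done
fi
```

For example, `page_count 1234 200` prints `7`: 1,234 scrobbles at 200 per page need seven requests, which is what the `totalPages` attribute should report. The helper only makes that arithmetic explicit; the loop itself trusts the value returned by the API.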

One of the interesting learnings for me was how configuration works in .NET Core. I started out with a simple appsettings.json file, but then realized it would be easier for Docker if the application also accepted environment variables. It seems like a bit of magic, but it is straightforward in .NET Core: call the AddEnvironmentVariables() method after loading the JSON file, and environment variables will override the values in appsettings. The tricky part was that you need the right NuGet packages for this to work, so here are the ones I used:

```shell
dotnet add package Microsoft.Extensions.Configuration
dotnet add package Microsoft.Extensions.Configuration.EnvironmentVariables
dotnet add package Microsoft.Extensions.Configuration.Json
```
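The order of the providers matters: values from a provider added later override those from earlier ones, which is why environment variables win over appsettings.json. For nested settings, the .NET Core environment variable provider reads a double underscore (`__`) as the `:` section separator. The small helper below only illustrates that naming rule; `config_key_to_env_var` and the `Storage:Account` key are hypothetical, and the flat key names are assumed to match the variables passed to the container in the az command.

```shell
#!/usr/bin/env bash
# Sketch: which environment variable overrides which appsettings.json key.
# The .NET Core environment variable provider maps "__" (double underscore)
# to the ":" separator used for nested configuration sections.

# Print the environment variable name for a configuration path.
config_key_to_env_var() {
  printf '%s\n' "$1" | sed 's/:/__/g'
}

# A flat key such as "lastfmuser" is overridden by a variable of the same name.
config_key_to_env_var lastfmuser          # lastfmuser
# A nested key (hypothetical, e.g. {"Storage": {"Account": "..."}}) needs "__".
config_key_to_env_var Storage:Account     # Storage__Account
```

When running from source, something like `Storage__Account=myaccount dotnet run` would then override the nested value for that single run, the same way `-e` does for a container.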

If you just want to export your data and don't plan to install the prerequisites and compile the code yourself, you can run it as a container. The documentation here explains how to create a Dockerfile for a .NET Core application.

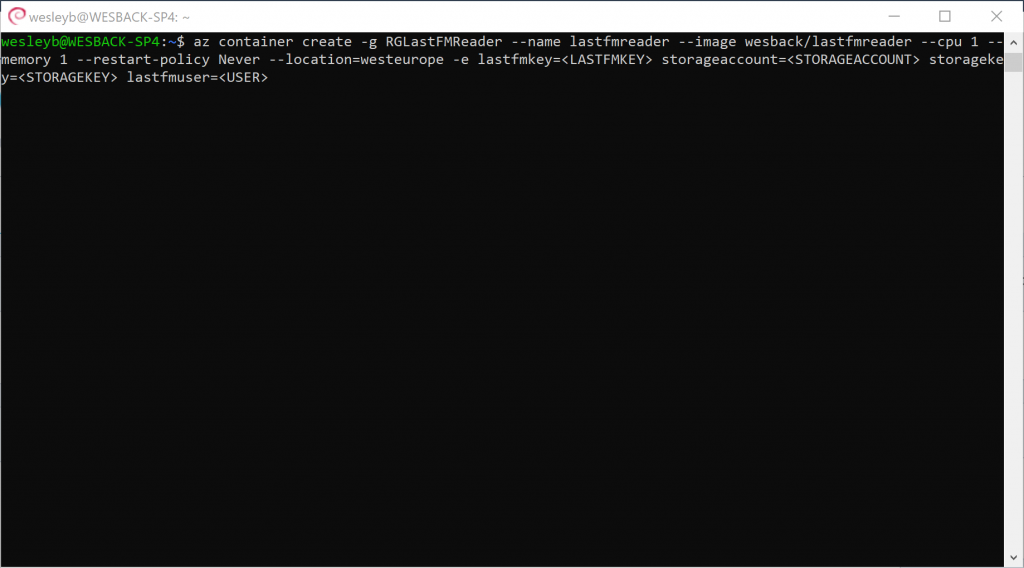
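To try the published image on your own machine first, a `docker run` along these lines should work. This is a sketch, not part of the original project: `require_settings` and `run_local` are hypothetical helper names, and the four setting names are taken from the az command used for Azure Container Instances.

```shell
#!/usr/bin/env bash
# Sketch: run the published image locally with Docker.
# Assumes Docker is installed and the four settings below are exported;
# the names are the ones used in the az container create command.

# Return non-zero if any required setting is missing from the environment.
require_settings() {
  missing=0
  for name in lastfmkey lastfmuser storageaccount storagekey; do
    if [ -z "$(printenv "$name")" ]; then
      echo "missing setting: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# "-e NAME" without a value makes docker copy NAME from the current environment.
run_local() {
  require_settings || return 1
  docker run --rm \
    -e lastfmkey -e lastfmuser -e storageaccount -e storagekey \
    wesback/lastfmreader
}
```

Export the values first (for example `export lastfmkey=<LASTFMKEY> lastfmuser=<USER> storageaccount=<STORAGEACCOUNT> storagekey=<STORAGEKEY>`) and then call `run_local`. Passing `-e NAME` without a value keeps the secrets out of the command line itself.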

There are obviously many ways to run a Docker container. For this type of application I went for the easiest option: Azure Container Instances. Again I'm using bash; install the Azure CLI, or use the Cloud Shell in the browser, to get started. The commands are below; just replace the placeholders with your values and you should be good to go.

```shell
az login

az group create -l westeurope -n RGLastFMReader

az container create -g RGLastFMReader --name lastfmreader --image wesback/lastfmreader --cpu 1 --memory 1 --restart-policy Never --location=westeurope -e lastfmkey=<LASTFMKEY> storageaccount=<STORAGEACCOUNT> storagekey=<STORAGEKEY> lastfmuser=<USER>
```

In the next part we'll look at how to automate the export and how to use services like Azure Databricks to transform the data into a more useful format for reporting. Stay tuned!

I would like to thank LastFM for their fantastic work and rich API. If you are not tracking your listening habits yet, do get an account there! Please make sure you read the terms of service if you use the API.