Bringing Hyper-V to “Windows 8”

Steven Sinofsky published this post this morning: https://blogs.msdn.com/b/b8/archive/2011/09/07/bringing-hyper-v-to-windows-8.aspx

In this post we talk about how we will support virtualization on the Windows "client" OS. Virtualization was originally released for Windows Server, where the technology has proven very popular and successful, and we wanted to bring it to a core set of scenarios for professionals using Windows. The two most common scenarios we focused on are software developers working across multiple platforms, clients, and servers, and IT professionals looking to manage virtualized clients and servers in a seamless manner. Mathew John is a program manager on our Hyper-V team and authored this post. One note is that, as with all features, we're discussing the engineering of the work and not the ultimate packaging, as those choices are made much later in the project.

--Steven

PS: We didn't plan on doing so many posts in a row, so we'll return to a more sustainable pace -- sorry if we inadvertently set expectations a bit too high. We're getting ready for BUILD full time right now!!

Whether you are a software developer, an IT administrator, or simply an enthusiast, many of you need to run multiple operating systems, usually on many different machines. Not all of us have access to a full suite of labs to house all these machines, and so virtualization can be a space and time saver.

In building Windows 8 we worked to enable Hyper-V, the machine virtualization technology that has been part of the last 2 releases of Windows Server, to function on the client OS as well. In brief, Hyper-V lets you run more than one 32-bit or 64-bit x86 operating system at the same time on the same computer. Instead of working directly with the computer’s hardware, the operating systems run inside of a virtual machine (VM).

Hyper-V enables developers to easily maintain multiple test environments and provides a simple mechanism to quickly switch between these environments without incurring additional hardware costs. For example, we release pre-configured virtual machines containing old versions of Internet Explorer to support web developers. The IT administrator gets the additional benefit of virtual machine parity and a common management experience across Hyper-V in Windows Server and Windows Client. We also know that many of you use virtualization to try out new things without risking changes to the PC you are actively using.

An introduction to Hyper-V

Hyper-V requires a 64-bit system that has Second Level Address Translation (SLAT). SLAT is a feature present in the current generation of 64-bit processors from Intel and AMD. You’ll also need a 64-bit version of Windows 8, and at least 4GB of RAM. The VMs themselves can run either 32-bit or 64-bit operating systems.
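As a rough illustration, the requirements above can be written as a simple checklist. This is only a sketch: the `HostSpec` fields and the `missing_requirements` function are hypothetical names invented for this example, and the values are supplied by hand rather than detected from real hardware.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostSpec:
    """Hypothetical description of a PC; fields are filled in by hand."""
    is_64bit_cpu: bool
    has_slat: bool
    is_64bit_os: bool
    ram_gb: float


def missing_requirements(host: HostSpec) -> List[str]:
    """Return the requirements from the post that this host does not meet."""
    missing = []
    if not host.is_64bit_cpu:
        missing.append("64-bit processor")
    if not host.has_slat:
        missing.append("SLAT support")
    if not host.is_64bit_os:
        missing.append("64-bit Windows 8")
    if host.ram_gb < 4:
        missing.append("at least 4GB RAM")
    return missing


if __name__ == "__main__":
    laptop = HostSpec(is_64bit_cpu=True, has_slat=False, is_64bit_os=True, ram_gb=2)
    print(missing_requirements(laptop))
```

An empty list means the machine meets every requirement listed above.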

Hyper-V’s dynamic memory allocates and de-allocates the memory a VM needs on the fly, within a minimum and maximum that you specify, and lets VMs share memory that would otherwise sit unused. You can run 3 or 4 VMs on a machine that has 4GB of RAM, but you will need more RAM for 5 or more VMs. On the other end of the spectrum, you can also create large VMs with 32 processors and 512GB RAM.
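To make the minimum/maximum idea concrete, here is a toy model of sharing memory between VMs. It is not Hyper-V's actual balancing algorithm, which this post does not describe; the proportional-sharing rule, the `balance_memory` function, and all the numbers are assumptions made for illustration only.

```python
def balance_memory(vms, host_mb):
    """Toy allocator (an assumption, not Hyper-V's real policy).

    vms maps a VM name to (min_mb, max_mb, demand_mb).
    Returns a dict mapping each VM name to the memory assigned to it.
    """
    # Every VM is guaranteed its configured minimum.
    assigned = {name: mn for name, (mn, mx, demand) in vms.items()}
    spare = host_mb - sum(assigned.values())
    if spare < 0:
        raise ValueError("host cannot satisfy the configured minimums")

    # What each VM wants above its minimum, capped by its maximum.
    wanted = {
        name: max(0, min(demand, mx) - mn)
        for name, (mn, mx, demand) in vms.items()
    }
    total_wanted = sum(wanted.values())

    if total_wanted <= spare:
        # Enough memory for everyone: grant the full request.
        for name in vms:
            assigned[name] += wanted[name]
    else:
        # Under pressure: split the spare memory in proportion to demand.
        for name in vms:
            assigned[name] += spare * wanted[name] // total_wanted
    return assigned


if __name__ == "__main__":
    vms = {"dev": (512, 2048, 1024), "test": (512, 1024, 600)}
    print(balance_memory(vms, host_mb=4096))  # plenty of memory
    print(balance_memory(vms, host_mb=1536))  # memory is contended
```

The point of the sketch is only that a VM never drops below its minimum or grows past its maximum, while memory a VM is not using stays available to the others.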

As for user experience with VMs, Windows provides two mechanisms to peek into the Virtual Machine: the VM Console and the Remote Desktop Connection.

The VM Console (also known as VMConnect) is a console view of the VM. It provides a single monitor view of the VM with resolution up to 1600x1200 in 32-bit color. This console provides you with the ability to view the VM’s booting process.

For a richer experience, you can connect to the VM using the Remote Desktop Connection (RDC). With RDC, the VM takes advantage of capabilities present on your physical PC. For example, if you have multiple monitors, then the VM can show its graphics on all these monitors. Similarly, if you have a multipoint touch-enabled interface on your PC, then the VM can use this interface to give you a touch experience. The VM also has full multimedia capability by leveraging the physical system’s speakers and microphone. The Root OS (i.e. the main Windows OS that’s managing the VMs) can also share its clipboard and folders with the VMs. And finally, with RDC, you can also attach any USB device directly to the VM.

For storage, you can add multiple hard disks to the IDE or SCSI controllers available in the VM. You can use Virtual Hard Disks (.VHD or .VHDX files) or actual disks that you pass directly through to the virtual machine. VHDs can also reside on a remote file server, making it easy to maintain and share a common set of predefined VHDs across a team.

Hyper-V’s “Live Storage Move” capability helps your VMs to be fairly independent of the underlying storage. With this, you could move the VM’s storage from one local drive to another, to a USB stick, or to a remote file share without needing to stop your VM. I’ve found this feature to be quite handy for fast deployments: when I need a VM quickly, I start one from a VM library maintained on a file share and then move the VM’s storage to my local drive.

Another great feature of Hyper-V is the ability to take snapshots of a virtual machine while it is running. A snapshot saves everything about the virtual machine allowing you to go back to a previous point in time in the life of a VM, and is a great tool when trying to debug tricky problems. At the same time, Hyper-V virtual machines have all of the manageability benefits of Windows. Windows Update can patch Hyper-V components, so you don’t need to set up additional maintenance processes. And Windows has all the same inherent capabilities with Hyper-V installed.

Having said this, using virtualization has its limitations. Features or applications that depend on specific hardware will not work well in a VM. For example, Windows BitLocker and Measured Boot, which rely on TPM (Trusted Platform Module), might not function properly in a VM, and games or applications that require processing with GPUs (without providing software fallback) might not work well either. Also, latency-sensitive, high-precision applications that rely on sub-10ms timers, such as live music mixing apps, could have issues running in a VM. The root OS is also running on top of the Hyper-V virtualization layer, but it is special in that it has direct access to all the hardware. This is why applications with special hardware requirements continue to work unhindered in the root OS, but latency-sensitive, high-precision apps could still have issues running in the root OS.

As a reminder, you will still need to license any operating systems you use in the VMs.

Here’s a quick run-through of how Hyper-V works in Windows 8.

[Video: demo of Hyper-V in Windows 8]

Supporting VM communication through wireless NICs

As you saw in the demo, creating an external network switch is as simple as selecting a physical network adapter (NIC) from a drop-down list and clicking OK. This already worked well for Windows Server Hyper-V, but to have similar results in Windows 8, we needed to get it working with wireless NICs, a new challenge.

The problem

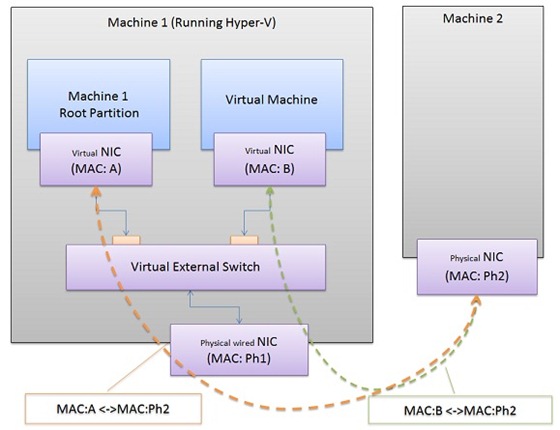

The virtual switch in Hyper-V is a “layer-2 switch,” which means that it switches (i.e. determines the route a given Ethernet packet takes) using the MAC addresses that uniquely identify each (physical and virtual) network adapter card. The MAC addresses of the source and destination machines are sent in each Ethernet packet, and a layer-2 switch uses them to determine where it should send the incoming packet.

An external virtual switch is connected to the external world through the physical NIC. Ethernet packets from a VM destined for a machine in the external world are sent out through this physical NIC. This means that the physical NIC must be able to carry the traffic from all the VMs connected to this virtual switch, so the packets flowing through the physical NIC will contain multiple MAC addresses (one for each VM’s virtual NIC). This is supported on wired physical NICs (by putting the NIC in promiscuous mode), but not on wireless NICs, since the wireless channel established by the WiFi NIC and its access point only allows Ethernet packets with the WiFi NIC’s MAC address and nothing else. In other words, Hyper-V couldn’t use WiFi NICs for an external switch if we continued to use the current virtual switch architecture.
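The switching behavior described above can be sketched with a generic learning switch. This is a textbook layer-2 model, not the Hyper-V virtual switch implementation; the `Layer2Switch` class, the `access_point_accepts` helper, the port names, and the MAC addresses are all made up for illustration.

```python
class Layer2Switch:
    """Generic learning switch: forwards frames by destination MAC address."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port where it was last seen

    def forward(self, in_port, src_mac, dst_mac):
        """Return the sorted list of ports the frame is sent out on."""
        # Remember which port the sender lives on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the source.
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            # Destination is on the same port; nothing to forward.
            return []
        return [out_port]


def access_point_accepts(frame_src_mac, wifi_nic_mac):
    """Simplified WiFi rule from the text: only the WiFi NIC's own MAC is allowed."""
    return frame_src_mac == wifi_nic_mac


if __name__ == "__main__":
    switch = Layer2Switch(["vm1", "vm2", "external"])
    vm1_mac, remote_mac = "00:15:5D:00:00:01", "AA:BB:CC:00:00:09"
    print(switch.forward("vm1", vm1_mac, remote_mac))       # flooded
    print(switch.forward("external", remote_mac, vm1_mac))  # sent only to vm1
    print(access_point_accepts(vm1_mac, "AA:AA:AA:00:00:01"))
```

The last call shows the problem in miniature: a frame that still carries the VM's MAC address as its source does not pass the WiFi check.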

Figure 1: Networking between VM and external machine using wired connection

The solution

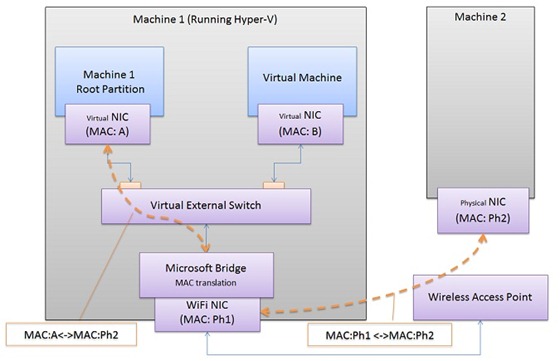

To work around this limitation, we used the Microsoft Bridging solution, which implements ARP proxying (for IPv4) and Neighbor Discovery proxying (for IPv6) to replace the virtual NICs’ MAC address with the WiFi adapter’s MAC address for outgoing packets. The bridge maintains an internal mapping between the virtual NIC’s IP address and its MAC address to ensure that the packets coming from the external world are sent to the appropriate virtual NIC.
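The MAC translation can be sketched roughly as follows. This is a simplified model of the idea only: it leaves out the ARP and Neighbor Discovery proxying itself, and the `Frame` and `MacTranslatingBridge` names, along with every address used, are hypothetical and not part of any Microsoft API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    """Minimal stand-in for an Ethernet frame carrying an IP packet."""
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str


class MacTranslatingBridge:
    """Toy bridge that hides virtual NIC MACs behind the WiFi adapter's MAC."""

    def __init__(self, wifi_mac):
        self.wifi_mac = wifi_mac
        self.ip_to_vm_mac = {}  # virtual NIC IP address -> virtual NIC MAC

    def outgoing(self, frame):
        """VM -> external world: remember the VM, then rewrite the source MAC."""
        self.ip_to_vm_mac[frame.src_ip] = frame.src_mac
        return replace(frame, src_mac=self.wifi_mac)

    def incoming(self, frame):
        """External world -> VM: restore the destination MAC from the mapping."""
        vm_mac = self.ip_to_vm_mac.get(frame.dst_ip)
        if vm_mac is None:
            # No VM owns this IP; leave the frame untouched.
            return frame
        return replace(frame, dst_mac=vm_mac)


if __name__ == "__main__":
    bridge = MacTranslatingBridge(wifi_mac="AA:AA:AA:00:00:01")
    sent = bridge.outgoing(
        Frame("00:15:5D:00:00:01", "AA:BB:CC:00:00:09", "192.168.1.20", "192.168.1.1")
    )
    print(sent.src_mac)  # the WiFi adapter's MAC, not the VM's
    reply = bridge.incoming(
        Frame("AA:BB:CC:00:00:09", "AA:AA:AA:00:00:01", "192.168.1.1", "192.168.1.20")
    )
    print(reply.dst_mac)  # back to the VM's MAC
```

Because every outgoing frame now carries the WiFi adapter's MAC address, the access point accepts it, and the IP-to-MAC mapping is what lets replies find their way back to the right virtual NIC.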

Hyper-V integrates the bridge as part of creating the virtual switch such that when you create an external virtual switch using a WiFi adapter, Hyper-V will:

- Create a single adapter bridge connected to the WiFi adapter

- Create the external virtual switch

- Bind the external virtual switch to use the bridge, instead of the WiFi adapter directly

In this model, Ethernet switching still happens in the virtual switch, and MAC translation occurs in the bridge. For the end user who is creating an external network, the workflow is the same whether you select a wired or a wireless NIC.

Figure 2: Networking between VM and external machine using WiFi connection

In conclusion, by bringing Hyper-V from Windows Server to Windows Client, we were able to provide a robust virtualization technology designed for the scalability, security, reliability, and performance needs of most data centers. With Hyper-V, developers and IT professionals can now build a more efficient and cost-effective environment for using and testing across multiple machines.

--Mathew John