Caveats for Authenticode Code Signing

Back in 2011, I wrote a long post about Authenticode, Microsoft’s Code Signing technology. In that post, I noted:

Digitally signing your code helps to ensure that it cannot be tampered with, either on your servers, or when it is being downloaded to a user’s computer, especially over an insecure protocol like HTTP or FTP. This provides the user the confidence of knowing that the program they’ve just downloaded is the same one that you built.

This summary is mostly correct, but it oversimplifies things a bit. In this post, we’ll take a deep dive into Authenticode to show that things aren’t quite as simple as they might appear.

Personalized Installers

Say you’re a service like Copilot, Dropbox, or GoToMeeting. Each of these services has a website and a client application that users download to interact with the service. However, unlike many traditional applications, each of these downloadable applications is meant to be personalized to an individual user; for instance, Copilot wants the application to connect to the remote assistance session without any prompts, GoToMeeting wants the user to immediately join an in-progress conference, and Dropbox wants their app to immediately begin syncing files to the user’s cloud account.

Each of these services is security-conscious, so they sign their installers with Authenticode to prevent tampering and to ensure that users have a painless install experience.

However, the use of Authenticode makes installer personalization tricky. There are three approaches:

- Have the installer attempt to detect the user by groveling data from browsers

- Dynamically generate and sign a new installer for each user upon download

- “Cheat”: find some way to use one Authenticode signature for multiple different installers

Browser Groveling

Approach #1 is tricky: there are at least three popular browsers on Windows (Chrome, Firefox, and Internet Explorer). Of those, only Internet Explorer offers a standard published API for native code applications to retrieve cookies or cached data. And unfortunately, Protected Mode and Enhanced Protected Mode made things much harder.

Prior to the introduction of Protected Mode, an application could simply call InternetGetCookieEx and grab Internet Explorer’s cookie for the service’s website. However, that API doesn’t work to grab Protected Mode cookies, and the replacement API (IEGetProtectedModeCookie) doesn’t work if the calling process is running as Administrator, which many installers do. And if the user is using the Windows 8 “Immersive IE” or manually enabled Enhanced Protected Mode, there’s no API available to get the cookie at all.

While the “Browser Groveling” approach was once popular (it’s why the IEGetProtectedModeCookie API was created to begin with), it probably isn’t used very often these days.

Dynamic Generation and Signing

Approach #2 is conceptually simple: every time you get a download request, a server-side process prepares a custom version of the installer executable (say, by replacing a reserved block of null bytes with a token) and then signs the resulting program.
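A minimal sketch of the personalization step follows. It assumes the unsigned template installer was built with a hypothetical marker string (`@@TOKEN-SLOT@@`) followed by a fixed-size block of null bytes reserved for the token. The replacement keeps the file the same length, so no offsets inside the executable move. Signing the result is deliberately left out; that step belongs on a separate machine.

```python
MARKER = b"@@TOKEN-SLOT@@"   # hypothetical marker compiled into the template installer
SLOT_SIZE = 64               # hypothetical number of null bytes reserved after the marker


def personalize(template: bytes, token: bytes) -> bytes:
    """Return a copy of the unsigned template with `token` written into the reserved slot."""
    if len(token) > SLOT_SIZE:
        raise ValueError("token does not fit in the reserved slot")
    start = template.find(MARKER)
    if start < 0 or template.find(MARKER, start + 1) >= 0:
        raise ValueError("expected exactly one marker in the template")
    slot = start + len(MARKER)
    if template[slot:slot + SLOT_SIZE] != bytes(SLOT_SIZE):
        raise ValueError("reserved slot is missing or already filled")
    # Pad with nulls so the file length and every later offset stay unchanged.
    filled = token.ljust(SLOT_SIZE, b"\0")
    return template[:slot] + filled + template[slot + SLOT_SIZE:]
```

Reserving the slot at build time, rather than appending data, means the personalized file is an ordinary executable that the signing step can treat like any other build output.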

This is quite straightforward, but it might be computationally expensive to generate a new installer and signature on every single download. Furthermore, this approach requires careful attention to security: it would be insanely risky to put the code-signing private key on the web server and have that server do the work; you’ll want to have the frontend web server invoke a backend service running on a different machine to do the generation and signing.

The Copilot.com installer appears to make use of this architecture.

Cheating Authenticode

Approach #3 seems either silly or crazy—if the whole point of using Authenticode is to ensure the integrity of the code, then there shouldn’t be any way around that, right?

The devil, as always, is in the details.

Look closely at the last word of that question: “...using Authenticode is to ensure the integrity of the code.”

Authenticode indeed verifies the integrity of the code. But the code doesn’t make up the entire downloaded executable.

Microsoft helpfully publishes the Windows Authenticode Portable Executable Signature Format document (and the less relevant Microsoft PE and COFF Specification), which outlines in great detail how Authenticode works.

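The first document includes a very helpful map of a signed PE file.

Items marked with a grey background in that map aren’t included in the signed hash: the CheckSum field of the optional header, the Certificate Table entry in the optional header’s data directories, and the certificate table itself. The latter two describe or contain the signature, and the checksum is excluded because injecting the signature changes the file’s embedded checksum, which is then recomputed.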
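To make the excluded regions concrete, here is a simplified sketch that locates those three regions in a PE file and hashes everything else. It is not the exact procedure from the specification, which hashes the headers, then each section in PointerToRawData order, then any trailing data; for a typical file whose sections follow the headers contiguously and whose certificate table sits at the end, the two should cover the same bytes. The function names are illustrative, and nothing here verifies a signature.

```python
import hashlib
import struct


def excluded_ranges(pe: bytes) -> list[tuple[int, int]]:
    """(start, end) file offsets of the regions Authenticode leaves out of the hash."""
    (pe_offset,) = struct.unpack_from("<I", pe, 0x3C)   # e_lfanew in the DOS header
    if pe[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    opt = pe_offset + 4 + 20                            # skip signature + 20-byte COFF header
    (magic,) = struct.unpack_from("<H", pe, opt)
    if magic == 0x10B:                                  # PE32
        data_dirs = opt + 96
    elif magic == 0x20B:                                # PE32+
        data_dirs = opt + 112
    else:
        raise ValueError("unknown optional header magic")
    checksum = opt + 64                                 # CheckSum: same offset in PE32 and PE32+
    cert_entry = data_dirs + 4 * 8                      # data directory #4: Certificate Table
    cert_offset, cert_size = struct.unpack_from("<II", pe, cert_entry)
    ranges = [(checksum, checksum + 4), (cert_entry, cert_entry + 8)]
    if cert_size:
        # For this directory only, "VirtualAddress" is a plain file offset.
        ranges.append((cert_offset, cert_offset + cert_size))
    return sorted(ranges)


def simplified_authenticode_digest(pe: bytes, algorithm: str = "sha256") -> str:
    """Hash every byte outside the excluded ranges, in file order (simplified ordering)."""
    h = hashlib.new(algorithm)
    pos = 0
    for start, end in excluded_ranges(pe):
        h.update(pe[pos:start])
        pos = end
    h.update(pe[pos:])
    return h.hexdigest()
```

Changing a byte inside the certificate table leaves this digest untouched, which is exactly the property the rest of this post is about.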

So, this all looks good, right? The signer is hashing everything in the file except for the bits related to the signature itself.

And there’s the rub: the signature blocks themselves can contain data. This data isn’t validated by the hash verification process, and while it isn’t code per se, an executable containing such data could examine its own file, find the data, and make use of it.

WIN_CERTIFICATE Structure Padding

The first place that sneaky developers found to shove additional data is after the PKCS#7-formatted data. The WIN_CERTIFICATE structure has a length field (dwLength) that specifies how many bytes are used to hold the certificate data; this value can be larger than the number of bytes the PKCS#7 data actually occupies.

MVP Didier Stevens wrote a great blog post describing this technique and offering a downloadable tool (with source) that allows you to explore whether there is additional data stored after the embedded certificates.
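A rough sketch of that kind of check follows, assuming you have already extracted the raw WIN_CERTIFICATE entry from the file’s certificate table. It reads the 8-byte header (dwLength, wRevision, wCertificateType), measures the PKCS#7 blob from its own DER length prefix, and reports whatever bytes remain inside dwLength. A few zero bytes of alignment padding are harmless; non-zero bytes are the smuggled data. This is illustrative only, not Didier Stevens’ tool or Windows’ actual implementation, and it assumes a definite-length DER encoding.

```python
import struct

WIN_CERT_TYPE_PKCS_SIGNED_DATA = 0x0002  # wCertificateType for Authenticode PKCS#7 blobs


def der_total_length(blob: bytes) -> int:
    """Size of the first DER element in `blob`: tag byte + length bytes + content."""
    if len(blob) < 2:
        raise ValueError("truncated DER element")
    first = blob[1]
    if first < 0x80:                       # short form: length fits in 7 bits
        return 2 + first
    n = first & 0x7F                       # long form: next n bytes hold the length
    if n == 0 or n > 4 or len(blob) < 2 + n:
        raise ValueError("unsupported or truncated DER length")
    return 2 + n + int.from_bytes(blob[2:2 + n], "big")


def trailing_cert_data(win_cert: bytes) -> bytes:
    """Bytes inside dwLength that follow the PKCS#7 blob."""
    dw_length, _revision, cert_type = struct.unpack_from("<IHH", win_cert, 0)
    if cert_type != WIN_CERT_TYPE_PKCS_SIGNED_DATA:
        raise ValueError("not a PKCS#7 WIN_CERTIFICATE entry")
    payload = win_cert[8:dw_length]        # dwLength counts the 8-byte header too
    return payload[der_total_length(payload):]


def has_nonzero_padding(win_cert: bytes) -> bool:
    """True if anything other than zero bytes follows the signature."""
    return any(trailing_cert_data(win_cert))
```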

For instance, an old “offline installer” for Chrome used this trick to embed tracking data.

In December 2013, Microsoft announced that some developers had foolishly used this trick to store URLs of code that would be downloaded and installed by a signed “stub” installer. The bad guys noticed that they could edit these “stub” installers, changing the embedded URLs to point to malware, and the signature of the stub wouldn’t change. The file would appear legitimate and would pass all Authenticode checks, and when run, would proceed to pwn the victim’s computer.

Microsoft announced that this trick would be blocked in a future update by changing Authenticode verification to treat any file with non-null bytes after the certificates as unsigned. Originally, the plan was to enable the stricter verification by default in June 2014, but after evaluating the compatibility impact, Microsoft decided not to turn on the restriction by default; instead, a registry value (EnableCertPaddingCheck) must be set to enable enforcement.
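To check whether enforcement is on for a given machine, a read-only sketch like the following looks for the EnableCertPaddingCheck value. It assumes the location Microsoft documented for this setting, HKLM\Software\Microsoft\Cryptography\Wintrust\Config, with a value of "1" meaning enabled; on 64-bit Windows, Microsoft’s guidance also set the same value under the Wow6432Node view for 32-bit processes, which this sketch does not read. Confirm the key against Microsoft’s current documentation before relying on it.

```python
import sys
from typing import Optional

# Assumed location of the opt-in padding check (verify against Microsoft's documentation).
WINTRUST_CONFIG = r"Software\Microsoft\Cryptography\Wintrust\Config"


def cert_padding_check_enabled() -> Optional[bool]:
    """True/False on Windows; None on other platforms, which have no registry to read."""
    if sys.platform != "win32":
        return None
    import winreg  # Windows-only standard library module
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, WINTRUST_CONFIG, 0,
                            winreg.KEY_READ | winreg.KEY_WOW64_64KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, "EnableCertPaddingCheck")
    except OSError:
        return False  # key or value absent: enforcement is off
    return str(value).strip() == "1"
```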

Unvalidated Attributes

You can imagine my surprise when Dropbox announced in early August 2014 that they were sending signed personalized installers to users. Even with the EnableCertPaddingCheck registry key set, the Dropbox client’s signature was deemed valid. How was this possible?

Microsoft’s blog post provides a hint:

Although this change prevents one form of this unsafe practice, it is not capable of preventing all such forms; for example, an application developer can place unverified data within the PKCS #7 blob itself which will not be taken into account when verifying the Authenticode signature.

Looking back at the Authenticode specification, we see:

unauthenticatedAttributes

If present, this field contains an Attributes object that in turn contains a set of Attribute objects. In Authenticode, this set contains only one Attribute object, which contains an Authenticode timestamp.

The last sentence of that definition is a bit misleading: while Microsoft might have expected only a timestamp to be stored there, in practice a signer can put anything they want there. So they do.

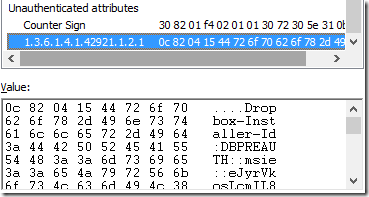

In Windows Explorer, right-click DropboxInstaller.exe and choose Properties. Click the Digital Signatures tab and click the Details button. On the Advanced tab, scroll to the bottom. In addition to the attribute “Counter Sign” (which is a cryptographically-signed timestamp, as described in my original post), there’s an attribute whose OID (1.3.6.1.4.1.42921.2.1) lacks a friendly name. That attribute contains a 1KB block of unsigned data, in the format described in Dropbox’s post.

The GoToWebinarLauncher.exe installer uses the same trick, with a different OID (1.3.6.1.4.1.3845.3.9876.1.1.1) and a 6,840-byte data block.
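To find an attribute like these programmatically, a real ASN.1 parser is the right tool, but a crude sketch makes the idea concrete: DER-encode the attribute’s OID and search the PKCS#7 blob for those bytes. The helper names are hypothetical, and a byte search can in principle match by coincidence, so treat a hit as a hint rather than proof.

```python
def der_encode_oid(dotted: str) -> bytes:
    """DER-encode a dotted OID string (tag 0x06, short-form length)."""
    arcs = [int(part) for part in dotted.split(".")]
    if len(arcs) < 2 or arcs[0] > 2 or (arcs[0] < 2 and arcs[1] >= 40):
        raise ValueError("invalid OID")
    body = bytearray()
    # The first two arcs share one value; every value is written base-128,
    # most significant group first, with the high bit set on all but the last byte.
    for value in [40 * arcs[0] + arcs[1]] + arcs[2:]:
        groups = [value & 0x7F]
        value >>= 7
        while value:
            groups.append(0x80 | (value & 0x7F))
            value >>= 7
        body += bytes(reversed(groups))
    if len(body) > 127:
        raise ValueError("OID too long for this short-form sketch")
    return bytes([0x06, len(body)]) + bytes(body)


def find_oid(pkcs7_blob: bytes, dotted: str) -> int:
    """Offset of the OID's encoding in the blob, or -1 (a hint, not a parse)."""
    return pkcs7_blob.find(der_encode_oid(dotted))
```

Call `find_oid` on the PKCS#7 bytes from the file’s certificate table with "1.3.6.1.4.1.42921.2.1" (Dropbox) or "1.3.6.1.4.1.3845.3.9876.1.1.1" (GoToWebinar); the unsigned data block sits in the attribute value that follows the OID.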

Is Unverified Data Safe?

Embedding unverified data into signed executables is absolutely a way to shoot yourself in the foot, but it is possible to do it securely. If you plan to go this route, you must threat model and penetration test your approach to ensure that none of your code relying on such data trusts it without validating it. When you sneak data in behind Authenticode, Windows no longer validates the authenticity of that data; you must do so yourself.

Best Practices

The following are some best practices to consider:

- Don’t do this at all. Getting it right is very hard, and Windows may decide to break you in the future.

- Embed a signature within the data block yourself. If it doesn’t validate when your code examines it, reject the data.

- Do not embed private data in the block; the user may share the installer or fail to protect it because they don’t realize it contains their private data.

- If the data contains URLs to other code, validate that code’s signature when it is downloaded.
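The last bullet is where the December 2013 attack bit: the stub trusted a URL taken from unverified data. Here is a minimal sketch of one defense, assuming the stub only ever installs one known payload: pin that payload’s SHA-256 inside the signed, hashed part of the stub (not in the unverified data block) and refuse anything that doesn’t match. This swaps signature validation for simpler hash pinning; if the payload must change without reshipping the stub, verifying the downloaded file’s own Authenticode signature (for example with WinVerifyTrust) is the more flexible option. The function names are hypothetical.

```python
import hashlib


def payload_matches_pin(payload: bytes, pinned_sha256_hex: str) -> bool:
    """Compare the payload's SHA-256 with a digest compiled into the signed stub."""
    return hashlib.sha256(payload).hexdigest() == pinned_sha256_hex.lower()


def require_pinned_payload(payload: bytes, pinned_sha256_hex: str) -> bytes:
    """Return the payload only if it matches the pin; otherwise refuse it."""
    if not payload_matches_pin(payload, pinned_sha256_hex):
        raise ValueError("downloaded payload does not match the pinned SHA-256; refusing to run it")
    return payload
```

With the pin in the hashed region, an attacker who edits the URL in the unsigned block can redirect the download, but the stub will reject whatever comes back.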

MD5

Last year, I sent an email to the Copilot team noting that they were still using the MD5 hash algorithm when signing their code. I pointed out that switching to the more secure SHA-1 algorithm would probably be a trivial matter of adding a command-line argument to their existing signing command (e.g. -a sha1).

Unfortunately, they haven’t made this change yet [Update: They moved to SHA1 on 9/4/2014]; while they haven’t told me why, my guess is that it’s related to the architecture they invented to dynamically sign the installers that they generate.

Why does this matter? Because MD5 is scary broken and should not be used.

A digital signature is only as strong as the hash algorithm to which the signature was applied. If a hash algorithm is broken, signed hashes could be repurposed by bad guys.

When it comes to hashing, there are two types of attacks:

In a collision attack, an attacker can carefully craft two different programs (one good, and one evil) that hash to the same value. In Authenticode, this would only be interesting if he could convince someone else to sign the “good” program; that signature he could then apply to the “evil” variant. This attack is possible against MD5 today.

In a second-preimage attack, an attacker takes anyone else’s insecurely-signed legitimate program as input and generates a new evil program that hashes to the same value as the legitimate file. He can then copy the signature to his file, and it will appear that the evil file was signed by the good guy. As far as we know, there aren’t cheap MD5 second-preimage attacks yet, but there will be.
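The two attacks can be stated compactly. Treat the Authenticode signature over a file m as a signature over its digest, σ = Sign_sk(H(m)); verifying any file m′ only ever looks at H(m′):

```latex
\begin{aligned}
&\textbf{Verification: } \mathrm{Verify}_{pk}\big(H(m'), \sigma\big) \text{ succeeds whenever } H(m') = H(m). \\
&\textbf{Collision: } \text{attacker picks both } m_{\text{good}} \neq m_{\text{evil}} \text{ with } H(m_{\text{good}}) = H(m_{\text{evil}}), \\
&\qquad \text{gets } m_{\text{good}} \text{ signed, then reuses } \sigma \text{ on } m_{\text{evil}}. \\
&\textbf{Second preimage: } \text{given someone else's fixed } m, \text{ attacker finds } m' \neq m \text{ with } H(m') = H(m).
\end{aligned}
```

The difference is who chooses the first file: in a collision attack the attacker controls both files, so someone must be tricked into signing one; in a second-preimage attack every file already signed with the weak hash is a target.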

Best Practice: Don’t use MD5.

While much stronger than MD5, SHA1, the default used by most certificates and tools, is starting to show weakness as well. Over the next few years, it's expected that most certificates and signatures will move to using SHA256 or SHA512 as the hash algorithm. To use SHA256 with signtool.exe, specify the /fd SHA256 command line argument. Note that SHA256 Authenticode signatures cannot be created using the older signcode.exe tool, and cannot be verified on Windows XP, even when Service Pack 3 (which supports the use of SHA256 certificates) is installed.
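For an automated signing service, such as the separate backend machine recommended for dynamic signing, a sketch of building that signtool.exe command line might look like this. It assumes signtool.exe is on the PATH of a Windows signing machine and that the PFX file is not password-protected (otherwise add /p). The file names and timestamp URL in the usage note are placeholders, and the /tr and /td switches are only available in newer versions of signtool.

```python
import subprocess
from pathlib import Path


def signtool_sha256_args(exe: Path, pfx: Path, timestamp_url: str) -> list[str]:
    """Build a signtool.exe command line that signs `exe` using SHA256 digests."""
    return [
        "signtool", "sign",
        "/fd", "SHA256",        # file digest algorithm for the Authenticode signature
        "/f", str(pfx),         # PFX with certificate + private key (assumes no password)
        "/tr", timestamp_url,   # RFC 3161 timestamp server
        "/td", "SHA256",        # digest algorithm for the timestamp request
        str(exe),
    ]


def sign_with_sha256(exe: Path, pfx: Path, timestamp_url: str) -> None:
    # check=True raises CalledProcessError if signtool reports a failure.
    subprocess.run(signtool_sha256_args(exe, pfx, timestamp_url), check=True)
```

For example, `sign_with_sha256(Path("installer.exe"), Path("codesign.pfx"), "http://timestamp.example.com")` would sign a hypothetical installer; keeping the argument list in one function makes it easy to audit that no code path silently falls back to a weaker digest.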

-Eric Lawrence

MVP

Comments

Anonymous

September 04, 2014

What is fun is that MD5 is still used for VBA digital signatures with no other option.

Anonymous

September 05, 2014

And I don't think Vista and Win7 can verify SHA256 Authenticode signatures (not certificates) yet either. MS once claimed they were working on a hotfix to add this support.

Anonymous


October 16, 2014

Note: Today I observed that Opera 25's installer makes use of the post-PKCS#7 padding trick, which is a clue that this is likely the reason that Microsoft has not enabled the restriction by default. Opera is considered a "Competitive Browser", and as such Microsoft is legally restricted from making changes that might impact its install experience.