Be a testability champion!

When a product is in its design phase, test should be involved and comment on the designs. The job of the professional software tester at this point is to give actionable feedback on the testability of the product. Testability can be a nebulous concept. It’s more than just sprinkling in some “test hooks” near the end of coding. Everyone on the product team or feature crew should have a good idea of what makes software testable. This often isn’t the case. The test owner must understand and champion testability. If your teammates don’t have a solid understanding, it’s up to you to educate them. If you don’t have a solid understanding, here is a starting point.

Testability is closely related to accessibility, maintainability and manageability. All of these desirable traits are highly correlated. Making sure your product is highly testable will pay dividends for the life of the code across many areas.

Let’s take a look at some things that make a product testable. I will be talking about APIs over and over. Think of a product in terms of its APIs instead of its implementation and components. That mindset is an important key to testability.

Testability flows from clear specifications.

Product designers often start with personas and scenarios as the first specification document. From these come the features of the product. Once the feature set is more or less set, the components are designed. The first thing that will allow the test team to succeed is having clear specifications. It’s often the case that the specs are never really completed. To some extent they do need to be “living documents” but you can give your testability a boost by making sure they are reviewed for quality and signed off before any coding milestones.

Specs should be single purpose.

You should be able to clearly answer “What kind of specification is this?” Some usual answers would be scenario, feature, component, product state description (state machine) or other. You should ensure that every spec has a clear purpose and fits clearly into a specification category. A specification that mixes feature definition with component design makes things unclear. Make sure the purpose of the specification is clear and specific. Make sure that mixed-up specifications are split into smaller discrete chunks. Make sure that dependencies are well mapped out.

APIs and test hooks should be in the component designs.

A well designed product has APIs defined for each layer to communicate with its neighbors. It also has logging and performance tracking built in. Make sure the spec lists every API the component implements and every API it calls, covering every communication it will be involved with. Make sure your product has a consistent logging plan and that key performance metrics are called out in advance. Make sure the developers have a consistent plan for exposing this information across the product. WMI, ETL, performance counters and system logs all work well for many of these tasks. A product that uses an ad-hoc mix of them is a monster to test effectively.
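As a rough illustration of what a consistent logging plan can look like in code, here is a minimal sketch using Python's standard `logging` module as a stand-in for whichever mechanism your product picks. The helper name `get_component_logger`, the `product` namespace and the format string are assumptions made for this example, not part of any particular product.

```python
import io
import logging

# One format for the whole product (an assumed convention for this sketch).
LOG_FORMAT = "%(asctime)s %(name)s %(levelname)s %(message)s"


def get_component_logger(component: str, stream=None) -> logging.Logger:
    """Return a logger under the shared 'product' namespace.

    Every component calls this helper instead of configuring logging
    itself, so tests can parse all output with a single known format.
    """
    root = logging.getLogger("product")
    if not root.handlers:
        handler = logging.StreamHandler(stream)
        handler.setFormatter(logging.Formatter(LOG_FORMAT))
        root.addHandler(handler)
        root.setLevel(logging.INFO)
    return root.getChild(component)


# Usage: a test captures the shared stream and checks one predictable format.
buffer = io.StringIO()
log = get_component_logger("storage", stream=buffer)
log.info("cache_hit_ratio=%.2f", 0.93)
```

Because every component goes through the same helper, a test only needs one parser to read metrics such as `cache_hit_ratio` from any part of the product.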

API specifications should be completed before any coding starts.

It’s not enough to say “this component will implement an API that some other components will call” and then leave this to be grown organically as you write code. In order to have a testable product you need to have the APIs nailed down before anyone starts writing component code. Testing an organic API is a serious challenge. It’s very time consuming to reverse engineer the product. These kinds of APIs are also liable to change a lot as new features are added to the code. Testing will always be well behind in this scenario. Spend the time to lock your APIs down as much as possible at the start of your product coding. Only change them with serious discussion and testing. Make sure the API specifications are specific and technical. You should be able to write an emulator for the API based on nothing but the API specification documents.

Loosely coupled software is testable software.

People pay a lot of lip service to loosely coupled designs. Everyone aspires to create and code them, but software can still end up tightly coupled. Loosely coupled software is easy to diagram. It’s a collection of components where each component only has one path to communicate with other components. If you are having a hard time making a clean diagram of your product you may have coupling problems.

Loosely coupled means “one path” for component connections.

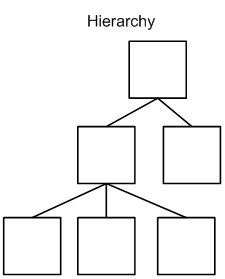

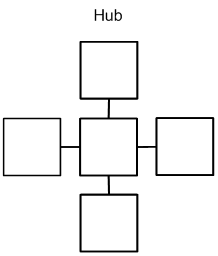

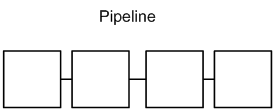

Books have been written on what loosely coupled designs look like. Here are some diagrams that show some good designs. One useful way to think about the concept at a high level is to think about data flow. From any component to any other there should be exactly one path for data to flow. Here are some common patterns that work well. You can mix and match them as well, just make sure you follow the one path rule.

Some good loosely coupled patterns

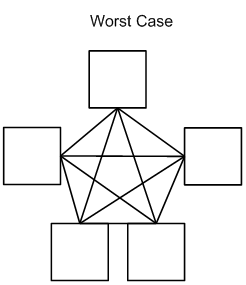
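The one path rule can also be checked mechanically if you write your design down as a list of components and links. The sketch below is one possible reading of the rule, assuming links are treated as undirected: exactly one path between every pair of components is the same as the graph being a tree. The function name and the component names are hypothetical.

```python
from collections import defaultdict


def follows_one_path_rule(components, links):
    """Return True if every pair of components is joined by exactly one path.

    Treating links as undirected, that is the same as the graph being a
    tree: connected, with exactly len(components) - 1 distinct links.
    """
    components = set(components)
    unique_links = {frozenset(link) for link in links}
    if any(len(link) != 2 or not link <= components for link in unique_links):
        return False  # self-link, or a link to an unknown component
    if len(unique_links) != len(components) - 1:
        return False  # too many links means a second path; too few means a gap
    neighbors = defaultdict(set)
    for a, b in unique_links:
        neighbors[a].add(b)
        neighbors[b].add(a)
    # Depth-first walk to confirm every component is reachable.
    start = next(iter(components))
    seen, stack = {start}, [start]
    while stack:
        for nxt in neighbors[stack.pop()] - seen:
            seen.add(nxt)
            stack.append(nxt)
    return seen == components


parts = ["ui", "logic", "storage", "disk"]
pipeline = [("ui", "logic"), ("logic", "storage"), ("storage", "disk")]
print(follows_one_path_rule(parts, pipeline))                     # True
print(follows_one_path_rule(parts, pipeline + [("ui", "disk")]))  # False
```

A check like this could run against the component list in your design documents, so that an extra link shows up in review rather than in test.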

Products that lack direction and grow up from smaller bits over time can get really messy. If your product looks like the next picture, your first priority should be to refactor all the parts into discrete loosely coupled chunks. Until you do, test cost will be very high. The product will also be a serious challenge to maintain and update. This diagram may look like an exaggeration. Sadly, actual software products written by professionals can end up like this for real. Things don't have to get even close to this bad before testability is out the window.

The worst case coupling pattern

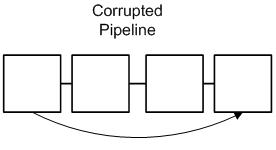

You should watch out for even small violations of the “one path” principle. For example, you might have a pipeline and realize that the end components need to transfer some information.

A corrupted pipeline

Just adding this one innocent-looking extra link is a big problem. In the pipeline model, data flows from one end to the other. Components should only need to know how to communicate with their neighbors. I have seen automated test systems violate this principle by embedding test case IDs into test code, for example. Changing your test case repository would require you to recompile all your test code! The same thing can happen in any system with the pipeline pattern. It may seem tempting to violate the one path rule. In some senses it is the simplest solution to tactical problems. However, it will cause you problems strategically. Don’t be tempted by short term gains to flush your long term return on investment down the toilet.

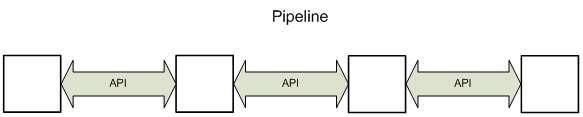
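For the test case ID example, one way to avoid the extra link is to keep the mapping between tests and repository IDs in data that is loaded at run time. This is only a sketch under assumed names: the JSON layout, the ID `TC-1042` and the functions below are invented for illustration.

```python
import json

# Hypothetical mapping. In practice this would live in a file owned by the
# reporting layer, not inside the test code.
MAPPING_JSON = '{"check_login_rejects_empty_password": "TC-1042"}'


def load_case_ids(text: str) -> dict:
    """Parse the test-name -> repository-ID mapping."""
    return json.loads(text)


def report(test_name: str, passed: bool, case_ids: dict) -> dict:
    """Build a result record; the test itself never mentions the ID."""
    return {
        "case_id": case_ids.get(test_name, "UNMAPPED"),
        "test": test_name,
        "passed": passed,
    }


def check_login_rejects_empty_password() -> bool:
    # Stand-in for a real check against the product.
    return "" != "secret"


case_ids = load_case_ids(MAPPING_JSON)
name = check_login_rejects_empty_password.__name__
result = report(name, check_login_rejects_empty_password(), case_ids)
print(result["case_id"])  # TC-1042
```

Moving to a different test case repository then means replacing the mapping data, while the test code stays untouched.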

Loosely coupled software means clear APIs.

The previous diagrams show the connections as tiny little lines. It’s a useful way to show the relationships in a compact fashion. However, this visually minimizes the importance of these connections. You must make sure the APIs are first class citizens in your designs. It’s a good idea to ask to have the diagrams in your specifications done in a style with the APIs given as much importance as the components.

A pipeline with the APIs emphasized

Specifications are not complete unless they are very specific about how each component will communicate. Clear APIs are complete, technical and locked down. Again, you should know before you start coding all the APIs you need to implement or call. The APIs need to be specified in sufficiently technical language that there is no doubt that consumers and producers can independently create code to implement the API. If your APIs have sections marked “TBD” (to be determined) you aren’t ready to start coding.

It’s easy to emulate systems that are loosely coupled.

Writing emulators is a really good way to get a lot of test automation return on investment. When the design is loosely coupled and the APIs are well defined, writing emulators is no harder than it should be. The emulators shouldn’t duplicate what the product does. Just do something useful and debuggable. Imagine an interface that just concatenates input values and returns them. Using that emulator for an interface, you can easily verify that the user of that interface is sending the correct data. Take a password dialog of some kind, for example. If you have a password verification emulator that just returns the entered password, you can easily (and automatically) verify that the form is passing the password as typed. You can also verify that both layers enforce important rules like password complexity independently.
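The password example might look something like the following sketch. Both classes are hypothetical: `EchoPasswordVerifier` plays the emulator, and `PasswordForm` stands in for whatever dialog code you are actually testing.

```python
class EchoPasswordVerifier:
    """Emulator for a password verification interface.

    It does no real verification. It records and returns exactly what it
    was given, so a test can see what the caller sent.
    """

    def __init__(self):
        self.received = []

    def verify(self, username: str, password: str) -> str:
        self.received.append((username, password))
        return password


class PasswordForm:
    """Stand-in for the dialog under test (hypothetical)."""

    def __init__(self, verifier):
        self._verifier = verifier

    def submit(self, username: str, typed_password: str) -> str:
        # The form should pass the password through unchanged.
        return self._verifier.verify(username, typed_password)


emulator = EchoPasswordVerifier()
form = PasswordForm(emulator)
typed = "  Tr1cky pass\tword!  "  # whitespace a careless form might trim
assert form.submit("alice", typed) == typed
assert emulator.received == [("alice", typed)]
```

The emulator is a few lines long because the interface is small and well defined, which is the payoff of a loosely coupled design.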

Tightly coupled designs often aren’t worth automating. If your design is tightly coupled it doesn’t leave you with a good place to “stand” when you run the tests. You are pretty much limited to unit tests and scenario testing. You can’t break the product up into discrete chunks without emulating most of it. Imagine you wanted to test one of the components in the “death star” coupling pattern. You have to emulate every other part of the system before it will even run. It is possible to do this. However, the time spent on creating such a large emulator won’t have a good pay-off. If you are stuck with a tightly coupled design your automation will never be as simple, fast, deterministic, diagnostic and maintainable as it should be.

Accessibility is testability.

Accessible software means a lot of things. It should follow the design guidelines for the environment. It should work with tools that make programs easier to use for people that need help. Compatibility with magnifiers, screen readers and design guidelines all contribute to good accessibility. Globalization and localization are also key accessibility features. If you can’t easily translate your product for new markets it will also be harder to test.

Test automation can use accessibility features.

When your product follows good design guidelines for accessibility you gain extra benefits. For example the same technology that makes screen readers work can be used to verify content and find controls. Having a consistent way to locate controls and read information from the UI makes automatic verification more cost effective. Using a method that also enables accessibility means you get two benefits for one coding cost. That’s a great deal.
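To make the idea concrete without tying it to a specific accessibility framework, here is a small sketch over a made-up accessibility tree. The `Node` type, the roles and the control names are all assumptions; a real test would get this tree from the platform's accessibility API.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Node:
    """One element in a hypothetical accessibility tree."""
    role: str
    name: str
    value: str = ""
    children: List["Node"] = field(default_factory=list)


def walk(node: Node) -> Iterator[Node]:
    """Yield the node and all of its descendants, depth first."""
    yield node
    for child in node.children:
        yield from walk(child)


def find(root: Node, role: str, name: str) -> Optional[Node]:
    """Locate a control the way a screen reader would: by role and name."""
    return next((n for n in walk(root) if n.role == role and n.name == name), None)


window = Node("window", "Sign in", children=[
    Node("edit", "User name", value="alice"),
    Node("edit", "Password"),
    Node("button", "OK"),
    Node("button", ""),  # unnamed control: bad for users and for tests
])

assert find(window, "edit", "User name").value == "alice"
unnamed = [n for n in walk(window) if not n.name]
print(len(unnamed))  # 1
```

The same walk that lets automation find the "User name" field also flags the unnamed button, which is an accessibility bug and a testability bug at once.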

“Hard coded” means hard to code automation.

Test automation can easily read resource files and modify them for tests. If every string in the product is localizable, you can substitute useful test strings automatically. You can walk the file and do automatic boundary checking on every single resource. With hard coded data and strings you have to recompile or do fancy injection for every test you want to run. Not only that but it might be almost impossible to prove you tested all the strings.
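A sketch of what walking a resource table can look like is shown below. The resource keys, the 40-character limit and the padding scheme are assumptions chosen for illustration; a real product would load its own resource file format.

```python
MAX_LABEL_LENGTH = 40  # assumed UI limit, for illustration only

# Stand-in for strings loaded from a resource file.
resources = {
    "login.title": "Sign in",
    "login.user": "User name",
    "login.error": "The password you entered is incorrect.",
}


def pseudo_localize(text: str) -> str:
    """Wrap and pad a string so truncation and hard-coded text stand out."""
    padding = "~" * (len(text) // 3)  # translations are often longer
    return f"[{text}{padding}]"


def boundary_report(table: dict, limit: int) -> list:
    """Return the keys whose pseudo-localized value exceeds the limit."""
    return sorted(k for k, v in table.items() if len(pseudo_localize(v)) > limit)


test_resources = {k: pseudo_localize(v) for k, v in resources.items()}
print(test_resources["login.title"])                 # [Sign in~~]
print(boundary_report(resources, MAX_LABEL_LENGTH))  # ['login.error']
```

Any string that appears in the running product without the brackets was not loaded from the resource table, which is a quick way to spot hard coded text.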

Testability sets up the whole team for success.

There are a lot of reasons to want a product with good testability. When you achieve it, you can graduate from finding bugs to measuring product quality. This is the difference between good testers and excellent testers and also the difference between “Me too” products and excellent ones.

Without good testability in the product the best the testers can do is “go fishing” for bugs. This approach works but it scales poorly and it’s an expensive way to create high quality software. If you don’t have the budget to do a lot of testing, you end up shipping a product your customers might wish you hadn’t.

The test owner must understand and champion testability. The entire product team wants the customer to have a great experience with the product. Everyone on the team is focused on this mission in a different way. Building testability into the product is an excellent way for test to champion the customer’s needs.

Get your team dedicated to pushing testability into every aspect of your product. You are then set up to ship high quality software on time and on budget.

Comments

- Anonymous

November 11, 2007

Dustin, this is great stuff! I too am a strong proponent of getting in there early and influencing designs to be testable designs. Thank you.