Do waiting or suspended tasks tie up a worker thread?

I had a discussion the other day with someone about worker threads and their relation to tasks, and I thought a quick demo might be worthwhile. When a task is waiting on something (whether it be a timer or a resource like a lock), it is still tying up a worker thread. A worker thread is assigned to a task for the duration of that task. For most queries, this means for the duration of the user’s request. Let’s look at two examples below.

We can verify that the worker thread is tied up by checking the state the worker is in and confirming that it is assigned to our task, using the following two DMVs:

    select * from sys.dm_os_workers
    select * from sys.dm_os_tasks

If we start a 5 minute delay to tie up a worker thread using session 55 as follows:

    waitfor delay '00:05:00'

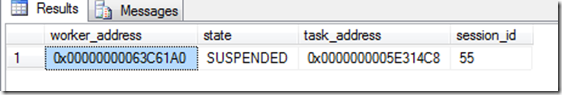

Then we can use the following to verify our worker thread is assigned to our task and suspended while it waits on the timer:

    select w.worker_address, w.state, w.task_address, t.session_id
    from sys.dm_os_tasks t
    inner join sys.dm_os_workers w on t.worker_address = w.worker_address
    where t.session_id = 55

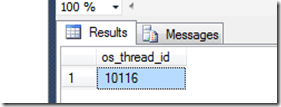

However, we can also get the worker’s OS thread ID and view its call stack to see that it is not merely idle and waiting for work to do, but is tied up waiting for our statement to complete. For the above worker thread running session 55, we can run the following to get the OS thread ID:

    select os_thread_id
    from sys.dm_os_tasks t
    inner join sys.dm_os_workers w on t.worker_address = w.worker_address
    inner join sys.dm_os_threads o on o.worker_address = w.worker_address
    where t.session_id = 55

In this case, the query returns OS thread ID 8376. Now we can get the stack trace of that thread:

    kernel32.dll!SignalObjectAndWait+0x110
    sqlservr.exe!SOS_Scheduler::Switch+0x181
    sqlservr.exe!SOS_Scheduler::SuspendNonPreemptive+0xca
    sqlservr.exe!SOS_Scheduler::Suspend+0x2d
    sqlservr.exe!SOS_Task::Sleep+0xec
    sqlservr.exe!CStmtWait::XretExecute+0x38b
    sqlservr.exe!CMsqlExecContext::ExecuteStmts<1,1>+0x375
    sqlservr.exe!CMsqlExecContext::FExecute+0x97e
    sqlservr.exe!CSQLSource::Execute+0x7b5
    sqlservr.exe!process_request+0x64b
    sqlservr.exe!process_commands+0x4e5
    sqlservr.exe!SOS_Task::Param::Execute+0x12a
    sqlservr.exe!SOS_Scheduler::RunTask+0x96
    sqlservr.exe!SOS_Scheduler::ProcessTasks+0x128
    sqlservr.exe!SchedulerManager::WorkerEntryPoint+0x2d2
    sqlservr.exe!SystemThread::RunWorker+0xcc
    sqlservr.exe!SystemThreadDispatcher::ProcessWorker+0x2db
    sqlservr.exe!SchedulerManager::ThreadEntryPoint+0x173
    MSVCR80.dll!_callthreadstartex+0x17
    MSVCR80.dll!_threadstartex+0x84
    kernel32.dll!BaseThreadInitThunk+0xd
    ntdll.dll!RtlUserThreadStart+0x1d

From the stack trace above (note the process_commands, CStmtWait::XretExecute, SOS_Task::Sleep and SOS_Scheduler::Switch frames), we can see this is a worker thread that is processing commands (our WAITFOR DELAY statement). It has entered a sleep as a result of our WAITFOR DELAY call, and SQL Server OS has switched it off the scheduler since there isn’t anything it can do for 5 minutes. Once the timer expires, the thread will be signaled and can be placed back into the RUNNABLE queue in case there is any more work for it to do.
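The same wait can also be seen without pulling a stack trace. The query below is a minimal sketch, assuming the WAITFOR DELAY is still running on session 55 (the session ID used in this example; yours will differ). It joins sys.dm_os_waiting_tasks back to the worker, so the wait type and the worker state appear side by side.

```sql
-- Assumes session 55 is still executing WAITFOR DELAY; substitute your own session ID.
select wt.session_id,
       wt.wait_type,          -- for this example we would expect WAITFOR
       wt.wait_duration_ms,   -- how long the task has been waiting so far
       w.worker_address,
       w.state                -- worker state while the task waits
from sys.dm_os_waiting_tasks wt
inner join sys.dm_os_tasks t on t.task_address = wt.waiting_task_address
inner join sys.dm_os_workers w on w.worker_address = t.worker_address
where wt.session_id = 55
```

A row coming back here with a worker_address attached is another way of seeing that the waiting task has not given its worker back.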

So our thread is in effect tied up and can’t do any work for 5 minutes. Extensive use of WAITFOR could be a good way to choke the system. What about normal resources? What if we are waiting to obtain a shared lock (LCK_M_S)? Same story… Let’s look…

We can create a simple table and do an insert without closing the transaction to hold the locks…

    create table Customers
    (
        ID INT IDENTITY(1,1) PRIMARY KEY CLUSTERED,
        FIRSTNAME NVARCHAR(30),
        LASTNAME NVARCHAR(30)
    ) ON [PRIMARY]
    GO

    -- The transaction is intentionally left open so the locks stay held
    BEGIN TRAN
    INSERT INTO CUSTOMERS (FIRSTNAME, LASTNAME) VALUES ('John', 'Doe')
    INSERT INTO CUSTOMERS (FIRSTNAME, LASTNAME) VALUES ('Jane', 'Doe')
    INSERT INTO CUSTOMERS (FIRSTNAME, LASTNAME) VALUES ('George', 'Doe')

Now from another session (session 56), we can try to read that table – which will block hopelessly…

    select * from Customers

Once again, we use the query from above to get our OS Thread ID:

    select os_thread_id
    from sys.dm_os_tasks t
    inner join sys.dm_os_workers w on t.worker_address = w.worker_address
    inner join sys.dm_os_threads o on o.worker_address = w.worker_address
    where t.session_id = 56

And now we are ready to get the stack for this thread:

    kernel32.dll!SignalObjectAndWait+0x110
    sqlservr.exe!SOS_Scheduler::Switch+0x181
    sqlservr.exe!SOS_Scheduler::SuspendNonPreemptive+0xca
    sqlservr.exe!SOS_Scheduler::Suspend+0x2d
    sqlservr.exe!EventInternal<Spinlock<153,1,0> >::Wait+0x1a8
    sqlservr.exe!LockOwner::Sleep+0x1f7
    sqlservr.exe!lck_lockInternal+0xd7a
    sqlservr.exe!GetLock+0x1eb
    sqlservr.exe!BTreeRow::AcquireLock+0x1f9
    sqlservr.exe!IndexRowScanner::AcquireNextRowLock+0x1e1
    sqlservr.exe!IndexDataSetSession::GetNextRowValuesInternal+0x1397
    sqlservr.exe!RowsetNewSS::FetchNextRow+0x159
    sqlservr.exe!CQScanRowsetNew::GetRowWithPrefetch+0x47
    sqlservr.exe!CQScanTableScanNew::GetRowDirectSelect+0x29
    sqlservr.exe!CQScanTableScanNew::GetRow+0x71
    sqlservr.exe!CQueryScan::GetRow+0x69
    sqlservr.exe!CXStmtQuery::ErsqExecuteQuery+0x602
    sqlservr.exe!CXStmtSelect::XretExecute+0x2dd
    sqlservr.exe!CMsqlExecContext::ExecuteStmts<1,1>+0x375
    sqlservr.exe!CMsqlExecContext::FExecute+0x97e
    sqlservr.exe!CSQLSource::Execute+0x7b5
    sqlservr.exe!process_request+0x64b
    sqlservr.exe!process_commands+0x4e5
    sqlservr.exe!SOS_Task::Param::Execute+0x12a
    sqlservr.exe!SOS_Scheduler::RunTask+0x96
    sqlservr.exe!SOS_Scheduler::ProcessTasks+0x128
    sqlservr.exe!SchedulerManager::WorkerEntryPoint+0x2d2
    sqlservr.exe!SystemThread::RunWorker+0xcc
    sqlservr.exe!SystemThreadDispatcher::ProcessWorker+0x2db
    sqlservr.exe!SchedulerManager::ThreadEntryPoint+0x173
    MSVCR80.dll!_callthreadstartex+0x17
    MSVCR80.dll!_threadstartex+0x84
    kernel32.dll!BaseThreadInitThunk+0xd
    ntdll.dll!RtlUserThreadStart+0x1d

Again, our worker thread processes our command (the SELECT query) and goes into a sleep. Notice that this “Sleep” is just the name of a method on the “LockOwner” class; it is not the same sleep as above (SOS_Task::Sleep), which is bound to a timer. The SOS scheduler switches us off and we wait to be signaled that our lock is available. This thread is “tied up”, waiting to continue.
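The lock wait can also be confirmed from the DMVs rather than the debugger. The query below is a minimal sketch, assuming the SELECT on session 56 is still blocked behind the open transaction (the session IDs are the ones from this example; yours will differ).

```sql
-- Assumes session 56 is still blocked; substitute your own session ID.
select wt.session_id,
       wt.wait_type,            -- for this example we would expect LCK_M_S
       wt.wait_duration_ms,
       wt.blocking_session_id,  -- the session holding the open transaction
       wt.resource_description, -- describes the lock resource being waited on
       w.worker_address,
       w.state
from sys.dm_os_waiting_tasks wt
inner join sys.dm_os_tasks t on t.task_address = wt.waiting_task_address
inner join sys.dm_os_workers w on w.worker_address = t.worker_address
where wt.session_id = 56
```

Committing or rolling back the open transaction in the first session releases the locks, after which the blocked SELECT completes and its row disappears from this result.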

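This is why massive blocking cases can eventually lead to worker thread depletion and waits on THREADPOOL: every blocked or waiting task keeps hold of its worker thread, so once all workers are held there are none left to pick up new requests.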
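To get a rough idea of how close an instance is to that point, the worker counts can be compared against the configured ceiling. The queries below are a minimal sketch, not a complete monitoring solution. Filtering the schedulers on status = 'VISIBLE ONLINE' is my assumption about which schedulers are of interest (the ones that run user requests).

```sql
-- Configured ceiling on worker threads for the instance.
select max_workers_count
from sys.dm_os_sys_info

-- Workers currently created and active, plus tasks queued waiting for a worker.
select sum(current_workers_count) as current_workers,
       sum(active_workers_count)  as active_workers,
       sum(work_queue_count)      as tasks_waiting_for_a_worker
from sys.dm_os_schedulers
where status = 'VISIBLE ONLINE'

-- Cumulative THREADPOOL waits since the wait statistics were last cleared.
select wait_type, waiting_tasks_count, wait_time_ms, max_wait_time_ms
from sys.dm_os_wait_stats
where wait_type = 'THREADPOOL'
```

If current_workers is approaching max_workers_count while tasks_waiting_for_a_worker is above zero, new requests are likely queuing for a worker.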

-Jay