Azure Data Services for Big Data

Big data is one of those overused and little-understood terms that occasionally crop up in IT. It has also been extensively overhyped, and yet it’s a pretty simple concept that does have huge potential. A good way to describe it is the three Vs, Volume, Variability and Velocity:

- Volume speaks for itself – often big data is simply too big to store in any but the biggest data centres – for example, few of us could store all of Twitter locally.

- Velocity reflects the rate of change of data, and in this case it’s network capacity that limits your ability to keep up with data as it changes.

- Variability is about the many formats that data now exists in, from images and video to the many semi-structured and unstructured formats from logs and the Internet of Things (IoT). This affects how we store data: in the past we might have spent time writing data into databases, but this forces us to make assumptions about what questions we will ask of that data. With big data, however, it’s not always clear what use will be made of the data when it is stored, and so in many solutions data is stored in its raw form for later processing.

These three Vs mean that big data puts stress on the compute, storage and networking capacity we have, and because its use is uncertain it can be difficult to justify any infrastructure investment for it. This is the main reason that cloud services like Azure make sense in this space. Cloud storage is very cheap and, as we’ll see, compute can be on demand and very scalable, so you just pay for what you need when you need it.

Enough of the theory; how does that play out in Azure?

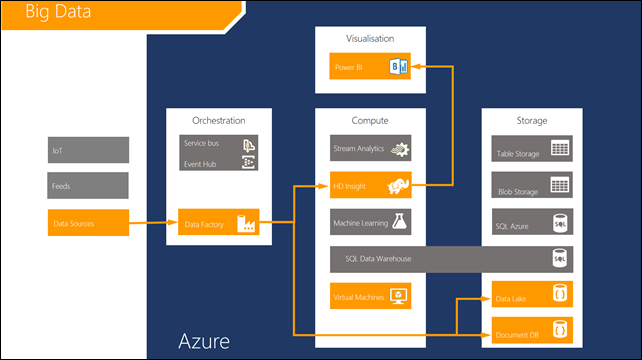

Working backwards, we need somewhere to store all of this data, and at the moment we have four choices for big data:

- Azure Blob storage. This is nothing more than the default storage for Azure; for example, if you create a VM this is where the virtual hard disks are stored. Objects get stored in containers, for which permissions can be set much like in any operating system, except that containers are addressed by URL and must therefore have a valid DNS name, e.g. https://myaccount.blob.core.windows.net/mycontainer

- Azure Table storage. This is a storage mechanism based on key-value pairs, where the key is a unique identifier and the value can be pretty much anything you want and can vary between records in the same table. The above rules about DNS names also apply to table storage.

- Azure DocumentDB. This is a schema-free, database-like NoSQL storage platform based on JSON data. While there is no concept of a transaction, you can write SQL-like queries and the results come back as JSON (there’s an online sandbox where you can try this yourself).

- Azure Data Lake. This is a new file system, compatible with the Hadoop Distributed File System (HDFS), that was announced at Build 2015 and will supersede Azure Blob storage as the default location for big data on Azure. However, it’s only in private preview at the time of writing, so I don’t have more detail I can share.
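To make the point about DNS names concrete, here is a minimal Python sketch that checks a container name and builds the container address shown in the Blob storage bullet. The naming rules encoded here (lowercase letters, digits and single hyphens, 3 to 63 characters for containers; lowercase letters and digits, 3 to 24 characters for accounts) are my reading of the Azure Storage documentation and are worth re-checking; the function names are purely illustrative, not part of any Azure SDK.

```python
import re

# Naming rules as I understand them from the Azure Storage documentation;
# treat them as assumptions and check the current docs before relying on them.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
CONTAINER_RE = re.compile(r"[a-z0-9](?:-?[a-z0-9])*")


def is_valid_container_name(name: str) -> bool:
    """Lowercase letters, digits and single hyphens; 3 to 63 characters."""
    return 3 <= len(name) <= 63 and CONTAINER_RE.fullmatch(name) is not None


def blob_container_url(account: str, container: str) -> str:
    """Build the HTTPS address of a container in Blob storage."""
    if ACCOUNT_RE.fullmatch(account) is None:
        raise ValueError(f"invalid storage account name: {account!r}")
    if not is_valid_container_name(container):
        raise ValueError(f"invalid container name: {container!r}")
    return f"https://{account}.blob.core.windows.net/{container}"


print(blob_container_url("myaccount", "mycontainer"))
```

A name such as `My_Container` would be rejected here, because uppercase letters and underscores cannot appear in a host-style name.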

Having found somewhere on Azure to store data, we need a mechanism to process it, and the industry standard for this is Hadoop. On Azure this is referred to as HDInsight, and while it is essentially a straight port of Hadoop onto Windows or Linux designed to run on Azure, it has a number of benefits:

- Data can be quickly accessed from Azure Blob storage by referring to its location with a wasb:// prefix followed by the DNS entry for the folder where the files to be used exist. Note that this approach allows us to consume all the files in a folder at once, but assumes those files all have the same structure. This makes it ideal for accessing slices of data from logs or feeds, which often get written out from a service in chunks to the same folder.

- You only spin up a cluster when you need it and pay only for the time it exists. What’s clever about this is that you’ll typically process a chunk of data into some other format, say into a database, which will persist after the cluster is destroyed.

- Clusters can be configured programmatically, and part of this process can be to add in additional libraries like Giraph or Storm and to address and use the storage locations needed for a process.

- You aren’t restricted to using HDInsight: you can, if you want, spin up Hadoop virtual machines with tooling from Hortonworks or Cloudera already included (look for these in the VM Depot I have mentioned in previous posts). However, HDInsight means that you don’t have to worry about the underlying operating system or any of the detailed configuration needed to build a cluster from a collection of VMs.
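As a rough illustration of the wasb:// addressing mentioned in the first bullet, the sketch below assembles such a URI in Python. The layout (container, then `@`, then the storage account’s DNS name, then the folder path) is the form I am assuming from the HDInsight documentation; the account, container and folder names are made up, and `wasb_uri` is a hypothetical helper rather than anything HDInsight provides.

```python
def wasb_uri(account: str, container: str, folder: str = "", secure: bool = False) -> str:
    """Build a wasb:// (or wasbs:// for SSL) URI pointing at a folder of blobs.

    Pointing a job at the folder, rather than at a single file, is what lets
    it read every file written there, provided they share one structure.
    """
    scheme = "wasbs" if secure else "wasb"
    path = folder.strip("/")  # tolerate leading or trailing slashes
    return f"{scheme}://{container}@{account}.blob.core.windows.net/{path}"


# Hypothetical folder that a service writes its log chunks into.
print(wasb_uri("myaccount", "mycontainer", "logs/2015/09"))
```

A URI like this is what you would typically hand to a job or query running on the cluster as its input location.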

Creating a cluster in HDInsight is still a manual process, and while we could create one and leave it running, this can get needlessly expensive if we aren’t using it all the time, so many organisations will want to create a cluster, run a job against it and then delete it. Moreover, we might want to do that in line as part of a regular data processing run, and we can do this in HDInsight in one of several ways, for example:

- An HDInsight cluster can be configured and controlled using PowerShell, and it’s also possible to execute jobs and queries against the cluster. This PowerShell can be part of a bigger script that performs other tasks in Azure and in your local data centre. PowerShell scripts can be adapted and run from Azure Automation which has a scheduling and logging service.

- Make use of Azure Data Factory (ADF), which can do everything that is possible with PowerShell but also shows how processes are connected and has a rich logging environment which can be accessed from just a browser. As I mentioned in my last post, ADF can also be used to control batch processing in Azure ML (MAML), as well as connecting to storage, SQL Server and Azure SQL databases, which might be the final home of some analysis done in HDInsight.
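Whichever tool you pick, the create, run, delete pattern has the same shape. The Python sketch below shows that shape only: `InMemoryClusterClient` is a hypothetical stand-in that just records calls, not the HDInsight PowerShell cmdlets or any real Azure API, and the cluster and job names are invented.

```python
from contextlib import contextmanager


class InMemoryClusterClient:
    """Hypothetical stand-in for a real provisioning API; it only records calls."""

    def __init__(self):
        self.log = []

    def create(self, name, nodes):
        self.log.append(f"create {name} ({nodes} nodes)")

    def submit(self, name, job):
        self.log.append(f"run {job} on {name}")
        return f"{job}: done"

    def delete(self, name):
        self.log.append(f"delete {name}")


@contextmanager
def temporary_cluster(client, name, nodes=4):
    """Create a cluster, hand it to the caller, and always delete it afterwards."""
    client.create(name, nodes)
    try:
        yield name
    finally:
        # Runs even if the job raises, so a failed job cannot leave a
        # billable cluster behind.
        client.delete(name)


client = InMemoryClusterClient()
with temporary_cluster(client, "nightly-logs") as cluster:
    result = client.submit(cluster, "summarise-logs")

print(result)
print(client.log)
```

The design point is the `finally` clause: the delete step is tied to the lifetime of the job rather than left as a separate manual task, which is what keeps an on-demand cluster from quietly running up a bill.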

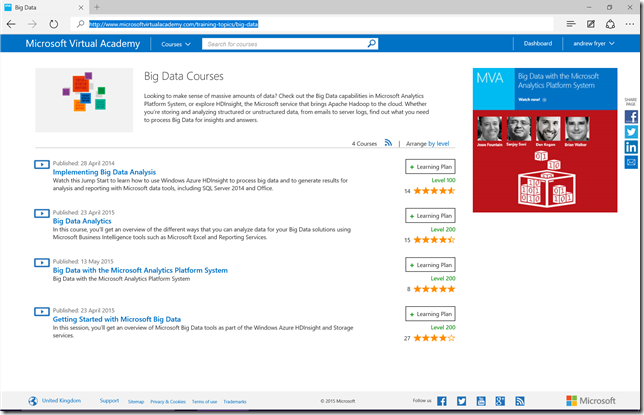

If you want to find out more about any of these services, the Microsoft Virtual Academy now has a more structured view of its many courses, including a special portal for Data Professionals, and drilling into Big Data will show the key courses to get you started.

Hopefully that was useful. In my next post in this series we’ll show how various Azure services can be used for near-real-time data analysis.
