Compute large-scale HPC application workloads in Azure Virtual Machines

High-performance computing (HPC) workloads, also known as big compute applications, are large-scale workloads that require many cores. HPC can help industries such as energy, finance, and manufacturing at every stage of the product development process.

Big compute applications typically have the following characteristics:

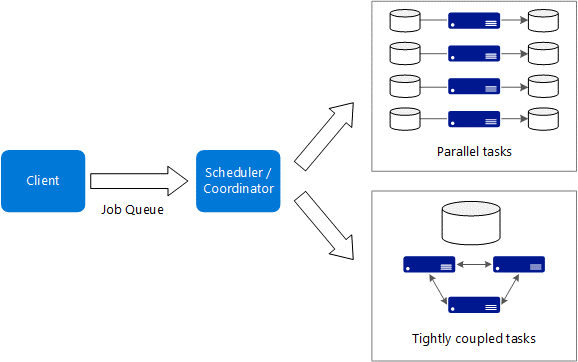

- You can divide the workload into discrete tasks that can run simultaneously across many cores.

- Each task takes input, processes it, and generates output. The entire application runs for a finite amount of time.

- The application doesn't need to run constantly, but it must be able to handle node failures and crashes.

- Tasks can be independent or tightly coupled. Tightly coupled tasks require high-speed networking technologies such as InfiniBand and remote direct memory access (RDMA) connectivity.

- You can use compute-intensive virtual machine (VM) sizes such as H16r, H16mr, and A9. The right choice depends on the workload.
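The first characteristic, splitting a workload into discrete tasks that run at the same time, can be sketched on a single machine with Python's standard `concurrent.futures` module. This is a minimal local illustration, not how Batch or CycleCloud distribute work across VMs; `process_chunk` is a hypothetical stand-in for a real task that takes input, processes it, and produces output.

```python
from concurrent.futures import ProcessPoolExecutor


def process_chunk(chunk: list[float]) -> float:
    """Hypothetical task: take an input chunk, process it, return an output."""
    return sum(x * x for x in chunk)


def split(data: list[float], n_tasks: int) -> list[list[float]]:
    """Split the input into n_tasks discrete, roughly equal chunks."""
    size, rem = divmod(len(data), n_tasks)
    chunks, start = [], 0
    for i in range(n_tasks):
        # The first `rem` chunks take one extra element each.
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def run(data: list[float], n_tasks: int = 4) -> float:
    # Each chunk is an independent task; the pool runs them concurrently
    # in separate processes, and the partial results are combined at the end.
    with ProcessPoolExecutor() as pool:
        return sum(pool.map(process_chunk, split(data, n_tasks)))
```

Calling `run(...)` from under an `if __name__ == "__main__":` guard lets the process pool start its workers safely on platforms that spawn a fresh interpreter for each worker.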

Azure provides a range of VM instances optimized for CPU-intensive and GPU-intensive workloads. These VMs can run in Azure Virtual Machine Scale Sets to provide resilience and load balancing. Azure is also the only cloud platform that offers InfiniBand-enabled hardware. InfiniBand provides a significant performance advantage for tasks such as financial risk modeling, engineering stress analysis, and running reservoir simulation and seismic workloads. This advantage results in performance that approaches or exceeds that of current on-premises infrastructure.

Azure provides several VM sizes for HPC-optimized and GPU-optimized computing. It's important to select a VM size that suits your workload. To find the best fit, see Sizes for virtual machines in Azure and the Virtual machines selector tool.

Keep in mind that not all Azure products are available in all regions. To see what's available in your area, see Products available by region.

For more information about Azure compute options, see the Azure compute blog or Choose an Azure compute service.

Azure provides both CPU-based and GPU-enabled VMs. The N-series VMs feature NVIDIA GPUs designed for compute-intensive or graphics-intensive applications such as AI, deep learning, and visualization.

HPC products are designed for high-performance scenarios. But other products, such as the E-series and F-series, are also suitable for specific workloads.

Design considerations

When you design your HPC infrastructure, several tools and services are available to help you manage and schedule your workloads.

Azure Batch is a managed service for running large-scale HPC applications. Use Batch to configure a pool of VMs and upload your applications and data files. The Batch service then provisions the VMs, assigns tasks to them, runs the tasks, and monitors progress. Batch can automatically scale the number of VMs up or down in response to changing workloads. Batch also provides job scheduling functionality.
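The automatic scaling that Batch performs can be pictured as a rule that maps queued work to a target node count. Batch expresses real rules in its own autoscale formula language; the Python function below is only a hypothetical illustration of the idea, and its parameters (`tasks_per_node`, `min_nodes`, `max_nodes`) are assumptions, not Batch settings.

```python
import math


def target_node_count(pending_tasks: int, tasks_per_node: int,
                      min_nodes: int = 0, max_nodes: int = 100) -> int:
    """Hypothetical scaling rule: enough nodes for the queued tasks,
    clamped between a minimum and a maximum pool size."""
    if tasks_per_node <= 0:
        raise ValueError("tasks_per_node must be positive")
    # Round up so that a partially filled node still counts as one node.
    needed = math.ceil(pending_tasks / tasks_per_node)
    return max(min_nodes, min(max_nodes, needed))
```

For example, 10 queued tasks with 4 task slots per node give a target of 3 nodes, while a very large queue is capped at `max_nodes` to bound cost.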

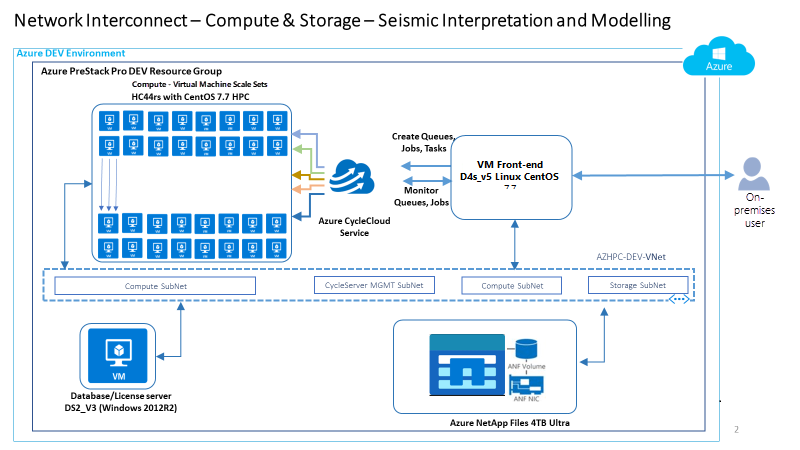

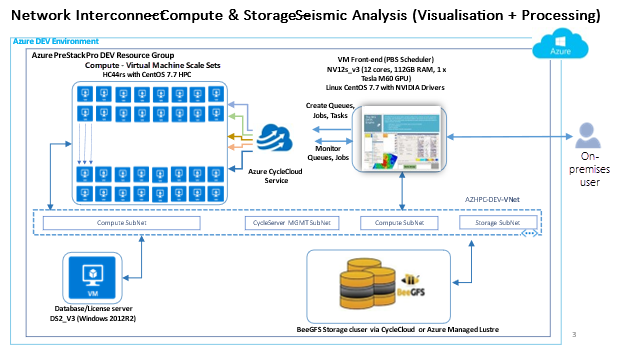

Azure CycleCloud is a tool for creating, managing, operating, and optimizing HPC and big compute clusters in Azure. Use Azure CycleCloud to dynamically provision HPC clusters in Azure and to orchestrate data and jobs for hybrid and cloud workflows. Azure CycleCloud provides the simplest way to manage HPC workloads through a workload manager. It supports workload managers such as Grid Engine, Microsoft HPC Pack, HTCondor, LSF, PBS Pro, SLURM, and Symphony.

Azure Logic Apps is a specialized service for scheduling compute-intensive jobs to run on a managed pool of VMs. You can automatically scale compute resources to meet the needs of your jobs.

The following sections describe reference architectures for the energy, finance, and manufacturing industries.

Energy reference architecture

Consider the following recommendations and use cases when you design an architecture for energy workloads.

Design recommendations

Understand that reservoir and seismic workflows typically have similar requirements for compute and job scheduling.

Consider your networking needs. Azure HPC provides HBv2-series and HBv3-series VM sizes for memory-intensive reservoir simulations and seismic imaging.

Use HB-series VMs for memory bandwidth-bound applications and HC-series VMs for compute-intensive reservoir simulations.

Use NV-series VMs for 3D reservoir modeling and seismic data visualization.

Use NCv4-series VMs for GPU-accelerated full waveform inversion (FWI) analysis.

For data-intensive reverse time migration (RTM) processing, the NDv4 VM size is the best option because it provides non-volatile memory express (NVMe) drives with a cumulative capacity of 7 TB.
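The recommendations above amount to a lookup from workload type to VM series. A minimal sketch: the workload labels are hypothetical keys chosen for this example, and the mapping only restates the guidance in this section; it is not an Azure API.

```python
# Workload label -> recommended VM series, restating the guidance above.
# The labels themselves are hypothetical keys chosen for this sketch.
ENERGY_VM_SERIES = {
    "memory_bandwidth_bound": "HB",
    "compute_intensive_reservoir_simulation": "HC",
    "3d_reservoir_modeling_or_seismic_visualization": "NV",
    "gpu_accelerated_fwi": "NCv4",
    "data_intensive_rtm": "NDv4",
}


def recommend_series(workload: str) -> str:
    """Return the recommended VM series for a workload label."""
    try:
        return ENERGY_VM_SERIES[workload]
    except KeyError:
        raise ValueError(f"no recommendation for workload {workload!r}") from None
```

Keeping the mapping in one table makes it easy to review against the current VM size documentation, since available series change over time.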

To get the best possible performance from Message Passing Interface (MPI) workloads on HB-series VMs, pin processes optimally to the processor cores. For more information, see Optimal MPI process placement for Azure HB-series VMs.
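One common placement strategy is to spread MPI ranks evenly across the groups of cores that share an L3 cache instead of packing them onto the first cores. A minimal sketch, assuming the VM's cores are numbered consecutively in equal-sized L3 groups; the core count and group size are parameters you would read from the actual VM topology (for example with `lscpu` or hwloc), not values from this article. The resulting core list could then be handed to an MPI launcher's binding options.

```python
def spread_ranks(n_ranks: int, n_cores: int, cores_per_l3: int) -> list[int]:
    """Assign each MPI rank a core ID, round-robin across L3-cache groups.

    Assumes cores [g * cores_per_l3, (g + 1) * cores_per_l3) share one L3 cache.
    """
    if n_cores % cores_per_l3:
        raise ValueError("n_cores must be a multiple of cores_per_l3")
    if n_ranks > n_cores:
        raise ValueError("more ranks than cores")
    n_groups = n_cores // cores_per_l3
    placement = []
    for rank in range(n_ranks):
        group = rank % n_groups   # cycle through the L3 groups
        slot = rank // n_groups   # next unused core within that group
        placement.append(group * cores_per_l3 + slot)
    return placement
```

For example, 4 ranks on a hypothetical 16-core VM with 4 cores per L3 group land on cores 0, 4, 8, and 12, so each rank gets a cache to itself.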

NCv4-series VMs also provide dedicated tools to ensure correct pinning of parallel application processes.

Because NDv4-series VMs have a complex architecture, take care when you configure them so that GPU-accelerated applications launch optimally. For more information, see Azure scalable GPU VM.

Use cases for the oil and gas seismic and reservoir simulation reference architecture

Reservoir and seismic workflows typically have similar requirements for compute and job scheduling. However, seismic workloads challenge the storage capabilities of the infrastructure. They sometimes need multiple petabytes (PB) of storage, with throughput requirements that might be measured in hundreds of GB per second. For example, a single seismic processing project might start with 500 TB of raw data, which can require several PB of long-term storage.
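To put these numbers in perspective, the time for one full pass over a dataset is its size divided by the sustained throughput. A minimal sketch using decimal units (1 TB = 10^12 bytes); the 100 GB/s figure is an assumed throughput for illustration, not a measured Azure value.

```python
TB = 10**12
GB = 10**9


def full_pass_seconds(dataset_bytes: int, throughput_bytes_per_s: int) -> float:
    """Time to read (or write) a dataset once at a sustained throughput."""
    return dataset_bytes / throughput_bytes_per_s


# 500 TB of raw survey data read at an assumed 100 GB/s:
seconds = full_pass_seconds(500 * TB, 100 * GB)
print(f"{seconds:.0f} s = {seconds / 3600:.2f} h")  # 5000 s = 1.39 h
```

Because processing workflows read the data many times, halving the sustained throughput roughly doubles every such pass, which is why storage throughput is sized alongside compute.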

Consult the following reference architectures to help you meet your goals for running your application in Azure.

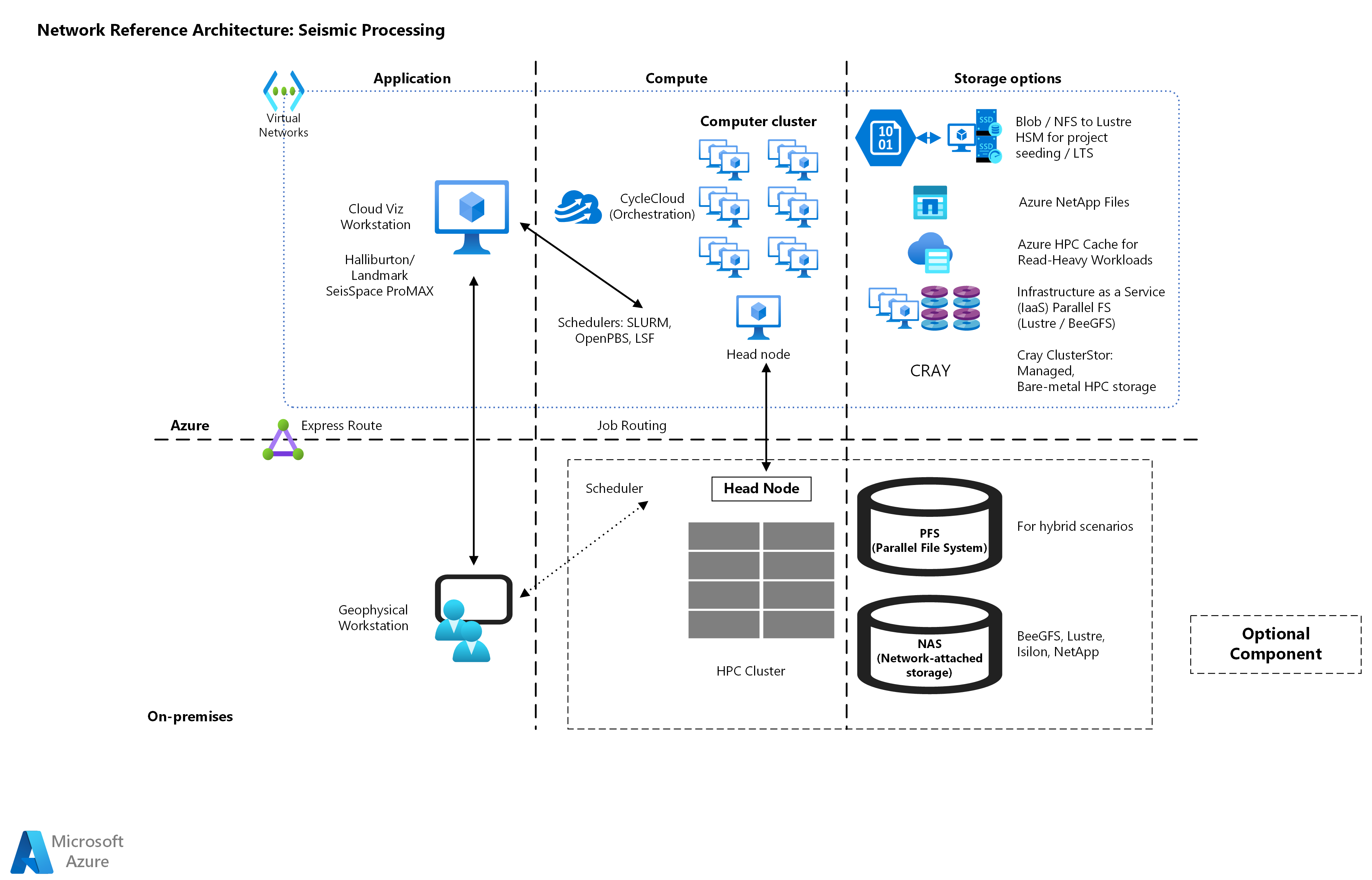

Reference architecture for seismic processing

Seismic processing and imaging are fundamental to the oil and gas industry because they create a model of the subsurface based on exploration data. Geoscientists typically carry out the process of qualifying and quantifying what might be in the subsurface. They typically use software that runs in the datacenter or in the cloud, and sometimes access that software remotely or in the cloud through virtual desktop technology.

The quality of the subsurface model and the resolution of the data are critical for making the right business decisions about bidding on leases or deciding where to explore. Interpreting seismic images can improve well placement and reduce the risk of drilling a dry hole. For oil and gas companies, a better understanding of subsurface structures translates directly into lower exploration risk. In short, the more accurately a company understands a geographic area, the better its chance of finding oil when it drills.

This work is both data-intensive and compute-intensive. Companies need to process terabytes of data, which requires massive, fast compute power, including fast networking. Because seismic imaging is so data-intensive and compute-intensive, companies use parallel computing to process the data and reduce time to completion.

Companies continuously process large volumes of seismic acquisition data to accurately locate, quantify, and qualify the hydrocarbon content of the reservoirs they detect in the subsurface before they begin recovery operations. Acquisition data is unstructured and can easily reach petabytes of storage for a single potential oil and gas field. Because of these factors, you can complete seismic processing within a reasonable time frame only by using HPC together with appropriate data management strategies.

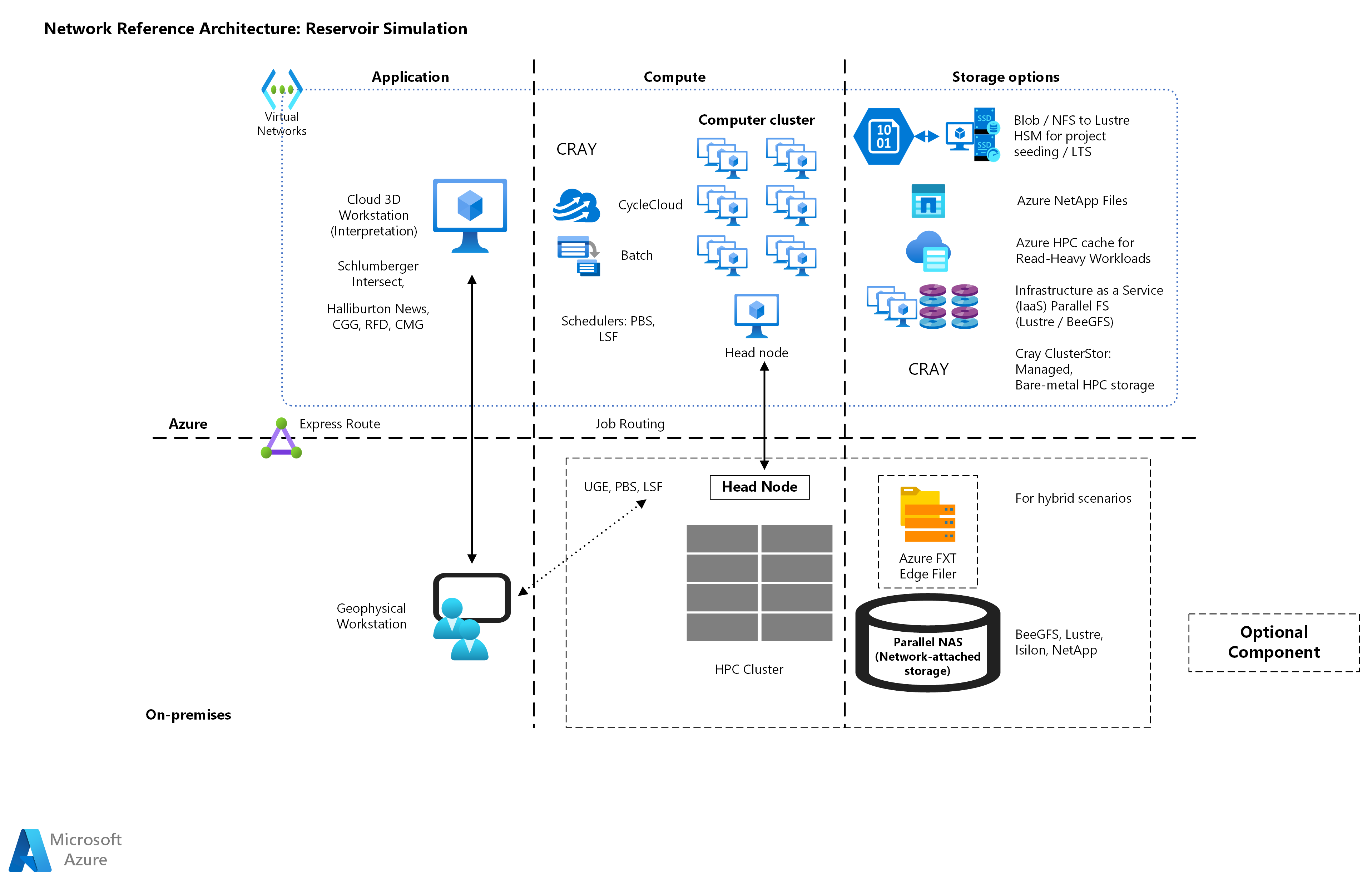

Reference architecture for reservoir simulation and modeling

Subsurface physical characteristics, such as water saturation, porosity, and permeability, are also valuable data in reservoir modeling. This data is important for determining which recovery approach and equipment to deploy and, ultimately, where to place wells.

Reservoir modeling is also an area of reservoir engineering. The workload combines physics, mathematics, and computer programming in a reservoir model to analyze and predict fluid behavior in the reservoir over time. This analysis requires high compute power and places heavy demands on compute resources, including fast networking.
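As a rough illustration of how a reservoir model steps fluid behavior forward in time, the sketch below advances a one-dimensional pressure field with an explicit finite-difference (FTCS) scheme for the diffusion equation, the simplest model of single-phase flow in porous media. It is a toy, assuming uniform rock and fluid properties folded into one dimensionless number `r` and no-flow boundaries at both ends; production reservoir simulators solve much larger multiphase, three-dimensional systems.

```python
def step_pressure(p: list[float], r: float) -> list[float]:
    """One explicit (FTCS) time step of 1D pressure diffusion.

    r = diffusivity * dt / dx**2; the explicit scheme is stable for r <= 0.5.
    No-flow boundaries are imposed by mirroring the end cells.
    """
    if not 0 < r <= 0.5:
        raise ValueError("r must be in (0, 0.5] for stability")
    n = len(p)
    new = []
    for i in range(n):
        left = p[i - 1] if i > 0 else p[i]        # mirrored ghost cell
        right = p[i + 1] if i < n - 1 else p[i]   # mirrored ghost cell
        new.append(p[i] + r * (left - 2 * p[i] + right))
    return new


def simulate(p: list[float], r: float, steps: int) -> list[float]:
    """Advance the pressure field a given number of time steps."""
    for _ in range(steps):
        p = step_pressure(p, r)
    return p


# A pressure spike in the middle of an otherwise uniform reservoir spreads out.
final = simulate([1.0] * 10 + [5.0] + [1.0] * 10, r=0.4, steps=200)
```

With no-flow boundaries the total pressure is conserved, and for `r <= 0.5` the values stay between the initial minimum and maximum, which makes both properties convenient sanity checks.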

Finance reference architecture

The following architecture is an example of how to use VMs for HPC in financial workloads.

This workload uses HPC Pack with HB-series compute nodes.

HB-series VMs are optimized for HPC applications such as financial analysis, weather simulation, and silicon register-transfer level (RTL) modeling. HB-series VMs feature:

- Up to 120 AMD EPYC™ 7003-series CPU cores.

- 448 GB of RAM.

- No hyperthreading.

HB-series VMs also provide:

- 350 GB per second of memory bandwidth.

- Up to 32 MB of L3 cache per core.

- Up to 7 GB per second of solid-state drive (SSD) performance.

- Clock frequencies of up to 3.675 GHz.
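For memory bandwidth-bound applications, it can help to divide the node-level figures above by the core count, assuming each process runs on its own core of the largest (120-core) size. A short worked example that uses only the numbers listed above:

```python
def per_core(total: float, cores: int) -> float:
    """Share of a node-level resource available to each core."""
    return total / cores


HB_CORES = 120
HB_RAM_GB = 448
HB_MEM_BW_GB_S = 350

print(f"RAM per core: {per_core(HB_RAM_GB, HB_CORES):.2f} GB")               # 3.73 GB
print(f"Bandwidth per core: {per_core(HB_MEM_BW_GB_S, HB_CORES):.2f} GB/s")  # 2.92 GB/s
```

If a single process needs more memory or bandwidth than these per-core shares, running fewer processes per VM than there are cores can give each one a larger share.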

For the HPC head node, the workload uses a VM of a different size: a D16s_v4 VM, which is a general-purpose product.
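The split between a general-purpose head node and HPC compute nodes can be captured in a small configuration object. A minimal sketch: the `ClusterSpec` class and its fields are hypothetical, `Standard_HB120rs_v3` is assumed as the HB-series compute size because the article does not name one, and the node count is an arbitrary example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical description of a head node plus a set of compute nodes."""
    head_node_size: str
    compute_node_size: str
    compute_node_count: int
    cores_per_compute_node: int

    def compute_cores(self) -> int:
        # In this sketch only compute nodes count toward job capacity;
        # the head node is sized separately for management work.
        return self.compute_node_count * self.cores_per_compute_node


finance_cluster = ClusterSpec(
    head_node_size="Standard_D16s_v4",        # general-purpose head node
    compute_node_size="Standard_HB120rs_v3",  # assumed HB-series size
    compute_node_count=4,                     # arbitrary example
    cores_per_compute_node=120,
)
print(finance_cluster.compute_cores())  # 480
```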

Manufacturing reference architecture

The following architecture is an example of how to use VMs for HPC in manufacturing.

This architecture uses Azure Files shares and Azure Storage accounts that are connected to an Azure Private Link subnet.

The architecture runs Azure CycleCloud in its own subnet. HC-series VMs are used in a cluster node arrangement.
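A subnet layout like this one, with CycleCloud, the cluster nodes, and the Private Link endpoints each in their own range, can be planned with Python's standard `ipaddress` module. A minimal sketch; the `10.0.0.0/16` address space, the /24 subnet size, and the subnet names are assumptions for illustration, not values from the reference architecture.

```python
import ipaddress

VNET = ipaddress.ip_network("10.0.0.0/16")  # assumed virtual network range
SUBNET_NAMES = ["cyclecloud", "compute", "private-link"]  # hypothetical names


def plan_subnets(vnet: ipaddress.IPv4Network, names: list[str],
                 prefix: int = 24) -> dict[str, ipaddress.IPv4Network]:
    """Carve consecutive, non-overlapping subnets of the given size."""
    candidates = vnet.subnets(new_prefix=prefix)
    return {name: next(candidates) for name in names}


for name, net in plan_subnets(VNET, SUBNET_NAMES).items():
    print(name, net)
# cyclecloud 10.0.0.0/24
# compute 10.0.1.0/24
# private-link 10.0.2.0/24
```

Drawing every subnet from one iterator guarantees the ranges never overlap; a larger compute subnet could be requested separately with a smaller prefix.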

HC-series VMs are optimized for compute-intensive HPC applications. Examples include implicit finite element analysis, reservoir simulation, and computational chemistry applications. HC VMs feature 44 Intel Xeon Platinum 8168 processor cores, 8 GB of RAM per CPU core, no hyperthreading, and up to four managed disks. The Intel Xeon Platinum platform supports Intel's rich ecosystem of software tools and features, and an all-cores clock speed of 3.4 GHz for most workloads.
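Because HC-series VMs have a fixed 44 cores and 8 GB of RAM per core (352 GB per VM), the number of VMs a job needs follows from whichever requirement is larger, cores or memory. A minimal sketch; the job sizes in the example are hypothetical.

```python
import math

HC_CORES = 44
HC_RAM_GB = 44 * 8  # 8 GB per core -> 352 GB per VM


def hc_vms_needed(job_cores: int, job_ram_gb: float) -> int:
    """Smallest number of HC-series VMs that covers both cores and memory."""
    by_cores = math.ceil(job_cores / HC_CORES)
    by_memory = math.ceil(job_ram_gb / HC_RAM_GB)
    return max(by_cores, by_memory, 1)


print(hc_vms_needed(1000, 2000))  # 23: limited by cores (1000 / 44 -> 23)
print(hc_vms_needed(100, 5000))   # 15: limited by memory (5000 / 352 -> 15)
```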

Next steps

For more information about the applications that support the use cases in this article, see the following resources:

- Virtual machine series.

- Azure HPC certification.github.io.

- Microsoft Azure HPC OnDemand Platform. This standalone reference architecture might not be compatible with the Azure landing zone paradigm.

The following articles provide guidance for various stages of the cloud adoption process. These resources can help you successfully adopt manufacturing HPC environments in the cloud.