Introduction to Parallel Query Execution

SQL Server has the ability to execute queries using multiple CPUs simultaneously. We refer to this capability as parallel query execution. Parallel query execution can be used to reduce the response time of (i.e., speed up) a large query. It can also be used to a run a bigger query (one that processes more data) in about the same amount of time as a smaller query (i.e., scale up) by increasing the number of CPUs used in processing the query. For most large queries SQL Server generally scales linearly or nearly linearly. For speed up, this means that if we double the number of CPUs, we see the response time drop in half. For scale up, it means that if we double the number of CPUs and the size of the query, we see the same response time.

When is parallelism useful?

As I noted above parallelism can be used to reduce the response time of a single query. However, parallelism comes with a cost: it increases the overhead associated with executing a query. While this overhead is relatively small, it does make parallelism inappropriate for small queries (especially for OLTP queries) where the overhead would dominate the total execution time. Moreover, while SQL Server does generally scale linearly, if we compare the same query running serially (i.e., without parallelism and on a single CPU) and in parallel on two CPUs, we will typically find that the parallel execution time is more than half of the serial execution time. Again, this effect is due to the parallelism overhead.

Parallelism is primarily useful on servers running a relatively small number of concurrent queries. On this type of server, parallelism can enable a small set of queries to keep many CPUs busy. On servers running many concurrent queries (such as an OLTP system), we do not need parallelism to keep the CPUs busy; the mere fact that we have so many queries to execute can keep the CPUs busy. Running these queries in parallel would just add overhead that would reduce the overall throughput of the system.

How does SQL Server parallelize queries?

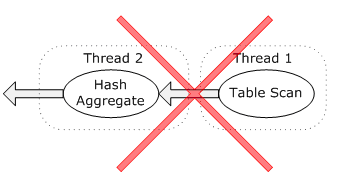

SQL Server parallelizes queries by horizontally partitioning the input data into approximately equal sized sets, assigning one set to each CPU, and then performing the same operation (e.g., aggregate, join, etc.) on each set. For example, suppose that we want to use two CPUs to execute a hash aggregate that happens to be grouping on an integer column. We create two threads (one for each CPU). Each thread executes the same hash aggregate operator. We might partition the input data by sending rows where the group by column is odd to one thread and rows where the group by column is even to the other thread. As long as all rows that belong to one group are processed by one hash aggregate operator and one thread, we get the correct result.

This method of parallel query execution is both simple and scales well. In the above example, both hash aggregate threads execute independently. The two threads do not need to communicate or coordinate their work in any way. If we want to increase the degree of parallelism (DOP), we can simple add more threads and adjust our partitioning function. (In practice, we use a hash function to distribute rows for a hash aggregate. The hash function handles any data type, any number of group by columns, and any number of threads.)

The actual partitioning and movement of data between threads is handled by the parallelism (or exchange) iterator. Although it is unique in many respects, the parallelism iterator implements the same interfaces as any other iterator. Most of the other iterators do not need to be aware that they are executing in parallel. We simply place appropriate parallelism iterators in the plan and it runs in parallel.

Note that this method of parallelism is not the same as “pipeline” parallelism where multiple unrelated operators run concurrently in different threads. Although SQL Server frequently places different operators in different threads, the primary reason for doing so is to allow repartitioning of the data as it flows from one operator to the next. With pipeline parallelism the degree of parallelism and the total number of threads would be limited to the number of operators.

Who decides whether to parallelize a query?

The query optimizer decides whether we should execute a query in parallel. This decision, like most others, is cost based. A complex and expensive query that processes many rows is more likely to result in a parallel plan than a simple query that processes very few rows.

Who decides the degree of parallelism (DOP)?

The DOP is not part of the cached compiled plan and may change with each execution. We decide the DOP at the start of execution by considering the number of CPUs on the server, the “max degree of parallelism” and “max worker threads” sp_configure settings (only visible if the “show advanced options” setting is on), and the query MAXDOP hint if any. In short, we choose a DOP that maximizes parallelism while ensuring that we do not run out of worker threads. If you choose MAXDOP 1, we remove all parallelism iterators and run the query as a serial plan using a single thread.

Note that the number of threads used by a parallel query may exceed the DOP. If you check sys.sysprocesses while running a parallel query, you may see more threads than the DOP. As I noted above, if we need to repartition data between two operators, we place them in different threads. The DOP determines the number of threads per operator not the total number of threads per query plan. In SQL Server 2000 if the DOP was less than the number of CPUs, the extra threads could use the extra CPUs effectively defeating the MAXDOP settings. In SQL Server 2005, when we run a query with a given DOP, we also limit the number of schedulers to the selected DOP. That is, all threads used by the query are assigned to the same set of DOP schedulers and the query uses only DOP CPUs regardless of the total number of threads.

Comments

- Anonymous

October 25, 2006

The comment has been removed - Anonymous

September 04, 2007

PARALLELISM is a close friend of DBA where you get to see it as a common occurence in the multi-processor - Anonymous

January 18, 2008

In some of my past posts, I've discussed at how SQL Server implements aggregation including the stream - Anonymous

January 18, 2008

In some of my past posts, I've discussed at how SQL Server implements aggregation including the stream - Anonymous

November 28, 2008

This is the first of a four-part series: Introduction to Query Parallelism (this post) Flipping the Bit