Hi,

I am decrypting a GPG-encrypted tar file and extracting its contents to Blob Storage by calling Python code in an Azure Function.
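The extraction half of this can be exercised on its own, without Azure or GPG. The sketch below is minimal and assumes that the decrypted payload is the raw bytes of a tar archive, which may be compressed. `extract_tar_members` and `build_sample_tar` are hypothetical helpers for illustration. The sketch mirrors the loop in `extract_and_upload_to_blob` in the code below, but collects the files in a dict instead of uploading them. `tarfile` needs a binary stream. With python-gnupg, the `.data` attribute of a decrypt result is already `bytes`, so it can be wrapped directly in `io.BytesIO`.

```python
import io
import os
import tarfile


def extract_tar_members(archive_bytes: bytes) -> dict:
    """Return {basename: file_bytes} for every regular file in an in-memory tar."""
    files = {}
    # tarfile needs a *binary* stream: wrap the raw bytes, do not str-encode them.
    # mode "r:*" detects gzip/bz2/xz compression automatically.
    with tarfile.open(fileobj=io.BytesIO(archive_bytes), mode="r:*") as tar:
        for member in tar.getmembers():
            if member.isfile():
                stream = tar.extractfile(member)
                if stream is not None:
                    # Flatten directory components, as the original loop does
                    files[os.path.basename(member.name)] = stream.read()
    return files


def build_sample_tar() -> bytes:
    """Hypothetical stand-in for the decrypted payload: a small gzip'd tar."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        data = b"hello from the archive\n"
        info = tarfile.TarInfo(name="reports/2024/a.txt")
        info.size = len(data)
        tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


print(extract_tar_members(build_sample_tar()))  # {'a.txt': b'hello from the archive\n'}
```

If this works locally with a real decrypted file but the deployed function still fails, the problem is more likely in the decryption step or the host environment than in the tar handling.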

The code works fine when I run it locally in Visual Studio:

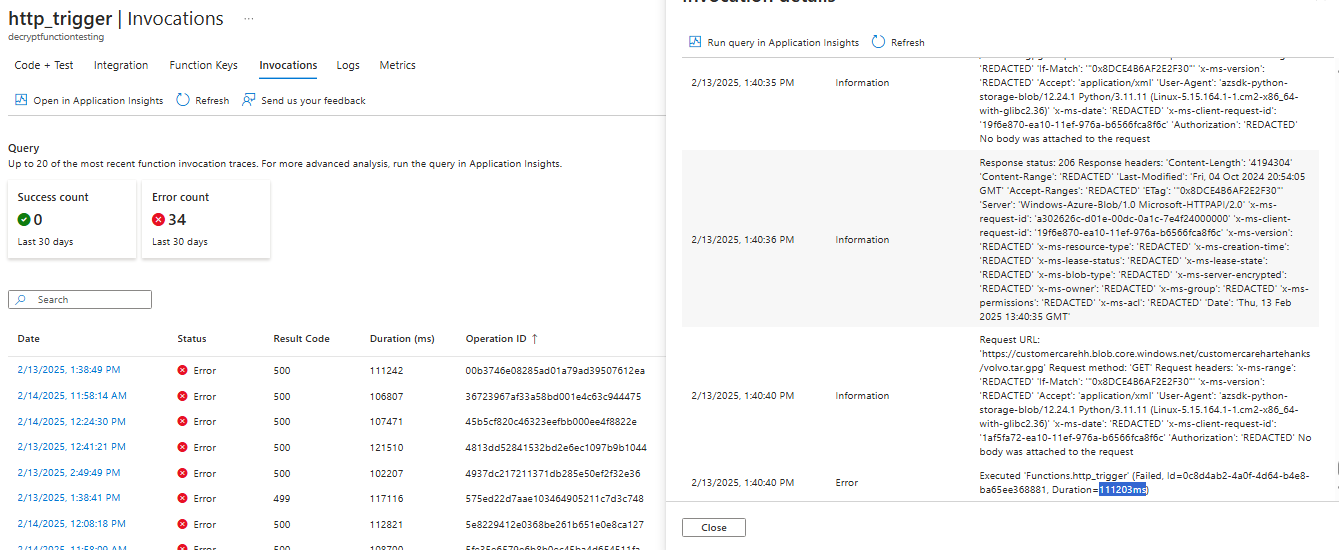

However, it fails without giving any reason in the Azure Functions portal, and also when it is called from an ADF pipeline.

I have not been able to trace the cause, so I need some help.
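When a run fails "without any reason", a common first step is to make sure that the full traceback is logged. While debugging, it can also help to return the traceback to the caller, so that the caller receives the error text and not just a status code. The sketch below is minimal. `run_and_report` is a hypothetical helper and is not part of `azure-functions`. It only helps if exceptions are allowed to propagate up to the trigger, rather than being caught and logged further down.

```python
import logging
import traceback


def run_and_report(step):
    """Run step() and return (status_code, body) for an HTTP response.

    Hypothetical helper: on failure it logs the full traceback and returns
    it as the body, so the caller can see *why* the call failed.
    """
    try:
        step()
        return 200, "Decryption and extraction completed."
    except Exception:
        # logging.exception logs at ERROR level and appends the current traceback
        logging.exception("Function failed")
        return 500, traceback.format_exc()


def failing_step():
    # Hypothetical stand-in for main() failing partway through
    raise ValueError("GPG decryption failed: no secret key")


status, body = run_and_report(failing_step)
print(status)  # 500
```

In the trigger, this would look like `status, body = run_and_report(main)`, followed by `return func.HttpResponse(body, status_code=status)`. Returning the whole traceback is convenient while debugging. Trimming it to the exception message afterwards is a reasonable choice.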

    import io
    import os
    import logging
    import tarfile

    import gnupg  # python-gnupg: runs the gpg executable as a subprocess
    import azure.functions as func
    from azure.storage.blob import BlobServiceClient

    # Constants
    CONTAINER_NAME = ""
    BLOB_NAME = ""
    OUTPUT_FOLDER = ""
    GPG_PASSPHRASE = "*"

    # Blob Storage connection
    BLOB_CONNECTION_STRING = ""

    # Initialize Blob Service clients.
    # This runs at import time: if it raises, the function fails to load.
    blob_service_client = BlobServiceClient.from_connection_string(BLOB_CONNECTION_STRING)
    container_client = blob_service_client.get_container_client(CONTAINER_NAME)

    app = func.FunctionApp()


    @app.route(route="http_trigger")
    def http_trigger(req: func.HttpRequest) -> func.HttpResponse:
        logging.info("Processing HTTP trigger function.")
        try:
            main()
            return func.HttpResponse("Hello, World! Decryption and extraction completed.", status_code=200)
        except Exception as e:
            # logging.exception also records the traceback
            logging.exception(f"Function failed: {e}")
            return func.HttpResponse(f"Error: {e}", status_code=500)


    # GPG decryption
    def decrypt_gpg_from_blob(blob_name: str, passphrase: str) -> io.BytesIO:
        logging.info(f"Downloading and decrypting {blob_name} from Azure Blob Storage...")
        try:
            blob_client = container_client.get_blob_client(blob_name)
            encrypted_data = blob_client.download_blob().readall()

            # Uses gpg's default home directory; the private key must be in that keyring
            gpg = gnupg.GPG()
            decrypted_data = gpg.decrypt(encrypted_data, passphrase=passphrase)

            if not decrypted_data.ok:
                logging.error(f"Decryption failed: {decrypted_data.stderr}")
                raise ValueError(f"GPG decryption failed: {decrypted_data.stderr}")

            logging.info("Decryption successful.")
            # .data is already bytes and the tar archive is binary, so do not encode it
            return io.BytesIO(decrypted_data.data)
        except Exception as e:
            logging.error(f"Error during GPG decryption: {e}")
            raise


    # Extract & upload files to Azure Blob
    def extract_and_upload_to_blob(decrypted_data: io.BytesIO, output_folder: str):
        logging.info(f"Extracting and uploading files to {output_folder} in Azure Blob Storage...")
        try:
            # output_folder is used as a container name
            output_container_client = blob_service_client.get_container_client(output_folder)
            if not output_container_client.exists():
                output_container_client.create_container()

            with tarfile.open(fileobj=decrypted_data, mode="r:*") as tar:
                for member in tar.getmembers():
                    if member.isfile():
                        file_stream = tar.extractfile(member)
                        if file_stream:
                            file_data = file_stream.read()
                            # Drop directory components from the archive path
                            cleaned_path = os.path.basename(member.name)
                            upload_blob_with_debug(output_container_client, cleaned_path, file_data)
                            logging.info(f"Uploaded {cleaned_path} to {output_folder}.")
        except tarfile.TarError as e:
            logging.error(f"Error extracting tar file: {e}")
            raise
        except Exception as e:
            logging.error(f"Error during file extraction or upload: {e}")
            raise


    # Upload to Azure Blob with debug logging
    def upload_blob_with_debug(blob_container_client, blob_name, data):
        try:
            blob_client = blob_container_client.get_blob_client(blob_name)
            logging.info(f"Uploading blob: {blob_name} to {blob_client.url}")
            blob_client.upload_blob(data, blob_type="BlockBlob", overwrite=True)
            logging.info("Blob uploaded successfully.")
        except Exception as e:
            logging.error(f"Error uploading blob: {e}")
            raise  # re-raise so a failed upload is not reported as success


    # Main function
    def main():
        try:
            decrypted_data = decrypt_gpg_from_blob(BLOB_NAME, GPG_PASSPHRASE)
            extract_and_upload_to_blob(decrypted_data, OUTPUT_FOLDER)
            logging.info("Decryption and extraction completed successfully.")
        except Exception as e:
            logging.error(f"An error occurred: {e}")
            raise  # propagate to http_trigger so it returns 500
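The same code succeeds locally, so a likely difference is the host environment rather than the Python logic. Assuming the `gnupg` module here is python-gnupg, it is a wrapper that runs the `gpg` executable as a subprocess. The Azure host therefore needs that executable on `PATH`, and it also needs a GnuPG keyring that contains the private key. Neither one is guaranteed to exist on the host just because it exists on a development machine. The sketch below is a minimal startup check. `missing_prerequisites` is a hypothetical helper, and the names passed to it are placeholders.

```python
import os
import shutil


def missing_prerequisites(binaries=("gpg",), env_vars=()):
    """Return human-readable problems that would make the function fail at runtime.

    Hypothetical helper: pass the executables and app-setting names
    your function actually depends on.
    """
    problems = []
    for name in binaries:
        # python-gnupg shells out to gpg, so the executable must be on PATH
        if shutil.which(name) is None:
            problems.append(f"executable not found on PATH: {name}")
    for var in env_vars:
        # Function app settings are exposed to the worker as environment variables
        if not os.environ.get(var):
            problems.append(f"environment variable missing or empty: {var}")
    return problems


for problem in missing_prerequisites(binaries=("gpg",)):
    print(problem)
```

You could call this at the top of `http_trigger` and return the resulting list in a 500 response. That would turn a missing `gpg` binary or setting into a readable error instead of an opaque failure. Checking for the key itself would need a GPG call, such as python-gnupg's `list_keys(True)` for secret keys.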

I am seeing the issue below:

Any help is greatly appreciated.