Activate a background app in Cortana using voice commands

Warning

This feature is no longer supported as of the Windows 10 May 2020 Update (version 2004, codename "20H1").

In addition to using voice commands within Cortana to access system features, you may also extend Cortana with features and functionality from your app (as a background task) using voice commands that specify an action or command to run. When an app handles a voice command in the background, it does not take focus. Instead, it returns all feedback and results through the Cortana canvas and the Cortana voice.

Apps may be activated to the foreground (the app takes focus) or activated in the background (Cortana retains focus), depending on the complexity of the interaction. For example, voice commands that require additional context or user input (such as sending a message to a specific contact) are best handled in a foreground app, while basic commands (such as listing upcoming trips) may be handled in Cortana through a background app.

If you want to activate an app to the foreground using voice commands, see Activate a foreground app with voice commands through Cortana.

Note

A voice command is a single utterance with a specific intent, defined in a Voice Command Definition (VCD) file, directed at an installed app via Cortana.

A VCD file defines one or more voice commands, each with a unique intent.

Voice command definitions can vary in complexity. They can support anything from a single, constrained utterance to a collection of more flexible, natural language utterances, all denoting the same intent.

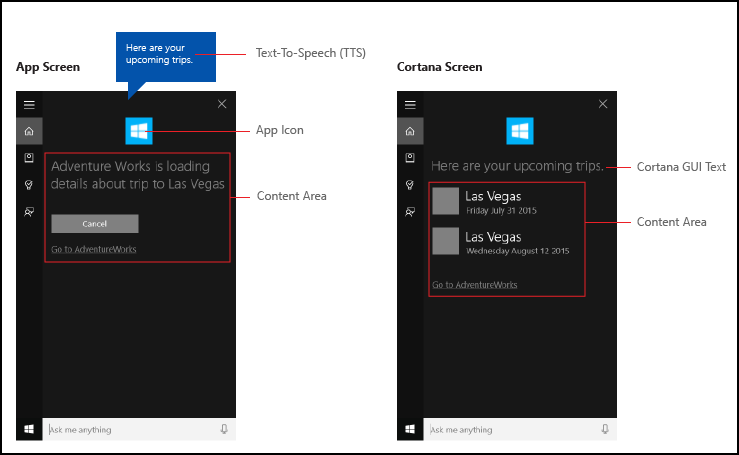

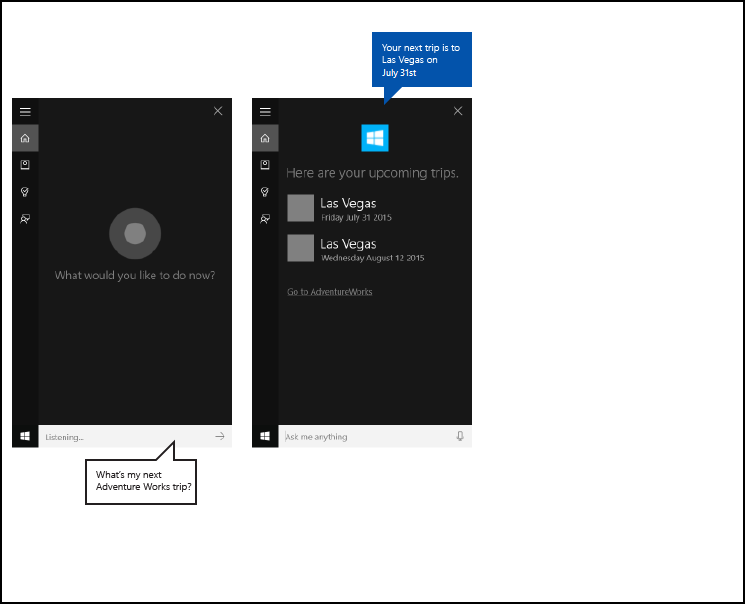

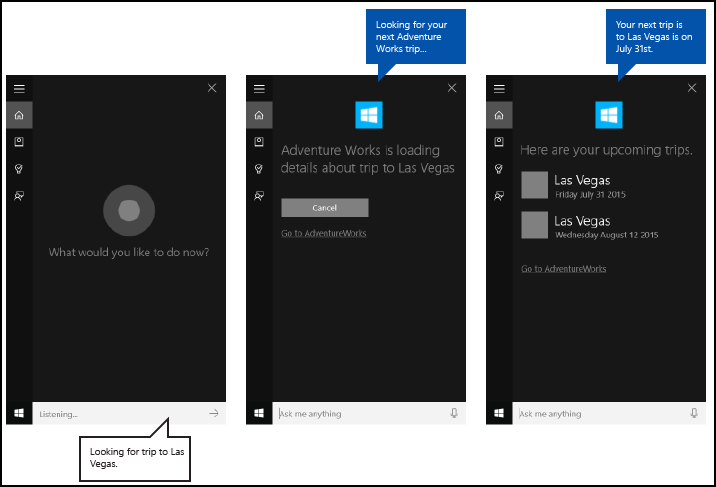

We use a trip planning and management app named Adventure Works integrated into the Cortana UI, shown here, to demonstrate many of the concepts and features we discuss. For more info, see the Cortana voice command sample.

To view an Adventure Works trip without Cortana, a user would launch the app and navigate to the Upcoming trips page.

Using voice commands through Cortana to launch your app in the background, the user may instead just say, "Adventure Works, when is my trip to Las Vegas?" Your app handles the command and Cortana displays results along with your app icon and other app info, if provided.

The following basic steps add voice-command functionality and extend Cortana with background functionality from your app using speech or keyboard input.

- Create an app service (see Windows.ApplicationModel.AppService) that Cortana invokes in the background.

- Create a VCD file. The VCD file is an XML document that defines all the spoken commands that the user may say to initiate actions or invoke commands when activating your app. See VCD elements and attributes v1.2.

- Register the command sets in the VCD file when the app is launched.

- Handle the background activation of the app service and the running of the voice command.

- Display and speak the appropriate feedback to the voice command within Cortana.

Tip

Prerequisites

If you're new to developing Universal Windows Platform (UWP) apps, have a look through these topics to get familiar with the technologies discussed here.

- Create your first app

- Learn about events with Events and routed events overview

User experience guidelines

See Cortana design guidelines for info about how to integrate your app with Cortana and Speech interactions for helpful tips on designing a useful and engaging speech-enabled app.

Create a New Solution with a Primary Project in Visual Studio

- Launch Microsoft Visual Studio 2015.

  The Visual Studio 2015 Start page appears.

- On the File menu, select New > Project.

  The New Project dialog appears. The left pane of the dialog lets you select the type of templates to display.

- In the left pane, expand Installed > Templates > Visual C# > Windows, then pick the Universal template group. The center pane of the dialog displays a list of project templates for Universal Windows Platform (UWP) apps.

- In the center pane, select the Blank App (Universal Windows) template.

  The Blank App template creates a minimal UWP app that compiles and runs. The Blank App template includes no user-interface controls or data. You add controls to the app using this page as a guide.

- In the Name text box, type your project name. Example: Use AdventureWorks.

- Click on the OK button to create the project.

  Microsoft Visual Studio creates your project and displays it in the Solution Explorer.

Add Image Assets to Primary Project and Specify them in the App Manifest

UWP apps should automatically select the most appropriate images. The selection is based upon specific settings and device capabilities (high contrast, effective pixels, locale, and so on). You must provide the images and ensure that you use the appropriate naming convention and folder organization within your app project for the different resource versions.

If you do not provide the recommended resource versions, then the user experience may suffer in the following ways.

- Accessibility

- Localization

- Image quality

The resource versions are used to adapt to the following changes in the user experience.

- User preferences

- Abilities

- Device type

- Location

For more detail about image resources for high contrast and scale factors, visit the Guidelines for tile and icon assets page located at msdn.microsoft.com/windows/uwp/controls-and-patterns/tiles-and-notifications-app-assets.

You must name resources using qualifiers. Resource qualifiers are folder and filename modifiers that identify the context in which a particular version of a resource should be used.

The standard naming convention is foldername/qualifiername-value[_qualifiername-value]/filename.qualifiername-value[_qualifiername-value].ext.

Example: images/logo.scale-100_contrast-white.png, which may be referred to in code using just the root folder and the filename: images/logo.png.

For more information, visit the How to name resources using qualifiers page located at msdn.microsoft.com/library/windows/apps/xaml/hh965324.aspx.
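To make the naming convention concrete, the following minimal sketch maps a qualified file name to the unqualified name used in code. The ResourceNames class, the StripFileQualifiers method, and the short list of qualifier names are assumptions made for illustration; they are not part of the platform, which performs this resolution for you. The sketch only handles qualifiers in the file name, not in folder names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only.
public static class ResourceNames
{
    // Assumed subset of qualifier names; the platform recognizes more.
    private static readonly HashSet<string> KnownQualifiers =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase)
        {
            "scale", "contrast", "lang", "language", "targetsize", "theme", "altform"
        };

    // "images/logo.scale-100_contrast-white.png" -> "images/logo.png"
    public static string StripFileQualifiers(string path)
    {
        int slash = path.LastIndexOf('/');
        string folder = path.Substring(0, slash + 1);
        string[] parts = path.Substring(slash + 1).Split('.');

        // Keep the base name and the extension; drop qualifier tokens in between.
        IEnumerable<string> kept = parts.Where(
            (part, index) => index == 0 || index == parts.Length - 1 || !IsQualifierToken(part));

        return folder + string.Join(".", kept);
    }

    // A qualifier token is one or more name-value pairs joined by '_'.
    private static bool IsQualifierToken(string token)
    {
        return token.Split('_').All(pair =>
        {
            int dash = pair.IndexOf('-');
            return dash > 0 && KnownQualifiers.Contains(pair.Substring(0, dash));
        });
    }
}
```

Tokens that do not match a known qualifier name, such as the v2 in logo.v2.png, are left in place.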

Microsoft recommends that you mark the default language on string resource files (such as en-US\resources.resw) and the default scale factor on images (such as logo.scale-100.png), even if you do not currently plan to provide localized or multiple resolution resources. However, at a minimum, Microsoft recommends that you provide assets for 100, 200, and 400 scale factors.

Important

The app icon used in the title area of the Cortana canvas is the Square44x44Logo icon specified in the Package.appxmanifest file.

You may also specify an icon for each entry in the content area of the Cortana canvas. Valid image sizes for the results icons are:

- 68w x 68h

- 68w x 92h

- 280w x 140h

The content tile is not validated until a VoiceCommandResponse object is passed to the VoiceCommandServiceConnection class. If you pass a VoiceCommandResponse object to Cortana that includes a content tile with an image that does not adhere to these size ratios, then an exception may occur.

Example: The Adventure Works app (VoiceCommandService\AdventureWorksVoiceCommandService.cs) specifies a simple, grey square (GreyTile.png) on the VoiceCommandContentTile class using the TitleWith68x68IconAndText tile template. The logo variants are located in VoiceCommandService\Images, and are retrieved using the GetFileFromApplicationUriAsync method.

    var destinationTile = new VoiceCommandContentTile();

    destinationTile.ContentTileType = VoiceCommandContentTileType.TitleWith68x68IconAndText;
    destinationTile.Image = await StorageFile.GetFileFromApplicationUriAsync(
        new Uri("ms-appx:///AdventureWorks.VoiceCommands/Images/GreyTile.png")
    );
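Because the content tile is only validated when the response is passed to Cortana, it can help to check image dimensions earlier, for example in a unit test over your assets. The following is a minimal sketch of such a check. The TileImageCheck class and HasValidRatio method are hypothetical names, and the sketch assumes that an image is acceptable when its width-to-height ratio matches one of the three sizes listed above.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class TileImageCheck
{
    // Widths and heights of the result icon sizes listed in this article.
    private static readonly int[] ValidWidths = { 68, 68, 280 };
    private static readonly int[] ValidHeights = { 68, 92, 140 };

    // Returns true when width:height matches 68:68, 68:92, or 280:140.
    public static bool HasValidRatio(int width, int height)
    {
        if (width <= 0 || height <= 0)
        {
            return false;
        }

        for (int i = 0; i < ValidWidths.Length; i++)
        {
            // Cross-multiply to compare ratios without floating-point rounding.
            if ((long)width * ValidHeights[i] == (long)height * ValidWidths[i])
            {
                return true;
            }
        }

        return false;
    }
}
```

An image that fails this check is a candidate for resizing or cropping before it is assigned to a content tile.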

Create an App Service Project

- Right-click on your Solution name, select New > Project.

- Under Installed > Templates > Visual C# > Windows > Universal, select Windows Runtime Component. The Windows Runtime Component is the component that implements the app service (Windows.ApplicationModel.AppService).

- Type a name for the project and click on the OK button.

  Example: VoiceCommandService.

- In Solution Explorer, select the VoiceCommandService project and rename the Class1.cs file generated by Visual Studio. Example: The Adventure Works app uses AdventureWorksVoiceCommandService.cs.

- Click on the Yes button when asked if you want to rename all occurrences of Class1.cs.

- In the AdventureWorksVoiceCommandService.cs file:

  - Add the following using directive.

        using Windows.ApplicationModel.Background;

  - When you create a new project, the project name is used as the default root namespace in all files. Rename the namespace to nest the app service code under the primary project.

    Example: namespace AdventureWorks.VoiceCommands.

  - Right-click on the app service project name in Solution Explorer and select Properties.

  - On the Library tab, update the Default namespace field with this same value.

    Example: AdventureWorks.VoiceCommands.

  - Create a new class that implements the IBackgroundTask interface. This class requires a Run method, which is the entry point when Cortana recognizes the voice command.

Example: A basic background task class from the Adventure Works app.

Note

The background task class itself, as well as all classes in the background task project, must be sealed public classes.

    namespace AdventureWorks.VoiceCommands
    {
        ...

        /// <summary>
        /// The VoiceCommandService implements the entry point for all voice commands.
        /// The individual commands supported are described in the VCD xml file.
        /// The service entry point is defined in the appxmanifest.
        /// </summary>
        public sealed class AdventureWorksVoiceCommandService : IBackgroundTask
        {
            ...

            /// <summary>
            /// The background task entrypoint.
            ///
            /// Background tasks must respond to activation by Cortana within 0.5 seconds, and must
            /// report progress to Cortana every 5 seconds (unless Cortana is waiting for user
            /// input). There is no running time limit on the background task managed by Cortana,
            /// but developers should use plmdebug (https://msdn.microsoft.com/library/windows/hardware/jj680085%28v=vs.85%29.aspx)
            /// on the Cortana app package in order to prevent Cortana timing out the task during
            /// debugging.
            ///
            /// The Cortana UI is dismissed if Cortana loses focus.
            /// The background task is also dismissed even if being debugged.
            /// Use of Remote Debugging is recommended in order to debug background task behaviors.
            /// Open the project properties for the app package (not the background task project),
            /// and enable Debug -> "Do not launch, but debug my code when it starts".
            /// Alternatively, add a long initial progress screen, and attach to the background task process while it runs.
            /// </summary>
            /// <param name="taskInstance">Connection to the hosting background service process.</param>
            public void Run(IBackgroundTaskInstance taskInstance)
            {
                //
                // TODO: Insert code
                //
            }
        }
    }

Declare your background task as an AppService in the app manifest.

- In Solution Explorer, right-click on the Package.appxmanifest file and select View Code.

- Find the Application element.

- Add an Extensions element to the Application element.

- Add a uap:Extension element to the Extensions element.

- Add a Category attribute to the uap:Extension element and set the value of the Category attribute to windows.appService.

- Add an EntryPoint attribute to the uap:Extension element and set the value of the EntryPoint attribute to the name of the class that implements IBackgroundTask.

  Example: AdventureWorks.VoiceCommands.AdventureWorksVoiceCommandService.

- Add a uap:AppService element to the uap:Extension element.

- Add a Name attribute to the uap:AppService element and set the value of the Name attribute to a name for the app service, in this case AdventureWorksVoiceCommandService.

- Add a second uap:Extension element to the Extensions element.

- Add a Category attribute to this uap:Extension element and set the value of the Category attribute to windows.personalAssistantLaunch.

Example: A manifest from the Adventure Works app.

    <Package>
      <Applications>
        <Application>
          <Extensions>
            <uap:Extension Category="windows.appService"
                           EntryPoint="AdventureWorks.VoiceCommands.AdventureWorksVoiceCommandService">
              <uap:AppService Name="AdventureWorksVoiceCommandService"/>
            </uap:Extension>
            <uap:Extension Category="windows.personalAssistantLaunch"/>
          </Extensions>
        </Application>
      </Applications>
    </Package>

Add this app service project as a reference in the primary project.

- In the primary project, right-click on References.

- Select Add Reference....

- In the Reference Manager dialog, expand Projects and select the app service project.

- Click on the OK button.

Create a VCD File

- In Visual Studio, right-click on your primary project name, select Add > New Item. Add an XML File.

- Type a name for the VCD file.

  Example: AdventureWorksCommands.xml.

- Click on the Add button.

- In Solution Explorer, select the VCD file.

- In the Properties window, set Build action to Content, and then set Copy to output directory to Copy if newer.

Edit the VCD File

- Add a VoiceCommands element with an xmlns attribute pointing to http://schemas.microsoft.com/voicecommands/1.2.

- For each language supported by your app, create a CommandSet element that includes the voice commands supported by your app.

  You can declare multiple CommandSet elements, each with a different xml:lang attribute, so that your app can be used in different markets. For example, an app for the United States might have a CommandSet for English and a CommandSet for Spanish.

  Important

  To activate an app and initiate an action using a voice command, the app must register a VCD file that includes a CommandSet element with a language that matches the speech language selected on the user's device. The speech language is located in Settings > System > Speech > Speech Language.

- Add a Command element for each command you want to support.

  Each Command declared in a VCD file must include this information:

  - A Name attribute that your application uses to identify the voice command at runtime.

  - An Example element that includes a phrase describing how a user invokes the command. Cortana shows the example when the user says What can I say?, Help, or taps See more.

  - A ListenFor element that includes the words or phrases that your app recognizes as a command. Each ListenFor element may contain references to one or more PhraseList elements that contain specific words relevant to the command.

    Note

    ListenFor elements must not be programmatically modified. However, PhraseList elements associated with ListenFor elements may be programmatically modified. Applications should modify the content of the PhraseList element at runtime based on the data set generated as the user uses the app.

    For more information, see Dynamically modify Cortana VCD phrase lists.

  - A Feedback element that includes the text for Cortana to display and speak as the application is launched.

A Navigate element indicates that the voice command activates the app to the foreground. In this example, the showTripToDestination command is a foreground task.

A VoiceCommandService element indicates that the voice command activates the app in the background. The value of the Target attribute of this element should match the value of the Name attribute of the uap:AppService element in the package.appxmanifest file. In this example, the whenIsTripToDestination and cancelTripToDestination commands are background tasks that specify the name of the app service as AdventureWorksVoiceCommandService.

For more detail, see the VCD elements and attributes v1.2 reference.

Example: A portion of the VCD file that defines the en-us voice commands for the Adventure Works app.

    <?xml version="1.0" encoding="utf-8" ?>
    <VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.2">
      <CommandSet xml:lang="en-us" Name="AdventureWorksCommandSet_en-us">
        <AppName> Adventure Works </AppName>
        <Example> Show trip to London </Example>

        <Command Name="showTripToDestination">
          <Example> Show trip to London </Example>
          <ListenFor RequireAppName="BeforeOrAfterPhrase"> show [my] trip to {destination} </ListenFor>
          <ListenFor RequireAppName="ExplicitlySpecified"> show [my] {builtin:AppName} trip to {destination} </ListenFor>
          <Feedback> Showing trip to {destination} </Feedback>
          <Navigate />
        </Command>

        <Command Name="whenIsTripToDestination">
          <Example> When is my trip to Las Vegas?</Example>
          <ListenFor RequireAppName="BeforeOrAfterPhrase"> when is [my] trip to {destination}</ListenFor>
          <ListenFor RequireAppName="ExplicitlySpecified"> when is [my] {builtin:AppName} trip to {destination} </ListenFor>
          <Feedback> Looking for trip to {destination}</Feedback>
          <VoiceCommandService Target="AdventureWorksVoiceCommandService"/>
        </Command>

        <Command Name="cancelTripToDestination">
          <Example> Cancel my trip to Las Vegas </Example>
          <ListenFor RequireAppName="BeforeOrAfterPhrase"> cancel [my] trip to {destination}</ListenFor>
          <ListenFor RequireAppName="ExplicitlySpecified"> cancel [my] {builtin:AppName} trip to {destination} </ListenFor>
          <Feedback> Cancelling trip to {destination}</Feedback>
          <VoiceCommandService Target="AdventureWorksVoiceCommandService"/>
        </Command>

        <PhraseList Label="destination">
          <Item>London</Item>
          <Item>Las Vegas</Item>
          <Item>Melbourne</Item>
          <Item>Yosemite National Park</Item>
        </PhraseList>
      </CommandSet>
    </VoiceCommands>
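The PhraseList in this VCD is static, but as noted earlier its content may be replaced at runtime. The following minimal sketch shows one way to do that with VoiceCommandDefinitionManager.InstalledCommandDefinitions and VoiceCommandDefinition.SetPhraseListAsync. The PhraseListUpdater class and its method name are hypothetical; the command set name and the destination label are taken from the VCD example above, and the sketch assumes the VCD has already been installed.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Windows.ApplicationModel.VoiceCommands;

// Hypothetical helper, for illustration only.
public static class PhraseListUpdater
{
    // Replaces the items of the "destination" phrase list with the
    // destinations currently known to the app.
    public static async Task UpdateDestinationPhraseListAsync(IEnumerable<string> destinations)
    {
        VoiceCommandDefinition commandSet;

        // The key is the Name attribute of the CommandSet element in the VCD.
        if (VoiceCommandDefinitionManager.InstalledCommandDefinitions.TryGetValue(
                "AdventureWorksCommandSet_en-us", out commandSet))
        {
            // The first argument is the Label attribute of the PhraseList element.
            await commandSet.SetPhraseListAsync("destination", destinations);
        }
    }
}
```

A natural place to call a helper like this is wherever the app adds or removes a trip, so that Cortana recognizes the same destinations the app displays.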

Install the VCD Commands

Your app must run once to install the VCD.

Note

Voice command data is not preserved across app installations. To ensure the voice command data for your app remains intact, consider initializing your VCD file each time your app is launched or activated, or maintain a setting that indicates if the VCD is currently installed.

In the app.xaml.cs file:

- Add the following using directive:

      using Windows.Storage;

- Mark the OnLaunched method with the async modifier.

      protected async override void OnLaunched(LaunchActivatedEventArgs e)

- Call the InstallCommandDefinitionsFromStorageFileAsync method in the OnLaunched handler to register the voice commands that should be recognized.

Example: The Adventure Works app defines a StorageFile object, calls the GetFileAsync method to initialize it with the AdventureWorksCommands.xml file, and then passes it to the InstallCommandDefinitionsFromStorageFileAsync method.

    try
    {
        // Install the main VCD.
        StorageFile vcdStorageFile =
            await Package.Current.InstalledLocation.GetFileAsync(
                @"AdventureWorksCommands.xml"
            );

        await Windows.ApplicationModel.VoiceCommands.VoiceCommandDefinitionManager
            .InstallCommandDefinitionsFromStorageFileAsync(vcdStorageFile);

        // Update phrase list.
        ViewModel.ViewModelLocator locator = App.Current.Resources["ViewModelLocator"] as ViewModel.ViewModelLocator;
        if (locator != null)
        {
            await locator.TripViewModel.UpdateDestinationPhraseList();
        }
    }
    catch (Exception ex)
    {
        System.Diagnostics.Debug.WriteLine("Installing Voice Commands Failed: " + ex.ToString());
    }
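The note at the start of this section mentions maintaining a setting that records whether the VCD is installed. The following is a minimal sketch of that alternative, assuming it is acceptable to reinstall the VCD whenever the package version changes. The VcdInstaller class, the EnsureInstalledAsync method, and the VcdInstalledForVersion settings key are hypothetical names chosen for this example. Reinstalling on every launch, as in the example above, remains the simpler option.

```csharp
using System;
using System.Threading.Tasks;
using Windows.ApplicationModel;
using Windows.ApplicationModel.VoiceCommands;
using Windows.Storage;

// Hypothetical helper, for illustration only.
public static class VcdInstaller
{
    // Hypothetical settings key that stores the package version
    // for which the VCD was last installed.
    private const string InstalledVersionKey = "VcdInstalledForVersion";

    public static async Task EnsureInstalledAsync()
    {
        PackageVersion version = Package.Current.Id.Version;
        string currentVersion = string.Format(
            "{0}.{1}.{2}.{3}",
            version.Major, version.Minor, version.Build, version.Revision);

        ApplicationDataContainer settings = ApplicationData.Current.LocalSettings;

        // Skip the install when this package version has already registered its VCD.
        if (settings.Values[InstalledVersionKey] as string == currentVersion)
        {
            return;
        }

        StorageFile vcdFile = await Package.Current.InstalledLocation.GetFileAsync(
            "AdventureWorksCommands.xml");
        await VoiceCommandDefinitionManager.InstallCommandDefinitionsFromStorageFileAsync(vcdFile);

        // Record the version only after the install succeeds.
        settings.Values[InstalledVersionKey] = currentVersion;
    }
}
```

Keying the setting on the package version means an app update that ships a modified VCD triggers a reinstall on the next launch.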

Handle Activation

Specify how your app responds to subsequent voice command activations.

Note

You must launch your app at least once after the voice command sets have been installed.

- Confirm that your app was activated by a voice command.

  Override the Application.OnActivated method and check whether IActivatedEventArgs.Kind is VoiceCommand.

- Determine the name of the command and what was spoken.

  Get a reference to a VoiceCommandActivatedEventArgs object from the IActivatedEventArgs and query the Result property for a SpeechRecognitionResult object.

  To determine what the user said, check the value of Text or the semantic properties of the recognized phrase in the SpeechRecognitionSemanticInterpretation dictionary.

- Take the appropriate action in your app, such as navigating to the desired page.

Note

If you need to refer to your VCD, visit the Edit the VCD File section.

After receiving the speech-recognition result for the voice command, you get the command name from the first value in the RulePath array. Since the VCD file defines more than one possible voice command, you must verify that the value matches the command names in the VCD and take the appropriate action.

The most common action for an application is to navigate to a page with content relevant to the context of the voice command.

Example: Open the trip details page and pass in the value of the voice command, how the command was input, and the recognized destination phrase (if applicable). Alternatively, the app may pass the SpeechRecognitionResult itself as the navigation parameter when navigating to the page.

You can find out whether the voice command that launched your app was actually spoken, or whether it was typed in as text, from the SpeechRecognitionSemanticInterpretation.Properties dictionary using the commandMode key. The value of that key will be either voice or text. If the value of the key is voice, consider using speech synthesis (Windows.Media.SpeechSynthesis) in your app to provide the user with spoken feedback.

Use the SpeechRecognitionSemanticInterpretation.Properties dictionary to find out the content spoken in the PhraseList or PhraseTopic constraints of a ListenFor element. The dictionary key is the value of the Label attribute of the PhraseList or PhraseTopic element.

Example: The following code shows how to access the value of the {destination} phrase.

    /// <summary>
    /// Entry point for an application activated by some means other than normal launching.
    /// This includes voice commands, URI, share target from another app, and so on.
    ///
    /// NOTE:
    /// A previous version of the VCD file might remain in place
    /// if you modify it and update the app through the store.
    /// Activations might include commands from older versions of your VCD.
    /// Try to handle these commands gracefully.
    /// </summary>
    /// <param name="args">Details about the activation method.</param>
    protected override void OnActivated(IActivatedEventArgs args)
    {
        base.OnActivated(args);

        Type navigationToPageType;
        ViewModel.TripVoiceCommand? navigationCommand = null;

        // Voice command activation.
        if (args.Kind == ActivationKind.VoiceCommand)
        {
            // Event args may represent many different activation types.
            // Cast the args so that you only get useful parameters out.
            var commandArgs = args as VoiceCommandActivatedEventArgs;

            Windows.Media.SpeechRecognition.SpeechRecognitionResult speechRecognitionResult = commandArgs.Result;

            // Get the name of the voice command and the text spoken.
            // See VoiceCommands.xml for supported voice commands.
            string voiceCommandName = speechRecognitionResult.RulePath[0];
            string textSpoken = speechRecognitionResult.Text;

            // commandMode indicates whether the command was entered using speech or text.
            // Apps should respect text mode by providing silent (text) feedback.
            string commandMode = this.SemanticInterpretation("commandMode", speechRecognitionResult);

            switch (voiceCommandName)
            {
                case "showTripToDestination":
                    // Access the value of {destination} in the voice command.
                    string destination = this.SemanticInterpretation("destination", speechRecognitionResult);

                    // Create a navigation command object to pass to the page.
                    navigationCommand = new ViewModel.TripVoiceCommand(
                        voiceCommandName,
                        commandMode,
                        textSpoken,
                        destination
                    );

                    // Set the page to navigate to for this voice command.
                    navigationToPageType = typeof(View.TripDetails);
                    break;
                default:
                    // If not able to determine what page to launch, then go to the default entry point.
                    navigationToPageType = typeof(View.TripListView);
                    break;
            }
        }
        // Protocol activation occurs when a card is selected within Cortana (using a background task).
        else if (args.Kind == ActivationKind.Protocol)
        {
            // Extract the launch context. In this case, use the destination from the phrase set (passed
            // along in the background task inside Cortana), which makes no attempt to be unique. A unique id or
            // identifier is ideal for more complex scenarios. The destination page is left to check if the
            // destination trip still exists, and navigate back to the trip list if it does not.
            var commandArgs = args as ProtocolActivatedEventArgs;
            Windows.Foundation.WwwFormUrlDecoder decoder = new Windows.Foundation.WwwFormUrlDecoder(commandArgs.Uri.Query);
            var destination = decoder.GetFirstValueByName("LaunchContext");

            navigationCommand = new ViewModel.TripVoiceCommand(
                "protocolLaunch",
                "text",
                "destination",
                destination
            );

            navigationToPageType = typeof(View.TripDetails);
        }
        else
        {
            // If launched using any other mechanism, fall back to the main page view.
            // Otherwise, the app will freeze at a splash screen.
            navigationToPageType = typeof(View.TripListView);
        }

        // Repeat the same basic initialization as OnLaunched() above, taking into account whether
        // or not the app is already active.
        Frame rootFrame = Window.Current.Content as Frame;

        // Do not repeat app initialization when the Window already has content,
        // just ensure that the window is active.
        if (rootFrame == null)
        {
            // Create a frame to act as the navigation context and navigate to the first page.
            rootFrame = new Frame();
            App.NavigationService = new NavigationService(rootFrame);

            rootFrame.NavigationFailed += OnNavigationFailed;

            // Place the frame in the current window.
            Window.Current.Content = rootFrame;
        }

        // Since the expectation is to always show a details page, navigate even if
        // a content frame is in place (unlike OnLaunched).
        // Navigate to either the main trip list page, or if a valid voice command
        // was provided, to the details page for that trip.
        rootFrame.Navigate(navigationToPageType, navigationCommand);

        // Ensure the current window is active.
        Window.Current.Activate();
    }

    /// <summary>
    /// Returns the semantic interpretation of a speech result.
    /// Returns null if there is no interpretation for that key.
    /// </summary>
    /// <param name="interpretationKey">The interpretation key.</param>
    /// <param name="speechRecognitionResult">The speech recognition result to get the semantic interpretation from.</param>
    /// <returns>The first value for the key, or null if the key is not present.</returns>
    private string SemanticInterpretation(string interpretationKey, SpeechRecognitionResult speechRecognitionResult)
    {
        // Use TryGetValue so that a missing key returns null instead of throwing.
        // Requires System.Collections.Generic and System.Linq.
        IReadOnlyList<string> values;
        return speechRecognitionResult.SemanticInterpretation.Properties.TryGetValue(interpretationKey, out values)
            ? values.FirstOrDefault()
            : null;
    }
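The commandMode value can drive the choice between spoken and silent feedback once the app is in the foreground. The following is a minimal sketch of spoken feedback using Windows.Media.SpeechSynthesis. The VoiceFeedback class and the SpeakIfVoiceAsync method are hypothetical names, and the sketch assumes the page supplies a MediaElement that is part of its visual tree.

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechSynthesis;
using Windows.UI.Xaml.Controls;

// Hypothetical helper, for illustration only.
public static class VoiceFeedback
{
    // Speaks the message only when the command was spoken.
    // Typed commands ("text" mode) receive no audio feedback.
    public static async Task SpeakIfVoiceAsync(string commandMode, string message, MediaElement player)
    {
        if (!string.Equals(commandMode, "voice", StringComparison.OrdinalIgnoreCase))
        {
            return;
        }

        var synthesizer = new SpeechSynthesizer();

        // Convert the text to an audio stream and play it through the supplied element.
        SpeechSynthesisStream stream = await synthesizer.SynthesizeTextToStreamAsync(message);
        player.SetSource(stream, stream.ContentType);
        player.Play();
    }
}
```

A details page could call a helper like this from its navigation handler, passing the command mode carried in the navigation parameter.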

Handle the Voice Command in the App Service

Process the voice command in the app service.

Add the following using directives to your voice command service file.

Example: AdventureWorksVoiceCommandService.cs.

    using Windows.ApplicationModel.VoiceCommands;
    using Windows.ApplicationModel.Resources.Core;
    using Windows.ApplicationModel.AppService;

Take a service deferral so your app service is not terminated while handling the voice command.

Confirm that your background task is running as an app service activated by a voice command.

- Cast the IBackgroundTaskInstance.TriggerDetails to Windows.ApplicationModel.AppService.AppServiceTriggerDetails.

- Check that IBackgroundTaskInstance.TriggerDetails.Name is the name of the app service in the Package.appxmanifest file.

Use IBackgroundTaskInstance.TriggerDetails to create a VoiceCommandServiceConnection to Cortana to retrieve the voice command.

Register an event handler for VoiceCommandServiceConnection.VoiceCommandCompleted to receive notification when the app service is closed due to a user cancellation.

Register an event handler for IBackgroundTaskInstance.Canceled to receive notification when the app service is closed due to an unexpected failure.

Determine the name of the command and what was spoken.

- Use the VoiceCommand.CommandName property to determine the name of the voice command.

- To determine what the user said, check the value of Text or the semantic properties of the recognized phrase in the SpeechRecognitionSemanticInterpretation dictionary.

Take the appropriate action in your app service.

Display and speak the feedback to the voice command using Cortana.

- Determine the strings that you want Cortana to display and speak to the user in response to the voice command and create a VoiceCommandResponse object. For guidance on how to select the feedback strings that Cortana shows and speaks, see Cortana design guidelines.

- Use the VoiceCommandServiceConnection instance to report progress or completion to Cortana by calling ReportProgressAsync or ReportSuccessAsync with the VoiceCommandResponse object.

Note

If you need to refer to your VCD, visit the Edit the VCD File section.

    // In addition to the using directives listed above, this class needs System,
    // System.Collections.Generic, System.Threading.Tasks, and Windows.ApplicationModel.Background.
    public sealed class AdventureWorksVoiceCommandService : IBackgroundTask
    {
        private BackgroundTaskDeferral serviceDeferral;
        private VoiceCommandServiceConnection voiceServiceConnection;

        public async void Run(IBackgroundTaskInstance taskInstance)
        {
            // Take a service deferral so the service isn't terminated.
            this.serviceDeferral = taskInstance.GetDeferral();

            taskInstance.Canceled += OnTaskCanceled;

            var triggerDetails = taskInstance.TriggerDetails as AppServiceTriggerDetails;

            // The name must match the Name attribute of the uap:AppService
            // element in the Package.appxmanifest file.
            if (triggerDetails != null &&
                triggerDetails.Name == "AdventureWorksVoiceCommandService")
            {
                try
                {
                    voiceServiceConnection =
                        VoiceCommandServiceConnection.FromAppServiceTriggerDetails(
                            triggerDetails);

                    voiceServiceConnection.VoiceCommandCompleted += VoiceCommandCompleted;

                    VoiceCommand voiceCommand = await voiceServiceConnection.GetVoiceCommandAsync();

                    switch (voiceCommand.CommandName)
                    {
                        case "whenIsTripToDestination":
                            {
                                var destination = voiceCommand.Properties["destination"][0];
                                // Await the helper so the deferral is not completed
                                // before the response has been sent to Cortana.
                                await SendCompletionMessageForDestination(destination);
                                break;
                            }

                        // As a last resort, launch the app in the foreground.
                        default:
                            await LaunchAppInForeground();
                            break;
                    }
                }
                finally
                {
                    CompleteDeferral();
                }
            }
        }

        private void VoiceCommandCompleted(VoiceCommandServiceConnection sender, VoiceCommandCompletedEventArgs args)
        {
            // Insert your code here.
            CompleteDeferral();
        }

        private void OnTaskCanceled(IBackgroundTaskInstance sender, BackgroundTaskCancellationReason reason)
        {
            // The task was canceled unexpectedly; release the deferral.
            CompleteDeferral();
        }

        // Completes the service deferral at most once.
        private void CompleteDeferral()
        {
            if (this.serviceDeferral != null)
            {
                this.serviceDeferral.Complete();
                this.serviceDeferral = null;
            }
        }

        private async Task SendCompletionMessageForDestination(string destination)
        {
            // Take action and determine when the next trip to destination
            // Insert code here.

            // Replace the hardcoded strings used here with strings
            // appropriate for your application.

            // First, create the VoiceCommandUserMessage with the strings
            // that Cortana will show and speak.
            var userMessage = new VoiceCommandUserMessage();
            userMessage.DisplayMessage = "Here's your trip.";
            userMessage.SpokenMessage = string.Format("Your trip to {0} is on August 3rd.", destination);

            // Optionally, present visual information about the answer.
            // For this example, create a VoiceCommandContentTile with an
            // icon and a string.
            var destinationsContentTiles = new List<VoiceCommandContentTile>();

            var destinationTile = new VoiceCommandContentTile();
            destinationTile.ContentTileType = VoiceCommandContentTileType.TitleWith68x68IconAndText;

            // The user taps on the visual content to launch the app.
            // Pass in a launch argument to enable the app to deep link to a
            // page relevant to the item displayed on the content tile.
            destinationTile.AppLaunchArgument = string.Format("destination={0}", destination);
            destinationTile.Title = destination;
            destinationTile.TextLine1 = "August 3rd 2015";
            destinationsContentTiles.Add(destinationTile);

            // Create the VoiceCommandResponse from the userMessage and list
            // of content tiles.
            var response = VoiceCommandResponse.CreateResponse(userMessage, destinationsContentTiles);

            // Cortana displays a "Go to app_name" link that the user
            // taps to launch the app.
            // Pass in a launch argument to enable the app to deep link to a page
            // relevant to the voice command.
            response.AppLaunchArgument = string.Format("destination={0}", destination);

            // Ask Cortana to display the user message and content tile and
            // also speak the user message.
            await voiceServiceConnection.ReportSuccessAsync(response);
        }

        private async Task LaunchAppInForeground()
        {
            var userMessage = new VoiceCommandUserMessage();
            userMessage.SpokenMessage = "Launching Adventure Works";

            var response = VoiceCommandResponse.CreateResponse(userMessage);

            // When launching the app in the foreground, pass an app
            // specific launch parameter to indicate what page to show.
            response.AppLaunchArgument = "showAllTrips=true";

            await voiceServiceConnection.RequestAppLaunchAsync(response);
        }
    }

Once activated, the app service has 0.5 second to call ReportSuccessAsync. Cortana shows and says a feedback string.

Note

You can declare a Feedback string in the VCD file. The string does not affect the UI text displayed on the Cortana canvas; it only affects the text spoken by Cortana.

If the app takes longer than 0.5 seconds to make the call, Cortana inserts a hand-off screen. Cortana displays the hand-off screen until the application calls ReportSuccessAsync, or for up to 5 seconds. If the app service does not call ReportSuccessAsync, or any of the VoiceCommandServiceConnection methods that provide Cortana with information, the user receives an error message and the app service is canceled.
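When the work behind a command may exceed these limits, one option is to call ReportProgressAsync before starting it, so that Cortana has something to show while the app service is busy. The following is a minimal sketch. The ProgressReporter class, its method name, and the message text are hypothetical.

```csharp
using System;
using System.Threading.Tasks;
using Windows.ApplicationModel.VoiceCommands;

// Hypothetical helper, for illustration only.
public static class ProgressReporter
{
    // Sends a progress message to Cortana before a potentially slow lookup.
    public static async Task ReportLookupProgressAsync(
        VoiceCommandServiceConnection connection, string destination)
    {
        string text = string.Format("Looking for your trip to {0}", destination);

        var userMessage = new VoiceCommandUserMessage();
        userMessage.DisplayMessage = text;
        userMessage.SpokenMessage = text;

        VoiceCommandResponse response = VoiceCommandResponse.CreateResponse(userMessage);

        // Call this before the slow work, then call ReportSuccessAsync
        // (or another completion method) when the result is ready.
        await connection.ReportProgressAsync(response);
    }
}
```

In the service shown earlier, a call like this would sit at the start of the whenIsTripToDestination case, before the trip data is retrieved.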
