Exercise - Image guardrails

Contoso Camping Store lets customers upload photos to complement their product reviews. Customers find this feature useful because the photos show how products look and function outside the generic marketing images.

You can apply an AI model to detect whether the images that customers post are harmful, and then use the detection results to take the necessary precautions, such as blocking harmful images.

Safe content

First, test an image of a family camping:

- On the Content Safety page, select Moderate image content.

- Select Browse for a file and upload the family-builds-campfire.JPG file from the data/Image Moderation folder.

- Set all Threshold level values to Medium.

- Select Run test.

As expected, the image is Allowed. The severity level is Safe in every category.
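The Threshold level settings decide how per-category severities turn into an Allowed or Blocked result. The following Python sketch illustrates that logic under two assumptions that this exercise doesn't state: image severities use a 0/2/4/6 scale (Safe, Low, Medium, High), and a category blocks the image when its severity is at or above the selected threshold. The function and variable names are hypothetical, and the campfire result is hard-coded rather than fetched from the service.

```python
# Assumed image severity scale: Safe=0, Low=2, Medium=4, High=6.
SEVERITY = {"Safe": 0, "Low": 2, "Medium": 4, "High": 6}
CATEGORIES = ("Hate", "SelfHarm", "Sexual", "Violence")


def moderate(severities: dict[str, int], thresholds: dict[str, str]) -> tuple[str, list[str]]:
    """Return ("Allowed" or "Blocked", rejecting categories).

    Assumption: a category rejects the image when its reported severity is
    at or above the severity of that category's Threshold level.
    """
    rejected = [
        category
        for category in CATEGORIES
        if severities.get(category, 0) >= SEVERITY[thresholds[category]]
    ]
    return ("Blocked" if rejected else "Allowed"), rejected


# Every Threshold level set to Medium, as in the steps above.
medium = {category: "Medium" for category in CATEGORIES}

# Hypothetical result for the campfire photo: Safe in every category.
campfire = {category: SEVERITY["Safe"] for category in CATEGORIES}
print(moderate(campfire, medium))  # ('Allowed', [])
```

With the same Medium thresholds, a Violence severity of 6 (High) makes `moderate` return `("Blocked", ["Violence"])`.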

Violent content

Anticipate that some customers might post harmful images. To account for this scenario, test how the model detects harmful image content.

Note

The test image contains a graphic depiction of a bear attack. It's blurred by default in the image preview, but you can use the Blur image toggle to change this setting.

- Select Browse for a file and upload the bear-attack-blood.JPG file.

- Set all Threshold level values to Medium.

- Select Run test.

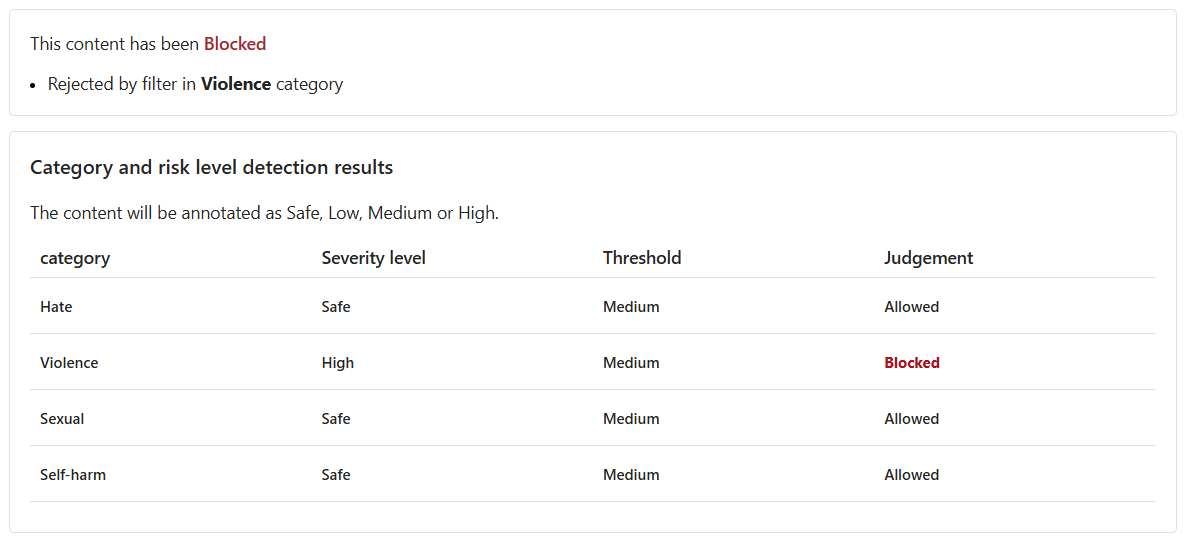

The content is Blocked. The Violence filter rejected it with a severity level of High.
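Content Safety Studio calls the image analysis service for you. If you later want to run the same check from code, the following Python sketch shows one way to do it with the Azure AI Content Safety client library. It assumes the `azure-ai-contentsafety` package (1.x) is installed; the environment variable names `CONTENT_SAFETY_ENDPOINT` and `CONTENT_SAFETY_KEY` and both helper names are hypothetical. The 0/2/4/6 severity scale and the at-or-above blocking rule are also assumptions rather than something this exercise states.

```python
import os


def analyze_image_file(path: str) -> dict[str, int]:
    """Send a local image to Content Safety and return {category: severity}.

    Assumes azure-ai-contentsafety 1.x is installed and that the endpoint and
    key live in the hypothetical CONTENT_SAFETY_ENDPOINT / CONTENT_SAFETY_KEY
    environment variables.
    """
    # Imported inside the function so the pure helper below works without the SDK.
    from azure.ai.contentsafety import ContentSafetyClient
    from azure.ai.contentsafety.models import AnalyzeImageOptions, ImageData
    from azure.core.credentials import AzureKeyCredential

    client = ContentSafetyClient(
        os.environ["CONTENT_SAFETY_ENDPOINT"],
        AzureKeyCredential(os.environ["CONTENT_SAFETY_KEY"]),
    )
    with open(path, "rb") as image_file:
        request = AnalyzeImageOptions(image=ImageData(content=image_file.read()))
    response = client.analyze_image(request)

    # One entry per harm category (Hate, SelfHarm, Sexual, Violence).
    # Normalize enum members to their string values, such as "Violence".
    return {
        getattr(item.category, "value", item.category): item.severity
        for item in response.categories_analysis
    }


def blocked_categories(severities: dict[str, int], threshold: int = 4) -> list[str]:
    """Return the categories whose severity is at or above the threshold.

    Assumes a 0/2/4/6 (Safe/Low/Medium/High) image severity scale, so the
    default of 4 stands in for the Medium threshold used in this exercise.
    """
    return sorted(
        category
        for category, severity in severities.items()
        if severity is not None and severity >= threshold
    )


# Usage (needs a Content Safety resource and network access):
#   found = blocked_categories(analyze_image_file("bear-attack-blood.JPG"))
#   print("Blocked" if found else "Allowed", found)
```

The SDK import sits inside `analyze_image_file` so that the threshold rule in `blocked_categories` can be tried on hard-coded severities without credentials.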

Run a bulk test

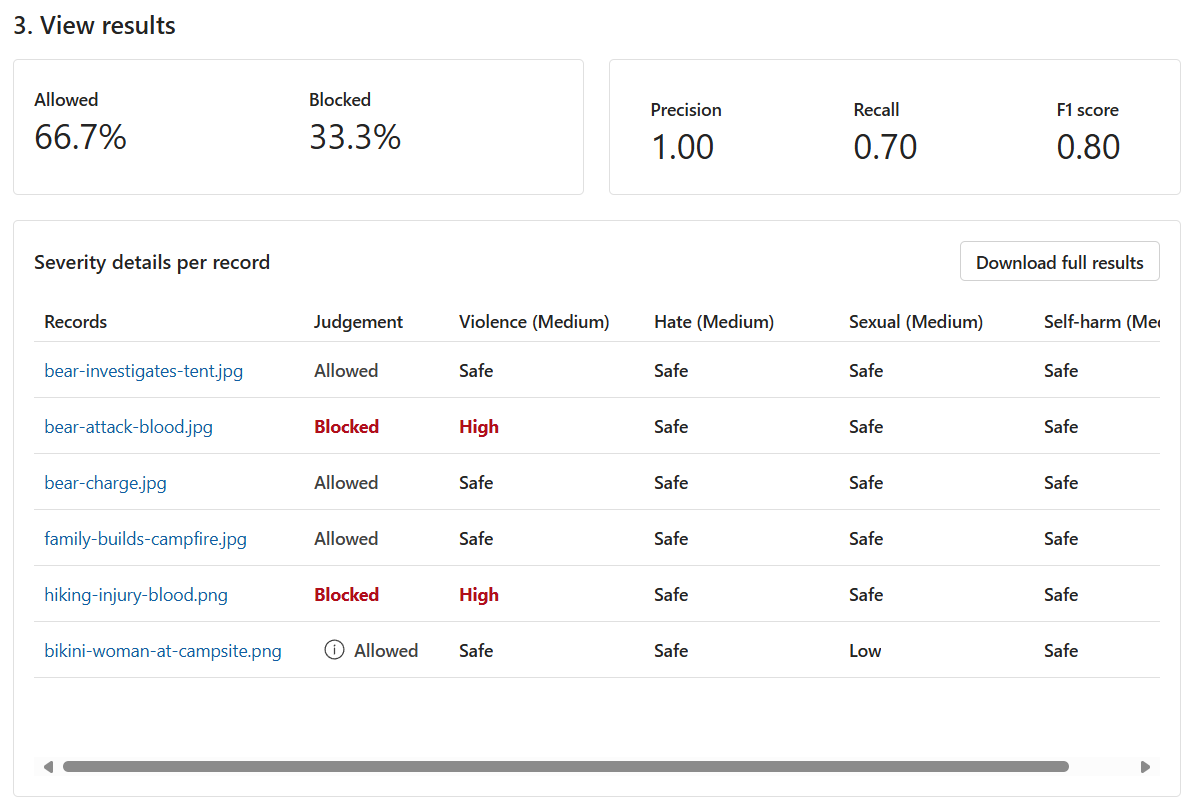

So far, you've tested individual images. You can also test a bulk dataset of images all at once and receive metrics that describe the model's performance.

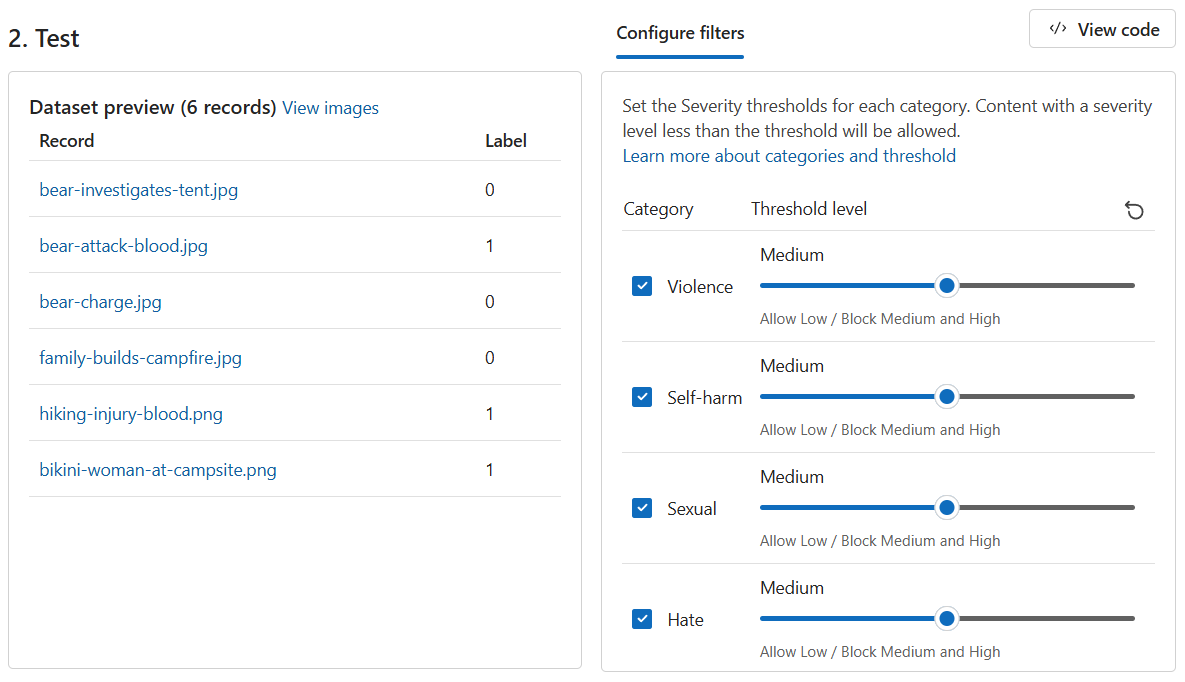

You have a bulk dataset of images from customers. The dataset also includes sample harmful images to test the model's ability to detect harmful content. Each record in the dataset includes a label to indicate whether the content is harmful.

Do another test round, but this time with the dataset:

- Switch to the Run a bulk test tab.

- Select Browse for a file and upload the bulk-image-moderation-dataset.zip file.

- In the Dataset preview section, browse through the records and their labels. A label of 0 indicates that the content is acceptable (not harmful). A label of 1 indicates that the content is unacceptable (harmful).

- Set all Threshold level values to Medium.

- Select Run test.

- Examine the results.

Is there room for improvement? If so, adjust the Threshold level values and rerun the test until the Precision, Recall, and F1 score metrics are closer to 1.
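To show what Precision, Recall, and F1 score measure and why moving the threshold trades one against another, here's a small Python sketch. It treats a label of 1 as harmful, as the dataset does, and assumes a record is predicted harmful when its highest category severity is at or above the threshold (the Low=2, Medium=4, High=6 mapping is an assumption). The labels and severities below are made up; they aren't results from the exercise dataset.

```python
from dataclasses import dataclass

# Assumed mapping of Threshold level to the lowest severity that gets flagged.
LEVELS = {"Low": 2, "Medium": 4, "High": 6}


@dataclass
class Metrics:
    precision: float
    recall: float
    f1: float


def score(labels: list[int], predictions: list[int]) -> Metrics:
    """Compute precision, recall, and F1 for binary labels (1 = harmful)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)  # harmful, flagged
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # acceptable, flagged
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # harmful, missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Metrics(precision, recall, f1)


def sweep(labels: list[int], max_severities: list[int]) -> dict[str, Metrics]:
    """Score every threshold level; flag a record when its highest
    category severity is at or above that level's value."""
    return {
        level: score(labels, [1 if s >= value else 0 for s in max_severities])
        for level, value in LEVELS.items()
    }


# Made-up example: five records with their labels and highest severity.
labels = [0, 0, 1, 1, 1]
max_severities = [0, 2, 4, 6, 2]
for level, m in sweep(labels, max_severities).items():
    print(level, round(m.precision, 2), round(m.recall, 2), round(m.f1, 2))
# Low 0.75 1.0 0.86
# Medium 1.0 0.67 0.8
# High 1.0 0.33 0.5
```

In general, a lower threshold flags more images, which raises recall but can lower precision by flagging acceptable images; a higher threshold does the opposite. The F1 score balances the two, so it's a convenient single number to watch while you adjust the Threshold levels.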