What is vector search?

Vector search is a capability in Azure AI Search for indexing, storing, and retrieving vector embeddings from a search index. You can use it to power applications that implement the Retrieval Augmented Generation (RAG) architecture, similarity and multi-modal searches, or recommendation engines.

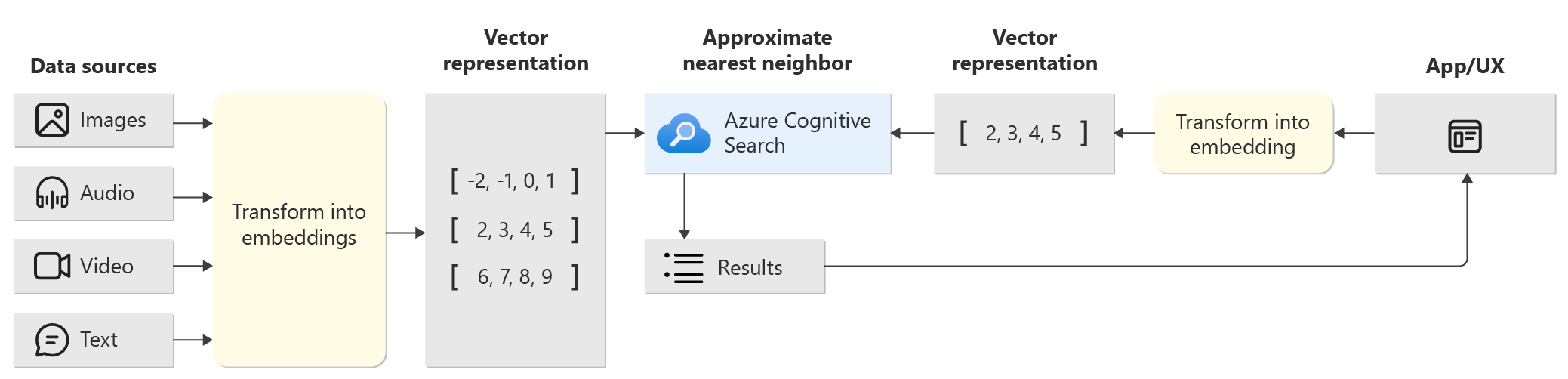

Below is an illustration of the indexing and query workflows for vector search.

A vector query can match criteria across different types of source data because the content is represented mathematically, as embeddings generated by machine learning models. This overcomes the limitations of text-based search by returning relevant results based on the intent of the query.
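To make the idea of matching on a mathematical representation concrete, here is a minimal sketch of similarity ranking. It assumes cosine similarity as the metric and uses tiny hand-written three-dimensional vectors in place of real model embeddings; the document IDs, the vectors, and the `vector_search` helper are all hypothetical and are not part of any Azure AI Search API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, treat it as unrelated
    return dot / (norm_a * norm_b)


def vector_search(query_vector, documents, k=2):
    """Rank documents by similarity to the query and return the top k."""
    scored = [
        (doc_id, cosine_similarity(query_vector, vector))
        for doc_id, vector in documents.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
DOCUMENTS = {
    "doc-dog": [0.9, 0.1, 0.0],
    "doc-puppy": [0.8, 0.2, 0.1],
    "doc-invoice": [0.0, 0.1, 0.9],
}
QUERY = [0.85, 0.15, 0.05]  # stands in for an encoded query such as "canine"

if __name__ == "__main__":
    for doc_id, score in vector_search(QUERY, DOCUMENTS):
        print(f"{doc_id}: {score:.3f}")
```

The two pet-related documents rank above the invoice even though the query shares no keywords with them, which is the behavior the paragraph above describes.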

When to use vector search

Here are some scenarios where you should use vector search:

- Use OpenAI or open-source models to encode text, and use queries encoded as vectors to retrieve documents.

- Do a similarity search across encoded images, text, video and audio, or a mixture of these (multi-modal).

- Represent documents in different languages using a multilingual embedding model to find documents in any language.

- Build hybrid searches that combine vector fields and searchable text fields. This is possible because vector search is implemented at the field level. The results are merged and returned as a single response.

- Apply filters to text and numeric fields and include them in your query to reduce the amount of data that your vector search needs to process.

- Create a vector database to provide an external knowledge base or to serve as long-term memory.
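The hybrid and filter scenarios above can be sketched in a few lines. The text doesn't say how the two result sets are merged, so this example assumes Reciprocal Rank Fusion (RRF), a commonly used rank-merging technique, with the conventional constant `k=60`. The `hybrid_merge` function, the document IDs, and the rankings are hypothetical and only illustrate the idea.

```python
def hybrid_merge(text_ranking, vector_ranking, allowed_ids=None, k=60):
    """Merge two ranked lists of document IDs into one using RRF.

    allowed_ids, if given, plays the role of a filter: documents outside
    the set are dropped before ranks are assigned.
    """
    rankings = [text_ranking, vector_ranking]
    if allowed_ids is not None:
        rankings = [[d for d in ranking if d in allowed_ids] for ranking in rankings]

    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # A document gains more score the higher it ranks in each list.
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)

    # Highest fused score first; ties are broken by document ID.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical rankings from a keyword query and a vector query.
TEXT_RANKING = ["a", "b", "c"]
VECTOR_RANKING = ["b", "d", "a"]

if __name__ == "__main__":
    print(hybrid_merge(TEXT_RANKING, VECTOR_RANKING))
    print(hybrid_merge(TEXT_RANKING, VECTOR_RANKING, allowed_ids={"a", "c", "d"}))
```

Documents that appear in both lists accumulate score from each, so they tend to rise to the top of the merged response.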

Limitations

Note the following limitations when using vector search:

- You'll need to provide the embeddings yourself, using Azure OpenAI or a similar open-source solution, because Azure AI Search doesn't generate them for your content.

- Customer-managed keys (CMK) aren't supported.

- Storage limits apply, so check what your service quota provides.
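When checking a storage quota, a rough lower bound on the raw vector data can help. The sketch below assumes each dimension is stored as a 32-bit float (4 bytes) and ignores any index overhead, so actual usage would be higher. The function name and the example figures are hypothetical.

```python
def estimate_vector_bytes(document_count, dimensions, bytes_per_dimension=4):
    """Estimate raw vector storage, assuming one vector per document.

    bytes_per_dimension=4 assumes 32-bit floats; index structures and
    other fields are not included.
    """
    if document_count < 0 or dimensions < 0:
        raise ValueError("counts must be non-negative")
    return document_count * dimensions * bytes_per_dimension


if __name__ == "__main__":
    # Hypothetical workload: one million documents, 1,536 dimensions each.
    size = estimate_vector_bytes(1_000_000, 1536)
    print(f"{size / 1024**3:.2f} GiB of raw vector data")
```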

Note

If your documents are large, consider chunking them. For more information, see the "Chunking large documents for vector search solutions in AI Search" documentation.
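As a minimal sketch of what chunking can look like, the function below splits text into fixed-size word windows with a small overlap so that context isn't lost at chunk boundaries. Fixed-size chunking with overlap is only one possible strategy, and the `chunk_text` name, the word-based sizing, and the default values are assumptions made for illustration.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words. Each chunk would then be
    embedded and indexed as its own document.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(sample, chunk_size=4, overlap=1):
        print(chunk)
```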