Understand when to fine-tune a language model

Before you start fine-tuning a model, you need to have a clear understanding of what fine-tuning is and when you should use it.

When you develop a chat application with Azure AI Foundry, you can use prompt flow to integrate a language model that generates the responses. To improve the quality of those responses, you can try various strategies. The easiest is prompt engineering: you can change how you phrase your question, and you can also update the system message that is sent to the language model along with the prompt.

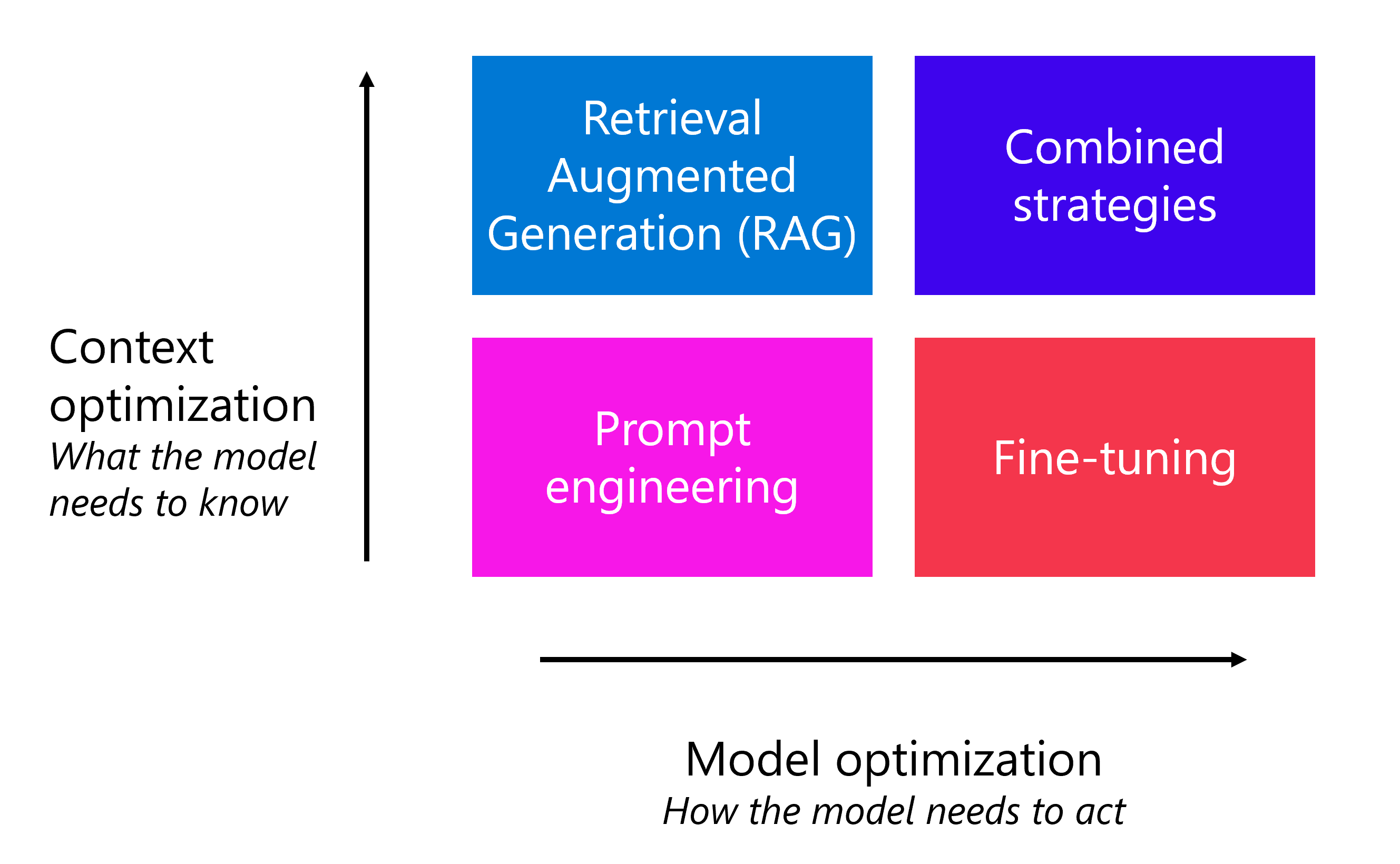

Prompt engineering is a quick and easy way to improve how the model acts and what the model needs to know. When you want to improve the quality of the responses even further, two techniques are commonly used:

- Retrieval Augmented Generation (RAG): Ground the model's responses in your data by first retrieving context from a data source before generating a response.

- Fine-tuning: Train a base language model further on your own dataset before integrating it in your application.

RAG is most commonly applied when you need the model's responses to be factual and grounded in specific data. For example, you want customers to be able to ask questions about the hotels in your travel booking catalog. Fine-tuning, on the other hand, helps when you want the model to behave a certain way. You can also combine optimization strategies, like RAG and a fine-tuned model, to improve your language application.
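To make the RAG idea concrete, here's a minimal sketch of the retrieve-then-generate pattern in plain Python. It's an illustration under stated assumptions, not how Azure AI Foundry implements retrieval: the catalog entries, the `retrieve` and `build_grounded_messages` helpers, and the word-overlap scoring are all hypothetical, and a real application would typically query a search index instead. The messages use the common `role`/`content` chat format.

```python
import re

# Hypothetical catalog entries; a real application would query a search index.
HOTEL_CATALOG = [
    "Harbor View: waterfront rooms, free breakfast, pets allowed.",
    "Mountain Lodge: ski-in access, spa, no pets.",
    "City Center Inn: near the train station, budget rooms, free Wi-Fi.",
]


def tokenize(text):
    """Split text into a set of lowercase words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by the number of words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_messages(question, documents):
    """Place the retrieved context in the system message, then add the question."""
    context = "\n".join(retrieve(question, documents))
    system_message = (
        "Answer using only the context below. "
        "If the answer isn't in the context, say you don't know.\n\n"
        "Context:\n" + context
    )
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": question},
    ]


messages = build_grounded_messages("Which hotel has a spa?", HOTEL_CATALOG)
```

The resulting `messages` list is what you would send to the language model. The model itself is unchanged; only the context it receives differs per question.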

How the model needs to act mostly relates to the style, format, and tone of its responses. When you want the model to adhere to a specific style and format, you can instruct it to do so through prompt engineering too. Sometimes, however, prompt engineering doesn't lead to consistent results: the model can still ignore your instructions and behave differently.

Within prompt engineering, one technique to "force" the model to generate output in a specific format is to provide examples of the desired output, known as one-shot (one example) or few-shot (a few examples) prompting. Even then, the model doesn't always generate output in the style and format you specified.
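As a rough sketch of few-shot prompting, the examples can be passed as earlier user and assistant turns ahead of the real question. The helper name `build_few_shot_messages`, the system message, and the sample questions and answers below are hypothetical and only illustrate the pattern.

```python
def build_few_shot_messages(system_message, examples, user_question):
    """Build a chat message list with example turns placed before the real question."""
    messages = [{"role": "system", "content": system_message}]
    for question, answer in examples:
        # Each example is shown to the model as a completed exchange.
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_question})
    return messages


# Hypothetical examples that demonstrate the desired style and format.
examples = [
    ("Do you have hotels in Paris?", "Yes! Top pick: Hotel Lumiere. Want to see more options?"),
    ("Is breakfast included?", "Yes! Top pick: the full buffet plan. Want to see more options?"),
]

messages = build_few_shot_messages(
    "You are a travel assistant. Keep answers short and end with a follow-up question.",
    examples,
    "Can I bring my dog?",
)
```

With one entry in `examples` this is a one-shot prompt; with several it's few-shot. Every example adds to the length of each request, which is one reason to limit how many you include.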

To maximize the consistency of the model's behavior, you can fine-tune a base model with your own training data.
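Training data for fine-tuning a chat model is commonly prepared as a JSON Lines file, where each line holds one example conversation that demonstrates the behavior you want. The sketch below assumes that chat-style format (a `messages` list per line); the helper name `to_training_lines`, the system message, and the sample conversations are hypothetical, and you should check the exact format your chosen base model requires.

```python
import json

# Hypothetical system message and conversations showing the desired tone and format.
SYSTEM_MESSAGE = "You are a travel assistant. Keep answers short and end with a follow-up question."

conversations = [
    ("Do you have hotels in Paris?", "Yes! Top pick: Hotel Lumiere. Want to see more options?"),
    ("Is breakfast included?", "Yes! Top pick: the full buffet plan. Want to see more options?"),
]


def to_training_lines(system_message, conversations):
    """Convert (question, answer) pairs to one JSON object per line."""
    lines = []
    for question, answer in conversations:
        record = {
            "messages": [
                {"role": "system", "content": system_message},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record))
    return lines


training_lines = to_training_lines(SYSTEM_MESSAGE, conversations)
jsonl_text = "\n".join(training_lines)  # Contents you would save to a .jsonl file.
```

Unlike few-shot prompting, these examples are used during training rather than sent with every request, so the desired style becomes part of the model's behavior.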