Examine the Microsoft approach to Responsible AI

As Artificial Intelligence (AI) transforms our lives, the technology industry must collectively define new rules, norms, and practices for the use and impact of this technology. In 2017, Microsoft developed Version 1 of its Responsible AI Standard. This document defined the company's principles and approach to AI development and use. The company hoped these guidelines would help ensure that society uses AI technology ethically and puts people first.

In June 2022, Microsoft released Version 2 of the Responsible AI Standard. This version is the product of a multi-year effort to define product development requirements for Responsible AI. Microsoft made this second version of the Responsible AI Standard publicly available to:

- Share what it learned.

- Invite feedback from others.

- Contribute to the discussion about building better norms and practices around AI.

This Standard remains a living document, evolving to address new research, technologies, laws, and learnings from within and outside the company.

What is Responsible AI?

Responsible AI is an approach to developing, assessing, and deploying artificial intelligence systems in a safe, trustworthy, and ethical way. AI systems are the product of many decisions made by the people who develop and deploy them. From system purpose to how people interact with AI systems, Responsible AI can help proactively guide these decisions toward more beneficial and equitable outcomes. That means keeping people and their goals at the center of system design decisions and respecting enduring values like fairness, reliability, and transparency.

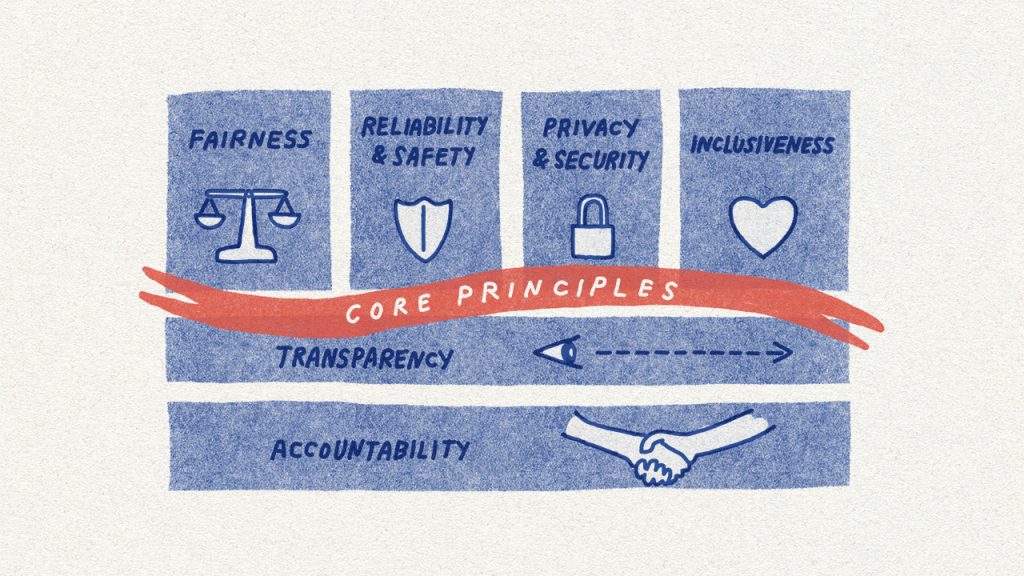

Toward that end, Microsoft developed its Responsible AI Standard to guide its AI journey. This Standard is Microsoft's framework for building AI systems according to six principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. For Microsoft, these principles are the cornerstone of a responsible and trustworthy approach to AI, especially as intelligent technology becomes more prevalent in products and services that people use every day.

Microsoft intended its Responsible AI Standard to provide guidance not just for its own engineers, but for all AI creators. The principles outlined in its Standard represent best practices for the responsible development and use of AI by all individuals and organizations. Microsoft made the Standard open source and free for anyone to adopt. It actively encourages other companies and organizations to use it, not just internal teams within Microsoft. Microsoft also participates broadly in AI ethics dialogues, research, and policy initiatives. A standard developed only for internal use would run counter to the company's public advocacy for Responsible AI across the industry.

Microsoft's approach to Responsible AI

Microsoft bases its own actions on:

- Its Ethical AI principles

- Its Responsible AI Standard

- Decades of research on AI, grounding, and privacy-preserving machine learning

It assembled a multidisciplinary team of researchers, engineers, and policy experts to review its AI systems for potential harms and mitigations. Microsoft has chartered this team with:

- Refining training data.

- Filtering to limit harmful content, including blocking queries and results on sensitive subjects.

- Applying Microsoft technologies like InterpretML and Fairlearn to help detect and correct data bias.
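
To make the idea of detecting bias more concrete, the following is a minimal sketch of one common fairness check: comparing how often a model gives a positive prediction to each group. It doesn't use the Fairlearn or InterpretML APIs. The function names, the sample predictions, and the group labels are all hypothetical and exist only for illustration. Tools such as Fairlearn report metrics of this general kind, but their actual interfaces and capabilities are documented separately.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Return the gap between the highest and lowest group selection rates.

    A value of 0.0 means every group receives positive predictions at the
    same rate. Larger values indicate a larger disparity.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved, 0 = declined) and the
# hypothetical group each prediction belongs to.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)

print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

In this made-up example, group "a" receives a positive prediction three times as often as group "b". A gap like this doesn't prove that a system is unfair, but it flags a disparity that a review team would want to investigate and, where appropriate, mitigate.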

Microsoft's goal is to make it clear how its AI systems make decisions. It does so by noting limitations, linking to sources, and prompting users to review, fact-check, and adjust content based on subject-matter expertise.

Microsoft also wants to help its customers use its AI products responsibly, share its learnings, and build trust-based partnerships. For these new services, it wants to provide its customers with information about the intended uses, capabilities, and limitations of its AI platform service, so that they have the knowledge necessary to make responsible deployment choices. It also wants to share resources and templates with developers inside enterprises and with independent software vendors (ISVs), to help them build effective, safe, and transparent AI solutions.

Knowledge check

Choose the best response for the following question.