Considerations when working with AI

Generative AI is relatively new to most people. Schools are making important decisions about how educators and learners should best approach this rapidly evolving technology.

School policies and guidelines

Before using AI with learners, be sure to check your school's policy or guidelines to determine your approach. These guidelines might include:

- Guardian permissions to use generative AI

- Acceptable and unacceptable uses of generative AI models

- Citation requirements when using generative AI

- Academic honor policy

When you’re using a generative AI platform with learners, be sure to give clear instructions for each assignment or assessment about what’s acceptable and what isn’t. Schools can find more information in the AI Toolkit.

Digital equity

Learners with internet access at home can use this powerful tool for assignments and assessments. Learners without internet access can’t use the same tool, so the digital divide, or digital inequity, could continue to widen. Providing opportunities to use generative AI tools in class ensures that all learners have access to this powerful tool. It also helps build skills that enhance a learner's productivity and efficiency in school and beyond.

Bias and fabrications

A large language model (LLM) generates new content based on patterns learned from its training data. The information it generates can be false; this kind of false output is known as a fabrication. The person consuming the information is responsible for determining whether it's factual, which requires critical thinking. Copilot in Edge provides source websites that the user can read laterally to verify the AI’s accuracy. This feature is one way Microsoft supports responsible use of AI.

Generative AI tools might generate biased outputs. Bias can occur because many AI models are trained on information that comes mostly from Western-centric datasets, and algorithms don’t always account for this imbalance. AI outputs that display discriminatory views can perpetuate unfair beliefs about underrepresented groups of people.

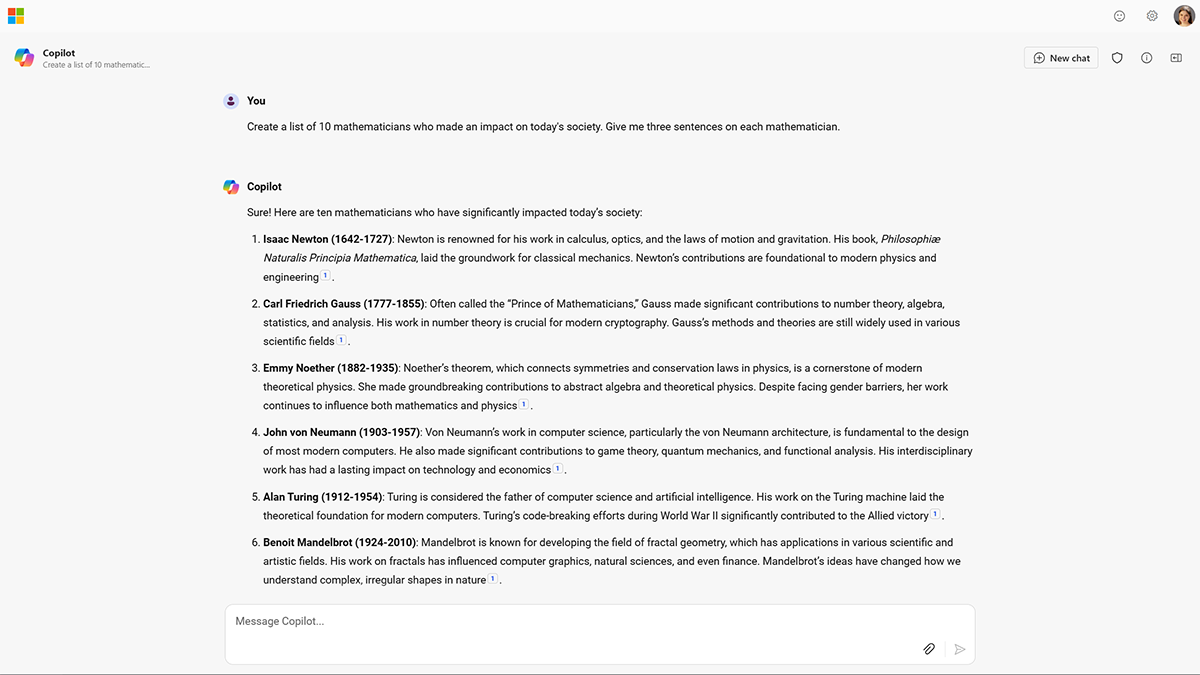

A learner is about to begin a research presentation on famous mathematicians who impacted the world. The educator allows AI to be used in the ideation portion of the assignment. The learner launches Microsoft Copilot and writes the prompt "Create a list of 10 mathematicians who made an impact on today's society. Give me three sentences on each mathematician." Copilot begins to generate the list.

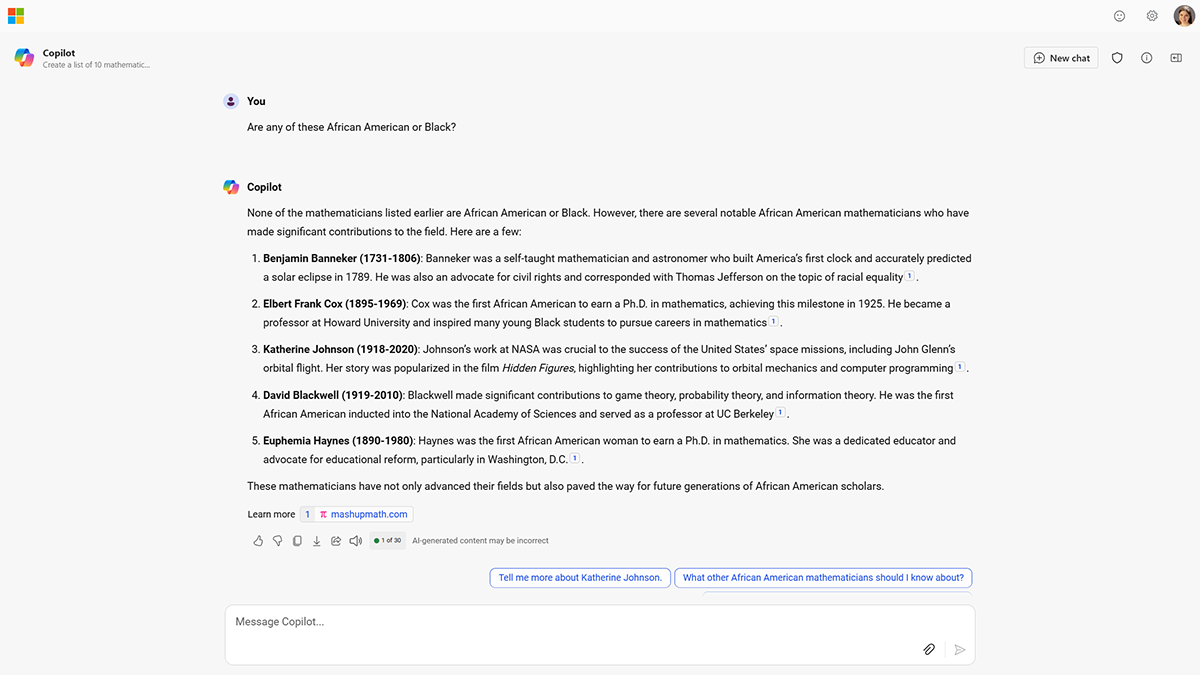

Of the 10 names that Copilot generates, three are women, and none are Black or African American. Because lessons in class prepared the learner to recognize this kind of bias, they respond with a follow-up prompt: "Are any of these African American or Black?" Copilot generates a list of five African American or Black mathematicians.

Bias is a persistent problem in generative AI, and addressing it requires active effort from developers. Thinking critically and checking AI-generated content for fairness helps in the journey toward responsible AI use. Microsoft is addressing this issue through several measures, including ensuring that the data its LLMs learn from is inclusive.

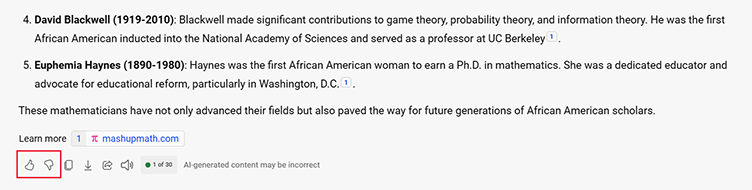

Tip

To give Copilot feedback on a response, select the thumbs-up or thumbs-down button at the end of the response. Selecting the thumbs-down button opens a feedback window where you can provide details.

Data and privacy

Microsoft is committed to advancing AI responsibly. When designing Copilot, Microsoft built in fact-checking tools that show the sources that generated content comes from. Users should be aware of the data and privacy policies of the AI tools they’re using. As a reminder, always safeguard sensitive or private information.