Develop data transformation

Data management is an important feature to help you asynchronously import or export data with other systems. The Data entity element in the Application Object Tree (AOT) runs the data transfer operations. As part of the export or import process, you're required to perform intermediate data transformation to prepare the data for the target application. You can transform data through configuration. Additionally, options are available to help you develop code to perform complex transformations.

Transformation using configuration

Finance and operations apps has a concept of staging tables between the source and the destination tables. From the source, data is inserted or updated into the staging table and then transferred to the destination. You can transform the data before it's inserted into the staging table. Data transformation is also possible while data is being transferred to the destination from staging.

Data transformation between the external data source and staging table

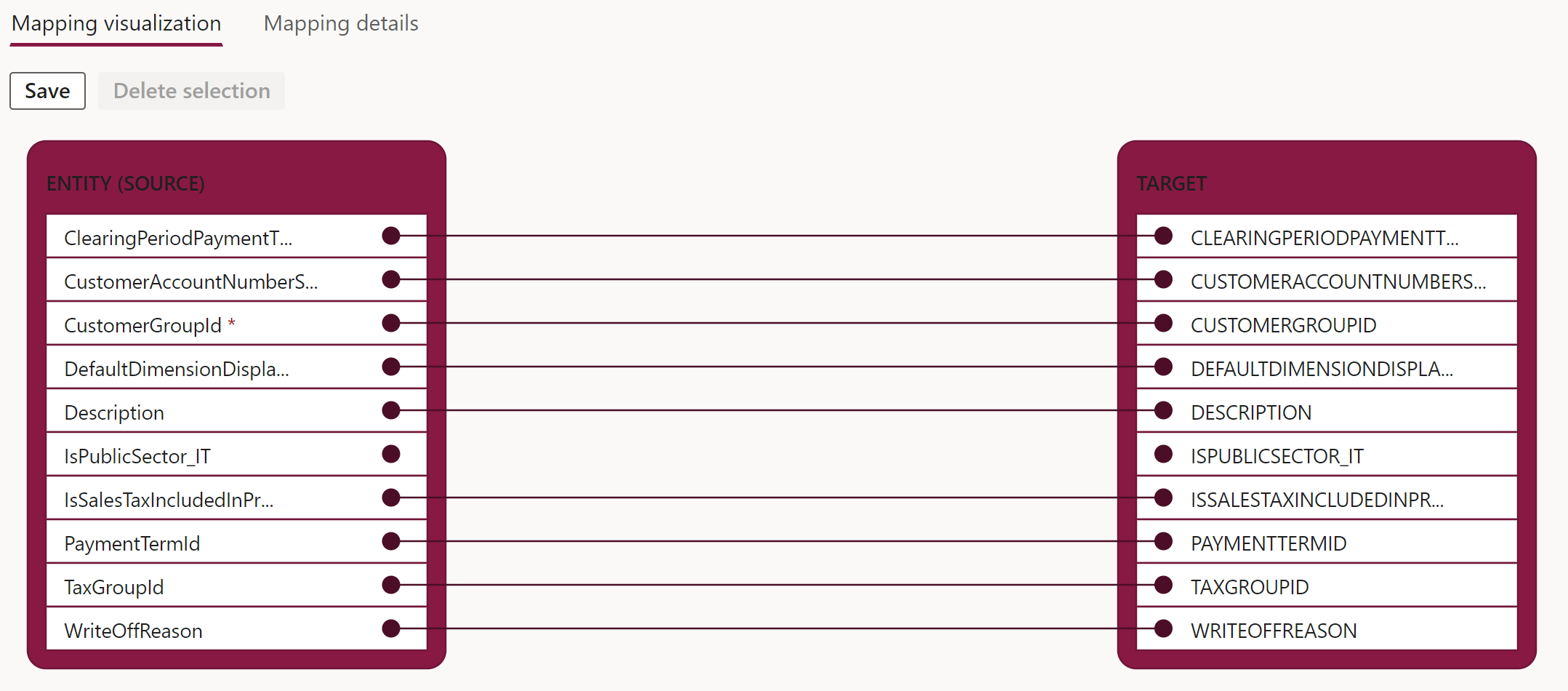

When a new Export or Import project is created in Data management, an icon will appear in the project line in the View map column. When you select this icon, the mapping visualization between the staging table and the external data source appears, as shown in the following screenshot. The Mapping details tab, next to the Mapping visualization tab, provides the source-to-target field mapping details and an option for basic transformation of the data.

The functionalities that you can achieve from the Mapping details page include:

Ignore blank values - If a requirement is in place to ignore a record if a specific field value is blank, then you should use this feature.

Text qualifier - Requirements are in place, such as the data transfer file needs to contain a column of type string and the value of that column might contain a comma, which is also the column delimiter. For example, your organization names include commas, and the value that you're using for a column delimiter is also a comma. You can use a text qualifier to resolve this conflict, which will add double quotes to identify the Text column.

Use enum label - This option is a check box, which you can select to use the enum label in the data transfer file.

Auto-generated - Some fields in an entity might have requirements to update system-generated data during import instead of providing the data in the source import file. In such a scenario, you can use auto-generated functionality in the mapping details for the entity. For example, the customer entity can have the party number be auto generated during import and the Global Address Book information created. When you select the Auto-generated checkbox for a field, the source field will change to Auto.

Auto default - Some fields in an entity might have requirements to update default data during import instead of providing the data in the source import file. For such a scenario, you can select the Auto default checkbox in the mapping details for the entity. When you select the Auto default checkbox, the Default value button will be enabled in the Map source to the staging page, where the default value should be entered.

Conversion - Some fields in an entity might have requirements to update specific values during import based on the value that's provided in the source import file. For example, in the source application, the value of a certain field is false or true. While you're importing in Dynamics 365, the value should be imported as No or Yes. The Conversion button in the Map source to staging form will allow you to enter value-to-value mapping information, which will update the involved fields during the import operation.

Data transformation between finance and operations apps and the Staging table

You can access a data entity from the Data entities tile in the Data management workspace. To add data transformation logic, select the Modify target mapping button in the command bar for the selected data entity. The Mapping details page should appear.

Options to create virtual fields and computed columns are available in the data entity. These fields will appear in the Staging field, which you can map with the Target fields in finance and operations. You can develop the data transformation logic in virtual fields or computed columns by writing X++ code.

Computed columns

Consider the following factors when developing the data transformation logic in computed columns:

- Value is generated by an SQL view computed column.

- During read, data is computed by SQL and is fetched directly from the view.

- For writes, custom X++ code must parse the input value and then write the parsed values to the regular fields of the data entity. The values are stored in the regular fields of the data sources of the entity.

- Computed fields are used mostly for reads.

- If possible, you should use computed columns instead of virtual fields because they're computed at the SQL Server level, whereas virtual fields are computed row by row in X++.

Virtual fields

Consider the following factors when developing the data transformation logic in virtual fields:

- Virtual fields are non-persisted.

- Virtual fields are controlled by custom X++ code.

- Read and write happens through custom X++ code.

- Virtual fields are typically used for intake values that are calculated by using X++ code and can't be replaced by computed columns.