Data quality native support for Iceberg format (preview)

Microsoft Purview native support for Apache Iceberg open table format is in public preview. Microsoft Purview customers using Microsoft Azure Data Lake Storage (ADLS) Gen2, Microsoft Fabric Lakehouse, Amazon Web Services (AWS) S3, and Google Cloud Platform (GCP) Google Cloud Storage (GCS) can now use Microsoft Purview to curate, govern, and perform data health control and data quality assessment on Iceberg data assets.

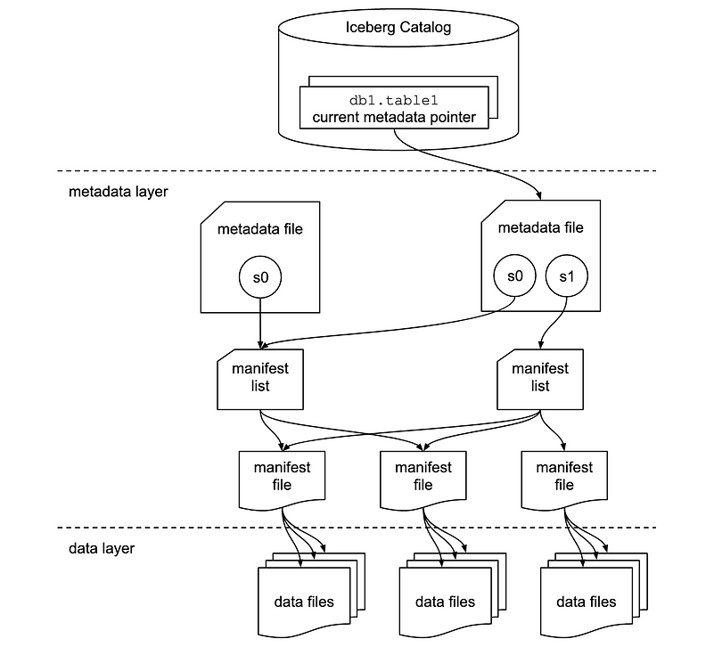

Iceberg file structure

An Iceberg table is more than a collection of data files. It also includes metadata files that track the state of the table and support operations like reads, writes, and schema evolution. The sections below describe the critical components of an Iceberg table. The data files in an Iceberg table are typically stored in open file formats such as Apache Parquet and Apache Optimized Row Columnar (ORC), which are columnar, or Apache Avro, which is row-based. These files contain the actual data that users interact with during queries.

Iceberg catalog

At the top is the Iceberg catalog, which stores the current metadata pointer for each table. This catalog enables tracking the most recent state of a table by referencing the current metadata file.

Metadata layer

The metadata layer is central to Iceberg’s functionality and is composed of several key elements:

- Metadata file: This file contains information about the table’s schema, partitioning, and snapshots. In the diagram, s0 refers to a snapshot, which is essentially a record of the table’s state at a given point in time. If multiple snapshots exist, such as s0 and s1, the metadata file tracks both.

- Manifest list: This list points to one or more manifest files. A manifest list acts as a container of references to these manifests, and it helps Iceberg efficiently manage which data files should be read or written during different operations. Each snapshot might have its own manifest list.
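To make the role of snapshots concrete, here's a minimal sketch of how a reader could pick the snapshot that was current at a given point in time. The `Snapshot` class, the `snapshot_as_of` helper, the IDs `s0` and `s1`, and the file paths are all hypothetical and for illustration only. They aren't part of Iceberg or Microsoft Purview.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Snapshot:
    snapshot_id: str    # "s0", "s1" as in the diagram (illustrative IDs)
    timestamp_ms: int   # commit time, in milliseconds since the epoch
    manifest_list: str  # path to this snapshot's manifest list (illustrative)


def snapshot_as_of(snapshots: List[Snapshot], timestamp_ms: int) -> Optional[Snapshot]:
    """Return the latest snapshot committed at or before timestamp_ms, if any."""
    candidates = [s for s in snapshots if s.timestamp_ms <= timestamp_ms]
    return max(candidates, key=lambda s: s.timestamp_ms, default=None)


# A metadata file that tracks two snapshots, s0 and s1 (made-up values).
snapshots = [
    Snapshot("s0", 1_700_000_000_000, "metadata/snap-s0.avro"),
    Snapshot("s1", 1_700_000_600_000, "metadata/snap-s1.avro"),
]

# A timestamp between the two commits resolves to s0.
earlier = snapshot_as_of(snapshots, 1_700_000_300_000)
```

Because the metadata file keeps every snapshot it tracks, reading the table as of an earlier time only requires choosing a different snapshot, not rewriting data.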

Data layer

In the data layer, the manifest files act as an intermediary between the metadata and the actual data files. Each manifest file points to a collection of data files, providing a map of the physical files stored in the data lake.

- Manifest files: These files store the metadata for a group of data files, including row counts, partition information, and file paths. They allow Iceberg to prune and access specific files quickly, enabling efficient querying.

- Data files: The actual data resides in these files, which might be in formats like Parquet, ORC or Avro. Iceberg organizes data files based on partitions, allowing for performance optimizations during query execution by minimizing unnecessary data scans.
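The pruning described above can be sketched as a filter over manifest entries. This is a simplified illustration: the `DataFileEntry` class, the `prune_files` helper, the `region` partition, and the file paths are hypothetical, and real manifests carry more statistics than are shown here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DataFileEntry:
    file_path: str             # location of the data file
    partition: Dict[str, str]  # partition values recorded in the manifest
    record_count: int          # row count recorded in the manifest


def prune_files(entries: List[DataFileEntry], wanted: Dict[str, str]) -> List[DataFileEntry]:
    """Keep only the files whose partition values match every requested key."""
    return [
        e for e in entries
        if all(e.partition.get(key) == value for key, value in wanted.items())
    ]


# One manifest describing three data files (made-up values).
manifest = [
    DataFileEntry("data/region=EU/f0.parquet", {"region": "EU"}, 100),
    DataFileEntry("data/region=US/f1.parquet", {"region": "US"}, 250),
    DataFileEntry("data/region=EU/f2.parquet", {"region": "EU"}, 50),
]

# A query that filters on region = 'EU' never opens the US file.
eu_files = prune_files(manifest, {"region": "EU"})
eu_rows = sum(e.record_count for e in eu_files)
```

The decision is made from manifest metadata alone, which is why unnecessary data files are never read.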

How it works together

When an operation such as a query or update is performed, Iceberg first looks up the table’s metadata file via the catalog. The metadata file references the current snapshot (or multiple snapshots), which then points to the manifest list. The manifest list contains references to the manifest files, which in turn list the individual data files. This hierarchical structure allows Iceberg to manage large datasets efficiently while ensuring transactional consistency, enabling features like time travel and schema evolution.

This multi-layered design enhances the performance and scalability of batch and streaming operations, as only the necessary data files are accessed, and updates are managed through snapshots without affecting the entire dataset.
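The lookup chain described in this section can be sketched with a toy in-memory model. Everything here is a stand-in: the dictionaries replace real files in the lake, the table name `sales.customers` and all paths are hypothetical, and `plan_scan` is an illustrative helper rather than an Iceberg API.

```python
from typing import List

# Catalog: table name -> current metadata file pointer.
CATALOG = {"sales.customers": "metadata/v2.metadata.json"}

# Metadata file: tracks snapshots and marks which one is current.
METADATA_FILES = {
    "metadata/v2.metadata.json": {
        "current-snapshot-id": "s1",
        "snapshots": [
            {"snapshot-id": "s0", "manifest-list": "metadata/snap-s0.avro"},
            {"snapshot-id": "s1", "manifest-list": "metadata/snap-s1.avro"},
        ],
    }
}

# Manifest list: snapshot -> manifest files.
MANIFEST_LISTS = {
    "metadata/snap-s0.avro": ["metadata/m0.avro"],
    "metadata/snap-s1.avro": ["metadata/m0.avro", "metadata/m1.avro"],
}

# Manifest file: manifest -> data files.
MANIFESTS = {
    "metadata/m0.avro": ["data/f0.parquet", "data/f1.parquet"],
    "metadata/m1.avro": ["data/f2.parquet"],
}


def plan_scan(table: str) -> List[str]:
    """Walk catalog -> metadata file -> snapshot -> manifest list -> manifests."""
    metadata = METADATA_FILES[CATALOG[table]]
    current_id = metadata["current-snapshot-id"]
    snapshot = next(s for s in metadata["snapshots"] if s["snapshot-id"] == current_id)
    data_files: List[str] = []
    for manifest_path in MANIFEST_LISTS[snapshot["manifest-list"]]:
        data_files.extend(MANIFESTS[manifest_path])
    return data_files


print(plan_scan("sales.customers"))
# ['data/f0.parquet', 'data/f1.parquet', 'data/f2.parquet']
```

In this model, snapshot `s1` reuses manifest `m0` from `s0` and adds `m1`, which illustrates how an update can be recorded through a new snapshot without touching the existing data files.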

Iceberg data in OneLake

You can seamlessly consume Iceberg-formatted data across Microsoft Fabric with no data movement or duplication. You can use OneLake shortcuts to directly point to a data layer.

When Iceberg data written by Snowflake or another Iceberg writer is stored in OneLake, OneLake virtualizes the table as a Delta Lake table, ensuring broad compatibility across Fabric engines. For example, you can create a volume in Snowflake and point it directly to the Fabric Lakehouse. Once the table is created in Fabric OneLake, autosync ensures that any data updates are reflected in real time. This streamlined process makes it easier to work with Iceberg data in Microsoft Fabric. For further details, see the Snowflake documentation.

Important

Iceberg data in AWS S3 and GCS also needs to be autosynced as Delta before you can curate and govern it, and measure and monitor its data quality.

Data quality for Iceberg data

Users who natively hydrate data in Iceberg format (Parquet, ORC, Avro) on ADLS Gen2 or Fabric Lakehouse only need to configure a scan that points to the location of the directory hosting the Iceberg data and metadata directories. Follow these steps:

1. Configure and run a scan in Microsoft Purview Data Map.

2. Configure the directory (hosting the data and metadata directories) as a data asset. This forms the Iceberg dataset. Associate the Iceberg data asset with a data product in Microsoft Purview Unified Catalog. Learn how to associate data assets to a data product.

3. In Unified Catalog, under Health management, select Data quality view to find your Iceberg files (data asset) and to set up the data source connection.

3.1 To set up an ADLS Gen2 connection, follow the steps described in the DQ connection document.

3.2 To set up a Fabric OneLake connection, follow the steps described in the Fabric data estate DQ document.

4. Apply data quality rules, and run data quality scans for column-level and table-level data quality scoring.

5. On the Schema page of the selected Iceberg file (data asset), select Import schema to import the schema from the Iceberg file's data source.

6. Before running the profiling job or data quality scanning job, go to the Iceberg file's Overview page, and in the Data asset dropdown menu, select Iceberg.

Profiling and data quality scanning

After you finish the connection setup and data asset file format selection, you can profile, create and apply rules, and run a data quality scan of your data in Iceberg open format files. For step-by-step guidance, see the Microsoft Purview data profiling and data quality scan documentation.

Important

- Support for the Iceberg open format in catalog discovery, curation, data profiling, and data quality scanning features is now in preview.

- For data profiling and data quality assessment, you need to retrieve and set the schema from the Data Quality Schema page.

- Consumer discovery experience: Consumers won't see the schema in the data asset view because the Data Map doesn't yet support the Iceberg open table format. Data quality stewards will be able to import the schema from the Data Quality schema page.

Limitations

The current release supports only data created in Iceberg format with the Apache Hadoop catalog.
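As a quick pre-check before scanning, the following sketch looks for the file layout that Iceberg's Hadoop catalog typically writes. It rests on assumptions that you should verify for your environment: that the table directory has a `metadata` subdirectory, that metadata files are named `v<N>.metadata.json` (optionally `v<N>.gz.metadata.json`), and that an optional `version-hint.text` file holds the current version number. It also assumes the directory is reachable through a local or mounted path. The helper name `current_hadoop_metadata_file` is hypothetical and isn't a Microsoft Purview feature.

```python
import re
from pathlib import Path
from typing import Dict, Optional

# Assumed Hadoop-catalog naming: v1.metadata.json, v2.gz.metadata.json, ...
_VERSIONED = re.compile(r"^v(\d+)(\.gz)?\.metadata\.json$")


def current_hadoop_metadata_file(table_dir: Path) -> Optional[Path]:
    """Return the current metadata file of a Hadoop-catalog-style table, or None."""
    metadata_dir = table_dir / "metadata"
    if not metadata_dir.is_dir():
        return None

    # Collect every versioned metadata file, keyed by version number.
    versions: Dict[int, Path] = {}
    for candidate in metadata_dir.iterdir():
        match = _VERSIONED.match(candidate.name)
        if match:
            versions[int(match.group(1))] = candidate
    if not versions:
        return None

    # Prefer the version named in the hint file when it is present and valid.
    hint = metadata_dir / "version-hint.text"
    if hint.is_file():
        text = hint.read_text().strip()
        if text.isdigit() and int(text) in versions:
            return versions[int(text)]

    # Otherwise fall back to the highest version found.
    return versions[max(versions)]
```

A return value of `None` suggests the directory wasn't written in this layout, for example because the table was created with a different catalog type.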

Lakehouse Path and ADLS Gen2 Path

- Iceberg metadata stores the complete path for the data and metadata. Make sure you use the complete path for ADLS Gen2 and Microsoft Fabric Lakehouse. Additionally, when you write to a Microsoft Fabric Lakehouse path, make sure that write operations (writes, upserts) use the ID paths.

For example, in abfss://c4dd39bb-77e2-43d3-af10-712845dc2179@onelake.dfs.fabric.microsoft.com/5e8ea953-56fc-49c1-bc8c-0b3498cf1a9c/Files/CustomerData, both the filesystem and the lakehouse are specified as IDs. Microsoft Purview requires absolute paths, not relative paths, to perform DQ on Iceberg. To validate, check that the snapshot paths are complete fully qualified name (FQN) paths.
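The path checks described above can be sketched as follows. The function names are hypothetical. The `location`, `snapshots`, and `manifest-list` keys follow the Iceberg table metadata JSON layout, and the sketch assumes you've already loaded that JSON into a dictionary. The GUID test is only a heuristic for recognizing ID paths.

```python
import re
from typing import Dict, List
from urllib.parse import urlparse

_GUID = re.compile(r"^[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12}$")
_ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def is_fully_qualified(path: str) -> bool:
    """True when the path has both a scheme and an authority, such as abfss://...@host/."""
    parsed = urlparse(path)
    return bool(parsed.scheme) and bool(parsed.netloc)


def onelake_uses_id_path(path: str) -> bool:
    """True when a OneLake abfss path names the filesystem and lakehouse by ID (GUID)."""
    parsed = urlparse(path)
    if parsed.scheme != "abfss" or parsed.hostname != _ONELAKE_HOST:
        return False
    filesystem = parsed.username or ""  # the part before '@'
    segments = [s for s in parsed.path.split("/") if s]
    return bool(segments) and bool(_GUID.match(filesystem)) and bool(_GUID.match(segments[0]))


def relative_paths_in_metadata(metadata: Dict) -> List[str]:
    """List the table location and manifest-list paths that aren't fully qualified."""
    candidates = [metadata.get("location", "")]
    candidates += [s.get("manifest-list", "") for s in metadata.get("snapshots", [])]
    return [p for p in candidates if not is_fully_qualified(p)]


example = (
    "abfss://c4dd39bb-77e2-43d3-af10-712845dc2179@onelake.dfs.fabric.microsoft.com"
    "/5e8ea953-56fc-49c1-bc8c-0b3498cf1a9c/Files/CustomerData"
)
```

An empty list from `relative_paths_in_metadata` means every checked path is absolute. Any path it returns is a candidate for the relative-path problem described in this section.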

Schema detection

- Data Map can't currently detect the Iceberg schema. When you curate Iceberg directories on Fabric Lakehouse or ADLS Gen2, you won't be able to review the schema in Data Map. However, the DQ fetch schema feature can retrieve the schema for the curated asset.

Recommendations

- If you're using the Snowflake catalog for Iceberg format, with volume storage on ADLS Gen2, AWS S3, or GCP GCS, use a Microsoft Fabric OneLake table shortcut and perform DQ on the data as a Delta table. Note: Only Iceberg with the Parquet file format is supported.

- If you're using ADLS for Iceberg format with the Hadoop catalog, scan the directory directly and use the default DQ engine for Iceberg format. Note: Iceberg with the Parquet, ORC, and Avro file formats is supported.

- If you're using Snowflake for Iceberg format, you can point the volume storage directly to a Microsoft Fabric Lakehouse path and then use a OneLake table to create a Delta-compatible version for DQ. Note: Only Iceberg with the Parquet file format is supported.

- If you're using Microsoft Fabric Lakehouse for Iceberg format with the Hadoop catalog, scan the lakehouse directory directly and use the default DQ engine for Iceberg format. Note: Iceberg with the Parquet, ORC, and Avro file formats is supported.
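The recommendations above reduce to a small decision table keyed on the catalog type. The sketch below only restates them in code. The `Recommendation` type and the `recommend` function are hypothetical names, and any combination that isn't covered above raises an error instead of guessing.

```python
from typing import NamedTuple, Tuple


class Recommendation(NamedTuple):
    approach: str
    file_formats: Tuple[str, ...]


def recommend(catalog: str, storage: str) -> Recommendation:
    """Map a (catalog, storage) pair to the recommended DQ approach."""
    catalog_key = catalog.strip().lower()
    storage_key = storage.strip().lower()

    if catalog_key == "snowflake" and storage_key in {
        "adls gen2", "aws s3", "gcp gcs", "fabric lakehouse"
    }:
        # Snowflake-managed Iceberg: go through OneLake and assess as Delta.
        return Recommendation("OneLake table shortcut, DQ as Delta table", ("Parquet",))

    if catalog_key == "hadoop" and storage_key in {"adls gen2", "fabric lakehouse"}:
        # Hadoop catalog: scan the directory and use native Iceberg support.
        return Recommendation(
            "Scan directory directly, DQ as Iceberg", ("Parquet", "ORC", "Avro")
        )

    raise ValueError(f"No recommendation for catalog={catalog!r}, storage={storage!r}")
```

For example, `recommend("Hadoop", "ADLS Gen2")` returns the direct-scan approach with all three file formats, while `recommend("Snowflake", "AWS S3")` returns the Delta approach with Parquet only.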