Analyze emissions data

Important

Some or all of this functionality is available as part of a preview release. The content and the functionality are subject to change.

You can access emissions data from the Azure emissions insights capability in Sustainability data solutions in Microsoft Fabric in two ways: through SQL or through APIs.

Access emissions data through SQL

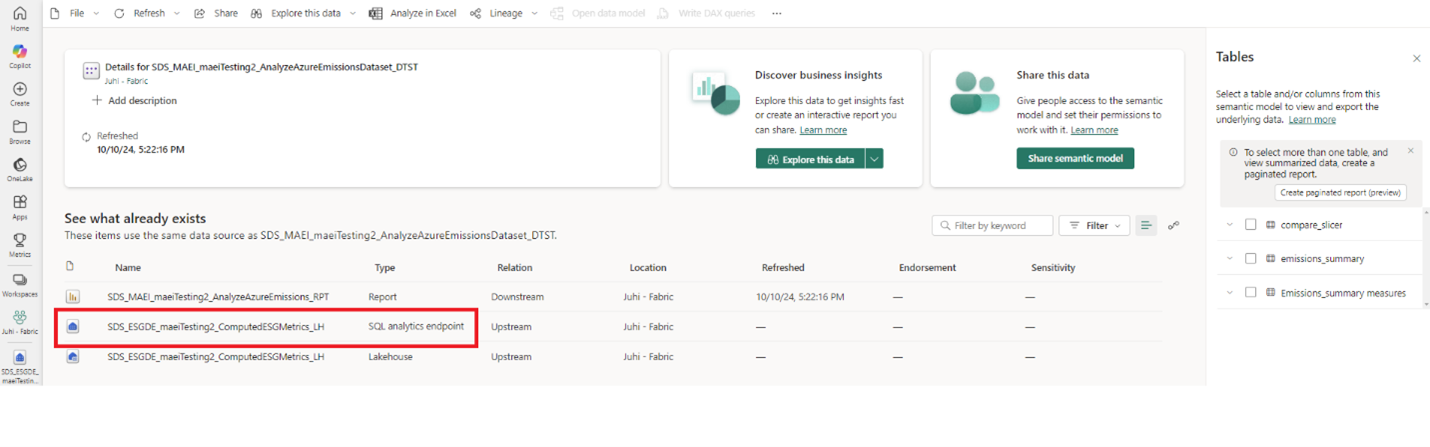

You can access the aggregated data in the ComputedESGMetrics lakehouse through the SQL endpoint available in the AnalyzeAzureEmissionsDataset with these steps:

On the Azure emissions insights capability home page, select the AnalyzeAzureEmissionsDataset semantic model.

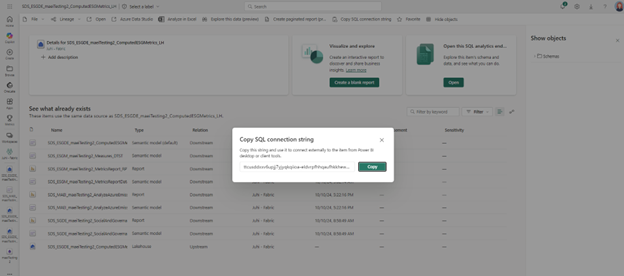

Navigate to the ComputedESGMetrics SQL endpoint.

Select Copy SQL connection string to connect to the dataset from compatible external SQL client tools, such as Azure Data Studio and SQL Server Management Studio (SSMS).

Access emissions data through APIs

Create an app identity in your Microsoft Entra ID tenant and create a new client secret for the app identity.

For this use case, register the application with an account type of Accounts in this organizational directory only. Skip the redirect URI, because it isn't required in this case. You can also skip the Configure platform settings steps, because they aren't required for this scenario.

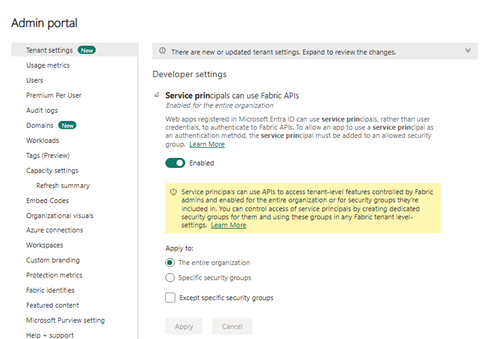

Configure admin-level tenant settings. Ensure that you have one of the admin roles as defined in Understand Microsoft Fabric admin roles in the Microsoft Fabric documentation. Check with your Microsoft Fabric tenant admin to complete these steps if required:

Open the workspace in Fabric where you deployed Sustainability data solutions.

Select the Settings icon in the top-right corner to open the settings side panel, and then select Admin portal.

In the Tenant settings section, go to Developer settings and enable the Service principals can use Fabric APIs permission for the entire organization or for a specific security group.

Verify that the app identity you created earlier is a member of the security group.

Assign permissions to the app identity:

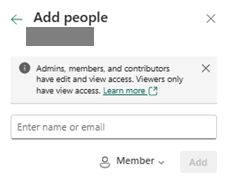

Navigate to the workspace view and select the Manage access option to manage roles at workspace level.

Select the Add people or groups button to add the app identity with one of the following roles: Member, Contributor, or Admin. This step is required, because the accessed data is a OneLake internal shortcut.

Call the REST APIs:

Note

You can integrate these REST API calls in any HTTP client application.

Fetch the access token for the configured app identity. To fetch the token with the OAuth 2.0 client credentials flow, refer to Acquire tokens to call a web API using a daemon application in the Microsoft Entra ID documentation. Set the scope parameter in the token request to "https://storage.azure.com/.default".
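The token request described above can be sketched in Python as follows. This is a minimal sketch, not the documented method: it assumes the standard Microsoft identity platform v2.0 token endpoint, and the function names and the tenant ID, client ID, and client secret parameters are placeholders you supply from your own app registration.

```python
import json
import urllib.parse
import urllib.request

# Scope required by this article for the token request.
ONELAKE_SCOPE = "https://storage.azure.com/.default"


def build_token_request(tenant_id, client_id, client_secret):
    """Build (but don't send) a client credentials token request.

    Assumption: the Microsoft identity platform v2.0 token endpoint.
    """
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": ONELAKE_SCOPE,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def fetch_access_token(tenant_id, client_id, client_secret):
    """Send the token request and return the bearer token string."""
    request = build_token_request(tenant_id, client_id, client_secret)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["access_token"]
```

In a production application, a maintained authentication library is usually a better choice than hand-built requests, because it also handles token caching and renewal.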

List the data partition files present in the IngestedRawData Lakehouse using the following API call.

workspaceId: The unique identifier of the Microsoft Fabric workspace. For example, it's the first GUID in the URL when the IngestedRawData Lakehouse is open in Microsoft Fabric.

lakehouseId: The unique identifier of the Lakehouse that contains the imported Azure emissions data, which here is the IngestedRawData Lakehouse. For example, it's the second GUID in the URL when the IngestedRawData Lakehouse is open in Microsoft Fabric.

enrollmentNumber: The billing enrollment ID whose data you want to read. This ID is the same as one of the folder names under the "Files/AzureEmissions" path in the IngestedRawData Lakehouse.

Request:

HTTP GET https://onelake.dfs.fabric.microsoft.com/<workspaceId>?recursive=false&resource=filesystem&directory=<lakehouseId>%2FFiles%2FAzureEmissions%2F<enrollmentNumber>

Response:

Returns a list of file properties. These are the data partition files storing the emissions data for the specified enrollment number.
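The list call can be sketched in Python as follows. The helper names are hypothetical. The sketch assumes that the bearer token is sent in an Authorization header and that the response is JSON with a "paths" array whose entries carry "name" and, for folders, "isDirectory" (the shape used by Azure Data Lake Storage list responses). Verify both assumptions against an actual response before relying on them.

```python
import json
import re
import urllib.parse
import urllib.request

ONELAKE_HOST = "https://onelake.dfs.fabric.microsoft.com"
GUID_PATTERN = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def guids_in_url(lakehouse_url):
    """Return the GUIDs found in a Fabric URL, in order of appearance.

    With the IngestedRawData Lakehouse open, the first GUID is the
    workspaceId and the second is the lakehouseId.
    """
    return GUID_PATTERN.findall(lakehouse_url)


def build_list_url(workspace_id, lakehouse_id, enrollment_number):
    """Build the URL that lists partition files for one enrollment."""
    directory = f"{lakehouse_id}/Files/AzureEmissions/{enrollment_number}"
    query = urllib.parse.urlencode(
        {"recursive": "false", "resource": "filesystem", "directory": directory},
        quote_via=urllib.parse.quote,
        safe="",  # encode "/" as %2F, as in the request shown above
    )
    return f"{ONELAKE_HOST}/{workspace_id}?{query}"


def partition_paths(list_response):
    """Extract file paths from a parsed list response.

    Assumption: entries look like {"name": ..., "isDirectory": "true"}.
    """
    return [
        entry["name"]
        for entry in list_response.get("paths", [])
        if str(entry.get("isDirectory", "false")).lower() != "true"
    ]


def list_partitions(workspace_id, lakehouse_id, enrollment_number, access_token):
    """Call the list API and return the partition file paths."""
    request = urllib.request.Request(
        build_list_url(workspace_id, lakehouse_id, enrollment_number),
        headers={"Authorization": f"Bearer {access_token}"},
    )
    with urllib.request.urlopen(request) as response:
        return partition_paths(json.load(response))
```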

Fetch the content of the specific data partition file.

workspaceId: The unique identifier of the Microsoft Fabric workspace.

emissionsPartitionFilePath: The relative path of one of the partition files returned by the previous API call. The path has the following form:

<lakehouseId>/Files/AzureEmissions/<enrollmentNumber>/<emissionsPartitionFileName>.parquet

Request:

HTTP GET https://onelake.dfs.fabric.microsoft.com/<workspaceId>/<emissionsPartitionFilePath>

Response:

The content of the emissions data partition file. Because the file is in Parquet format, you must parse the response body with an appropriate Parquet library or tool. For example, if you read the emissions data from a .NET application, you can use one of the C# Parquet libraries to decode the Parquet content and store it in the application.
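As a Python counterpart to the .NET example, the following sketch downloads one partition file and checks that the body looks like Parquet before decoding it. The function names are hypothetical, sending the token in an Authorization header is an assumption, and the decoding step assumes the third-party pyarrow package is installed. The check relies on the Parquet format's 4-byte "PAR1" marker at the start and end of every file.

```python
import urllib.parse
import urllib.request

PARQUET_MAGIC = b"PAR1"  # marker at the start and end of every Parquet file


def build_file_url(workspace_id, partition_file_path):
    """Build the URL for one partition file, keeping "/" separators."""
    quoted_path = urllib.parse.quote(partition_file_path)
    return f"https://onelake.dfs.fabric.microsoft.com/{workspace_id}/{quoted_path}"


def download_partition(url, access_token):
    """Return the raw bytes of the partition file."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {access_token}"}
    )
    with urllib.request.urlopen(request) as response:
        return response.read()


def looks_like_parquet(data):
    """Cheap sanity check so an error page isn't passed to the decoder."""
    return (
        len(data) >= 12
        and data[:4] == PARQUET_MAGIC
        and data[-4:] == PARQUET_MAGIC
    )


def read_partition_table(data):
    """Decode the Parquet bytes into an in-memory table.

    Assumption: pyarrow is installed; it is imported here so the helpers
    above still work without it.
    """
    import io
    import pyarrow.parquet as pq

    if not looks_like_parquet(data):
        raise ValueError("Response body is not a Parquet file")
    return pq.read_table(io.BytesIO(data))
```

Keeping the download and the decoding in separate functions lets you store the raw bytes first and swap in a different Parquet library later.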