Overview of Azure Databricks configurations

Azure Databricks is a cloud-based platform that combines the best features of data engineering and data science. It lets you build, manage, and analyze data pipelines using Apache Spark, a fast, scalable open-source framework for big-data processing. Azure Databricks also provides a collaborative workspace for data scientists and engineers to work together on machine learning and artificial intelligence projects.

If you work in the government or public sector industry, this reference architecture documentation provides opinionated guidance for using Azure Databricks with a Sovereign Landing Zone or Azure Landing Zone deployment with sovereignty baseline policy initiatives applied.

The Azure Databricks product documentation covers these topics extensively across many articles. This document complements that documentation by giving you curated recommendations on key concepts and options for configuring an Azure Databricks environment.

Key features of Azure Databricks

Azure Databricks has a rich set of features, but for this reference architecture, we focus on its infrastructure elements. Azure Databricks offers:

Interactive notebooks: Use notebooks to write code in Python, Scala, SQL, or R, and visualize the results with charts and graphs. Share and comment on notebooks with team members and integrate them with popular tools like GitHub and Azure DevOps.

Compute options: Azure Databricks provides various compute options to support data engineering, data science, and data analytics workloads. These options include on-demand, scalable serverless compute for notebooks and jobs, provisioned compute for all-purpose analysis and automated jobs, and SQL warehouses for executing SQL commands. Instance pools offer idle, ready-to-use instances to reduce start and autoscaling times, enhancing efficiency across different data processing scenarios.

Data integration: Easily connect to various data sources and destinations, such as Azure Blob Storage, Azure Data Lake Storage, Azure SQL Database, Azure Synapse Analytics, Azure Cosmos DB, and more. Use Delta Lake, a reliable and performant data lake solution that supports ACID transactions and schema enforcement.

Machine learning: Build, train, and deploy machine learning models using popular frameworks like TensorFlow, PyTorch, Scikit-learn, and XGBoost. Use MLflow, an open-source platform for managing the machine learning lifecycle, to track experiments, log metrics, and deploy models.

Enterprise security: Securely access and process your data, with features like role-based access control, encryption, auditing, and compliance. Integrate Azure Databricks with Microsoft Entra ID, Azure Key Vault, and Azure Private Link for identity and data protection.

Data governance and sharing: Unity Catalog simplifies data sharing within organizations and secure analytics in the cloud by providing a managed version of Delta Sharing for external sharing and a unified data governance model for the data lakehouse.

High-level Databricks architecture

Azure Databricks operates out of a control plane and a compute plane. The reference architecture recommends configuration options in each of these planes. The following diagram describes the overall Azure Databricks architecture.

Control plane

The control plane is the layer of Azure Databricks that manages the lifecycle of clusters and jobs, and the authentication and authorization of users and data access. The control plane includes the backend services managed by Azure Databricks in your Azure Databricks account. The web application is in the control plane.

The control plane runs in an Azure subscription owned by Azure Databricks and communicates with the classic and serverless compute planes via secure APIs. The control plane also provides the web interface and REST APIs for users to interact with Azure Databricks.
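As a minimal sketch of the REST interaction described above, the following Python builds (but doesn't send) an authenticated request that asks the control plane to list clusters. The workspace URL and token are placeholders, and the `/api/2.0/clusters/list` path is an assumption to verify against the current Azure Databricks REST API reference.

```python
import urllib.request


def build_list_clusters_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build a GET request for the clusters list endpoint without sending it."""
    # Endpoint path is an assumption; confirm it in the REST API reference.
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder values, not a real workspace or credential.
request = build_list_clusters_request(
    "https://adb-1234567890123456.7.azuredatabricks.net",
    "<personal-access-token>",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it to the control plane, which authenticates the caller before returning cluster metadata.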

Compute plane

The compute plane is where your data is processed. There are two types of compute planes: serverless and classic. The serverless compute plane offers instant and elastic resources, while the classic compute plane relies on pre-provisioned infrastructure.

Serverless compute plane

Serverless compute is ideal for ad-hoc queries, notebooks, and short-lived workloads. For example, you can use serverless compute to run SQL commands in notebooks or execute lightweight jobs. In the serverless compute plane, resources run in a compute layer within the Azure Databricks account.

Azure Databricks creates a serverless compute plane in the same Azure region as your workspace's classic compute plane. It operates a pool of servers, located in Databricks' account, running Kubernetes containers that can be assigned to a user within seconds. For more information, see the Databricks blog post Announcing Databricks Serverless SQL: Instant, Managed, Secured, and Production-ready Platform for SQL Workloads.

When users run reports or queries simultaneously, the compute platform quickly adds servers to the cluster to handle the concurrent load. Databricks manages the entire configuration of the servers and automatically performs patching and upgrades as needed. Serverless compute is billed per usage (for example, per query execution or job run).

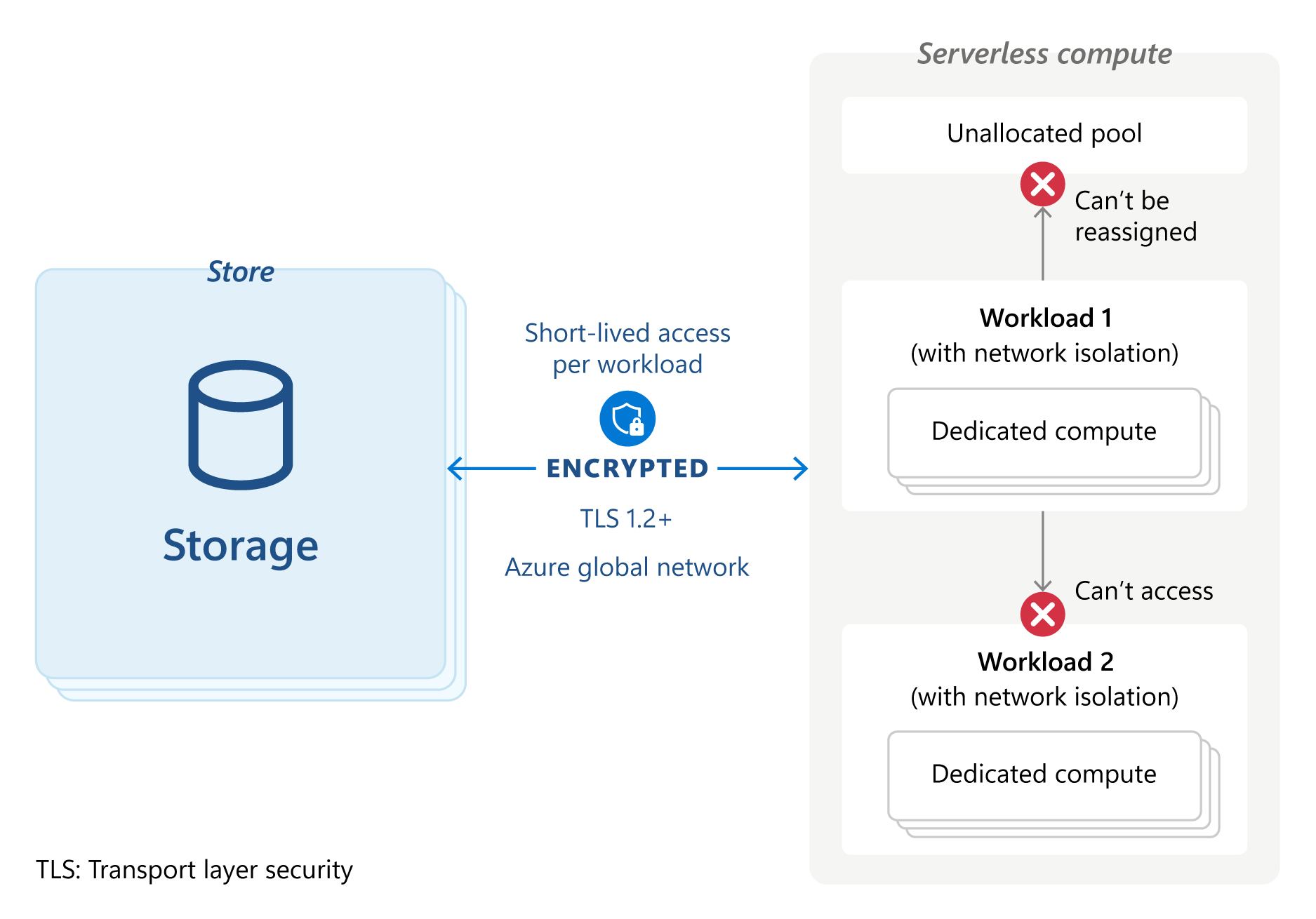

Each server runs a secure configuration, and all processing is secured by three layers of isolation: the Kubernetes container hosting the runtime, the VM hosting the container, and the virtual network for the workspace. Each layer is isolated into one workspace with no sharing or cross-network traffic allowed.

The containers use hardened configurations, VMs are shut down and not reused, and network traffic is restricted to nodes in the same cluster. All compute is ephemeral, dedicated exclusively to that workload, and securely wiped after the workload is complete.

All traffic between you, the control plane, the compute plane, and cloud services is routed over Azure's global network, not the public internet. The serverless compute plane for serverless SQL warehouses doesn't use the customer-configurable back-end Azure Private Link connectivity. The Azure Databricks control plane connects to the serverless compute plane using mTLS, with IP access allowed only for the control plane IP address.

All attached storage is protected by industry-standard AES-256 encryption, and all traffic between the user, the control plane, the compute plane, and cloud services is encrypted with at least TLS 1.2. Serverless SQL warehouses don't use customer-managed keys for managed disks.
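The platform enforces the TLS floor on its side. As a client-side illustration only, the following sketch shows how a Python client could refuse to negotiate anything older than TLS 1.2 when calling workspace endpoints. The helper name `make_tls12_context` is hypothetical; the `ssl` module calls are from the Python standard library.

```python
import ssl


def make_tls12_context() -> ssl.SSLContext:
    """Return a client TLS context that rejects protocol versions below TLS 1.2."""
    # The default context already verifies certificates and hostnames.
    context = ssl.create_default_context()
    # Raise the protocol floor explicitly so older versions are never negotiated.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls12_context()
print(context.minimum_version.name)
```

A context like this can be passed to `urllib.request.urlopen(..., context=context)` or to another HTTP client that accepts an `ssl.SSLContext`.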

Workloads have no privileges or credentials for systems outside the scope of that workload, and they access data through short-lived (one-hour) tokens. These tokens are passed securely to each specific workload.

As of June 2024, Azure Confidential Computing isn't supported for serverless compute, but your workload is protected by multiple layers of isolation as shown in the Azure Serverless compute isolation diagram.

For more information, see Deploy Your Workloads Safely on Serverless Compute.

Classic compute plane

The classic compute plane is suitable for long-running jobs, production workloads, and consistent resource needs. For example, you can use provisioned compute for ETL pipelines, machine learning training, and data engineering tasks.

A classic compute plane has natural isolation because it runs in your own Azure subscription. New compute resources are created and configured within each workspace's virtual network in your Azure subscription. Compute resources remain constant until explicitly modified and are billed based on the instance type and duration. Clusters can use customer-managed keys for managed disks, and spot instances are supported.

Azure Databricks administrators can use cluster policies to control many aspects of the clusters, including available instance types, Databricks versions, and instance sizes.
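As a rough sketch of what such a policy might look like, the following Python assembles a policy definition and serializes it to JSON. The attribute names (`node_type_id`, `spark_version`, `num_workers`), the rule types, and the specific VM sizes and version pattern are illustrative assumptions; check them against the cluster policy definition reference before use.

```python
import json

# Hypothetical policy: every value below is an example, not a recommendation.
policy_definition = {
    # Restrict clusters to an approved list of VM sizes.
    "node_type_id": {
        "type": "allowlist",
        "values": ["Standard_DS3_v2", "Standard_DS4_v2"],
    },
    # Allow only runtime versions that match a pattern.
    "spark_version": {
        "type": "regex",
        "pattern": "13\\.3\\.x-scala.*",
    },
    # Bound the number of workers to cap cluster size.
    "num_workers": {
        "type": "range",
        "minValue": 1,
        "maxValue": 8,
    },
}

policy_json = json.dumps(policy_definition, indent=2)
print(policy_json)
```

The resulting JSON document is the kind of definition an administrator would attach to a policy in the workspace, so that users who create clusters under that policy can only choose values inside these limits.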

Databricks virtual network injection is a feature that allows you to deploy Azure Databricks classic compute plane resources in your own virtual network. This feature helps you connect Azure Databricks to other Azure services more securely by using service endpoints or private endpoints. You can also use virtual network peering to peer the virtual network that your Azure Databricks workspace runs in with another Azure virtual network.
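Peered virtual networks generally need non-overlapping address spaces, so it can help to check the planned ranges before you deploy a workspace into your own virtual network. The following is a minimal sketch of that check using Python's standard `ipaddress` module. The CIDR ranges and the helper name `address_spaces_overlap` are hypothetical.

```python
import ipaddress


def address_spaces_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True if the two CIDR ranges share any addresses."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


# Example ranges only; substitute your own address plan.
databricks_vnet = "10.10.0.0/16"
hub_vnet = "10.20.0.0/16"
conflicting_vnet = "10.10.128.0/17"

print(address_spaces_overlap(databricks_vnet, hub_vnet))
print(address_spaces_overlap(databricks_vnet, conflicting_vnet))
```

In this example, the hub range is disjoint from the workspace range, while the third range sits inside the workspace range and would conflict.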