Semantic search and identification

Today, auto-annotation is typically a semi-automated process across the automotive industry. Video or image material runs through services that attempt to detect objects, such as traffic signs, or to describe scenes, such as one vehicle overtaking another, in the images or frames. These objects and scene descriptions are essential for identifying relevant scenarios for training and validating the autonomous perception stack. Because the process still depends on manual steps, it becomes cumbersome.

To improve the process, a data management foundation, such as the one detailed in the DataOps reference architecture, is important. This foundation allows indexing, annotation, searching, and selection of the relevant data sets used to train the computer vision models required for autonomous driving. Vector search and embeddings help identify relevant scenes and scenarios, which are annotated for the downstream learning and validation processes. You can still perform manual reviews, and you can enhance the processes to focus workloads on important scenes.

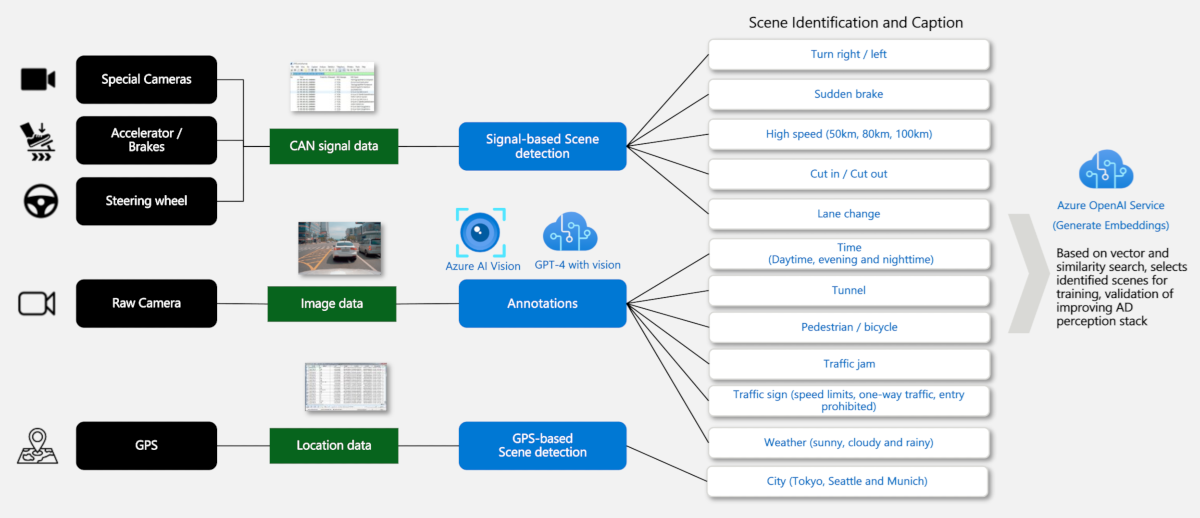

The following diagram depicts the workflow to support this scenario:

The workflow starts with real recordings from vehicles. The recordings also help in synthetically deriving scenarios at a later stage, based on the identified scenes. The Azure AI Vision service auto-annotates the recordings to detect objects and to build an understanding of the recorded camera frames and images.

The vehicle and its specially equipped cameras already provide information such as lane changes.

Different signal streams, such as camera, CAN telemetry, and location data, are merged to identify and capture scenes and to understand the objects they contain. For example, we partnered with Tata Consultancy Services to enhance the auto-annotation generated by the Azure AI Vision service with CAN-based scene detection; see Bringing life to smart and sustainable vehicles.
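As a rough illustration of merging signal streams, the following sketch aligns camera annotations with the nearest CAN and location samples by timestamp. It is a minimal example under stated assumptions: the `Sample` type, the field names, the `turn_signal` signal, and the 50 ms tolerance are all hypothetical and are not taken from the reference architecture or from any Azure service.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Sample:
    t: float       # timestamp in seconds since recording start (assumed unit)
    value: object  # payload: frame annotations, CAN signals, or a GPS fix


def nearest(samples, times, t, tolerance):
    """Return the sample closest in time to t, or None if none is within tolerance.

    `samples` must be sorted by timestamp and `times` must hold their timestamps.
    """
    i = bisect_left(times, t)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = samples[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda s: abs(s.t - t), default=None)
    if best is None or abs(best.t - t) > tolerance:
        return None
    return best


def merge_streams(frames, can, gps, tolerance=0.05):
    """Attach the nearest CAN and location sample to every annotated frame."""
    can_times = [s.t for s in can]
    gps_times = [s.t for s in gps]
    merged = []
    for frame in frames:
        c = nearest(can, can_times, frame.t, tolerance)
        g = nearest(gps, gps_times, frame.t, tolerance)
        merged.append({
            "t": frame.t,
            "objects": frame.value,
            "can": c.value if c else None,
            "location": g.value if g else None,
        })
    return merged


# Hypothetical sample data for illustration only.
frames = [Sample(0.00, ["car"]), Sample(0.10, ["car", "traffic sign"]), Sample(0.20, ["car"])]
can = [Sample(0.01, {"turn_signal": "off"}), Sample(0.11, {"turn_signal": "left"}), Sample(0.50, {"turn_signal": "off"})]
gps = [Sample(0.00, (48.1, 11.5))]

merged = merge_streams(frames, can, gps)
# Frames whose merged CAN data shows an active turn signal are lane-change candidates.
lane_change_candidates = [m["t"] for m in merged if m["can"] and m["can"]["turn_signal"] != "off"]
```

Nearest-timestamp matching with a tolerance is only one possible alignment strategy. Streams with very different sampling rates may call for interpolation or windowed aggregation instead.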

The Azure OpenAI service receives this information and generates embeddings in preparation for semantic and vector-based scene searches. Generating an embedding is the process of converting text into a dense vector of floating-point numbers, a data representation that machine learning models and algorithms can easily use. For details, see Learn how to generate embeddings with Azure OpenAI.
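Because embeddings are generated from text, the annotations first have to be turned into a textual scene description. The sketch below shows one possible way to do that. The scene dictionary layout and the `describe_scene` and `embed_texts` helpers are assumptions made for illustration. `embed_texts` expects a client that exposes `embeddings.create(model=..., input=...)`, as the `AzureOpenAI` client in the `openai` Python package (version 1.x) does, and the deployment name is a placeholder.

```python
def describe_scene(scene):
    """Turn a scene's annotations into a short text suitable for embedding.

    The keys "objects", "maneuver", and "location" are hypothetical.
    """
    objects = ", ".join(sorted(scene.get("objects", []))) or "no detected objects"
    parts = [f"Objects: {objects}."]
    if scene.get("maneuver"):
        parts.append(f"Maneuver: {scene['maneuver']}.")
    if scene.get("location"):
        parts.append(f"Location: {scene['location']}.")
    return " ".join(parts)


def embed_texts(client, deployment, texts):
    """Request one embedding vector per text from an embeddings-capable client."""
    response = client.embeddings.create(model=deployment, input=texts)
    return [item.embedding for item in response.data]


scene = {"objects": ["traffic sign", "car"], "maneuver": "overtaking", "location": "highway"}
text = describe_scene(scene)

# Assumed usage with the openai package; endpoint, key, API version, and
# deployment name are placeholders that you must supply:
#
#   from openai import AzureOpenAI
#   client = AzureOpenAI(azure_endpoint="https://<resource>.openai.azure.com",
#                        api_key="<key>", api_version="<api-version>")
#   vectors = embed_texts(client, "<embedding-deployment>", [text])
```

Passing the client in as a parameter keeps the description logic testable without network access.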

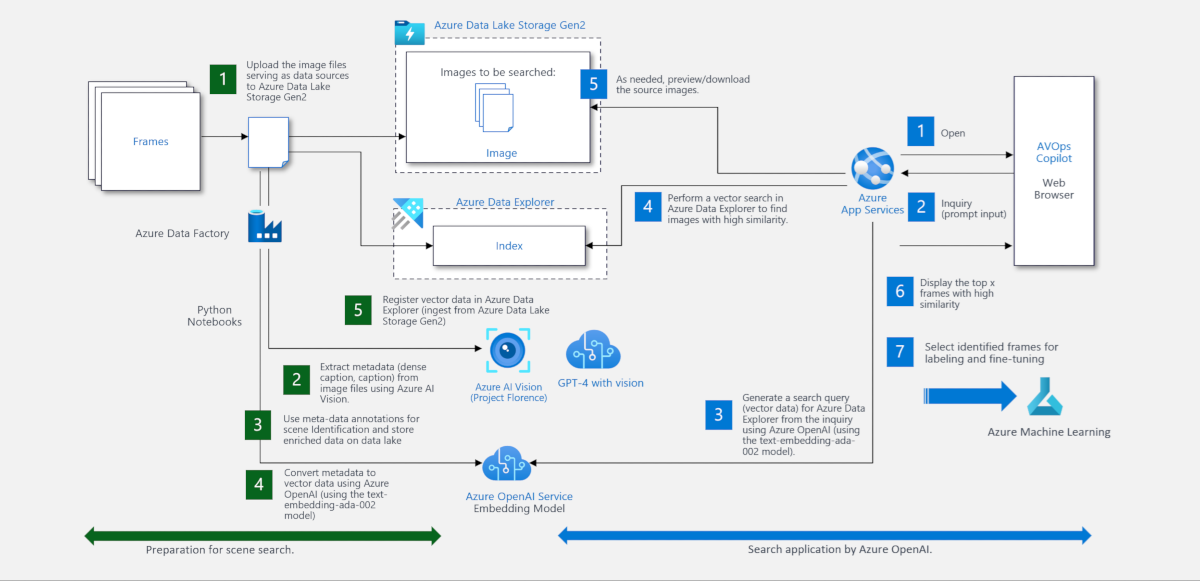

The following diagram shows the technical architecture using Microsoft AI services:

You can search scenes, including their annotated results, from a notebook, for instance, or integrate the search into custom UI web tools or a Copilot so that AD developers can select and extract relevant scenes.
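In a notebook, a vector-based scene search could look roughly like the following sketch. It ranks stored scene embeddings by cosine similarity to a query embedding. The three-dimensional vectors and scene IDs are toy values chosen for illustration; real embeddings have far more dimensions, and a production setup would more likely query a dedicated vector index than scan an in-memory dictionary.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search_scenes(query_vector, index, top_k=3):
    """Return the top_k (scene_id, score) pairs, most similar first."""
    scored = [(cosine_similarity(query_vector, vec), scene_id) for scene_id, vec in index.items()]
    scored.sort(reverse=True)
    return [(scene_id, score) for score, scene_id in scored[:top_k]]


# Hypothetical index: scene ID mapped to its (toy) embedding vector.
index = {
    "scene-001": [0.9, 0.1, 0.0],
    "scene-002": [0.1, 0.9, 0.1],
    "scene-003": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # stands in for the embedding of a text query

results = search_scenes(query, index, top_k=2)
result_ids = [scene_id for scene_id, _ in results]
```

The returned scene IDs can then be used to look up the recordings and annotations for review or extraction.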