Tutorial: Set up dbt for Fabric Data Warehouse

Applies to: ✅ Warehouse in Microsoft Fabric

This tutorial guides you through setting up dbt and deploying your first project to a Fabric Warehouse.

Introduction

The dbt (Data Build Tool) open-source framework simplifies data transformation and analytics engineering. It focuses on SQL-based transformations within the analytics layer, treating SQL as code. dbt supports version control, modularization, testing, and documentation.

The dbt adapter for Microsoft Fabric can be used to create dbt projects, which can then be deployed to a Fabric Data Warehouse.

You can also change the target platform for the dbt project by simply changing the adapter, for example; a project built for Azure Synapse dedicated SQL pool can be upgraded in a few seconds to a Fabric Data Warehouse.

Prerequisites for the dbt adapter for Microsoft Fabric

Follow this list to install and set up the dbt prerequisites:

Latest version of the dbt-fabric adapter from the PyPI (Python Package Index) repository using

pip install dbt-fabric.pip install dbt-fabricNote

By changing

pip install dbt-fabrictopip install dbt-synapseand using the following instructions, you can install the dbt adapter for Synapse dedicated SQL pool.Make sure to verify that dbt-fabric and its dependencies are installed by using

pip listcommand:pip listA long list of the packages and current versions should be returned from this command.

If you don't already have one, create a Warehouse. You can use the trial capacity for this exercise: sign up for the Microsoft Fabric free trial, create a workspace, and then create a warehouse.

Get started with dbt-fabric adapter

This tutorial uses Visual Studio Code, but you can use your preferred tool of your choice.

Clone the jaffle_shop demo dbt project onto your machine.

- You can clone a repo with Visual Studio Code's built-in source control.

- Or, for example, you can use the

git clonecommand:

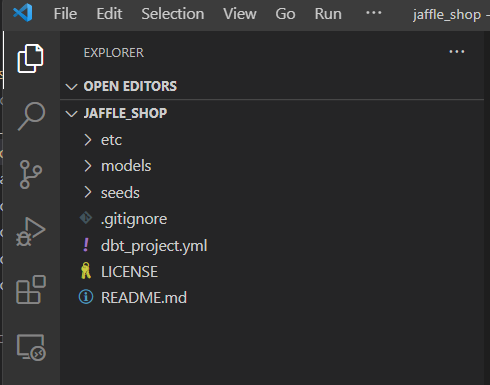

git clone https://github.com/dbt-labs/jaffle_shop.gitOpen the

jaffle_shopproject folder in Visual Studio Code.

You can skip the sign-up if you have created a Warehouse already.

Create a

profiles.ymlfile. Add the following configuration toprofiles.yml. This file configures the connection to your warehouse in Microsoft Fabric using the dbt-fabric adapter.config: partial_parse: true jaffle_shop: target: fabric-dev outputs: fabric-dev: authentication: CLI database: <put the database name here> driver: ODBC Driver 18 for SQL Server host: <enter your SQL analytics endpoint here> schema: dbo threads: 4 type: fabricNote

Change the

typefromfabrictosynapseto switch the database adapter to Azure Synapse Analytics, if desired. Any existing dbt project's data platform can be updated by changing the database adapter. For more information, see the dbt list of supported data platforms.Authenticate yourself to Azure in the Visual Studio Code terminal.

- Run

az loginin Visual Studio Code terminal if you're using Azure CLI authentication. - For Service Principal or other Microsoft Entra ID (formerly Azure Active Directory) authentication in Microsoft Fabric, refer to dbt (Data Build Tool) setup and dbt Resource Configurations. For more information, see Microsoft Entra authentication as an alternative to SQL authentication in Microsoft Fabric.

- Run

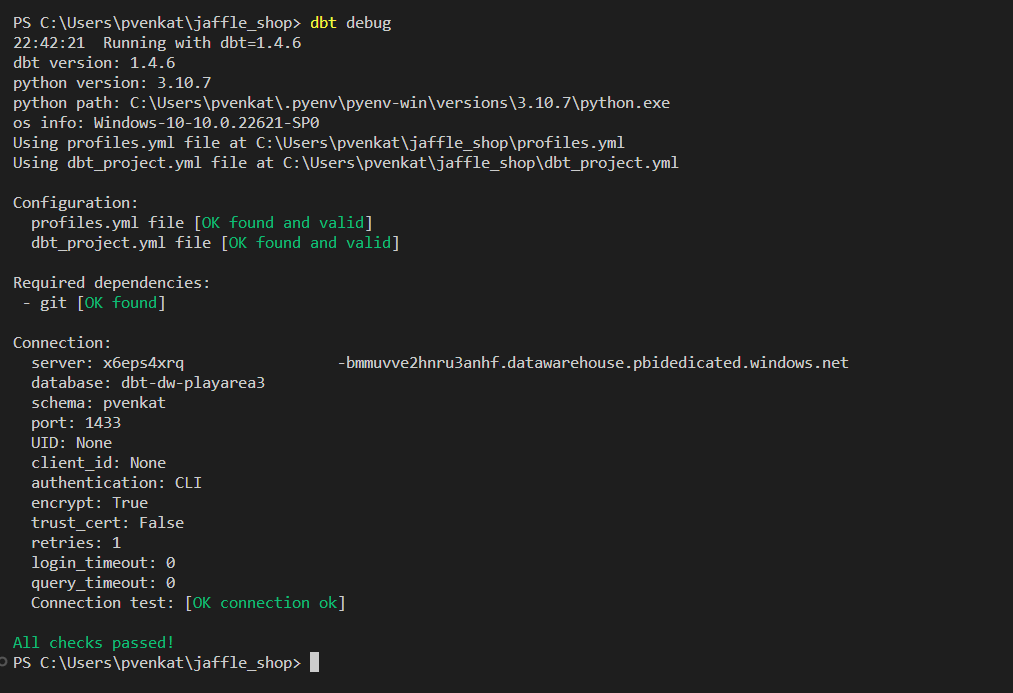

Now you're ready to test the connectivity. To test the connectivity to your warehouse, run

dbt debugin the Visual Studio Code terminal.dbt debugAll checks are passed, which means you can connect your warehouse using dbt-fabric adapter from the

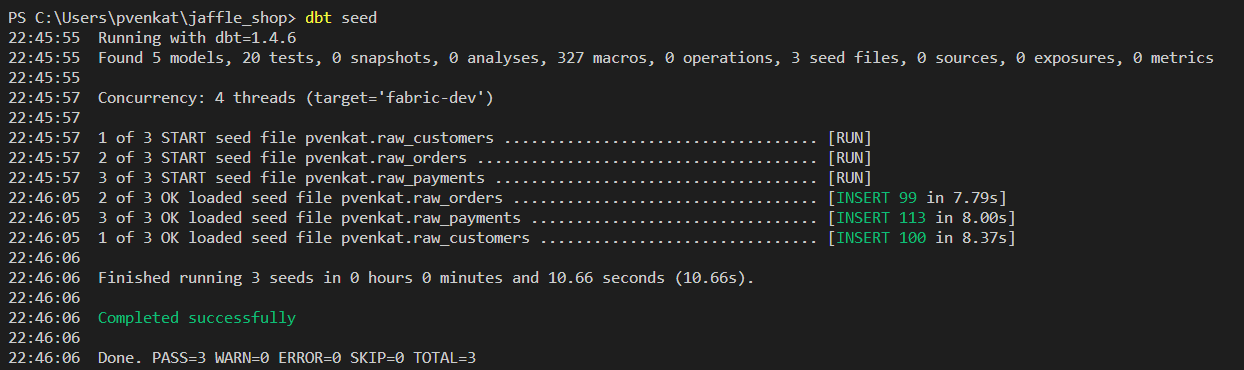

jaffle_shopdbt project.Now, it's time to test if the adapter is working or not. First run

dbt seedto insert sample data into the warehouse.Run

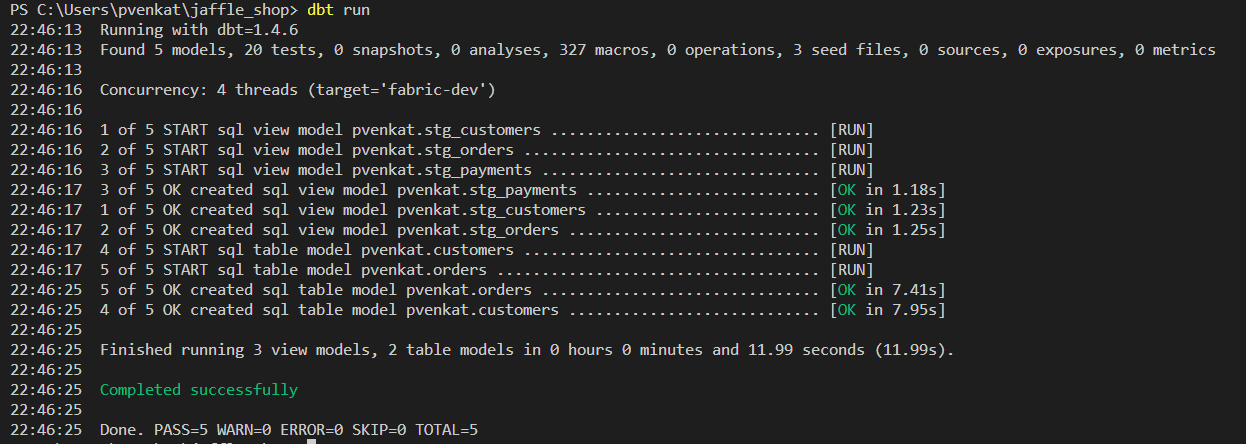

dbt runto validate data against some tests.dbt runRun

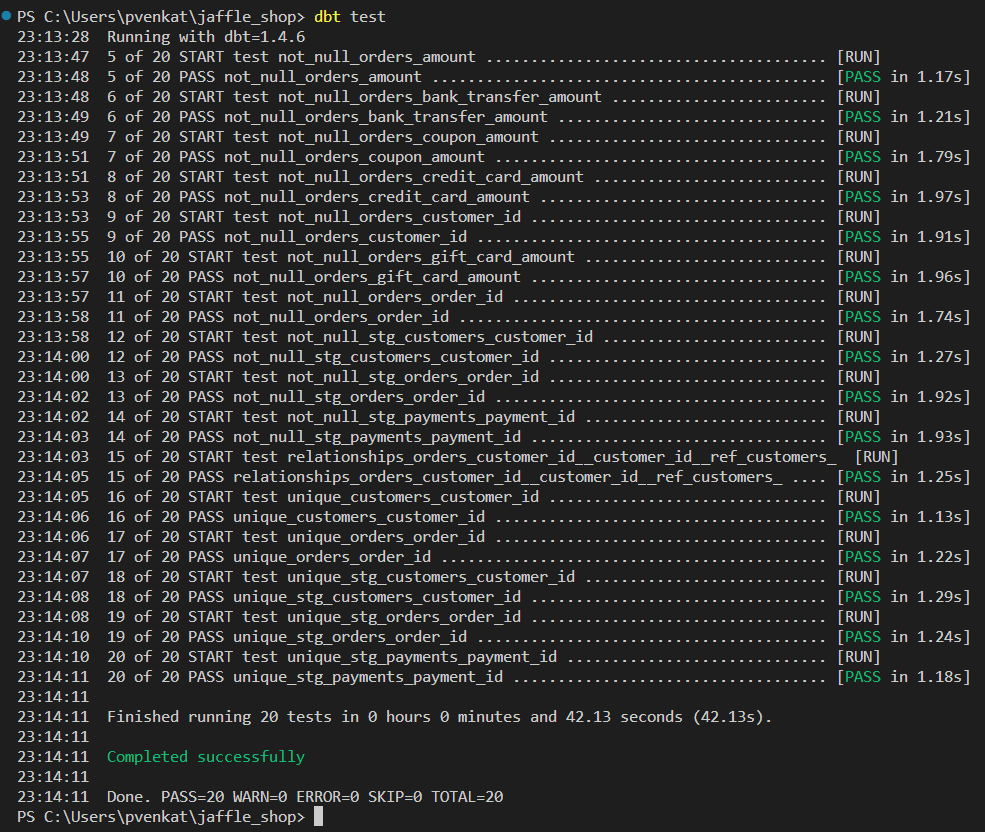

dbt testto run the models defined in the demo dbt project.dbt test

You have now deployed a dbt project to Fabric Data Warehouse.

Move between different warehouses

It's simple move the dbt project between different warehouses. A dbt project on any supported warehouse can be quickly migrated with this three step process:

Install the new adapter. For more information and full installation instructions, see dbt adapters.

Update the

typeproperty in theprofiles.ymlfile.Build the project.

dbt in Fabric Data Factory

When integrated with Apache Airflow, a popular workflow management system, dbt becomes a powerful tool for orchestrating data transformations. Airflow's scheduling and task management capabilities allow data teams to automate dbt runs. It ensures regular data updates and maintains a consistent flow of high-quality data for analysis and reporting. This combined approach, using dbt's transformation expertise with Airflow's workflow management, delivers efficient and robust data pipelines, ultimately leading to faster and more insightful data-driven decisions.

Apache Airflow is an open-source platform used to programmatically create, schedule, and monitor complex data workflows. It allows you to define a set of tasks, called operators, that can be combined into directed acyclic graphs (DAGs) to represent data pipelines.

For more information to operationalize dbt with your warehouse, see Transform data using dbt with Data Factory in Microsoft Fabric.

Considerations

Important things to consider when using dbt-fabric adapter:

Review the current limitations in Microsoft Fabric data warehousing.

Fabric supports Microsoft Entra ID (formerly Azure Active Directory) authentication for user principals, user identities, and service principals. The recommended authentication mode to interactively work on warehouse is CLI (command-line interfaces) and use service principals for automation.

Review the T-SQL (Transact-SQL) commands not supported in Fabric Data Warehouse.

Some T-SQL commands are supported by dbt-fabric adapter using

Create Table as Select(CTAS),DROP, andCREATEcommands, such asALTER TABLE ADD/ALTER/DROP COLUMN,MERGE,TRUNCATE,sp_rename.Review Unsupported data types to learn about the supported and unsupported data types.

You can log issues on the dbt-fabric adapter on GitHub by visiting Issues · microsoft/dbt-fabric · GitHub.