On-premises data gateway considerations for data destinations in Dataflow Gen2

This article lists the limitations and considerations when using the Data Gateway with data destinations scenarios in Dataflow Gen2.

Network issues with port 1433

When using Microsoft Fabric Dataflow Gen2 with an on-premises data gateway, you might encounter issues with the dataflow refresh process. The underlying problem occurs when the gateway is unable to connect to the dataflow staging Lakehouse in order to read the data before copying it to the desired data destination. This issue can occur regardless of the type of data destination being used.

During the overall dataflow refresh, the tables refresh can show as "Succeeded," but the activities section shows as "Failed". The error details for the activity WriteToDatabaseTableFrom_... indicate the following error:

Mashup Exception Error: Couldn't refresh the entity because of an issue with the mashup document MashupException.Error: Microsoft SQL: A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: TCP Provider, error: 0 - An attempt was made to access a socket in a way forbidden by its access permissions.) Details: DataSourceKind = Lakehouse;DataSourcePath = Lakehouse;Message = A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: TCP Provider, error: 0 - An attempt was made to access a socket in a way forbidden by its access permissions.);ErrorCode = -2146232060;Number = 10013

Note

From an architectural perspective, the dataflow engine uses an outbound HTTPS (port 443) endpoint to write data into a Lakehouse. However, reading data from the Lakehouse requires the use of the TDS protocol (TCP over port 1433). This protocol is utilized to copy the data from the staging lakehouse to the data destination. This explains why the Tables Load step succeeds while the data destination activity fails, even when both lakehouses are in the same OneLake instance.

Troubleshooting

To troubleshoot the issue, follow these steps:

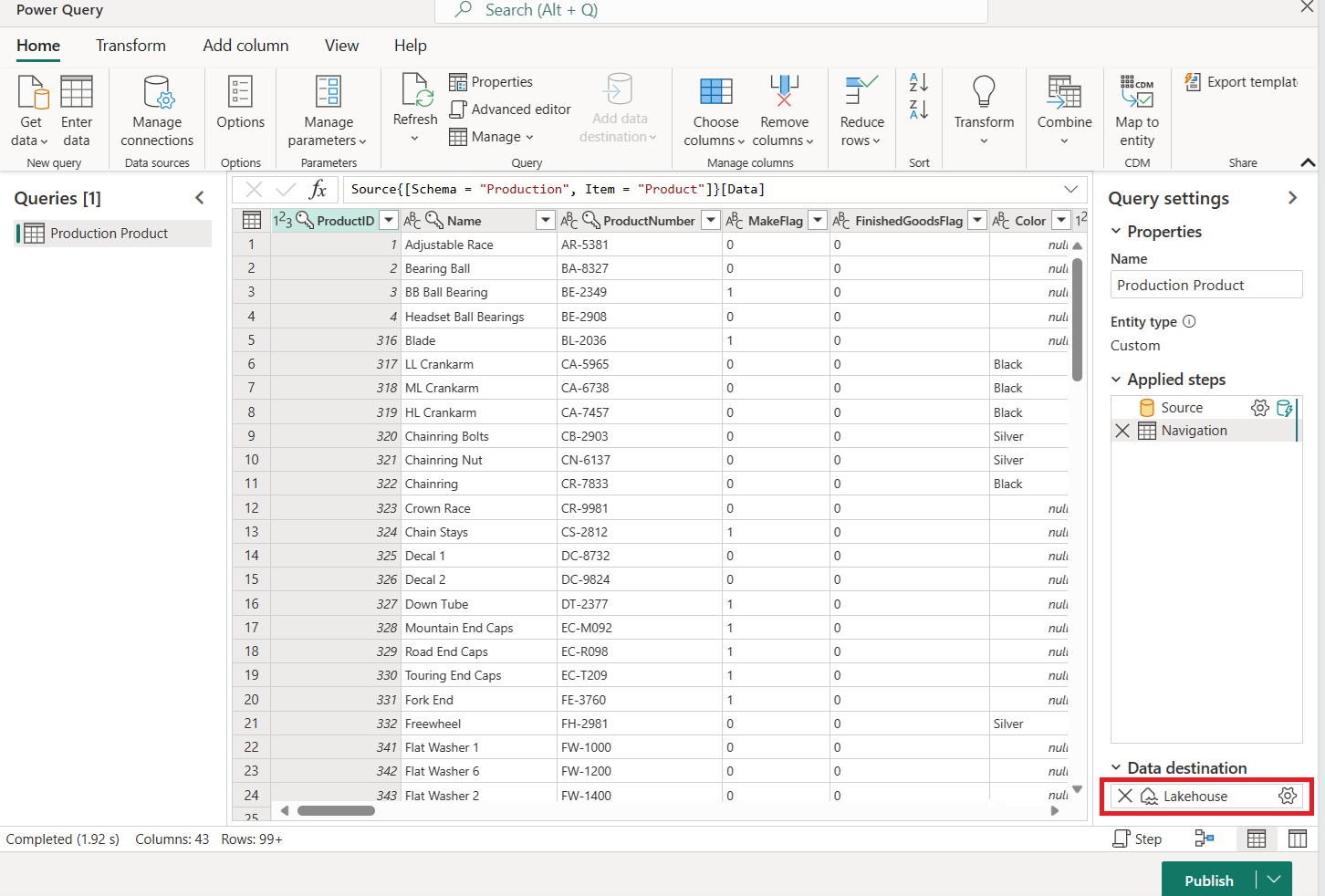

Confirm that the dataflow is configured with a data destination.

Verify that the dataflow refresh fails, with tables refresh showing as "Succeeded" and activities showing as "Failed".

Review the error details for the Activity

WriteToDatabaseTableFrom_..., which provides information about the encountered error.

Solution: Set new firewall rules on server running the gateway

The firewall rules on the gateway server and/or customer's proxy servers need to be updated to allow outbound traffic from the gateway server to the below endpoints. If your firewall doesn't support wildcards, then use the IP addresses from Azure IP Ranges and Service Tags. They need to be kept in sync each month.

- Protocol: TCP

- Endpoints: *.datawarehouse.pbidedicated.windows.net, *.datawarehouse.fabric.microsoft.com, *.dfs.fabric.microsoft.com

- Port: 1433

Note

In certain scenarios, especially when the capacity is located in a region that is not the nearest to the Gateway, it might be necessary to configure the firewall to allow access to multiple endpoints(*cloudapp.azure.com). This adjustment is required to accommodate redirections that may occur under these conditions. If the traffic destined to *.cloudapp.azure.com do not get intercepted by the rule, you can alternatively allow the IP addresses for your data region in your firewall.

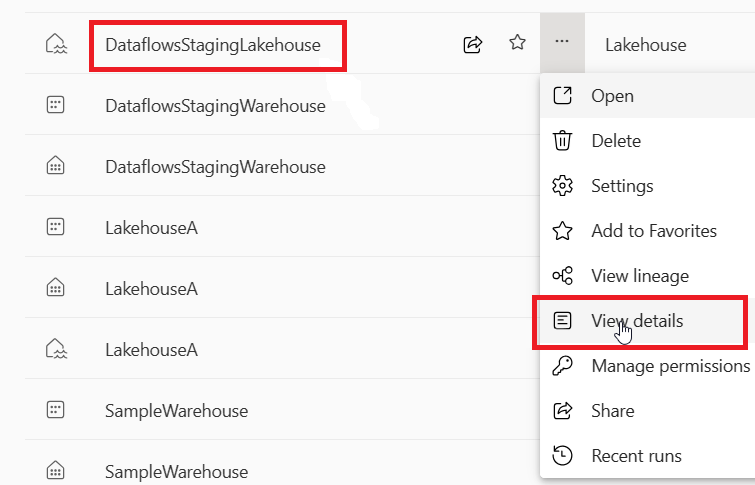

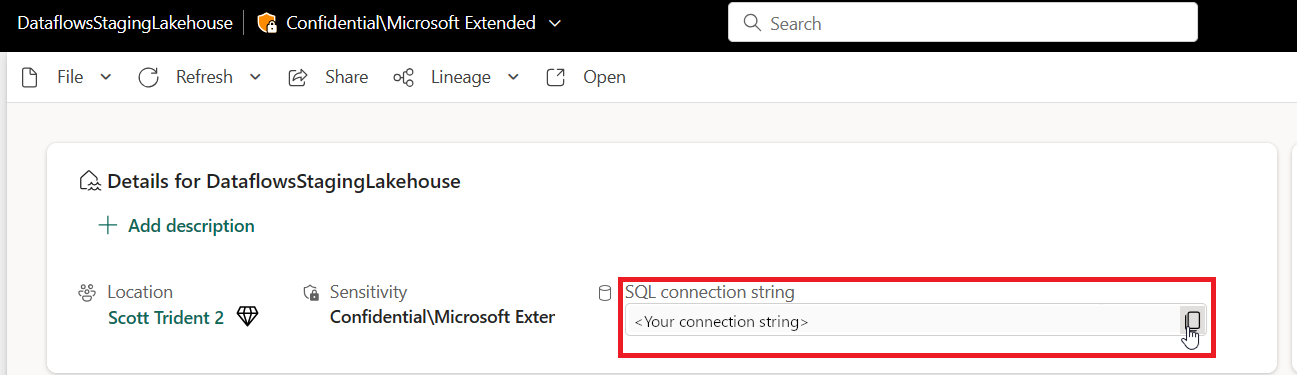

If you want to narrow down the scope of the endpoint to the actual OneLake instance in a workspace (instead of the wildcard *.datawarehouse.pbidedicated.windows.net), that URL can be found by navigating to the Fabric workspace, locating DataflowsStagingLakehouse, and selecting View Details. Then, copy and paste the SQL connection string.

The entire endpoint name looks similar to the following example:

x6eps4xrq2xudenlfv6naeo3i4-l27nd6wdk4oephe4gz4j7mdzka.datawarehouse.pbidedicated.windows.net

Workaround: Split dataflow in a separate ingest and load dataflow

If you're unable to update the firewall rules, you can split the dataflow into two separate dataflows. The first dataflow is responsible for ingesting the data into the staging lakehouse. The second dataflow is responsible for loading the data from the staging lakehouse into the data destination. This workaround isn't ideal, as it requires the use of two separate dataflows, but it can be used as a temporary solution until the firewall rules can be updated.

To implement this workaround, follow these steps:

Remove the data destination from your current dataflow that ingests data via your gateway.

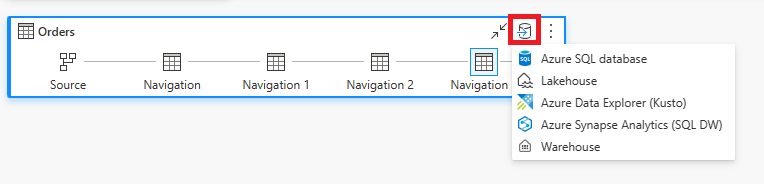

Create a new dataflow that uses the dataflow connector to connect to the ingested dataflow. This dataflow is responsible for ingesting the data from staging into the data destination.

Set the data destination to be the data destination of your choice for this new dataflow.

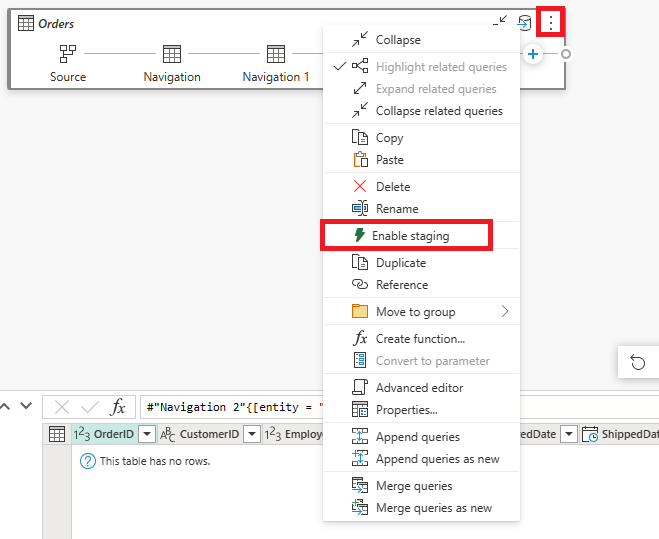

Optionally, you can disable staging for this new dataflow. This change prevents the data from being copied to the staging lakehouse again and instead copies the data directly from the ingested dataflow to the data destination.