Lakehouse tutorial: Create a lakehouse, ingest sample data, and build a report

In this tutorial, you build a lakehouse, ingest sample data into the Delta table, apply transformation where required, and then create reports. In this tutorial, you'll learn to:

- Create a lakehouse in Microsoft Fabric

- Download and ingest sample customer data

- Add tables to the semantic model

- Build a report

If you don’t have Microsoft Fabric, sign up for a free trial capacity.

Prerequisites

- Before you create a lakehouse, you must create a Fabric workspace.

- Before you ingest a CSV file, you must have OneDrive configured. If you don't have OneDrive configured, sign up for the Microsoft 365 free trial: Free Trial - Try Microsoft 365 for a month.

Create a lakehouse

In this section, you create a lakehouse in Fabric.

In Fabric, select Workspaces from the navigation bar.

To open your workspace, enter its name in the search box located at the top and select it from the search results.

From the workspace, select New item, then select Lakehouse.

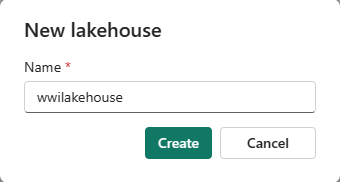

In the New lakehouse dialog box, enter wwilakehouse in the Name field.

Select Create to create and open the new lakehouse.

Ingest sample data

In this section, you ingest sample customer data into the lakehouse.

Note

If you don't have OneDrive configured, sign up for the Microsoft 365 free trial: Free Trial - Try Microsoft 365 for a month.

Download the dimension_customer.csv file from the Fabric samples repo.

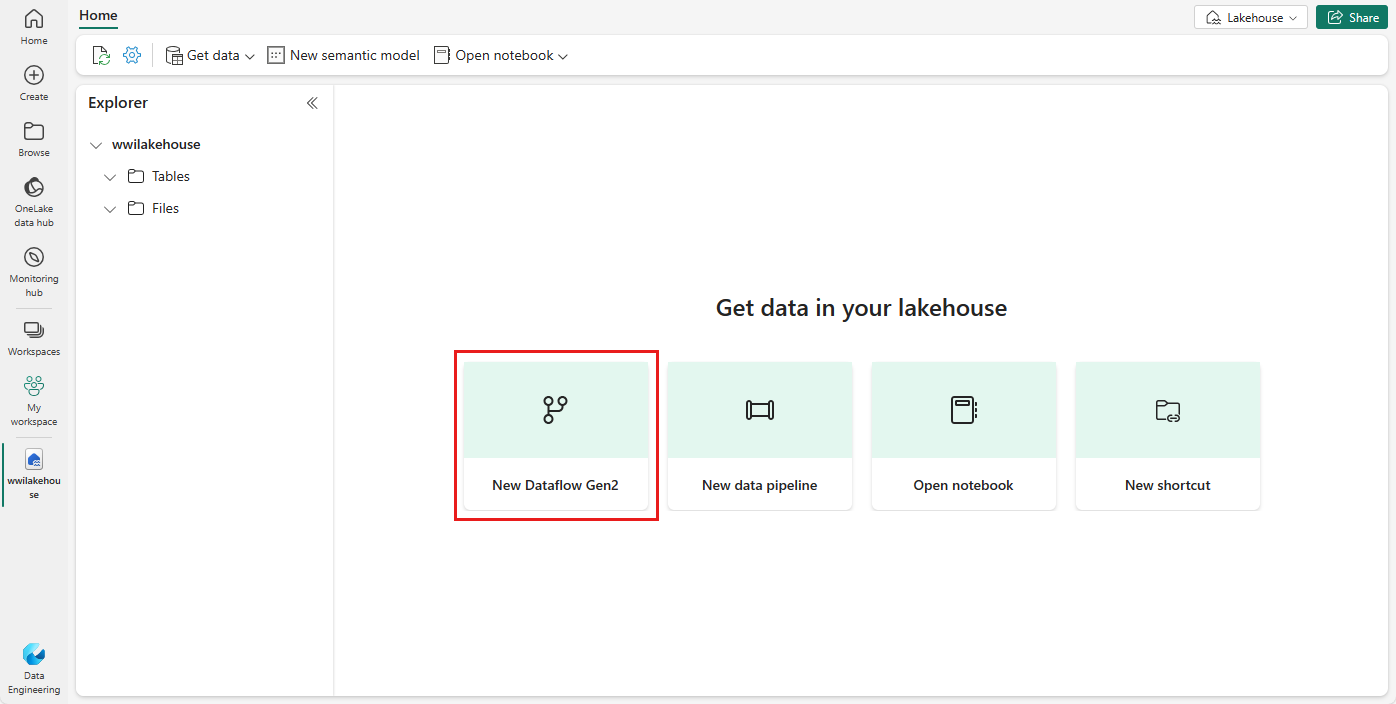

In the Home tab, under Get data in your lakehouse, you see options to load data into the lakehouse. Select New Dataflow Gen2.

On the new dataflow screen, select Import from a Text/CSV file.

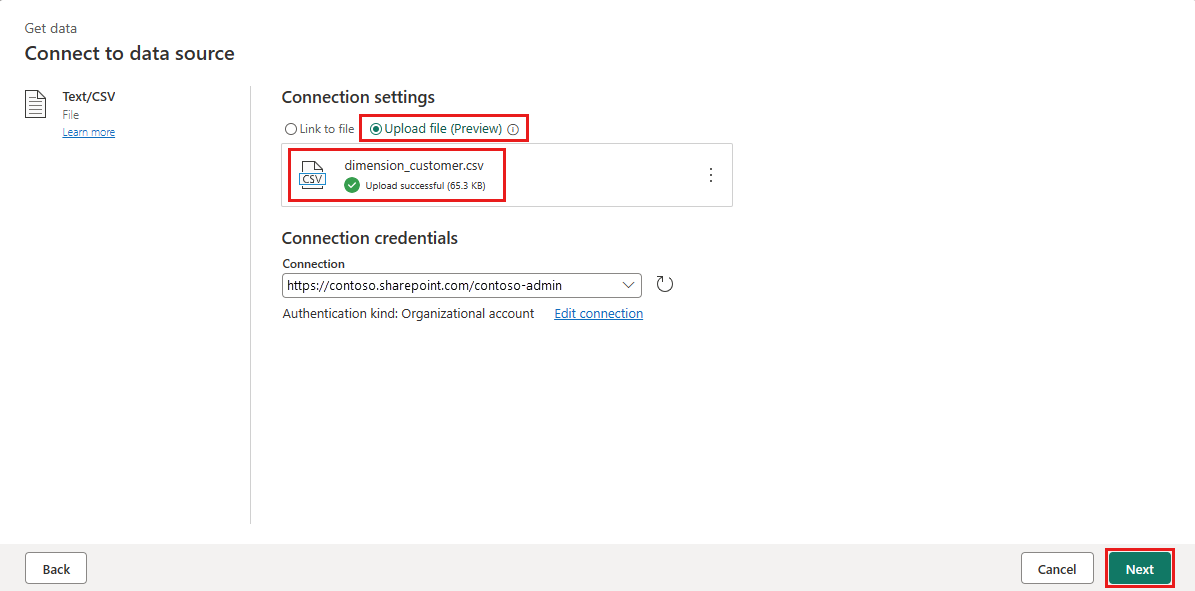

On the Connect to data source screen, select the Upload file radio button. Drag and drop the dimension_customer.csv file that you downloaded in step 1. After the file is uploaded, select Next.

From the Preview file data page, preview the data and select Create to proceed and return back to the dataflow canvas.

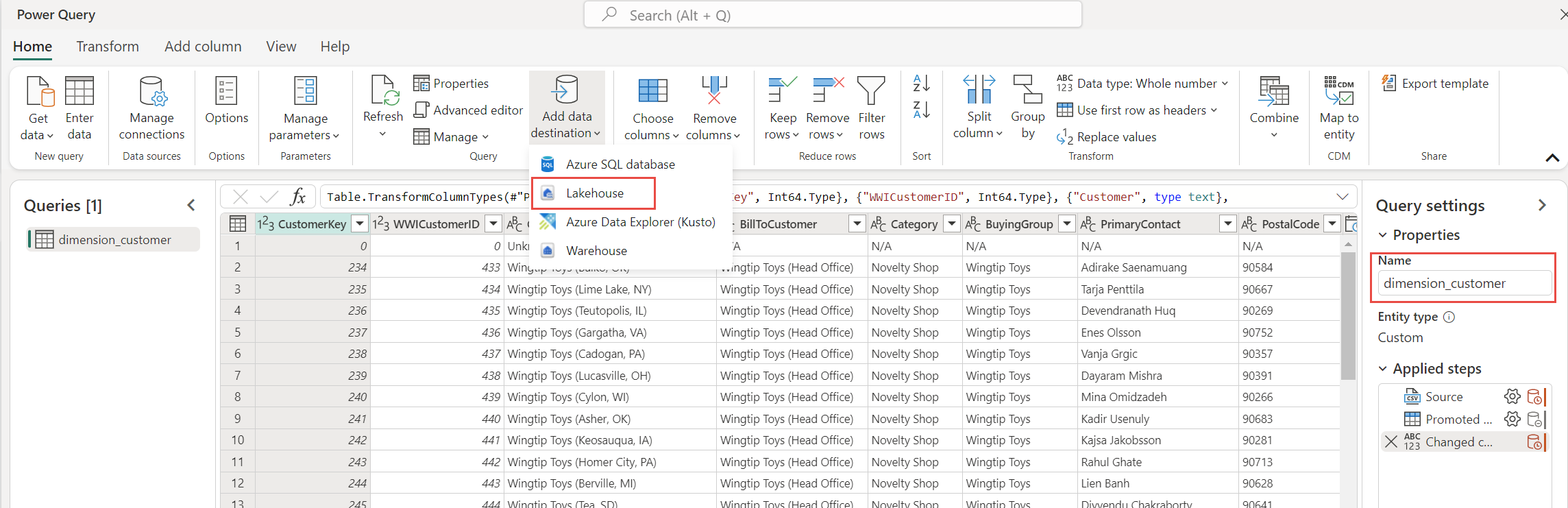

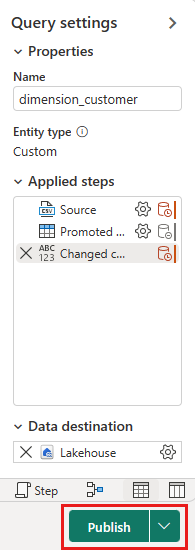

In the Query settings pane, update the Name field to dimension_customer.

Note

Fabric adds a space and number at the end of the table name by default. Table names must be lower case and must not contain spaces. Please rename it appropriately and remove any spaces from the table name.

In this tutorial, you associated the customer data with a lakehouse. If you have other data items that you want to associate with the lakehouse, you can add them:

From the menu items, select Add data destination and select Lakehouse. From the Connect to data destination screen, sign into your account if necessary and select Next.

Navigate to the wwilakehouse in your workspace.

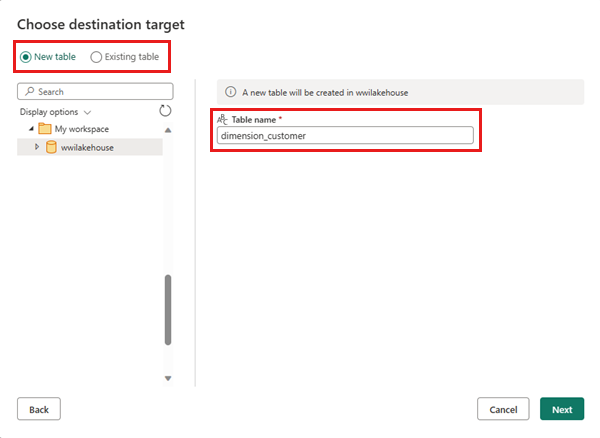

If the dimension_customer table doesn't exist, select the New table setting and enter the table name dimension_customer. If the table already exists, select the Existing table setting and choose dimension_customer from the list of tables in the object explorer. Select Next.

On the Choose destination settings pane, select Replace as Update method. Select Save settings to return to the dataflow canvas.

From the dataflow canvas, you can easily transform the data based on your business requirements. For simplicity, we aren't making any changes in this tutorial. To proceed, select Publish at the bottom right of the screen.

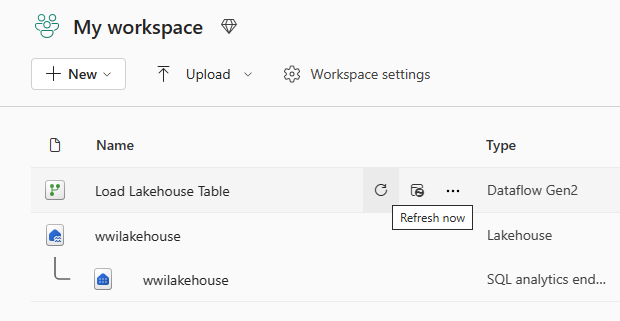

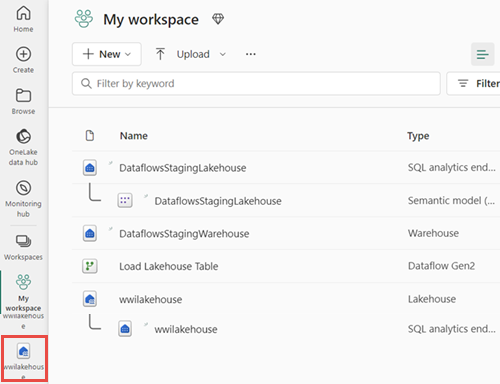

A spinning circle next to the dataflow's name indicates publishing is in progress in the item view. When publishing is complete, select the ... and select Properties. Rename the dataflow to Load Lakehouse Table and select Save.

Select the Refresh now option next to the data flow name to refresh the dataflow. This option runs the data flow and moves data from the source file to lakehouse table. While it's in progress, you see a spinning circle under Refreshed column in the item view.

Once the dataflow is refreshed, select your new lakehouse in the navigation bar to view the dimension_customer Delta table.

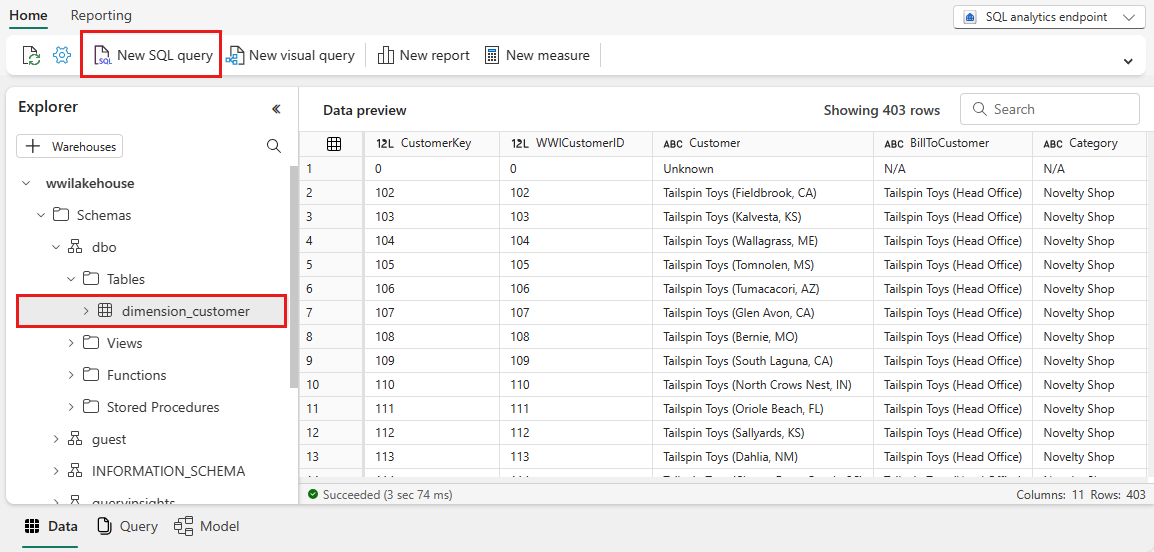

Select the table to preview its data. You can also use the SQL analytics endpoint of the lakehouse to query the data with SQL statements. Select SQL analytics endpoint from the Lakehouse dropdown menu at the top right of the screen.

Select the dimension_customer table to preview its data or select New SQL query to write your SQL statements.

The following sample query aggregates the row count based on the BuyingGroup column of the dimension_customer table. SQL query files are saved automatically for future reference, and you can rename or delete these files based on your need.

To run the script, select the Run icon at the top of the script file.

SELECT BuyingGroup, Count(*) AS Total FROM dimension_customer GROUP BY BuyingGroup

Build a report

In this section, you'll build a report from the ingested data.

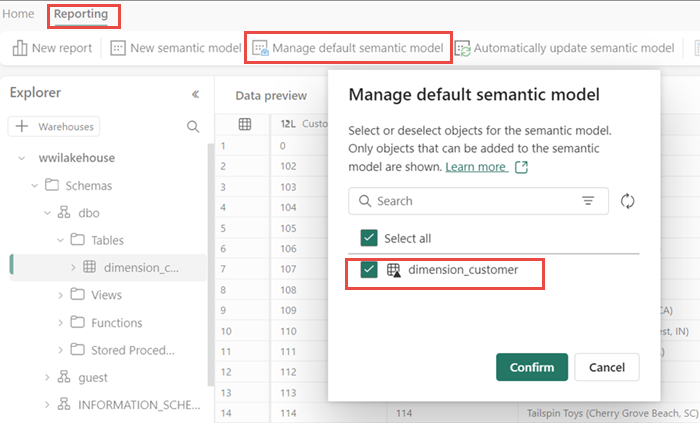

Previously all the lakehouse tables and views were automatically added to the semantic model. With recent updates, for new lakehouses, you must manually add your tables to the semantic model. Open your lakehouse and switch to the SQL analytics endpoint view. From the Reporting tab, select Manage default semantic model and select the tables that you want to add to the semantic model. In this case, select the dimension_customer table.

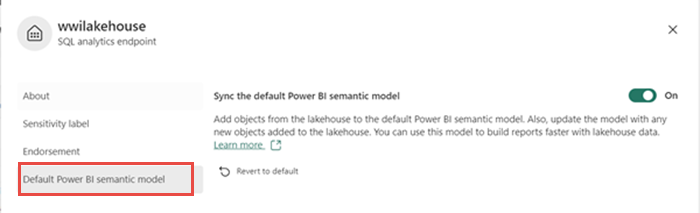

To ensure that the tables in the semantic model are always in sync, switch to the SQL analytics endpoint view and open the lakehouse settings pane. Select Default Power BI semantic model and turn on Sync the default Power BI semantic model. For more information, see Default Power BI semantic models.

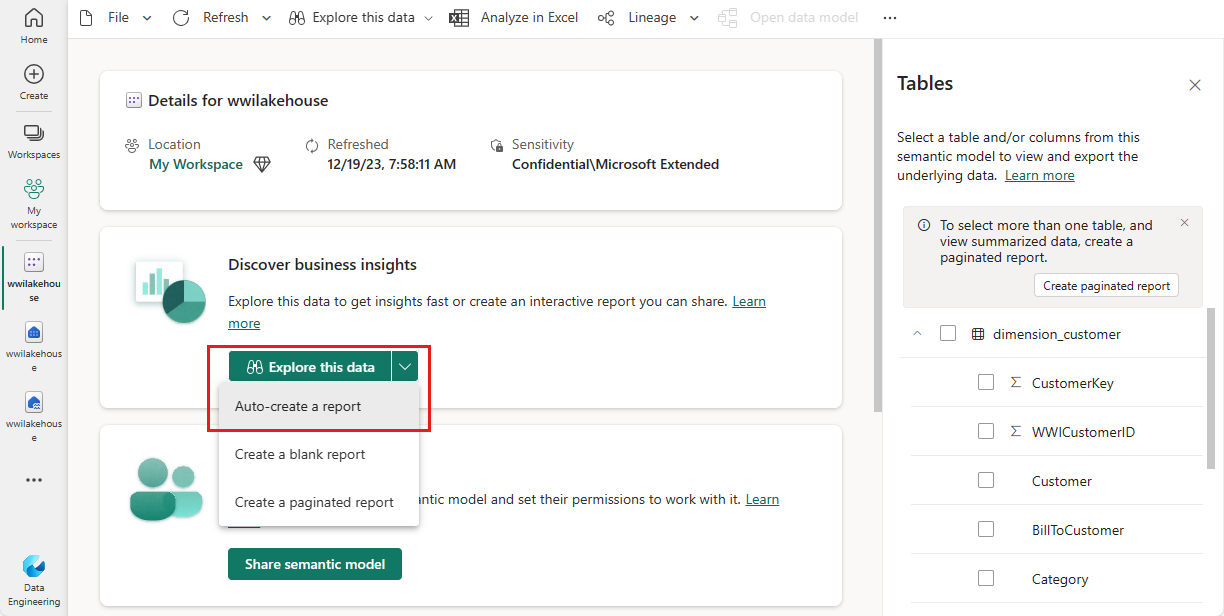

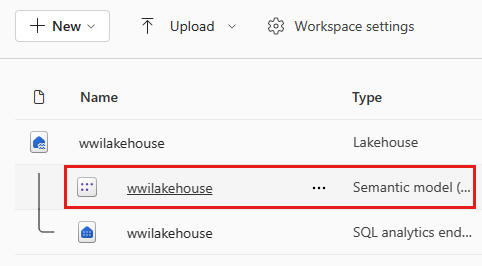

After the table is added, Fabric creates a semantic model with the same name as the lakehouse.

From the semantic model pane, you can view all the tables. You have options to create reports either from scratch, paginated reports, or let Power BI automatically create a report based on your data. For this tutorial, under Explore this data, select Auto-create a report. In the next tutorial, we create a report from scratch.

Because the table is a dimension and there are no measures in it, Power BI creates a measure for the row count and aggregates it across different columns, and creates different charts as shown in the following image. You can save this report for the future by selecting Save from the top ribbon. You can make more changes to this report to meet your requirements by including or excluding other tables or columns.