Development workflow for Docker apps

Tip

This content is an excerpt from the eBook, .NET Microservices Architecture for Containerized .NET Applications, available on .NET Docs or as a free downloadable PDF that can be read offline.

The application development life cycle starts at your computer, as a developer, where you code the application using your preferred language and test it locally. With this workflow, no matter which language, framework, and platform you choose, you're always developing and testing Docker containers, but doing so locally.

Each container (an instance of a Docker image) includes the following components:

- An operating system selection, for example, a Linux distribution, Windows Nano Server, or Windows Server Core.

- Files added during development, for example, source code and application binaries.

- Configuration information, such as environment settings and dependencies.

Workflow for developing Docker container-based applications

This section describes the inner-loop development workflow for Docker container-based applications. The inner-loop workflow means it's not considering the broader DevOps workflow, which can include up to production deployment, and just focuses on the development work done on the developer's computer. The initial steps to set up the environment aren't included, since those steps are done only once.

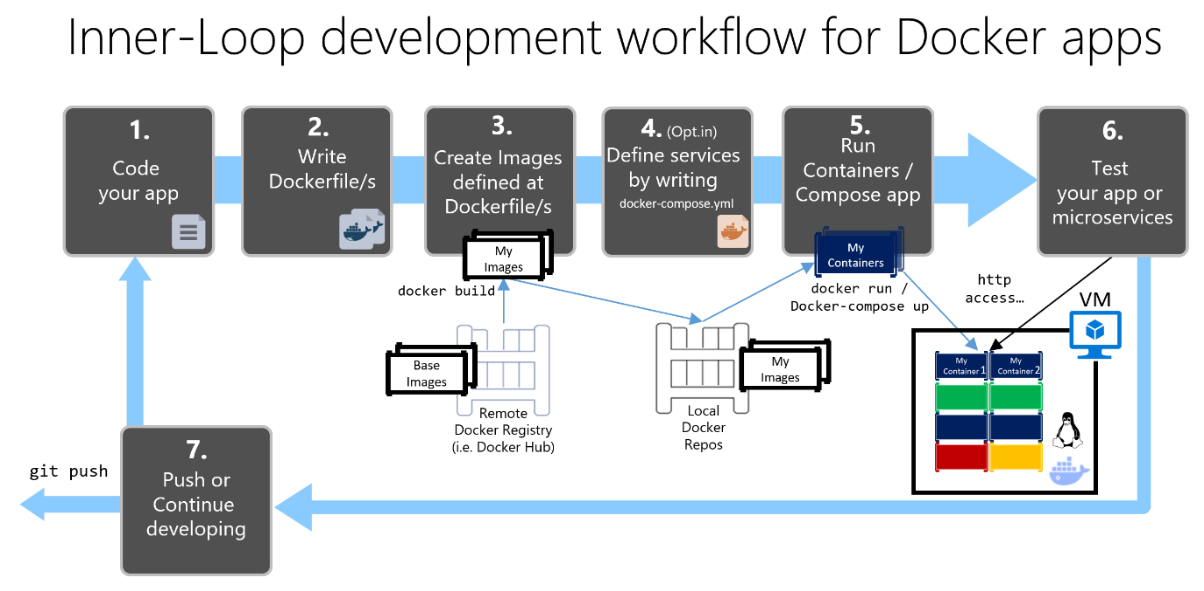

An application is composed of your own services plus additional libraries (dependencies). The following are the basic steps you usually take when building a Docker application, as illustrated in Figure 5-1.

The development process for Docker apps: 1 - Code your App, 2 - Write Dockerfile/s, 3 - Create images defined at Dockerfile/s, 4 - (optional) Compose services in the docker-compose.yml file, 5 - Run container or docker-compose app, 6 - Test your app or microservices, 7 - Push to repo and repeat.

Figure 5-1. Step-by-step workflow for developing Docker containerized apps

In this section, this whole process is detailed and every major step is explained by focusing on a Visual Studio environment.

When you're using an editor/CLI development approach (for example, Visual Studio Code plus Docker CLI on macOS or Windows), you need to know every step, generally in more detail than if you're using Visual Studio. For more information about working in a CLI environment, see the e-book Containerized Docker Application lifecycle with Microsoft Platforms and Tools.

When you're using Visual Studio 2022, many of those steps are handled for you, which dramatically improves your productivity. This is especially true when you're using Visual Studio 2022 and targeting multi-container applications. For instance, with just one mouse click, Visual Studio adds the Dockerfile and docker-compose.yml file to your projects with the configuration for your application. When you run the application in Visual Studio, it builds the Docker image and runs the multi-container application directly in Docker; it even allows you to debug several containers at once. These features will boost your development speed.

However, just because Visual Studio makes those steps automatic doesn't mean that you don't need to know what's going on underneath with Docker. Therefore, the following guidance details every step.

Step 1. Start coding and create your initial application or service baseline

Developing a Docker application is similar to the way you develop an application without Docker. The difference is that while developing for Docker, you're deploying and testing your application or services running within Docker containers in your local environment (either a Linux VM setup by Docker or directly Windows if using Windows Containers).

Set up your local environment with Visual Studio

To begin, make sure you have Docker Desktop for Windows for Windows installed, as explained in the following instructions:

Get started with Docker Desktop for Windows

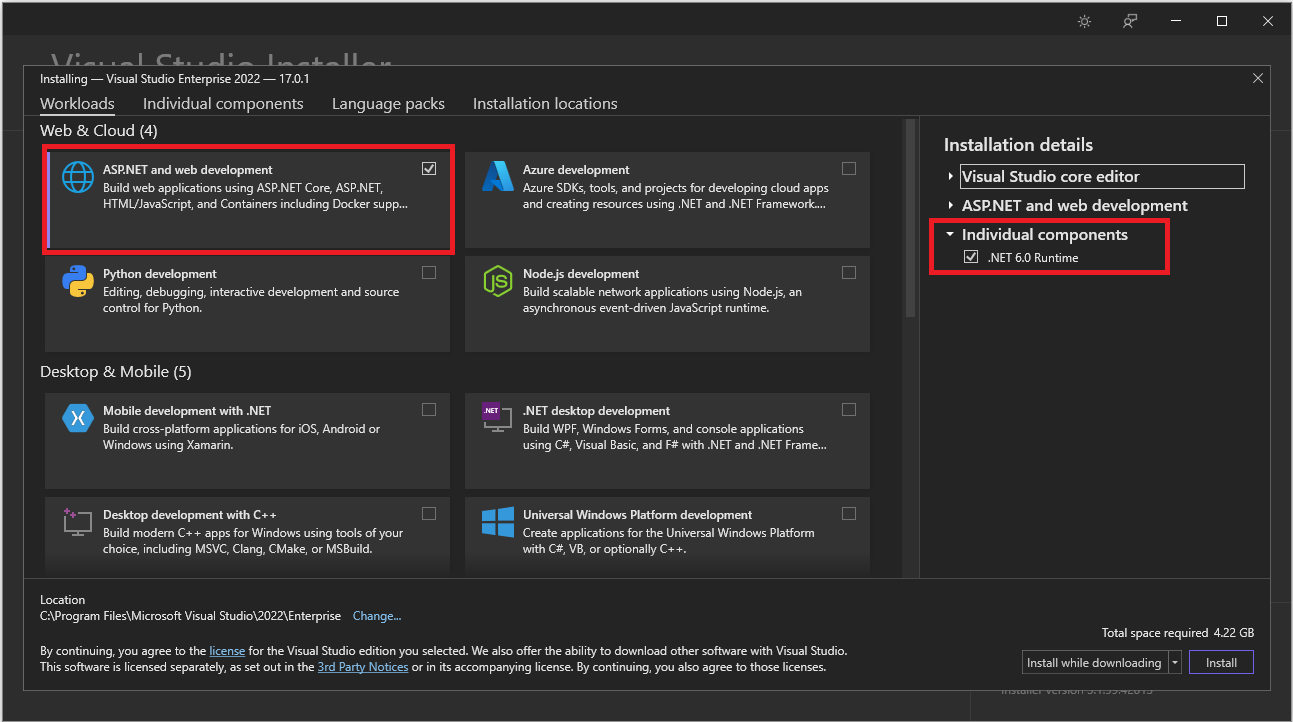

In addition, you need Visual Studio 2022 version 17.0, with the .ASP.NET and web development workload installed, as shown in Figure 5-2.

Figure 5-2. Selecting the ASP.NET and web development workload during Visual Studio 2022 setup

You can start coding your application in plain .NET (usually in .NET Core or later if you're planning to use containers) even before enabling Docker in your application and deploying and testing in Docker. However, it is recommended that you start working on Docker as soon as possible, because that will be the real environment and any issues can be discovered as soon as possible. This is encouraged because Visual Studio makes it so easy to work with Docker that it almost feels transparent—the best example when debugging multi-container applications from Visual Studio.

Additional resources

Get started with Docker Desktop for Windows

https://docs.docker.com/docker-for-windows/Visual Studio 2022

https://visualstudio.microsoft.com/downloads/

Step 2. Create a Dockerfile related to an existing .NET base image

You need a Dockerfile for each custom image you want to build; you also need a Dockerfile for each container to be deployed, whether you deploy automatically from Visual Studio or manually using the Docker CLI (docker run and docker-compose commands). If your application contains a single custom service, you need a single Dockerfile. If your application contains multiple services (as in a microservices architecture), you need one Dockerfile for each service.

The Dockerfile is placed in the root folder of your application or service. It contains the commands that tell Docker how to set up and run your application or service in a container. You can manually create a Dockerfile in code and add it to your project along with your .NET dependencies.

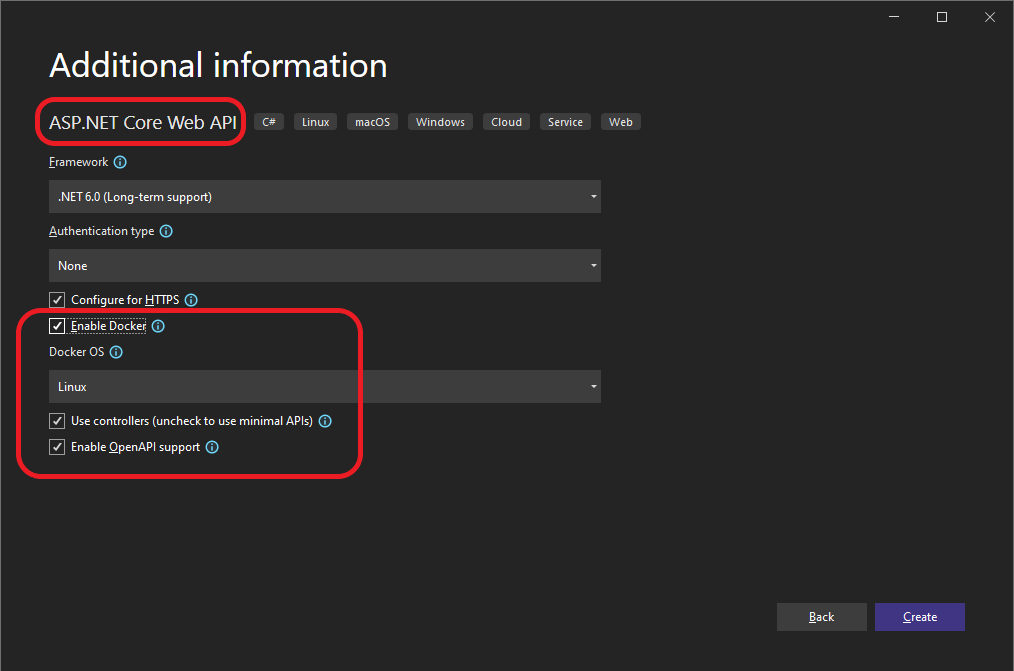

With Visual Studio and its tools for Docker, this task requires only a few mouse clicks. When you create a new project in Visual Studio 2022, there's an option named Enable Docker Support, as shown in Figure 5-3.

Figure 5-3. Enabling Docker Support when creating a new ASP.NET Core project in Visual Studio 2022

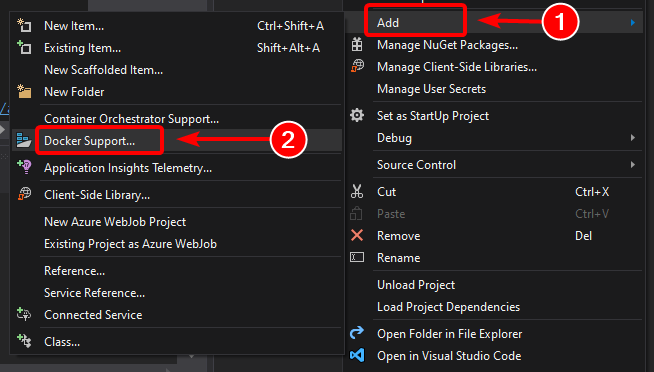

You can also enable Docker support on an existing ASP.NET Core web app project by right-clicking the project in Solution Explorer and selecting Add > Docker Support..., as shown in Figure 5-4.

Figure 5-4. Enabling Docker support in an existing Visual Studio 2022 project

This action adds a Dockerfile to the project with the required configuration, and is only available on ASP.NET Core projects.

In a similar fashion, Visual Studio can also add a docker-compose.yml file for the whole solution with the option Add > Container Orchestrator Support.... In step 4, we'll explore this option in greater detail.

Using an existing official .NET Docker image

You usually build a custom image for your container on top of a base image you get from an official repository like the Docker Hub registry. That's precisely what happens under the covers when you enable Docker support in Visual Studio. Your Dockerfile will use an existing dotnet/core/aspnet image.

Earlier we explained which Docker images and repos you can use, depending on the framework and OS you've chosen. For instance, if you want to use ASP.NET Core (Linux or Windows), the image to use is mcr.microsoft.com/dotnet/aspnet:8.0. Therefore, you just need to specify what base Docker image you'll use for your container. You do that by adding FROM mcr.microsoft.com/dotnet/aspnet:8.0 to your Dockerfile. This is automatically performed by Visual Studio, but if you were to update the version, you update this value.

Using an official .NET image repository from Docker Hub with a version number ensures that the same language features are available on all machines (including development, testing, and production).

The following example shows a sample Dockerfile for an ASP.NET Core container.

FROM mcr.microsoft.com/dotnet/aspnet:8.0

ARG source

WORKDIR /app

EXPOSE 80

COPY ${source:-obj/Docker/publish} .

ENTRYPOINT ["dotnet", " MySingleContainerWebApp.dll "]

In this case, the image is based on version 8.0 of the official ASP.NET Core Docker image (multi-arch for Linux and Windows). This is the setting FROM mcr.microsoft.com/dotnet/aspnet:8.0. (For more information about this base image, see the ASP.NET Core Docker Image page.) In the Dockerfile, you also need to instruct Docker to listen on the TCP port you will use at runtime (in this case, port 80, as configured with the EXPOSE setting).

You can specify additional configuration settings in the Dockerfile, depending on the language and framework you're using. For instance, the ENTRYPOINT line with ["dotnet", "MySingleContainerWebApp.dll"] tells Docker to run a .NET application. If you're using the SDK and the .NET CLI (dotnet CLI) to build and run the .NET application, this setting would be different. The bottom line is that the ENTRYPOINT line and other settings will be different depending on the language and platform you choose for your application.

Additional resources

Building Docker Images for ASP.NET Core Applications

https://learn.microsoft.com/dotnet/core/docker/building-net-docker-imagesBuilding container images. In the official Docker documentation.

https://docs.docker.com/get-started/docker-concepts/building-images/Staying up-to-date with .NET Container Images

https://devblogs.microsoft.com/dotnet/staying-up-to-date-with-net-container-images/Using .NET and Docker Together - DockerCon 2018 Update

https://devblogs.microsoft.com/dotnet/using-net-and-docker-together-dockercon-2018-update/

Using multi-arch image repositories

A single repo can contain platform variants, such as a Linux image and a Windows image. This feature allows vendors like Microsoft (base image creators) to create a single repo to cover multiple platforms (that is, Linux and Windows). For example, the .NET repository available in the Docker Hub registry provides support for Linux and Windows Nano Server by using the same repo name.

If you specify a tag, targeting a platform that is explicit like in the following cases:

mcr.microsoft.com/dotnet/aspnet:8.0-bullseye-slim

Targets: .NET 8 runtime-only on Linuxmcr.microsoft.com/dotnet/aspnet:8.0-nanoserver-ltsc2022

Targets: .NET 8 runtime-only on Windows Nano Server

But, if you specify the same image name, even with the same tag, the multi-arch images (like the aspnet image) will use the Linux or Windows version depending on the Docker host OS you're deploying, as shown in the following example:

mcr.microsoft.com/dotnet/aspnet:8.0

Multi-arch: .NET 8 runtime-only on Linux or Windows Nano Server depending on the Docker host OS

This way, when you pull an image from a Windows host, it will pull the Windows variant, and pulling the same image name from a Linux host will pull the Linux variant.

Multi-stage builds in Dockerfile

The Dockerfile is similar to a batch script. Similar to what you would do if you had to set up the machine from the command line.

It starts with a base image that sets up the initial context, it's like the startup filesystem, that sits on top of the host OS. It's not an OS, but you can think of it like "the" OS inside the container.

The execution of every command line creates a new layer on the filesystem with the changes from the previous one, so that, when combined, produce the resulting filesystem.

Since every new layer "rests" on top of the previous one and the resulting image size increases with every command, images can get very large if they have to include, for example, the SDK needed to build and publish an application.

This is where multi-stage builds get into the plot (from Docker 17.05 and higher) to do their magic.

The core idea is that you can separate the Dockerfile execution process in stages, where a stage is an initial image followed by one or more commands, and the last stage determines the final image size.

In short, multi-stage builds allow splitting the creation in different "phases" and then assemble the final image taking only the relevant directories from the intermediate stages. The general strategy to use this feature is:

Use a base SDK image (doesn't matter how large), with everything needed to build and publish the application to a folder and then

Use a base, small, runtime-only image and copy the publishing folder from the previous stage to produce a small final image.

Probably the best way to understand multi-stage is going through a Dockerfile in detail, line by line, so let's begin with the initial Dockerfile created by Visual Studio when adding Docker support to a project and will get into some optimizations later.

The initial Dockerfile might look something like this:

1 FROM mcr.microsoft.com/dotnet/aspnet:8.0 AS base

2 WORKDIR /app

3 EXPOSE 80

4

5 FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build

6 WORKDIR /src

7 COPY src/Services/Catalog/Catalog.API/Catalog.API.csproj …

8 COPY src/BuildingBlocks/HealthChecks/src/Microsoft.AspNetCore.HealthChecks …

9 COPY src/BuildingBlocks/HealthChecks/src/Microsoft.Extensions.HealthChecks …

10 COPY src/BuildingBlocks/EventBus/IntegrationEventLogEF/ …

11 COPY src/BuildingBlocks/EventBus/EventBus/EventBus.csproj …

12 COPY src/BuildingBlocks/EventBus/EventBusRabbitMQ/EventBusRabbitMQ.csproj …

13 COPY src/BuildingBlocks/EventBus/EventBusServiceBus/EventBusServiceBus.csproj …

14 COPY src/BuildingBlocks/WebHostCustomization/WebHost.Customization …

15 COPY src/BuildingBlocks/HealthChecks/src/Microsoft.Extensions …

16 COPY src/BuildingBlocks/HealthChecks/src/Microsoft.Extensions …

17 RUN dotnet restore src/Services/Catalog/Catalog.API/Catalog.API.csproj

18 COPY . .

19 WORKDIR /src/src/Services/Catalog/Catalog.API

20 RUN dotnet build Catalog.API.csproj -c Release -o /app

21

22 FROM build AS publish

23 RUN dotnet publish Catalog.API.csproj -c Release -o /app

24

25 FROM base AS final

26 WORKDIR /app

27 COPY --from=publish /app .

28 ENTRYPOINT ["dotnet", "Catalog.API.dll"]

And these are the details, line by line:

Line #1: Begin a stage with a "small" runtime-only base image, call it base for reference.

Line #2: Create the /app directory in the image.

Line #3: Expose port 80.

Line #5: Begin a new stage with the "large" image for building/publishing. Call it build for reference.

Line #6: Create directory /src in the image.

Line #7: Up to line 16, copy referenced .csproj project files to be able to restore packages later.

Line #17: Restore packages for the Catalog.API project and the referenced projects.

Line #18: Copy all directory tree for the solution (except the files/directories included in the .dockerignore file) to the /src directory in the image.

Line #19: Change the current folder to the Catalog.API project.

Line #20: Build the project (and other project dependencies) and output to the /app directory in the image.

Line #22: Begin a new stage continuing from the build. Call it publish for reference.

Line #23: Publish the project (and dependencies) and output to the /app directory in the image.

Line #25: Begin a new stage continuing from base and call it final.

Line #26: Change the current directory to /app.

Line #27: Copy the /app directory from stage publish to the current directory.

Line #28: Define the command to run when the container is started.

Now let's explore some optimizations to improve the whole process performance that, in the case of eShopOnContainers, means about 22 minutes or more to build the complete solution in Linux containers.

You'll take advantage of Docker's layer cache feature, which is quite simple: if the base image and the commands are the same as some previously executed, it can just use the resulting layer without the need to execute the commands, thus saving some time.

So, let's focus on the build stage, lines 5-6 are mostly the same, but lines 7-17 are different for every service from eShopOnContainers, so they have to execute every single time, however if you changed lines 7-16 to:

COPY . .

Then it would be just the same for every service, it would copy the whole solution and would create a larger layer but:

The copy process would only be executed the first time (and when rebuilding if a file is changed) and would use the cache for all other services and

Since the larger image occurs in an intermediate stage, it doesn't affect the final image size.

The next significant optimization involves the restore command executed in line 17, which is also different for every service of eShopOnContainers. If you change that line to just:

RUN dotnet restore

It would restore the packages for the whole solution, but then again, it would do it just once, instead of the 15 times with the current strategy.

However, dotnet restore only runs if there's a single project or solution file in the folder, so achieving this is a bit more complicated and the way to solve it, without getting into too many details, is this:

Add the following lines to .dockerignore:

*.sln, to ignore all solution files in the main folder tree!eShopOnContainers-ServicesAndWebApps.sln, to include only this solution file.

Include the

/ignoreprojectextensions:.dcprojargument todotnet restore, so it also ignores the docker-compose project and only restores the packages for the eShopOnContainers-ServicesAndWebApps solution.

For the final optimization, it just happens that line 20 is redundant, as line 23 also builds the application and comes, in essence, right after line 20, so there goes another time-consuming command.

The resulting file is then:

1 FROM mcr.microsoft.com/dotnet/aspnet:8.0 AS base

2 WORKDIR /app

3 EXPOSE 80

4

5 FROM mcr.microsoft.com/dotnet/sdk:8.0 AS publish

6 WORKDIR /src

7 COPY . .

8 RUN dotnet restore /ignoreprojectextensions:.dcproj

9 WORKDIR /src/src/Services/Catalog/Catalog.API

10 RUN dotnet publish Catalog.API.csproj -c Release -o /app

11

12 FROM base AS final

13 WORKDIR /app

14 COPY --from=publish /app .

15 ENTRYPOINT ["dotnet", "Catalog.API.dll"]

Creating your base image from scratch

You can create your own Docker base image from scratch. This scenario is not recommended for someone who is starting with Docker, but if you want to set the specific bits of your own base image, you can do so.

Additional resources

Multi-arch .NET Core images.

https://github.com/dotnet/announcements/issues/14Create a base image. Official Docker documentation.

https://docs.docker.com/develop/develop-images/baseimages/

Step 3. Create your custom Docker images and embed your application or service in them

For each service in your application, you need to create a related image. If your application is made up of a single service or web application, you just need a single image.

Note that the Docker images are built automatically for you in Visual Studio. The following steps are only needed for the editor/CLI workflow and explained for clarity about what happens underneath.

You, as a developer, need to develop and test locally until you push a completed feature or change to your source control system (for example, to GitHub). This means that you need to create the Docker images and deploy containers to a local Docker host (Windows or Linux VM) and run, test, and debug against those local containers.

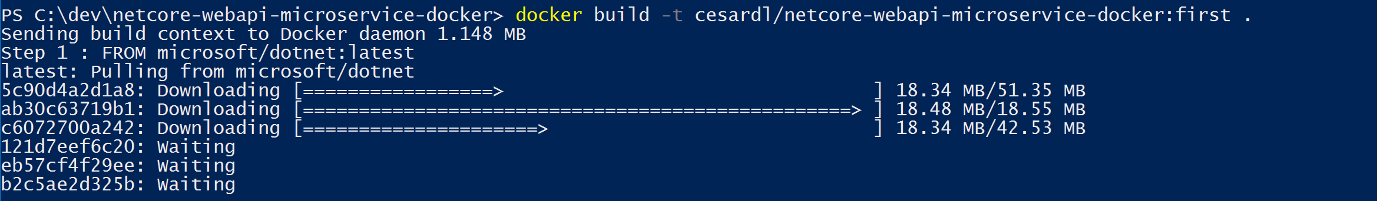

To create a custom image in your local environment by using Docker CLI and your Dockerfile, you can use the docker build command, as in Figure 5-5.

Figure 5-5. Creating a custom Docker image

Optionally, instead of directly running docker build from the project folder, you can first generate a deployable folder with the required .NET libraries and binaries by running dotnet publish, and then use the docker build command.

This will create a Docker image with the name cesardl/netcore-webapi-microservice-docker:first. In this case, :first is a tag that represents a specific version. You can repeat this step for each custom image you need to create for your composed Docker application.

When an application is made of multiple containers (that is, it is a multi-container application), you can also use the docker-compose up --build command to build all the related images with a single command by using the metadata exposed in the related docker-compose.yml files.

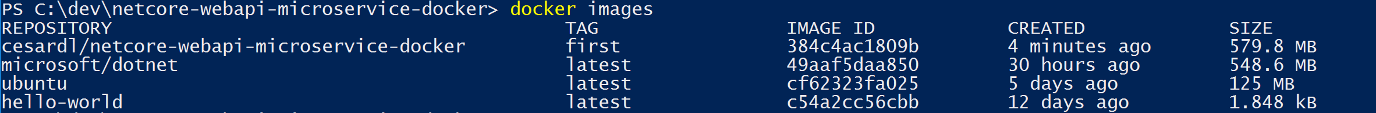

You can find the existing images in your local repository by using the docker images command, as shown in Figure 5-6.

Figure 5-6. Viewing existing images using the docker images command

Creating Docker images with Visual Studio

When you use Visual Studio to create a project with Docker support, you don't explicitly create an image. Instead, the image is created for you when you press F5 (or Ctrl+F5) to run the dockerized application or service. This step is automatic in Visual Studio and you won't see it happen, but it's important that you know what's going on underneath.

Step 4. Define your services in docker-compose.yml when building a multi-container Docker application

The docker-compose.yml file lets you define a set of related services to be deployed as a composed application with deployment commands. It also configures its dependency relations and runtime configuration.

To use a docker-compose.yml file, you need to create the file in your main or root solution folder, with content similar to that in the following example:

version: '3.4'

services:

webmvc:

image: eshop/web

environment:

- CatalogUrl=http://catalog-api

- OrderingUrl=http://ordering-api

ports:

- "80:80"

depends_on:

- catalog-api

- ordering-api

catalog-api:

image: eshop/catalog-api

environment:

- ConnectionString=Server=sqldata;Port=1433;Database=CatalogDB;…

ports:

- "81:80"

depends_on:

- sqldata

ordering-api:

image: eshop/ordering-api

environment:

- ConnectionString=Server=sqldata;Database=OrderingDb;…

ports:

- "82:80"

extra_hosts:

- "CESARDLBOOKVHD:10.0.75.1"

depends_on:

- sqldata

sqldata:

image: mcr.microsoft.com/mssql/server:latest

environment:

- SA_PASSWORD=[PLACEHOLDER]

- ACCEPT_EULA=Y

ports:

- "5433:1433"

Important

Microsoft recommends that you use the most secure authentication flow available. If you're connecting to Azure SQL, Managed Identities for Azure resources is the recommended authentication method.

This docker-compose.yml file is a simplified and merged version. It contains static configuration data for each container (like the name of the custom image), which is always required, and configuration information that might depend on the deployment environment, like the connection string. In later sections, you will learn how to split the docker-compose.yml configuration into multiple docker-compose files and override values depending on the environment and execution type (debug or release).

The docker-compose.yml file example defines four services: the webmvc service (a web application), two microservices (ordering-api and basket-api), and one data source container, sqldata, based on SQL Server for Linux running as a container. Each service will be deployed as a container, so a Docker image is required for each.

The docker-compose.yml file specifies not only what containers are being used, but how they are individually configured. For instance, the webmvc container definition in the .yml file:

Uses a pre-built

eshop/web:latestimage. However, you could also configure the image to be built as part of the docker-compose execution with an additional configuration based on a build: section in the docker-compose file.Initializes two environment variables (CatalogUrl and OrderingUrl).

Forwards the exposed port 80 on the container to the external port 80 on the host machine.

Links the web app to the catalog and ordering service with the depends_on setting. This causes the service to wait until those services are started.

We will revisit the docker-compose.yml file in a later section when we cover how to implement microservices and multi-container apps.

Working with docker-compose.yml in Visual Studio 2022

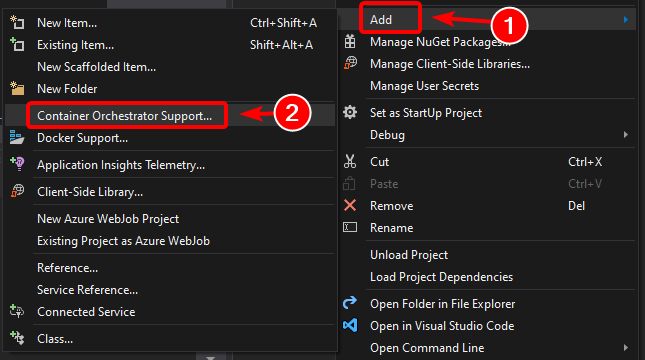

Besides adding a Dockerfile to a project, as we mentioned before, Visual Studio 2017 (from version 15.8 on) can add orchestrator support for Docker Compose to a solution.

When you add container orchestrator support, as shown in Figure 5-7, for the first time, Visual Studio creates the Dockerfile for the project and creates a new (service section) project in your solution with several global docker-compose*.yml files, and then adds the project to those files. You can then open the docker-compose.yml files and update them with additional features.

Repeat this operation for every project you want to include in the docker-compose.yml file.

At the time of this writing, Visual Studio supports Docker Compose orchestrators.

Figure 5-7. Adding Docker support in Visual Studio 2022 by right-clicking an ASP.NET Core project

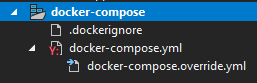

After you add orchestrator support to your solution in Visual Studio, you will also see a new node (in the docker-compose.dcproj project file) in Solution Explorer that contains the added docker-compose.yml files, as shown in Figure 5-8.

Figure 5-8. The docker-compose tree node added in Visual Studio 2022 Solution Explorer

You could deploy a multi-container application with a single docker-compose.yml file by using the docker-compose up command. However, Visual Studio adds a group of them so you can override values depending on the environment (development or production) and execution type (release or debug). This capability will be explained in later sections.

Step 5. Build and run your Docker application

If your application only has a single container, you can run it by deploying it to your Docker host (VM or physical server). However, if your application contains multiple services, you can deploy it as a composed application, either using a single CLI command (docker-compose up), or with Visual Studio, which will use that command under the covers. Let's look at the different options.

Option A: Running a single-container application

Using Docker CLI

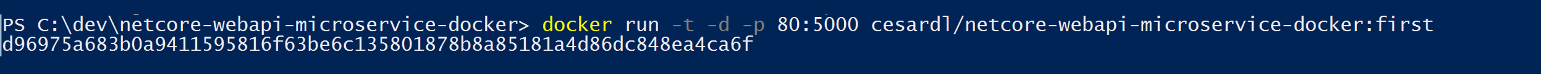

You can run a Docker container using the docker run command, as shown in Figure 5-9:

docker run -t -d -p 80:5000 cesardl/netcore-webapi-microservice-docker:first

The above command will create a new container instance from the specified image, every time it's run. You can use the --name parameter to give a name to the container and then use docker start {name} (or use the container ID or automatic name) to run an existing container instance.

Figure 5-9. Running a Docker container using the docker run command

In this case, the command binds the internal port 5000 of the container to port 80 of the host machine. This means that the host is listening on port 80 and forwarding to port 5000 on the container.

The hash shown is the container ID and it's also assigned a random readable name if the --name option is not used.

Using Visual Studio

If you haven't added container orchestrator support, you can also run a single container app in Visual Studio by pressing Ctrl+F5 and you can also use F5 to debug the application within the container. The container runs locally using docker run.

Option B: Running a multi-container application

In most enterprise scenarios, a Docker application will be composed of multiple services, which means you need to run a multi-container application, as shown in Figure 5-10.

Figure 5-10. VM with Docker containers deployed

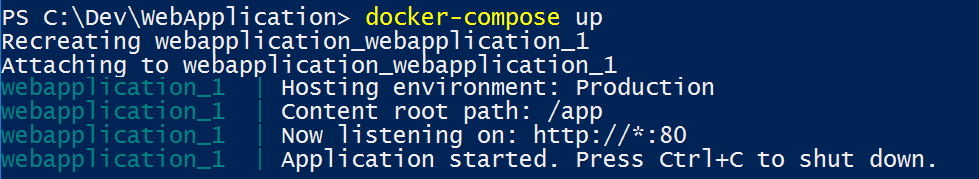

Using Docker CLI

To run a multi-container application with the Docker CLI, you use the docker-compose up command. This command uses the docker-compose.yml file that you have at the solution level to deploy a multi-container application. Figure 5-11 shows the results when running the command from your main solution directory, which contains the docker-compose.yml file.

Figure 5-11. Example results when running the docker-compose up command

After the docker-compose up command runs, the application and its related containers are deployed into your Docker host, as depicted in Figure 5-10.

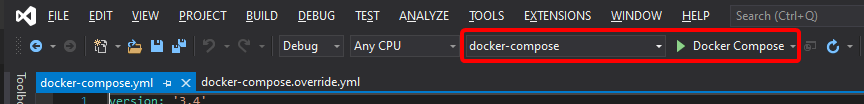

Using Visual Studio

Running a multi-container application using Visual Studio 2019 can't get any simpler. You just press Ctrl+F5 to run or F5 to debug, as usual, setting up the docker-compose project as the startup project. Visual Studio handles all needed setup, so you can create breakpoints as usual and debug what finally become independent processes running in "remote servers", with the debugger already attached, just like that.

As mentioned before, each time you add Docker solution support to a project within a solution, that project is configured in the global (solution-level) docker-compose.yml file, which lets you run or debug the whole solution at once. Visual Studio will start one container for each project that has Docker solution support enabled, and perform all the internal steps for you (dotnet publish, docker build, etc.).

If you want to take a peek at all the drudgery, take a look at the file:

{root solution folder}\obj\Docker\docker-compose.vs.debug.g.yml

The important point here is that, as shown in Figure 5-12, in Visual Studio 2019 there is an additional Docker command for the F5 key action. This option lets you run or debug a multi-container application by running all the containers that are defined in the docker-compose.yml files at the solution level. The ability to debug multiple-container solutions means that you can set several breakpoints, each breakpoint in a different project (container), and while debugging from Visual Studio you will stop at breakpoints defined in different projects and running on different containers.

Figure 5-12. Running multi-container apps in Visual Studio 2022

Additional resources

- Deploy an ASP.NET container to a remote Docker host

https://learn.microsoft.com/visualstudio/containers/hosting-web-apps-in-docker

A note about testing and deploying with orchestrators

The docker-compose up and docker run commands (or running and debugging the containers in Visual Studio) are adequate for testing containers in your development environment. But you should not use this approach for production deployments, where you should target orchestrators like Kubernetes or Service Fabric. If you're using Kubernetes, you have to use pods to organize containers and services to network them. You also use deployments to organize pod creation and modification.

Step 6. Test your Docker application using your local Docker host

This step will vary depending on what your application is doing. In a simple .NET Web application that is deployed as a single container or service, you can access the service by opening a browser on the Docker host and navigating to that site, as shown in Figure 5-13. (If the configuration in the Dockerfile maps the container to a port on the host that is anything other than 80, include the host port in the URL.)

Figure 5-13. Example of testing your Docker application locally using localhost

If localhost is not pointing to the Docker host IP (by default, when using Docker CE, it should), to navigate to your service, use the IP address of your machine's network card.

This URL in the browser uses port 80 for the particular container example being discussed. However, internally the requests are being redirected to port 5000, because that was how it was deployed with the docker run command, as explained in a previous step.

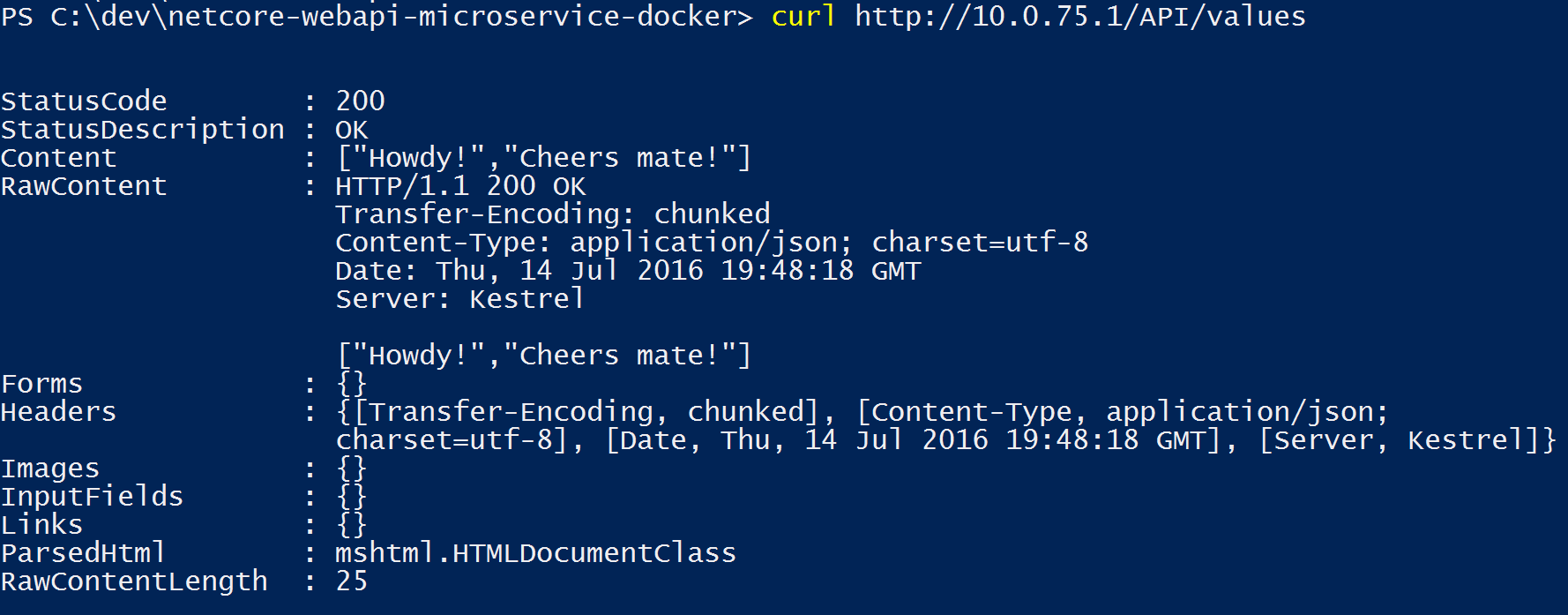

You can also test the application using curl from the terminal, as shown in Figure 5-14. In a Docker installation on Windows, the default Docker Host IP is always 10.0.75.1 in addition to your machine's actual IP address.

Figure 5-14. Example of testing your Docker application locally using curl

Testing and debugging containers with Visual Studio 2022

When running and debugging the containers with Visual Studio 2022, you can debug the .NET application in much the same way as you would when running without containers.

Testing and debugging without Visual Studio

If you're developing using the editor/CLI approach, debugging containers is more difficult and you'll probably want to debug by generating traces.

Additional resources

Quickstart: Docker in Visual Studio.

https://learn.microsoft.com/visualstudio/containers/container-toolsDebugging apps in a local Docker container

https://learn.microsoft.com/visualstudio/containers/edit-and-refresh

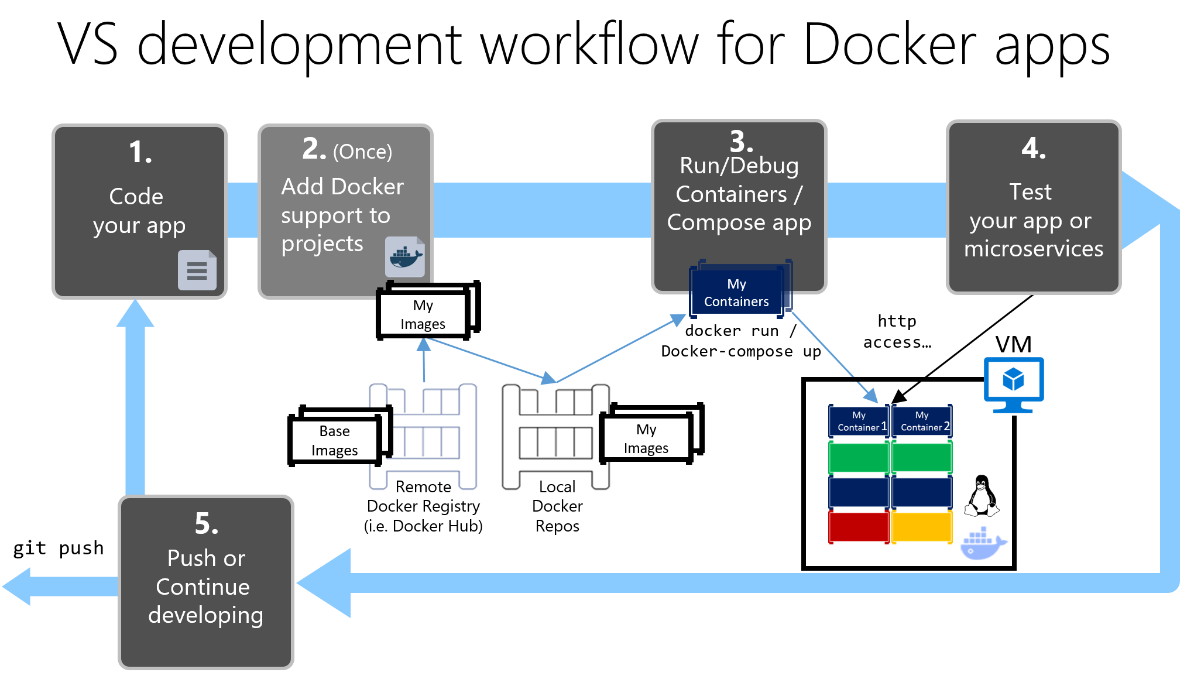

Simplified workflow when developing containers with Visual Studio

Effectively, the workflow when using Visual Studio is a lot simpler than if you use the editor/CLI approach. Most of the steps required by Docker related to the Dockerfile and docker-compose.yml files are hidden or simplified by Visual Studio, as shown in Figure 5-15.

The development process for Docker apps: 1 - Code your App, 2 - Write Dockerfile/s, 3 - Create images defined at Dockerfile/s, 4 - (optional) Compose services in the docker-compose.yml file, 5 - Run container or docker-compose app, 6 - Test your app or microservices, 7 - Push to repo and repeat.

Figure 5-15. Simplified workflow when developing with Visual Studio

In addition, you need to perform step 2 (adding Docker support to your projects) just once. Therefore, the workflow is similar to your usual development tasks when using .NET for any other development. You need to know what is going on under the covers (the image build process, what base images you're using, deployment of containers, etc.) and sometimes you will also need to edit the Dockerfile or docker-compose.yml file to customize behaviors. But most of the work is greatly simplified by using Visual Studio, making you a lot more productive.

Using PowerShell commands in a Dockerfile to set up Windows Containers

Windows Containers allow you to convert your existing Windows applications into Docker images and deploy them with the same tools as the rest of the Docker ecosystem. To use Windows Containers, you run PowerShell commands in the Dockerfile, as shown in the following example:

FROM mcr.microsoft.com/windows/servercore

LABEL Description="IIS" Vendor="Microsoft" Version="10"

RUN powershell -Command Add-WindowsFeature Web-Server

CMD [ "ping", "localhost", "-t" ]

In this case, we are using a Windows Server Core base image (the FROM setting) and installing IIS with a PowerShell command (the RUN setting). In a similar way, you could also use PowerShell commands to set up additional components like ASP.NET 4.x, .NET Framework 4.6, or any other Windows software. For example, the following command in a Dockerfile sets up ASP.NET 4.5:

RUN powershell add-windowsfeature web-asp-net45

Additional resources

- aspnet-docker/Dockerfile. Example PowerShell commands to run from dockerfiles to include Windows features.

https://github.com/Microsoft/aspnet-docker/blob/master/4.7.1-windowsservercore-ltsc2016/runtime/Dockerfile