Step 6. Make & evaluate quality fixes on the AI agent

This article walks you through making, evaluating, and iterating on quality fixes to your generative AI agent based on your root cause analysis.

For more information about evaluating an AI agent, see What is Mosaic AI Agent Evaluation?

Requirements

- Based on your root cause analysis, you have identified one or more potential fixes to either retrieval or generation to implement and evaluate.

- Your POC application (or another baseline chain) is logged to an MLflow run, and its Agent Evaluation results are stored in the same run.

See the GitHub repository for the sample code in this section.

Expected outcome in Agent Evaluation

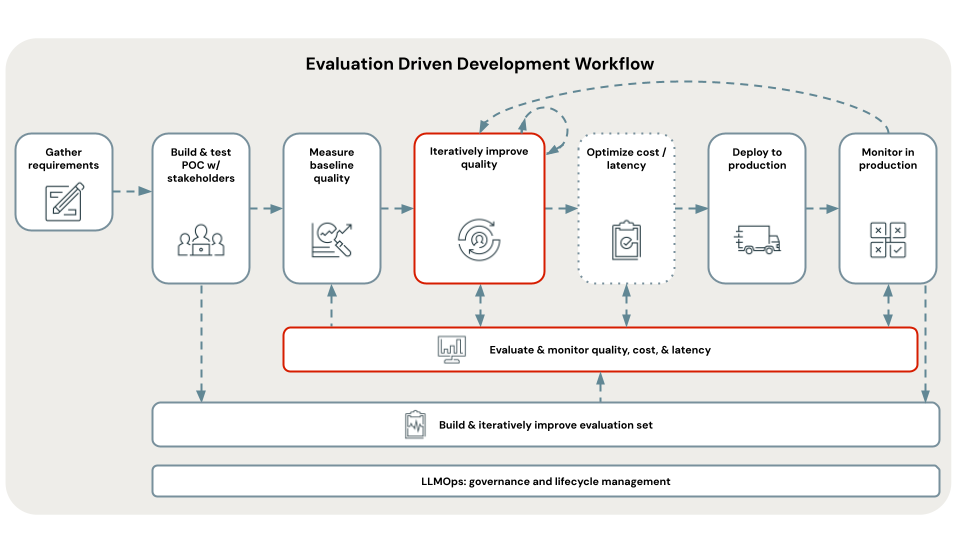

The preceding image shows the Agent Evaluation output in MLflow.

How to fix, evaluate, and iterate on the AI agent

Whatever type of fix you make, use the B_quality_iteration/02_evaluate_fixes notebook to evaluate the resulting chain against your baseline configuration (your POC) and pick a “winner”. The notebook helps you pick the winning experiment and deploy it to the review app or to a production-ready, scalable REST API.
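The notebook applies its own selection logic. As a rough illustration of what picking a winner against the baseline can mean, the following minimal sketch ranks hypothetical aggregate results: a fix must beat the baseline on quality, and ties are broken by lower cost and then lower latency. The `EvalResult` class, its field names, the `pick_winner` function, and all numbers are assumptions made for this example, not Agent Evaluation output or APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Aggregate metrics for one evaluated chain (all field names are hypothetical)."""

    name: str
    quality: float           # e.g. fraction of responses judged correct, 0.0 to 1.0
    cost_per_request: float  # e.g. average tokens or dollars per request
    latency_s: float         # e.g. average end-to-end latency in seconds


def pick_winner(baseline: EvalResult, candidates: list[EvalResult]) -> EvalResult:
    """Return the best candidate, or the baseline if no candidate beats its quality.

    Candidates are ranked by highest quality, then lowest cost, then lowest latency.
    """
    improved = [c for c in candidates if c.quality > baseline.quality]
    if not improved:
        return baseline
    return min(improved, key=lambda r: (-r.quality, r.cost_per_request, r.latency_s))


# Illustrative numbers only; these are not real Agent Evaluation results.
baseline = EvalResult("poc", quality=0.72, cost_per_request=0.004, latency_s=2.1)
fixes = [
    EvalResult("smaller_chunks", quality=0.80, cost_per_request=0.005, latency_s=2.3),
    EvalResult("reranker", quality=0.80, cost_per_request=0.004, latency_s=2.8),
    EvalResult("shorter_prompt", quality=0.70, cost_per_request=0.003, latency_s=1.9),
]
winner = pick_winner(baseline, fixes)
print(winner.name)  # reranker
```

Here "reranker" wins because it ties "smaller_chunks" on quality but costs less per request, while "shorter_prompt" is excluded for scoring below the baseline. A real comparison might instead weight the three metrics or require a minimum quality gain; that trade-off depends on your use case.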

- In Azure Databricks, open the B_quality_iteration/02_evaluate_fixes notebook.

- Based on the type of fix you are implementing:

  - For data pipeline fixes:

    - Follow Step 6 (pipelines). Implement data pipeline fixes to create the new data pipeline and get the name of the resulting MLflow run.

    - Add the run name to the DATA_PIPELINE_FIXES_RUN_NAMES variable.

  - For chain configuration fixes:

    - Follow the instructions in the Chain configuration section of the 02_evaluate_fixes notebook to add chain configuration fixes to the CHAIN_CONFIG_FIXES variable.

  - For chain code fixes:

    - Create a modified chain code file and save it to the B_quality_iteration/chain_code_fixes folder. Alternatively, select one of the provided chain code fixes from that folder.

    - Follow the instructions in the Chain code section of the 02_evaluate_fixes notebook to add the chain code file and any additional required chain configuration to the CHAIN_CODE_FIXES variable.

- When you run the notebook from the Run evaluation cell, it does the following:

  - Evaluates each fix.

  - Determines the fix with the best quality/cost/latency metrics.

  - Deploys the best one to the Review App and a production-ready REST API to get stakeholder feedback.
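The 02_evaluate_fixes notebook defines the actual format of DATA_PIPELINE_FIXES_RUN_NAMES, CHAIN_CONFIG_FIXES, and CHAIN_CODE_FIXES, so follow its instructions. Purely as an illustration, the following sketch assumes each variable is a Python list and that each configuration fix is a partial dictionary merged over the baseline chain configuration. The run name, fix names, configuration keys, file name, and the `deep_merge` and `build_experiments` helpers are all hypothetical.

```python
import copy

# Hypothetical values; the real notebook documents the expected format.
DATA_PIPELINE_FIXES_RUN_NAMES = [
    "data_pipeline_fix_smaller_chunks",  # hypothetical MLflow run name from the data pipeline step
]

CHAIN_CONFIG_FIXES = [
    {
        "name": "retrieve_more_chunks",  # hypothetical experiment label
        "config": {"retriever_config": {"parameters": {"k": 10}}},  # hypothetical partial override
    },
]

CHAIN_CODE_FIXES = [
    {
        "name": "query_rewrite",  # hypothetical experiment label
        "chain_code_file": "chain_code_fixes/query_rewrite.py",  # hypothetical file name
        "config": {},  # this fix needs no extra configuration
    },
]


def deep_merge(base: dict, override: dict) -> dict:
    """Return a copy of base with override applied recursively; override values win."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def build_experiments(baseline_config: dict) -> list[dict]:
    """Expand the three fix variables into one experiment spec per fix (hypothetical helper)."""
    experiments = []
    for run_name in DATA_PIPELINE_FIXES_RUN_NAMES:
        # Unchanged chain config; only the recorded data pipeline run differs.
        experiments.append({"name": run_name, "kind": "data_pipeline",
                            "data_pipeline_run_name": run_name,
                            "config": copy.deepcopy(baseline_config)})
    for fix in CHAIN_CONFIG_FIXES:
        experiments.append({"name": fix["name"], "kind": "chain_config",
                            "config": deep_merge(baseline_config, fix["config"])})
    for fix in CHAIN_CODE_FIXES:
        experiments.append({"name": fix["name"], "kind": "chain_code",
                            "chain_code_file": fix["chain_code_file"],
                            "config": deep_merge(baseline_config, fix["config"])})
    return experiments


baseline_config = {
    "retriever_config": {"parameters": {"k": 5, "query_type": "ann"}},  # hypothetical keys
    "llm_config": {"temperature": 0.0},
}
for exp in build_experiments(baseline_config):
    print(exp["kind"], exp["name"])
```

Merging each override over a copy of the baseline keeps every experiment identical to the POC except for the one change under test, which is what makes the comparison against the baseline meaningful.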

Next step

Continue with Step 6 (pipelines). Implement data pipeline fixes.