Authorize unattended access to Azure Databricks resources with a service principal using OAuth

This topic provides steps and details for authorizing access to Azure Databricks resources when you automate Azure Databricks CLI commands or call Azure Databricks REST APIs from code that runs in an unattended process.

Azure Databricks uses OAuth as the preferred protocol for user authorization and authentication when interacting with Azure Databricks resources outside of the UI. Azure Databricks also provides the unified client authentication tool to automate the refresh of the access tokens generated as part of OAuth’s authentication method. This applies to service principals as well as user accounts, but you must configure a service principal with the appropriate permissions and privileges for the Azure Databricks resources it must access as part of its operations.

For more high-level details, see Authorizing access to Azure Databricks resources.

What are my options for authorization and authentication when using an Azure Databricks service principal?

In this topic, authorization refers to the protocol (OAuth) used to negotiate access to specific Azure Databricks resources through delegation. Authentication refers to the mechanism by which credentials are represented, transmitted, and verified—which, in this case, are access tokens.

Azure Databricks uses OAuth 2.0-based authorization to enable access to Azure Databricks account and workspace resources from the command line or code on behalf of a service principal with the permissions to access those resources. Once an Azure Databricks service principal is configured and its credentials are verified when it runs a CLI command or calls a REST API, an OAuth token is given to the participating tool or SDK, which uses it to perform token-based authentication on the service principal’s behalf from that point forward. The OAuth access token has a lifespan of one hour, after which the tool or SDK automatically attempts, in the background, to obtain a new token that is also valid for one hour.

Azure Databricks supports two ways to authorize access for a service principal with OAuth:

- Mostly automatically, using the Databricks unified client authentication support. Use this simplified approach if you are using specific Azure Databricks SDKs and tools (such as the Databricks Terraform provider). Supported tools and SDKs are listed in Databricks unified client authentication. This approach is well-suited to automation and other unattended process scenarios.

- Manually, by directly requesting an OAuth access token with the service principal’s client ID and OAuth secret, and then providing that token in your configuration. Use this approach when the tool or API you are using is not supported by Databricks unified client authentication. In this case, you may need to develop your own mechanism to refresh access tokens for the third-party tool or API you are using. For more details, see: Manually generate and use access tokens for OAuth service principal authentication.
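If you take the manual approach, the refresh mechanism can be as simple as caching the current token and requesting a new one shortly before it expires. The Python sketch below assumes a caller-supplied fetch_token callable (hypothetical) that sends the token request and returns the parsed JSON response containing access_token and expires_in; the 60-second safety margin is an arbitrary choice, not a Databricks requirement.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CachedToken:
    access_token: str
    expires_at: float  # absolute time, measured by the same clock as TokenCache


class TokenCache:
    """Caches an OAuth access token and refreshes it shortly before it expires.

    fetch_token is a hypothetical callable that requests a new token and returns
    the parsed JSON body, e.g. {"access_token": "...", "expires_in": 3600}.
    """

    def __init__(
        self,
        fetch_token: Callable[[], dict],
        clock: Callable[[], float] = time.monotonic,
        margin_seconds: float = 60.0,
    ) -> None:
        self._fetch_token = fetch_token
        self._clock = clock
        self._margin = margin_seconds
        self._token: Optional[CachedToken] = None

    def get(self) -> str:
        now = self._clock()
        # Request a new token if none is cached or the cached one expires within the margin.
        if self._token is None or now >= self._token.expires_at - self._margin:
            body = self._fetch_token()
            self._token = CachedToken(
                access_token=body["access_token"],
                expires_at=now + float(body["expires_in"]),
            )
        return self._token.access_token
```

time.monotonic is the default clock so that wall-clock adjustments do not affect expiry checks. This class is not thread-safe; if several threads share one cache, guard get() with a lock.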

Before you start, you must configure an Azure Databricks service principal and assign it the appropriate permissions to access the resources it must use when your automation code or commands request them.

Prerequisite: Create a service principal

Account admins and workspace admins can create service principals. This step describes creating a service principal in an Azure Databricks workspace. For details on creating one in the Azure Databricks account console, see Manage service principals in your account.

You can also create a Microsoft Entra ID managed service principal and add it to Azure Databricks. For more information, see Databricks and Microsoft Entra ID service principals.

- As a workspace admin, log in to the Azure Databricks workspace.

- Click your username in the top bar of the Azure Databricks workspace and select Settings.

- Click on the Identity and access tab.

- Next to Service principals, click Manage.

- Click Add service principal.

- Click the drop-down arrow in the search box and then click Add new.

- Under Management, choose Databricks managed.

- Enter a name for the service principal.

- Click Add.

The service principal is added to both your workspace and the Azure Databricks account.

Step 1: Assign permissions to your service principal

- Click the name of your service principal to open its details page.

- On the Configurations tab, check the box next to each entitlement that you want your service principal to have for this workspace, and then click Update.

- On the Permissions tab, grant access to any Azure Databricks users, service principals, and groups that you want to manage and use this service principal. See Manage roles on a service principal.

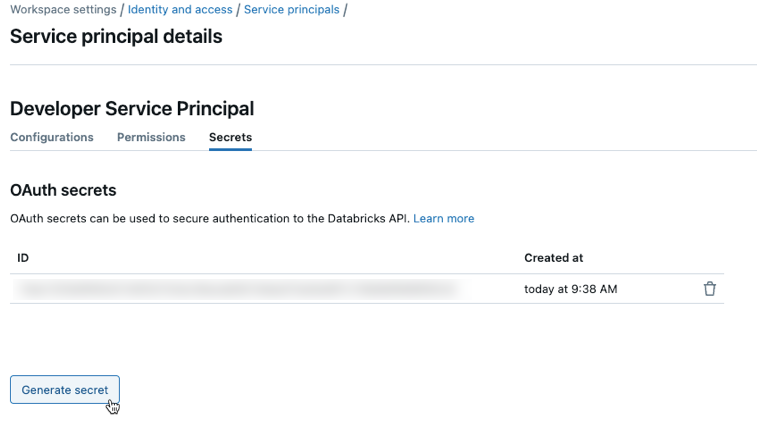

Step 2: Create an OAuth secret for a service principal

Before you can use OAuth to authorize access to your Azure Databricks resources, you must first create an OAuth secret, which can be used to generate OAuth access tokens for authentication. A service principal can have up to five OAuth secrets.

Account admins and workspace admins can create an OAuth secret for a service principal.

On your service principal’s details page, click the Secrets tab.

Under OAuth secrets, click Generate secret.

Copy the displayed Secret and Client ID, and then click Done.

The secret will only be revealed once during creation. The client ID is the same as the service principal’s application ID.

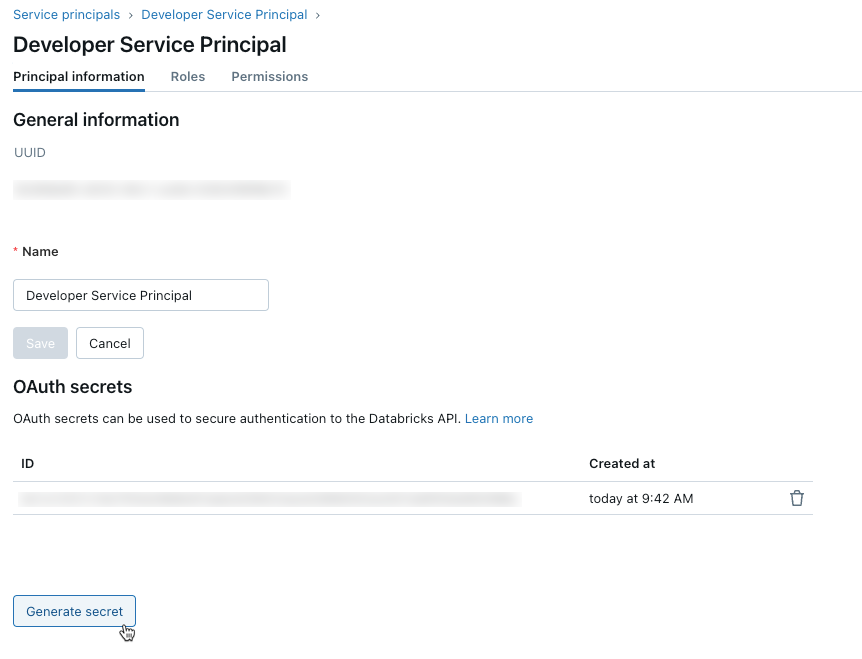

Account admins can also generate an OAuth secret from the service principal details page in the account console.

As an account admin, log in to the account console.

Click User management.

On the Service principals tab, select your service principal.

Under OAuth secrets, click Generate secret.

Copy the displayed Secret and Client ID, and then click Done.

Note

To enable the service principal to use clusters or SQL warehouses, you must give the service principal access to them. See Compute permissions or Manage a SQL warehouse.

Step 3: Use OAuth authorization

To use OAuth authorization with the unified client authentication tool, you must set the following associated environment variables, .databrickscfg fields, Terraform fields, or Config fields:

- The Azure Databricks host, specified as https://accounts.azuredatabricks.net for account operations, or as the target per-workspace URL (for example, https://adb-1234567890123456.7.azuredatabricks.net) for workspace operations.

- The Azure Databricks account ID, for Azure Databricks account operations.

- The service principal client ID.

- The service principal secret.

To perform OAuth service principal authentication, integrate the following within your code, based on the participating tool or SDK:

Environment

To use environment variables for a specific Azure Databricks authentication type with a tool or SDK, see Authorizing access to Azure Databricks resources or the tool’s or SDK’s documentation. See also Environment variables and fields for client unified authentication and the Default methods for client unified authentication.

For account-level operations, set the following environment variables:

- DATABRICKS_HOST, set to the Azure Databricks account console URL, https://accounts.azuredatabricks.net.

- DATABRICKS_ACCOUNT_ID

- DATABRICKS_CLIENT_ID

- DATABRICKS_CLIENT_SECRET

For workspace-level operations, set the following environment variables:

- DATABRICKS_HOST, set to the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net.

- DATABRICKS_CLIENT_ID

- DATABRICKS_CLIENT_SECRET
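Before launching an unattended job, it can help to fail fast when a required variable is missing. The following Python sketch assumes, as described above, that a host of https://accounts.azuredatabricks.net means account-level operations (which also need DATABRICKS_ACCOUNT_ID) and that any other host is a per-workspace URL; missing_oauth_env_vars is a hypothetical helper name.

```python
import os
from typing import List, Mapping, Optional

ACCOUNT_CONSOLE_HOST = "https://accounts.azuredatabricks.net"


def missing_oauth_env_vars(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required OAuth M2M environment variables that are unset or empty."""
    env = os.environ if env is None else env
    required = ["DATABRICKS_HOST", "DATABRICKS_CLIENT_ID", "DATABRICKS_CLIENT_SECRET"]
    # Account-level operations additionally need the account ID.
    if env.get("DATABRICKS_HOST", "").rstrip("/") == ACCOUNT_CONSOLE_HOST:
        required.append("DATABRICKS_ACCOUNT_ID")
    return [name for name in required if not env.get(name)]
```

For example, a job could start with if missing := missing_oauth_env_vars(): raise SystemExit(f"Missing: {missing}") so that a misconfigured environment fails before any API call.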

Profile

Create or identify an Azure Databricks configuration profile with the following fields in your .databrickscfg file. If you create the profile, replace the placeholders with the appropriate values. To use the profile with a tool or SDK, see Authorizing access to Azure Databricks resources or the tool’s or SDK’s documentation. See also Environment variables and fields for client unified authentication and the Default methods for client unified authentication.

For account-level operations, set the following values in your .databrickscfg file. In this case, the Azure Databricks account console URL is https://accounts.azuredatabricks.net:

```ini
[<some-unique-configuration-profile-name>]
host          = <account-console-url>
account_id    = <account-id>
client_id     = <service-principal-client-id>
client_secret = <service-principal-secret>
```

For workspace-level operations, set the following values in your .databrickscfg file. In this case, the host is the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net:

```ini
[<some-unique-configuration-profile-name>]
host          = <workspace-url>
client_id     = <service-principal-client-id>
client_secret = <service-principal-secret>
```
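Because .databrickscfg uses INI-style [profile] sections, Python’s standard configparser module can read it for a quick pre-flight check. This sketch is an illustration under that assumption, not how Databricks tools parse the file; load_profile is a hypothetical name, and it treats a host of https://accounts.azuredatabricks.net as an account-level profile that also needs account_id.

```python
import configparser
from typing import Dict, List, Tuple

ACCOUNT_CONSOLE_HOST = "https://accounts.azuredatabricks.net"


def load_profile(text: str, profile: str) -> Tuple[Dict[str, str], List[str]]:
    """Parse .databrickscfg content; return (fields, missing OAuth fields) for one profile."""
    # interpolation=None keeps '%' characters in secrets literal, and a custom
    # default_section makes [DEFAULT] behave like an ordinary profile.
    parser = configparser.ConfigParser(interpolation=None, default_section="__unused__")
    parser.read_string(text)
    if not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    fields = dict(parser[profile])
    required = ["host", "client_id", "client_secret"]
    if fields.get("host", "").rstrip("/") == ACCOUNT_CONSOLE_HOST:
        required.append("account_id")
    return fields, [key for key in required if not fields.get(key)]
```

For example, pass the contents of the .databrickscfg file in your home directory and the profile name; a non-empty second element lists the fields still to fill in.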

CLI

For the Databricks CLI, do one of the following:

- Set the environment variables as specified in this article’s “Environment” section.

- Set the values in your .databrickscfg file as specified in this article’s “Profile” section.

Environment variables always take precedence over values in your .databrickscfg file.
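The CLI precedence rule can be pictured as a merge in which environment variables override profile values. This Python sketch only illustrates that rule; it assumes each environment variable maps to the profile field of the same name (for example, DATABRICKS_CLIENT_ID to client_id) and is not the CLI’s actual resolution logic. resolve_settings is a hypothetical name.

```python
from typing import Dict, Mapping

# Assumed correspondence between environment variables and .databrickscfg fields.
ENV_TO_FIELD = {
    "DATABRICKS_HOST": "host",
    "DATABRICKS_ACCOUNT_ID": "account_id",
    "DATABRICKS_CLIENT_ID": "client_id",
    "DATABRICKS_CLIENT_SECRET": "client_secret",
}


def resolve_settings(profile: Mapping[str, str], env: Mapping[str, str]) -> Dict[str, str]:
    """Start from the profile fields, then let any non-empty environment variable win."""
    settings = dict(profile)
    for env_name, field in ENV_TO_FIELD.items():
        value = env.get(env_name)
        if value:
            settings[field] = value
    return settings
```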

See also OAuth machine-to-machine (M2M) authentication.

Connect

Note

OAuth service principal authentication is supported on the following Databricks Connect versions:

- For Python, Databricks Connect for Databricks Runtime 14.0 and above.

- For Scala, Databricks Connect for Databricks Runtime 13.3 LTS and above. The Databricks SDK for Java that is included with Databricks Connect for Databricks Runtime 13.3 LTS and above must be upgraded to Databricks SDK for Java 0.17.0 or above.

For Databricks Connect, you can do one of the following:

- Set the values in your .databrickscfg file for Azure Databricks workspace-level operations as specified in this article’s “Profile” section. Also set the cluster_id field in your profile to the ID of your cluster.

- Set the environment variables for Azure Databricks workspace-level operations as specified in this article’s “Environment” section. Also set the DATABRICKS_CLUSTER_ID environment variable to the ID of your cluster.

Values in your .databrickscfg file always take precedence over environment variables.

To initialize the Databricks Connect client with these environment variables or values in your .databrickscfg file, see Compute configuration for Databricks Connect.

VS Code

For the Databricks extension for Visual Studio Code, do the following:

- Set the values in your .databrickscfg file for Azure Databricks workspace-level operations as specified in this article’s “Profile” section.

- In the Configuration pane of the Databricks extension for Visual Studio Code, click Configure Databricks.

- In the Command Palette, for Databricks Host, enter your per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net, and then press Enter.

- In the Command Palette, select your target profile’s name in the list for your URL.

For more details, see Set up authorization for the Databricks extension for Visual Studio Code.

Terraform

For account-level operations, for default authentication:

```hcl
provider "databricks" {
  alias = "accounts"
}
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as HashiCorp Vault (see also Vault Provider). In this case, the Azure Databricks account console URL is https://accounts.azuredatabricks.net:

```hcl
provider "databricks" {
  alias         = "accounts"
  host          = <retrieve-account-console-url>
  account_id    = <retrieve-account-id>
  client_id     = <retrieve-client-id>
  client_secret = <retrieve-client-secret>
}
```

For workspace-level operations, for default authentication:

```hcl
provider "databricks" {
  alias = "workspace"
}
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as HashiCorp Vault (see also Vault Provider). In this case, the host is the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net:

```hcl
provider "databricks" {
  alias         = "workspace"
  host          = <retrieve-workspace-url>
  client_id     = <retrieve-client-id>
  client_secret = <retrieve-client-secret>
}
```

For more information about authenticating with the Databricks Terraform provider, see Authentication.

Python

For account-level operations, use the following for default authentication:

```python
from databricks.sdk import AccountClient

a = AccountClient()
# ...
```

For direct configuration, use the following, replacing the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as Azure Key Vault. In this case, the Azure Databricks account console URL is https://accounts.azuredatabricks.net:

```python
from databricks.sdk import AccountClient

a = AccountClient(
    host=retrieve_account_console_url(),
    account_id=retrieve_account_id(),
    client_id=retrieve_client_id(),
    client_secret=retrieve_client_secret(),
)
# ...
```

For workspace-level operations, use the following for default authentication:

```python
from databricks.sdk import WorkspaceClient

w = WorkspaceClient()
# ...
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as Azure Key Vault. In this case, the host is the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net:

```python
from databricks.sdk import WorkspaceClient

w = WorkspaceClient(
    host=retrieve_workspace_url(),
    client_id=retrieve_client_id(),
    client_secret=retrieve_client_secret(),
)
# ...
```

For more information about authenticating with Databricks tools and SDKs that use Python and implement Databricks client unified authentication, see:

- Set up the Databricks Connect client for Python

- Authenticate the Databricks SDK for Python with your Azure Databricks account or workspace

Note

The Databricks extension for Visual Studio Code uses Python but has not yet implemented OAuth service principal authentication.

Java

For workspace-level operations using default authentication:

```java
import com.databricks.sdk.WorkspaceClient;

// ...

WorkspaceClient w = new WorkspaceClient();

// ...
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as Azure Key Vault. In this case, the host is the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net:

```java
import com.databricks.sdk.WorkspaceClient;
import com.databricks.sdk.core.DatabricksConfig;

// ...

DatabricksConfig cfg = new DatabricksConfig()
    .setHost(retrieveWorkspaceUrl())
    .setClientId(retrieveClientId())
    .setClientSecret(retrieveClientSecret());

WorkspaceClient w = new WorkspaceClient(cfg);

// ...
```

For more information about authenticating with Databricks tools and SDKs that use Java and implement Databricks client unified authentication, see:

- Set up the Databricks Connect client for Scala (the Databricks Connect client for Scala uses the included Databricks SDK for Java for authentication)

- Authenticate the Databricks SDK for Java with your Azure Databricks account or workspace

Go

For account-level operations using default authentication:

```go
import (
	"github.com/databricks/databricks-sdk-go"
)

// ...

// Account-level operations use an account client.
a := databricks.Must(databricks.NewAccountClient())

// ...
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as Azure Key Vault. In this case, the Azure Databricks account console URL is https://accounts.azuredatabricks.net:

```go
import (
	"github.com/databricks/databricks-sdk-go"
)

// ...

// Account-level operations use an account client.
a := databricks.Must(databricks.NewAccountClient(&databricks.Config{
	Host:         retrieveAccountConsoleUrl(),
	AccountID:    retrieveAccountId(),
	ClientID:     retrieveClientId(),
	ClientSecret: retrieveClientSecret(),
}))

// ...
```

For workspace-level operations using default authentication:

```go
import (
	"github.com/databricks/databricks-sdk-go"
)

// ...

// Workspace-level operations use a workspace client.
w := databricks.Must(databricks.NewWorkspaceClient())

// ...
```

For direct configuration, replace the retrieve placeholders with your own implementation to retrieve the values from the console or another configuration store, such as Azure Key Vault. In this case, the host is the Azure Databricks per-workspace URL, for example https://adb-1234567890123456.7.azuredatabricks.net:

```go
import (
	"github.com/databricks/databricks-sdk-go"
)

// ...

// Workspace-level operations use a workspace client.
w := databricks.Must(databricks.NewWorkspaceClient(&databricks.Config{
	Host:         retrieveWorkspaceUrl(),
	ClientID:     retrieveClientId(),
	ClientSecret: retrieveClientSecret(),
}))

// ...
```

For more information about authenticating with Databricks tools and SDKs that use Go and that implement Databricks client unified authentication, see Authenticate the Databricks SDK for Go with your Azure Databricks account or workspace.

Manually generate and use access tokens for OAuth service principal authentication

Azure Databricks tools and SDKs that implement the Databricks client unified authentication standard will automatically generate, refresh, and use Azure Databricks OAuth access tokens on your behalf as needed for OAuth service principal authentication.

Databricks recommends using client unified authentication. However, if you must manually generate, refresh, or use Azure Databricks OAuth access tokens, follow the instructions in this section.

Use the service principal’s client ID and OAuth secret to request an OAuth access token to authenticate to both account-level REST APIs and workspace-level REST APIs. The access token will expire in one hour. You must request a new OAuth access token after the expiration. The scope of the OAuth access token depends on the level that you create the token from. You can create a token at either the account level or the workspace level, as follows:

- To call account-level and workspace-level REST APIs within accounts and workspaces that the service principal has access to, manually generate an access token at the account level.

- To call REST APIs within only one of the workspaces that the service principal has access to, manually generate an access token at the workspace level for only that workspace.
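The two levels differ in which token endpoint you call; both URLs appear in the subsections that follow. As a minimal sketch, assuming you know either the account ID (account level) or the workspace URL (workspace level), a hypothetical helper token_endpoint can derive the URL:

```python
from typing import Optional

ACCOUNT_CONSOLE_URL = "https://accounts.azuredatabricks.net"


def token_endpoint(workspace_url: Optional[str] = None, account_id: Optional[str] = None) -> str:
    """Return the OAuth token endpoint for an account-level or workspace-level token."""
    if (workspace_url is None) == (account_id is None):
        raise ValueError("pass exactly one of workspace_url or account_id")
    if account_id is not None:
        # Account level: the token works for account APIs and for workspaces the service principal can access.
        return f"{ACCOUNT_CONSOLE_URL}/oidc/accounts/{account_id}/v1/token"
    # Workspace level: the token works only within this one workspace.
    return f"{workspace_url.rstrip('/')}/oidc/v1/token"
```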

Manually generate an account-level access token

An OAuth access token created from the account level can be used against Databricks REST APIs in the account, and in any workspaces the service principal has access to.

Click the down arrow next to your username in the upper right corner.

Copy your Account ID.

Construct the token endpoint URL by replacing <my-account-id> in the following URL with the account ID that you copied:

https://accounts.azuredatabricks.net/oidc/accounts/<my-account-id>/v1/token

Use a client such as curl to request an OAuth access token with the token endpoint URL, the service principal’s client ID (also known as an application ID), and the service principal’s OAuth secret you created. The all-apis scope requests an OAuth access token that can be used to access all Databricks REST APIs that the service principal has been granted access to.

- Replace <token-endpoint-URL> with the preceding token endpoint URL.

- Replace <client-id> with the service principal’s client ID, which is also known as an application ID.

- Replace <client-secret> with the service principal’s OAuth secret that you created.

```bash
export CLIENT_ID=<client-id>
export CLIENT_SECRET=<client-secret>

curl --request POST \
  --url <token-endpoint-URL> \
  --user "$CLIENT_ID:$CLIENT_SECRET" \
  --data 'grant_type=client_credentials&scope=all-apis'
```

This generates a response similar to:

```json
{
  "access_token": "eyJraWQiOiJkYTA4ZTVjZ…",
  "token_type": "Bearer",
  "expires_in": 3600
}
```

Copy the access_token from the response.
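The same client-credentials request can also be sent from Python with only the standard library. This sketch mirrors the curl command: HTTP Basic authentication with the client ID and secret, and a form-encoded body with grant_type=client_credentials and scope=all-apis. The function names build_token_request and request_access_token are hypothetical, and error handling and retries are left out.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_token_request(token_endpoint: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth client-credentials token request."""
    # Equivalent of curl --user "$CLIENT_ID:$CLIENT_SECRET".
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials", "scope": "all-apis"}).encode()
    return urllib.request.Request(
        token_endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def request_access_token(token_endpoint: str, client_id: str, client_secret: str) -> str:
    """Send the request and return the access_token field of the JSON response."""
    req = build_token_request(token_endpoint, client_id, client_secret)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read())["access_token"]
```

The same functions work for a workspace-level token; only the token endpoint URL changes.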

Manually generate a workspace-level access token

An OAuth access token created from the workspace level can only access REST APIs in that workspace, even if the service principal is an account admin or is a member of other workspaces.

Construct the token endpoint URL by replacing https://<databricks-instance> with the workspace URL of your Azure Databricks deployment:

https://<databricks-instance>/oidc/v1/token

Use a client such as curl to request an OAuth access token with the token endpoint URL, the service principal’s client ID (also known as an application ID), and the service principal’s OAuth secret you created. The all-apis scope requests an OAuth access token that can be used to access all Databricks REST APIs that the service principal has been granted access to within the workspace that you are requesting the token from.

- Replace <token-endpoint-URL> with the preceding token endpoint URL.

- Replace <client-id> with the service principal’s client ID, which is also known as an application ID.

- Replace <client-secret> with the service principal’s OAuth secret that you created.

```bash
export CLIENT_ID=<client-id>
export CLIENT_SECRET=<client-secret>

curl --request POST \
  --url <token-endpoint-URL> \
  --user "$CLIENT_ID:$CLIENT_SECRET" \
  --data 'grant_type=client_credentials&scope=all-apis'
```

This generates a response similar to:

```json
{
  "access_token": "eyJraWQiOiJkYTA4ZTVjZ…",
  "token_type": "Bearer",
  "expires_in": 3600
}
```

Copy the access_token from the response.

Call a Databricks REST API

You can use the OAuth access token to authenticate to Azure Databricks account-level REST APIs and workspace-level REST APIs. The service principal must have account admin privileges to call account-level REST APIs.

Include the access token in the authorization header using Bearer authentication. You can use this approach with curl or any client that you build.
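In Python, the same header can be attached with the standard library. The sketch below builds a GET request carrying the Bearer token and decodes a JSON response; build_get and get_json are hypothetical names, and production code would add error handling and token refresh.

```python
import json
import urllib.request


def build_get(url: str, access_token: str) -> urllib.request.Request:
    """Build a GET request that authenticates with an OAuth access token."""
    return urllib.request.Request(
        url,
        method="GET",
        headers={"Authorization": f"Bearer {access_token}"},
    )


def get_json(url: str, access_token: str, timeout: float = 30.0):
    """Send the request and return the decoded JSON body."""
    with urllib.request.urlopen(build_get(url, access_token), timeout=timeout) as resp:
        return json.loads(resp.read())
```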

Example account-level REST API request

This example uses Bearer authentication to get a list of all workspaces associated with an account.

- Replace <oauth-access-token> with the service principal’s OAuth access token that you copied in the previous step.

- Replace <account-id> with your account ID.

```bash
export OAUTH_TOKEN=<oauth-access-token>

curl --request GET --header "Authorization: Bearer $OAUTH_TOKEN" \
  'https://accounts.azuredatabricks.net/api/2.0/accounts/<account-id>/workspaces'
```

Example workspace-level REST API request

This example uses Bearer authentication to list all available clusters in the specified workspace.

- Replace <oauth-access-token> with the service principal’s OAuth access token that you copied in the previous step.

- Replace <workspace-URL> with your base workspace URL, which has a form similar to adb-1234567890123456.7.azuredatabricks.net.

```bash
export OAUTH_TOKEN=<oauth-access-token>

curl --request GET --header "Authorization: Bearer $OAUTH_TOKEN" \
  'https://<workspace-URL>/api/2.0/clusters/list'
```
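To work with the result programmatically, you can parse the JSON body that the clusters list call returns. This Python sketch assumes the response has the shape {"clusters": [{"cluster_id": ..., "cluster_name": ..., "state": ..., ...}]} and treats a missing clusters key (for example, in a workspace with no clusters) as an empty list; summarize_clusters is a hypothetical name.

```python
import json
from typing import List, Tuple


def summarize_clusters(body: str) -> List[Tuple[str, str, str]]:
    """Return (cluster_id, cluster_name, state) for each cluster in a clusters/list response."""
    data = json.loads(body)
    return [
        (c.get("cluster_id", ""), c.get("cluster_name", ""), c.get("state", ""))
        for c in data.get("clusters", [])
    ]
```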

Additional resources

- Service principals

- Overview of the Databricks identity model

- Additional information about authentication and access control