Solution ideas

This article describes a solution idea. Your cloud architect can use this guidance to help visualize the major components for a typical implementation of this architecture. Use this article as a starting point to design a well-architected solution that aligns with your workload's specific requirements.

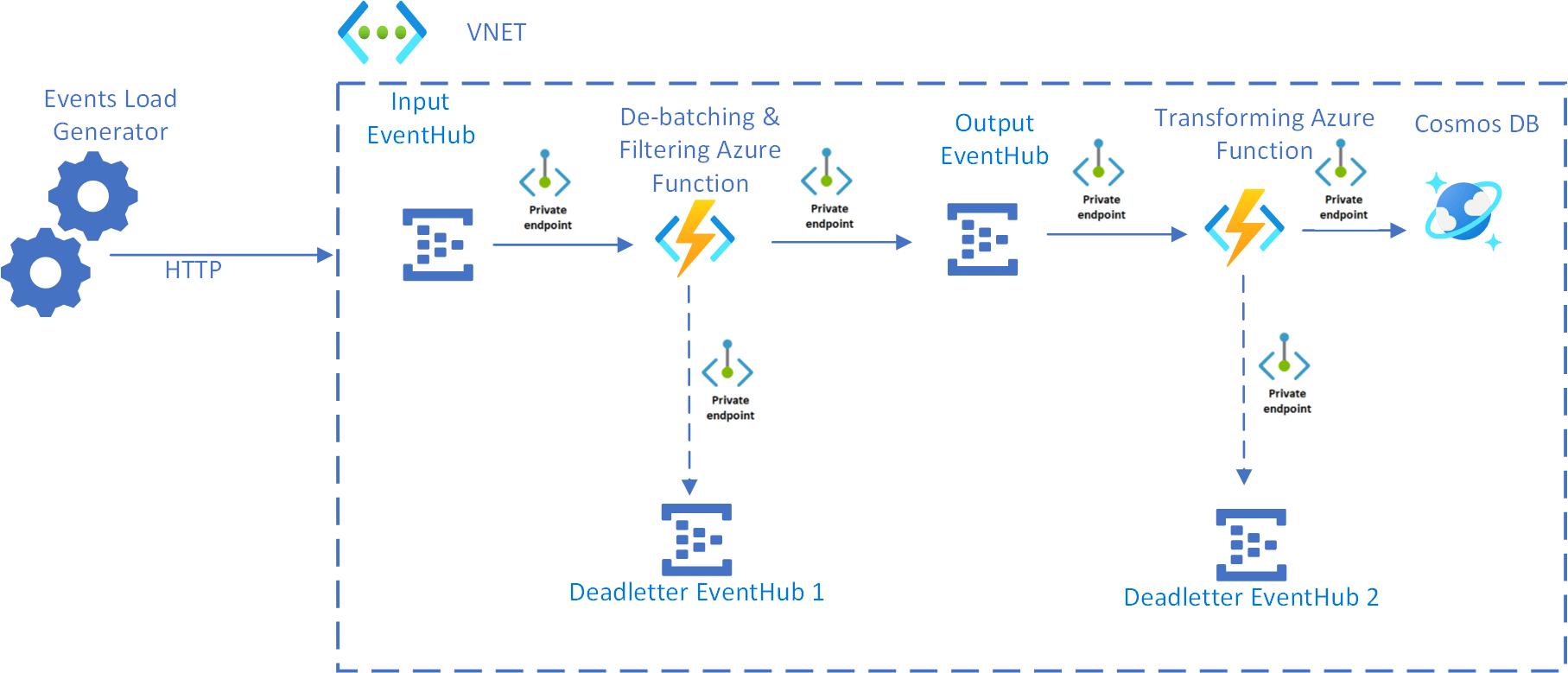

This article describes a serverless event-driven architecture in a virtual network that ingests and processes a stream of data and then writes the results to a database.

Architecture

Dataflow

- Virtual network (VNet) integration places all Azure resources behind Azure private endpoints.

- Events arrive at the Input Event Hub.

- The De-batching and Filtering Azure Function is triggered to handle the event. This step filters out unwanted events and de-batches the received events before submitting them to the Output Event Hub.

- If the De-batching and Filtering Azure Function fails to process an event or to submit it to the Output Event Hub, the event is submitted to Deadletter Event Hub 1.

- Events arriving at the Output Event Hub trigger the Transforming Azure Function. This Azure Function transforms the event into a message for the Azure Cosmos DB instance.

- The event is stored in an Azure Cosmos DB database.

- If the Transforming Azure Function fails to store the event successfully, the event is submitted to Deadletter Event Hub 2.
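The first half of the dataflow above (de-batching, filtering, and dead-lettering) can be illustrated with a minimal sketch. It is not the implementation behind this architecture. It assumes each incoming message carries a JSON array of events, uses plain Python lists as hypothetical stand-ins for the Output Event Hub and Deadletter Event Hub 1, and invents the names `is_wanted` and `debatch_and_filter` and the `"telemetry"` filter rule purely for illustration.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory stand-ins for the Event Hubs in the diagram.
# A real function would use Event Hubs bindings or an SDK client instead.
output_hub: list[dict[str, Any]] = []
deadletter_hub_1: list[str] = []


def is_wanted(event: dict[str, Any]) -> bool:
    """Assumed filter rule: keep only events whose type is 'telemetry'."""
    return event.get("type") == "telemetry"


def debatch_and_filter(
    raw_messages: list[str],
    send: Callable[[dict[str, Any]], None] = output_hub.append,
) -> None:
    """Split each batched message into single events, drop unwanted ones,
    and forward the rest. A message that cannot be parsed, filtered, or
    forwarded is dead-lettered as a whole."""
    for raw in raw_messages:
        try:
            batch = json.loads(raw)
            if not isinstance(batch, list):
                raise ValueError("expected a JSON array of events")
            # Filter the whole batch before sending anything, so a malformed
            # event is detected before any event is forwarded.
            wanted = [event for event in batch if is_wanted(event)]
            for event in wanted:
                send(event)
        except Exception:
            # Broad catch keeps the sketch short; real code would likely
            # distinguish parse errors from send errors and log them.
            deadletter_hub_1.append(raw)
```

Dead-lettering the original message, rather than individual events, keeps the failed input intact for later inspection. If sending fails partway through a batch, some events from that batch may already have been forwarded, so anything that replays dead-lettered messages would need to tolerate duplicates.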

Note

For simplicity, subnets are not shown in the diagram.

Components

- Azure Private Endpoint is a network interface that connects you privately and securely to a service powered by Azure Private Link. Private Endpoint uses a private IP address from your VNet, effectively bringing the service into your VNet.

- Event Hubs ingests the data stream. Event Hubs is designed for high-throughput data streaming scenarios.

- Azure Functions is a serverless compute option. It uses an event-driven model, where a piece of code (a function) is invoked by a trigger.

- Azure Cosmos DB is a multi-model database service that is available in a serverless, consumption-based mode. For this scenario, the event-processing function stores JSON records by using Azure Cosmos DB for NoSQL.
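As a rough illustration of how the transforming function might shape an event into a JSON record and fall back to the second dead-letter hub, consider the sketch below. It makes several assumptions: the event fields `eventId`, `deviceId`, and `payload` are invented, `deviceId` is only a guess at a partition key, the `store` callable stands in for whatever writes to the container, and `deadletter_hub_2` is a plain list rather than an Event Hub. The one grounded detail is that items in Azure Cosmos DB for NoSQL carry a string `id` property.

```python
import uuid
from typing import Any, Callable

# Hypothetical in-memory stand-in for Deadletter Event Hub 2.
deadletter_hub_2: list[dict[str, Any]] = []


def to_document(event: dict[str, Any]) -> dict[str, Any]:
    """Shape an event as a JSON document for the database.

    The field names are assumptions for this sketch; only the string `id`
    reflects a general requirement of Azure Cosmos DB for NoSQL items.
    """
    return {
        "id": str(event.get("eventId") or uuid.uuid4()),
        "deviceId": event["deviceId"],  # assumed partition key
        "payload": event.get("payload", {}),
    }


def transform_and_store(
    event: dict[str, Any],
    store: Callable[[dict[str, Any]], None],
) -> bool:
    """Transform one event and hand it to `store`. On any failure, keep the
    original event in the dead-letter stand-in and report False."""
    try:
        store(to_document(event))
        return True
    except Exception:
        # Broad catch keeps the sketch short; real code would log the cause.
        deadletter_hub_2.append(event)
        return False
```

Passing `store` as a parameter keeps the transformation logic testable without a database. In a deployed function, an output binding or an SDK call would take its place.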

Scenario details

This solution idea shows a variation of a serverless event-driven architecture that ingests a stream of data, processes the data, and writes the results to a back-end database. In this example, the solution is hosted inside a virtual network with all Azure resources behind private endpoints.

To learn more about the basic concepts, considerations, and approaches for serverless event processing, consult the Serverless event processing reference architecture.

Potential use cases

A popular use case for this end-to-end event stream processing pattern uses the Event Hubs streaming ingestion service to receive a high volume of events per second. The events are then processed with de-batching and transformation logic implemented in highly scalable functions that are triggered by Event Hubs.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal author:

- Rajasa Savant | Senior Software Development Engineer

Next steps

- Manage a Private Endpoint connection

- Private Endpoint quickstart guides

- Azure Event Hubs documentation

- Introduction to Azure Functions

- Azure Functions documentation

- Overview of Azure Cosmos DB

- Choose an API in Azure Cosmos DB

Related resources

- Serverless event processing is a reference architecture detailing a typical architecture of this type, with code samples and discussion of important considerations.

- Azure Kubernetes in event stream processing describes a variation of a serverless event-driven architecture that runs on Azure Kubernetes Service (AKS) with the KEDA scaler.