Deploy a highly available PostgreSQL database on AKS

In this article, you deploy a highly available PostgreSQL database on AKS.

- If you haven't already created the required infrastructure for this deployment, follow the steps in Create infrastructure for deploying a highly available PostgreSQL database on AKS to get set up, and then you can return to this article.
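The commands in this article reference shell variables such as PG_NAMESPACE, AKS_PRIMARY_CLUSTER_NAME, and RESOURCE_GROUP_NAME that aren't defined here, so they presumably come from the infrastructure setup. The following is a minimal sketch of a pre-flight check you could run before continuing. The require_vars helper is hypothetical and not part of any Azure or Kubernetes tooling.

```shell
#!/bin/sh
# require_vars (hypothetical helper): return non-zero if any of the named
# shell variables is unset or empty, and report each missing name on stderr.
require_vars() {
    missing=0
    for name in "$@"; do
        # Read the variable whose name is stored in $name (POSIX-compatible).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example usage (commented out so that sourcing this file has no side effects):
# require_vars PG_NAMESPACE AKS_PRIMARY_CLUSTER_NAME RESOURCE_GROUP_NAME || exit 1
```

Extend the argument list with any other variables your session is expected to export.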

Important

Open-source software is mentioned throughout AKS documentation and samples. Software that you deploy is excluded from AKS service-level agreements, limited warranty, and Azure support. As you use open-source technology alongside AKS, consult the support options available from the respective communities and project maintainers to develop a plan.

For example, the Ray GitHub repository describes several platforms that vary in response time, purpose, and support level.

Microsoft takes responsibility for building the open-source packages that we deploy on AKS. That responsibility includes having complete ownership of the build, scan, sign, validate, and hotfix process, along with control over the binaries in container images. For more information, see Vulnerability management for AKS and AKS support coverage.

Create secret for bootstrap app user

Generate a secret to validate the PostgreSQL deployment by interactive login for a bootstrap app user using the kubectl create secret command.

    PG_DATABASE_APPUSER_SECRET=$(echo -n | openssl rand -base64 16)

    kubectl create secret generic db-user-pass \
        --from-literal=username=app \
        --from-literal=password="${PG_DATABASE_APPUSER_SECRET}" \
        --namespace $PG_NAMESPACE \
        --context $AKS_PRIMARY_CLUSTER_NAME

Validate that the secret was successfully created using the kubectl get command.

    kubectl get secret db-user-pass --namespace $PG_NAMESPACE --context $AKS_PRIMARY_CLUSTER_NAME
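Kubernetes stores secret values base64-encoded, so if the PG_DATABASE_APPUSER_SECRET variable is lost from your shell session, you can read the password back from the secret. The following sketch shows only the decoding step. The decode_secret_value helper is hypothetical, and it assumes a base64 utility that accepts the -d flag.

```shell
#!/bin/sh
# decode_secret_value (hypothetical helper): decode one base64-encoded value,
# such as a field read from the .data map of a Kubernetes secret.
decode_secret_value() {
    printf '%s' "$1" | base64 -d
}

# Against a live cluster, the encoded value could be fetched with, for example:
#   kubectl get secret db-user-pass --namespace $PG_NAMESPACE \
#       --context $AKS_PRIMARY_CLUSTER_NAME -o jsonpath='{.data.password}'
# Here a fixed sample value ("app" encoded as base64) stands in for it.
decode_secret_value "YXBw"
```

Avoid echoing real passwords in shared terminals or logs.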

Set environment variables for the PostgreSQL cluster

Deploy a ConfigMap to set environment variables for the PostgreSQL cluster using the following kubectl apply command:

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME -n $PG_NAMESPACE -f -
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: cnpg-controller-manager-config
    data:
      ENABLE_AZURE_PVC_UPDATES: 'true'
    EOF

Install the Prometheus PodMonitors

Prometheus creates PodMonitors for the CNPG instances using a set of default recording rules stored on the CNPG GitHub samples repo. In a production environment, these rules would be modified as needed.

Add the Prometheus Community Helm repo using the helm repo add command.

    helm repo add prometheus-community \
        https://prometheus-community.github.io/helm-charts

Upgrade the Prometheus Community Helm repo and install it on the primary cluster using the helm upgrade command with the --install flag.

    helm upgrade --install \
        --namespace $PG_NAMESPACE \
        -f https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/main/docs/src/samples/monitoring/kube-stack-config.yaml \
        prometheus-community \
        prometheus-community/kube-prometheus-stack \
        --kube-context=$AKS_PRIMARY_CLUSTER_NAME

Create a federated credential

In this section, you create a federated identity credential for PostgreSQL backup to allow CNPG to use AKS workload identity to authenticate to the storage account destination for backups. The CNPG operator creates a Kubernetes service account with the same name as the cluster name used in the CNPG Cluster deployment manifest.

Get the OIDC issuer URL of the cluster using the az aks show command.

    export AKS_PRIMARY_CLUSTER_OIDC_ISSUER="$(az aks show \
        --name $AKS_PRIMARY_CLUSTER_NAME \
        --resource-group $RESOURCE_GROUP_NAME \
        --query "oidcIssuerProfile.issuerUrl" \
        --output tsv)"

Create a federated identity credential using the az identity federated-credential create command.

    az identity federated-credential create \
        --name $AKS_PRIMARY_CLUSTER_FED_CREDENTIAL_NAME \
        --identity-name $AKS_UAMI_CLUSTER_IDENTITY_NAME \
        --resource-group $RESOURCE_GROUP_NAME \
        --issuer "${AKS_PRIMARY_CLUSTER_OIDC_ISSUER}" \
        --subject system:serviceaccount:"${PG_NAMESPACE}":"${PG_PRIMARY_CLUSTER_NAME}" \
        --audience api://AzureADTokenExchange
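The --subject value must match the namespace and service account name exactly, and because the CNPG operator names the service account after the cluster, a typo in either part prevents the pods from authenticating. As a small illustration of how the subject string is composed, here is a sketch with a hypothetical federated_subject helper. The namespace and cluster names are sample values only.

```shell
#!/bin/sh
# federated_subject (hypothetical helper): print the workload identity subject
# for a Kubernetes service account.
# Arguments: $1 = namespace, $2 = service account name
# (for CNPG, the service account shares the Cluster resource's name).
federated_subject() {
    printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Sample values for illustration; substitute your own namespace and cluster name.
federated_subject "cnpg-database" "pg-primary-cnpg-example"
```

Printing the subject before creating the credential makes it easy to compare against the service account that the operator creates.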

Deploy a highly available PostgreSQL cluster

In this section, you deploy a highly available PostgreSQL cluster using the CNPG Cluster custom resource definition (CRD).

The following table outlines the key properties set in the YAML deployment manifest for the Cluster CRD:

| Property | Definition |
|---|---|
| inheritedMetadata | Specific to the CNPG operator. Metadata is inherited by all objects related to the cluster. |
| annotations: service.beta.kubernetes.io/azure-dns-label-name | DNS label for use when exposing the read-write and read-only Postgres cluster endpoints. |
| labels: azure.workload.identity/use: "true" | Indicates that AKS should inject workload identity dependencies into the pods hosting the PostgreSQL cluster instances. |
| topologySpreadConstraints | Require different zones and different nodes with label "workload=postgres". |
| resources | Configures a Quality of Service (QoS) class of Guaranteed. In a production environment, these values are key for maximizing usage of the underlying node VM and vary based on the Azure VM SKU used. |
| bootstrap | Specific to the CNPG operator. Initializes with an empty app database. |
| storage / walStorage | Specific to the CNPG operator. Defines storage templates for the PersistentVolumeClaims (PVCs) for data and log storage. It's also possible to specify storage for tablespaces to shard out for increased IOPs. |
| replicationSlots | Specific to the CNPG operator. Enables replication slots for high availability. |
| postgresql | Specific to the CNPG operator. Maps settings for postgresql.conf, pg_hba.conf, and pg_ident.conf config. |
| serviceAccountTemplate | Contains the template needed to generate the service accounts and maps the AKS federated identity credential to the UAMI to enable AKS workload identity authentication from the pods hosting the PostgreSQL instances to external Azure resources. |
| barmanObjectStore | Specific to the CNPG operator. Configures the barman-cloud tool suite using AKS workload identity for authentication to the Azure Blob Storage object store. |

Deploy the PostgreSQL cluster with the Cluster CRD using the kubectl apply command.

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME -n $PG_NAMESPACE -v 9 -f -
    apiVersion: postgresql.cnpg.io/v1
    kind: Cluster
    metadata:
      name: $PG_PRIMARY_CLUSTER_NAME
    spec:
      inheritedMetadata:
        annotations:
          service.beta.kubernetes.io/azure-dns-label-name: $AKS_PRIMARY_CLUSTER_PG_DNSPREFIX
        labels:
          azure.workload.identity/use: "true"

      instances: 3
      startDelay: 30
      stopDelay: 30
      minSyncReplicas: 1
      maxSyncReplicas: 1
      replicationSlots:
        highAvailability:
          enabled: true
        updateInterval: 30

      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            cnpg.io/cluster: $PG_PRIMARY_CLUSTER_NAME

      affinity:
        nodeSelector:
          workload: postgres

      resources:
        requests:
          memory: '8Gi'
          cpu: 2
        limits:
          memory: '8Gi'
          cpu: 2

      bootstrap:
        initdb:
          database: appdb
          owner: app
          secret:
            name: db-user-pass
          dataChecksums: true

      storage:
        size: 2Gi
        pvcTemplate:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 2Gi
          storageClassName: managed-csi-premium

      walStorage:
        size: 2Gi
        pvcTemplate:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 2Gi
          storageClassName: managed-csi-premium

      monitoring:
        enablePodMonitor: true

      postgresql:
        parameters:
          archive_timeout: '5min'
          auto_explain.log_min_duration: '10s'
          checkpoint_completion_target: '0.9'
          checkpoint_timeout: '15min'
          shared_buffers: '256MB'
          effective_cache_size: '512MB'
          pg_stat_statements.max: '1000'
          pg_stat_statements.track: 'all'
          max_connections: '400'
          max_prepared_transactions: '400'
          max_parallel_workers: '32'
          max_parallel_maintenance_workers: '8'
          max_parallel_workers_per_gather: '8'
          max_replication_slots: '32'
          max_worker_processes: '32'
          wal_keep_size: '512MB'
          max_wal_size: '1GB'
        pg_hba:
          - host all all all scram-sha-256

      serviceAccountTemplate:
        metadata:
          annotations:
            azure.workload.identity/client-id: "$AKS_UAMI_WORKLOAD_CLIENTID"
          labels:
            azure.workload.identity/use: "true"

      backup:
        barmanObjectStore:
          destinationPath: "https://${PG_PRIMARY_STORAGE_ACCOUNT_NAME}.blob.core.windows.net/backups"
          azureCredentials:
            inheritFromAzureAD: true
        retentionPolicy: '7d'
    EOF

Validate that the primary PostgreSQL cluster was successfully created using the kubectl get command. The CNPG Cluster CRD specified three instances, which can be validated by viewing running pods once each instance is brought up and joined for replication. Be patient as it can take some time for all three instances to come online and join the cluster.

    kubectl get pods --context $AKS_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE -l cnpg.io/cluster=$PG_PRIMARY_CLUSTER_NAME

Example output

    NAME                         READY   STATUS    RESTARTS   AGE
    pg-primary-cnpg-r8c7unrw-1   1/1     Running   0          4m25s
    pg-primary-cnpg-r8c7unrw-2   1/1     Running   0          3m33s
    pg-primary-cnpg-r8c7unrw-3   1/1     Running   0          2m49s
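Because the instances come online one at a time, it can help to count how many pods are ready instead of reading the table by eye. The following sketch parses the tabular output of the kubectl get pods command. The count_ready_pods helper is hypothetical, and the sample input is the example output shown in this section.

```shell
#!/bin/sh
# count_ready_pods (hypothetical helper): read "kubectl get pods" table output
# on stdin and print how many pods report READY 1/1 and STATUS Running.
count_ready_pods() {
    awk 'NR > 1 && $2 == "1/1" && $3 == "Running" { n++ } END { print n + 0 }'
}

# Sample input; in practice, pipe in the live output of kubectl get pods.
count_ready_pods <<'SAMPLE'
NAME                         READY   STATUS    RESTARTS   AGE
pg-primary-cnpg-r8c7unrw-1   1/1     Running   0          4m25s
pg-primary-cnpg-r8c7unrw-2   1/1     Running   0          3m33s
pg-primary-cnpg-r8c7unrw-3   1/1     Running   0          2m49s
SAMPLE
```

For this cluster, a count of 3 matches the instances value in the manifest.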

Validate the Prometheus PodMonitor is running

The CNPG operator automatically creates a PodMonitor for the primary instance using the recording rules created during the Prometheus Community installation.

Validate the PodMonitor is running using the kubectl get command.

    kubectl --namespace $PG_NAMESPACE \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        get podmonitors.monitoring.coreos.com \
        $PG_PRIMARY_CLUSTER_NAME \
        --output yaml

Example output

    kind: PodMonitor
    metadata:
      annotations:
        cnpg.io/operatorVersion: 1.23.1
    ...

If you are using Azure Monitor for Managed Prometheus, you need to add another pod monitor using the custom group name. Managed Prometheus doesn't pick up the custom resource definitions (CRDs) from the Prometheus community. Aside from the group name, the CRDs are the same, which allows pod monitors for Managed Prometheus to exist side by side with those that use the community pod monitor. If you aren't using Managed Prometheus, you can skip this step. Create a new pod monitor:

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE -f -
    apiVersion: azmonitoring.coreos.com/v1
    kind: PodMonitor
    metadata:
      name: cnpg-cluster-metrics-managed-prometheus
      namespace: ${PG_NAMESPACE}
      labels:
        azure.workload.identity/use: "true"
        cnpg.io/cluster: ${PG_PRIMARY_CLUSTER_NAME}
    spec:
      selector:
        matchLabels:
          azure.workload.identity/use: "true"
          cnpg.io/cluster: ${PG_PRIMARY_CLUSTER_NAME}
      podMetricsEndpoints:
        - port: metrics
    EOF

Verify that the pod monitor is created (note the difference in the group name).

    kubectl --namespace $PG_NAMESPACE \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        get podmonitors.azmonitoring.coreos.com \
        -l cnpg.io/cluster=$PG_PRIMARY_CLUSTER_NAME \
        -o yaml

Option A - Azure Monitor Workspace

Once you have deployed the Postgres cluster and the pod monitor, you can view the metrics using the Azure portal in an Azure Monitor workspace.

Option B - Managed Grafana

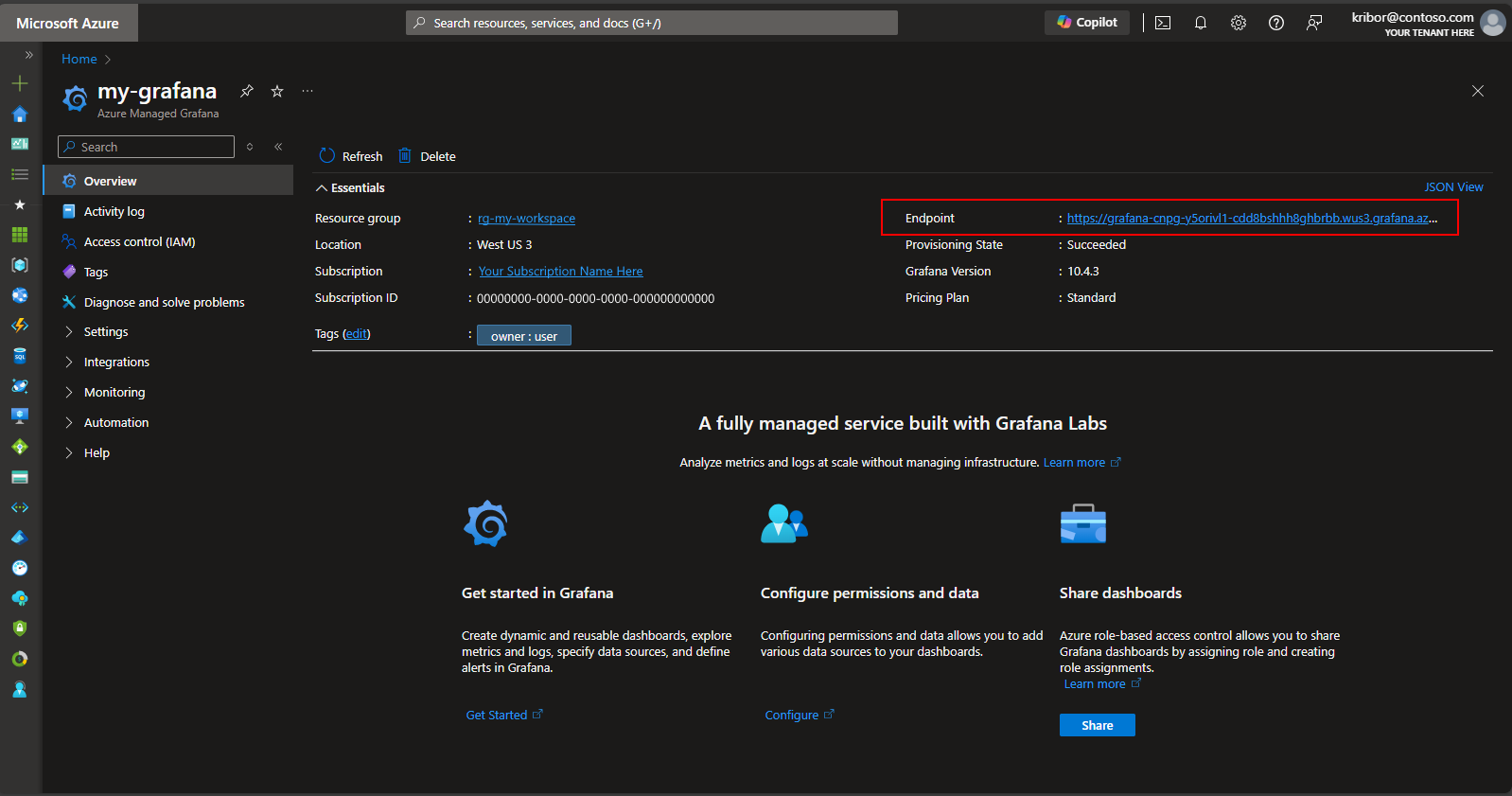

Alternatively, once you have deployed the Postgres cluster and pod monitors, you can create a metrics dashboard on the Managed Grafana instance created by the deployment script to visualize the metrics exported to the Azure Monitor workspace. You can access Managed Grafana through the Azure portal. Navigate to the Managed Grafana instance created by the deployment script and click the Endpoint link as shown here:

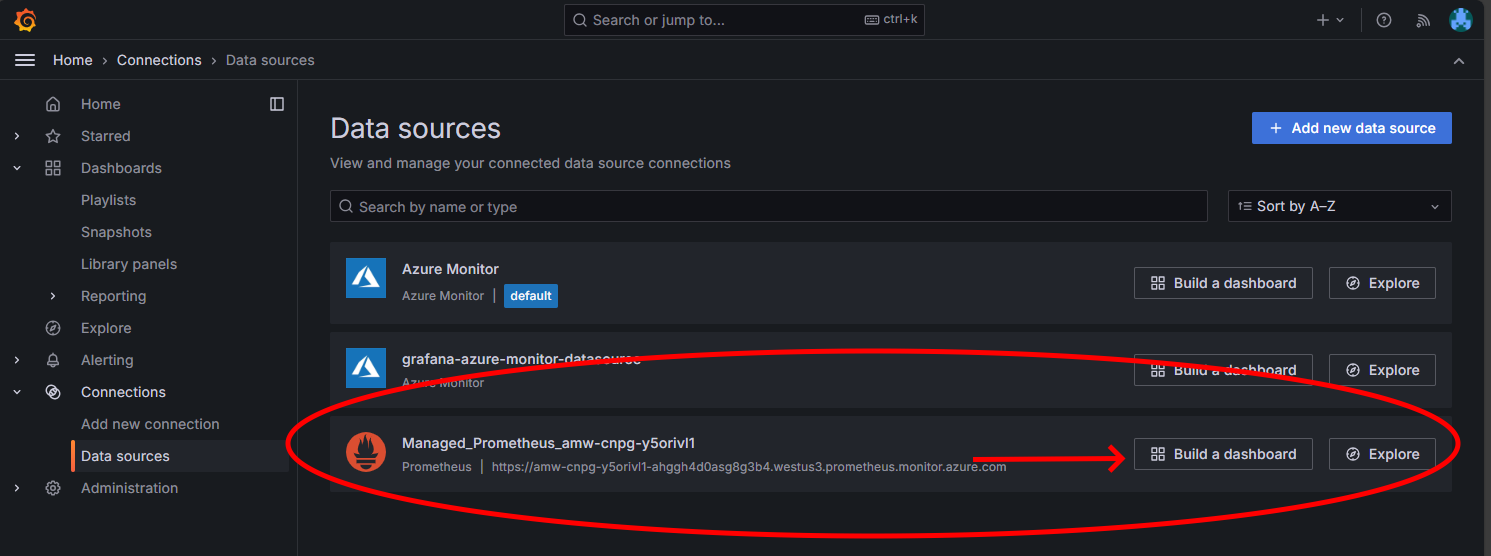

Clicking on the Endpoint link will cause a new browser window to open where you can create dashboards on the Managed Grafana instance. Following the instructions to configure an Azure Monitor data source, you can then add visualizations to create a dashboard of metrics from the Postgres cluster. After setting up the data source connection, from the main menu, click the Data sources option and you should see a set of data source options for the data source connection as shown here:

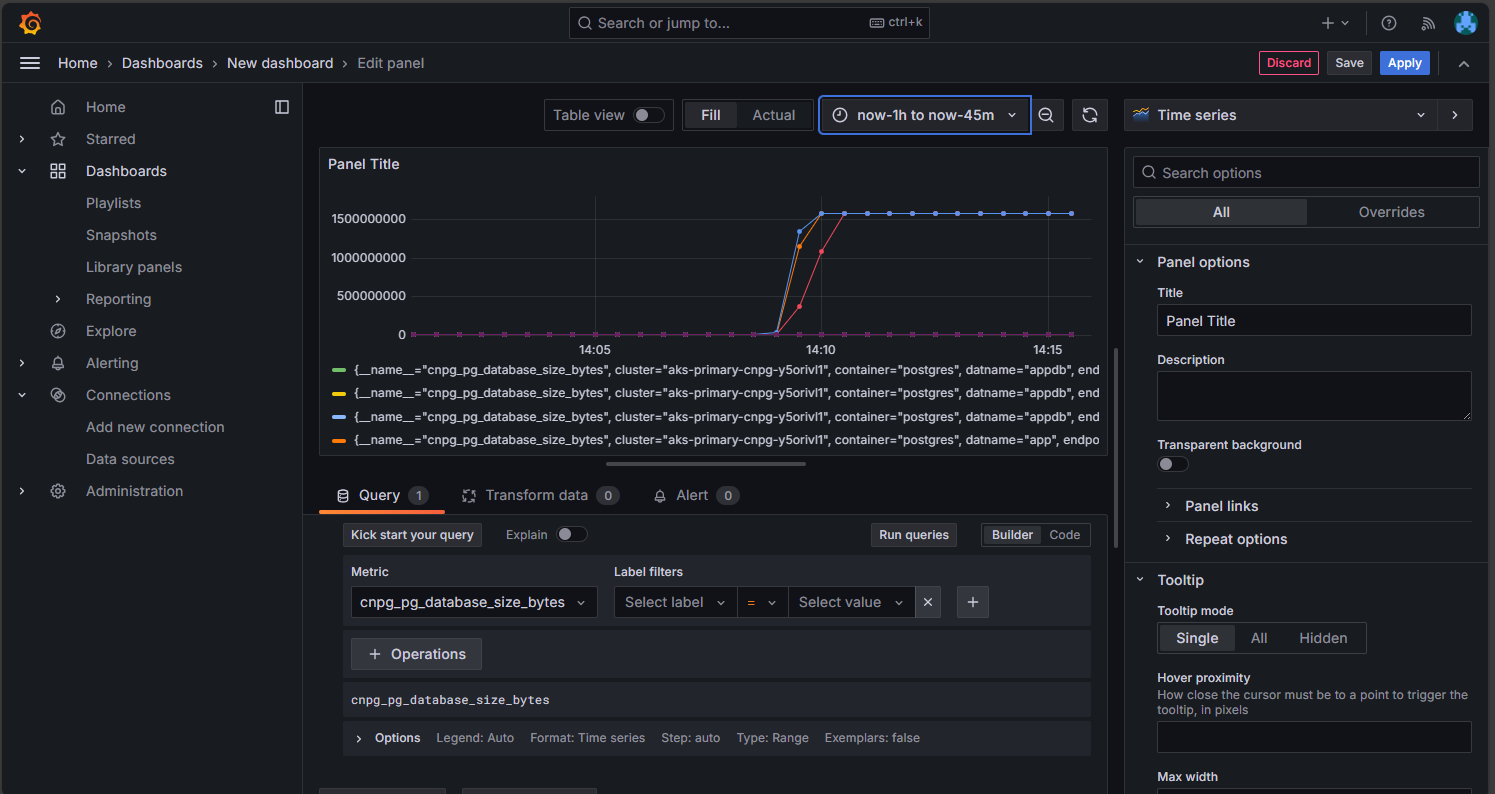

On the Managed Prometheus option, click the option to build a dashboard to open the dashboard editor. Once the editor window opens, click the Add visualization option, then click the Managed Prometheus option to browse the metrics from the Postgres cluster. Once you have selected the metric you want to visualize, click the Run queries button to fetch the data for the visualization.

Click the Save button to add the panel to your dashboard. You can add other panels by clicking the Add button in the dashboard editor and repeating this process to visualize other metrics.

Click the Save icon to save your dashboard.

Inspect the deployed PostgreSQL cluster

Validate that PostgreSQL is spread across multiple availability zones by retrieving the AKS node details using the kubectl get command.

    kubectl get nodes \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --output json | jq '.items[] | {node: .metadata.name, zone: .metadata.labels."topology.kubernetes.io/zone"}'

Your output should resemble the following example output with the availability zone shown for each node:

    {
      "node": "aks-postgres-15810965-vmss000000",
      "zone": "westus3-1"
    }
    {
      "node": "aks-postgres-15810965-vmss000001",
      "zone": "westus3-2"
    }
    {
      "node": "aks-postgres-15810965-vmss000002",
      "zone": "westus3-3"
    }
    {
      "node": "aks-systempool-26112968-vmss000000",
      "zone": "westus3-1"
    }
    {
      "node": "aks-systempool-26112968-vmss000001",
      "zone": "westus3-2"
    }
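To turn the visual check into a pass/fail test, you can count the distinct zones that host the postgres node pool. The following sketch assumes the node and zone values have first been flattened into two whitespace-separated columns, for example with a jq string filter. The distinct_zones helper is hypothetical, and the sample input mirrors the example output in this section.

```shell
#!/bin/sh
# distinct_zones (hypothetical helper): read "node zone" pairs on stdin, one
# per line, and print the number of distinct zones among the nodes whose name
# contains the substring given as $1.
distinct_zones() {
    awk -v pat="$1" '
        index($1, pat) { seen[$2] = 1 }
        END { n = 0; for (z in seen) n++; print n }
    '
}

# Sample input taken from the example output in this section.
distinct_zones "postgres" <<'SAMPLE'
aks-postgres-15810965-vmss000000 westus3-1
aks-postgres-15810965-vmss000001 westus3-2
aks-postgres-15810965-vmss000002 westus3-3
aks-systempool-26112968-vmss000000 westus3-1
aks-systempool-26112968-vmss000001 westus3-2
SAMPLE
```

With three PostgreSQL instances and a zone spread constraint, a result lower than 3 would suggest the node pool doesn't span enough zones.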

Connect to PostgreSQL and create a sample dataset

In this section, you create a table and insert some data into the app database that was created in the CNPG Cluster CRD you deployed earlier. You use this data to validate the backup and restore operations for the PostgreSQL cluster.

Create a table and insert data into the app database using the following commands:

    kubectl cnpg psql $PG_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE

    # Run the following PSQL commands to create a small dataset
    # postgres=#

    CREATE TABLE datasample (id INTEGER, name VARCHAR(255));
    INSERT INTO datasample (id, name) VALUES (1, 'John');
    INSERT INTO datasample (id, name) VALUES (2, 'Jane');
    INSERT INTO datasample (id, name) VALUES (3, 'Alice');
    SELECT COUNT(*) FROM datasample;

    # Type \q to exit psql

Your output should resemble the following example output:

    CREATE TABLE
    INSERT 0 1
    INSERT 0 1
    INSERT 0 1
     count
    -------
         3
    (1 row)

Connect to PostgreSQL read-only replicas

Connect to the PostgreSQL read-only replicas and validate the sample dataset using the following commands:

    kubectl cnpg psql --replica $PG_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE

    #postgres=#
    SELECT pg_is_in_recovery();

Example output

    # pg_is_in_recovery
    #-------------------
    # t
    #(1 row)

    #postgres=#
    SELECT COUNT(*) FROM datasample;

Example output

    # count
    #-------
    #     3
    #(1 row)

    # Type \q to exit psql

Set up on-demand and scheduled PostgreSQL backups using Barman

Validate that the PostgreSQL cluster can access the Azure storage account specified in the CNPG Cluster CRD and that Working WAL archiving reports as OK using the following command:

    kubectl cnpg status $PG_PRIMARY_CLUSTER_NAME 1 \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

Example output

    Continuous Backup status
    First Point of Recoverability:  Not Available
    Working WAL archiving:          OK
    WALs waiting to be archived:    0
    Last Archived WAL:              00000001000000000000000A   @   2024-07-09T17:18:13.982859Z
    Last Failed WAL:                -

Deploy an on-demand backup to Azure Storage, which uses the AKS workload identity integration, using the YAML file with the kubectl apply command.

    export BACKUP_ONDEMAND_NAME="on-demand-backup-1"

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE -v 9 -f -
    apiVersion: postgresql.cnpg.io/v1
    kind: Backup
    metadata:
      name: $BACKUP_ONDEMAND_NAME
    spec:
      method: barmanObjectStore
      cluster:
        name: $PG_PRIMARY_CLUSTER_NAME
    EOF

Validate the status of the on-demand backup using the kubectl describe command.

    kubectl describe backup $BACKUP_ONDEMAND_NAME \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

Example output

    Type    Reason     Age   From                   Message
    ----    ------     ----  ----                   -------
    Normal  Starting   6s    cloudnative-pg-backup  Starting backup for cluster pg-primary-cnpg-r8c7unrw
    Normal  Starting   5s    instance-manager       Backup started
    Normal  Completed  1s    instance-manager       Backup completed

Validate that the cluster has a first point of recoverability using the following command:

    kubectl cnpg status $PG_PRIMARY_CLUSTER_NAME 1 \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

Example output

    Continuous Backup status
    First Point of Recoverability:  2024-06-05T13:47:18Z
    Working WAL archiving:          OK

Configure a scheduled backup for every hour at 15 minutes past the hour using the YAML file with the kubectl apply command.

    export BACKUP_SCHEDULED_NAME="scheduled-backup-1"

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE -v 9 -f -
    apiVersion: postgresql.cnpg.io/v1
    kind: ScheduledBackup
    metadata:
      name: $BACKUP_SCHEDULED_NAME
    spec:
      # Backup once per hour
      schedule: "0 15 * ? * *"
      backupOwnerReference: self
      cluster:
        name: $PG_PRIMARY_CLUSTER_NAME
    EOF

Validate the status of the scheduled backup using the kubectl describe command.

    kubectl describe scheduledbackup $BACKUP_SCHEDULED_NAME \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

View the backup files stored on Azure blob storage for the primary cluster using the az storage blob list command.

    az storage blob list \
        --account-name $PG_PRIMARY_STORAGE_ACCOUNT_NAME \
        --container-name backups \
        --query "[*].name" \
        --only-show-errors

Your output should resemble the following example output, validating the backup was successful:

    [
      "pg-primary-cnpg-r8c7unrw/base/20240605T134715/backup.info",
      "pg-primary-cnpg-r8c7unrw/base/20240605T134715/data.tar",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000001",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000002",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000003",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000003.00000028.backup",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000004",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000005",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000005.00000028.backup",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000006",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000007",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000008",
      "pg-primary-cnpg-r8c7unrw/wals/0000000100000000/000000010000000000000009"
    ]
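The schedule string used for the scheduled backup has six fields, not the five of a classic crontab entry: as far as the CNPG documentation describes it, the first field is seconds. The following sketch labels each field so the meaning of "0 15 * ? * *" is easier to read. The describe_schedule helper is hypothetical and only splits the string; it doesn't validate cron syntax.

```shell
#!/bin/sh
# describe_schedule (hypothetical helper): label the six space-separated
# fields of a schedule string, assuming the order
# second, minute, hour, day-of-month, month, day-of-week.
describe_schedule() {
    # Disable globbing so "*" and "?" are not expanded against local files.
    set -f
    # Split the single argument into positional parameters on whitespace.
    set -- $1
    set +f
    if [ "$#" -ne 6 ]; then
        echo "expected 6 fields, got $#" >&2
        return 1
    fi
    echo "second=$1 minute=$2 hour=$3 day-of-month=$4 month=$5 day-of-week=$6"
}

# The schedule used in this article: second 0 of minute 15 of every hour.
describe_schedule "0 15 * ? * *"
```

A five-field crontab expression pasted into the schedule property fails this check, which is a common source of confusion when moving between the two formats.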

Restore the on-demand backup to a new PostgreSQL cluster

In this section, you restore the on-demand backup you created earlier using the CNPG operator into a new instance using the bootstrap Cluster CRD. A single instance cluster is used for simplicity. Remember that the AKS workload identity (via CNPG inheritFromAzureAD) accesses the backup files, and that the recovery cluster name is used to generate a new Kubernetes service account specific to the recovery cluster.

You also create a second federated credential to map the new recovery cluster service account to the existing UAMI that has "Storage Blob Data Contributor" access to the backup files on blob storage.

Create a second federated identity credential using the az identity federated-credential create command.

    export PG_PRIMARY_CLUSTER_NAME_RECOVERED="$PG_PRIMARY_CLUSTER_NAME-recovered-db"

    az identity federated-credential create \
        --name $PG_PRIMARY_CLUSTER_NAME_RECOVERED \
        --identity-name $AKS_UAMI_CLUSTER_IDENTITY_NAME \
        --resource-group $RESOURCE_GROUP_NAME \
        --issuer "${AKS_PRIMARY_CLUSTER_OIDC_ISSUER}" \
        --subject system:serviceaccount:"${PG_NAMESPACE}":"${PG_PRIMARY_CLUSTER_NAME_RECOVERED}" \
        --audience api://AzureADTokenExchange

Restore the on-demand backup using the Cluster CRD with the kubectl apply command.

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE -v 9 -f -
    apiVersion: postgresql.cnpg.io/v1
    kind: Cluster
    metadata:
      name: $PG_PRIMARY_CLUSTER_NAME_RECOVERED
    spec:
      inheritedMetadata:
        annotations:
          service.beta.kubernetes.io/azure-dns-label-name: $AKS_PRIMARY_CLUSTER_PG_DNSPREFIX
        labels:
          azure.workload.identity/use: "true"

      instances: 1

      affinity:
        nodeSelector:
          workload: postgres

      # Point to cluster backup created earlier and stored on Azure Blob Storage
      bootstrap:
        recovery:
          source: clusterBackup

      storage:
        size: 2Gi
        pvcTemplate:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 2Gi
          storageClassName: managed-csi-premium
          volumeMode: Filesystem

      walStorage:
        size: 2Gi
        pvcTemplate:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 2Gi
          storageClassName: managed-csi-premium
          volumeMode: Filesystem

      serviceAccountTemplate:
        metadata:
          annotations:
            azure.workload.identity/client-id: "$AKS_UAMI_WORKLOAD_CLIENTID"
          labels:
            azure.workload.identity/use: "true"

      externalClusters:
        - name: clusterBackup
          barmanObjectStore:
            destinationPath: https://${PG_PRIMARY_STORAGE_ACCOUNT_NAME}.blob.core.windows.net/backups
            serverName: $PG_PRIMARY_CLUSTER_NAME
            azureCredentials:
              inheritFromAzureAD: true
            wal:
              maxParallel: 8
    EOF

Connect to the recovered instance, then validate that the dataset created on the original cluster where the full backup was taken is present using the following command:

    kubectl cnpg psql $PG_PRIMARY_CLUSTER_NAME_RECOVERED --namespace $PG_NAMESPACE

    postgres=# SELECT COUNT(*) FROM datasample;

Example output

    # count
    #-------
    #     3
    #(1 row)

    # Type \q to exit psql

Delete the recovered cluster using the following command:

    kubectl cnpg destroy $PG_PRIMARY_CLUSTER_NAME_RECOVERED 1 \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

Delete the federated identity credential using the az identity federated-credential delete command.

    az identity federated-credential delete \
        --name $PG_PRIMARY_CLUSTER_NAME_RECOVERED \
        --identity-name $AKS_UAMI_CLUSTER_IDENTITY_NAME \
        --resource-group $RESOURCE_GROUP_NAME \
        --yes

Expose the PostgreSQL cluster using a public load balancer

In this section, you configure the necessary infrastructure to publicly expose the PostgreSQL read-write and read-only endpoints with IP source restrictions to the public IP address of your client workstation.

You also retrieve the following endpoints from the Cluster IP service:

- One primary read-write endpoint that ends with *-rw.

- Zero to N (depending on the number of replicas) read-only endpoints that end with *-ro.

- One replication endpoint that ends with *-r.

Get the Cluster IP service details using the kubectl get command.

    kubectl get services \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE \
        -l cnpg.io/cluster=$PG_PRIMARY_CLUSTER_NAME

Example output

    NAME                          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    pg-primary-cnpg-sryti1qf-r    ClusterIP   10.0.193.27    <none>        5432/TCP   3h57m
    pg-primary-cnpg-sryti1qf-ro   ClusterIP   10.0.237.19    <none>        5432/TCP   3h57m
    pg-primary-cnpg-sryti1qf-rw   ClusterIP   10.0.244.125   <none>        5432/TCP   3h57m

Note

There are three services: namespace/cluster-name-ro mapped to port 5433, namespace/cluster-name-rw, and namespace/cluster-name-r mapped to port 5433. It’s important to avoid using the same port as the read/write node of the PostgreSQL database cluster. If you want applications to access only the read-only replica of the PostgreSQL database cluster, direct them to port 5433. The final service is typically used for data backups but can also function as a read-only node.

Get the service details using the kubectl get command.

    export PG_PRIMARY_CLUSTER_RW_SERVICE=$(kubectl get services \
        --namespace $PG_NAMESPACE \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        -l "cnpg.io/cluster" \
        --output json | jq -r '.items[] | select(.metadata.name | endswith("-rw")) | .metadata.name')

    echo $PG_PRIMARY_CLUSTER_RW_SERVICE

    export PG_PRIMARY_CLUSTER_RO_SERVICE=$(kubectl get services \
        --namespace $PG_NAMESPACE \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        -l "cnpg.io/cluster" \
        --output json | jq -r '.items[] | select(.metadata.name | endswith("-ro")) | .metadata.name')

    echo $PG_PRIMARY_CLUSTER_RO_SERVICE

Configure the load balancer service with the following YAML files using the kubectl apply command.

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME -f -
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-resource-group: $AKS_PRIMARY_CLUSTER_NODERG_NAME
        service.beta.kubernetes.io/azure-pip-name: $AKS_PRIMARY_CLUSTER_PUBLICIP_NAME
        service.beta.kubernetes.io/azure-dns-label-name: $AKS_PRIMARY_CLUSTER_PG_DNSPREFIX
      name: cnpg-cluster-load-balancer-rw
      namespace: "${PG_NAMESPACE}"
    spec:
      type: LoadBalancer
      ports:
      - protocol: TCP
        port: 5432
        targetPort: 5432
      selector:
        cnpg.io/instanceRole: primary
        cnpg.io/podRole: instance
      loadBalancerSourceRanges:
      - "$MY_PUBLIC_CLIENT_IP/32"
    EOF

    cat <<EOF | kubectl apply --context $AKS_PRIMARY_CLUSTER_NAME -f -
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-resource-group: $AKS_PRIMARY_CLUSTER_NODERG_NAME
        service.beta.kubernetes.io/azure-pip-name: $AKS_PRIMARY_CLUSTER_PUBLICIP_NAME
        service.beta.kubernetes.io/azure-dns-label-name: $AKS_PRIMARY_CLUSTER_PG_DNSPREFIX
      name: cnpg-cluster-load-balancer-ro
      namespace: "${PG_NAMESPACE}"
    spec:
      type: LoadBalancer
      ports:
      - protocol: TCP
        port: 5433
        targetPort: 5432
      selector:
        cnpg.io/instanceRole: replica
        cnpg.io/podRole: instance
      loadBalancerSourceRanges:
      - "$MY_PUBLIC_CLIENT_IP/32"
    EOF

Get the service details using the kubectl describe command.

    kubectl describe service cnpg-cluster-load-balancer-rw \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

    kubectl describe service cnpg-cluster-load-balancer-ro \
        --context $AKS_PRIMARY_CLUSTER_NAME \
        --namespace $PG_NAMESPACE

    export AKS_PRIMARY_CLUSTER_ALB_DNSNAME="$(az network public-ip show \
        --resource-group $AKS_PRIMARY_CLUSTER_NODERG_NAME \
        --name $AKS_PRIMARY_CLUSTER_PUBLICIP_NAME \
        --query "dnsSettings.fqdn" --output tsv)"

    echo $AKS_PRIMARY_CLUSTER_ALB_DNSNAME

Validate public PostgreSQL endpoints

In this section, you validate that the Azure Load Balancer is properly set up with the static IP that you created earlier and that it routes connections to the primary read-write endpoint and the read-only replicas. You use the psql CLI to connect to both.

Remember that the primary read-write endpoint maps to TCP port 5432 and the read-only replica endpoints map to port 5433 to allow the same PostgreSQL DNS name to be used for readers and writers.
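Because readers and writers share one DNS name and differ only by port, client configuration reduces to choosing the right port. The following sketch builds libpq-style connection URIs for both endpoints. The pg_uri helper and the host name are hypothetical, while the user and database names (app, appdb) are the bootstrap values used earlier in this article.

```shell
#!/bin/sh
# pg_uri (hypothetical helper): print a libpq-style connection URI.
# Arguments: $1 = host, $2 = port, $3 = user, $4 = database
pg_uri() {
    printf 'postgresql://%s@%s:%s/%s\n' "$3" "$1" "$2" "$4"
}

# Hypothetical host name for illustration; in this article the real value is
# held in AKS_PRIMARY_CLUSTER_ALB_DNSNAME.
HOST="example-pg.westus3.cloudapp.azure.com"
echo "read-write: $(pg_uri "$HOST" 5432 app appdb)"
echo "read-only:  $(pg_uri "$HOST" 5433 app appdb)"
```

The psql CLI accepts such a URI as its connection argument. The password is deliberately left out of the URI so that it doesn't end up in shell history.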

Note

You need the value of the app user password for PostgreSQL basic auth that was generated earlier and stored in the $PG_DATABASE_APPUSER_SECRET environment variable.

Validate the public PostgreSQL endpoints using the following psql commands:

    echo "Public endpoint for PostgreSQL cluster: $AKS_PRIMARY_CLUSTER_ALB_DNSNAME"

    # Query the primary, pg_is_in_recovery = false
    psql -h $AKS_PRIMARY_CLUSTER_ALB_DNSNAME \
        -p 5432 -U app -d appdb -W -c "SELECT pg_is_in_recovery();"

Example output

     pg_is_in_recovery
    -------------------
     f
    (1 row)

    echo "Query a replica, pg_is_in_recovery = true"

    psql -h $AKS_PRIMARY_CLUSTER_ALB_DNSNAME \
        -p 5433 -U app -d appdb -W -c "SELECT pg_is_in_recovery();"

Example output

     pg_is_in_recovery
    -------------------
     t
    (1 row)

When successfully connected to the primary read-write endpoint, the PostgreSQL function returns f for false, indicating that the current connection is writable.

When connected to a replica, the function returns t for true, indicating the database is in recovery and read-only.

Simulate an unplanned failover

In this section, you trigger a sudden failure by deleting the pod running the primary, which simulates a sudden crash or loss of network connectivity to the node hosting the PostgreSQL primary.

Check the status of the running pod instances using the following command:

    kubectl cnpg status $PG_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE

Example output

    Name                        Current LSN  Rep role        Status  Node
    --------------------------- -----------  --------        ------- -----------
    pg-primary-cnpg-sryti1qf-1  0/9000060    Primary         OK      aks-postgres-32388626-vmss000000
    pg-primary-cnpg-sryti1qf-2  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000001
    pg-primary-cnpg-sryti1qf-3  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000002

Delete the primary pod using the kubectl delete command. The selector uses the cnpg.io/instanceRole label, the same label that the load balancer services select on.

    PRIMARY_POD=$(kubectl get pod \
        --namespace $PG_NAMESPACE \
        --no-headers \
        -o custom-columns=":metadata.name" \
        -l cnpg.io/instanceRole=primary)

    kubectl delete pod $PRIMARY_POD --grace-period=1 --namespace $PG_NAMESPACE

Validate that the pg-primary-cnpg-sryti1qf-2 pod instance is now the primary using the following command:

    kubectl cnpg status $PG_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE

Example output

    pg-primary-cnpg-sryti1qf-2  0/9000060    Primary         OK      aks-postgres-32388626-vmss000001
    pg-primary-cnpg-sryti1qf-1  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000000
    pg-primary-cnpg-sryti1qf-3  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000002

Reset the pg-primary-cnpg-sryti1qf-1 pod instance as the primary using the following command:

    kubectl cnpg promote $PG_PRIMARY_CLUSTER_NAME 1 --namespace $PG_NAMESPACE

Validate that the pod instances have returned to their original state before the unplanned failover test using the following command:

    kubectl cnpg status $PG_PRIMARY_CLUSTER_NAME --namespace $PG_NAMESPACE

Example output

    Name                        Current LSN  Rep role        Status  Node
    --------------------------- -----------  --------        ------- -----------
    pg-primary-cnpg-sryti1qf-1  0/9000060    Primary         OK      aks-postgres-32388626-vmss000000
    pg-primary-cnpg-sryti1qf-2  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000001
    pg-primary-cnpg-sryti1qf-3  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000002
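When you repeat the failover test, it can be handy to extract the current primary from the instance table instead of reading it by eye. The following sketch assumes the table layout shown in the example output in this section, where the replication role is the third whitespace-separated column. The primary_instance helper is hypothetical and not part of the cnpg plugin.

```shell
#!/bin/sh
# primary_instance (hypothetical helper): read an instance status table on
# stdin and print the name of each row whose third column is "Primary".
primary_instance() {
    awk '$3 == "Primary" { print $1 }'
}

# Sample input modeled on the post-failover example output in this section.
primary_instance <<'SAMPLE'
Name                        Current LSN  Rep role        Status  Node
--------------------------- -----------  --------        ------- -----------
pg-primary-cnpg-sryti1qf-2  0/9000060    Primary         OK      aks-postgres-32388626-vmss000001
pg-primary-cnpg-sryti1qf-1  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000000
pg-primary-cnpg-sryti1qf-3  0/9000060    Standby (sync)  OK      aks-postgres-32388626-vmss000002
SAMPLE
```

Comparing the printed name before and after deleting the pod confirms that a different instance was promoted.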

Clean up resources

Once you're finished reviewing your deployment, delete all the resources you created in this guide using the az group delete command.

    az group delete --resource-group $RESOURCE_GROUP_NAME --no-wait --yes

Next steps

In this how-to guide, you learned how to:

- Use Azure CLI to create a multi-zone AKS cluster.

- Deploy a highly available PostgreSQL cluster and database using the CNPG operator.

- Set up monitoring for PostgreSQL using Prometheus and Grafana.

- Deploy a sample dataset to the PostgreSQL database.

- Perform PostgreSQL and AKS cluster upgrades.

- Simulate a cluster interruption and PostgreSQL replica failover.

- Perform a backup and restore of the PostgreSQL database.

To learn more about how you can leverage AKS for your workloads, see What is Azure Kubernetes Service (AKS)?

Contributors

Microsoft maintains this article. The following contributors originally wrote it:

- Ken Kilty | Principal TPM

- Russell de Pina | Principal TPM

- Adrian Joian | Senior Customer Engineer

- Jenny Hayes | Senior Content Developer

- Carol Smith | Senior Content Developer

- Erin Schaffer | Content Developer 2

- Adam Sharif | Customer Engineer 2
